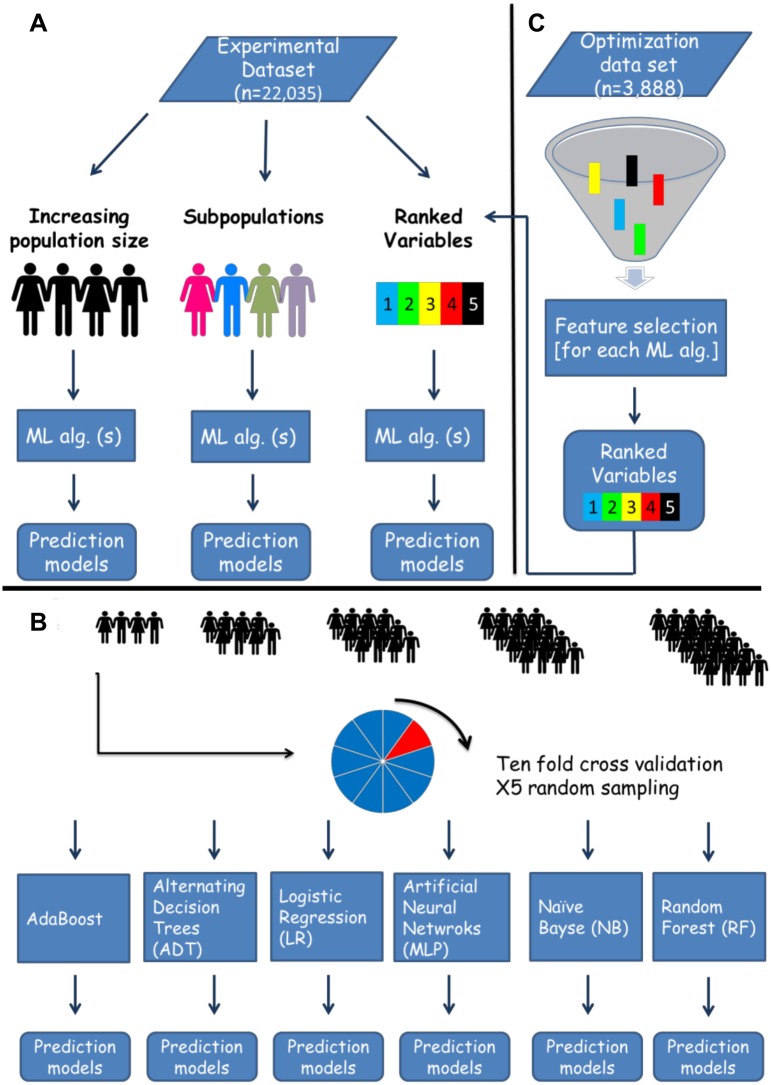

Fig 1. In silico predictive modeling: experimental design.

The original dataset was randomly split into an optimization dataset and an experimental dataset. The optimization dataset was used to tune the machine learning algorithms and for feature selection. A. Several experiments were run on the experimental dataset to test the effects of population size, specific subpopulations and the number of variables included on predictive performance. B. Detailed view of the increasing population size experiment shown in panel A. Patients were randomly sampled from the experimental dataset to create samples of expanding size, which were then introduced to six machine learning algorithms. For each sample, a prediction model for day 100 NRM was developed, and performance was measured by the area under the receiver operating characteristic curve (AUC). Models were trained and tested with 10-fold cross-validation. The sampling process was repeated 5 times. C. To estimate variable importance (ranked variables experiment in panel A) and the number of variables necessary for optimal prediction of day 100 NRM, we ran a feature selection algorithm on the optimization dataset. Variables were ranked according to their predictive contribution to each algorithm. Next, the variables were introduced serially, in order of importance, to the six machine learning algorithms, which were applied to the experimental dataset. In each iteration, a prediction model for day 100 NRM was trained and tested with 10-fold cross-validation. For instance, the first iteration used only the top-ranking variable, the second the top 2 variables, and so on until all 23 variables were used. Performance was estimated by the AUC. Machine learning (ML), Algorithm (Alg).
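The design in panels B and C can be sketched as follows. This is a minimal, dependency-free Python sketch under stated assumptions, not the authors' pipeline. The caption does not name the six algorithms or the feature selection method, so a hypothetical mean-difference linear scorer (`fit_centroid`) stands in for the algorithms and univariate AUC stands in for the ranking. A single ranking is used rather than one per algorithm. Folds are stratified, and out-of-fold scores are pooled into one AUC. The split fraction, sample sizes and `toy_cohort` data are hypothetical. Only the 10 folds, 5 repeats and 23 variables follow the caption.

```python
import random
from statistics import mean

def auc(scores, labels):
    """Rank-based (Mann-Whitney) AUC: fraction of (case, control) pairs in which
    the case scores higher; ties count 1/2. Needs at least one of each class."""
    pos = [s for s, t in zip(scores, labels) if t == 1]
    neg = [s for s, t in zip(scores, labels) if t == 0]
    hits = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return hits / (len(pos) * len(neg))

def fit_centroid(X, y):
    """Hypothetical stand-in for the six ML algorithms: a linear score whose
    weights are mean(cases) - mean(controls) over the training rows."""
    def centroid(cls):
        rows = [x for x, t in zip(X, y) if t == cls]
        return [sum(col) / len(rows) for col in zip(*rows)]
    return [a - b for a, b in zip(centroid(1), centroid(0))]

def cv_auc(X, y, cols, k=10, seed=0):
    """k-fold cross-validated AUC using only the variables in `cols`.
    Out-of-fold scores are pooled into a single AUC (an assumption)."""
    rng = random.Random(seed)
    fold = [0] * len(y)
    for cls in (0, 1):  # stratified: deal each class round-robin over the folds
        idx = [i for i, t in enumerate(y) if t == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            fold[i] = j % k
    Xs = [[x[c] for c in cols] for x in X]
    scores = [0.0] * len(y)
    for f in range(k):
        train = [i for i in range(len(y)) if fold[i] != f]
        w = fit_centroid([Xs[i] for i in train], [y[i] for i in train])
        for i in range(len(y)):
            if fold[i] == f:  # score only the held-out patients of fold f
                scores[i] = sum(a * b for a, b in zip(w, Xs[i]))
    return auc(scores, y)

def split(X, y, frac, seed=0):
    """Random split into (optimization set, experimental set)."""
    idx = list(range(len(y)))
    random.Random(seed).shuffle(idx)
    cut = int(frac * len(idx))
    def pick(ids):
        return [X[i] for i in ids], [y[i] for i in ids]
    return pick(idx[:cut]), pick(idx[cut:])

def learning_curve(X, y, sizes, repeats=5, seed=0):
    """Panel B: mean CV AUC over `repeats` random patient samples of each size."""
    rng = random.Random(seed)
    all_cols = range(len(X[0]))
    curve = {}
    for n in sizes:
        aucs = []
        for r in range(repeats):
            idx = rng.sample(range(len(y)), n)
            sub_X, sub_y = [X[i] for i in idx], [y[i] for i in idx]
            aucs.append(cv_auc(sub_X, sub_y, all_cols, seed=r))
        curve[n] = mean(aucs)
    return curve

def ranked_curve(X_opt, y_opt, X_exp, y_exp):
    """Panel C: rank variables on the optimization set, then add them one at a
    time (top 1, top 2, ...) and record CV AUC on the experimental set."""
    d = len(X_opt[0])
    # Stand-in ranking (assumption): distance of each variable's univariate AUC from 0.5.
    strength = [abs(auc([x[j] for x in X_opt], y_opt) - 0.5) for j in range(d)]
    order = sorted(range(d), key=lambda j: -strength[j])
    return [cv_auc(X_exp, y_exp, order[:k]) for k in range(1, d + 1)]

def toy_cohort(n, d=23, seed=0):
    """Hypothetical synthetic cohort: only the first 3 of d variables carry signal."""
    rng = random.Random(seed)
    y = [int(rng.random() < 0.5) for _ in range(n)]
    X = [[rng.gauss(0.8 * t if j < 3 else 0.0, 1.0) for j in range(d)] for t in y]
    return X, y

if __name__ == "__main__":
    X, y = toy_cohort(800)
    (X_opt, y_opt), (X_exp, y_exp) = split(X, y, frac=0.3)
    print(learning_curve(X_exp, y_exp, sizes=[100, 200, 400]))
    print(ranked_curve(X_opt, y_opt, X_exp, y_exp))
```

Pooling out-of-fold scores avoids computing an AUC on folds that contain only a handful of events. Averaging per-fold AUCs is an equally common alternative. Replacing `fit_centroid` with any real classifier leaves both experiment loops unchanged.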