Abstract

Invertible image representation methods (transforms) are routinely employed as low-level image processing operations based on which feature extraction and recognition algorithms are developed. Most transforms in current use (e.g. Fourier, Wavelet, etc.) are linear transforms, and, by themselves, are unable to substantially simplify the representation of image classes for classification. Here we describe a nonlinear, invertible, low-level image processing transform based on combining the well known Radon transform for image data, and the 1D Cumulative Distribution Transform proposed earlier. We describe a few of the properties of this new transform, and with both theoretical and experimental results show that it can often render certain problems linearly separable in transform space.

I. Introduction

Image pattern recognition is an important problem in wide variety of disciplines including computer vision, image processing, biometrics, and remote sensing. The primary goal of pattern recognition is supervised or unsupervised classification of data points (e.g. signals or images). Image transforms have long been used as low level representation models to facilitate pattern recognition by simplifying feature extraction from images. The Fourier transform, Walsh-Hadamard transform, wavelet transform, ridgelet and curvelet transforms, sine and cosine transforms, Radon transform, etc. are examples of such image transforms.

Some interesting applications of invertible image transforms in pattern recognition are presented in [5, 10, 20, 22, 23]. In [20], discrete Fourier transform (DFT) was used for palm print identification. Monro et al. [23] used discrete cosine transform (DCT) for iris recognition. Wavelet coefficients were used as texture features in [10] for image retrieval. Mandal et al. [22] used curvelet-based features for face recognition. The Radon transform was used for Gait recognition in [5]. The list above is obviously not exhaustive. They represent just but a few examples of many applications of image transforms in pattern recognition.

A common property among aforementioned transforms is that they are all invertible linear transforms that seek to represent a given image as a linear combination of a set of functions (or discrete vectors for digital signals). What we mean by an invertible linear transform, ℱ, is that for images I and J, ℱ satisfies ℱ(I) + ℱ(J) = ℱ(I + J), ℱ(αI) = αℱ(I), and ℱ−1 exists. Linear transforms are unable to alter the ‘shape’ of image classes (i.e. distribution of the point cloud data) so as to fundamentally simplify the actual classification task. For example, linear operations are unable to render classification problems that are not linearly separable into linearly separable ones (see Figure 1). When considering many important image classification tasks, it is not hard to understand the problem at an intuitive level. One can often visually observe that in many image categories (e.g. human faces, cell nuclei, galaxies, etc.) a common way in which images differ from one another is not only in their intensities, but also in where the intensities are positioned. By definition, however, linear image transforms must operate at fixed pixel coordinates. As such, they are unable to move or dislocate pixel intensities in any way. Hence, for pattern recognition purposes, linear image transforms are usually followed by a nonlinear operator to demonstrate an overall nonlinear effect (e.g. thresholding in curvelet and wavelet transforms, magnitude of Fourier coefficients, blob detection/analysis in Radon transform, etc.).

Fig. 1.

Overview of role of Radon-CDT in enhancing linear separations of classes.

Many feature extraction methods have been developed for images [7, 30, 40, 41] along side with the end to end deep neural network approaches such as convolutional neural networks (ConvNets) [18, 19] and scattering networks (ScatNets) [6, 32, 41]. These recent methods have proven to be very successful in image classification and they have improved the state of the art classification for a wide range of image datasets. Such methods, however, are often not well suited for image modeling applications, including imaging and image reconstruction, as they provide a noninvertible nonlinear mapping from the image space to the feature space. Meaning that while the nonlinearity of the image classes are captured through the extracted features, any statistical analysis in the feature space does not have a direct interpretation in the image space as the mapping is noninvertible.

Intensity vector flows can represent an interesting alternative for encoding the pixel intensity movements which may help simplify certain pattern recognition tasks. In earlier work [16, 39] we have described a framework that makes use of the L2 optimal transport metric (Earth Mover's distance) to define a new invertible image transform. The framework makes use of a reference (template) image to which a given image is morphed using the optimal transport metric. The optimal transport provides a unique vector flow that morphs the input image into the reference image. The mapping from an image to its designated vector flow can be thought of as a nonlinear image transform. Such a transform is invertible and the inverse transform is obtained by applying the inverse of the computed flow to the established template. Thus, the technique can be used to extract information regarding the pixel intensities, as well as their locations (relative to the reference image). The approach has proven useful in a variety of applications [2, 15, 16, 39]. In [15] we have shown that it can be used to design powerful solutions to learning based inverse problems. We've also shown that encoding pixel movements as well as intensities can be highly beneficial for cancer detection from histopathology [25] and cytology [34] images. In addition, given that the transform is invertible, the approach enables visualization of any regression applied in transform space. It thus enables one to visualize variations in texture and shapes [2, 39], as well as to visualize discriminant information by ‘inverting’ classifiers [39].

The transport-based approach outlined above, however, depends on obtaining a unique transport map that encodes an image via minimization of a transport metric. This can be done via linear programming for images that can be considered as discrete measures [38], or via variational minimization for images that can be considered as smooth probability densities [13]. It is thus relatively cumbersome and slow for large images. Moreover, the mathematical analysis of any benefits regarding enhanced classification accuracy is difficult to perform given the underlying (nonlinear) minimization problem.

In this paper we describe a new 2D image transform by combining the standard 2D Radon transform of an image with the 1D Cumulative Distribution Transform (CDT) proposed earlier [29]. As with our earlier work [29, 39], the transform utilizes a reference (or template), but in contrast to our earlier work, it can be computed with a (nonlinear) closed form formula without the need for a numerical minimization method. An added benefit of this framework is that several of its properties (including enhancements in linear separation) can now be shown mathematically. We show theoretically and experimentally, that the newly defined Radon-CDT improves the linear separability of image classes.

We note that, the Radon transform has been extensively used in imaging applications such as Computerized Tomography (CT) and Synthetic Aperture Radar (SAR) [24, 26]. In addition there has been a large body of work on utilizing the Radon transform to design image features that have invariant properties [8, 9, 14]. What differentiates our work from the invariant feature extraction methods that also use the Radon transform is: 1) the Radon-CDT is a nonlinear and invertible image transform that enables any statistical analysis in the transform space to be directly inverted to the image space, and 2) we provide a theorem that guarantees linear separation of certain image classes in the transform space.

In what follows, we start by briefly reviewing the concept of the cumulative distribution transform [29] and the Radon transform. In Section III, we introduce the Radon cumulative transform and enumerate some of its properties. The details for the numerical implementation of our method is presented in IV. In Section V we demonstrate the capability of the Radon-CDT to enhance linear separability of the data classes on synthetic and real-life image datasets. Finally, we conclude our work in Section VI.

II. Preliminaries

We start by reviewing definitions and certain basic properties of the cumulative distribution [29] and Radon transforms.

A. The Cumulative Distribution Transform

The CDT [29] is a bijective nonlinear signal transform from the space of smooth probability densities to the space of differentiable functions. In contrast to linear signal transformation frameworks (e.g. Fourier and Wavelet transforms) which only employ signal intensities at fixed coordinate points, thus adopting an ‘Eulerian’ point of view (in PDE parlance), the idea behind the CDT is to consider the intensity variations together with the locations of the intensity variations in the signal. Therefore, the CDT adopts a ‘Lagrangian’ point of view (in PDE parlance) for analyzing signals.

More formally, let μ and σ be two continuous probability measures on ℝ with corresponding positive densities I and I0, such that ∫ℝ dμ(x) = ∫ℝ I(x)dx = 1 and ∫ℝ dσ(x) = ∫ℝ I0(x)dx = 1. The forward and inverse CDT transform (analysis and synthesis equations) of I with respect to I0 are defined as [29],

| (1) |

where (I0 ∘ f)(x) = I0(f(x)), id : ℝ → ℝ is the identity function, id(x) = x, ∀x∈ ℝ, and f : ℝ → ℝ is a measurable map that satisfies,

| (2) |

which implies that f′(I ∘ f) = I0, where . For continuous and positive probability densities I0 and I, f is a strictly increasing function and is defined uniquely. Note that f morphs the input signal I into the reference signal I0, through f′(I ∘ f) = I0.

In [29] we showed that the CDT can enhance linear separability of signal classes in transform (i.e. feature) space. A simple example to demonstrate the linear separability characteristic of the CDT is as follows. Let I: ℝ → ℝ+ be a signal and let Ĩ be its corresponding representation in the CDT space with respect to a chosen template signal I0 : ℝ →ℝ+. If we consider the CDT transform of the translated signal (see [29] for a derivation), we have that

| (3) |

Now observe that although I(t − τ) is nonlinear in τ, its CDT representation becomes linear in τ. This effect is not limited to translations and is generalized to larger classes of signal transformations. More precisely, let ℂ be a set of measurable maps and let ℙ and ℚ be sets of positive probability density functions born from two positive probability density functions p0 and q0 (mother density functions) as follows,

| (4) |

The sets ℙ and ℚ are linearly separable in the transform space (regardless of the choice of the reference signal I0) if ℂ satisfies the following conditions,

h ∈ ℂ ⇔ h−1 ∈ ℂ

h1, h2 ∈ ℂ ⇒ αh1 + (1 − α) h2 ∈ ℂ, ∀α ∈ [0,1]

h1, h2 ∈ ℂ ⇒ h1(h2), h2(h1) ∈ ℂ

h′(p0 ∘ h) ≠ q0, ∀h ∈ ℂ

Finally, we note that the CDT has well-understood geometric properties. The Euclidean norm of the signal I in the transform space corresponds to the 2-Wasserstein distance between I and I0, which is given by

| (5) |

where f′(I ∘ f) = I0. Note that, using the optimal transportation (OT) parlance, in one-dimensional problems there only exist one strictly increasing transport map f that morphs I into I0 [35] and hence no optimization, over f, is required to calculate the 2-Wasserstein distance.

B. The Radon transform

The Radon transform of an image I : ℝ2 → ℝ+, which we denote by Î = ℛ(I), is defined as:

| (6) |

where t is the perpendicular distance of a line from the origin and θ is the angle between the line and the y-axis as shown in Figure 2. Furthermore, using the Fourier Slice Theorem [24, 27], the inverse Radon transform is defined as, I = ℛ−1(Î),

Fig. 2. Geometry of the line integral associated with the Radon transform.

| (7) |

where w = ℱ−1(|ω|) is the ramp filter, ℱ−1 is the inverse Fourier transform, and Î(.,θ) * w(.) is the one-dimensional convolution with respect to variable t. We will use the following property of the Radon transform in our derivations in the consequent sections,

| (8) |

which implies that for ∀θi, θj ∈ [0, π].

III. The radon-CDT

Here we combine the CDT [29] and the Radon transform to describe the Radon Cumulative Distribution Transform (Radon-CDT). We then derive a few properties of the Radon-CDT, and extend the CDT results [29] on linear separability of classes of one-dimensional signals [29] to classes of images. Before introducing Radon-CDT we first introduce a metric for images, which we call the Radon Cumulative Distribution (RCD) metric.

Let μ and σ be two continuous probability measures on ℝ2 with corresponding positive probability density functions I and I0. Let the sinograms obtained from their respective Radon transforms be,

| (9) |

Using the Radon property shown in Eq.(8), for a fixed angle θ, there exists a unique one-dimensional measure preserving map, f(.,θ) that warps Î(.,θ) into Î0(.,θ) and satisfies the following:

| (10) |

which implies that f′(.,θ)(Î(., θ) ∘ f(., θ)) = Î0(., θ). Using f we define the RCD metric between images I and I0 as,

| (11) |

In Appendix VIII-A we show that satisfies the non-negativity, coincidence axiom, symmetry, and triangle inequality properties and therefore is a metric. We note that the RCD metric as defined above is also known as the sliced Wasserstein metric in the literature and is used in [4, 28] to calculate barycenters of measures for texture mixing applications.

We now define the Radon-CDT. Given an image I and a template image I0, where both images are normalized such that

the forward and inverse Radon-CDT for image I are defined as,

| (12) |

where g(t, θ) = [f−1(t, θ), θ]T, and Dg is the Jacobian of g. In order to avoid any confusion, we emphasize that f−1(f(.,θ),θ) = id, ∀θ ∈ [0, π], and .

Figure 3 shows the process of calculating the Radon-CDT of a sample image I with respect to a template image I0. The sinograms of images are first computed and denoted as Î and Î0. Then for each θ the measure preserving map, f(.,θ), is found to warp the one-dimensional signal Î(., θ) into Î0(., θ). The one-dimensional warping between Î(.,θ*) and Î0 (.,θ*), where θ* is an arbitrary projection angle, is visualized in Figure 3 to demonstrate the process. Finally, the Radon-CDT is obtained from f and Î0.

Fig. 3.

The process of calculating the Radon-CDT transform of image I with respect to the template image I0 .

Similar to the CDT, the Radon-CDT is a nonlinear isomorphic image transform, since for a given template image I0, f in Eq. (10) provides a unique representation of I. Furthermore, the Euclidean norm of image I in the transformed space corresponds to the RCD metric between the image and the reference image,

| (13) |

In addition, the Euclidean distance between two images Ii and Ij in the transformed space is also the RCD metric between these images,

| (14) |

The proof for the equation above is included as part of the proof for the triangle inequality property of the Radon-CDT metric in Appendix VIII-A.

A. Radon-CDT properties

Here we describe a few basic properties of the Radon-CDT, with the main purpose of elucidating certain of its qualities necessary for understanding its ability to linearly separate certain types of two-dimensional densities.

Translation

Let J(x, y) = I(x − x0, y − y0) and let Ĩ be the Radon-CDT of I. The Radon-CDT of J with respect to a reference image I0 is given by,

| (15) |

For a proof, see Appendix VIII-B. Similar to the CDT example in Eq. 3, it can be seen that while I(x − x0,y − y0) is nonlinear with respect to [x0, y0] the presentation of the image in the Radon-CDT, is linear.

Scaling

Let J(x, y) = α2I(αx, αy) with α > 0 and let Ĩ be the Radon-CDT of I. The Radon-CDT of J with respect to a reference image I0 is given by,

| (16) |

For a proof, see Appendix VIII-C. Similar to the translation property, it can be seen that while α2I(αx, αy) is nonlinear with respect to α the corresponding presentation in the Radon-CDT space, , is linear in .

Rotation

Let J(x, y) = I(x cos(ϕ)+y sin(ϕ), −x sin(ϕ) + y cos(θ)) and let Ĩ be the Radon-CDT of I. For a circularly symmetric reference image I0, the Radon-CDT of J is given by,

| (17) |

for a proof, see Appendix VIII-D. Note that unlike translation and scaling, for rotation the transformed image remains nonlinear with respect to ϕ.

B. Linear separability in the Radon-CDT space

In this section we describe how the Radon-CDT can enhance linear separability of image classes. The idea is to show that if a specific ‘generative model’ is utilized for constructing signal classes, the Radon-CDT can be effective in linearly classifying these. Let ℂ be a set of measurable maps, with h ∈ ℂ, and let ℙ and ℚ be sets of normalized images born from two mother images p0 and q0 as follows,

| (18) |

Before proceeding, it is important to note that h must be absolutely continuous in t and θ, so that det(Dh)(p̂0 ∘ h) and det(Dh)(q̂0 ∘ h) remain in the range of the Radon transform [12]. Now, under the signal generative model described above, it can be shown that the sets ℙ and ℚ become linearly separable in the transform space (regardless of the choice of the reference image I0) if ℂ satisfies the following conditions,

h ∈ ℂ ⇔ h−1 ∈ ℂ

h1, h2 ∈ ℂ ⇒ αh1 + (1 − α) h2 ∈ ℂ, ∀α ∈ [0, 1]

h1, h2 ∈ ℂ ⇒ h1(h2), h2(h1) ∈ ℂ

det(Dh)(p̂0 ∘ h) ≠ q̂0, h(t, θ) = [hθ(t), θ]T, ∀hθ ∈ ℂ

The proof is included in Appendix VIII-E.

It is useful to consider a couple of examples to elucidate the meaning of the result above. Consider for example ℂ = {h|h(t, θ) = t + x0 cos(θ) + y0 sin(θ),∀x0, y0 ∈ ℝ } which corresponds to the class of translations in the image space (i.e. translation by [x0, y0]). Such a class of diffeomorphisms satisfies all the conditions named above, and if applied to two mother signals p̂0 and q̂0 would generate signals in image space which are simple translations of p0 and q0 . The classes ℙ and ℚ would therefore not be linearly separable in signal domain. The result above, however, states that these classes are linearly separable in Radon-CDT domain. Another example is the class ℂ = {h|h(t, θ) = βt,∀β ∈ ℝ+} which corresponds to the class of mass preserving scalings in the image space. It is straightforward to show that such class of mass preserving mappings also satisfies the conditions enumerated above. These cases only serve as few examples that represent such classes of mass preserving mappings. An important aspect to the theory presented above is that the linear separation result is independent of the choice of template I0 used in the definition of the transform. Therefore, in theory, linear separability could be achieved utilizing any chosen template.

IV. Numerical implementation

A. Radon transform

A large body of work on numerical implementation of the Radon transform exists in the literature [1]. Here, we use a simple numerical integration approach that utilizes nearest neighbor interpolation of the given images, and summation. In all our experiments we used 180 projections (i.e. θi = (i −1)°, i ∈ [1, …, 180]).

B. Measure preserving map

In this section, we follow a similar computational algorithm as in Park et al. [29] and describe a numerical method to estimate the measure preserving map that warps Î(.,θ) into Î0(.,θ). Let π be the B-spline of degree zero of width r,

| (19) |

and define Π as,

| (20) |

Using the B-spline of degree zero, we approximate the continuous sinograms, Î(., θ) and Î0(., θ), with their corresponding discrete counterparts c and c0 as follows,

| (21) |

Now the goal is to find f(.,θ) such that,

| (22) |

which is equivalent to,

| (23) |

let for L > 1, and define τ0 and τ such that,

| (24) |

for l = 1, …, L + 1, where τ and τ0 are found using the algorithm defined in Park et al. [29]. From the equation above we have that f(τ0[l],θ) = τ[l]. Finally we interpolate f to obtain its values on the regular grid, tk for k = 1,…, K.

C. Computational complexity

The computational complexity of the Radon transform of N × N images at M projection angles is 𝒪(N2M), and the computational cost for finding the mass preserving map, f(t, θ), from a pair of sinograms is 𝒪(MNlog(N)), hence, the overall computational cost of the Radon-CDT is dominated by the computational complexity of the Radon transform, 𝒪(N2M). We also compare our image transform with the Ridgelet transform. The Ridgelet transform can be presented as the composition of the Wavelet transform and the Radon transform. Since the computational complexity of the Wavelet transform is 𝒪(N2), the computational complexity of the Ridgelet transform is also dominated by the computational complexity of the Radon transform, 𝒪(N2M).

V. Results

In this section, we start by demonstrating the invertible and nonlinear nature of the Radon-CDT. We first show that Radon-CDT provides a strong framework for modeling images. Then, we study the ability of the Radon-CDT to enhance linear separability in a variety of pattern recognition tasks. Starting with a simple synthetic example, we explain the idea of linear separation in the Radon-CDT space. Next, we describe the application of Radon-CDT in pattern recognition by demonstrating its capability to simplify data structure on three image datasets. The first dataset is part of the Carnegie Mellon University Face Images database, as described in detail in [33], and it includes frontal images of 40 subjects under neutral and smiling facial expressions. The second dataset contains 500 images of segmented liver nuclei extracted from histology images obtained from the archives of the University of Pittsburgh Medical Center (UPMC). The nuclei belong to 10 different subjects including five cancer patients suffering from fetal-type hepatoblastoma (FHB), with the remaining images from the liver of five healthy individuals [37] (in average 50 nuclei are extracted per subject). The last dataset is part of the LHI dataset of animal faces [31], which includes 159 images of cat faces, 101 images of deer faces, and 116 images of panda faces.

A. The Radon-CDT representation

In the previous sections we demonstrated few properties of the Radon-CDT. Here we show some implications of these properties in image modeling. Let I0 be an arbitrary image and let I(x, y) = I0(x − x0, y − y0) be a translated version of I0. A natural interpolation between these images follows from Iα(x, y) = I0(x − αx0, y − αy0) where α ∈ [0,1]. The linear interpolation between these images in the image space, however, is equal to,

| (25) |

In fact, above equation is also true for any linear image transform. Take the Radon transform for example, where the linear interpolation in the transform space is equal to,

| (26) |

On the other hand, due to its nonlinear nature, this scenario is completely different in the Radon-CDT space. Let I0 be the template image for the Radon-CDT (the following argument holds even if the template is chosen to be different from I0), then from Equation (15) we have Ĩ0(t, θ) = 0 and . The linear interpolation in the Radon-CDT space is then equal to,

| (27) |

which is the natural interpolation between these images and captures the underlaying translation. Figure 4 summarizes the equations presented above and provides a visualization of this effect.

Fig. 4.

A simple linear interpolation between two images in the image space, the Radon transform space (which is a linear transform), and the Radon-CDT space.

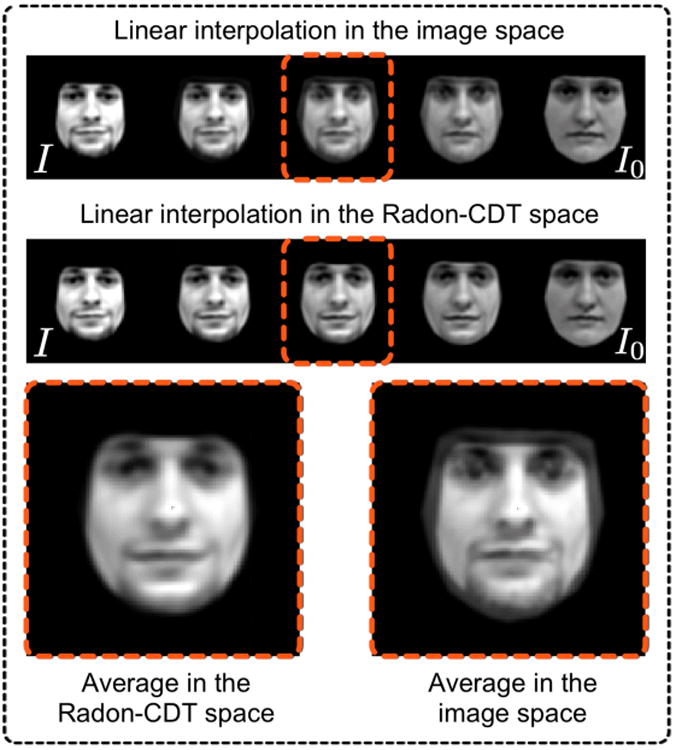

In fact, translation and scaling are not the only effects that are captured by our proposed transform. The Radon-CDT is capable of capturing more complicated variations in the image datasets. In order to demonstrate the modeling (or representation) power of the Radon-CDT we repeat the experiment above on two face images taken from the Carnegie Mellon University Face Images database. Figure 5 shows the interpolated faces in the image space and in the Radon-CDT space. From Figure 5 it can be seen that the nonlinearity of the Radon-CDT enables it to capture variations in a much more efficient way (this will also be demonstrated in the subsequent sections). Note that according to Equation (26) the interpolation in any linear transform space (such as the Radon transform or the Ridgelet transform) leads to the same interpolation in the image space as shown in Figure 5.

Fig. 5.

Interpolation in the image space (or any linear transform space) and in the Radon-CDT space. The corresponding average images in these spaces demonstrate the benefit of modeling images through our proposed nonlinear and invertible transform.

B. Synthetic example

Consider two classes of images ℙ and ℚ which are generated as follows,

| (28) |

Figure 6(a) illustrates these classes of images. Classes ℙ and ℚ are disjoint, however, they are not linearly separable in the image space. This is demonstrated by projecting the image classes onto a linear discriminant subspace, calculated using penalized linear discriminant analysis (pLDA) [36], which is a regularized version of LDA. More precisely, we first prune the image space by discarding dimensions which do not contain data points. This is done using principle component analysis (PCA) and discarding the zero eigenvalues. Then, we calculate the pLDA subspace from the pruned image space. Figure 6(b) shows the projection of the data onto the subspace spanned by the first two pLDA directions.

Fig. 6.

Two example image classes ℙ and ℚ and their corresponding Radon-CDT with respect to the template image I0 (a), and the projection of the data and its transformation onto the pLDA discriminant subspace learned from the image space (top) and the Radon-CDT space (bottom), respectively (b).

Next, we demonstrate the linear separability property of our proposed image transform by calculating the Radon-CDT of classes ℙ and ℚ with respect to an arbitrary image I0 (see Figure 6(a)), and finding the pLDA subspace in the transformed space. Projection of the transformed data, ℙ̃ and ℚ̃, onto the pLDA subspace (as depicted in Figure 6(b)) indicates that the nonlinearity of the data is captured by Radon-CDT and the image classes have become linearly separable in the Radon-CDT space.

C. Pattern recognition in the Radon-CDT space

In this section we investigate the linear separability property of Radon-CDT on real images, where the image classes do not exactly follow the class structures stated in Section III-B. As with the simulated example above, our goal is to demonstrate that the data classes in the Radon-CDT space become more linearly separable. This is done by utilizing linear support vector machine (SVM) classifiers in the image and the Radon-CDT space, and showing that the linear classifiers consistently lead to higher classification accuracy in the Radon-CDT space.

To test our method, we utilized a facial expression dataset [33], a liver nuclei dataset [2], and part of the LHI dataset of animal faces [31]. The facial expression dataset contains two classes of expressions, namely ‘neutral’ and ‘smiling’. The classes in the nuclei dataset are ‘fetal-type hepatoblastoma’ (type of a liver cancer) and ‘benign’ for liver nuclei. The last dataset contains facial images of three different animals, namely cat, deer, and panda under a variety of variations including translation, pose, scale, texture, etc. The animal face dataset is preprocessed by first calculating the image edges using the Canny operator and then filtering the edge-maps of the images with a Gaussian low pass filter.

We compare our Radon-CDT with well-known image transforms such as the Radon transform and the Ridgelet transform [11]. Figure 7 shows sample images from the LHI dataset, the corresponding Radon transform, the Ridgelet transform, and the Radon-CDT of the images. The Radon-CDT and the Ridgelet transform are calculated at discrete projection angles, θ ∈ [0°, 1°,…, 179°], and 3 levels were used for the Ridgelet transform. In addition, Figure 7 depicts the discriminant sub-spaces calculated for this dataset in all transformation spaces. The discriminant subspaces are calculated using the pLDA, as described in Section V-B. It can be clearly seen that the image classes become more linearly separable in the Radon-CDT space.

Fig. 7.

Sample images belonging to each class and their corresponding edge-maps (a), and the projection of the data and its transformations onto the pLDA discriminant subspace learned from the image space, the Radon transform space, the Ridgelet transform space, and the Radon-CDT space (b).

Similarly, sample images from the facial expression dataset, the nuclei dataset, and their corresponding Radon-CDT representation is depicted in Figure 8 (a) and (b). Figure 8, (c) and (d), show the projection of the data and the transformed data onto the top two pLDA directions learned from the data in the image space and in the Radon-CDT space for the face and the nuclei datasets, respectively (the images for the Radon transform and the Ridgelet transform are omitted for the sake of brevity). From both Figures 7 and 8 it can be clearly seen that the Radon-CDT captures the nonlinearity of the data and simplifies the data structure significantly.

Fig. 8.

The image classes and their corresponding Radon-CDT with respect to the template image I0 for the facial expression (a) and the liver nuclei (b) datasets, and the projection of the data and its transformation onto the pLDA discriminant subspace learned from the image space and the Radon-CDT space, for the facial expression (c) and the liver nuclei (d) dataset.

Note that, the eigenvalues of the covariance matrix of the data represent the amount of variations in the data that is captured by the principal components (i.e. its eigenvectors). Figure 9 shows the cumulative percent variance (CPV) captured by the principal components calculated from the image space, the Radon transform space, the Ridgelet transform space, and the Radon-CDT space as a function of the number of principal components for all the datasets. It can be seen that the variations in the datasets are captured more efficiently and with fewer principal components in the Radon-CDT space as compared to the other transformation spaces. This indicates that the data structure becomes simpler in the Radon-CDT space, and the variations in the datasets can be explained with fewer parameters.

Fig. 9.

Percentage variations captured by the principal components in the facial expression dataset (top) and the liver dataset (bottom), in the image space and in the Radon-CDT space.

We used the aforementioned datasets in supervised learning settings. The principal components of the datasets were first calculated and the data points were projected to these principal components (i.e. the dimensions which are not populated by data points were discarded). Next, a ten-fold cross validation scheme was used, in which 90% of the data was used for training and the remaining 10% was used for testing. A linear SVM classifier is learned and cross-validated on the training data and the classification accuracy is calculated on the testing data. The average accuracy, averaged over the accuracies reported in the cross validation, for each dataset is reported in Table I. It can be seen that the linear classification accuracy is not only higher in the Radon-CDT space but also it is more consistent as the standard deviations of the reported accuracies are lower for the Radon-CDT space. We emphasize here that the use of linear SVM over kernel SVM, or any other nonlinear classifier (e.g. K nearest neighbors or random forest classifiers), is intentional. The classification experiments in this section serve as a measure of linear separability of image classes in the corresponding transform spaces and are designed to test our theorem on the linear separability of image classes in the Radon-CDT space. We note that one can utilize any preferred classifier or regressor in the Radon-CDT space.

Table I.

Average classification accuracy for the face dataset (a), the nuclei dataset (b), and the animal face dataset (c), calculated from ten-fold cross validation using linear SVM in the image space, the Radon transform space, the Ridgelet transform space, and the Radon-CDT spaces. The improvements are statistically significant for all datasets.

| Face data | Linear SVM | |

|---|---|---|

| Training accuracy | Testing Accuracy | |

| Image space | 100 | 76.0 ± 11.94 |

| Radon space | 100 | 79.12 ± 12.25 |

| Ridgelet space | 100 | 76.87 ± 13.95 |

| Radon-CDT space | 100 | 82.62 ± 11.5 |

| (a) | ||

| Nuclei data | Linear SVM | |

| Training accuracy | Testing Accuracy | |

| Image space | 100 | 65.2 ± 6.6 |

| Radon space | 100 | 62.56 ± 6.7 |

| Ridgelet space | 100 | 62.92 ± 5.6 |

| Radon-CDT space | 100 | 75.56 ± 6.21 |

| (b) | ||

| Animal Face data | Linear SVM | |

| Training accuracy | Testing Accuracy | |

| Image space | 100 | 46.60 ± 7.71 |

| Radon space | 100 | 47.94 ± 8.15 |

| Ridgelet space | 100 | 69.39 ± 7.07 |

| Radon-CDT space | 100 | 79.42 ± 6.12 |

| (c) | ||

To provide the reader with a reference point for comparing the classification results presented in Table I, we utilized the PCANet framework [7], which is among the state of the art feature extraction methods, and applied it to our datasets. The extracted features from the PCANet are then used for classification. We emphasize that unlike the Radon-CDT, PCANet is not an invertible image transform and it only serves as a nonlinear feature extraction method. For PCANet we used 2 layers with 8 filters (principal patches) learned from the training data at each layer as suggested in [7]. The classification accuracy of PCANet is compared to that of the Radon-CDT in Table II. It is clear the classification accuracies for all datasets are comparable. Here we need to note that changing the structure of the PCANet and fine-tuning it may lead to higher classification accuracies, but we utilized the parameters suggested in Chan et al.[7].

Table II.

Average classification accuracy for the face dataset, the nuclei dataset, and the animal face dataset, calculated from ten-fold cross validation using linear SVM in the PCANet feature space and the Radon-CDT spaces.

| Classification comparison | Linear SVM | |

|---|---|---|

| PCANet | Radon-CDT | |

| Face dataset | 84.12 ± 11.7 | 82.62 ± 11.5 |

| Nuclei dataset | 74.16 ± 4.36 | 75.56 ± 6.21 |

| Animal face dataset | 80.81 ± 5.1 | 79.42 ± 6.12 |

In the experiments presented in this section we used 180 projection angles, θ ∈ [0°, 1°,…, 179°]. Here, a natural question arises regarding the dependency of the classification accuracies with the number of projection angles. To address this question, we experimentally tested the classification accuracies as a function of the number of projection angles. Figure 10 shows the 10-fold cross validated mean accuracies with their corresponding standard deviations as a function of the number of projection angles for all datasets. From Figure 10 it can be noticed that the classification accuracies are stable and there is a slight improvement in the accuracy as the number of projections increases.

Fig. 10.

Classification accuracy in the Radon-CDT space as a function of the number of projection angles.

VI. Summary and discussion

Problems involving classification of image data are pervasive in science and technology. Applications include automating and enhancing cancer detection from microscopic images of cells, person identification from images of irises or faces, mapping and identification of galaxy types from telescope images, and numerous others. The standard processing pipeline in these applications include 1) an image representation step, 2) feature extraction, and 3) statistical learning, though more recently, deep learning architectures have also been employed [3] as an end to end learning approach. Regardless of the learning architecture being used, the image representation step is fundamental given that all feature extraction methods (e.g. SIFT, HOG, Haralick, etc. [21]) require access to a representation model of pixel intensities. Many widely used mathematical image representation methods (e.g. wavelets, short time Fourier transforms, ridgelets, etc.) are linear, and thus, by themselves, are unable to enhance linear class separation in any way. Because linear operations are only able to analyze pixel intensities at fixed locations, they are unable to decode pixel displacements, which we hypothesize are crucial for better modeling intensity variations present in many classes of images.

Here we described a new, non-linear, low-level image transform mean to exploit the hypothesis that analyzing pixel locations, in addition to their intensities, could be useful in problems of telling image classes apart. The new transform, termed the Radon-CDT transform, is derived by combining the 2D Radon transform [27] with the cumulative distribution transform (CDT) [29] described earlier. The transform is invertible as it contains well-defined forward (analysis) and inverse (synthesis) operations. We presented theoretical and experimental evidence towards supporting the hypothesis that the Radon-CDT can enhance the linear separability of certain signal classes. Underlying the theory is a specific generative model for signal classes which is non linear, and generates signals by transporting pixel intensities relative to a ‘mother’ function. The theory and experimental results here add to our understanding in explaining why transport-based approaches have been able to improve the state of the art in certain cancer detection from microscopy images problems [25, 34].

In contrast to our earlier work related to transport-based signal and image analysis [2, 15, 29, 38, 39], the work described here provides a number of important additions and improvements. First, in contrast to our earlier work for 1D signals [29], the work presented here expands the concept of the CDT to 2D signals. In contrast to our earlier work in image analysis [2, 39], the Radon-CDT has a closed form, and hence does not require numerical optimization for computation. Because of this, theoretically analyzing certain of its properties with respect to image translation, scaling, and linear separability, becomes tractable (this analysis is presented in Section III). Finally, because the 2D Radon-CDT is closed form, it is also significantly faster, and simpler to compute.

Using an analogy to the kernel-based methods in machine learning, the Radon-CDT can be described as a kernel embedding space. This connection between the Radon-CDT framework and the kernel methods is fully discussed in a recent work by the authors [17]. In short, we showed in [17] that the kernel methods can be applied to both the image space and the Radon-CDT space, and demonstrated that applying the kernel methods in the Radon-CDT space lead to higher performance compared to applying them to the image space. In addition, as opposed to common kernel-based methods, in the Radon-CDT the transformation to the kernel space is known, and it is invertible at any point. This implies that any statistical analysis in the Radon-CDT space can be ‘inverted’ and presented back into the image space. We also presented theoretical results on the linear separability of data classes in this kernel-space (i.e. the Radon-CDT space) and how they are related to an image generative model which in addition to modifying pixel intensities, also displaces them in relation to a mother (template) image. The model suggests that pixel location information encoded in transport flows represents valuable information for simplifying classification problems. The model also allows one to potentially utilize any known physical information regarding the problem at hand (i.e. are classes expected to include translation, scaling, etc.) in considering whether the Radon-CDT would be an effective tool for solving it.

Finally, we note that, although transport-based methods, by themselves can at times improve upon state of the art methods in certain applications [15, 25, 34], given its ability to simplify recognition tasks, we envision the Radon-CDT to serve as a low level pre-processing tool in classification problems. We note that because the proposed transform is mathematically invertible, it does not involve information loss. Thus, other representation methods such as wavelets, ridgelets, etc., as well as feature extraction methods (e.g. Haralick textures, etc.) can be employed in Radon-CDT space. Future work will involve designing numerically exact digital versions of the image transformation framework presented here, as well as applying combinations of these techniques to numerous estimation and detection problems in image analysis and computer vision.

Acknowledgments

This work was financially supported in part by the National Science Foundation (NSF), grant number 1421502, the National Institutes of Health, grants GM090033, CA188938, and GM103712, and the John and Claire Bertucci Graduate Fellowship.

Biographies

Soheil Kolouri received his B.S. degree in electrical engineering from Sharif University of Technology, Tehran, Iran, in 2010, and his M.S. degree in electrical engineering in 2012 from Colorado State University, Fort Collins, Colorado. He received his doctorate degree in biomedical engineering from Carnegie Mellon University in 2015, were his research was focused on applications of the optimal transport in image modeling, computer vision, and pattern recognition. His thesis, titled, “Transport-based pattern recognition and image modeling”, won the best thesis award from the Biomedical Engineering Department at Carnegie Mellon University.

Serim Park received her B.S. degree in Electrical and Electronic Engineering from Yonsei University, Seoul, Korea in 2011 and is currently a doctoral candidate in the Electrical and Computer Engineering Department at Carnegie Mellon University, Pittsburgh, United States. She is mainly interested in signal processing and machine learning, especially designing new signal and image transforms and developing novel systems for pattern recognition.

Gustavo K. Rohde earned B.S. degrees in physics and mathematics in 1999, and the M.S. degree in electrical engineering in 2001 from Vanderbilt University. He received a doctorate in applied mathematics and scientific computation in 2005 from the University of Maryland. He is currently an associate professor of Biomedical Engineering, and Electrical and Computer Engineering at Carnegie Mellon University.

VIII. Appendix

A. The Radon-CDT metric

Here we show that dRCD(.,.) is indeed a metric as it satisfies,

i. dRCD(I1,I0) ≥0

Proof.

ii. dRCD(I1, I0)=0 ⇔ I1=I0

Proof.

iii. dRCD(I1, I0) = dRCD(I0, I1)

Proof.

where in the second line we used the change of variable u = f(t,θ) and in the fourth line we used .

iv. dRCD(I1, I2) ≤ dRCD(I1, I0) + dRCD(I2, I0)

Proof. let μ, ν, and σ be the continuous probability measures on ℝ2, with corresponding positive probability density functions I1, I2, and I0. Let,

Then we can write

where in the second line we used the change of variables f1(u, θ) = t. Defining fi(ρ) = [fi(t, θ), θ]T for i = 1,2, where ρ = [t, θ]T, above equation can be written as a weighted Euclidean distance with weights Î0(ρ). Therefore we can write,

B. Translation property of Radon-CDT

For J(x, y) = I(x − x0, y − y0) and using the properties of Radon transform we have,

Therefore the Radon-CDT of J can be written as,

where g(t, θ) satisfies,

The left hand side of above equation can be rewritten as,

Where .

C. Scaling property of Radon-CDT

For J(x,y) = α2I(αx,αy) with α > 0 and using the properties of Radon transform we have,

The Radon-CDT of J can be written as,

where g(t, θ) satisfies,

The left hand side of above equation can be rewritten as,

D. Rotation property of Radon-CDT

For J(x, y) = I(x cos(ϕ)+y sin(ϕ),− x sin(ϕ)+y cos(ϕ)) and using the properties of Radon transform we have,

Given a circularly symmetric reference image, the Radon-CDT of J can be written as,

where g(t, θ) satisfies,

The left hand side of above equation can be rewritten as,

E. Linear separability in the Radon-CDT space

Let image classes ℙ and ℚ be generated from Eq. (18). Here we show that the classes are linearly separable in the Radon-CDT space.

Proof. By contradiction we assume that the transformed image classes are not linearly separable,

Where and . Figure 11 shows a diagram which illustrates the interactions between q̂jS, p̂iS, and Î0. It is straightforward to show that and as can also be seen from the diagram in Figure 11. Therefore we can write,

Fig. 11.

The diagram of interactions of the images mass preserving maps.

Defining and , we can rewrite above equation as,

Defining we have,

which implies that , which contradicts with the fourth condition of ℂ.

References

- 1.Averbuch A, Coifman R, Donoho D, Israeli M, Walden J. Fast Slant Stack: A notion of Radon transform for data in a Cartesian grid which is rapidly computible, algebraically exact, geometrically faithful and invertible. Department of Statistics, Stanford University; 2001. [Google Scholar]

- 2.Basu S, Kolouri S, Rohde GK. Detecting and visualizing cell phenotype differences from microscopy images using transport-based morphometry. Proceedings of the National Academy of Sciences. 2014;111(9):3448–3453. doi: 10.1073/pnas.1319779111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bengio Y. Learning deep architectures for AI. Foundations and trends® in Machine Learning. 2009;2(1):1–127. [Google Scholar]

- 4.Bonneel N, Rabin J, Peyré G, Pfister H. Sliced and radon wasserstein barycenters of measures. Journal of Mathematical Imaging and Vision. 2015;51(1):22–45. [Google Scholar]

- 5.Boulgouris NV, Chi ZX. Gait recognition using radon transform and linear discriminant analysis. Image Processing, IEEE Transactions on. 2007;16(3):731–740. doi: 10.1109/tip.2007.891157. [DOI] [PubMed] [Google Scholar]

- 6.Bruna J, Mallat S. Invariant scattering convolution networks. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2013;35(8):1872–1886. doi: 10.1109/TPAMI.2012.230. [DOI] [PubMed] [Google Scholar]

- 7.Chan TH, Jia K, Gao S, Lu J, Zeng Z, Ma Y. PCANet: a simple deep learning baseline for image classification? arXiv preprint arXiv:1404.3606. 2014 doi: 10.1109/TIP.2015.2475625. [DOI] [PubMed] [Google Scholar]

- 8.Dahyot R. Statistical hough transform. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2009;31(8):1502–1509. doi: 10.1109/TPAMI.2008.288. [DOI] [PubMed] [Google Scholar]

- 9.Dahyot R, Ruttle J. Generalised relaxed radon transform (gr 2 t) for robust inference. Pattern Recognition. 2013;46(3):788–794. [Google Scholar]

- 10.Do MN, Vetterli M. Wavelet-based texture retrieval using generalized gaussian density and kullback-leibler distance. Image Processing, IEEE Transactions on. 2002;11(2):146–158. doi: 10.1109/83.982822. [DOI] [PubMed] [Google Scholar]

- 11.Do MN, Vetterli M. The finite ridgelet transform for image representation. Image Processing, IEEE Transactions on. 2003;12(1):16–28. doi: 10.1109/TIP.2002.806252. [DOI] [PubMed] [Google Scholar]

- 12.Gelfand IM, Graev MI, Vilenkin NY. Generalized functions Vol 5, Integral geometry and representation theory. Academic Press; 1966. [Google Scholar]

- 13.Haker S, Zhu L, Tannenbaum A, Angenent S. Optimal mass transport for registration and warping. International Journal of Computer Vision. 2004;60(3):225–240. [Google Scholar]

- 14.Jafari-Khouzani K, Soltanian-Zadeh H. Radon transform orientation estimation for rotation invariant texture analysis. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2005;27(6):1004–1008. doi: 10.1109/TPAMI.2005.126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kolouri S, Rohde GK. Transport-based single frame super resolution of very low resolution face images; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2015. pp. 4876–4884. [Google Scholar]

- 16.Kolouri S, Tosun AB, Ozolek JA, Rohde GK. A continuous linear optimal transport approach for pattern analysis in image datasets. Pattern Recognition. 2015 doi: 10.1016/j.patcog.2015.09.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Kolouri S, Zou Y, Rohde GK. Sliced wasserstein kernels for probability distributions. arXiv preprint. 2015 [Google Scholar]

- 18.Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems. 2012:1097–1105. [Google Scholar]

- 19.LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE. 1998;86(11):2278–2324. [Google Scholar]

- 20.Li W, Zhang D, Xu Z. Palmprint identification by fourier transform. International Journal of Pattern Recognition and Artificial Intelligence. 2002;16(04):417–432. [Google Scholar]

- 21.Li Y, Wang S, Tian Q, Ding X. Feature representation for statistical-learning-based object detection: A review. Pattern Recognition. 2015 [Google Scholar]

- 22.Mandal T, Majumdar A, Wu QJ. Image Analysis and Recognition. Springer; 2007. Face recognition by curvelet based feature extraction; pp. 806–817. [Google Scholar]

- 23.Monro DM, Rakshit S, Zhang D. DCT-based iris recognition. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2007;29(4):586–595. doi: 10.1109/TPAMI.2007.1002. [DOI] [PubMed] [Google Scholar]

- 24.Natterer F. The mathematics of computerized tomography. Vol. 32. Siam; 1986. [Google Scholar]

- 25.Ozolek JA, Tosun AB, Wang W, Chen C, Kolouri S, Basu S, Huang H, Rohde GK. Accurate diagnosis of thyroid follicular lesions from nuclear morphology using supervised learning. Medical image analysis. 2014;18(5):772–780. doi: 10.1016/j.media.2014.04.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Patel VM, Easley GR, Healy DM, Jr, Chellappa R. Compressed synthetic aperture radar. Selected Topics in Signal Processing, IEEE Journal of. 2010;4(2):244–254. [Google Scholar]

- 27.Quinto ET. An introduction to X-ray tomography and radon transforms. Proceedings of symposia in Applied Mathematics. 2006;63:1. [Google Scholar]

- 28.Rabin J, Peyré G, Delon J, Bernot M. Scale Space and Variational Methods in Computer Vision. Springer; 2012. Wasserstein barycenter and its application to texture mixing; pp. 435–446. [Google Scholar]

- 29.Rim Park S, Kolouri S, Kundu S, Rohde G. The Cumulative Distribution Transform and Linear Pattern Classification. ArXiv e-prints 1507.05936. 2015 Jul; [Google Scholar]

- 30.Shao L, Liu L, Li X. Feature learning for image classification via multiobjective genetic programming. Neural Networks and Learning Systems, IEEE Transactions on. 2014;25(7):1359–1371. [Google Scholar]

- 31.Si Z, Zhu SC. Learning hybrid image templates (hit) by information projection. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2012;34(7):1354–1367. doi: 10.1109/TPAMI.2011.227. [DOI] [PubMed] [Google Scholar]

- 32.Sifre L, Mallat S. Computer Vision and Pattern Recognition (CVPR), 2013 IEEE Conference on. IEEE; 2013. Rotation, scaling and deformation invariant scattering for texture discrimination; pp. 1233–1240. [Google Scholar]

- 33.Stegmann MB, Ersbll B, Larsen R. FAME-a flexible appearance modeling environment. Medical Imaging, IEEE Transactions on. 2003;22(10):1319–1331. doi: 10.1109/tmi.2003.817780. [DOI] [PubMed] [Google Scholar]

- 34.Tosun AB, Yergiyev O, Kolouri S, Silverman JF, Rohde GK. Detection of malignant mesothelioma using nuclear structure of mesothelial cells in effusion cytology specimens. Cytometry Part A. 2015 doi: 10.1002/cyto.a.22602. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Villani C. Optimal transport: old and new. Vol. 338. Springer Science & Business Media; 2008. [Google Scholar]

- 36.Wang W, Mo Y, Ozolek JA, Rohde GK. Penalized fisher discriminant analysis and its application to image-based morphometry. Pattern recognition letters. 2011;32(15):2128–2135. doi: 10.1016/j.patrec.2011.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Wang W, Ozolek JA, Rohde GK. Detection and classification of thyroid follicular lesions based on nuclear structure from histopathology images. Cytometry Part A. 2010;77(5):485–494. doi: 10.1002/cyto.a.20853. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Wang W, Ozolek JA, Slepcev D, Lee AB, Chen C, Rohde GK. An optimal transportation approach for nuclear structure-based pathology. Medical Imaging, IEEE Transactions on. 2011;30(3):621–631. doi: 10.1109/TMI.2010.2089693. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Wang W, Slepcev D, Basu S, Ozolek JA, Rohde GK. A linear optimal transportation framework for quantifying and visualizing variations in sets of images. International journal of computer vision. 2013;101(2):254–269. doi: 10.1007/s11263-012-0566-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Zhang L, Zhen X, Shao L. Learning object-to-class kernels for scene classification. Image Processing, IEEE Transactions on. 2014;23(8):3241–3253. doi: 10.1109/TIP.2014.2328894. [DOI] [PubMed] [Google Scholar]

- 41.Zhu F, Shao L. Weakly-supervised cross-domain dictionary learning for visual recognition. International Journal of Computer Vision. 2014;109(1-2):42–59. [Google Scholar]