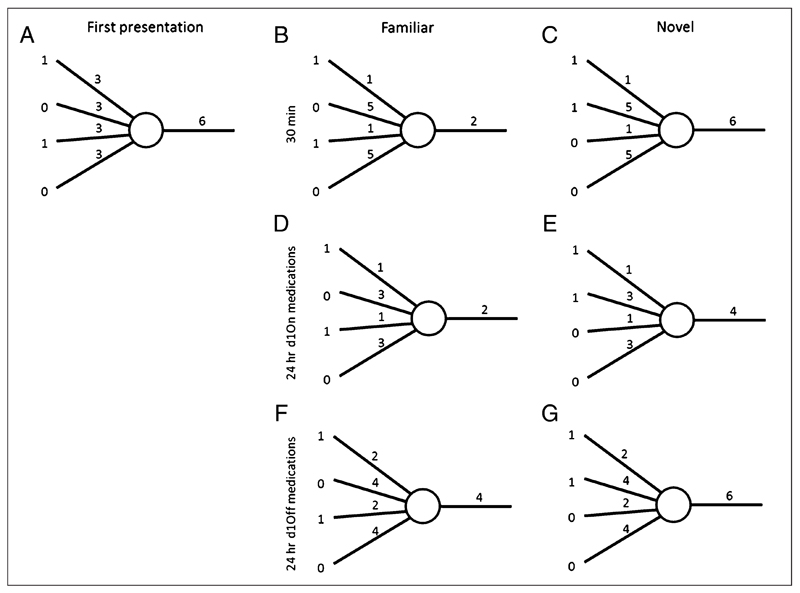

Figure 5.

Anti-Hebbian model with one novelty neuron. The numbers on the left show the activity of the inputs (for simplicity, only four are shown), the numbers over the four connections indicate their synaptic weights, and the number on the right is the neuron's activity level. (A) A novel input pattern is presented to the neuron, which outputs an activity of 6. Anti-Hebbian learning then takes place, decreasing the weights of the active connections and increasing the weights of the inactive connections to balance overall excitation, which leads to the weights shown in B. (B) The same pattern (now familiar) is presented again, this time eliciting a lower output activity. (C) Presenting a new novel pattern returns the same output as the original novel pattern, because the increased weights of the inactive connections balance the excitation. (D) In simulated PD patients on dopamine during learning, the weight increases on the inactive connections decay, but the weights of the activated connections remain decreased, so the output for the familiar pattern remains the same. (E) Presenting the novel pattern from C would now elicit a lowered output, meaning it is more likely to be accepted as familiar. (F) In simulated PD patients off their medication during learning, both the increased and the decreased weights decay, leading to higher activity for familiar stimuli and (G) regular activity for novel patterns, corresponding to an increased response bias.
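The panel sequence can be traced numerically with a minimal sketch. Everything concrete here is an assumption made for illustration rather than a value read from the figure: the baseline weights of 3, the learning step of 1, the decay rate of 0.5, the two binary patterns (chosen so that the second shares half of its active inputs with the first), and the helper names `activity`, `anti_hebbian_update`, and `decay`. The baseline is chosen only so that the first novel pattern yields an output of 6, as in panel A.

```python
def activity(weights, pattern):
    """Neuron output: weighted sum of the binary inputs."""
    return sum(w * x for w, x in zip(weights, pattern))

def anti_hebbian_update(weights, pattern, step=1.0):
    """Lower active weights by `step`; raise inactive weights so the
    total weight (overall excitation) stays constant."""
    n_active = sum(pattern)
    n_inactive = len(pattern) - n_active
    gain = step * n_active / n_inactive if n_inactive else 0.0
    return [w - step if x else w + gain for w, x in zip(weights, pattern)]

def decay(learned, baseline, pattern, rate, decay_decreases):
    """Move learned weights back toward baseline by `rate`.
    Weight increases (inactive inputs) always decay; weight decreases
    (active inputs) decay only if `decay_decreases` is True."""
    out = []
    for w, w0, x in zip(learned, baseline, pattern):
        if x and not decay_decreases:
            out.append(w)  # decrease is preserved
        else:
            out.append(w + rate * (w0 - w))
    return out

# Hypothetical values, not taken from the figure.
baseline = [3.0, 3.0, 3.0, 3.0]
first = [1, 1, 0, 0]    # pattern shown in A and B
second = [1, 0, 1, 0]   # new novel pattern shown in C, E, G

learned = anti_hebbian_update(baseline, first)                      # weights in B
on_meds = decay(learned, baseline, first, 0.5, decay_decreases=False)   # D
off_meds = decay(learned, baseline, first, 0.5, decay_decreases=True)   # F

print("A novel:", activity(baseline, first))             # 6.0
print("B familiar:", activity(learned, first))           # 4.0
print("C new novel:", activity(learned, second))         # 6.0
print("D familiar, on:", activity(on_meds, first))       # 4.0
print("E new novel, on:", activity(on_meds, second))     # 5.5
print("F familiar, off:", activity(off_meds, first))     # 5.0
print("G new novel, off:", activity(off_meds, second))   # 6.0
```

Under these assumptions the sketch reproduces the qualitative pattern described in the caption: on medication the familiar output is unchanged while the novel output drops, and off medication the familiar output rises while the novel output stays at its original level.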