Abstract

Objectives

Programmes to address chronic disease are a focus of governments worldwide. Despite growth in ‘implementation science’, there is a paucity of knowledge regarding the best means to measure sustainability. The aim of this review was to summarise current practice for measuring sustainability outcomes of chronic disease health programmes, providing guidance for programme planners and future directions for the academic field.

Settings

A scoping review of the literature spanning 1985–2015 was conducted using MEDLINE, CINAHL, PsychINFO and The Cochrane Library, limited to English-language studies of adults. Main search terms included chronic disease, acute care, sustainability, institutionalisation and health planning. Because the included studies were heterogeneous, a descriptive synthesis was undertaken. Settings included primary care, hospitals, mental health centres and community health.

Participants

Programmes included preventing or managing chronic conditions including diabetes, heart disease, depression, respiratory disease, cancer, obesity, dental hygiene and multiple chronic diseases.

Primary and secondary outcome measures

Outcome measures included clarifying a sustainability definition, types of methodologies used, timelines for assessment, criteria levels to determine outcomes and how methodology varies between intervention types.

Results

Of 153 abstracts screened, 87 were retained for full-text review and 42 were included in the qualitative synthesis. Five definitions of sustainability outcome were identified, with ‘maintenance of programme activities’ the most frequent. Achieving sustainability depended on the inter-relationships between various organisational and social contexts, which supports a broad scale approach to evaluation. We found an increasing trend towards mixed methods designs with assessment at multiple time points to determine sustainability outcomes.

Conclusions

Despite the importance of, and investment in, chronic disease programmes, few studies have measured sustainability. Methods to evaluate sustainability are diverse, although some emerging patterns in measurement were found. Use of mixed methods approaches over multiple time points may better guide measurement of sustainability. Consensus on aspects of standardised measurement would make future meta-analytic syntheses possible.

Strengths and limitations of this study.

Eligible publications were systematically identified and abstracted by more than one author, supplemented by manual reference searching and forward citation tracking, and classified using an appropriate quality assessment tool.

The scoping method of this review has allowed us to map the heterogeneous body of literature in this field.

The scoping method has also allowed us to include a wider range of the study designs and methodologies currently used in the chronic disease field, allowing rich and in-depth discussion.

The heterogeneous nature of the literature precludes a meta-analytic review, making the results less generalisable.

Because the systematic method applied in this scoping review did not cover the grey literature, some relevant reports may have been missed.

Background

The continuation of an effective health programme, beyond the initial implementation phase, is paramount to maintaining better outcomes for patients with chronic disease. There exists a paucity of knowledge regarding the best means to ensure sustainability of chronic disease health programmes.1 The over-riding challenge is to sustain health programmes after initial programme support has been removed or has expired. However, research providing evidence for effective sustainability strategies for health programmes is underdeveloped,2 and clear recommendations to promote sustainability are limited.3

Measurement of sustainability in the chronic disease field presents a challenge due to the scope of assessments utilised, interventions provided and the heterogeneity between and within illnesses.4 In addition, comorbidities are frequent for people with chronic disease and the subsequent interactions between diseases and treatments provide further challenges for measurement.5 Thus, the interventions developed and implemented in the chronic disease field are complex.

As such, it has recently been suggested that a multifaceted approach to measuring sustainability is required to determine outcomes.6 7 The outcomes of interest depend on the aims of individual researchers and may range from sustained health outcomes to continuation of programme activities. For example, Rowley et al8 evaluated the effectiveness of a new programme to prevent obesity, diabetes and heart disease in a remote indigenous community; the outcomes used to determine sustainability were therefore specific health measures (body mass index and impaired glucose tolerance).8 In contrast, Brand et al9 evaluated adherence to clinical practice guidelines for chronic obstructive pulmonary disease. Because the guidelines were evidence based and adherence to them had already been shown to improve health outcomes, the authors used adherence itself, rather than health outcomes, as the measure of sustainability.9 A different approach has been to determine the level of community ownership of the programme. Koskan et al10 found that participants in the evaluation of an obesity prevention programme viewed sustainability as increased community ownership of the programme with less support from outside organisations. These examples illustrate that sustainability outcomes often move beyond longitudinal programme outcomes and are reframed to include spread of the programme and community ownership. In this paper, sustainability of chronic disease health programmes focuses on programme processes as opposed to health outcomes.

Sustainability is a multidimensional concept, encompassing diverse forms along a continuation process, with indicators of success that fall into distinct categories.7 These include: (1) maintenance of the health benefits achieved through a programme; (2) maintenance of the core activities central to the programme; and (3) continued capacity of the community to build and deliver health programmes (the extent to which community members are educated and can access programme resources).7 Scheirer and Dearing6 have extended this list to include: (1) programme diffusion (when the underlying concepts or innovations spread to new locations);6 (2) maintaining new organisational practices, procedures and policies;6 and (3) maintaining attention to the issues addressed by the programme.11 Many variables therefore need to be considered and clearly defined in studies investigating sustainability, and planning for, or measuring, sustainability must be included in the programme development stages.

It is acknowledged that it is not always necessary to sustain all original programme activities.1 7 12 Accordingly, Greenhalgh et al13 contend that drawing narratives from multiple interacting processes, a more complex approach than simply measuring relationships between a set of dependent and independent variables, can offer unique and in-depth insights into sustainability outcomes.14 In sustainability research, whether an approach is intervention focused or assesses sustainability as part of a complex system has implications for how research in this field is conducted, and this choice is influenced by the health discipline.15 Several authors have proposed frameworks for programme sustainability assessment to enable some standardisation and guidance within different disciplines.16–19

A recent review of empirical studies of health programme sustainability found that 40–60% of programmes continued in some form; however, the study designs were weak.20 For example, key variables and definitions were unclear.20 More evidence and clearer recommendations are therefore needed. A current understanding of the research base is required to provide evidence-based recommendations and facilitate well-informed decision-making on programme sustainability methods in the health field. Without clear evidence, successfully sustaining health programmes may continue to be a challenge.14

Identifying the research question

Chronic diseases, in particular cardiovascular disease and diabetes, are a growing concern to governments in developed and developing countries because they are a major source of health loss in society.21 To date, information on how best to sustain chronic disease prevention and management programmes, and how to measure their sustainability outcomes, has been scarce. The primary aim of this paper was to review the current literature describing the sustainability of chronic disease programmes. The second aim was to summarise the empirical methods used to measure sustainability of chronic disease health programmes. Health programmes for chronic disease management in hospital, primary care and community health settings were the primary focus. For this review, outcomes measured to determine whether a programme has been sustained are referred to as the ‘sustainability outcome’. The research questions for this scoping review were:

How are sustainability outcomes of health programmes defined for measurement in the field of chronic disease?

What methodologies are used to measure the defined sustainability outcomes including the types of study designs?

What is the typical timeline for assessing the sustainability of a programme? This is designed to enable a better understanding of when the sustainability phase generally begins.

What criteria levels are set to determine whether sustainability has been achieved, based on the sustainability outcome, and are these predefined?

How does the methodology vary between intervention types? This question was designed to explore the relationship between the nature of the programme itself and type of study methodology used.

Methods

Ethics and dissemination

This is a scoping review of the literature; formal ethics approval was therefore not required, as no patient data were collected and no meta-analysis was conducted.

We conducted a scoping literature review. The aim of a scoping review is to map the existing literature in a given field when the topic is heterogeneous and has not been extensively reviewed.22 We adopted this approach because it allowed us to provide an overview of the diverse body of sustainability literature, including the wide range of study designs and methodologies currently used in the chronic disease field.

To clarify the focus of this scoping review, the outcomes of interest need to be specified.23 In this review, a sustainability outcome was therefore defined as the long-term survival of programme activities, health benefits or the continued capacity of organisations to deliver and adapt programme activities.7

Identifying relevant studies

An electronic database search was conducted using MEDLINE, CINAHL, PsychINFO and The Cochrane Library, covering 1 January 1985 to 1 June 2015, with MeSH headings and free-text terms combined using Boolean operators (table 1). For each database, one of the key MeSH headings was combined with each of the key words (eg, Programme evaluation with chronic disease, acute care, continuation, institutionalisation, maintenance and programme development). The reference lists of included papers were also manually searched to identify potentially relevant studies not found in the electronic search, and forward citation tracking24 was used to identify additional papers.
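The pairing described above, one MeSH heading crossed with each key word, can be illustrated with a short Python sketch. This is an illustration under stated assumptions, not the authors' actual search strings: the terms are reproduced from table 1 (their spelling may not match official MeSH entry terms), the PubMed-style field tags `[mh]` (MeSH terms) and `[tiab]` (title/abstract) are used only as an example, and other interfaces such as CINAHL or PsychINFO use different syntax. The helper name `build_queries` is hypothetical.

```python
from itertools import product

# Terms as listed in table 1 (spelling reproduced from the table).
MESH_HEADINGS = [
    "Program sustainability",
    "Program evaluation",
    "Diffusion of innovation",
    "Organisational innovation",
]
KEY_WORDS = [
    "chronic disease",
    "acute care",
    "continuation",
    "institutionalisation",
    "maintenance",
    "program development",
]


def build_queries(mesh_headings, key_words):
    """Hypothetical helper: pair every MeSH heading with every key word using AND.

    The [mh] and [tiab] field tags follow PubMed conventions and are
    illustrative only; each database interface has its own syntax.
    """
    return [
        f'"{heading}"[mh] AND "{word}"[tiab]'
        for heading, word in product(mesh_headings, key_words)
    ]


if __name__ == "__main__":
    queries = build_queries(MESH_HEADINGS, KEY_WORDS)
    print(len(queries))  # 4 headings x 6 key words = 24
    print(queries[0])
```

Crossing the two lists with `itertools.product` makes explicit that the number of searches per database grows multiplicatively with the number of terms.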

Table 1.

Electronic database key word terms and search engines

| MeSH terms | Key words | Search engines |
|---|---|---|
| Program sustainability; Program evaluation; Diffusion of innovation; Organisational innovation | Chronic disease; Acute care; Continuation; Institutionalisation; Maintenance; Program development | MEDLINE, 1985 to 1 February 2013 |
| Program sustainability; Program evaluation; Diffusion of innovation; Organisational innovation | Chronic disease; Acute care; Continuation; Institutionalisation; Maintenance; Program development | Scopus, 1985 to 1 July 2013 |
| Program sustainability; Program evaluation; Diffusion of innovation; Organisational innovation | Chronic disease; Acute care; Continuation; Institutionalisation; Maintenance; Program development | Cumulative Index to Nursing and Allied Health (CINAHL), 1985 to 1 July 2013 |
| Program sustainability; Program evaluation; Diffusion of innovation; Organisational innovation | Chronic disease; Acute care; Continuation; Institutionalisation; Maintenance; Program development | PsychINFO, 1985 to 1 July 2013 |
| Maintenance; Health planning; Program development | | The Cochrane Library |

Study selection

Each potentially eligible publication was independently assessed for inclusion and quality. LF performed the initial review of publications using the following inclusion criteria: (1) studies focused on health programme sustainability, including the measures used to determine the sustainability outcome and the reported factors, facilitators and barriers to sustainability; (2) no preselected criteria for assessment time periods were applied; (3) settings included primary care, hospitals, mental health centres and community health; (4) randomised controlled, controlled or descriptive studies with retrospectively or prospectively collected data were included; and (5) multicase and single-case studies without original data were included.

The following exclusion criteria were applied: (1) studies limited to implementation processes only; (2) programmes not related to chronic illness; (3) studies that did not specify clear measures for assessing programme sustainability; (4) studies whose stated measures did not match the main study findings; and (5) opinion pieces and conceptual studies. The co-authors assessed the filtered abstracts or full articles against these criteria.
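The exclusion criteria above act as a simple screening rule: a study is excluded if any one criterion applies. The following Python sketch is illustrative only and is not a tool the authors describe using; the record type `ScreeningRecord` and the function `exclusion_reasons` are hypothetical names, with one boolean field per criterion. An empty list of reasons means the study passes this stage.

```python
from dataclasses import dataclass


@dataclass
class ScreeningRecord:
    """Hypothetical screening record: one field per exclusion criterion."""
    study_id: str
    implementation_only: bool  # criterion 1: excluded if True
    chronic_illness_programme: bool  # criterion 2: excluded if False
    clear_sustainability_measures: bool  # criterion 3: excluded if False
    measures_match_findings: bool  # criterion 4: excluded if False
    opinion_or_conceptual: bool  # criterion 5: excluded if True


def exclusion_reasons(record: ScreeningRecord) -> list[str]:
    """Return every exclusion criterion the record meets (empty list = retained)."""
    reasons = []
    if record.implementation_only:
        reasons.append("limited to implementation processes")
    if not record.chronic_illness_programme:
        reasons.append("not related to chronic illness")
    if not record.clear_sustainability_measures:
        reasons.append("no clear sustainability measures")
    if not record.measures_match_findings:
        reasons.append("stated measures do not match findings")
    if record.opinion_or_conceptual:
        reasons.append("opinion piece or conceptual study")
    return reasons


if __name__ == "__main__":
    # Made-up example record, not a study from this review.
    example = ScreeningRecord(
        study_id="example-1",
        implementation_only=False,
        chronic_illness_programme=True,
        clear_sustainability_measures=False,
        measures_match_findings=True,
        opinion_or_conceptual=False,
    )
    print(exclusion_reasons(example))
```

Returning every matching reason, rather than stopping at the first, mirrors how exclusion reasons are usually tallied in a flow diagram.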

After the initial review, the methodological quality of the studies was assessed by evaluating the design, methods, baseline data, interventions, assignment methods, statistical methods and explanation of results using the Transparent Reporting of Evaluations with Non-randomised Designs (TREND) guidelines.25 Additional items from the Consolidated Standards of Reporting Trials (CONSORT) guidelines were applied to the single RCT included.26 For example, studies were excluded if there was insufficient information on the study sample, statement of objectives, methods of recruitment, unit of delivery, methods used to collect data, statement of results for stated primary and secondary outcomes, or a clear discussion.

Charting the available evidence

An Excel-based (Microsoft Office version 14.2.1) extraction checklist was designed and used to extract and check data against the following descriptors: information on target population, eligibility criteria and methods of participant recruitment, details of intervention delivery, statement of specific objectives and hypotheses, methods used to collect data, sample size, assignment methods, unit of analysis, relevant results, theoretical relevance and generalisability. Because the broad nature of the included studies precluded meta-analysis, a descriptive synthesis is provided. One study included in the analysis was a randomised controlled trial (RCT), which was evaluated using the CONSORT guidelines.27 Additional items were added to the Excel extraction checklist for this study, including protocol and registration, eligibility criteria, risk of bias, study selection and summary measures.26

Defined sustainability outcomes were extracted from the studies and categorised according to six intervention-based indicators from the literature: (1) maintenance of the health benefits achieved through a programme; (2) maintenance of the core activities central to the programme; (3) continued capacity of the community to build and deliver health programmes;7 (4) programme diffusion;6 (5) maintaining new organisational practices, procedures and policies;6 and (6) maintaining attention to the issues addressed by the programme.11 These indicators were selected because they cover a diverse range of possible outcomes that fit the broad definition of sustainability outcomes chosen for this review. The indicators were adapted from Shediac-Rizkallah and Bone7 and Scheirer and Dearing,6 who have shaped different understandings of the concepts of sustainability and have emphasised that a multifaceted approach to measuring sustainability is now required to determine outcomes. These indicators therefore provided the framework for describing the sustainability outcomes reported in the included studies.

Each included study was also categorised by intervention type according to Scheirer's19 definitions. Six intervention type categories were used: (1) interventions implemented by individual providers, (2) interventions requiring coordination by multiple staff, (3) new policies, procedures and technologies, (4) capacity or infrastructure building, (5) collaborative partnerships and (6) broad scale system change.19
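The coding scheme described above can be represented as two fixed category sets, with each study assigned one or more categories. The Python sketch below is a hypothetical representation (the enum and function names are ours, not from the review). It codes the intervention types that table 2 records for the first three studies and shows that, because a single study may span several types, the category counts can exceed the number of studies.

```python
from collections import Counter
from enum import Enum


class Indicator(Enum):
    """Six sustainability outcome indicators (after Shediac-Rizkallah and Bone; Scheirer and Dearing)."""
    HEALTH_BENEFITS = 1
    CORE_ACTIVITIES = 2
    COMMUNITY_CAPACITY = 3
    PROGRAMME_DIFFUSION = 4
    ORGANISATIONAL_PRACTICES = 5
    ATTENTION_TO_ISSUES = 6


class InterventionType(Enum):
    """Six intervention types (after Scheirer)."""
    INDIVIDUAL_PROVIDERS = 1
    MULTIPLE_STAFF = 2
    POLICIES_PROCEDURES_TECHNOLOGIES = 3
    CAPACITY_BUILDING = 4
    COLLABORATIVE_PARTNERSHIPS = 5
    BROAD_SCALE_SYSTEM_CHANGE = 6


def tally(coded_studies):
    """Count the studies assigned to each category (each study counted once per category)."""
    counts = Counter()
    for categories in coded_studies.values():
        counts.update(set(categories))
    return counts


# Intervention types for studies 1-3 as recorded in table 2.
CODED = {
    "Aitaoto28": [
        InterventionType.CAPACITY_BUILDING,
        InterventionType.BROAD_SCALE_SYSTEM_CHANGE,
    ],
    "Ament43": [
        InterventionType.MULTIPLE_STAFF,
        InterventionType.POLICIES_PROCEDURES_TECHNOLOGIES,
    ],
    "Barnett44": [
        InterventionType.MULTIPLE_STAFF,
        InterventionType.POLICIES_PROCEDURES_TECHNOLOGIES,
        InterventionType.BROAD_SCALE_SYSTEM_CHANGE,
    ],
}

if __name__ == "__main__":
    for category, n in tally(CODED).items():
        print(category.name, n)
```

Converting each study's list to a set before counting guards against a category being counted twice for the same study.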

Collating, summarising and reporting the results

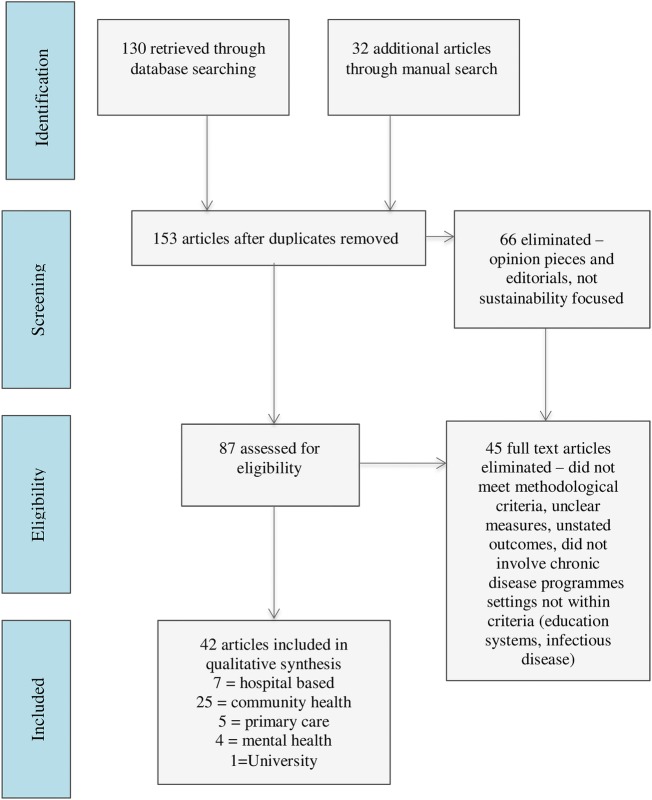

Database searching yielded 130 studies for screening (figure 1), and 32 additional articles were identified through manual searching and forward citation tracking. After duplicates were removed, 153 articles were screened. Sixty-six were excluded based on the eligibility criteria, leaving 87 for full-text review. A further 45 papers were excluded after full-text review because they did not conform to the criteria for chronic disease programme settings (eg, specialist infectious disease centres), did not meet methodological criteria because they included non-health-based programmes (eg, programmes based in the education system or in public safety settings such as housing and crime), or did not state clear sustainability outcomes or related factors or determinants of sustainability. This left 42 articles meeting the review criteria.

Figure 1.

Literature search flow diagram.
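The counts reported for the search and screening stages can be checked for internal consistency with simple arithmetic. The sketch below uses a hypothetical helper, `screening_flow`, which is not part of the original analysis; it derives the number of duplicates, full-text reviews and included studies from the reported figures.

```python
def screening_flow(from_databases, from_other_sources, screened,
                   excluded_at_screening, excluded_at_full_text):
    """Derive the remaining stages of a literature search flow diagram."""
    identified = from_databases + from_other_sources
    full_text_reviewed = screened - excluded_at_screening
    return {
        "identified": identified,
        "duplicates_removed": identified - screened,
        "full_text_reviewed": full_text_reviewed,
        "included": full_text_reviewed - excluded_at_full_text,
    }


if __name__ == "__main__":
    # Figures as reported in the text: 130 + 32 identified, 153 screened,
    # 66 excluded at screening, 45 excluded after full-text review.
    print(screening_flow(130, 32, 153, 66, 45))
```

With the reported figures this gives 162 records identified, 9 duplicates removed, 87 full-text reviews and 42 included studies, consistent with the abstract.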

The 42 eligible studies were focused on programmes for preventing or managing chronic conditions including diabetes (n=5), heart disease (n=4), depression (n=8), respiratory disease (n=3), cancer (n=3), obesity (n=5), dental hygiene (n=1), aged care (n=4) and multiple chronic diseases across primary care and/or remote settings (n=9). The qualitative synthesis of the included studies is presented according to each of the specific research questions as outlined below.
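As a quick check, the condition counts above sum to the 42 included studies. The short Python tally below is illustrative only (the names `COUNTS` and `shares` are ours); it also gives each category's share of the included studies, rounded to one decimal place.

```python
# Study counts per condition, as reported in the text.
COUNTS = {
    "diabetes": 5,
    "heart disease": 4,
    "depression": 8,
    "respiratory disease": 3,
    "cancer": 3,
    "obesity": 5,
    "dental hygiene": 1,
    "aged care": 4,
    "multiple chronic diseases": 9,
}


def shares(counts):
    """Return the total and each category's percentage of that total."""
    total = sum(counts.values())
    return total, {k: round(100 * v / total, 1) for k, v in counts.items()}


if __name__ == "__main__":
    total, pct = shares(COUNTS)
    print(total)  # 42
    for condition, p in sorted(pct.items(), key=lambda item: -item[1]):
        print(f"{condition}: {p}%")
```

Multiple chronic diseases (21.4%) and depression (19.0%) are the two largest categories.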

Defined sustainability outcomes

The defined outcomes are listed in table 2. Maintenance of programme activities was the defined outcome for measurement in the majority of studies (n=37), in which specific programme components were identified as the measure of the sustainability outcome. These components included: continued adherence to evidence-based recommendations,9 13 28–37 use of programme-specific tools,38 patient referrals,31 39 regular measures of clinical indicators,40 41 and direct outcomes from involvement in programme activities, such as the percentage of people attempting dietary change in the Rowley et al8 study.

Table 2.

Defined outcomes, intervention type, unit of analysis and methodology for all studies included in the final analysis

| No. | Author | Health programme | Unit of analysis | Defined outcomes | Intervention type | Method and assessment period |
|---|---|---|---|---|---|---|
| 1 | Aitaoto28 | Diabetes Today Initiative: Centers for Disease Control and Prevention-funded empowerment programmes for diabetes management | Multiple community healthcare settings | Continuation of programme activities | Capacity building; Broad scale system change | Case series: uncontrolled longitudinal study; Qualitative case study; Follow-up interviews with coalition representatives in each community; Assessment period: 4 years postfunding |
| 2 | Ament43 | Short stay after breast cancer surgery | Multisite hospital setting | Compliance with key recommendations of the programme; Proportion of patients treated in short stay at follow-up | Intervention requiring coordination by multiple staff; New policies, procedures and technology | Case–control study; Before–after design; Retrospective chart audit; Assessment period: 5 years postimplementation |
| 3 | Barnett44 | Falls prevention intervention: the Stay on Your Feet programme (SOYF) | Multiple community healthcare settings | For health professionals: recall of SOYF, influence on practice, use of SOYF resources; For elderly residents: recall of SOYF strategies, behavioural changes attributed to SOYF | Intervention requiring coordination by multiple staff; New policies, procedures and technologies; Broad scale system change | Uncontrolled longitudinal case study; Surveys with health professionals and focus groups with elderly community-dwelling residents; Assessment period: 5 years postsupport |
| 4 | Bailie29 | Improving delivery of preventive medical services through the implementation of locally developed best practice guidelines for disadvantaged populations in remote settings | Multiple community healthcare settings | Improvement in percentages of delivered services between baseline and follow-up audits | Broad scale system change | Interrupted time series with random sampling; Follow-up with repeated audits over a 3-year period; Assessment period: 3 years from baseline |
| 5 | Bereznicki45 | Community pharmacy intervention for asthma medication | Multiple community pharmacies | Average usage of medication | New policies, procedures and technology | Case–control study; Before–after design; Retrospective audit; Assessment period: 5 years postimplementation |
| 6 | Blasinsky57 | Project IMPACT: 7-site RCT on depression treatment in older adults | Multisite primary care setting | Continuation of all or part of the programme after funding ceased | Intervention requiring coordination by multiple staff; Broad scale system change | Interrupted time series; Qualitative study of evidence of programme continuation after funding ended: (1) review of grant proposals, (2) site visits, (3) semistructured telephone interviews with key players; Data collected at 3 points: (1) baseline, (2) 1 year post, to explore the implementation experience, (3) 1 year postcessation of clinical services, to explore sustainability; Assessment period: 1 year postsupport |
| 7 | Boehm46 | Slim without diet (SWD): aims to change individuals' eating and diet habits | Multiple community healthcare settings | Maintain weight loss | Intervention requiring coordination by multiple staff; New policies, procedures and technology | Prospective cohort study; Quantitative time series; Questionnaire; Assessment period: 12 months postsupport |
| 8 | Bond47 | National Implementing Evidence-Based Practices Project in mental healthcare settings | Multiple community healthcare settings | Fidelity to the EBP model; Continuation of programme activities | Intervention requiring coordination by multiple staff; Capacity building | Case–control study; Before–after design; Interviews at 2 years and 6 years postimplementation; Quantitative and qualitative; Assessment period: 6 years postimplementation |
| 9 | Bracht58 | Improvement of cardiovascular health in Minnesota (USA) through a heart health programme involving establishment of local boards, community organisation, training and volunteers: the Minnesota Heart Health Program (MHHP) | Multiple community healthcare settings | Continuation of programmes postfunding | Broad scale system change; Collaborative partnerships | Interrupted longitudinal time series study; Quantitative: long-term programme maintenance measured through annual surveys investigating continued incorporation of programme activities in community groups; Assessment period: 6 years postimplementation |
| 10 | Brand9 | Adherence to Chronic Obstructive Pulmonary Disease clinical practice guidelines | Single healthcare organisation | Adherence to COPD guidelines | Intervention requiring coordination by multiple staff | Case study; Mixed methods evaluation; Survey; Interview; Focus groups; Assessment period: 2 years postbaseline |
| 11 | Campbell48 | Ottawa Model of Smoking Cessation (OMSC): hospital-based inpatient smoking cessation programme | Multisite hospital-based setting | Improved performance of OMSC activities from baseline | New policies, procedures and technology; Intervention requiring coordination by multiple staff | Multisite case study; Qualitative; Interviews; Assessment period: 7 years from baseline |
| 12 | Carpenter56 | Community clinical oncology programme | 45 community clinical oncology programmes | Treatment trial accrual; Cancer prevention and control accrual; Total trial accrual | Broad scale system change | Longitudinal quasi-experimental; Data collected from progress reports and management systems from 2000–2007; Assessment period: 8 years from implementation |
| 13 | Chin42 | Health Disparities Collaborative (HDC) to improve diabetes care | Individual patient level; Multiple community healthcare settings | Continued patient improvements in diabetes care | Capacity building; Broad scale system change | RCT with embedded prospective longitudinal study; Retrospective chart review of randomly selected patients; Assessment period: 4 years from implementation |
| 14 | Goodson38 | Put Prevention into Practice: institutionalisation of tools for preventive services by primary care providers in the USA | Multiple community healthcare settings | Use of tools | New policies, procedures and technologies | Interrupted time series study; Interview and audit across three time points from implementation to follow-up; Assessment period: 6 years postimplementation |
| 15 | Greenhalgh13 | Three preventive services: stroke, kidney and sexual health | Individual patient level; Multiple healthcare organisations | Health benefits; programme activities; practices and procedures; capacity to undertake quality improvement; interorganisational partnerships | Broad scale system change; Intervention requiring coordination by multiple staff | Case study design; Mixed methods; Quantitative document review; Qualitative interviews; Assessment period: 7 years postimplementation |
| 16 | Clinton59 | Multi-intervention physical activity and nutritional health promotion programmes (two programmes) | Multiple community healthcare settings | Meeting KPIs; Adaptation; Degree of implementation; Organisational development; Progress; Collaboration; Sustainability; Evaluation readiness | Broad scale system change; Intervention requiring coordination by multiple staff | Interrupted time series study; Interview, survey and case studies collected at various time points; Assessment period: 4 years postimplementation |
| 17 | Cramm60 | Chronic care model: 22 disease management programmes targeting cardiovascular disease, chronic obstructive pulmonary disease, diabetes, heart failure, stroke, depression, psychotic disorders and comorbidity | Multiple community healthcare settings | Quality of chronic care delivery; Routinisation of practice | New policies, procedures and technologies; Intervention requiring coordination by multiple staff | Longitudinal prospective interrupted time series study; Quantitative administration of survey at three time points; Assessment period: 2 years postimplementation |
| 18 | Gundim61 | Telemedicine and telehealth centre | Single university site | Indicative factors of sustainability: institutional; functional; economic-financial; renewal; academic-scientific; partnerships; social welfare | Broad scale system change | Retrospective longitudinal study; Mixed methods: interviews, documents and reports; Data collected at 6 time points over 10 years; Assessment period: 10 years |
| 19 | Gruen62 | Improvement of access to specialist services in remote Australian Aboriginal communities by specialist outreach visits | Multisite community health and hospital settings | Number of consultations | Broad scale system change; Intervention requiring coordination by multiple staff; New policies, procedures and technologies | Case study; Process evaluation of outreach service; Document review and semistructured interviews; Assessment period: 3 years postimplementation |
| 20 | Hearld63 | Aligning Forces for Quality: improving quality of healthcare for chronically ill people through multistakeholder healthcare alliances | Multisite community health and hospital settings and government agencies | Organisational change | Broad scale system change | Interrupted longitudinal time series study; Quantitative: long-term programme maintenance measured through surveys at three time points investigating factors that promote change |
| 21 | Jansen30 | Heartbeat 2: health counselling programme for high-risk cardiovascular patients | Individual patients; Single healthcare organisation | Sustainability of health benefits; programme activities; capacity; commitment; Role of external change agent | Capacity building; Intervention requiring coordination among multiple staff | Case study; Data derived from registrations, reports and focus group interviews; Assessment period: 3 years postimplementation |
| 22 | Koskan10 | Promotoras de salud: community health-promotion projects for obesity-related lifestyle behaviours | Programme planners from multiple community sites | Community empowerment | Broad scale system change; Intervention requiring coordination by multiple staff | Case study; Qualitative in-depth interviews; Assessment period: not stated |
| 23 | Lassen64 | Increased fruit and vegetable consumption at worksite canteens | Multiple community sites | Fruit and vegetable consumption | Intervention requiring coordination by multiple staff; New policies, procedures and technologies | Interrupted time series study; Mixed methods; Assessment period: 1 year postbaseline (3 time points) |
| 24 | Lee31 | Primary Care Treatment of Depression (RESPECT-D): treatment of depression by care managers in primary care supervised by a specialist (RCT) | Individual providers across multiple healthcare organisations | Continued patient referrals; continued programme activities | Broad scale system change; Intervention requiring coordination by multiple staff | Multisite case study; Mixed methods; Descriptive evaluation conducted at 2 time points; Assessment period: 1 and 3 years postsupport |
| 25 | Manning49 | Community health networks to reduce cancer disparities in African-American people | Multiple community sites | Continued partner relationships | Collaborative partnerships | Longitudinal interrupted time series study; Mixed methods study; Interviews, surveys and reports; Assessment period: 5 years from implementation |
| 26 | McDermott40 | Improved diabetes care in remote Australian Aboriginal communities by health worker-run registers, recall and reminder systems, care plans and specialist outreach | Multiple community healthcare settings; Individual patient level | Number of people on registers; care processes; appropriate clinical interventions; patient outcome measures | Broad scale system change | Multisite case study; Quantitative; Three-year follow-up clinical audit; Assessment period: 3 years postsupport |
| 27 | Meredith32 | Depression in primary care | Multiple healthcare organisations | Improved delivery of services; Spread of collaborative efforts | Intervention requiring coordination by multiple staff | Interrupted time series study; Process evaluation data and semistructured telephone interviews at 18 months; Assessment period: quantitative, from implementation; qualitative, 18 months postsupport |
| 28 | Nease33 | Improving Depression Care collaborative implemented across 16 primary care practices; both depression care and change management processes were taught to staff at implementation | Multiple healthcare organisations | Continued use of interventions; maintenance of change management processes | Capacity building; intervention requiring coordination among multiple staff | Case series study; qualitative follow-up telephone interview; assessment period 2 years post support |

| 29 | O'Loughlin34 | Community-based cardiovascular disease risk-factor reduction programmes in Canada | Multiple community health settings | Permanence of the programme (self-reported perception of permanence on a Likert scale) | Intervention requiring coordination among multiple staff | Case study; quantitative telephone survey of key informants; assessment period up to 10 years postimplementation |

| 30 | Palinkas50 | Multi-faceted Depression and Diabetes (MDDP) Programme | Individual provider level across multiple healthcare organisations; individual patient level | Improved patient outcomes; improved access to services; improved consumer satisfaction | Collaborative partnerships | Qualitative study conducted in the context of an RCT; semistructured interviews and focus groups examining patient and provider perceptions of implementation and sustainability of the programme; assessment period 2 years postimplementation |

| 31 | Pluye35 | Quebec Heart Health Demonstration Project | Multiple community health settings | Continuation of programme activities; specific routinisation events | Collaborative partnerships | Retrospective multiple-case study of 5 cases (5 community health centres); documents and interviews; assessment period 10 years postimplementation |

| 32 | Ramsay39 | Educational reminder messages for knee and lumbar spine radiographs | Individual providers across multiple healthcare organisations | Number of referrals | Intervention implemented by individual providers; new policies, procedures and technologies | Interrupted time series; quantitative; monthly total number of referrals over a 1-year period; assessment period 1 year postbaseline |

| 33 | Reinschmidt51 | Border Health Family Diabetes Programme; community capacity building intervention | Multiple community health settings | Programme adaptation to other communities | Collaborative partnerships; capacity building; broad scale system change | Case study; qualitative; document review and face-to-face interviews with individuals from the adapted diabetes intervention programmes; assessment period 4 years postimplementation |

| 34 | Rowley8 | Prevention of chronic disease (obesity, diabetes and cardiovascular disease) in a remote Australian Aboriginal community through education, regular physical activity and cooking classes: a lifestyle improvement programme | Individual patient level | Health measures (body mass index and impaired glucose tolerance); percentage of people attempting dietary change; physical activity | Intervention requiring coordination among multiple staff | Interrupted time series study; quantitative; assessment period 2 years postimplementation |

| 35 | Scheirer65 | School-based fluoride mouth rinse program (FMRP) to improve dental hygiene | Community health and education settings | Adoption and continuation of programme activities | Intervention requiring coordination among multiple staff; new policies, procedures and technologies | Interrupted time series study; mixed methods; telephone interviews using a structured questionnaire with superintendents of public school districts; assessment period 6 years postimplementation |

| 36 | Sheaff52 | Improving the future for older people: reducing emergency bed days for people over 75 | Nine acute hospital sites | Emergency bed days | Broad scale system change; intervention requiring coordination among multiple staff | Realist case evaluation; mixed methods case study; quantitative content analysis of documents and questionnaires; assessment period 4 years from baseline |

| 37 | Slaghuis53 | Care for Better: improving care in nursing homes | Multiple nursing homes and home care organisations for the elderly | Factors related to routinisation and institutionalisation, used to form a sustainability scale | Intervention requiring coordination among multiple staff; new policies, procedures and technologies | Case study; mixed methods case study; questionnaire to team members; assessment period 1 year postfunding for the programme |

| 38 | Steadman36 | ACCESS (Access to Community Care and Effective Services and Supports) for homeless people with mental illness | Multiple community health settings | Status of services; source of funding secured; systems integration activities | Collaborative partnerships; capacity building | Case study; qualitative; assessment period 1–6 months post support |

| 39 | Swain37 | The National Implementing Evidence-Based Practices Project for people with serious mental illness, which examined the implementation of 5 psychosocial practices in routine mental healthcare settings in 8 states | Multiple healthcare organisations | Continuation of practice | Intervention requiring coordination among multiple staff | Multisite case study; mixed methods; telephone survey gathering qualitative and quantitative data from site representatives with programme leaders and trainers; assessment period 2 years postimplementation |

| 40 | Thorsen54 | Worksite canteen intervention serving more fruit and vegetables (6-month intervention to increase fruit and vegetable consumption) | Multiple community health settings | Fruit and vegetable consumption | Intervention requiring coordination among multiple staff; collaborative partnerships | Multisite case study; quantitative; measurement of fruit and vegetable consumption over a 3-week period; assessment period 5 years post support |

| 41 | Wakerman55 | The Sharing Health Care Initiative (SHCI) demonstration project: chronic disease management in remote Australian Aboriginal communities through community-based self-management education | Multiple community health settings | Community awareness of chronic disease; community perception of the programme; recording and follow-up activities; improved clinical markers | Broad scale system change | Multisite case study; mixed methods; clinical audit and interview; assessment period 26 months postimplementation |

| 42 | Whitford41 | Prevention of diabetic complications in UK general practice clinics through a multifaceted diabetes service spanning primary and secondary care | Individual patient level; multiple healthcare organisations | Documentation of clinical data; clinical indicators | Intervention requiring coordination among multiple staff | Case–control study; before-after design; assessment period 10 years postimplementation |

Most often, the system was the unit of analysis used to determine outcome in these studies. For example, Meredith et al,32 in their study of depression care across 17 primary care centres, found that although only 11 of the sites continued programme activities, 15 reported spread of the activities to other providers and patient groups. This suggests that the programme had reached into new areas. Such an approach gives a holistic view of the continued performance of activities within the context of organisational culture and programme growth. Similarly, Greenhalgh et al,13 in their follow-up study of three prevention-focused services in stroke, kidney and sexual health across primary and secondary care, found that most programme activities had continued at 7-year follow-up. They also found that significant cultural changes had occurred within these organisations and that services had spread into new areas.

Summary of study design and methodologies

The studies eligible for this review used a range of designs and methodologies. Designs ranged from case study reports (n=21) to randomised controlled trials (n=1), and participant numbers varied from 20 to 2000. Table 3 summarises the study designs for all included studies.

Table 3.

Study designs used to measure defined outcomes

| Study design | Frequency |
|---|---|
| Interrupted time series | 14 |
| Randomised controlled trial (RCT) | 1 |
| Single and multisite case study design | 19 |
| Longitudinal quasi-experimental | 1 |
| Longitudinal case study design | 2 |
| Case–control and cohort designs | 5 |

Nearly half of all studies (n=19) used a mixed methods approach to measure outcomes. These studies combined quantitative measures (audit data, document review and surveys) with qualitative methods (in-depth interviews and focus groups with organisational staff members). Research methodology varied according to the type of defined outcome, and table 4 shows the relationship between the outcomes evaluated and the methods most commonly used to collect these data. Studies designed to measure health outcomes mostly used quantitative data collection methods (50%) such as document review and audit.9 13 29–31 35 38–40 42 Mixed methods were most commonly used to measure all other indicators.

Table 4.

Methods of data collection used across defined sustainability outcomes

| Data collection methods | Health benefits (%) | Programme activities (%) | Community capacity (%) | Programme diffusion (%) | Policies and procedures (%) | Combined (%) |
|---|---|---|---|---|---|---|
| Mixed methods | 33 | 51 | 83 | 60 | 66 | 71 |
| Quantitative methods | 55 | 32 | – | – | 33 | 14 |
| Qualitative methods | 11 | 16 | 16 | 40 | – | 14 |

Timing of data collection

The timing and duration of data collection varied between studies, with assessment periods ranging from 1 to 10 years. Just over half of the studies (n=24) measured sustainability outcome indicators against baseline data at a single 'snapshot' time point.9 13 28 30 31 33–37 40 41 43–55 The remaining studies used longitudinal data14 42 56 or multiple time points.8 29 32 38 39 57–65 Timing of data collection appeared to vary according to how sustainability was defined and which indicators were selected to measure outcomes. For example, Ramsay et al39 were specifically interested in observing any variability in intervention effects over time. They used monthly quantitative audit data over a 12-month period from baseline to assess adherence to clinical guidelines.39 In contrast, O'Loughlin et al34 defined sustainability according to the level of 'institutionalisation' a programme reached within an organisation, that is, the integration of a programme into the normal routines and everyday practice of the organisation.34 They assessed the degree of institutionalisation at a single follow-up time point, using specific survey questions on organisational characteristics and activities.34
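
Ramsay et al's monthly audit series is listed in table 2 as an interrupted time series. Data of this kind are what multiple time points make available. The Python below is a minimal sketch only and is not the analysis Ramsay et al report. It summarises a hypothetical monthly series by fitting a separate least-squares line before and after an interruption, then reports the change in level and slope at that point. The function names, the `interruption` index and the referral counts are all illustrative assumptions. A full analysis would normally fit a single segmented regression model that also accounts for autocorrelation and seasonality.

```python
"""Simplified two-segment interrupted time series (ITS) sketch.

Hypothetical example only: each segment (before and after the
interruption) gets its own ordinary least-squares line. Full ITS analyses
usually fit one segmented regression model and adjust for autocorrelation
and seasonality, which this sketch does not do.
"""
from __future__ import annotations

from dataclasses import dataclass


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (intercept, slope) of the least-squares line y = a + b * x."""
    n = len(xs)
    if n < 2:
        raise ValueError("each segment needs at least two time points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


@dataclass
class ItsSummary:
    pre_slope: float     # trend per month before the interruption
    post_slope: float    # trend per month after the interruption
    level_change: float  # post line minus projected pre line at the interruption
    slope_change: float  # post_slope minus pre_slope


def two_segment_its(series: list[float], interruption: int) -> ItsSummary:
    """series[t] is the value for month t; months >= interruption are post-intervention."""
    pre_x = [float(t) for t in range(interruption)]
    post_x = [float(t) for t in range(interruption, len(series))]
    pre_a, pre_b = fit_line(pre_x, series[:interruption])
    post_a, post_b = fit_line(post_x, series[interruption:])
    t0 = float(interruption)
    level_change = (post_a + post_b * t0) - (pre_a + pre_b * t0)
    return ItsSummary(pre_b, post_b, level_change, post_b - pre_b)


# Hypothetical monthly referral counts: 6 months before, 12 months after.
monthly_referrals = [100.0] * 6 + [80.0] * 12
summary = two_segment_its(monthly_referrals, interruption=6)
print(summary)
```

With a flat 100 referrals a month dropping to a flat 80, the sketch reports a level change of -20 and no slope change. Fitting each segment separately keeps the arithmetic transparent, at the cost of the statistical adjustments a segmented regression would provide.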

In just over half of the studies (n=25), assessment of the sustainability outcome started immediately after the implementation period. In about a quarter (n=10), data were collected from the time programme support was withdrawn. In several studies (n=6), assessment of sustainability started from baseline, commonly defined as the period before the programme started and interventions were actively implemented. When assessment starts is relevant to the first aim of this review: how are sustainability outcomes defined for measurement in the field of chronic disease? It indicates when researchers consider sustainability, as distinct from ongoing programme activity, to begin. In just over half of the studies, researchers treated sustainability as beginning immediately after implementation.
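
The proportions quoted in this section can be recomputed directly from the reported counts, with the 42 studies in the qualitative synthesis as the denominator. The short Python sketch below performs only that arithmetic. The variable and function names are illustrative.

```python
"""Recompute the study proportions quoted in the timing section.

Counts come from the text; the denominator is the 42 studies included
in the qualitative synthesis.
"""

TOTAL_STUDIES = 42

# Number of studies in each timing category, as reported in the text.
snapshot_studies = 24
assessment_start = {
    "immediately after implementation": 25,
    "from withdrawal of programme support": 10,
    "from baseline": 6,
}


def percent(n: int, total: int = TOTAL_STUDIES) -> float:
    """Share of the included studies, as a percentage."""
    return 100 * n / total


print(f"single snapshot time point: {percent(snapshot_studies):.0f}%")
for label, n in assessment_start.items():
    print(f"assessment started {label}: {n}/{TOTAL_STUDIES} = {percent(n):.0f}%")

classified = sum(assessment_start.values())
print(f"studies with a reported start point: {classified} of {TOTAL_STUDIES}")
```

This gives 57% for single snapshot designs. The three assessment start points come to 60%, 24% and 14%, and together they account for 41 of the 42 included studies.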

Criteria for judging sustainability outcome

None of the studies in this review had published preset or post hoc numerical criteria for judging if sustainability had been achieved. Programmes were defined as being sustained if there was evidence of continued improvements from baseline measures or if outcomes were maintained following the implementation phase or cessation of funding, regardless of the magnitude achieved.
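
None of the studies stated this rule numerically, but it can be made explicit. The Python sketch below is one possible formalisation under stated assumptions. It expresses the rule as the fraction of the implementation-phase gain still present at follow-up. The names `retained_fraction` and `judged_sustained` and the `min_retained` threshold are hypothetical. With the default threshold of 0, any improvement over baseline counts as sustained, which mirrors the "regardless of the magnitude achieved" rule. A positive threshold shows how a preset criterion for acceptable regression could be stated.

```python
"""Sketch of the implicit rule for judging whether a programme was sustained.

The reviewed studies set no numerical criterion: any improvement over
baseline that persisted after implementation counted. Here that rule is
expressed through a hypothetical 'retained fraction' of the
implementation-phase gain; `min_retained` is a hypothetical threshold
whose default of 0.0 reproduces the 'regardless of magnitude' rule.
"""
from __future__ import annotations


def retained_fraction(baseline: float, end_of_implementation: float,
                      follow_up: float, higher_is_better: bool = True) -> float:
    """Share of the implementation-phase gain still present at follow-up."""
    gain = end_of_implementation - baseline
    improved = gain > 0 if higher_is_better else gain < 0
    if not improved:
        raise ValueError("no implementation-phase improvement to sustain")
    # Dividing by the signed gain makes the ratio direction-independent:
    # 1.0 means fully maintained, 0.0 means back to baseline.
    return (follow_up - baseline) / gain


def judged_sustained(baseline: float, end_of_implementation: float,
                     follow_up: float, higher_is_better: bool = True,
                     min_retained: float = 0.0) -> bool:
    """True if more than `min_retained` of the gain persists at follow-up."""
    frac = retained_fraction(baseline, end_of_implementation, follow_up,
                             higher_is_better)
    return frac > min_retained


# Hypothetical guideline-adherence percentages: 40% -> 70% -> 55%.
print(judged_sustained(40, 70, 55))                    # True: half the gain retained
print(judged_sustained(40, 70, 55, min_retained=0.8))  # False under a stricter, hypothetical bar
# Hypothetical emergency bed days (lower is better): 10 -> 6 -> 7.
print(retained_fraction(10, 6, 7, higher_is_better=False))  # 0.75
```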

Intervention type and study methodology

The most common intervention types were interventions requiring coordination of multiple staff (n=26), broad scale system change (n=18) and new policies, procedures and technologies (n=12) (see table 2). A study could be classified under more than one type. Interventions requiring coordination of multiple staff predominantly used quantitative measures (n=22), and approximately two-thirds of them supplemented these findings with qualitative methods.

Studies assessing broad scale system change interventions had longer assessment periods (3–7 years). They also more commonly used longitudinal designs with multiple time points to measure outcomes throughout the programme cycle. For example, Bailie et al29 repeated an audit on five occasions over a 3-year period. Likewise, McDermott et al40 evaluated retrospective patient data collected over time from implementation.

Discussion

The scope of care provided to people with chronic illness is heterogeneous, spanning multiple disease types and management interventions. This heterogeneity is reflected in the methods used for sustainability research in the chronic disease field. The results of this review show that sustainability indicators, research methodology, and the timing and duration of data collection varied according to the defined outcomes and intervention type.

In most studies, the sustainability outcome was defined as the maintenance of programme activities. This fits well with the implementation cycle. In that cycle, a problem area is identified, and goals and activities are designed, implemented and evaluated over time to assess ongoing maintenance.66 67 Such definitions are intervention-focused, with activity-based outcomes. However, this narrow perspective provides no evidence on whether the ongoing activity is achieving health benefits, and that link usually rests on an implied, indirect causal assumption.

Given that improved health outcomes are the aim of most programmes, it was surprising that only about one-quarter of studies measured patient-level health outcomes as a primary sustainability outcome. Nevertheless, most of the programme activity outcomes measured in the included studies could reasonably be considered surrogates for health outcomes. For example, adherence to evidence-based COPD guidelines in the Brand et al9 study is likely to improve outcomes, because the interventions delivered through the programme have an established evidence base for achieving health benefits.

This review shows a tendency to define the primary outcome as the maintenance of programme activities, which could be due to the strong focus on self-management interventions for chronic disease.4 Self-management strategies are typically evaluated with patient self-reported outcomes. It has previously been suggested that evidence on the effectiveness of self-management interventions derives mostly from variable evaluation measures that carry substantial measurement error.4 This may contribute to a shift towards activity-based outcomes focused on the quality and continuation of delivered services rather than on health outcomes. By contrast, clinical indices provide clear reference points for pre–post comparison, allowing concise summaries and recommendations for health providers.

Eighteen studies were characterised as broad scale system change interventions.19 These studies used clinical markers as a primary outcome in addition to programme activities, community capacity, programme diffusion and/or policy outcomes. Together, these outcomes gave a broader picture of change and transformation within the organisation as a whole. Combining health outcome data with other outcomes in this way may provide more robust evidence of broad scale change. Considering intervention type may therefore assist the complex process of planning sustainability research in the chronic disease field.

Our results show that study methodology varied according to intervention type, which may go some way towards explaining the variation in research between programmes. For example, evaluations of broad scale system change interventions often used multiple time points to measure outcomes, reflecting an interest in trends over time. These studies also often evaluated multiple outcomes. A growing number of studies (about half of those in this review) measure indicators at multiple time points. This finding supports Scheirer's19 hypothesis that such interventions require ongoing evaluation.

This is an important consideration for future sustainability research on chronic disease management. The feasibility of continuous measurement through clinical registries or document review should be explored from the outset of programme planning.68 69 Testing over multiple time points may help to distinguish residual improvement carried over from implementation from genuinely sustained improvement.70
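
The distinction between residual and sustained improvement can be illustrated with a minimal, hypothetical sketch. The approach is to fit a least-squares trend to outcome measurements taken at several time points after implementation. The trajectory is then labelled as decaying if it declines faster than an allowed rate. The function names, the `max_decline_per_year` allowance and the audit values are assumptions for illustration, and the sketch ignores sampling variability. A single snapshot cannot yield a trend, which is why the sketch requires at least two time points.

```python
"""Sketch: using several follow-up time points to separate a decaying
(residual) improvement from a persistent one.

Assumptions (hypothetical): higher values are better, measurements are
taken after implementation ends, and `max_decline_per_year` is an
illustrative allowance, not a criterion from the review.
"""
from __future__ import annotations


def ols_slope(times: list[float], values: list[float]) -> float:
    """Least-squares slope of values against times (units per year)."""
    n = len(times)
    if n < 2:
        raise ValueError("a single snapshot cannot show a trend")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("time points must differ")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx


def classify_trajectory(times: list[float], values: list[float],
                        max_decline_per_year: float = 0.0) -> str:
    """Label follow-up measurements 'persistent' or 'decaying' by their trend."""
    slope = ols_slope(times, values)
    return "persistent" if slope >= -max_decline_per_year else "decaying"


# Five hypothetical audit rounds over 3 years after implementation.
rounds = [0.0, 0.75, 1.5, 2.25, 3.0]
print(classify_trajectory(rounds, [80, 79, 81, 80, 80]))  # persistent
print(classify_trajectory(rounds, [80, 74, 68, 62, 56]))  # decaying
```

The five rounds over 3 years echo the repeated audits reported by Bailie et al. The values themselves are invented.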

Measurement needs to capture how programmes evolve over time, adapt to changing contexts and transform to meet changing health system needs. Evaluations should also capture spread into new areas and any difficulties arising from changes in the environment. Importantly, none of the studies in this review defined predetermined or post hoc numerical criteria as an empirical measure of sustainability. Nor did any state what degree of regression or attrition would be acceptable. Decision rules for defining outcome variables appeared to be subjective, based on the objectives and theoretical underpinnings of each study. Moreover, because these programmes are situated within complex systems with evolving contexts, setting rigid criteria for judging outcomes may be neither realistic nor desirable.

Our review supports the conceptualisation of sustainability as requiring a broad scale approach.2 13 14 Most studies took a complex approach, using mixed methods and defining outcomes that extend beyond the continuation of programme activity. For example, Meredith et al32 quantitatively evaluated improved delivery of services and qualified their findings through in-depth interviews to determine the spread of the programme into other areas. Consistent with the work of Gruen et al14 and Greenhalgh et al,2 our findings show that the sustainability outcome depends on a complex set of inter-relationships between organisational and social systems affecting broad scale change. This review extends the work of Gruen et al14 and Greenhalgh et al2 by synthesising the specific research methods used to measure sustainability in the area of chronic disease. It also extends Wiltsey Stirman et al's15 review of methods used to measure sustainability outcomes by describing intervention types in the chronic disease field. Finally, it supports and extends Scheirer's19 framework for evaluating interventions by relating intervention types to the study methodology currently used.

Strengths of our review include the systematic identification and abstraction of eligible publications assessed by more than one author. They also include manual reference searching, forward citation tracking, and the use of an appropriate quality assessment tool for classifying eligible publications. A limitation is that we may have missed relevant reports published in the grey literature. In addition, the lack of standardised measurement and reporting of sustainability outcomes precluded meta-analytic synthesis. Consensus on aspects of standardised measurement and reporting would make future meta-analytic syntheses possible.

Conclusion

Overall, chronic diseases are a major focus of attention worldwide, owing to their growing financial impact on health systems and the disease burden they impose on society. Despite this, publications on the sustainability of programmes in this field are limited and cover only a small range of conditions, such as diabetes. This review makes an important contribution to understanding how sustainability is currently measured in the chronic disease field. Research methods used to evaluate the sustainability of chronic disease management programmes vary widely. Given the increasing burden of chronic disease in our society, it is a matter of concern that there are no clear guidelines on the best way to measure sustainability in this field.

However, our review shows some emerging patterns in the research methods used to measure sustainability. First, there is a clear trend towards assessment at multiple time points from baseline to gain information about the ongoing effectiveness of programmes. Second, intervention type is clearly related to the research methods used, with broad scale system change interventions using longitudinal designs with multiple time points. Finally, our results support recent recommendations that evaluating sustainability requires a holistic approach that captures all elements through mixed methods.

Further development of the empirical methods used to measure sustainability in chronic disease is needed. This work should build a more rigorous science of sustainability research and give programme planners and evaluators clearer guidance on designing, implementing and evaluating health programmes.

Acknowledgments

The authors thank the Melbourne Health Centre for Excellence in Neuroscience.

Footnotes

Contributors: LF is the first author and performed the initial review of publications. DD and DAC assessed abstracts or full articles to confirm eligibility for full review. DD and DAC contributed to the writing of the draft and revisions of the manuscript for intellectual content, and supervised the research process of the review.

Funding: This work is supported by the NHMRC Centre for Research Excellence Grant 1001216. DAC was supported by a fellowship from the National Health and Medical Research Council (NHMRC; 1063761 co-funded by National Heart Foundation).

Competing interests: None declared.

Ethics approval: This review is part of a larger PhD project. Ethics approval was granted by the Melbourne Human Research Ethics Committee (Ethics ID-1137091).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1.Johnson K, Hays C, Center H et al. Building capacity and sustainable prevention innovations: a sustainability planning model. Eval Program Plann 2004;27:135–49. 10.1016/j.evalprogplan.2004.01.002

- 2.Greenhalgh T, Robert G, Macfarlane F et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 2004;82:581–629. 10.1111/j.0887-378X.2004.00325.x

- 3.Pluye P, Potvin L, Denis JL et al. Program sustainability: focus on organizational routines. Health Promot Int 2004;19:489–500. 10.1093/heapro/dah411

- 4.Nolte S, Elsworth GR, Newman S et al. Measurement issues in the evaluation of chronic disease self-management programs. Qual Life Res 2013;22:1655–64. 10.1007/s11136-012-0317-1

- 5.Giovannetti E, Dy S, Leff B et al. Performance measurement for people with multiple chronic conditions: conceptual model. Am J Manag Care 2015;19:e359–66.

- 6.Scheirer MA, Dearing JW. An agenda for research on the sustainability of public health programs. Am J Public Health 2011;101:2059–67. 10.2105/AJPH.2011.300193

- 7.Shediac-Rizkallah MC, Bone LR. Planning for the sustainability of community-based health programs: conceptual frameworks and future directions for research, practice and policy. Health Educ Res 1998;13:87–108. 10.1093/her/13.1.87

- 8.Rowley KG, Daniel M, Skinner K et al. Effectiveness of a community-directed ‘healthy lifestyle’ program in a remote Australian aboriginal community. Aust N Z J Public Health 2000;24:136–44. 10.1111/j.1467-842X.2000.tb00133.x

- 9.Brand C, Landgren F, Hutchinson A et al. Clinical practice guidelines: barriers to durability after effective early implementation. Intern Med J 2005;35:162–9. 10.1111/j.1445-5994.2004.00763.x

- 10.Koskan A, Friedman DB, Messias DK et al. Sustainability of promotora initiatives: program planners’ perspectives. J Public Health Manag Pract 2013;19:E1–9. 10.1097/PHH.0b013e318280012a

- 11.Scheirer MA, Hartling G, Hagerman D. Defining sustainability outcomes of health programs: illustrations from an on-line survey. Eval Program Plann 2008;31:335–46. 10.1016/j.evalprogplan.2008.08.004

- 12.Goodman RM, Steckler A. A model for the institutionalization of health promotion programs. Fam Commun Health 1989;11:63–8. 10.1097/00003727-198902000-00009

- 13.Greenhalgh T, Macfarlane F, Barton-Sweeney C et al. “If we build it, will it stay?” A case study of the sustainability of whole-system change in London. Milbank Q 2012;90:516–47. 10.1111/j.1468-0009.2012.00673.x

- 14.Gruen RL, Elliott JH, Nolan ML et al. Sustainability science: an integrated approach for health-programme planning. Lancet 2008;372:1579–89. 10.1016/S0140-6736(08)61659-1

- 15.Wiltsey Stirman S, Kimberly J, Cook N et al. The sustainability of new programs and innovations: a review of empirical literature and recommendations for future research. Implement Sci 2012;7:17. 10.1186/1748-5908-7-17

- 16.Schell SF, Luke DA, Schooley MW et al. Public health program capacity for sustainability: a new framework. Implement Sci 2013;8:15. 10.1186/1748-5908-8-15

- 17.Luke DA, Calhoun A, Robichaux CB et al. The Program Sustainability Assessment Tool: a new instrument for public health programs. Prev Chronic Dis 2014;11:130184. 10.5888/pcd11.130184

- 18.Calhoun A, Mainor A, Moreland-Russell S et al. Using the Program Sustainability Assessment Tool to assess and plan for sustainability. Prev Chronic Dis 2014;11:130185. 10.5888/pcd11.130185

- 19.Scheirer MA. Linking sustainability research to intervention types. Am J Public Health 2013;103:e73–80. 10.2105/AJPH.2012.300976

- 20.Scheirer MA. Is sustainability possible? A review and commentary on empirical studies of program sustainability. Am J Eval 2005;26:320–47. 10.1177/1098214005278752

- 21.Murray CJ, Barber RM et al. Global, regional, and national disability-adjusted life years (DALYs) for 306 diseases and injuries and healthy life expectancy (HALE) for 188 countries, 1990–2013: quantifying the epidemiological transition. Lancet 2015;386:2145–91. 10.1016/S0140-6736(15)61340-X

- 22.Pham MT, Rajić A, Greig JD et al. A scoping review of scoping reviews: advancing the approach and enhancing the consistency. Res Synth Methods 2014;5:371–85. 10.1002/jrsm.1123

- 23.Levac D, Colquhoun H, O'Brien KK. Scoping studies: advancing the methodology. Implement Sci 2010;5:69. 10.1186/1748-5908-5-69

- 24.Greenhalgh T, Peacock R. Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ 2005;331:1064–5. 10.1136/bmj.38636.593461.68

- 25.Des Jarlais DC, Lyles C, Crepaz N; TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioural and public health interventions: the TREND Statement. Am J Public Health 2004;94:361–6. 10.2105/AJPH.94.3.361

- 26.Moher D, Hopewell S, Schulz KF et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340:c869. 10.1136/bmj.c869

- 27.Moher D, Hopewell S, Schulz KF et al. CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials. J Clin Epidemiol 2010;63:e1–37. 10.1016/j.jclinepi.2010.03.004

- 28.Aitaoto N, Tsark J, Braun KL. Sustainability of the Pacific Diabetes Today coalitions. Prev Chronic Dis 2009;6:A130.

- 29.Bailie RS, Togni SJ, Si D et al. Preventive medical care in remote Aboriginal communities in the Northern Territory: a follow-up study of the impact of clinical guidelines, computerised recall and reminder systems, and audit and feedback. BMC Health Serv Res 2003;3:15. 10.1186/1472-6963-3-15

- 30.Jansen M, Harting J, Ebben N et al. The concept of sustainability and the use of outcome indicators. A case study to continue a successful health counselling intervention. Fam Pract 2008;25(Suppl 1):i32–7. 10.1093/fampra/cmn066

- 31.Lee PW, Dietrich AJ, Oxman TE et al. Sustainable impact of a primary care depression intervention. J Am Board Fam Med 2007;20:427–33. 10.3122/jabfm.2007.05.070045

- 32.Meredith LS, Mendel P, Pearson M et al. Implementation and maintenance of quality improvement for treating depression in primary care. Psychiatr Serv 2006;57:48–55. 10.1176/appi.ps.57.1.48

- 33.Nease DE Jr, Nutting PA, Graham DG et al. Sustainability of depression care improvements: success of a practice change improvement collaborative. J Am Board Fam Med 2010;23:598–605. 10.3122/jabfm.2010.05.090212

- 34.O'Loughlin J, Renaud L, Richard L et al. Correlates of the sustainability of community-based heart health promotion interventions. Prev Med 1998;27(Pt 1):702–12. 10.1006/pmed.1998.0348

- 35.Pluye P, Potvin L, Denis JL et al. Program sustainability begins with the first events. Eval Program Plann 2005;28:123–37. 10.1016/j.evalprogplan.2004.10.003

- 36.Steadman HJ, Cocozza JJ, Dennis DL et al. Successful program maintenance when federal demonstration dollars stop: the ACCESS program for homeless mentally ill persons. Adm Policy Ment Health 2002;29:481–93. 10.1023/A:1020776310331

- 37.Swain K, Whitley R, McHugo GJ et al. The sustainability of evidence-based practices in routine mental health agencies. Community Ment Health J 2010;46:119–29. 10.1007/s10597-009-9202-y

- 38.Goodson P, Murphy Smith M, Evans A et al. Maintaining prevention in practice: survival of PPIP in primary care settings. Put Prevention Into Practice. Am J Prev Med 2001;20:184–9. 10.1016/S0749-3797(00)00310-X

- 39.Ramsay CR, Eccles M, Grimshaw JM et al. Assessing the long-term effect of educational reminder messages on primary care radiology referrals. Clin Radiol 2003;58:319–21. 10.1016/S0009-9260(02)00524-X

- 40. McDermott R, Tulip F, Schmidt B et al. Sustaining better diabetes care in remote indigenous Australian communities. BMJ 2003;327:428–30. 10.1136/bmj.327.7412.428

- 41. Whitford DL, Roberts SH, Griffin S. Sustainability and effectiveness of comprehensive diabetes care to a district population. Diabet Med 2004;21:1221–8. 10.1111/j.1464-5491.2004.01324.x

- 42. Chin MH, Drum ML, Guillen M et al. Improving and sustaining diabetes care in community health centers with the health disparities collaboratives. Med Care 2007;45:1135–43. 10.1097/MLR.0b013e31812da80e

- 43. Ament SM, Gillissen F, Maessen JM et al. Sustainability of short stay after breast cancer surgery in early adopter hospitals. Breast 2014;23:429–34. 10.1016/j.breast.2014.02.010

- 44. Barnett LM, Van Beurden E, Eakin EG et al. Program sustainability of a community-based intervention to prevent falls among older Australians. Health Promot Int 2004;19:281–8. 10.1093/heapro/dah302

- 45. Bereznicki B, Peterson G, Jackson S et al. Perceived feasibility of a community pharmacy-based asthma intervention: a qualitative follow-up study. J Clin Pharm Ther 2011;36:348–55. 10.1111/j.1365-2710.2010.01187.x

- 46. Boehm G, Bracharz N, Schoberger R. Evaluation of the sustainability of the Public Health Program “Slim without diet”. Cent Eur J Med 2011;123:415–21.

- 47. Bond GR, Drake RE, McHugo GJ et al. Long-term sustainability of evidence-based practices in community mental health agencies. Adm Policy Ment Health 2014;41:228–36. 10.1007/s10488-012-0461-5

- 48. Campbell S, Pieters K, Mullen KA et al. Examining sustainability in a hospital setting: case of smoking cessation. Implement Sci 2011;6:108. 10.1186/1748-5908-6-108

- 49. Manning MA, Bollig-Fischer A, Bobovski LB et al. Modeling the sustainability of community health networks: novel approaches for analyzing collaborative organization partnerships across time. Transl Behav Med 2014;4:46–59. 10.1007/s13142-013-0220-5

- 50. Palinkas L, Ell K, Hansen M et al. Sustainability of collaborative care interventions in primary care settings. J Soc Work 2010;11:99–117. 10.1177/1468017310381310

- 51. Reinschmidt KM, Teufel-Shone NI, Bradford G et al. Taking a broad approach to public health program adaptation: adapting a family-based diabetes education program. J Prim Prev 2010;31:69–83. 10.1007/s10935-010-0208-6

- 52. Sheaff R, Windle K, Wistow G et al. Reducing emergency bed-days for older people? Network governance lessons from the ‘Improving the Future for Older People’ programme. Soc Sci Med 2014;106:59–66. 10.1016/j.socscimed.2014.01.033

- 53. Slaghuis SS, Strating MM, Bal RA et al. A framework and a measurement instrument for sustainability of work practices in long-term care. BMC Health Serv Res 2011;11:314. 10.1186/1472-6963-11-314

- 54. Thorsen AV, Lassen AD, Tetens I et al. Long-term sustainability of a worksite canteen intervention of serving more fruit and vegetables. Public Health Nutr 2010;13:1647–52. 10.1017/S1368980010001242

- 55. Wakerman J, Chalmers EM, Humphreys JS et al. Sustainable chronic disease management in remote Australia. Med J Aust 2005;183(Suppl):S64–8.

- 56. Carpenter WR, Fortune-Greeley AK, Zullig LL et al. Sustainability and performance of the National Cancer Institute's Community Clinical Oncology Program. Contemp Clin Trials 2012;33:46–54. 10.1016/j.cct.2011.09.007

- 57. Blasinsky M, Goldman HH, Unutzer J. Project IMPACT: a report on barriers and facilitators to sustainability. Adm Policy Ment Health 2006;33:718–29. 10.1007/s10488-006-0086-7

- 58. Bracht N, Finnegan JR, Rissel C et al. Community ownership and program continuation following a health demonstration project. Health Educ Res 1994;9:243–55. 10.1093/her/9.2.243

- 59. Clinton J. The true impact of evaluation: motivation for ECB. Am J Eval 2014;35:120–7. 10.1177/1098214013499602

- 60. Cramm JM, Nieboer AP. Short and long term improvements in quality of chronic care delivery predict program sustainability. Soc Sci Med 2014;101:148–54. 10.1016/j.socscimed.2013.11.035

- 61. Gundim RS, Chao WL. A graphical representation model for telemedicine and telehealth center sustainability. Telemed J E Health 2011;17:164–8. 10.1089/tmj.2010.0064

- 62. Gruen R, Weeramanthri T, Bailie R. Outreach and improved access to specialist services for indigenous people in remote Australia: the requirements for sustainability. J Epidemiol Community Health 2001;56:517–21.

- 63. Hearld LR, Alexander JA. Governance processes and change within organizational participants of multi-sectoral community health care alliances: the mediating role of vision, mission, strategy agreement and perceived alliance value. Am J Community Psychol 2014;53:185–97. 10.1007/s10464-013-9618-y

- 64. Lassen A, Thorsen A, Trolle E et al. Successful strategies to increase the consumption of fruits and vegetables: results from the ‘6 a day’ Work-site Canteen Model Study. Public Health Nutr 2003;7:263–70.

- 65. Scheirer MA. The life cycle of an innovation: adoption versus discontinuation of the fluoride mouth rinse program in schools. J Health Soc Behav 1990;31:203–15. 10.2307/2137173

- 66. Hawe P, Degeling D, Hall J. Evaluating health promotion. Australia: Elsevier, 1990.

- 67. Hawthorne G. Introduction to health program evaluation. West Heidelberg, VIC: Program Evaluation Unit, Centre for Health Program Evaluation, 2000.

- 68. Cadilhac DA, Ibrahim J, Pearce DC et al. Multicenter comparison of processes of care between Stroke Units and conventional care wards in Australia. Stroke 2004;35:1035–40. 10.1161/01.STR.0000125709.17337.5d

- 69. Cadilhac DA, Pearce DC, Levi CR et al. Improvements in the quality of care and health outcomes with new stroke care units following implementation of a clinician-led, health system redesign programme in New South Wales, Australia. Qual Saf Health Care 2008;17:329–33. 10.1136/qshc.2007.024604

- 70. Bowman CC, Sobo EJ, Asch SM et al. Measuring persistence of implementation: QUERI Series. Implement Sci 2008;3:21. 10.1186/1748-5908-3-21