Abstract

Secure aggregation is an essential component of modern distributed applications and data mining platforms. Aggregated statistical results are typically used to construct a data cube for data analysis at multiple abstraction levels in data warehouse platforms. Generating different types of statistical results efficiently at the same time (referred to as enabling multi-functional support) is a fundamental requirement in practice. However, most existing schemes support only a very limited number of statistics. Securely obtaining typical statistical results simultaneously in a distributed system, without recovering the original data, is still an open problem. In this paper, we present SEDAR, a SEcure Data Aggregation scheme under the Range segmentation model. The range segmentation model is proposed to reduce the communication cost by capturing the data characteristics, and each range uses a different aggregation strategy. For raw data in the dominant range, SEDAR encodes them into well-defined vectors to provide value-preservation and order-preservation, and thus provides the basis for multi-functional aggregation. A homomorphic encryption scheme is used to achieve data privacy. We also present two enhanced versions. The first is a Random based SEDAR (REDAR), and the second is a Compression based SEDAR (CEDAR). Both can significantly reduce the communication cost, at the price of lower security and lower accuracy, respectively. Experimental evaluations, based on six different real-data scenarios, show that all three schemes perform well in terms of cost and accuracy.

Introduction

Enormous amounts of rich and diverse information, often called big data, are constantly generated in modern large distributed systems. Such large-scale data sources create exciting opportunities for service quality monitoring, novelty discovery, attack detection, and so on. However, directly transmitting the data to a single node and processing them with centralized algorithms is difficult. Distributed aggregation is an efficient way to minimize the consumption of energy and bandwidth.

Typical distributed application scenarios are easy to find at big internet firms, such as Google and Bing. The click log data of these service providers are distributed over thousands of servers around the world, and usually grow by up to megabytes per minute. In these distributed big data scenarios, 90% of regular analytics jobs issue queries against different types of aggregated values, instead of requiring the raw log records. To generate these aggregated results efficiently, performance metrics are computed locally from the log data, and then a distributed aggregation can be adopted [1].

As another example, consider wireless sensor network (WSN) application scenarios. In these applications, nodes are often powered by a battery, which means their energy capacity is limited. Meanwhile, such WSNs are envisioned to be spread out over a large geographical area, and the total number of nodes is huge, so replacing batteries is infeasible. Saving the overall energy resources and extending the lifetime of the network is therefore essential. Distributed aggregation is also a popular research topic in this area [2].

Enabling multi-functional support is a fundamental requirement in practice. Here, multi-functional support means providing as many statistical results as possible. Typical aggregation functions include count, summation, mean, median, maximum, minimum, variance, mode, etc. These statistical results are typically used to construct a data cube for data analysis at multiple abstraction levels in data warehouse platforms [3]. To improve the performance of data mining, it is a basic requirement to keep as many data features (i.e., statistics) as possible in data cubes, which means that multi-functional support is necessary for the corresponding distributed aggregation schemes. System-wide properties generated from data aggregation can also be used as input parameters for other distributed applications and algorithms, or used directly for decision making. For example, setting the fan-out of a gossip protocol [4] in peer-to-peer applications, or achieving load balancing in content delivery networks [5], requires aggregation results as parameters or inputs.

Several distributed aggregation schemes [6–8] have been put forward. Security is a basic requirement for most applications, and several secure distributed aggregation schemes [2, 9–13] have likewise been proposed. However, most of them support only a very limited set of statistics, and even combining several existing schemes still cannot satisfy the requirement. In fact, efficiently obtaining global statistical results, such as the median and the mode, in a distributed manner is still a challenge even without considering security [3].

In RCDA [14], a homomorphic encryption algorithm is used to provide end-to-end confidentiality, and all sensing data are simply concatenated, without any compression, so that all of them can be recovered. Although the scheme can support arbitrary aggregation functions, the communication cost is too heavy for large-scale networks. Based on RCDA, EERCDA [13] uses a differential data transfer method to reduce the communication cost, in which the difference data rather than the raw data are transmitted from the sensor node to the cluster head. However, the total transmission overhead is still too heavy in most cases.

To the best of our knowledge, securely obtaining typical statistical results simultaneously in a distributed system, without recovering the original data, is still an open problem.

In this paper, we study the problem of multi-functional secure distributed aggregation, in which all the aggregation functions mentioned above can be obtained securely in a single aggregation query. We propose three complementary schemes to address this problem. We first present SEDAR, a SEcure Data Aggregation scheme under the Range segmentation model, and then propose two enhanced versions, REDAR (Random based SEDAR) and CEDAR (Compression based SEDAR).

To reduce the communication cost by capturing the data characteristics, the proposed schemes adopt a range segmentation model, and each range uses a different aggregation strategy. Raw data in the dominant range are encoded into well-defined vectors at each node to preserve both the order-related and the value-related information during distributed aggregation, and thus different types of statistics can be obtained simultaneously without recovering the original data. The vectors are encrypted by a homomorphic scheme, and the encrypted vectors are aggregated directly in the cipher domain at intermediate nodes, so concealment is also achieved. Raw data in the other ranges are encrypted by a traditional asymmetric encryption scheme and transmitted without in-network aggregation.

The major contributions of this paper are summarised as follows.

We propose a novel and practical scheme, called SEDAR, in which all common statistical results can be securely and efficiently obtained without recovering the original data.

We also present two enhanced versions, namely REDAR and CEDAR, which further reduce the communication cost at the price of lower security and lower accuracy, respectively.

We implement these three schemes and extensively evaluate their performance. Evaluation results, based on six different real-data scenarios, show that all of them perform well in terms of cost and accuracy.

The remaining parts of this paper are structured as follows. Section 2 describes terminology and additional background knowledge. Sections 3, 4 and 5 introduce SEDAR, REDAR and CEDAR. Sections 6 and 7 present the performance analysis and the evaluation results. Section 8 briefly examines related work. Section 9 provides a summary.

Preliminaries

In this section, we first give a range segmentation model and a network model, and then present the problem definition. We also introduce a homomorphic encryption scheme.

Range Segmentation Model

An illustration of the range segmentation model is given in Fig 1. The terminology used in this model is defined as follows.

Fig 1. Range Segmentation Model.

Definition 1 (Rm, measurement range) Measurement ranges are those over which the measurement instruments are calibrated. Convincing and reliable results of a given instrument will only appear in its measurement range, i.e., Rm = [XLM, XUM], s.t. Rm ⊆ R

Definition 2 (Re, effective range, operation range, valid range) Effective range is the set of allowed values for a variable in a concrete application. It’s a subset of Rm, i.e., Re = [XLE, XUE], s.t. Re ⊆ Rm

Definition 3 (Rd, dominant range, dominant area, advantaged region, main range) The dominant range is a subset of Re in which values occur with significantly greater probability than elsewhere, i.e., Rd = [XL, XU] s.t. Rd ⊆ Re && P(Re) − P(Rd) ≪ 1

Definition 4 (Rb, border region, boundary region, margin area) The border region is the set of allowed values that lie outside the dominant range. It is a subset of Re, i.e., Rb = Re − Rd

Network Model

The network is modeled as a connected graph G = (V, E), with |V| vertices and |E| links. Each vertex represents a network node and each link represents a communication channel. A node is a logical concept. For example, in global-scale distributed systems, each data center can be regarded as a node.

The sink node S ∈ V, which has powerful computing and storage capacity, is a trusted node. S is also known as the query server. The remaining nodes C ⊂ V may be either reliable or unreliable, and each node has only one parent node. |C| = N. X = {x1, x2, …, xN} is the raw data generated at these nodes. A set of nodes A ⊂ C is selected as aggregator nodes. The aggregator nodes also act as cluster heads, and the other nodes (C − A) are cluster members. Each cluster member joins an appropriate cluster according to a certain criterion, such as signal strength in a wireless network or delay in a wired network.

Problem Definition

Definition 5 (Data aggregation) Given a dataset X = {x1, x2, …, xN}, an aggregation function set F = {f, h, …}, and an aggregation result set Y = {y1, y2, …}, a data aggregation is defined as Y = F(X), s.t. |Y| ≪ |X|.

Definition 6 (Distributed Aggregation) Given a network G, let X be the raw data generated at the nodes, and divide X into several subsets {X1, X2, …, XM}, s.t. M < N, X1 ∪ X2 ∪ … ∪ XM = X, X1 ∩ X2 ∩ … ∩ XM = ∅. An in-network data aggregation is defined as y = F(X) = f(h(X1), …, h(XM)). Each subset can be further divided, and this definition is still satisfied.
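As an illustration of Definition 6, the following minimal sketch shows a decomposable aggregation in which h computes a partial result per subset and f combines the partial results. The choice of (count, sum) as the partial result and the sample values are assumptions made only for this example.

```python
def h(subset):
    """Partial aggregate computed at an aggregator node: (count, sum)."""
    return (len(subset), sum(subset))


def f(partials):
    """Final aggregate computed at the query server from the partial results."""
    count = sum(c for c, _ in partials)
    total = sum(s for _, s in partials)
    return {"count": count, "sum": total, "mean": total / count}


# Illustrative raw data, split into M = 3 disjoint subsets (one per cluster).
X = [32, 32, 33, 28, 33, 34, 33, 25]
subsets = [X[0:3], X[3:5], X[5:8]]

# y = F(X) = f(h(X1), ..., h(XM))
result = f([h(s) for s in subsets])
```

Because count and sum are decomposable, the result is the same no matter how X is partitioned. Statistics such as the median do not decompose this way, which is the difficulty the proposed schemes target.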

Data aggregation of each subset is accomplished at aggregator nodes, and the final data aggregation is executed at the query server.

Definition 7 (MFSDA, Multifunction Secure Distributed Aggregation) An MFSDA is a distributed aggregation that provides both data confidentiality and multi-functional support. Multi-functional means that several statistical results can be obtained efficiently in the same query, and that the results include at least count, summation, average, median, maximum, minimum, variance and standard deviation.

Homomorphic Encryption Scheme

Traditional encryption technology is not suitable for secure distributed aggregation. It only provides concealment and does not support operations on ciphertext, so the intermediate aggregators have to decrypt the received data before aggregation, and the aggregated results then need to be re-encrypted before sending. Frequent encryption and decryption at intermediate nodes increases the computing cost and the energy consumption. Key management is also difficult: each intermediate node has to maintain the private key for decryption, which increases the risk of leaks.

To reduce the computing cost and enhance the security, a homomorphic encryption scheme is used in the proposed schemes. It is derived from the notion of homomorphism in abstract algebra. With a homomorphism, operations in one algebraic system (plaintext) can be mapped onto operations in another algebraic system (ciphertext), which means that data aggregation can be performed on ciphertext directly, and only the sink node needs to store the private key for decryption. Homomorphic encryption includes partially homomorphic and fully homomorphic schemes. In theory, all these statistics can be easily computed with fully homomorphic encryption. However, fully homomorphic encryption, while revolutionary, is not really practical. Practitioners therefore rely on existing partially homomorphic encryption, which is constructed from traditional encryption schemes and has been widely used in multiparty computation, electronic voting, non-interactive verifiable secret sharing, e-auctions, and other applications [15, 16].

The homomorphic encryption used in the proposed schemes is partially homomorphic, allowing homomorphic computation of only one operation (i.e., addition). To reduce the key size while keeping high security, and to lower the computation cost, the elliptic curve ElGamal encryption scheme (EC-EG) [2, 14] is used here. EC-EG is also an asymmetric homomorphic encryption scheme, so key management is easy. It consists of four parts: Setup, KeyGen, Encryption (HEnc) and Decryption (HDec). Its ciphertexts consist of points on an elliptic curve over a finite field, and ⊕ is the point addition on elliptic curves.

Theorem 1 (Additive Homomorphic Encryption) EC-EG is an additive homomorphic encryption scheme, namely the addition in plaintext is equivalent to point addition in cipher domain, i.e., m1 + m2 = HDec(HEnc(m1) ⊕ HEnc(m2))
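The additive property in Theorem 1 can be illustrated with a small stand-in. The sketch below is not EC-EG: it is a toy "exponential ElGamal" over integers modulo a prime, chosen only because it has the same additive homomorphism and fits in a few lines. The modulus, base, function names and the small plaintext bound are all assumptions for illustration, and the parameters are not meant to be secure.

```python
import secrets

P = 2**127 - 1  # a Mersenne prime used as a toy modulus (assumption)
G = 3           # fixed base (assumption)


def keygen():
    """Return (private key, public key)."""
    priv = secrets.randbelow(P - 2) + 1
    return priv, pow(G, priv, P)


def henc(m, pub):
    """Randomized encryption of a small non-negative integer m."""
    r = secrets.randbelow(P - 2) + 1
    return (pow(G, r, P), pow(G, m, P) * pow(pub, r, P) % P)


def hadd(c1, c2):
    """Ciphertext-domain operation that corresponds to plaintext addition."""
    return (c1[0] * c2[0] % P, c1[1] * c2[1] % P)


def hdec(c, priv, max_m=64):
    """Recover m by removing the mask and searching G**m for small m."""
    gm = c[1] * pow(c[0], P - 1 - priv, P) % P  # c0**(-priv) via Fermat
    acc = 1
    for m in range(max_m + 1):
        if acc == gm:
            return m
        acc = acc * G % P
    raise ValueError("plaintext outside the search bound")


priv, pub = keygen()
total = hdec(hadd(henc(5, pub), henc(7, pub)), priv)  # equals 5 + 7
```

As in EC-EG, decryption yields an encoding of the sum (here G raised to the sum), so the plaintext must be small enough to be recovered by search. Encryption is randomized, so two encryptions of the same value produce different ciphertexts.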

SEDAR

In this section, we introduce SEDAR to solve the multi-functional secure distributed aggregation problem. We first give a brief overview of SEDAR. Then, a detailed version is presented. Finally, a concrete example is given.

Overview

In the proposed scheme, aggregation is performed in the cipher domain. Both the sub-aggregation results and the final aggregation result are encrypted vectors, which can be decrypted using the private key owned by the server. All statistics are calculated directly from the final aggregated vector at the server, which makes things much easier.

As shown in Fig 2, there are five steps in the proposed scheme: mapping, encoding, encryption, aggregation, and decryption. The first three are executed on each node independently, while the last two are executed at aggregation nodes and the server respectively. Mapping and encoding are used to enable multi-function supporting, while the other three are used to achieve data confidentiality.

Fig 2. SEDAR.

Besides the raw data x, there are five kinds of data, one for each step: mapped data y, encoded data, encrypted data, the aggregation of encrypted data, and the aggregation of encoded data.

Raw data xk is the original data gathered at node k, and belongs to a subset of the real domain. This real domain is split into several partitions based on the range segmentation model, and a different strategy is chosen for each range.

The lower bound of Rd is defined as XL = μ − βσ, and the upper one is XU = μ + βσ, where μ and σ are the mean and standard deviation estimated from historical or empirical data, and β is a scaling factor. In practice β ∈ [1.8, 3], and β = 2 suits most applications. The bounds of Re and Rm are determined by the application itself.

Effective data in the dominant range (xk ∈ Rd) are transformed into mapped data yk using the mapping function. yk belongs to a subset of the natural numbers, i.e. yk ∈ (0, L], where L = (XU − XL)/a and a is the accuracy requirement of xk. The conversion between xk and yk is achieved by the mapping function and its inverse, i.e. fm and fm⁻¹. To preserve both value and order during this conversion, fm and fm⁻¹ should be monotonic functions. The mapping function can be linear or nonlinear, and either piecewise or non-piecewise. For example, a linear mapping function and its inverse are yk = fm(xk) = ⌈(xk − XL)/a⌉ and xk = fm⁻¹(yk) = a · yk + XL.

Effective data outside the dominant range (xk ∈ Rb) are encrypted by a traditional asymmetric encryption scheme, and transmitted without in-network aggregation. As P(Re) − P(Rd) ≪ 1, transmitting these data individually does not increase the transmission overhead significantly. Abnormal data that lie outside Re are also reported to the server without aggregation.
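The range test that selects a strategy for each raw value can be sketched as follows. This is a minimal illustration, assuming the dominant range is centred on an estimated mean with half-width β times the estimated standard deviation, and assuming half-open intervals (lower, upper] as in the worked example later in this section. The function names are hypothetical.

```python
def dominant_bounds(mu, sigma, beta=2.0):
    """Bounds of Rd from the estimated mean and standard deviation (assumed form)."""
    return mu - beta * sigma, mu + beta * sigma


def classify(x, re, rd):
    """Return which strategy applies to raw value x.

    re and rd are (lower, upper) pairs interpreted as half-open (lower, upper].
    """
    if rd[0] < x <= rd[1]:
        return "Rd"     # map, encode, homomorphically encrypt, aggregate in-network
    if re[0] < x <= re[1]:
        return "Rb"     # traditional encryption, no in-network aggregation
    return "alarm"      # outside the effective range: report to the server


Re = (20, 40)
Rd = dominant_bounds(32, 1)  # (30.0, 34.0) with beta = 2
labels = [classify(x, Re, Rd) for x in (32, 28, 16, 49)]
```

With these bounds, 32 falls in Rd, 28 in Rb, and both 16 and 49 raise an alarm.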

Encoded data is a vector vk with L elements. The (yk)th element of vk is 1, and all other elements are 0. The conversion between yk and vk is achieved by the encoding function fe and its inverse. For example, the encoding function can be programmed as two instructions: initialise a zero vector of length L, and set its (yk)th element to 1. Its inverse function can be implemented by returning the index of the non-zero element.
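The mapping and encoding steps can be sketched as below. The linear mapping used here is an assumption consistent with the worked example later in this section (Rd = (30, 34], a = 1, so 32 maps to 2), and the function names are illustrative.

```python
import math


def fm(x, x_low, a):
    """Linear mapping from a raw value in (x_low, x_up] to an index in (0, L]."""
    return math.ceil((x - x_low) / a)


def fm_inv(y, x_low, a):
    """Inverse mapping: the value represented by index y."""
    return x_low + a * y


def fe(y, length):
    """Encoding: zero vector of the given length with the y-th element set to 1."""
    v = [0] * length
    v[y - 1] = 1  # indices in the text are 1-based
    return v


def fe_inv(v):
    """Inverse encoding: 1-based index of the non-zero element."""
    return v.index(1) + 1


X_LOW, A, LENGTH = 30, 1, 4
dominant_values = [32, 32, 33, 33, 34, 33]
vectors = [fe(fm(x, X_LOW, A), LENGTH) for x in dominant_values]

# Element-wise sum of the encoded vectors: what aggregation yields after decryption.
aggregate = [sum(column) for column in zip(*vectors)]
```

Summing the one-hot vectors gives a histogram over the dominant range, from which both value-related and order-related statistics can be read.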

Each node k encrypts its encoded vector vk using the homomorphic encryption scheme, and sends the ciphertext to its parent. As all encrypted data are generated with the same public key, they can be aggregated directly in the cipher domain. According to the homomorphic property, the final aggregation result can be obtained by decrypting the aggregated ciphertext. The typical statistical results can then be obtained from the decrypted aggregate vector.

Detail of SEDAR

SEDAR consists of three procedures: Setup, Operations on Clients, and Operations on Server.

Setup

The Setup procedure performs network initialization, encryption initialization, and parameters distribution.

Boundary definitions (i.e., Re, Rd, and Rb) and the accuracy requirement (i.e. a) are distributed to each node, including the clients and the server. The parameters Itmsize, Bmsize, Bcsize, and Bnum are also public information.

Two encryption schemes are used in this paper: a traditional one and a homomorphic one. Both are public-key cryptographic schemes.

The traditional encryption scheme is used for Rb, where the key pair is {KPriS, KPubS}, the encryption function is C = Enc(msg, KPubS), and the decryption function is msg = Dec(C, KPriS).

The homomorphic encryption scheme is used for Rd, where the key pair is {KPriSH, KPubSH}, the encryption function is C = HEnc(msg, KPubSH), and the decryption function is msg = HDec(C, KPriSH).

Both KPubS and KPubSH are public information, while the private keys (i.e. KPriS and KPriSH) must be kept secret and held only by the server.

Operations on Clients

It consists of three parts: local data processing, received data processing and data transmission.

As shown in algorithm 1, in local data processing each node gathers the raw data xi and processes it according to the range definition. The traditional encryption scheme is used for data belonging to Rb, and the encrypted data are added to bSeti. For data in Rd, the mapping function fm and the encoding function fe are applied before the homomorphic encryption, and the encrypted data are added to hSeti. For data outside the valid range Re, the node ID is encrypted and added to the alarm set aSeti.

Algorithm 1 Operations on Client (Part I)

1: procedure local data processing

2: if (xi ∈ Rb) then

3: Ci ← Enc(xi, KPubS)

4: bSeti ← {Ci}

5: else if (xi ∈ Rd) then

6: yi ← fm(xi) ⊳ Mapping

7: vi ← fe(yi), s.t. vi(yi) = 1 ⊳ Encoding

8: Chi ← null

9: for j ← 0 to Bnum − 1 do

10:

11:

12:

13: c ← HEnc(m, KPubSH)

14: Chi ← [c, Chi]

15: end for

16: hSeti ← {Chi}

17: else

18: CIDi ← Enc(IDi, KPubS)

19: aSeti ← {CIDi}

20: end if

21: end procedure

As shown in algorithm 2, the received data processing only takes place at cluster heads (CHs). Each CH uses it to deal with the packets received from its children, i.e., its cluster members (CMs). Items in each packet are classified into three sets, i.e., bSeti, hSeti, and aSeti. All items of hSeti are aggregated directly in the cipher domain, and the aggregation result is Chi.

In the data transmission, all processing results Chi, bSeti, and aSeti are sent to the node's parent.

Each element of the encoded vector (i.e., vk(i)) or of the aggregated vector is allocated the same size (denoted as Itmsize or |vk(i)|), which is influenced by N and the distribution of x.

The maximum size that the homomorphic encryption function can operate on each time (denoted as Psize) is always larger than Itmsize. To reduce the total ciphertext size and the computation cost, several adjacent vector elements can be encrypted at the same time. For example, in Fig 2, each element is allocated three bits, and every two elements are encrypted together.

The maximum number of vector elements that can be encrypted by the encryption function at once is ⌊Psize/Itmsize⌋, and the actual size of the plaintext for the encryption function is Bmsize = ⌊Psize/Itmsize⌋ × Itmsize. The corresponding ciphertext size is denoted as Bcsize. When the size of the encoded vector, L × Itmsize, is larger than Bmsize, the homomorphic encryption function needs to be repeated Bnum = ⌈L × Itmsize/Bmsize⌉ times to finish encrypting the vector. The decryption function also needs to be repeated Bnum times.
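The packing idea can be sketched with plain integers. The sketch assumes that each element occupies a fixed number of bits inside one plaintext block and that blocks are added as integers, so element-wise counts add up as long as no element overflows its field. The function names and the 6-bit block size are illustrative assumptions.

```python
import math


def pack(elements, itm_size):
    """Pack vector elements into one integer, first element in the highest bits."""
    block = 0
    for e in elements:
        if not 0 <= e < (1 << itm_size):
            raise ValueError("element does not fit into its field")
        block = (block << itm_size) | e
    return block


def unpack(block, itm_size, count):
    """Split an integer block back into `count` elements of itm_size bits each."""
    mask = (1 << itm_size) - 1
    return [(block >> (itm_size * (count - 1 - i))) & mask for i in range(count)]


def block_count(length, itm_size, p_size):
    """Number of encryption calls needed for a vector with `length` elements."""
    per_block = p_size // itm_size  # elements that fit into one plaintext block
    return math.ceil(length / per_block)


# Two nodes, two 3-bit elements per block: adding blocks adds the counts.
summed = unpack(pack([0, 1], 3) + pack([1, 1], 3), 3, 2)
blocks_needed = block_count(27, 3, 6)
```

A field of Itmsize bits can hold counts up to 2^Itmsize − 1, which is one way to see why the element size depends on the number of nodes N and on how concentrated the data are.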

Algorithm 2 Operations on Client (Part II)

1: procedure received data processing

2: if current node is a CH then

3: for all received Packetk do

4: extract {Chk, bSetk, aSetk} from Packetk

5: bSeti ← bSeti ∪ bSetk

6: aSeti ← aSeti ∪ aSetk

7: hSeti ← hSeti ∪ {Chk}

8: end for

9: for j ← 1 to Bnum do

10: ct1 is initialized as the infinity point of E

11: for all Chk ∈ hSeti do

12: ct2 ← Chk [(j − 1)Bcsize + 1, jBcsize]

13: ct1 ← ct1 ⊕ ct2

14: end for

15: Chi [(j − 1)Bcsize + 1, jBcsize]←ct1

16: end for

17: end if

18: end procedure

Operations on Server

Operations on the server consist of five parts: data receiving and retrieving, boundary range data processing, alarm data processing, dominant range data processing, and obtaining statistical results. The first three are contained in algorithm 3, while the others are contained in algorithms 4 and 5.

Algorithm 3 Operations on Server (Part I)

1: procedure operations on server

2: for all received Packetk do ⊳ data retrieving

3: extract {Chk, bSetk, aSetk} from Packetk

4: bSet ← ⋃bSetk

5: aSet ← ⋃aSetk

6: hSet ← ⋃Chk

7: end for

8: mSet ← {} ⊳ boundary range data processing

9: for all Ci ∈ bSet do

10: mi ← Dec(Ci, KPriS)

11: mSet ← ⋃ {mi}

12: end for

13: for all cid ∈ aSet do ⊳ alarm data processing

14: IDi ← Dec(cid, KPriS)

15: treat IDi as a potential abnormal node

16: end for

17: end procedure

In data receiving and retrieving, the server receives all packets from its children. All its children are CHs, and each packet has the form {Chi, bSeti, aSeti}. Items in each packet are classified into three sets, i.e. bSet, aSet and hSet.

Items in bSet are boundary range data, all of which are encrypted with the traditional scheme. The decrypted data are added to mSet.

Items in aSet are the IDs of clients whose data lie outside the effective range Re. Those nodes are treated as potentially abnormal nodes, and may need further analysis.

Items in hSet are dominant range data. All of them are homomorphically encrypted, so all items in this set can be aggregated directly in the cipher domain. After decrypting the aggregated ciphertext using KPriSH, we get the aggregation result of the data in the dominant range in vector form, i.e., (n1, n2, …, nL).

Algorithm 4 Operations on Server (Part II)

1: procedure Operations on Server

⊳ dominant range data processing

2: for j ← 1 to Bnum do

3: ct1 is initialized as the infinity point of E

4: for all Ci ∈ hSet do

5: ct2 ← Ci [(j − 1)Bcsize + 1, jBcsize]

6: ct1 ← ct1 ⊕ ct2

7: end for

8: tmp ← HDec(ct1, KPriSH)

9:

10: end for

11:

12:

13: for j ← 1 to L do

14: nj ← M [|M| −jItmsize + 1, |M| −(j − 1)Itmsize]

15:

16: end for

17: end procedure

Finally, each statistical result can be obtained directly from the aggregated vector (n1, n2, …, nL) and mSet by algorithm 5.

Property of SEDAR

Multi-function

On the one hand, the value-related information needs to be preserved in the transformation for the summation-based statistics. yk can be recovered from the encoded vector, and xk can be recovered from yk. Each element of the aggregated vector itself represents how many raw data items take the corresponding value. So the value-related information is maintained in the vector.

On the other hand, the order-related information needs to be preserved in the transformation for the comparison-based statistics. Assume that the ith and jth elements of the vector are non-zero, and i > j. We recover the corresponding raw data as fm⁻¹(i) and fm⁻¹(j), and then use the monotonicity of fm to judge which one is larger. So the order-related information is maintained in the vector.

Therefore, both the summation based statistics and the comparison based statistics can be calculated in the proposed scheme.

Data Privacy

On the one hand, the adversary cannot infer the true position of the non-zero element from an encrypted vector, and thus cannot recover xk from this information. The homomorphic encryption scheme is randomized, so even if two elements have the same value, their ciphertexts still differ. For example, at a leaf node, each encoded vector contains exactly one non-zero element, and all other elements are zero. In the plaintext domain, it is easy to infer the corresponding value xk by obtaining the position i of the non-zero element and applying the inverse mapping fm⁻¹(i). In the ciphertext domain, however, the encrypted elements all differ from each other, and even the encrypted zero elements differ from each other. As a result, inferring the position of the non-zero element in an encrypted vector is difficult.

Algorithm 5 Operations on Server (Part III)

1: procedure obtain statistic result

2: CNT ← ∑i ni + |mSet| ⊳ Count

3: SUM ← ∑i ni · fm⁻¹(i) + ∑m∈mSet m ⊳ Summation

4: MEAN ← SUM / CNT ⊳ Average/mean

5: imax ← max({i | i ∈ (0, L] && ni > 0})

6: max1 ← fm⁻¹(imax)

7: max2 ← max(mSet)

8: MAX ← max{max1, max2} ⊳ Maximum

9: imin ← min({i | i ∈ (0, L] && ni > 0})

10: min1 ← fm⁻¹(imin)

11: min2 ← min(mSet)

12: MIN ← min{min1, min2} ⊳ Minimum

13: cntL ← #{m ∈ mSet | m < min1}

⊳ cntL is the number of elements in mSet whose value is less than min1.

14:

15:

16: if CNT is odd then ⊳ Median

17:

18: else

19:

20: end if

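21: SUM(x²) ← ∑i ni · (fm⁻¹(i))² + ∑m∈mSet m²

22: E(x²) ← SUM(x²) / CNT

23: E(x)² ← MEAN²

24: VAR ← E(x²) − E(x)² ⊳ Variance

25: STD ← √VAR ⊳ Standard deviation

26: m1cnt ← max({ni | i ∈ (0, L]})

27: imode1 ← the index i for which ni = m1cnt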

28: mode2 ← mode(mSet)

29: m2cnt ← sum(mSet == mode2)

30: if m1cnt > m2cnt then ⊳ Mode

31: MODE ← fm⁻¹(imode1)

32: else

33: MODE ← mode2

34: end if

35: end procedure

On the other hand, all messages relayed or aggregated at intermediate nodes are encrypted. Thanks to the homomorphic encryption scheme, the aggregation of data in Rd is performed directly on the ciphertext of the encoded vectors. Messages in aSet and bSet are encrypted by a traditional encryption scheme. All private keys are held only by the server, and without a private key no one can decrypt the messages, so data confidentiality is achieved.

A Concrete Example for SEDAR

Assume Re = (20, 40], Rd = (30, 34] and the accuracy requirement is a = 1. As shown in Table 1, there are 10 nodes in the given network. The raw data of each node are listed in the 2nd column, and the 3rd and 4th columns give the data classification and the processing results.

Table 1. Example for SEDAR.

| ID | Raw data | Range | Result |
|---|---|---|---|
| 1 | 32 | Rd | (0 1 0 0) |
| 2 | 16 | Outside Re | aSet = {“2”} |
| 3 | 32 | Rd | (0 1 0 0) |
| 4 | 33 | Rd | (0 0 1 0) |
| 5 | 28 | Rb | mSet = {28} |
| 6 | 33 | Rd | (0 0 1 0) |
| 7 | 34 | Rd | (0 0 0 1) |
| 8 | 49 | Outside Re | aSet = {“8”} |
| 9 | 33 | Rd | (0 0 1 0) |
| 10 | 25 | Rb | mSet = {25} |

The raw data of node 2 and node 8 are outside the valid data range Re = (20, 40]. Both are regarded as illegal data and discarded, and the node IDs are added to the alarm set aSet.

The raw data of node 5 and node 10 are in the boundary range Rb = (20, 30] ∪ (34, 40]. Both are added to the boundary set bSet.

The other raw data are in the dominant range Rd. Each of them is transformed into yk (yk ∈ (0, 4]) by the mapping function. Each valid mapped value yk is then encoded into a vector of length L. The yk-th element is 1, while all the remaining elements are set to 0. For example, at node 1 the raw data is x1 = 32, the mapped data y1 = 2 is obtained after the mapping step, and in the encoding step the 2nd (y1-th) element of the vector is set to 1 while the other elements are 0, i.e. (0 1 0 0).

Each vector is encrypted by the homomorphic encryption scheme, and in-network aggregation is performed directly in the cipher domain.

Elements of aSet and bSet are encrypted by the traditional encryption scheme, and relayed to the server without in-network aggregation.

According to the homomorphic property, the aggregation of vectors in the ciphertext domain is equivalent to that in the plaintext domain. Therefore, the server can obtain the final aggregation result by decrypting the received data. Encrypted data in aSet and bSet can also be decrypted by the server. The final data obtained at the server include the aggregated vector (0 2 3 1), mSet = {28, 25}, and aSet = {“2”, “8”}.

Each statistic can be calculated using algorithm 5.

CNT = (2 + 3 + 1) + 2 = 8;

SUM = 2 × (2 + 30) + 3 × (3 + 30) + 1 × (4 + 30) + (25 + 28) = 250;

MEAN = 31.25;

imax = 4, max1 = 34, max2 = 28, MAX = 34;

imin = 2, min1 = 32, min2 = 25, MIN = 25;

cntL = 2, M = 2, M′ = 3, MEDIAN = 32.5;

SUM(x²) = 2 × (2 + 30)² + 3 × (3 + 30)² + 1 × (4 + 30)² + (25² + 28²) = 7880; E(x²) = SUM(x²)/CNT = 985; E(x)² = MEAN²;

VAR = 8.4375; STD = 2.9;

m1cnt = 3; imode1 = 3; mode2 = 25. (In mSet, 25 and 28 have the same frequency, and the first element, i.e., 25, is chosen as its mode.)

m2cnt = 1; because m1cnt > m2cnt, MODE = 3 + 30 = 33.

Note that CNT is 8 instead of 10. This is because the data of two nodes are outside the operation range, which indicates a node failure or some other fault. That is to say, the computation of the final statistics adapts automatically to a dynamic network.
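The figures in this example can be reproduced with a short script. The sketch below computes the statistics from the aggregated vector and mSet. It is a simplification rather than a transcription of algorithm 5: in particular it finds the median by expanding the histogram into a sorted list, and the function and parameter names are illustrative assumptions.

```python
import math
from collections import Counter


def statistics(n, m_set, x_low, a):
    """Statistics from the aggregated vector n (counts per index) and mSet."""
    # Index i (1-based) of the vector represents the value x_low + a * i.
    counts = Counter({x_low + a * (i + 1): c for i, c in enumerate(n) if c > 0})
    counts.update(m_set)  # boundary-range values arrive individually

    cnt = sum(counts.values())
    total = sum(v * c for v, c in counts.items())
    mean = total / cnt
    ordered = sorted(counts.elements())
    mid = cnt // 2
    median = ordered[mid] if cnt % 2 else (ordered[mid - 1] + ordered[mid]) / 2
    var = sum(v * v * c for v, c in counts.items()) / cnt - mean ** 2
    return {
        "CNT": cnt, "SUM": total, "MEAN": mean,
        "MAX": ordered[-1], "MIN": ordered[0], "MEDIAN": median,
        "VAR": var, "STD": math.sqrt(var),
        "MODE": counts.most_common(1)[0][0],
    }


stats = statistics([0, 2, 3, 1], [25, 28], x_low=30, a=1)
```

Only the histogram over the dominant range and the few boundary values are needed; no individual value from the dominant range is recovered.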

REDAR

In SEDAR, most elements of the encoded data near the leaf nodes are zero, which means the data contain redundant information. Transmitting these low-information data as a full vector is too expensive. The encrypted zeros serve only to hide the exact position of the encrypted non-zero elements, so encrypting all of them is not necessary, especially when L is large.

In this section, we propose REDAR, which can significantly reduce the communication cost at the price of lower security at the leaf nodes. In REDAR, all non-zero elements and a small number of randomly selected zero elements of a leaf node's vector are encrypted.

Random Encryption

Randomly selected zero elements are used to reduce the packet size while still protecting the non-zero elements.

First, the encoded vector is split into several segments. Then, all non-zero elements and a small number of randomly chosen zero elements are encrypted.

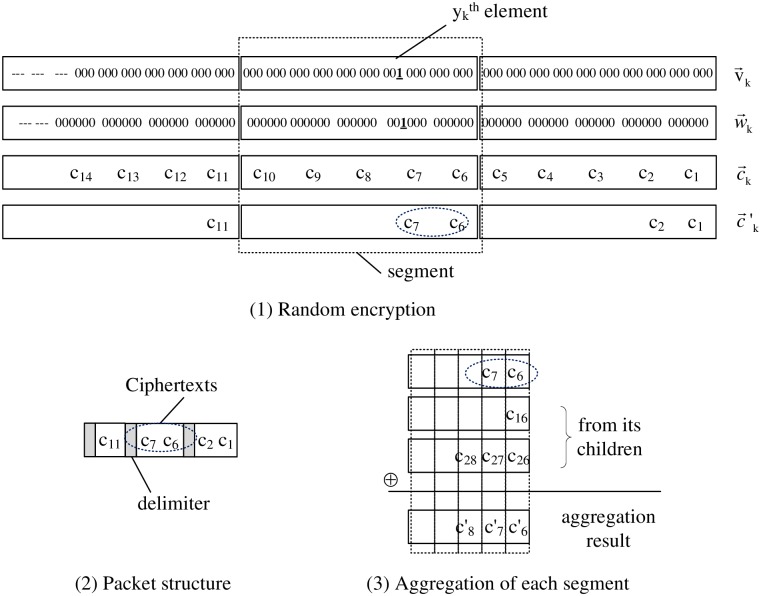

For example, in Fig 3-1, there are 27 elements in the encoded vector, each element contains 3 bits, and each cipher element is encrypted from 2 elements, except the leftmost cipher element, which contains only 1 element in this case. The vector is split into 3 segments: each of the two rightmost segments has at most 5 cipher elements, and the leftmost segment has at most 4 cipher elements.

Fig 3. REDAR.

For each segment whose elements are all zero, a random number r is generated between 1 and ns, where ns is the number of elements in the segment. Then the r rightmost elements of the segment are encrypted using the homomorphic encryption scheme. For example, in Fig 3-1, all elements of the leftmost and the rightmost segments are zero, with ns = 4 and ns = 5 respectively, and one cipher element in the leftmost segment and two cipher elements in the rightmost segment are obtained.

For a segment containing a non-zero element, a random number r is generated between 0 and ns − py, where py is the position of the non-zero element in the segment counted from the right end. Then the r + py rightmost elements of the segment are encrypted. For example, in Fig 3-1, the 2nd segment contains a non-zero element, and py = 2. Because the random function returns r = 0, only the py + r = 2 rightmost elements are encrypted.
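The selection rule for one segment can be sketched as follows. This is a minimal illustration that works on plaintext elements rather than cipher elements and uses Python's standard pseudo-random generator, which is an assumption made for brevity. The function name is hypothetical.

```python
import random


def encrypted_count(segment, rng):
    """Return how many rightmost elements of a segment are to be encrypted."""
    ns = len(segment)
    nonzero = [i for i, e in enumerate(segment) if e != 0]
    if not nonzero:
        # All-zero segment: encrypt between 1 and ns rightmost elements.
        return rng.randint(1, ns)
    # py: position of the (leftmost) non-zero element counted from the right end.
    py = ns - nonzero[0]
    # Encrypt the non-zero element plus r extra elements to its left.
    return py + rng.randint(0, ns - py)


rng = random.Random(2024)
segment = [0, 0, 0, 1, 0]            # non-zero element second from the right
k = encrypted_count(segment, rng)
to_encrypt = segment[len(segment) - k:]
```

Whatever value r takes, the non-zero element is always inside the encrypted suffix, so no information needed for the final result is dropped. A deployment would need a cryptographically secure source of randomness.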

Packing and Unpacking

The packing step constructs a packet from the random encryption result. As shown in Fig 3-2, the encrypted data in each segment are packed together in their original order, and a delimiter is added between adjacent segments. Because every cipher element has the same size and only the rightmost elements of each segment are encrypted, the original encrypted vector can be reconstructed in the unpacking step.

Secure Data Aggregation

All received packets are unpacked to recover their segments, which are then aligned with the locally generated encrypted data. Data aggregation is then performed directly in the cipher domain, column by column, and the aggregation results of all segments are packed and sent to the node's parent.

For example, in Fig 3-3, the first line is the encrypted data generated locally, and the 2nd and 3rd lines are received from the node's children; all three are the 2nd segment of their respective vectors. Segments received from different children may have different numbers of elements, so all of them are aligned to the right side. Because c17 does not exist in the 1st child, it is ignored, and only the other two elements in that column are aggregated. Among these three segments, the maximum number of elements is 3 (the 3rd line in Fig 3-3), so the aggregation result of this segment also has 3 elements.
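The right-aligned, column-by-column aggregation can be sketched as below. Plain integers and ordinary addition stand in for ciphertexts and the homomorphic addition, which is an assumption made only to keep the example runnable; `aggregate_segment` is a hypothetical name.

```python
def aggregate_segment(rows, add):
    """Aggregate one segment received from several nodes.

    `rows` holds the encrypted elements of the same segment from each
    node; rows may differ in length and are aligned to the right.
    `add` is the cipher-domain addition (here any binary function).
    """
    width = max(len(row) for row in rows)
    result = []
    for col in range(width):
        acc = None
        for row in rows:
            # Index of this column inside a shorter, right-aligned row.
            offset = col - (width - len(row))
            if offset < 0:
                continue  # the row has no element in this column
            acc = row[offset] if acc is None else add(acc, row[offset])
        result.append(acc)
    return result

# Three versions of the same segment with 2, 1 and 3 elements.
merged = aggregate_segment([[5, 1], [2], [3, 4, 6]], lambda a, b: a + b)
# merged == [3, 9, 9]
```

The result has as many elements as the longest input row, which matches the observation that the aggregated segment in Fig 3-3 has 3 elements.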

Correctness and Security

Because random selection only omits zero elements, all non-zero elements in each vector are still encrypted and aggregated into the final result. No raw data is lost in REDAR, so the final results are the same as those in SEDAR.

In REDAR, the communication cost is reduced because far fewer zero elements are encrypted and included in the packet. However, as the number of encrypted elements decreases, it becomes easier for an adversary to guess the true position of the non-zero elements. An inappropriate distribution of the encrypted data also raises the adversary's success probability. We therefore need to design the random function carefully and make sure that sufficient encrypted data is retained after random selection: the more encrypted elements there are, the lower the probability of a successful guess. After aggregation at an intermediate node, the encrypted elements contain more than one non-zero element, so the adversary's success probability decreases significantly. More specifically, consider leaf node i, whose vector contains only one non-zero element. Assuming $n_i$ encrypted elements exist in the final packet, the adversary's success probability is $1/n_i$. In the cluster header, assuming k nodes are aggregated together, the probability reduces to $1/n^k$, where $n = \sum_j \max_i(n_{ij})$ and $n_{ij}$ is the number of elements in the jth segment of node i. As n and k increase along the aggregation tree, the adversary's success probability decreases rapidly.

For example, when n ≥ 25 and k ≥ 4, the success probability is no larger than $2.56 \times 10^{-6}$. In cluster-based networks, the number of members in each cluster is often larger than 4, which means that when n ≥ 25, no encrypted data outside the leaf nodes can be guessed successfully with probability larger than $2.56 \times 10^{-6}$. When k = 6 and n = 35, the success probability already decreases to $5.44 \times 10^{-10}$.
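The two figures quoted above are consistent with a success probability of the form (1/n)^k. Assuming that form, a short check reproduces them; `guess_probability` is a hypothetical helper name.

```python
def guess_probability(n, k=1):
    """Adversary's success probability, assuming the form (1 / n) ** k.

    n: number of encrypted elements visible in the packet.
    k: number of nodes aggregated together (k = 1 for a leaf node).
    """
    return (1.0 / n) ** k

p_small = guess_probability(25, 4)   # about 2.56e-06
p_large = guess_probability(35, 6)   # about 5.44e-10
```

Under this assumption, each additional aggregated node multiplies the adversary's success probability by another factor of 1/n, which is why the probability drops so quickly along the aggregation tree.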

CEDAR

In this section, we present CEDAR. CEDAR and REDAR are complementary schemes. CEDAR is used before encoding, while REDAR is used after encoding.

In SEDAR, the total communication cost is mainly determined by the size and accuracy of Rd, that is, by the vector length L. L is sometimes large, so the total communication cost can still be heavy. To reduce it, a compression step is introduced in CEDAR. As shown in Fig 4, the mapped data y is compressed from a larger space of size L into a smaller space of size L′. The encoding step is executed on the compressed data z, which decreases the vector length from L to L′. The compression function can be linear or non-linear. Due to limited space, we only illustrate the linear one.

Fig 4. CEDAR.

A linear compression function fc compresses y into z, where c is the compression factor, which is larger than 1. The encoding step is based on z instead of y, so the total communication cost is reduced from L to L′. An estimate of y can be recovered by applying the decompression function to z.
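One possible linear compression is sketched below. The rounding rule is an assumption: integer floor division is used for compression and multiplication by c for decompression, and the names `compress`, `decompress` and `compressed_length` are hypothetical. The paper's exact functions may round differently.

```python
def compress(y, c):
    """Map y from a space of size L into one of size about L / c.

    Assumption: integer y and integer factor c > 1, floor division.
    """
    return y // c

def decompress(z, c):
    """Estimate y from z (assumption: left edge of the bucket)."""
    return z * c

def compressed_length(L, c):
    """Vector length after compression: ceiling of L / c."""
    return -(-L // c)

z = compress(17, 4)          # 4
y_hat = decompress(z, 4)     # 16, an estimate of the original 17
L_new = compressed_length(27, 5)  # 6
```

With this choice the estimation error is always smaller than c, which illustrates why a larger compression factor saves more communication but lowers accuracy.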

Performance Analysis

In this section, we analyse the communication and computation performance of the proposed schemes. The performance criteria are whether a bottleneck exists and whether load balance is achieved.

Communication performance

First, we analyse the maximum packet size to judge whether a bottleneck exists. Then we analyse the distribution of packet sizes to judge whether load balance is achieved. The analysis shows that no bottleneck exists and that load balance is achieved. The details are as follows.

Data in Rd are encrypted by a homomorphic scheme, so the encrypted data can be aggregated directly in the cipher domain at the intermediate nodes, and the total length does not change. Data in Rb use a traditional encryption scheme. Without the private key, the intermediate nodes have to concatenate the encrypted data and relay them. The length therefore increases along the tree: the minimum packet size appears at the leaf nodes of the aggregation tree, and the maximum packet size appears in the vicinity of the server node.

Now, let’s analyse packet length for Rd and Rb respectively. For the sake of simplicity, data length analysis is based on plain text.

Communication cost for Rd is determined by the number of elements in the vector and the data length of each element. The former is determined by the range length of Rd and the accuracy requirement a. The latter is determined by the largest number of samples that fall on the same point; the worst case is that all samples in Rd (i.e. N × P(Rd)) fall on the same position. In practice, the probability of the worst case can be ignored.

Now, let’s consider the communication cost for Rb. Since the data in Rb are not aggregated at the intermediate nodes, the total data length reaches its maximum in the vicinity of the server node. The maximum value is determined by the total number of samples N, the probability P(Rb) that a sample falls in the region Rb, and the transmission overhead of a single sample. In practice, we can assume that abnormal data are much less probable than normal data, which means the number of elements outside Re can be ignored.

In order to judge whether load balance is achieved, let’s first analyze the distribution of traffic over the whole network.

The minimum packet size appears at the leaf nodes of the aggregation tree. In a leaf node, when x ∈ Rb, no encoding step is used, and the packet carries only the single encrypted sample; when x ∈ Rd, the packet carries the encoded vector. The former length (denoted C0) is much smaller than the latter. However, C0 occurs only in a very small number of leaf nodes. Assume each cluster contains m leaves. The probability that C0 appears in all m nodes at the same time is $(1 - P(R_d))^m$. For example, for a normal distribution with β = 2 and m = 3, $(1 - P(R_d))^m = 9.48 \times 10^{-5}$. This probability is small, which means that even if a few leaf nodes have a packet size of C0, the packet of their parent will still be at least as long as the encoded vector. Therefore, C0 is not significant, and the encoded data length for Rd should be taken as the representative minimum value.
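The normal-distribution example can be checked numerically. The sketch below assumes that the dominant range is the interval of β standard deviations around the mean, so that P(Rd) equals erf(β/√2); the function names are hypothetical.

```python
import math

def p_dominant(beta):
    """P(Rd) for a normal distribution.

    Assumption: Rd = [mu - beta * sigma, mu + beta * sigma].
    """
    return math.erf(beta / math.sqrt(2))

def p_all_boundary(beta, m):
    """Probability that all m leaves of a cluster hold data outside Rd."""
    return (1.0 - p_dominant(beta)) ** m

tail = 1.0 - p_dominant(2)      # about 0.0455
p_c0 = p_all_boundary(2, 3)     # about 9.42e-05
```

The exact tail probability of about 0.0455 gives roughly 9.42 × 10^-5, while rounding the tail up to 0.0456 reproduces the 9.48 × 10^-5 quoted in the text. Either way, the event is rare enough to support the argument.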

Across the whole network, the minimum packet size appears at the leaf nodes and the maximum appears in the vicinity of the server. Along the path from a leaf node to the root node, the packet size increases from the minimum to the maximum value. Because P(Rd) ≫ P(Rb), the growth rate of the packet size is small, and the average packet size stays close to the minimum.

The maximum packet size is the sum of the cost for Rd and the cost for Rb. On the one hand, once Rd is determined, the cost for Rd can be treated as a constant; if it is too large, CEDAR can be used, so this term is controllable. On the other hand, by the definition of Rd, P(Rd) ≈ 1, so the cost for Rb is small. As a result, the maximum packet size can be regarded as a controllable constant, and there is no bottleneck in the network.

As the differences among Costmin, Costmax and the average cost are small, we can conclude that the scheme achieves load balance.

Computation performance

For computation performance, we likewise analyse the maximum computation cost to judge whether a bottleneck exists, and the distribution of computation cost to judge whether load balance is achieved. We find that no bottleneck exists and that load balance is achieved. The details are as follows.

Each data item is either homomorphically encrypted after encoding or encrypted directly by a traditional scheme, according to the range it belongs to. Let’s denote the computation cost of the former as C11 and that of the latter as C21.

For data inside Rd, mapping and encoding are required before homomorphic encryption. Both cost much less than encryption and decryption and can thus be ignored. The intermediate nodes do not decrypt the homomorphically encrypted data; they aggregate them directly in the cipher domain. Assuming a single cipher-domain addition costs C13, the total aggregation cost is C13(N × P(Rd) − 1), because N × P(Rd) − 1 aggregation operations are needed for the N × P(Rd) elements in Rd.

The final aggregated result is decrypted at the server, at a decryption cost of C12. Each encrypted data item in the boundary range is also decrypted at the server, at a decryption cost of C22 each.

So the total computational cost is C = C11 N × P(Rd) + C13(N × P(Rd) − 1)+C12 + N × P(Rb)(C21+C22).

The average computational cost per sample is Cavg = C / N.

In instances of homomorphic encryption, the encryption and decryption costs are often much larger than the cipher-domain aggregation cost. For example, in the ECC-based version, the main operation of encryption and decryption is scalar multiplication, and the main operation of cipher-domain aggregation is point addition. The former is far more expensive than the latter, so C13 can also be ignored. Since the single decryption cost C12 is shared by all N samples, it is negligible as well for large N, and Cavg ≈ C11 P(Rd) + (C21+C22)(1 − P(Rd)).

There are two computationally expensive operations, i.e. encryption and decryption, and they are not performed on the same node. Each client performs only one type of encryption, either the homomorphic one or the traditional one. Both types of decryption are performed at the server.

Since each client chooses only one of the two encryption mechanisms, each data item is encrypted only once, so the computation cost is C11 or C21. In general, the encoded data is longer than the raw data, so C11 > C21 and the maximum computational cost of a client is C11. Both types of decryption are performed at the server, with a corresponding overhead of C12 + N × P(Rb)C22. Because N × P(Rb) is often small and the server node has large computational power, the decryption operation is not a difficult task. Therefore, no computational bottleneck exists.

Each client encrypts only once, and the aggregation operations at each intermediate node are not compute-intensive, so the proposed scheme also achieves load balance in computation.
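The cost model can be written out directly to see how close the approximation is. The sketch below takes the average as the total divided by N and assumes P(Rb) = 1 − P(Rd), both consistent with the approximation above. The numeric cost values in the example are made up for illustration and are not measurements.

```python
def total_cost(N, p_rd, C11, C12, C13, C21, C22):
    """Total computational cost C of one aggregation round."""
    p_rb = 1.0 - p_rd  # assumption: every sample is in Rd or Rb
    return (C11 * N * p_rd            # homomorphic encryptions
            + C13 * (N * p_rd - 1)    # cipher-domain additions
            + C12                     # one decryption of the aggregate
            + N * p_rb * (C21 + C22)) # boundary-range enc. and dec.

def average_cost(N, p_rd, C11, C12, C13, C21, C22):
    """Average cost per sample, taken as C / N."""
    return total_cost(N, p_rd, C11, C12, C13, C21, C22) / N

def approx_average_cost(p_rd, C11, C21, C22):
    """Approximation that ignores C13 and the shared C12 / N term."""
    return C11 * p_rd + (C21 + C22) * (1.0 - p_rd)

# Illustrative values only: encryption 100x costlier than an addition.
exact = average_cost(1000, 0.95, C11=100, C12=100, C13=1, C21=10, C22=10)
approx = approx_average_cost(0.95, C11=100, C21=10, C22=10)
```

With these illustrative numbers the exact average is about 97.05 and the approximation is 96, a difference of roughly one percent.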

Statistics functions supported

In this section, we compare the proposed schemes with other data aggregation schemes in terms of statistics functions and encoding method. Table 2 gives the comparison. All of them are distributed aggregation schemes, which means that intermediate nodes generate partial aggregation results from their received data.

Table 2. Comparison on Statistics Functions and Encoding Method.

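| Scheme | Encoding | Statistics |
|---|---|---|
| Considine et al. [9] | Synopsis generation function | CNT, SUM, AVG, VAR, STD |
| Roy et al. [11] | Synopsis generation function | CNT, SUM, AVG, VAR, STD |
| Li et al. [17] | Slicing and assembling technique | CNT, SUM, AVG, VAR, STD |
| Yang et al. [18] | Slicing and assembling technique | CNT, SUM, AVG, VAR, STD |
| Castelluccia et al. [10] | No | CNT, SUM, AVG, VAR, STD |
| Lu et al. [19] | No | CNT, SUM, AVG, VAR, STD |
| Ertaul et al. [20] | No | MAX, MIN |
| Samanthula et al. [21] | No | MAX, MIN |
| RCDA [14] | l = ⌈log L⌉, β = l(i − 1) | CNT, SUM, AVG, VAR, STD, MAX, MIN, MODE, MEDIAN |
| EERCDA [13] | | CNT, SUM, AVG, VAR, STD, MAX, MIN, MODE, MEDIAN |
| Proposed schemes | | CNT, SUM, AVG, VAR, STD, MAX, MIN, MODE, MEDIAN |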

Evaluation

Data sets description

Evaluation is based on six datasets gathered from different types of sensors. All of them are obtained from the TAO (Tropical Atmosphere Ocean) project. TAO is a project of NOAA (National Oceanic and Atmospheric Administration), which aims to enable real-time collection of high-quality oceanographic and surface meteorological data for monitoring, forecasting, and understanding the climate swings associated with El Niño and La Niña.

Table 3 gives a general description of each dataset. rh0n156e_hr is a dataset of relative humidity, bp0n156e_hr is sea level pressure, w0n156e_hr is wind direction, sst0n147e_hr and sst0n156e_hr are different datasets of sea surface temperature, and rad0n156e_hr is shortwave radiation. The 2nd column is the sample size of each dataset. The 3rd and 4th columns are skewness and kurtosis respectively. The 5th and 6th columns are the standard deviation and mean estimated from the historical record.

Table 3. General description of datasets.

| Dataset | Size | Skewness | Kurtosis | Std. dev. | Mean |
|---|---|---|---|---|---|
| rh0n156e_hr | 144250 | 0.331 | 3.301 | 5.508 | 78.587 |
| bp0n156e_hr | 122035 | -0.174 | 2.852 | 1.820 | 1008.3 |
| w0n156e_hr | 146702 | -0.409 | 1.992 | 96.268 | 197.416 |
| sst0n147e_hr | 65535 | 0.313 | 3.706 | 0.469 | 29.712 |
| rad0n156e_hr | 39916 | -0.008 | 1.757 | 234.273 | 596.817 |
| sst0n156e_hr | 4672 | 0.829 | 4.683 | 0.358 | 29.338 |

These datasets cover several different scenarios. Their distribution characteristics differ from each other, so they are reasonably representative.

Effectiveness of Range Segmentation Model

In the proposed schemes, a range segmentation model is introduced to reduce the length of the encoded vectors, and thus the total communication cost, as long as P(Rb) is small enough. To achieve this, we should choose the dominant range carefully and make sure that the number of samples outside this range is small enough. Now, let’s verify whether the boundary setting of the dominant range (Rd) is effective.

As described above, the lower bound XL and the upper bound XU of the dominant range Rd are determined by XLE, XUE, the estimated mean and standard deviation, and β. XLE and XUE are constants defined in the TAO project. The mean and standard deviation are estimated from historical data and can also be regarded as constants. Different dominant ranges are generated by different values of β.

The proportion of data outside Rd, i.e. P(Rb), under different parameters is listed in Table 4. As β increases, P(Rb) decreases significantly. For example, when β = 2.2, P(Rb) is no larger than 3.5% in all six datasets, and in two of them it is even reduced to zero.

Table 4. P(Rb) in different dominant range settings.

| Dataset | β = 1.4 | β = 1.8 | β = 2.2 | β = 2.6 | β = 3 |
|---|---|---|---|---|---|
| rh0n156e_hr | 15.61% | 7.39% | 3.22% | 1.40% | 0.50% |
| bp0n156e_hr | 16.40% | 6.78% | 2.54% | 0.66% | 0.19% |
| w0n156e_hr | 17.11% | 4.82% | 0 | 0 | 0 |
| sst0n147e_hr | 14.10% | 6.73% | 3.27% | 1.55% | 0.75% |
| rad0n156e_hr | 18.70% | 0 | 0 | 0 | 0 |
| sst0n156e_hr | 14.75% | 5.78% | 3.47% | 1.85% | 1.02% |
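A value such as those in Table 4 can be estimated from raw samples as sketched below. The sketch assumes a symmetric range of β standard deviations around the mean and ignores any clipping to the bounds XLE and XUE; `boundary_fraction` and the toy sample list are hypothetical and are not TAO data.

```python
def boundary_fraction(samples, mean, std, beta):
    """Estimate P(Rb): the fraction of samples outside the dominant range.

    Assumption: Rd = [mean - beta * std, mean + beta * std].
    """
    lower = mean - beta * std
    upper = mean + beta * std
    outside = sum(1 for x in samples if x < lower or x > upper)
    return outside / len(samples)

# Toy data with one clear outlier.
toy = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
narrow = boundary_fraction(toy, mean=5, std=2, beta=1)  # 0.5
wide = boundary_fraction(toy, mean=5, std=2, beta=2)    # 0.1
```

Widening the range from β = 1 to β = 2 moves most samples into Rd, which mirrors the trend of each row in Table 4.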

However, a larger β does not necessarily mean better communication performance. As β increases, the encoded vector length also increases, and so does the communication cost for Rd. We need to strike a balance between the communication costs for Rd and Rb.

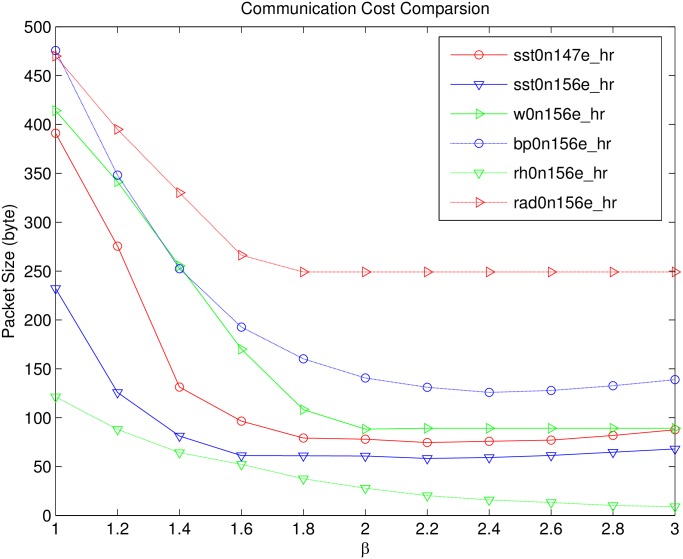

Fig 5 shows the relationship between the maximum packet size and the setting of the dominant range Rd. The network size is 1000. As β increases, the maximum packet size decreases significantly, and when β > 1.8 the rate of decrease becomes moderate. The reason is as follows. As β increases, the communication cost for the boundary range Rb decreases significantly. More specifically, the communication overhead saved in Rb is much larger than that added in Rd, so the total packet size still decreases significantly. When β reaches a certain value, the maximum packet size reaches its minimum, e.g., β = 1.6 for sst0n156e_hr and β = 2 for w0n156e_hr. In some cases, when β is larger than its optimal value, P(Rb) is small enough that the communication cost for Rb can be ignored, while the cost for Rd may keep increasing as long as the bounds of Rd are still inside Re, so the maximum packet size may increase mildly.

Fig 5. Communication cost in different Rd setting.

Communication Cost of SEDAR in Different Rd Setting

Fig 5 shows the communication cost of SEDAR under different Rd settings. According to this figure, when β is between 1.8 and 3, the maximum packet size in each dataset changes relatively little. This means that any β ∈ [1.8, 3] meets the basic requirements and does not significantly degrade communication performance. This feature is very useful, because it makes Rd easy to set.

Although it is difficult to achieve optimal performance by setting an accurate dominant range in advance, choosing an arbitrary β ∈ [1.8, 3] still yields suboptimal performance that is very close to the optimum. For example, in the following evaluation, we directly set β = 2 for all datasets and still obtain good results.

Comparison with RCDA and EERCDA

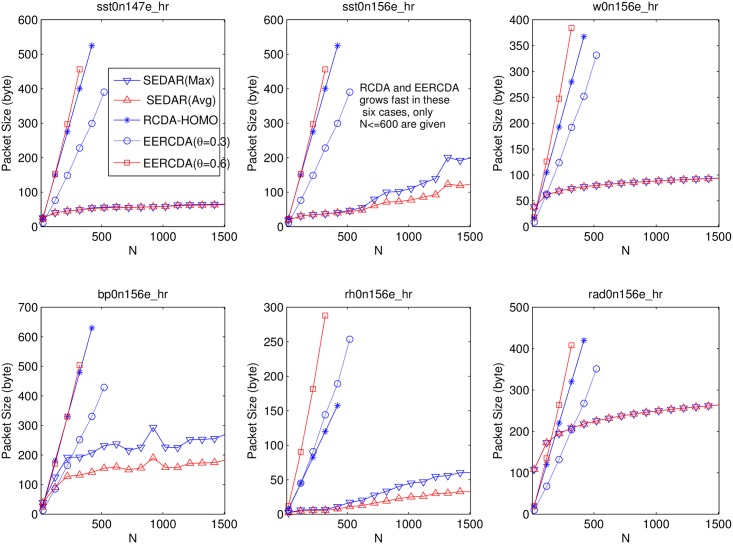

In this section, we compare SEDAR with RCDA and EERCDA. Both support multi-functional secure data aggregation, and all three schemes use homomorphic encryption. The main difference lies in the encoding function. Each client encrypts its collected and encoded data. Aggregation is performed directly on cipher text at each intermediate aggregator, and decryption is performed at the server. The computation costs of encryption and decryption are nearly linear in the encoded data length. Due to limited space, we only compare communication cost. In these evaluations, β = 2. The comparison results are shown in Fig 6. (θ is used to characterize the intensity of data fluctuation in a given application for EERCDA.)

Fig 6. Comparison with RCDA and EERCDA.

According to Fig 6, the proposed scheme is clearly superior to RCDA and EERCDA. Moreover, because its communication cost grows slowly as N increases, it can be applied to large-scale networks.

In w0n156e_hr, bp0n156e_hr and rad0n156e_hr, when N is small, RCDA and EERCDA are better than SEDAR. In these datasets, the dominant range is somewhat large when β = 2, so when the network size N is small, the communication cost is larger than that of RCDA and EERCDA. However, once N increases to a certain value, the advantage of the proposed scheme becomes obvious. In the other three datasets, the dominant range is small and most samples fall in it when β = 2, so the proposed scheme has a clear advantage even in small networks.

In sst0n147e_hr, w0n156e_hr and rad0n156e_hr, the average and maximum communication costs are almost the same, because most elements are in Rd. In contrast, the average and maximum communication costs differ in the other three datasets.

Table 5 compares the end-to-end aggregation time. Due to limited space, we only compare SEDAR with RCDA. The evaluation is based on MICAz, which has a low-power 8-bit ATmega128L microcontroller and an IEEE 802.15.4 compliant CC2420 transceiver. The clock frequency of the ATmega128L is 8 MHz. The claimed data rate of the CC2420 is 250 kbps. Meulenaer et al. [22] measured an effective transmission data rate of 121 kbps, which is far below the claimed rate. In the models used in this paper, we use 121 kbps as the data rate for evaluating communication delay. For the computational cost evaluation, we implement the proposed scheme based on TinyECC [23]. According to its evaluation results on MICAz, the execution time for encryption is 3907.46 ms, which is similar to the one used in RCDA [14]. According to RCDA, MICAz needs 73.71 ms to aggregate two data items in the cipher domain. According to the comparison results, the end-to-end aggregation time of SEDAR is much smaller than that of RCDA. As the network size increases, this advantage becomes more pronounced.

Table 5. Comparison on end-to-end aggregation time (unit: ms; N = 1000; cluster-based network. Compu.: computation delay; Commu.: communication delay; Total: total delay).

| Dataset | SEDAR Compu. | SEDAR Commu. | SEDAR Total | RCDA Compu. | RCDA Commu. | RCDA Total |
|---|---|---|---|---|---|---|
| sst0n147e_hr | $1.52 \times 10^{4}$ | 15.59 | $1.52 \times 10^{4}$ | $4.96 \times 10^{5}$ | 339.25 | $4.96 \times 10^{5}$ |
| sst0n156e_hr | $1.15 \times 10^{4}$ | 20.63 | $1.15 \times 10^{4}$ | $4.35 \times 10^{5}$ | 339.25 | $4.35 \times 10^{5}$ |
| w0n156e_hr | $2.30 \times 10^{4}$ | 23.54 | $2.30 \times 10^{4}$ | $4.37 \times 10^{5}$ | 237.48 | $4.37 \times 10^{5}$ |
| bp0n156e_hr | $2.33 \times 10^{4}$ | 42.06 | $2.34 \times 10^{4}$ | $7.56 \times 10^{5}$ | 407.11 | $7.56 \times 10^{5}$ |
| rh0n156e_hr | $1.29 \times 10^{3}$ | 6.65 | $1.29 \times 10^{3}$ | $7.97 \times 10^{4}$ | 101.77 | $7.98 \times 10^{4}$ |
| rad0n156e_hr | $6.50 \times 10^{4}$ | 66.48 | $6.51 \times 10^{4}$ | $1.05 \times 10^{6}$ | 271.40 | $1.05 \times 10^{6}$ |
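The relation between packet size and communication delay at the effective data rate can be sketched as follows. The model is a simplifying assumption (delay equals size divided by rate, with no protocol overhead), and whether the entries of Table 5 were derived exactly this way is not stated; the function names are hypothetical.

```python
EFFECTIVE_RATE_BPS = 121_000  # effective CC2420 rate reported in [22]

def comm_delay_ms(packet_bits, rate_bps=EFFECTIVE_RATE_BPS):
    """Transmission delay in milliseconds for a packet of given size."""
    return packet_bits / rate_bps * 1000.0

def implied_bits(delay_ms, rate_bps=EFFECTIVE_RATE_BPS):
    """Number of bits that can be sent within a given delay."""
    return delay_ms / 1000.0 * rate_bps

one_second = comm_delay_ms(121_000)   # 1000.0 ms
bits = implied_bits(15.59)            # about 1886 bits
```

Under this simple model, a delay of 15.59 ms corresponds to about 1886 transmitted bits, which gives a feel for how small the communication term is next to an encryption time of several seconds.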

Cost and Accuracy Evaluation for CEDAR

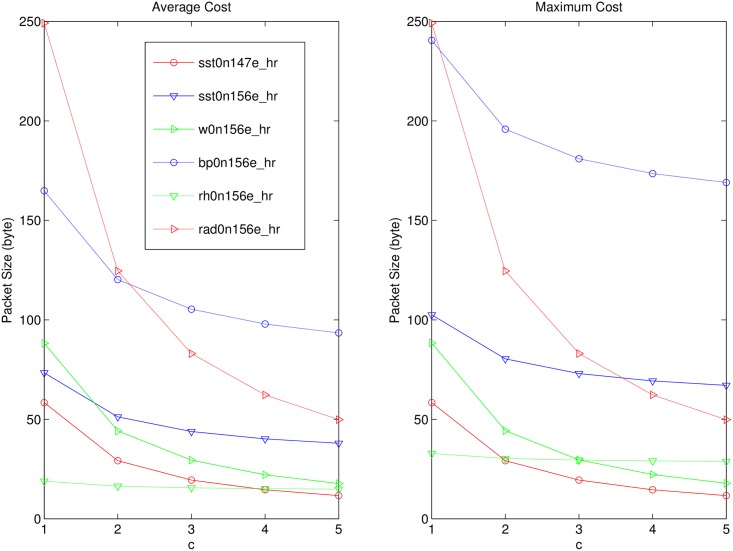

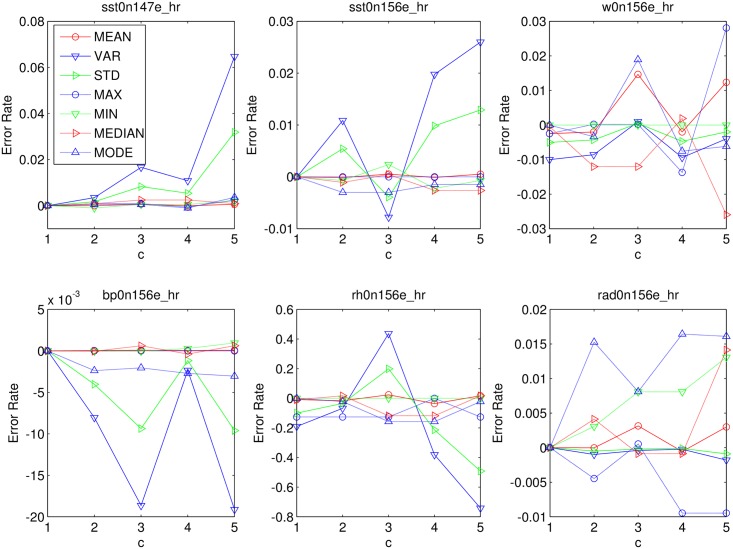

We measure the performance of CEDAR in this section. Figs 7 and 8 show the communication overhead and the accuracy for different compression factors c.

Fig 7. Cost Evaluation of CEDAR.

Fig 8. Accuracy Evaluation of CEDAR.

According to Fig 7, as c increases, the average and maximum communication costs of each dataset are reduced to some extent. The reduction trend is not entirely consistent across datasets. When the compression factor is large, the communication cost curve flattens, which means the compression effect diminishes. According to Fig 8, as c increases, the error rates in each dataset increase. It is therefore necessary to strike a balance between the communication cost and the error rate.

The growth of the error rate is related to the initial communication cost, i.e. the corresponding communication cost in SEDAR. The error rates increase more quickly in cases with a smaller initial communication cost. For example, as shown in Fig 8, the initial communication cost of rad0n156e_hr is relatively large, and when c = 5 the bound of its error rates is still less than ±0.015. In sst0n147e_hr, w0n156e_hr, and rh0n156e_hr, where the initial communication cost is small, the bound is close to or more than ±0.03 when c = 5. In particular, the error bound of rh0n156e_hr is greater than 0.4 when c = 4. In fact, according to Fig 7, the initial communication cost of rh0n156e_hr is the smallest, and its reduction tendency is not obvious.

Hence, we can choose a large compression factor when the initial communication cost is large, and should choose a small one, or even forgo CEDAR, when the initial communication cost is small.

Related work

Distributed Aggregation

Distributed aggregation is a traditional research topic in the database community. Kuhn and Oshman [6] studied the complexity of computing count and minimum in synchronous directed networks. Hobbs et al. [7] presented a distributed protocol to compute maximum and average under the SINR model. Cormode and Yi [8] focused on tracking the value of an aggregation function over a distributed monitoring area. Cheng et al. [24] and Li and Cheng [25] considered the approximate aggregation problem and presented (ϵ, δ)-approximate schemes based on Bernoulli sampling. Xie and Wang [26] and Shen et al. [27] studied network construction and message routing algorithms for data aggregation.

Secure Distributed Aggregation

Several secure distributed aggregation schemes have been proposed. Most of them focus on security itself and support only a very limited number of aggregation functions. Considine et al. [9] and Roy et al. [11] proposed secure distributed aggregation schemes for duplicate-sensitive aggregation based on a synopsis generation function. Li et al. [17] and Yang et al. [18] proposed slice-mix based schemes for additive aggregation functions, which guarantee data privacy through a data “slicing and assembling” technique. Castelluccia et al. [10] and Lu et al. [19] proposed secure distributed aggregation schemes based on homomorphic encryption, which also support only summation-based statistical functions, such as CNT and SUM. Agrawal et al. [28] presented the order-preserving encryption scheme. Ertaul et al. [20] and Samanthula et al. [21] applied it to secure distributed aggregation to obtain comparison-based statistics, such as MAX and MIN. However, summation-based statistics are not supported in these schemes. Chien-Ming et al. [14] and Jose et al. [13] adopted encoding steps before encryption to support arbitrary aggregation functions. However, their encoding steps simply concatenate all sensing data without any information compression, and the communication cost is too heavy to extend to large-scale networks. Enabling operations in the cipher domain is also an important topic in cloud computing [29–31]. In addition to traditional encryption schemes, data privacy can be achieved by steganography [32, 33]. Besides data privacy, data authentication is also necessary. Ren et al. [34] proposed an efficient mutual verifiable provable data possession scheme. Guo et al. [35] designed a lightweight and tolerant authentication scheme to guarantee data security.

Conclusions

In this paper, we have studied the problem of multi-functional secure distributed aggregation and proposed three complementary schemes (i.e., SEDAR, REDAR and CEDAR) to solve it. The first obtains accurate aggregation results. The other two significantly reduce communication cost at the price of lower security and lower accuracy, respectively. Extensive analysis and experiments, based on six different scenes of real data, have shown that all of them perform well.

Acknowledgments

This work is supported by the Foundation of Hunan Educational Committee (14C0484), National Natural Science Foundation of China under Grant (61502054), Yongzhou Science and Technology Plan ([2013]3), Open Research Fund of Hunan Provincial Key Laboratory of Network Investigational Technology (2016WLZC016), Foundation of Hunan University of Science and Engineering (13XKYTA003). The authors declare that they have no conflict of interests.

Data Availability

All relevant data files are available from the database of Tropical Atmosphere Ocean (TAO) project (http://www.pmel.noaa.gov/tao).

References

- 1. Yan Y, Zhang J, Huang B, Sun X, Mu J, Zhang Z, et al. Distributed Outlier Detection using Compressive Sensing. In: SIGMOD’15. ACM; 2015. p. 3–16.

- 2. Shim KA, Park CM. A Secure Data Aggregation Scheme based on Appropriate Cryptographic Primitives in Heterogeneous Wireless Sensor Networks. IEEE Transactions on Parallel and Distributed Systems. 2014;PP(99):1–1.

- 3. Han J, Kamber M, Pei J. Data Mining: Concepts and Techniques. 3rd ed. Morgan Kaufmann; 2011.

- 4. Wuhib F, Stadler R, Spreitzer M. A Gossip Protocol for Dynamic Resource Management in Large Cloud Environments. IEEE Transactions on Network and Service Management. 2012;9(2):213–225. doi: 10.1109/TNSM.2012.031512.110176

- 5. Zhang G, Liu W, Hei X, Cheng W. Unreeling Xunlei Kankan: Understanding Hybrid CDN-P2P Video-on-Demand Streaming. IEEE Transactions on Multimedia. 2015;17(2):229–242. doi: 10.1109/TMM.2014.2383617

- 6. Kuhn F, Oshman R. The complexity of data aggregation in directed networks. In: DISC’11. Springer; 2011. p. 416–431.

- 7. Hobbs N, Wang Y, Hua QS, Yu D, Lau FC. Deterministic distributed data aggregation under the SINR model. In: TAMC’12. Springer; 2012. p. 385–399.

- 8. Cormode G, Yi K. Tracking distributed aggregates over time-based sliding windows. In: SSDBM’12. Springer; 2012. p. 416–430.

- 9. Considine J, Hadjieleftheriou M, Li F, Byers J, Kollios G. Robust approximate aggregation in sensor data management systems. ACM Transactions on Database Systems. 2009;34(1):6. doi: 10.1145/1508857.1508863

- 10. Castelluccia C, Chan ACF, Mykletun E, Tsudik G. Efficient and provably secure aggregation of encrypted data in wireless sensor networks. ACM Transactions on Sensor Networks. 2009;5(3):1–36. doi: 10.1145/1525856.1525858

- 11. Roy S, Conti M, Setia S, Jajodia S. Secure Data Aggregation in Wireless Sensor Networks. IEEE Transactions on Information Forensics and Security. 2012;7(3):1040–1052. doi: 10.1109/TIFS.2012.2189568

- 12. Lin YH, Chang SY, Sun HM. CDAMA: Concealed Data Aggregation Scheme for Multiple Applications in Wireless Sensor Networks. IEEE Transactions on Knowledge and Data Engineering. 2013;25(7):1471–1483. doi: 10.1109/TKDE.2012.94

- 13. Jose J, Manoj Kumar S, Jose J. Energy efficient recoverable concealed data aggregation in wireless sensor networks. In: ICE-CCN’13. IEEE; 2013. p. 322–329.

- 14. Chien-Ming C, Yue-Hsun L, Ya-Ching L, Hung-Min S. RCDA: Recoverable Concealed Data Aggregation for Data Integrity in Wireless Sensor Networks. IEEE Transactions on Parallel and Distributed Systems. 2012;23(4):727–734. doi: 10.1109/TPDS.2011.219

- 15. Fousse L, Lafourcade P, Alnuaimi M. Benaloh’s dense probabilistic encryption revisited. In: Progress in Cryptology–AFRICACRYPT 2011. Springer; 2011. p. 348–362.

- 16. Guellier A. Can Homomorphic Cryptography ensure Privacy? [Research Report]. IRISA; Supélec Rennes, équipe Cidre; 2014.

- 17. Li H, Lin K, Li K. Energy-efficient and high-accuracy secure data aggregation in wireless sensor networks. Computer Communications. 2011;34(4):591–597. doi: 10.1016/j.comcom.2010.02.026

- 18. Yang G, Li S, Xu X, Dai H, Yang Z. Precision-enhanced and encryption-mixed privacy-preserving data aggregation in wireless sensor networks. International Journal of Distributed Sensor Networks. 2013. doi: 10.1155/2013/427275

- 19. Lu M, Shi Z, Lu R, Sun R, Shen XS. PPPA: A practical privacy-preserving aggregation scheme for smart grid communications. In: ICCC’13. IEEE; 2013. p. 692–697.

- 20. Ertaul L, Kedlaya V. Computing Aggregation Function Minimum/Maximum using Homomorphic Encryption Schemes in Wireless Sensor Networks. In: ICWN’07. IEEE; 2007. p. 186–192.

- 21. Samanthula BK, Jiang W, Madria S. A Probabilistic Encryption Based MIN/MAX Computation in Wireless Sensor Networks. In: MDM’13. IEEE; 2013. p. 77–86.

- 22. De Meulenaer G, Gosset F, Standaert FX, Pereira O. On the energy cost of communication and cryptography in wireless sensor networks. In: WiMob; 2008.

- 23. Liu A, Ning P. TinyECC: A configurable library for elliptic curve cryptography in wireless sensor networks. In: IPSN’08. IEEE; 2008. p. 245–256.

- 24. Cheng S, Li J, Ren Q, Yu L. Bernoulli Sampling Based (ϵ, δ)-Approximate Aggregation in Large-Scale Sensor Networks. In: INFOCOM’10. IEEE; 2010. p. 1–9.

- 25. Li J, Cheng S. (ϵ, δ)-Approximate Aggregation Algorithms in Dynamic Sensor Networks. IEEE Transactions on Parallel and Distributed Systems. 2012;23(3):385–396. doi: 10.1109/TPDS.2011.193

- 26. Xie S, Wang Y. Construction of tree network with limited delivery latency in homogeneous wireless sensor networks. Wireless Personal Communications. 2014;78(1):231–246. doi: 10.1007/s11277-014-1748-5

- 27. Shen J, Tan H, Wang J, Wang J, Lee S. A novel routing protocol providing good transmission reliability in underwater sensor networks. Journal of Internet Technology. 2015;16(1):171–178.

- 28. Agrawal R, Kiernan J, Srikant R, Xu Y. Order preserving encryption for numeric data. In: SIGMOD’04. ACM; 2004. p. 563–574.

- 29. Fu Z, Ren K, Shu J, Sun X, Huang F. Enabling Personalized Search over Encrypted Outsourced Data with Efficiency Improvement. IEEE Transactions on Parallel and Distributed Systems. 2015;PP(99):1–1.

- 30. Xia Z, Wang X, Sun X, Wang Q. A Secure and Dynamic Multi-Keyword Ranked Search Scheme over Encrypted Cloud Data. IEEE Transactions on Parallel and Distributed Systems. 2016. doi: 10.1109/TPDS.2015.2401003

- 31. Fu Z, Sun X, Liu Q, Zhou L, Shu J. Achieving efficient cloud search services: multi-keyword ranked search over encrypted cloud data supporting parallel computing. IEICE Transactions on Communications. 2015;98(1):190–200. doi: 10.1587/transcom.E98.B.190

- 32. Xia Z, Wang X, Sun X, Wang B. Steganalysis of least significant bit matching using multi-order differences. Security and Communication Networks. 2014;7(8):1283–1291. doi: 10.1002/sec.864

- 33. Xia Z, Wang X, Sun X, Liu Q, Xiong N. Steganalysis of LSB matching using differences between nonadjacent pixels. Multimedia Tools and Applications. 2016;75(4):1947–1962. doi: 10.1007/s11042-014-2381-8

- 34. Ren Y, Shen J, Wang J, Han J, Lee S. Mutual verifiable provable data auditing in public cloud storage. Journal of Internet Technology. 2015;16(2):317–323.

- 35. Guo P, Wang J, Li B, Lee S. A variable threshold-value authentication architecture for wireless mesh networks. Journal of Internet Technology. 2014;15(6):929–936.
