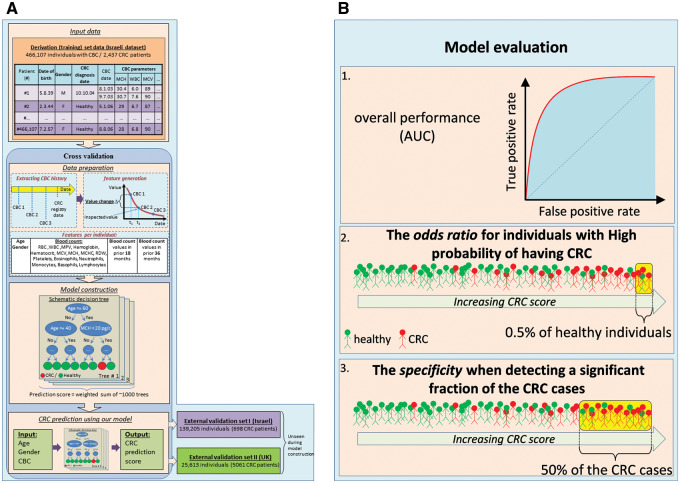

Figure 1:

(A) Model construction and evaluation. Shown is an illustration of the different steps of our model construction procedure. For every individual with CBC data, the input training data (top) consist of the individual's age, gender, and all available sets of blood count panel parameters. In the data preparation phase (middle), the CBC data of every individual are aggregated (generating a CBC history), and features are generated, including the values of the parameters and the changes in these values over the preceding 18 and 36 months. Next, in the model construction phase (lower middle), we automatically generate decision trees aimed at identifying CRC cases. The constructed trees are then combined into a single unified model. The parameters of the data preparation and model construction phases are optimized using cross-validation: we use 90% of the data as a learning set, construct a model, and evaluate its performance on the remaining 10%. This process is repeated 10 times by partitioning the data into different learning and testing sets. The resulting model can then take as input the age, gender, and CBC data of an unseen individual and produce a risk stratification score for having CRC (bottom left). The model is then validated on external datasets, including previously unseen Israeli and UK populations (bottom right).

(B) Model evaluation criteria. Shown are the three measures used to evaluate model performance: (1) the area under the receiver operating characteristic curve (AUC) is used to measure overall performance, as it is a standard measure of performance in classification problems; (2) to assess the utility of our model for identifying individuals with the highest probability of having CRC, we consider a model threshold score that corresponds to a false positive rate of 0.5% (i.e., a model score for which only 0.5% of the individuals without CRC score above it, representing the population with the highest scores), and use the odds ratio to compare the CRC prevalence of individuals whose model score is above that threshold with that of individuals whose score is below it; (3) to examine the utility of our model for identifying a large portion of the CRC cases, we compute the fraction of individuals classified correctly as not having CRC (specificity) at a model score threshold that corresponds to 50% sensitivity (i.e., a model score for which 50% of the individuals with CRC score above it).
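
As a rough illustration of the three criteria in (B), the following Python sketch computes them from a list of model scores and 0/1 CRC labels. It is not the authors' code: the function names, the tie handling, the rounding used to pick thresholds, and the toy data are all assumptions made for illustration, and the toy example uses a 25% false positive rate only because the sample is too small for 0.5%.

```python
import math

def split(scores, labels):
    """Separate scores of CRC cases (label 1) from controls (label 0)."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    return cases, controls

def auc(scores, labels):
    """Criterion 1: probability that a random case outscores a random control.

    Ties count as half a win (rank-based formulation of the ROC AUC).
    """
    cases, controls = split(scores, labels)
    wins = sum(1.0 if c > n else 0.5 if c == n else 0.0
               for c in cases for n in controls)
    return wins / (len(cases) * len(controls))

def threshold_for_rate(group_scores, rate):
    """Score t such that roughly `rate` of group_scores lie strictly above t.

    Assumption: the target count is rounded to the nearest integer; with
    tied scores the achieved rate can fall below the target.
    """
    ranked = sorted(group_scores, reverse=True)
    k = min(int(round(rate * len(ranked))), len(ranked) - 1)
    return ranked[k]

def odds_ratio_at_fpr(scores, labels, fpr=0.005):
    """Criterion 2: odds ratio of CRC above vs. below the score threshold
    at which only `fpr` of the controls score above it."""
    cases, controls = split(scores, labels)
    t = threshold_for_rate(controls, fpr)
    a = sum(s > t for s in cases)      # cases above threshold
    b = sum(s > t for s in controls)   # controls above threshold
    c = len(cases) - a                 # cases at or below threshold
    d = len(controls) - b              # controls at or below threshold
    if b * c == 0:                     # assumption: report infinity here
        return math.inf
    return (a * d) / (b * c)

def specificity_at_sensitivity(scores, labels, sensitivity=0.5):
    """Criterion 3: fraction of controls at or below the score threshold
    above which `sensitivity` of the cases score."""
    cases, controls = split(scores, labels)
    t = threshold_for_rate(cases, sensitivity)
    return sum(s <= t for s in controls) / len(controls)

# Toy data (made up): four controls followed by four cases.
scores = [0.1, 0.2, 0.3, 0.65, 0.25, 0.5, 0.6, 0.7]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

print(auc(scores, labels))
print(odds_ratio_at_fpr(scores, labels, fpr=0.25))
print(specificity_at_sensitivity(scores, labels, sensitivity=0.5))
```

The pairwise AUC loop is quadratic in the number of individuals and is written for readability; on a dataset of realistic size, a sort-based computation or a library routine would be the more practical choice.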