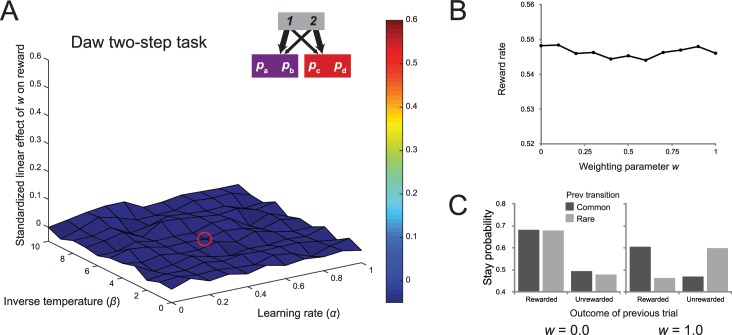

Fig 3. Results of simulating the accuracy-demand trade-off in the Daw two-step task.

(A) Surface plot of the standardized linear effect of the weighting parameter on reward rate in the original version of the two-step task, as a function of the agents' learning rate and inverse temperature. Each point reflects the average of 1000 simulations of a dual-system reinforcement-learning model performing this task, each simulation using a different set of drifting reward probabilities. The red circle shows the median fit. Importantly, across the entire range of parameters, the task does not embody a trade-off between habit and reward. (B) An example of the average relationship between the weighting parameter and reward rate across 1000 simulations, with inverse temperature β = 5.0 and learning rate α = 0.5 (mirroring the median fits reported by Daw and colleagues [8]). (C) The probabilities of repeating the first-stage action as a function of the previous reward and transition type, for a purely model-free agent and a purely model-based agent.
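To make the simulation described in this caption concrete, here is a minimal sketch of a hybrid agent on a Daw-style two-step task. The agent mixes model-based and model-free first-stage values with the weighting parameter `w`. The sketch also computes the stay probabilities plotted in panel C. Several details are assumptions rather than specifications from the caption: a 0.7/0.3 common/rare transition structure; reward probabilities that follow a Gaussian random walk (SD 0.025) reflected at 0.25 and 0.75; SARSA(λ) model-free updates with λ = 1; the same α and β at both stages; and no perseveration term. The function names `simulate_two_step` and `stay_probabilities` are hypothetical.

```python
import numpy as np


def p_second(q, beta):
    """Softmax probability of choosing action 1 out of two options."""
    return 1.0 / (1.0 + np.exp(-beta * (q[1] - q[0])))


def simulate_two_step(w, alpha=0.5, beta=5.0, lam=1.0, n_trials=200,
                      p_common=0.7, drift_sd=0.025, bounds=(0.25, 0.75), rng=None):
    """Hypothetical hybrid-agent simulation (assumed task and model details, see text)."""
    rng = np.random.default_rng() if rng is None else rng
    lo, hi = bounds
    # trans[a, s] = P(second-stage state s | first-stage action a); assumed 0.7/0.3.
    trans = np.array([[p_common, 1 - p_common], [1 - p_common, p_common]])
    q_mf = np.zeros(2)                         # model-free first-stage values
    q2 = np.zeros((2, 2))                      # second-stage values q2[state, action]
    probs = rng.uniform(lo, hi, size=(2, 2))   # reward probability per (state, action)
    choices = np.empty(n_trials, dtype=int)
    common = np.empty(n_trials, dtype=bool)
    rewards = np.empty(n_trials)
    for t in range(n_trials):
        q_mb = trans @ q2.max(axis=1)          # model-based: expected best stage-2 value
        q_net = w * q_mb + (1 - w) * q_mf      # weighted mixture of the two systems
        a1 = int(rng.random() < p_second(q_net, beta))
        is_common = rng.random() < p_common
        s2 = a1 if is_common else 1 - a1
        a2 = int(rng.random() < p_second(q2[s2], beta))
        r = float(rng.random() < probs[s2, a2])
        # SARSA(lambda): first-stage TD update, then second-stage update
        # with an eligibility-trace update back to the first stage.
        delta1 = q2[s2, a2] - q_mf[a1]
        q_mf[a1] += alpha * delta1
        delta2 = r - q2[s2, a2]
        q2[s2, a2] += alpha * delta2
        q_mf[a1] += alpha * lam * delta2
        # Drift the reward probabilities and reflect them off the bounds.
        probs = probs + rng.normal(0.0, drift_sd, size=probs.shape)
        probs = np.where(probs > hi, 2 * hi - probs, probs)
        probs = np.where(probs < lo, 2 * lo - probs, probs)
        choices[t], common[t], rewards[t] = a1, is_common, r
    return choices, common, rewards


def stay_probabilities(choices, common, rewards):
    """P(repeat first-stage choice) keyed by (previous rewarded?, previous common?)."""
    stay = choices[1:] == choices[:-1]
    prev_rew, prev_com = rewards[:-1] == 1, common[:-1]
    return {(rew, com): stay[(prev_rew == rew) & (prev_com == com)].mean()
            for rew in (True, False) for com in (True, False)}


if __name__ == "__main__":
    for w in (0.0, 0.5, 1.0):
        c, k, r = simulate_two_step(w, n_trials=5000, rng=np.random.default_rng(0))
        print(f"w={w}: reward rate={r.mean():.3f}", stay_probabilities(c, k, r))
```

Under these assumptions, the purely model-free agent (`w = 0`) should repeat rewarded choices regardless of transition type. The purely model-based agent (`w = 1`) should show a reward-by-transition interaction. Averaging `rewards.mean()` over many random draws of `probs` at each `w` is one way to approximate the relationship summarized in panels A and B.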