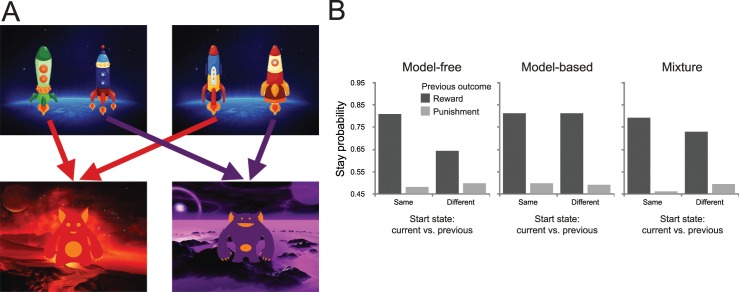

Fig 15. Design of the novel two-step task.

(A) State transition structure of the paradigm. At the first stage, participants are presented with one of two pairs of spaceships and choose one spaceship from the pair. Each choice deterministically leads to a second-stage state associated with a reward payoff that changes slowly according to a Gaussian random walk over the duration of the experiment. Note that the choices in the two different first-stage states are essentially equivalent. (B) Predicted behavior from the generative reinforcement-learning model of this task (using median parameter estimates, and w = 0.5 for the agent with a mixture of strategies). Note that in this task the model does not produce qualitatively different behavior for the different systems of the kind reported in Fig 5. Instead, the differences in behavior are subtler, and differences in strategy arbitration are therefore better captured using model-fitting techniques.
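
The transition structure in panel A can be illustrated with a minimal simulation sketch. It is not the authors' implementation. The number of trials, the drift standard deviation, the payoff bounds, and the use of clipping at the bounds are all hypothetical values chosen for illustration, since the caption does not specify them. The agent here chooses at random, so the sketch shows only the environment and not the reinforcement-learning model of panel B.

```python
import random

# Hypothetical parameters: the caption does not specify any of these values.
N_TRIALS = 200
DRIFT_SD = 1.0
REWARD_BOUNDS = (0.0, 9.0)

# Deterministic transitions: (first-stage state, choice) -> second-stage state.
# Both first-stage states lead to the same two second-stage states, so the
# choices in the two first-stage states are equivalent.
TRANSITIONS = {
    (0, 0): 0, (0, 1): 1,
    (1, 0): 0, (1, 1): 1,
}


def clip(x, lo, hi):
    """Keep x inside [lo, hi] (clipping is an assumption of this sketch)."""
    return max(lo, min(hi, x))


def drift(payoffs, rng):
    """Advance each second-stage payoff by one Gaussian random-walk step."""
    lo, hi = REWARD_BOUNDS
    return [clip(p + rng.gauss(0.0, DRIFT_SD), lo, hi) for p in payoffs]


def run_random_agent(n_trials=N_TRIALS, seed=0):
    """Simulate the task with an agent that picks a spaceship at random."""
    rng = random.Random(seed)
    payoffs = [rng.uniform(*REWARD_BOUNDS) for _ in range(2)]
    history = []
    for _ in range(n_trials):
        first_state = rng.randrange(2)   # which pair of spaceships is shown
        choice = rng.randrange(2)        # which spaceship is chosen
        second_state = TRANSITIONS[(first_state, choice)]
        reward = payoffs[second_state]   # payoff attached to that state
        history.append((first_state, choice, second_state, reward))
        payoffs = drift(payoffs, rng)    # payoffs change slowly over trials
    return history
```

Calling `run_random_agent(50, seed=1)` returns a list of 50 tuples of the form `(first_state, choice, second_state, reward)`. Replacing the random choice with a learned policy would be the natural next step for reproducing panel B.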