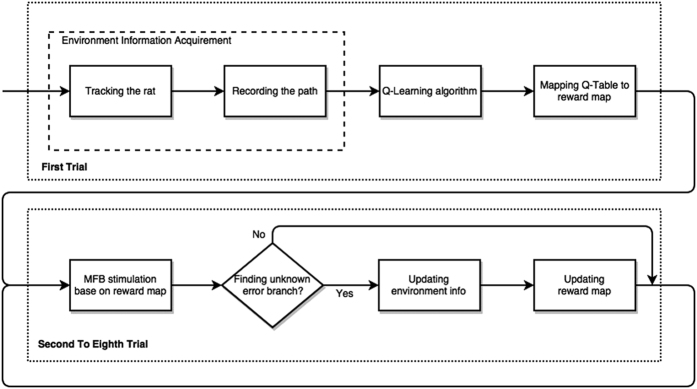

Figure 6. Pipeline of the learning algorithm.

Before the first trial of task T1, neither the ratbots nor the rats had any prior spatial knowledge of the maze. During the first trial, the computer tracked the rat’s position and recorded its movement through the maze. When the rat finished the first trial, the environment information of the maze had been acquired. After this “environment information acquirement” step, the maze problem, modeled as a Markov decision process, was solved with the Q-learning algorithm. Once the Q-table had converged, the computer mapped it to a reward map for the current maze. In trials 2 to 8, the MFB stimuli were configured according to the reward map. If the rat visited a previously unknown error branch of the maze, the environment information of the maze was updated immediately. The reward map was also updated after the ratbot finished the trial.
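The pipeline above can be illustrated with a minimal sketch in Python. It is not the implementation used in this work: the cell labels, the function names (`build_graph`, `q_learning`, `reward_map`), the unit reward at the goal, the learning parameters, and the rule that maps the Q-table to a reward map (the maximum Q-value of each cell, normalized) are all assumptions made for illustration.

```python
import random


def build_graph(trajectory):
    """Environment information: link every pair of consecutively visited cells."""
    graph = {}
    for a, b in zip(trajectory, trajectory[1:]):
        if a != b:
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph


def q_learning(graph, goal, episodes=500, alpha=0.5, gamma=0.9,
               epsilon=0.2, seed=0):
    """Tabular Q-learning; an action is a move to a neighbouring cell."""
    rng = random.Random(seed)
    q = {s: {n: 0.0 for n in sorted(nbrs)} for s, nbrs in graph.items()}
    starts = sorted(s for s in graph if s != goal)
    for _ in range(episodes):
        state = rng.choice(starts)
        for _ in range(100):  # step limit per episode
            actions = q[state]
            if rng.random() < epsilon:
                nxt = rng.choice(list(actions))      # explore
            else:
                nxt = max(actions, key=actions.get)  # exploit
            # Assumed reward: 1 on reaching the goal, 0 otherwise.
            reward = 1.0 if nxt == goal else 0.0
            future = 0.0 if nxt == goal else max(q[nxt].values())
            actions[nxt] += alpha * (reward + gamma * future - actions[nxt])
            state = nxt
            if state == goal:
                break
    return q


def reward_map(q, goal):
    """Assumed mapping: best Q-value of each cell, scaled so the maximum is 1."""
    values = {s: max(a.values()) for s, a in q.items() if s != goal}
    values[goal] = 1.0
    top = max(values.values())
    return {s: v / top for s, v in values.items()}


# Hypothetical first trial: start S, junction A, error branch D, goal G.
trial_1 = ["S", "A", "D", "A", "B", "G"]
graph = build_graph(trial_1)
rmap = reward_map(q_learning(graph, goal="G"), goal="G")
print(sorted(rmap.items()))
```

If a later trial reveals a new branch, the recorded cells can be appended to the trajectory, and `build_graph`, `q_learning`, and `reward_map` rerun to refresh the reward map, mirroring the update step described above.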