Abstract

Background

Risk-adjusted mortality (RAM) models are increasingly used to evaluate hospital performance, but the validity of the RAM method has been questioned. Providers are concerned that these methods might not adequately account for the highest levels of risk and that treating high-risk cases will have a negative impact on RAM rankings.

Methods

Using cases of isolated coronary artery bypass grafting (CABG) performed at 1,002 sites in the United States participating in The Society of Thoracic Surgeons (STS) Adult Cardiac Surgery Database from 2008 to 2010 (N = 494,955), the STS CABG RAM model performance in high-risk patients was assessed. The ratios of observed to expected (O/E) perioperative mortality were compared among groups of hospitals with varying expected risks. Finally, RAM rates during the overall study period for each site were compared with its performance in a simulated “nightmare year” in which the site's highest-risk cases over a 3-year period were concentrated into a 1-year period of exceptional risk.

Results

The average predicted mortality for center risk groups ranged from 1.46% for the lowest risk quintile to 2.87% for the highest. The O/E ratios for center risk quintiles 1 to 5 during the overall period were 1.01 (95% confidence interval, 0.96 to 1.06), 1.00 (0.95 to 1.04), 0.98 (0.94 to 1.03), 0.97 (0.93 to 1.01), and 0.80 (0.77 to 0.84), respectively. The sites’ risk-adjusted mortality rates were not increased when the centers’ highest-risk cases were concentrated into a single “nightmare year.”

Conclusions

Our results show that the current risk-adjusted models accurately estimate CABG mortality and that hospitals accepting more high-risk CABG patients have equal or better outcomes than do those with predominately lower-risk patients.

In 1986, Medicare first released hospital-specific mortality rates for given conditions and procedures, initiating the era of public provider profiling [1]. This hospital rating system was based on claims data and came under criticism for its limited ability to adjust for patient clinical factors and hospital case mix [2, 3]. In response, groups developed sophisticated statistical models for risk adjustment using clinical data [4–7]. The Society of Thoracic Surgeons (STS) developed a risk-adjusted mortality (RAM) model for coronary artery bypass grafting (CABG) and subsequently used this as a principal metric of quality assessment [8, 9]. The STS recently became the first professional society to voluntarily release hospital-specific performance data to the public [10–12].

Although the STS hospital rating system uses detailed clinical data for sophisticated risk adjustment, many still voice concerns that RAM models may not adequately compensate providers for high-risk cases. In the setting of mandatory public reporting, these concerns could lead providers to avoid high-risk cases out of fear for their performance rankings [13, 14]. To date, there has been limited research evaluating any detrimental impact that taking on high-risk CABG cases may have on hospital mortality rankings.

Our study investigated both the accuracy of current STS CABG RAM models in high-risk cases and the impact of these cases on hospital performance ratings. Specifically, we evaluated (1) the calibration of the current STS RAM model among high-risk and extreme-risk CABG patients, (2) the observed association between hospital case mix and risk-adjusted hospital outcomes, and (3) the impact of increasing the overall case risk profile on hospital risk-adjusted rankings.

Material and Methods

STS-ACSD

The Society of Thoracic Surgeons Adult Cardiac Surgery Database (STS-ACSD) is the largest clinical registry of cardiac surgery in North America, capturing data from more than 3,000 surgeons and 1,000 hospitals. These data represent more than 90% of cardiac surgery programs in the United States. Internal validation and external audits assure data quality [15].

Study Population

The study included patients in the STS-ACSD undergoing isolated CABG from 2008 to 2010. Patients were excluded for the following reasons: (1) missing data preventing calculation of predicted risk of mortality or (2) treatment at hospitals performing fewer than 50 CABG procedures over the 3-year study period, resulting in unstable center estimates.

Outcome

The primary outcome was operative mortality, defined as death during index hospitalization or within 30 days of the index procedure. This outcome was adjusted for predicted risk by use of the current STS CABG RAM model, which includes nearly 30 preoperative clinical factors, including age, gender, comorbidity profile, and cardiac factors [16].

Statistical Analysis

We estimated the predicted risk for each patient from the STS 2008 isolated CABG risk model [16]. On the basis of patient-level data, we evaluated model calibration (observed vs expected mortality rates) across the risk spectrum divided into deciles and focused our attention on patients with more extreme preoperative risk. Using previously established cutoffs [17], we prospectively defined very high-risk patients as those who had a predicted mortality risk of greater than 10%.
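The decile-based calibration check described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function names `calibration_by_decile` and `very_high_risk` and the input layout (parallel lists of predicted risk and a 0/1 death indicator) are assumptions made for the example.

```python
from statistics import mean


def calibration_by_decile(predicted, died, n_bins=10):
    """Return (mean predicted risk, observed mortality rate, n) for each risk bin.

    predicted: predicted mortality risk per patient, as a proportion (hypothetical input).
    died: 1 if the patient died, 0 otherwise (hypothetical input).
    """
    # Sort patients by predicted risk, then cut into near-equal-sized bins.
    cases = sorted(zip(predicted, died))
    n = len(cases)
    rows = []
    for b in range(n_bins):
        chunk = cases[b * n // n_bins:(b + 1) * n // n_bins]
        if not chunk:
            continue
        expected = mean(p for p, _ in chunk)
        observed = mean(d for _, d in chunk)
        rows.append((expected, observed, len(chunk)))
    return rows


def very_high_risk(predicted, threshold=0.10):
    """Mark patients whose predicted mortality risk exceeds the 10% cutoff."""
    return [p > threshold for p in predicted]
```

A well-calibrated model yields observed rates close to the mean predicted risk in every bin; bins where the observed rate falls below the predicted rate correspond to an O/E ratio below 1.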

Second, we developed site-level predicted RAM by summing patient-specific predicted risk across all patients treated at each site and grouped sites into five risk quintiles based on average predicted risk. Patient-level data were used to compare patient characteristics among these hospital risk groups. Grouping patients based on hospital risk quintile, we estimated risk-adjusted mortality ratios between risk groups using observed to expected (O/E) mortality ratios. The 95% confidence intervals (CI) surrounding the O/E ratios were calculated on the basis of a binomial distribution. We then assessed whether centers with higher predicted risk had worse RAM than centers treating those with lower predicted risk. As a sensitivity analysis we reclassified hospitals based on the overall percentage of their cases that qualified as very high-risk (>10% predicted risk of mortality).
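The site grouping and pooled O/E calculation described above might look like the sketch below. The tuple layout `(site_id, predicted_risk, died)` and all function names are hypothetical. The text states only that the CI was based on a binomial distribution; the normal approximation used here, with the expected rate treated as fixed, is an assumption of this example.

```python
from collections import defaultdict
from math import sqrt


def site_mean_risk(cases):
    """cases: iterable of (site_id, predicted_risk, died). Returns {site: mean predicted risk}."""
    total, count = defaultdict(float), defaultdict(int)
    for site, risk, _ in cases:
        total[site] += risk
        count[site] += 1
    return {s: total[s] / count[s] for s in total}


def assign_quintiles(site_risk):
    """Rank sites by mean predicted risk and split them into five near-equal groups (1 = lowest)."""
    ranked = sorted(site_risk, key=site_risk.get)
    n = len(ranked)
    return {s: i * 5 // n + 1 for i, s in enumerate(ranked)}


def oe_ratio_with_ci(cases, z=1.96):
    """Pooled O/E for a list of cases, with an approximate 95% CI.

    Assumption: normal approximation to the binomial for the observed rate.
    """
    n = len(cases)
    observed = sum(d for _, _, d in cases) / n
    expected = sum(r for _, r, _ in cases) / n
    se = sqrt(observed * (1 - observed) / n)
    return (observed / expected,
            (observed - z * se) / expected,
            (observed + z * se) / expected)
```

In use, one would compute `assign_quintiles(site_mean_risk(cases))`, pool the cases of all sites in each quintile, and call `oe_ratio_with_ci` on each pooled list.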

Third, we evaluated the impact of high-risk cases on center RAM by using the center as its own control, creating a simulation in which each center's highest-risk cases seen over 3 years were concentrated into a single “nightmare year.” Specifically, we determined whether a site's O/E mortality ratio would have been adversely affected if the site had performed all of its highest-risk CABG procedures from the 3-year period in a single year. In this nightmare scenario, all cases treated by a hospital over a 3-year period were rank ordered based on the patient's predicted risk. Keeping average annual case volume constant, we selected a hospital's highest-risk cases over the 3-year period and concentrated them into a single year's caseload. Then, for each site, we divided its O/E ratio in the nightmare year by its O/E ratio for the overall study period. Ratios greater than 1 indicated a site that appeared to perform worse when it took on a higher-risk case mix. If concentrating high-risk cases into a single year had a neutral or positive impact on risk-adjusted outcomes, then the ratio would be 1 or less. We used linear regression in a generalized estimating equation model to assess the performance of centers during the overall and “nightmare year” analyses. Using the hospital as its own control, we attempted to address concerns that centers willing to take on high-risk patients were providing higher-quality care and compensating for model underestimation of risk in higher-risk patients.
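For a single site, the “nightmare year” construction can be sketched as below. The function name, the `(predicted_risk, died)` tuple layout, and the rounding of annual volume are assumptions for illustration; the generalized estimating equation step is not shown.

```python
def nightmare_year_ratio(cases, years=3):
    """Ratio of a site's nightmare-year O/E to its overall O/E.

    cases: list of (predicted_risk, died) for one site over the whole
    study period (hypothetical layout). Returns None if the overall O/E is 0.
    """
    def oe(subset):
        observed = sum(d for _, d in subset)
        expected = sum(p for p, _ in subset)
        return observed / expected

    # Keep the average annual case volume constant.
    annual_volume = max(1, round(len(cases) / years))
    # Rank all cases by predicted risk and keep the riskiest year's worth.
    worst_first = sorted(cases, key=lambda c: c[0], reverse=True)
    nightmare = worst_first[:annual_volume]

    overall = oe(cases)
    if overall == 0:
        return None  # no deaths overall: the ratio of ratios is undefined
    return oe(nightmare) / overall
```

A return value above 1 corresponds to a site that looks worse under the concentrated high-risk case mix; a value of 1 or less corresponds to a neutral or favorable effect.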

Finally, providers and hospital administrators have voiced concerns that treating a higher volume of high-risk cases may increase the institutional risk of being flagged as a low-performing “outlier” through increased variability in center performance [18, 19], equivalent to Las Vegas high-stakes bidding, where such bids lead to “winning or losing big.” We performed an analysis of how many centers would be flagged as outliers during their “nightmare year” relative to a more typical year. We calculated the 95% CI for observed mortality for each center during its “nightmare year” using a binomial distribution and then tested whether the 95% CI included the predicted mortality for that center. A center whose 95% CI lower limit for observed mortality was greater than the predicted mortality of that center was flagged as an outlier. The rate of outliers in the “nightmare year” was then compared with the rate of outliers in the overall dataset.
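The outlier rule described above can be illustrated with the following sketch. The function name and labels are hypothetical, and the normal approximation to the binomial CI is an assumption, since the text does not state which binomial interval was used. The high-performing category mirrors the definition given later in the Results.

```python
from math import sqrt


def flag_center(deaths, n, expected_rate, z=1.96):
    """Classify one center by comparing the CI of its observed mortality
    with its predicted (expected) mortality rate.

    Assumption: normal-approximation binomial CI for the observed rate.
    """
    observed = deaths / n
    se = sqrt(observed * (1 - observed) / n)
    lower, upper = observed - z * se, observed + z * se
    if lower > expected_rate:
        return "low-performing outlier"   # observed mortality clearly above predicted
    if upper < expected_rate:
        return "high-performing outlier"  # observed mortality clearly below predicted
    return "as expected"
```

Applying the same function to each center's overall caseload and to its “nightmare year” caseload gives the two outlier rates that are compared.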

Results

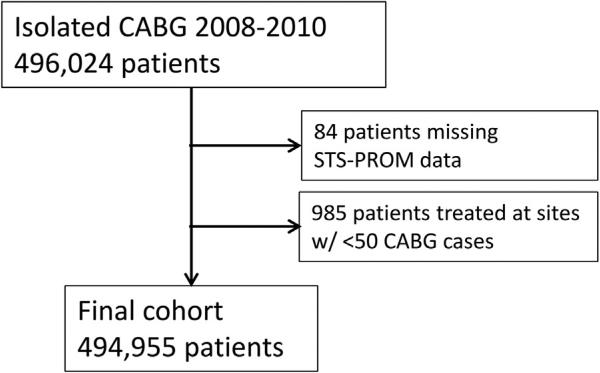

From 2008 to 2010, the STS-ACSD captured clinical data on 496,024 isolated CABG procedures. After excluding patients missing predicted risk of mortality (n = 84) or treated at centers with fewer than 50 CABG procedures during the study period (n = 985, centers excluded = 36), 494,955 patients at 1,002 hospitals remained for analysis (Fig 1). The distribution of predicted CABG mortality risk for this population had a median of 1.04% (25th to 75th percentile: 0.57% to 2.09%) with 5th and 95th percentiles of 0.32% and 6.52%, respectively.

Fig 1.

Patient selection from the Society of Thoracic Surgeons Adult Cardiac Surgery Database (STS-ACSD). CABG = coronary artery bypass grafting; STS-PROM = Society of Thoracic Surgeons predicted risk of mortality.

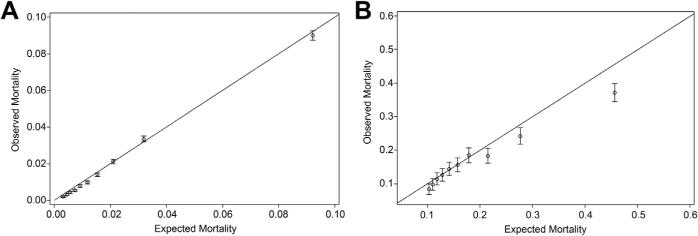

Model Calibration

The model calibration plots of O/E mortality ratios demonstrated good model performance in the overall cohort (Fig 2A) and among the 2.5% of cases with an STS predicted risk of more than 10% (Fig 2B). Although the model was well calibrated overall and among high-risk cases, the riskiest 1% of patients (predicted mortality >20%) had an O/E ratio less than 1, indicating that the current risk model overcompensated centers for these extreme-risk patients (Fig 2B).

Fig 2.

(A) Overall model calibration plot of observed versus predicted mortality using Society of Thoracic Surgeons Adult Cardiac Surgery Database predicted risk of mortality model in coronary artery bypass grafting (CABG). (B) High-risk patient (predicted mortality >10%) model calibration plot of observed versus predicted mortality using Society of Thoracic Surgeons Adult Cardiac Surgery Database predicted risk of mortality model in CABG.

Patient and Site Characteristics

Table 1 provides the site and patient characteristics after stratifying based on center-level average predicted risk of mortality (quintiles). Hospitals in the lowest-risk quintile had an average predicted mortality rate of 1.46%, whereas hospitals in the highest-risk quintile had an average predicted mortality rate of 2.87%. As expected, patients in higher center risk groups showed a higher prevalence of known risk factors, including older age, comorbidities, left main disease, and intraaortic balloon pump use (Table 1).

Table 1.

Patient and Site Characteristics Grouped by Center-Level Average Predicted Risk of Mortality

| Variable (by center risk group) | Q1 (Lowest) | Q2 | Q3 | Q4 | Q5 (Highest) |
|---|---|---|---|---|---|
| N (centers) | 200 | 201 | 200 | 201 | 200 |
| N (patients) | 95,150 | 113,396 | 105,274 | 100,652 | 80,483 |
| Annual CABG volume per site (IQR) | 352 (195, 565) | 444 (291, 680) | 438 (236, 715) | 415 (255, 640) | 307 (193, 488) |
| Age, years (IQR) | 64 (57, 71) | 65 (57, 72) | 65 (57, 73) | 66 (58, 73) | 66 (58, 74) |
| Age ≥75 years, % | 16.9 | 19.3 | 20.3 | 21.7 | 23.4 |
| Female, % | 26.1 | 26.5 | 26.9 | 26.9 | 27.7 |
| Race, % | | | | | |
| White | 88.8 | 88.1 | 87.5 | 85.3 | 81.3 |
| Black | 6.9 | 6.9 | 6.6 | 7.5 | 7.5 |
| Asian | 1.2 | 1.8 | 1.8 | 2.5 | 4.7 |
| Hispanic ethnicity, % | 3.1 | 3.9 | 5.0 | 7.3 | 8.5 |
| Creatinine >1.5 mg/dL, % | 7.3 | 8.3 | 9.1 | 9.3 | 10.9 |
| Comorbidities, % | | | | | |
| Diabetes | 38.7 | 39.4 | 40.5 | 41.0 | 43.6 |
| Hypertension | 83.9 | 84.4 | 85.4 | 85.6 | 87.1 |
| Chronic lung disease: moderate or severe | 7.1 | 8.0 | 9.9 | 11.0 | 15.7 |
| Peripheral vascular disease | 12.3 | 13.6 | 15.4 | 16.0 | 18.1 |
| Cerebrovascular disease | 12.8 | 13.9 | 14.7 | 14.8 | 15.8 |
| Cerebrovascular accident | 5.7 | 6.4 | 7.1 | 7.0 | 7.6 |
| Renal failure; dialysis | 1.8 | 2.2 | 2.4 | 2.7 | 3.6 |
| Myocardial infarction | 43.0 | 44.7 | 47.8 | 49.4 | 51.7 |
| Intraaortic balloon pump | 7.0 | 9.0 | 10.3 | 10.9 | 13.4 |
| NYHA class IV | 19.5 | 22.5 | 25.8 | 26.3 | 31.7 |
| Congestive heart failure | 11.4 | 13.0 | 17.6 | 18.3 | 22.6 |
| Three-vessel disease, % | 73.5 | 74.3 | 74.5 | 75.8 | 77.0 |
| Left main disease >50%, % | 30.0 | 30.4 | 31.7 | 33.0 | 33.5 |
| Procedure status, % | | | | | |
| Urgent | 46.3 | 53.1 | 53.9 | 56.0 | 60.1 |
| Emergent | 2.7 | 3.9 | 4.4 | 5.5 | 6.9 |
| Predicted risk of mortality, % | 1.46 | 1.75 | 1.97 | 2.23 | 2.87 |

CABG = coronary artery bypass grafting; IQR = interquartile range; NYHA = New York Heart Association.

Site Performance

In the overall patient cohort, there was no evidence that hospitals treating patients with the highest predicted risk had worse risk-adjusted mortality profiles (Table 2). The center O/E ratios for mortality decreased slightly as center risk group increased. The center O/E ratios were near 1 for all center risk groups, with the exception of the centers treating the highest-risk patients (quintile 5), where the O/E ratio was 0.80 (95% CI, 0.77 to 0.84), indicating that hospitals taking on the highest-risk cases were performing better than expected (Table 2).

Table 2.

O/E Mortality Ratios Among Sites Grouped by Center Predicted Risk Of Mortality

| Center-Level Predicted Risk | N (Centers) | N (Patients) | Observed Mortality (%) | Expected Mortality (%) | O/E (95% CI) |
|---|---|---|---|---|---|
| Q1 (lowest) | 200 | 95,150 | 1.48 | 1.46 | 1.01 (0.96–1.06) |
| Q2 | 201 | 113,396 | 1.75 | 1.75 | 1.00 (0.95–1.04) |
| Q3 | 200 | 105,274 | 1.94 | 1.97 | 0.98 (0.94–1.03) |
| Q4 | 201 | 100,652 | 2.17 | 2.23 | 0.97 (0.93–1.01) |
| Q5 (highest) | 200 | 80,483 | 2.31 | 2.87 | 0.80 (0.77–0.84) |

CI = confidence interval; O/E = observed/expected ratio.
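As a worked check of the quintile 5 row in Table 2, the O/E ratio and its interval can be approximately reproduced from the rounded table values. The calculation below assumes a normal approximation to the binomial for the observed rate and treats the expected rate as fixed; the exact interval method is not stated in the text, so this is an illustration rather than the authors' computation.

$$
\frac{O}{E} = \frac{2.31}{2.87} \approx 0.80, \qquad
\mathrm{SE}(O) = \sqrt{\frac{0.0231\,(1 - 0.0231)}{80{,}483}} \approx 0.00053
$$

$$
\frac{O \pm 1.96\,\mathrm{SE}(O)}{E} = \frac{0.0231 \pm 0.00104}{0.0287} \approx (0.77,\ 0.84)
$$

Because the table inputs are rounded, the same calculation for other rows may differ from the published limits in the second decimal place.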

As a sensitivity analysis, this process was repeated after centers were regrouped based on the percentage of total caseload deemed to contain very high-risk patients (predicted mortality >10%). The mean site-predicted risk of mortality varied from 1.51% for centers in the lowest-risk quintile to 2.85% for centers in the highest-risk quintile (Table 3). Once again, hospitals treating a larger percentage of very high-risk patients did better than their peers treating a lesser percentage of high-risk patients. Quintiles 4 and 5 had O/E ratios of 0.94 (95% CI, 0.90 to 0.98) and 0.84 (95% CI, 0.80 to 0.88), respectively, indicating better than expected performance (Table 3).

Table 3.

O/E Mortality Ratios Among Sites Grouped by Center Rate of High-Risk Patients

| Center-Level Predicted Risk | N (Centers) | N (Patients) | Observed Mortality (%) | Expected Mortality (%) | O/E (95% CI) |
|---|---|---|---|---|---|
| Q1 (lowest) | 200 | 91,964 | 1.55 | 1.51 | 1.03 (0.97–1.08) |
| Q2 | 201 | 114,605 | 1.71 | 1.75 | 0.98 (0.93–1.02) |
| Q3 | 200 | 106,038 | 1.93 | 1.97 | 0.98 (0.94–1.03) |
| Q4 | 201 | 106,768 | 2.08 | 2.21 | 0.94 (0.90–0.98) |
| Q5 (highest) | 200 | 75,580 | 2.40 | 2.85 | 0.84 (0.80–0.88) |

High-risk refers to those patients with predicted risk of mortality greater than 10%.

CI = confidence interval; O/E = observed/expected ratio.

“Nightmare Year” Simulation

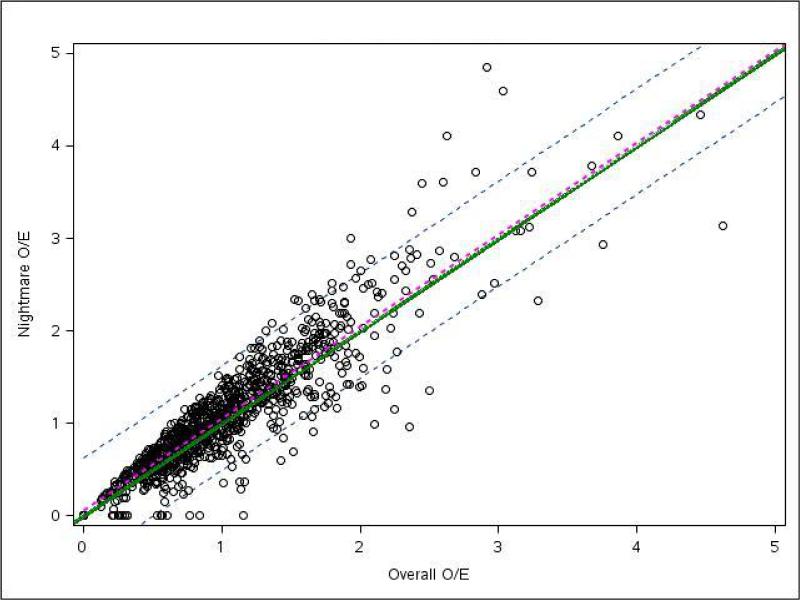

We then reevaluated the hospital O/E ratios after concentrating their highest-risk cases over a 3-year period into a single “nightmare year.” This analysis demonstrated that when most hospitals took on higher-risk cases their O/E ratios remained similar; however, hospitals in the lower-risk quintiles (quintiles 1 and 2) had O/E ratios of 1.11 (95% CI, 1.04 to 1.17) and 1.07 (95% CI, 1.01 to 1.12), respectively, suggesting worse than expected performance when they took on higher-risk cases (Table 4). Quintiles 3 and 4 performed as expected, and quintile 5 performed better than expected with an O/E ratio of 0.82 (95% CI, 0.78 to 0.86).

Table 4.

O/E Mortality Ratios During “Nightmare Year” Grouped by Center Predicted Risk of Mortality

| Center-Level Predicted Risk | N (Centers) | N (Patients) | Observed Mortality (%) | Expected Mortality (%) | O/E (95% CI) |
|---|---|---|---|---|---|
| Q1 (lowest) | 200 | 31,294 | 3.43 | 3.10 | 1.11 (1.04–1.17) |
| Q2 | 201 | 37,316 | 4.08 | 3.83 | 1.07 (1.01–1.12) |
| Q3 | 200 | 34,633 | 4.57 | 4.39 | 1.04 (0.99–1.09) |
| Q4 | 201 | 33,114 | 5.12 | 5.05 | 1.01 (0.97–1.06) |
| Q5 (highest) | 200 | 26,454 | 5.52 | 6.73 | 0.82 (0.78–0.86) |

CI = confidence interval; O/E = observed/expected ratio.

To further explore the impact of increasing a hospital's risk profile, we plotted the O/E mortality ratio for the “nightmare year” scenario versus the O/E ratio of the overall cohort for each hospital (Fig 3). The ratios falling on the diagonal solid green line would indicate that a hospital's performance during the “nightmare year” was the same as its overall performance. Ratios falling below the solid green line would represent hospitals whose O/E mortality ratios during the “nightmare year” were less than the O/E ratio in the overall cohort, which, in turn, would imply that these hospitals performed better when taking on more high-risk cases as compared with their overall patient cohort. The hospitals performing worse during the “nightmare year” would fall above the solid green line. The overall linear regression line and 95% CI demonstrate that hospitals had almost identical performance during the “nightmare year” in comparison with their overall cohort.

Fig 3.

Site observed to expected (O/E) mortality ratios during the “nightmare year” and the overall study period. Each circle represents an individual site with its position representing that site's overall O/E for mortality (x axis) compared with its “nightmare year” O/E for mortality (y axis). The green line represents perfect correlation between overall and “nightmare year” O/E. Sites falling above the green line performed worse during their “nightmare year” compared with their overall cohort. Sites falling below the green line performed better during the “nightmare year.” The regression line (dotted magenta) and 95% confidence interval (dotted grey) for this plot indicate that centers, in general, had nearly identical O/E ratios during the “nightmare year” compared with the overall cohort.
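To illustrate how a regression line through the site-level points in Fig 3 relates to the identity line, the sketch below fits an ordinary least-squares line to paired O/E ratios. This is a simplification: the study used linear regression within a generalized estimating equation model, which is not reproduced here, and the function name and inputs are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for paired values.

    x: each site's overall O/E ratio (hypothetical input).
    y: the same site's nightmare-year O/E ratio (hypothetical input).
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx
```

A fitted slope near 1 with an intercept near 0 would coincide with the identity line, meaning that sites' O/E ratios were, on average, unchanged in the simulated year.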

Given that one of the most concerning aspects of risk-adjusted mortality reports is the potential for a hospital to be flagged as a poor performer, we also categorized hospitals into low-performing outliers (defined as hospitals whose O/E mortality ratio had a 95% CI lower limit greater than 1) and high-performing outliers (sites whose O/E mortality ratio had a 95% CI upper limit less than 1). Out of 1,002 STS hospitals evaluated during the overall period, 100 centers (10%) were flagged as low-performing outliers and 86 (8.6%) were classified as high-performing outliers. During the “nightmare year,” 98 hospitals (9.8%) were flagged as low performers and 66 (6.6%) as high-performing outliers. Twenty-five sites (2.5%) were reclassified from performing as expected to low-performing outlier during the “nightmare year,” and 28 centers (2.8%) were reclassified from low-performing outlier to performing as expected. Thus, there was no trend toward more centers being identified as low-performing or high-performing outliers had they taken on more high-risk caseloads.

Comment

Patients with higher baseline risk tend to derive greater benefit from CABG [20]; however, providers may be less willing to take on high-risk CABG patients after the implementation of public reporting because of the impact on risk-adjusted mortality rankings [21, 22]. In contrast to these concerns, our study found that the current STS risk model was well calibrated to predict both overall CABG mortality and the outcomes in those at the highest surgical risk. We also found that hospitals operating on the highest-risk CABG patients had risk-adjusted outcomes that were equal to or better than those of their peers treating lower-risk patients. Finally, using a scenario in which a hospital's highest-risk patients seen over a 3-year period were concentrated into a single year, we again found that its risk-adjusted outcomes were no worse in the simulated “nightmare year” in comparison with its overall O/E mortality ratios. Combined, these data provide strong reassurance that existing STS risk-adjustment models adequately account for high-risk cases and that concerns about adverse effects on hospital rankings are unfounded.

Risk-adjusted mortality has been a widely used method for providing performance feedback to hospitals and communities, demonstrating the ability to improve quality and outcomes in CABG [23]. However, unless risk adjustment works appropriately, the advantages of quality improvement may come at the cost of high-risk case avoidance because of concerns that these high-risk cases will be detrimental to performance rankings. Concerns about the fairness of risk-adjusted outcome comparisons have become even more important as these results become publicly released. Many states currently benchmark CABG mortality, including New York and Pennsylvania [23, 24], and the STS has recently initiated voluntary public reporting [12]. Although the public release of outcomes data has been lauded for increasing transparency, providers have voiced concerns that public reporting of RAM punishes hospitals that take on high-risk CABG cases [18, 19]. In addition, survey data indicate that public reporting decreases providers’ willingness to perform CABG on high-risk patients [21, 22], although the evidence for actual high-risk case avoidance remains controversial [13, 14, 25].

Using New York state data from 1990 to 1992, Hannan and colleagues [26] first reported that hospitals performing higher-risk CABG had overall equal or better risk-adjusted performance relative to peers. Although that study is important, it reports results from more than two decades ago. Risk profiles of CABG patients have become more severe, with CABG being routinely performed in older, sicker patients. The study by Hannan and colleagues also represents a limited number of centers from New York, a unique state that first instituted public reporting of hospital CABG mortality data. These centers may be more risk-averse than hospitals that were not profiled. Finally, the study by Hannan and colleagues did not address the potential confounder that centers treating more high-risk patients may actually be of a higher quality than centers unwilling to take on these patients. If so, their higher-quality care overall may compensate for any shortcomings in the risk adjustment process.

The present study analyzed current practice from more than 1,000 nationally representative hospitals. It examined a contemporary risk adjustment method and model. Our study used each hospital as its own control in the “nightmare year” scenario to address criticisms that hospitals taking on high-risk cases are higher-quality centers with better overall performance that corrects for risk model underestimation of surgical risk in high-risk cases. In this simulation, hospitals taking on more high-risk cases during the “nightmare year” performed no worse than during the period of a more typical case mix. We also examined different definitions of high-risk case mix, including overall average hospital-predicted mortality and the proportion of very high-risk cases (>10% predicted mortality). Finally, we found no increase in hospitals flagged as low-performing outliers during their “nightmare year” scenario relative to their performance in a typical year. Thus, we show no evidence to support the theory that avoiding high-risk cases is an effective strategy for improving hospital mortality rankings.

The current study has limitations. The current STS-RAM model was developed in data from 2000 through 2006, and subsequent expansion in the list of potential risk predictors, such as severe liver disease, should allow models currently under development an even richer dataset from which to predict outcomes. Unmeasured confounders, such as frailty, unfavorable anatomy, or rare comorbidities are difficult to capture and will remain a challenge to appropriate risk adjustment. The current study examined only isolated CABG, and its findings cannot be extended to other cardiac surgical procedures. Although the STS performs data quality checks and site audits, sites that fail to accurately reflect the risk profiles of their patients, either through upcoding of patient risk or failure to record comorbidities, will not have O/E ratios that accurately reflect their performance. This limitation was mitigated by using the hospital as its own control in the “nightmare year” analysis. Finally, we did not examine the appropriateness of surgical intervention in high-risk cases and could not evaluate whether the long-term benefits of surgical procedures justified the high operative risks.

Overall, these results should assuage fears that accepting high-risk patients for CABG will have an adverse impact on a hospital's risk-adjusted mortality ranking. This study provides evidence that the STS-RAM model in CABG performs well, even among high-risk CABG patients. Hospital RAM rankings were no worse when increased caseloads of high-risk patients were simulated. With no evidence that RAM models are biased against high-risk CABG patients, hospitals should not avoid high-risk patients to improve their hospital mortality performance rankings.

Acknowledgments

This research was partially supported by grant 5U01HL-107023 from the NHLBI. All authors are paid or volunteer staff of the STS or the Duke Clinical Research Institute (analytic center for the STS-ACSD).

References

1. Brinkley J. U.S. releasing lists of hospitals with abnormal mortality rates. The New York Times. 1986 Mar 12.
2. Blumberg MS. Comments on HCFA hospital death rate statistical outliers. Health Care Financing Administration. Health Serv Res. 1987;21:715–39.
3. Rosen HM, Green BA. The HCFA excess mortality lists: a methodological critique. Hosp Health Serv Adm. 1987;32:119–27.
4. Edwards FH, Grover FL, Shroyer AL, et al. The Society of Thoracic Surgeons National Cardiac Surgery Database: current risk assessment. Ann Thorac Surg. 1997;63:903–8. doi: 10.1016/s0003-4975(97)00017-9.
5. Grover FL, Shroyer AL, Hammermeister KE. Calculating risk and outcome: the Veterans Affairs database. Ann Thorac Surg. 1996;62:S6–11; discussion S31–12. doi: 10.1016/0003-4975(96)00821-1.
6. Hannan EL, Kilburn H Jr, O'Donnell JF, et al. Adult open heart surgery in New York State: an analysis of risk factors and hospital mortality rates. JAMA. 1990;264:2768–74.
7. Jones RH, Hannan EL, Hammermeister KE, et al. Identification of preoperative variables needed for risk adjustment of short-term mortality after coronary artery bypass graft surgery. The Working Group Panel on the Cooperative CABG Database Project. J Am Coll Cardiol. 1996;28:1478–87. doi: 10.1016/s0735-1097(96)00359-2.
8. Shahian DM, Edwards FH, Ferraris VA, et al. Quality measurement in adult cardiac surgery: part 1. Conceptual framework and measure selection. Ann Thorac Surg. 2007;83:S3–12. doi: 10.1016/j.athoracsur.2007.01.053.
9. O'Brien SM, Shahian DM, DeLong ER, et al. Quality measurement in adult cardiac surgery: part 2. Statistical considerations in composite measure scoring and provider rating. Ann Thorac Surg. 2007;83:S13–26. doi: 10.1016/j.athoracsur.2007.01.055.
10. Shahian DM, Edwards FH, Jacobs JP, et al. Public reporting of cardiac surgery performance: part 1. History, rationale, consequences. Ann Thorac Surg. 2011;92:S2–11. doi: 10.1016/j.athoracsur.2011.06.100.
11. Shahian DM, Edwards FH, Jacobs JP, et al. Public reporting of cardiac surgery performance: part 2. Implementation. Ann Thorac Surg. 2011;92:S12–23. doi: 10.1016/j.athoracsur.2011.06.101.
12. Ferris TG, Torchiana DF. Public release of clinical outcomes data-online CABG report cards. N Engl J Med. 2010;363:1593–5. doi: 10.1056/NEJMp1009423.
13. Omoigui NA, Miller DP, Brown KJ, et al. Outmigration for coronary bypass surgery in an era of public dissemination of clinical outcomes. Circulation. 1996;93:27–33. doi: 10.1161/01.cir.93.1.27.
14. Apolito RA, Greenberg MA, Menegus MA, et al. Impact of the New York State Cardiac Surgery and Percutaneous Coronary Intervention Reporting System on the management of patients with acute myocardial infarction complicated by cardiogenic shock. Am Heart J. 2008;155:267–73. doi: 10.1016/j.ahj.2007.10.013.
15. Shahian DM, Jacobs JP, Edwards FH, et al. The Society of Thoracic Surgeons national database. Heart. 2013;99:1494–501. doi: 10.1136/heartjnl-2012-303456.
16. Shahian DM, O'Brien SM, Filardo G, et al. The Society of Thoracic Surgeons 2008 cardiac surgery risk models: part 1. Coronary artery bypass grafting surgery. Ann Thorac Surg. 2009;88:S2–22. doi: 10.1016/j.athoracsur.2009.05.053.
17. Leon MB, Smith CR, Mack M, et al. Transcatheter aortic-valve implantation for aortic stenosis in patients who cannot undergo surgery. N Engl J Med. 2010;363:1597–607. doi: 10.1056/NEJMoa1008232.
18. Green J, Wintfeld N. Report cards on cardiac surgeons. Assessing New York State's approach. N Engl J Med. 1995;332:1229–32. doi: 10.1056/NEJM199505043321812.
19. Werner RM, Asch DA. The unintended consequences of publicly reporting quality information. JAMA. 2005;293:1239–44. doi: 10.1001/jama.293.10.1239.
20. Jones RH. In search of the optimal surgical mortality. Circulation. 1989;79:I132–6.
21. Burack JH, Impellizzeri P, Homel P, et al. Public reporting of surgical mortality: a survey of New York State cardiothoracic surgeons. Ann Thorac Surg. 1999;68:1195–200; discussion 1201–2. doi: 10.1016/s0003-4975(99)00907-8.
22. Schneider EC, Epstein AM. Influence of cardiac-surgery performance reports on referral practices and access to care. A survey of cardiovascular specialists. N Engl J Med. 1996;335:251–6. doi: 10.1056/NEJM199607253350406.
23. Hannan EL, Kilburn H Jr, Racz M, et al. Improving the outcomes of coronary artery bypass surgery in New York State. JAMA. 1994;271:761–6.
24. Hannan EL, Sarrazin MS, Doran DR, Rosenthal GE. Provider profiling and quality improvement efforts in coronary artery bypass graft surgery: the effect on short-term mortality among Medicare beneficiaries. Med Care. 2003;41:1164–72. doi: 10.1097/01.MLR.0000088452.82637.40.
25. Peterson ED, DeLong ER, Jollis JG, et al. The effects of New York's bypass surgery provider profiling on access to care and patient outcomes in the elderly. J Am Coll Cardiol. 1998;32:993–9. doi: 10.1016/s0735-1097(98)00332-5.
26. Hannan EL, Siu AL, Kumar D, et al. Assessment of coronary artery bypass graft surgery performance in New York. Is there a bias against taking high-risk patients? Med Care. 1997;35:49–56. doi: 10.1097/00005650-199701000-00004.