Abstract

Adaptation is a phenomenological umbrella term under which a variety of temporal contextual effects are grouped. Previous models have shown that some aspects of visual adaptation reflect optimal processing of dynamic visual inputs, suggesting that adaptation should be tuned to the properties of natural visual inputs. However, the link between natural dynamic inputs and adaptation is poorly understood. Here, we extend a previously developed Bayesian modeling framework for spatial contextual effects to the temporal domain. The model learns temporal statistical regularities of natural movies and links these statistics to adaptation in primary visual cortex via divisive normalization, a ubiquitous neural computation. In particular, the model divisively normalizes the present visual input by the past visual inputs only to the degree that these are inferred to be statistically dependent. We show that this flexible form of normalization reproduces classical findings on how brief adaptation affects neuronal selectivity. Furthermore, prior knowledge acquired by the Bayesian model from natural movies can be modified by prolonged exposure to novel visual stimuli. We show that this updating can explain classical results on contrast adaptation. We also simulate the recent finding that adaptation maintains population homeostasis, namely, a balanced level of activity across a population of neurons with different orientation preferences. Consistent with previous disparate observations, our work further clarifies the influence of stimulus-specific and neuronal-specific normalization signals in adaptation.

Keywords: adaptation, Bayesian, scene statistics, divisive normalization

Introduction

Both perception and neural processing of a stimulus are dramatically influenced by what has been observed in the past, its temporal context. Such influences, broadly called adaptation (Carandini et al., 2005; Clifford et al., 2007; Kohn, 2007; Solomon & Kohn, 2014; Webster, 2011), have been a topic of fascination at least since the time of Aristotle, as revealed by perceptual aftereffects (Clifford & Rhodes, 2005; Schwartz, Hsu, & Dayan, 2007). Adaptation has been observed in many areas of the brain, such as the visual (Kohn, 2007), auditory (Pérez-González & Malmierca, 2014), olfactory (Kurahashi & Menini, 1997; Wilson, 2009), and somatosensory (Maravall, Petersen, Fairhall, Arabzadeh, & Diamond, 2007) regions. In the natural environment, sensory signals are always embedded in a temporal context, and correct inferences about the perceptual identity and behavioral relevance of the signals depend heavily on such context. This context-dependent inference has led to the proposal that adaptation is a hallmark of systems optimized to the temporal structure of the natural environment (e.g., Barlow & Földiák, 1989; Dayan, Sahani, & Deback, 2002; Fairhall, Lewen, Bialek, & de Ruyter Van Steveninck, 2001; Lochmann, Ernst, & Deneve, 2012; Schwartz et al., 2007; Wainwright, Schwartz, & Simoncelli, 2002; see also Attneave, 1954; Barlow, 1961).

Here we address primary visual cortex (V1) as a paradigmatic example and focus on orientation adaptation phenomena that are within the classical receptive field (RF). Over the past decades, visual cortical adaptation has been studied in great detail. Early studies revealed that the responses of neurons can be altered and often reduced over time in response to prolonged stimulation (Maffei, Fiorentini, & Bisti, 1973; Movshon & Lennie, 1979; Vautin & Berkley, 1977). Adaptation to oriented stimuli reveals a host of effects that range from contrast adaptation (Albrecht, Farrar, & Hamilton, 1984; Bonds, 1991; Ohzawa, Sclar, & Freeman, 1982, 1985; Sclar, Lennie, & DePriest, 1989), to suppression and repulsion of tuning curves in single neurons (Dragoi, Sharma, & Sur, 2000; Felsen et al., 2002; Müller, Metha, Krauskopf, & Lennie, 1999; Patterson, Wissig, & Kohn, 2013), to the recent finding that adaptation counteracts small biases in the stimulus ensemble to achieve homeostasis or equalization of population responses (Benucci, Saleem, & Carandini, 2013). Adaptation is further multifaceted in that it is triggered by some features but not others (termed stimulus specificity; Solomon & Kohn, 2014), and its strength often depends on neuronal selectivity (Benucci et al., 2013). Moreover, adaptation operates at a range of timescales, from milliseconds to seconds and minutes, or even longer (Dragoi et al., 2000; Kohn, 2007; Patterson et al., 2013; Solomon & Kohn, 2014). A more comprehensive treatment of experimental adaptation effects in V1 is discussed in the Introduction to V1 Adaptation Experimental Literature section.

Despite this rich experimental characterization, there is currently not a single modeling framework that encompasses this range of effects. A long-standing proposal is that divisive normalization, a unifying descriptive model for many aspects of signaling in sensory areas, could naturally account for adaptation (Albrecht & Geisler, 1991; Carandini & Heeger, 2012; Heeger, 1992). However, descriptive models do not determine when adaptation should occur or the parameters that govern its strength, which is crucial for capturing the range of experimental data we consider here. A complementary approach is to consider theoretical frameworks that posit that adaptation can be explained as optimal processing of temporal signals (Buiatti & van Vreeswijk, 2003; Lochmann et al., 2012; Stevenson, Cronin, Sur, & Kording, 2010; Stocker & Simoncelli, 2006; Wainwright et al., 2002). These studies rely, in different ways, on the hypothesis that sensory processing is matched to the statistical properties of natural scenes (Barlow, 1961; Olshausen & Field, 2000) and that it is a form of Bayesian inference based on a generative model, that is, a probabilistic relationship between objects in the environment and the sensory inputs they produce (Barlow, 2001; Berkes, Orbán, Lengyel, & Fiser, 2011; Dayan & Abbott, 2001; Knill & Richards, 1996; Stocker & Simoncelli, 2006). However, none of these recent studies addressed a crucial requirement of this approach, namely, that such a model be learned from the temporal statistics of natural movies to form, and update, a Bayesian prior about temporal expectations in the natural environment.

To address these limitations, we took a different approach. We hypothesized that cortical neurons are sensitive to temporal regularities inherent in the natural visual environment. We constructed a model that learns such prior knowledge and performs Bayesian inference on the visual input based on that prior knowledge. Because objects in the world generally are either static (e.g., a tree, a rock) or move slowly and smoothly (e.g., a person walking on the beach; Weiss & Adelson, 1998), the inputs to V1 neurons change little over short timescales (e.g., within one eye fixation). Therefore, present and past visual inputs are often highly redundant. Across longer timescales, or in contexts with quickly changing motion, such as a carousel, temporal sequences of visual inputs are less redundant (Figure 1, lion vs. carousel). Our model developed similar prior knowledge through exposure to an ensemble of natural movies and learned to judge the statistical similarity between the past and the present in new inputs. We thus developed a Bayesian modeling framework that learned regularities in natural movies over time and then sought to reproduce some adaptation effects in V1.

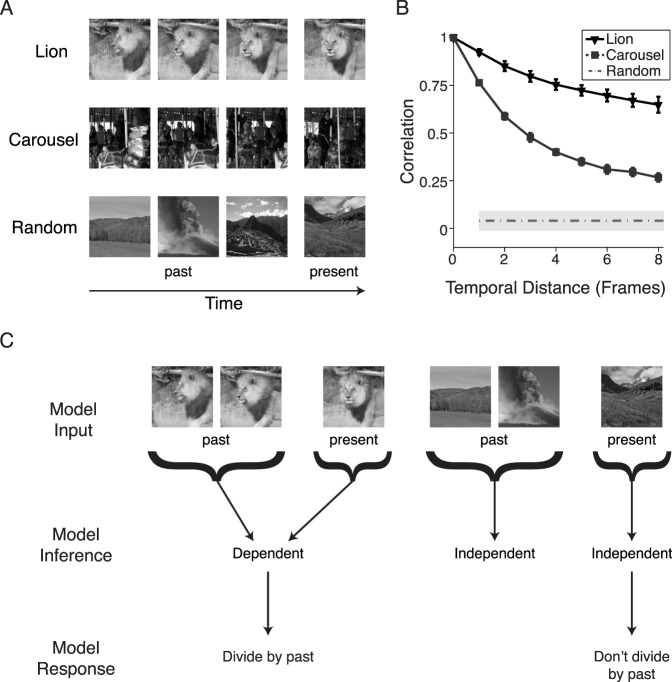

Figure 1.

Dependencies in natural movies and a generalized divisive normalization model for reducing dependencies. (A) Past (left columns) and present (right column) frames of natural movies (top two rows) versus a movie composed of random still natural images (bottom row). (B) Filled symbols: Correlation coefficient of the responses of a standard complex cell model to the movies in (A), namely, the square root of sum of squared responses of two quadrature pair–oriented filters. The abscissa is the temporal distance used for the calculation of correlation between the “present” and “past” frames. The error bars are ±1 SD across different filter orientations. Dashed line and shaded area: Average correlation coefficient ±1 SD over all correlations for all distances, because the order of the random frames is artificial, for the movie composed of random still images. (C) Flexible divisive normalization. The model divides the present by the past if the past and present share dependencies (left case) and does not divide the present by the past when the past and present are independent (right case). Lion images adapted from “Be Bold as a Lion” by Kids on the Move, https://vimeo.com/146515611, available under the Creative Commons Attribution license.

The framework is closely related to divisive normalization, whereby in the temporal version, the response of a neuron is divisively normalized by the responses of other neurons across time (Heeger, 1992). However, the Bayesian framework extends the standard normalization model in a critical way; responses are normalized only to the degree that past and present inputs are inferred to be statistically similar. We first optimized a set of parameters in the generative model that characterize the dependencies between movie frames over hundreds of milliseconds. This approach captured some neurophysiology data for short-term adaptation. Furthermore, the Bayesian model could be naturally extended to capture longer timescales, on the order of seconds, by updating the parameters that govern the generative model. This allowed the model to also reflect longer-term statistics of the experimental stimulus ensemble and to capture a broader range of experimental data.

In designing our model, we found it useful to distinguish between two types of normalization, as has been done previously in the adaptation literature (e.g., Benucci et al., 2013; Solomon & Kohn, 2014): (a) stimulus-specific (i.e., strongest normalization when the past adapting stimulus is similar to the present test stimulus) and (b) neuronal-specific (i.e., strongest normalization when the adapting stimulus matches the preference of the neuron). Each model implementation captured a subset of adaptation effects, suggesting that these represent slices through a more complicated space. Indeed, depending on the adaptation conditions, both effects are likely present to different degrees.

Overall, this work provides a step forward toward a common framework for understanding a diverse set of visual adaptation phenomena.

Introduction to V1 adaptation experimental literature

We provide a brief summary of adaptation neurophysiology in V1 and then specify the effects that we seek to address with our modeling approach. We focus specifically on orientation adaptation effects in V1. Other factors such as spatial and temporal frequency are also known to influence adaptation (e.g., King, Lowe, & Crowder, 2015; Ledue, King, Stover, & Crowder, 2013; Saul & Cynader, 1989) but are beyond the scope of our current modeling effort, which is based on a population of oriented RFs. We also focus on effects confined to the classical RF of V1 neurons. A more comprehensive review of adaptation effects in V1 and other areas can be found in several review papers (Clifford & Rhodes, 2005; Clifford et al., 2007; Ibbotson, 2005; Kohn, 2007; Krekelberg, Boynton, & van Wezel, 2006; Schwartz et al., 2007; Solomon & Kohn, 2014).

A main property of V1 adaptation that has been documented extensively is the reduced response to test stimuli following adaptation. When the test stimulus is matched in orientation to the RF of a given neuron, most suppression is typically found when the adapting stimulus also matches the neuron's preferred RF (Carandini, Movshon, & Ferster, 1998; Crowder et al., 2006; Nelson, 1991). Adaptation to a given stimulus is often depicted in terms of changes in tuning curves. For an adapter optimally matched to the classical RF, multiple labs have found response suppression of tuning curves (e.g., Crowder et al., 2006; Dragoi et al., 2002; Felsen et al., 2002; Müller et al., 1999).

An interesting facet of adaptation is that when one adapts on the flank of the tuning curve, this often results in a repulsive shift of the tuning curve. This effect is striking and has been observed across multiple labs (Dragoi et al., 2000; Felsen et al., 2002; Müller et al., 1999; Patterson et al., 2013), even when adapting for short timescales of hundreds of milliseconds (Dragoi, Sharma, Miller, & Sur, 2002; Müller et al., 1999). This is the classical example of the so-called stimulus-specific adaptation, because the amount of suppression is maximal when the adapter is matched to the test, not when it is matched to the neuron's preferred RF. This distinction cannot be made for the aforementioned case of tuning curve suppression, because the maximal suppressive effect is observed only when the adapter is matched to both the test stimulus and the preferred orientation of the neuron. The observation of stimulus specificity also applies to other stimulus attributes, as Movshon and Lennie (1979, p. 852) noted in earlier work with spatial frequency: “Our most surprising observation is that the loss of sensitivity in cortical neurones can be specific to the adapting stimulus.”

Adaptation is influenced by timescale. Effects of tuning curve changes, such as suppression and repulsion, are typically increased for longer adaptation times (Dragoi et al., 2000; Patterson et al., 2013), although there are exceptions to such duration scaling (Patterson et al., 2013; Solomon & Kohn, 2014).

Adaptation, however, can sometimes enhance responsivity and lead to attractive shifts in tuning curves. This has been observed in the context of surround influences and disinhibition (Solomon & Kohn, 2014; Webb, Dhruv, Solomon, Tailby, & Lennie, 2005; Wissig & Kohn, 2012). Enhancement and attraction have also been documented in area MT; however, it is interesting to note that disparities between V1 and MT on such fronts have also been attributed to stimulus size (Patterson, Duijnhouwer, Wissig, Krekelberg, & Kohn, 2014; Wissig & Kohn, 2012). Some studies have suggested attraction in V1 for long adaptation periods of minutes (Jeyabalaratnam et al., 2013), but these also included stimuli that were much larger than the classical RF. We do not have surround influences in our adaptation model and so focus only on effects that pertain to the classical RF.

Unlike adapters that are equal to the preferred orientation or on the flank of the tuning curve, orthogonal adapters have led to more variable results. They may, in some cases, result in an increased response for test stimuli (see Dragoi et al., 2002, for short timescales). Enhancement is possibly due to recovery from reduced responsiveness to previous adaptation (see discussion in Solomon & Kohn, 2014). But in some cases, orthogonal adapters have resulted in suppression (Crowder et al., 2006), although this effect is usually weaker than adaptation to the preferred orientation (Carandini et al., 1998).

Another factor that has been studied extensively in adaptation is the influence of contrast. Contrast adaptation causes a change in contrast sensitivity via a rightward shift of the contrast response curve (with larger shifts for adaptation to higher contrasts) and may also change its saturation level (Albrecht et al., 1984; Bonds, 1991; Ohzawa et al., 1982, 1985; Sclar et al., 1989).

In this article, we first show that we can replicate some adaptation effects, which have been described in multiple labs, namely, suppression and repulsion of tuning curves. We explain how these effects are natural consequences of our model, even on short timescales, because of its learning of regularities in movie statistics. Normalization occurs in our framework only to the degree that past and present are deemed statistically dependent. We then consider other experimental phenomena. We examine contrast adaptation and shifts in tuning curves, which have also been documented in many labs. We show that, in line with other normalization frameworks, we can capture such contrast shifts. This is captured by our model, provided that we update our model during the adaptation, thereby reflecting an adapting period on the order of seconds. We then focus on an interesting recent phenomenon, response equalization, documented by Benucci et al. (2013). In this paradigm, multiple orientations are presented for a prolonged period of adaptation over several seconds. Although one orientation (the biased orientation) is presented more frequently than other orientations, the responses of neurons across the population are equalized following adaptation, reflecting a form of homeostasis. We show that these data could not be explained by the same model that captured stimulus-specific suppression. Modeling response equalization required a variant of the model, which includes neuronal-specific suppression. Finally, in the Discussion section, we consider how to apply our approach to other longer-term adaptation studies that have found strengthening of tuning curve suppression and repulsion for longer adaptation durations. We also discuss limitations of our approach.

Methods

Overview of modeling framework

We consider a framework closely related to divisive normalization models of adaptation. In the standard view of normalization, the response of a neuron to a sequence of images is obtained by applying an oriented filter and then dividing by a combination of the outputs of other filters at different times and orientations. Here we link this operation to the statistics of natural movies and consider several extensions.

Following a large body of work on image statistics, we hypothesize that the firing rates of different V1 neurons represent the intensity of features that may be present in the visual input, such as oriented Gabors. In other words, given the visual input, a neuron computes the intensity of the feature it represents. This cannot be achieved simply by linear filtering; for instance, the output of a vertical filter applied to a vertical, low-contrast image may be the same as when it is applied to a diagonal, high-contrast image. In this example, division by the sum of squares of the outputs of filters with different orientations (i.e., a measure of contrast) disambiguates the two cases.

In general, natural visual inputs are much more complex, as they combine multiple features of different contrasts across time and space. Here we focus specifically on the temporal domain. Therefore, to compute feature intensity, neurons need to rely on some knowledge about the structure of images across time, to correctly infer the intensity. In studies of image statistics, this knowledge is typically formalized by a generative model of images, namely, a model that specifies how likely individual features and contrasts are, a priori, and how to combine them. Inference then requires inverting the generative model (i.e., applying Bayes' rule to compute a posterior probability over feature intensity given the visual input).

This article is based on a class of generative models known as Gaussian scale mixtures (GSM; Andrews & Mallows, 1974; Wainwright & Simoncelli, 2000) and extensions to mixtures of GSM (MGSM; Coen-Cagli, Dayan, & Schwartz, 2009; Schwartz, Sejnowski, & Dayan, 2006). Our previous work showed that these models are closely related to divisive normalization and account for some spatial context phenomena (Coen-Cagli, Dayan, & Schwartz, 2012; Coen-Cagli et al., 2009; Coen-Cagli & Schwartz, 2013; Schwartz, Sejnowski, & Dayan, 2009). The models also relate to ideas of breakdown of spatial homogeneity and salience in V1 (Li, 1999). Here we apply the MGSM modeling framework to temporal stimuli (i.e., movie frames) and neural adaptation effects.

Before describing the models in detail, we provide here the intuition behind the temporal GSM and MGSM. Subsequent frames of a natural movie are highly redundant, as objects tend to move smoothly and slowly, and therefore the outputs of filters applied to subsequent frames are statistically dependent. Such dependencies are modeled in the GSM as arising from the multiplication between a set of local variables (analogous to feature intensities across time and orientations) and a global variable (analogous to contrast). Given an input movie and the corresponding filters' outputs, the model neural response (i.e., an estimate of the local intensity variable) is obtained by estimating the contrast variable and dividing it out, a form of divisive normalization. This model, however, cannot account for the fact that the dependencies may be stronger between some features than others or absent altogether (e.g., when there is fast motion through the visual field and subsequent frames contain different objects). This is captured by MGSMs, in which inference proceeds in two steps: Given an input movie, one first infers which subset of features, if any, are statistically dependent; second, one then divides the target filter's output by a normalization signal computed only from the other filters that were inferred dependent with it.

Below, we briefly describe the linear filters we used to quantify how strongly past and present frames of a movie drive both the RF and the normalization pool of model neurons. This is followed by an overview of the general formulation for this type of model and the link to divisive normalization. We then describe two different implementations of the model for adaptation in V1. The two implementations differ by how they capture the statistical similarity (i.e., the dependence) between the past and present, namely, whether it is stimulus specific or neuronal specific. We then briefly enumerate the process by which we trained the models. Finally, we conclude by extending the models to address temporal dynamics over longer timescales.

V1 model RFs

We used a bank of oriented visual filters, qualitatively similar to V1 RFs, to compute both the excitatory drive to the RF of a neuron and the normalization signal. Specifically, the filters were drawn from the first level of a steerable pyramid (Simoncelli, Freeman, Adelson, & Heeger, 1992), with a diameter of 9 pixels and a peak spatial frequency of 1/6 cycles/pixels. We considered filters with four possible orientations (0°, 45°, 90°, and 135°), denoted by θ, along with two possible phases (0° and 90° to form a quadrature pair) and nine temporal positions (eight past and one present temporal position). Using movies with frame rates of 30 frames per second (fps), we chose an adapt period of eight frames, which corresponds to about 240 ms, because this is within the range of standard short-term adaptation experimental paradigms (Kohn, 2007). Below, we further discuss model extensions for adaptation over longer timescales.

For a given image, the output of a filter, from here on simply referred to as the RF output, is given by the inner product between the image and the filter. We generically denote the vector of present RF outputs by xt and the past RF outputs by xp. We considered different choices for xt and xp, as detailed below for the different versions of the model (see the sections titled “Binary MGSM and Flexible Divisive Normalization” and “Multiorientation MGSM Model”).

Standard Gaussian scale mixture model and divisive normalization

A general property of natural movies is that for a single spatiotemporal position, neighboring pixels in the spatial and temporal domains are highly redundant (Figure 1A). The spatial redundancy is due to global properties of the image, such as contrast, which are shared between neighboring areas. Because movies change slowly and smoothly over time (Weiss & Adelson, 1998), neighboring temporal positions (e.g., in the past and present time frames) are highly redundant as well (e.g., Dong & Atick, 1995). The GSM (Andrews & Mallows, 1974; Wainwright & Simoncelli, 2000) is a generative model that captures these dependencies (i.e., generates RF outputs that have the typical dependencies observed in natural stimuli).

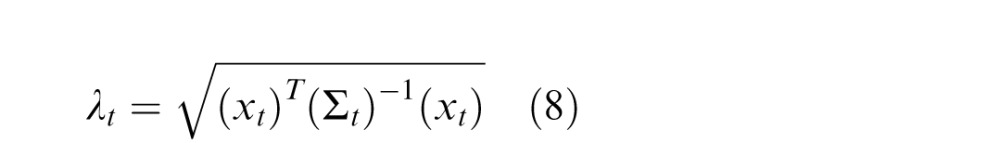

The GSM generates the RF outputs for the past and present frames, xt and xp, respectively, such that they are statistically dependent, by the multiplication of two random variables: (a) a Gaussian variable that represents structure local to each RF, which we label gt and gp, for the present and past RFs, respectively, and (b) a positive scalar random variable, v, which is shared between multiple RFs (and essentially captures the dependence among them). The nonlinear dependencies between RF outputs in the past and present are introduced via the multiplication of the local Gaussian variable, g, with the shared mixer variable, v. Therefore, we model the dependent RF outputs of the present time frame and the past time frames as

|

where gt and gp are vectors representing the local Gaussian variable in the present and past, respectively, and are the same size as the filter outputs to which they correspond in Equation 1, that is, xt and xp. To connect the GSM to temporal contextual effects, we assume that the Gaussian components associated with the present frame, gt, relate to the firing rates of V1 neurons. Estimating this Gaussian component amounts to a form of divisive normalization, by essentially inverting the multiplicative model above (thus amounting to division).

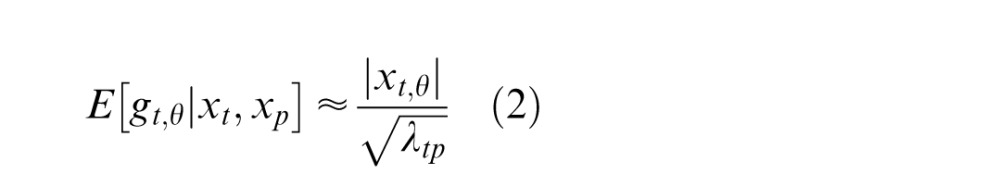

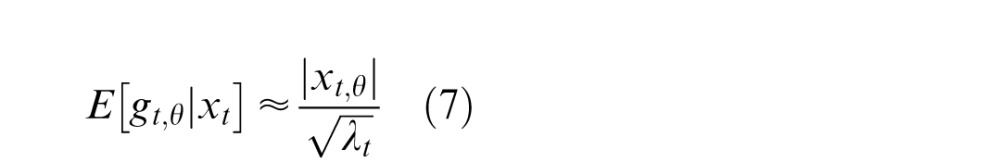

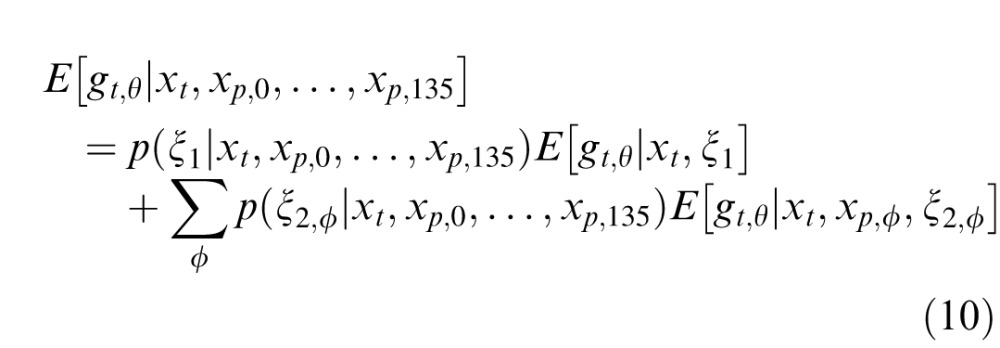

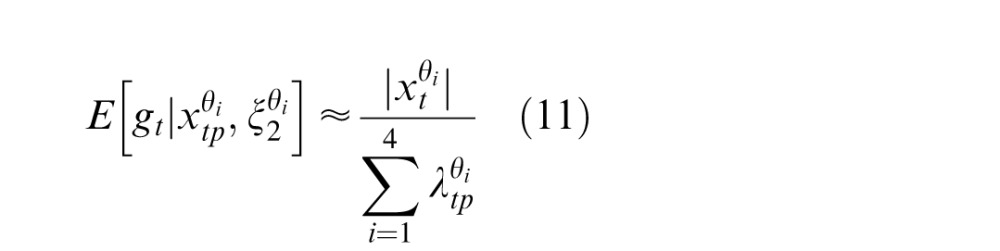

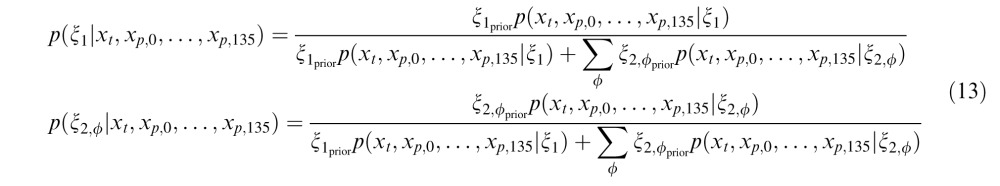

More specifically, we need to expand the notation of Equation 1 to include the preferred orientation of a given neuron. We associate each entry of the vector (which we label θ for the preferred orientation of the corresponding RF), gt,θ, with the firing of a neuron whose preferred orientation reflects the corresponding RF output, xt,θ, in V1. We focus on the Gaussian component because it represents structure local to an RF at a single point in time. In contrast, the mixer variable, v, represents the link between RF responses across time. As the GSM is a generative model, by inverting the model and using Bayes's rule, we can estimate the value of the local Gaussian components, gt. Given a set of stimuli, we collect the set of RF outputs in the past and present times, denoted by the vector (xt,xp), and then calculate the expected value of the local Gaussian component for the present. Because the generative model is multiplicative (as in Equation 1), the inverse operation of computing the local Gaussian amounts to a form of divisive normalization. It has been shown (Schwartz & Simoncelli, 2001) that such an operation also relates to efficient coding as it reduces the statistical dependencies that, in the GSM, are due to this common mixer variable, v. The resulting divisive normalization equation is given by (see Coen-Cagli et al., 2009; Schwartz et al., 2006, for derivations):

|

where the numerator of Equation 2 is the rectified RF response corresponding to the preferred orientation of the given model neuron and the denominator of Equation 2 is given by , which represents the normalization signal and is defined as follows:

, which represents the normalization signal and is defined as follows:

|

The covariance matrix Σtp is a measure of the linear dependencies between elements of the past and present Gaussians, gp and gt, and intuitively may be seen as representing how strongly the features (of orientation in the past and the present) are statistically dependent in natural scenes. Equation 3 thus amounts to a weighted sum of quadratic and bilinear combinations of the RF responses that contribute to the divisive normalization signal. If the covariance matrix is the identity matrix, then this reduces simply to a sum of squares, similar to the original formulation of divisive normalization (Heeger, 1992).

We suggest that this GSM framework can explain both neuron- and stimulus-specific adaptation effects as determined by the statistical similarity of the past and present inputs. The next two sections describe the two different metrics of similarity.

Binary MGSM and flexible divisive normalization

We introduce here one of the models we used to capture V1 adaptation effects, termed the binary mixture of Gaussian scale mixtures (MGSM), which is an extension to the temporal domain of techniques previously applied to spatial context (Coen-Cagli et al., 2009). The binary model will capture neuron-specific adaptation, as described below.

In the standard GSM, the shared mixer variable between RFs captures the dependencies that exist between frames of natural movies. However, when we empirically observe movie frames over time, we find that for particular movie sequences, the present frame is not always statistically dependent with the past frames, for instance, if the past and present correspond to different textures or objects (Figure 1). We model this independent case by assuming that the past and present RFs each have their own mixer variable (vp and vt), rather than a shared mixer:

|

However, a richer characterization of the dependencies in natural movies should capture the possibility that some movie frames are dependent (shared mixer) whereas others are independent (no shared mixer). Technically, this is achieved by a mixture of the dependent GSM and independent GSM.

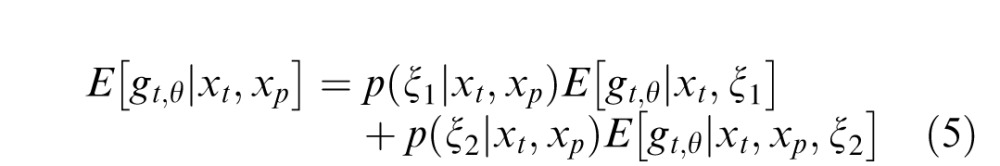

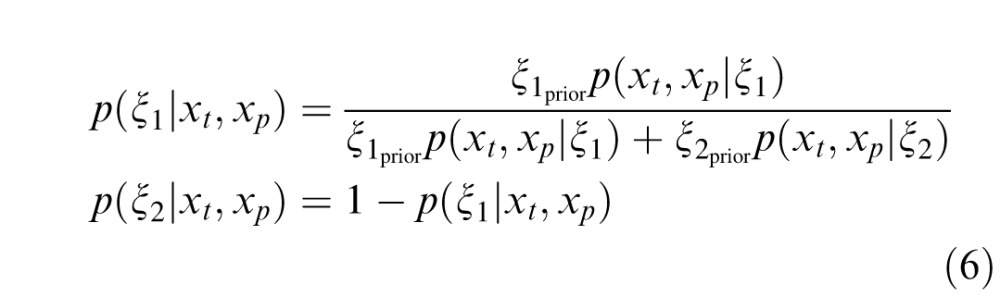

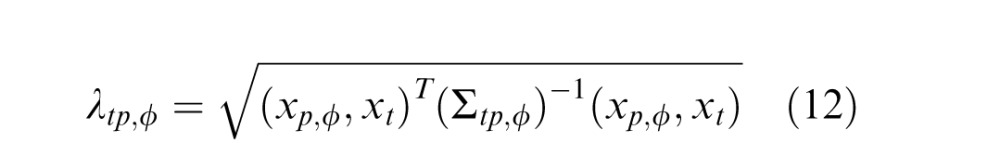

The model is termed binary in that for any given input, it is a linear mixture of two GSM models: one in which the past and present are deemed independent (as in Equation 4) and therefore the present is not divisively normalized by the past, and one in which the past and present are deemed dependent (as in Equation 1 and in the standard GSM model) and therefore the present is normalized by the past.

In the binary model, the estimate of the local Gaussian variable for a neuron with a preferred orientation, θ, amounts to a weighted sum of two conditions, one in which the past and present are independent (denoted ξ1) and one in which they are dependent (denoted ξ2). In the independent condition, the RF response in the present, xt,θ, is not normalized by the past. In the dependent condition, the present is normalized by a set of past responses with an RF orientation matching the preferred orientation of the neuron θ, xp,θ. In addition, similar to Coen-Cagli et al. (2009), we include in the normalization pool of both the independent and dependent conditions the multiple orientations in the present. This is motivated by the strong dependence typically observed between overlapping RFs with different orientations (Schwartz & Simoncelli, 2001), and it also guarantees local contrast normalization of the model neural responses (Heeger, 1992).

The weight for the independent condition is the posterior probability that past and present are independent, and the weight for the dependent condition is the posterior probability that the past and present RF outputs are dependent. The greater the dependency between the past and the present, the greater the proportion that the second, dependent condition contributes to the calculation of the model output. Conversely, the lesser the degree of dependency, the greater the contribution of the first, independent condition. This model implements neuronal-specific suppression, as the tuning of the normalization signal is fixed to the neuron's preferred orientation (Benucci et al., 2013; Clifford, Wenderoth, & Spehar, 2000; Schwartz et al., 2009; Seriès, Stocker, & Simoncelli, 2009). We will show that the binary model can replicate neuronal population equalization (Benucci et al., 2013; Results section “Population-Level Response Equalization With Longer-Term Adaptation”). Further mathematical details for the binary MGSM are provided in Appendix A.

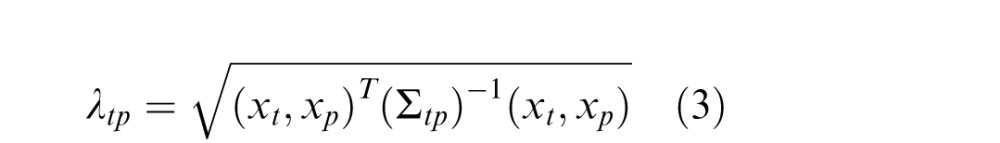

Multiorientation MGSM model

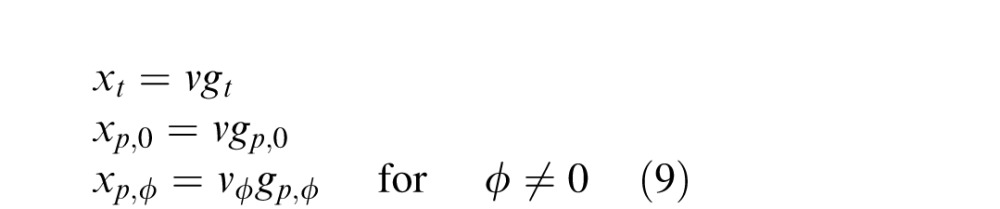

We introduce here the second model we used to capture V1 adaptation effects, termed the multiorientation MGSM, analogous to previous work in the spatial domain (Coen-Cagli et al., 2012). As its name implies, there is no longer just a binary choice of normalization by the past, on or off, but rather there are multiple past orientation pool conditions.

The excitatory drive to both the present RF and the present normalization pool are identical to the binary MGSM. However, we consider several past normalization pools and allow model neurons to choose which (if any) pool to use for a given movie. Specifically, we considered four past pools, one per orientation, each including all past RFs with the same orientation, denoted by , with ϕ = 0°, 45°, 90°, and 135°. In the first condition, denoted ξ1, the present and past are independent similar to the binary model, and the present, xt,θ, is not normalized by the past. However, unlike the binary model, which has only one dependent condition, there are now multiple conditions in which past and present may be deemed dependent, each pertaining to a different past RF orientation. These are denoted ξ2,ϕ, with ϕ again corresponding to each of the possible past orientations. Thus, there are now four dependent pools. In each of these conditions, the RF response, xt,θ, is normalized by the corresponding orientation in the past, xp,θ. In addition, in all conditions, the present is normalized by the other orientations in the present, as in the binary model.

, with ϕ = 0°, 45°, 90°, and 135°. In the first condition, denoted ξ1, the present and past are independent similar to the binary model, and the present, xt,θ, is not normalized by the past. However, unlike the binary model, which has only one dependent condition, there are now multiple conditions in which past and present may be deemed dependent, each pertaining to a different past RF orientation. These are denoted ξ2,ϕ, with ϕ again corresponding to each of the possible past orientations. Thus, there are now four dependent pools. In each of these conditions, the RF response, xt,θ, is normalized by the corresponding orientation in the past, xp,θ. In addition, in all conditions, the present is normalized by the other orientations in the present, as in the binary model.

The normalized responses are computed for each of the above conditions separately and then summed after weighting each condition by its posterior probability. For example, a movie with strong vertical structure that persists in time will produce a high probability that the present RF and the past vertical RFs are dependent. Therefore, the term whereby the present is normalized by the vertical past will have a larger weight than the terms with horizontal past or no past normalization.

We further assumed, for computational tractability, that all neurons share the same set of normalization pools and their corresponding weights. For example, the expected value for a model neuron with a vertical RF is a proportional mix of the vertical RF response normalized only by the present and not the past, normalized both by the present and the vertical past, normalized both by the present and the horizontal past, and so on, for each of the orientations ϕ. Similarly, the expected value for a model neuron with a horizontal RF is a proportional mix of the horizontal RF response normalized only by the present and not the past, normalized both by the present and the vertical past, normalized both by the present and the horizontal past, and so on. Therefore, the normalization pool for a vertical RF and a horizontal RF is shared. By sharing normalization pools, the effective tuning of the normalization signal is determined exclusively by the orientation of the stimulus shown in the present and its match to the past stimuli and not by the tuning of the neuron's RF. Hence, we term this a stimulus-specific normalization model (Benucci et al., 2013; Solomon & Kohn, 2014) and apply it to replicate tuning curve suppression and repulsion (see the section titled “Tuning Curve Adaptation Reflects Sensitivity to Inputs' Statistical Similarity”). Further mathematical details for the multiorientation MGSM are provided in Appendix B.

Model training

The parameters of the model are the prior probabilities that each past orientation normalizes the present (denoted ξprior; see Appendices A and B) and the covariances Σ (see Equation 3) that parameterize the likelihood function. Parameters were optimized on an ensemble of natural movies culled from YouTube, because of the lack of a database of standardized natural movies. The stimulus ensemble consisted of 20,000 temporal sequences, each nine frames long, extracted from 100 frames of 14 natural movies with varying temporal and spatial properties, each normalized to the same range of luminance values (copies of the clips are available from the corresponding author). To find the optimal parameters, we maximized the likelihood through a generalized expectation-maximization algorithm (the approach and equations are described in Coen-Cagli et al., 2012). Briefly, in the expectation step (E-step), we estimate the posterior probabilities for ξ given the current parameter values. In the maximization step (M-step), we search for the parameter values that maximize the so-called complete data log-likelihood, namely, the expectation of log(p(xt,xp,ξ)) under the estimated posterior over ξ. We divided the M-step into multiple steps, one for the dependent and one for each of the independent covariance matrices, and iterated repeatedly between the complete E-step and each partial M-step.

The training was performed unsupervised. As the EM algorithm is not guaranteed to find a global maximum, the training was run multiple times with randomized initial conditions. We found that the parameter values at convergence were similar across multiple runs. All initial parameters for both model types were learned on the multiorientation model. We introduced some minimal constraints on the learned parameters to ensure that the scale of the covariance matrices was similar, both across orientations and between dependent and independent conditions for each orientation.

After the training was complete, we introduced a free parameter, ω, to quantitatively match the overall suppressive strength of recorded neuronal responses, which varies widely across experiments. The free parameter, ω, scales the normalized response in all the dependent conditions relative to the independent condition.

The value of ω is the ratio of the learned model's response normalized by the relative suppression in the data set being replicated. Note that this additional parameter does not affect in any way the qualitative behavior of the models; it only sets the overall suppression strength.

Model extensions for long-term adaptation

In both versions of the MGSM, the model first learns the parameters from an ensemble of natural movies and then holds these parameters fixed and uses them to make response predictions as new experimental stimuli are presented. Whereas the response predictions change with each set of new stimuli, the prior parameters used to calculate the predictions remain static even as the stimulus history diverges farther from the training stimulus ensemble. Given our nine time steps (eight in the past and one in the present) and a frame rate of 30 fps, the current short-term models are able to capture only effects that exist on the order of hundreds of milliseconds. Longer-term effects, which reveal themselves only on the order of seconds to minutes, would not be captured by this model.

We therefore considered a version of the models in which the prior probabilities were dynamically updated with each new set of stimulus presentations (that included eight past and one present). Specifically, for the nth movie sequence, which contains movie frames [n −8, n −7, . . . , n] and RF outputs , we computed the posterior

, we computed the posterior using as a prior

using as a prior , which itself was the posterior computed for the (n − 1)th movie sequence. The procedure for updating was the same for each of the MGSM models (binary and multiorientation), so we describe this generically for one model. Practically, for a given movie consisting of many frames, the model was initially presented with the first set of nine frames, [S1, S2, . . . , S9], where S1 through S8 represent the past and S9 represents the present. Using the learned prior, the model determined the posterior probability for that set of nine stimuli. The model was then presented with Frames 2 through 10 as a new set of stimuli, [S2, S3, . . . , S10], where S2 through S9 represent the past and S10 represents the present. The calculated posterior from the previous step for Frames 1 to 9 now became the new prior, and the model once again calculated the posterior probability for Frames 2 through 10. This process was repeated as each new set of stimuli were presented, up to the last nine frames. Thus, the prior was updated recursively as each new frame of the movie was presented. A recursive Bayesian estimator has similarly been used to model multiple timescales in the retina (Wark, Fairhall, & Rieke, 2009). This long-term model is now able to track the changing visual environment on the order of seconds (Figure 2).

, which itself was the posterior computed for the (n − 1)th movie sequence. The procedure for updating was the same for each of the MGSM models (binary and multiorientation), so we describe this generically for one model. Practically, for a given movie consisting of many frames, the model was initially presented with the first set of nine frames, [S1, S2, . . . , S9], where S1 through S8 represent the past and S9 represents the present. Using the learned prior, the model determined the posterior probability for that set of nine stimuli. The model was then presented with Frames 2 through 10 as a new set of stimuli, [S2, S3, . . . , S10], where S2 through S9 represent the past and S10 represents the present. The calculated posterior from the previous step for Frames 1 to 9 now became the new prior, and the model once again calculated the posterior probability for Frames 2 through 10. This process was repeated as each new set of stimuli were presented, up to the last nine frames. Thus, the prior was updated recursively as each new frame of the movie was presented. A recursive Bayesian estimator has similarly been used to model multiple timescales in the retina (Wark, Fairhall, & Rieke, 2009). This long-term model is now able to track the changing visual environment on the order of seconds (Figure 2).

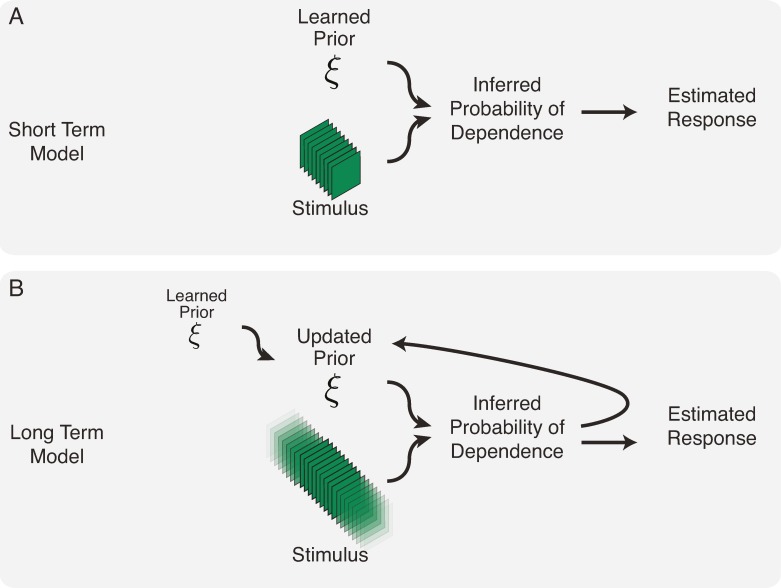

Figure 2.

Schematic of model learning and inference at different timescales. (A) Short-term (static) model: The model determines the (posterior) probability of dependence between the present and past for a series of experimental stimuli, using the prior parameters learned from the movie ensemble. The prior parameters are therefore held fixed and do not change with the new experimental stimuli. The posterior probabilities in turn are used to calculate the flexible divisive normalization signal and the resulting estimated neural response. (B) Long-term (dynamic) model: The prior parameters are no longer fixed but rather updated as new experimental stimuli are presented. Only after the prior is updated does the model calculate the (posterior) dependencies inherent in a new test stimulus. The posterior probabilities are then used as in (A) to estimate the neural response.

Results

Cortical modeling of temporal dependencies in natural movies

To test our hypothesis that adaptation can be predicted by Bayesian inference about dynamic visual stimuli, we first characterized the statistical structure of natural movies through V1-like RFs and then constructed a Bayesian model optimized to such structure.

To quantify the redundancy inherent in natural movies, we examined sequences of small patches of movie frames (Figure 1A), corresponding to the feedforward inputs into a V1 RF, and considered a standard description of a V1 complex cell, given by the square root of the sum of squares of quadrature pairs of filters (Carandini et al., 2005; Heeger, 1992). We found that responses to temporally adjacent frames were correlated. The strength of the correlation, for a given movie, decreased as a function of temporal distance between frames (Figure 1B). Furthermore, correlations decreased faster over time for sequences that included several objects sweeping fast through the RF, compared with movies containing little overall motion (Figure 1B, carousel vs. lion). These correlations are typical of dynamic signals generated at any given position in the visual field over short time intervals, such as the inputs to a V1 RF during a single fixation. Correlations were abolished for a control movie constructed from random still natural images rather than from movie frames (Figure 1B, random). The different levels of redundancy for the different movies are a signature of the nonstationary character of natural visual inputs and a general property of natural signals (Parra, Spence, & Sajda, 2001). Note that the correlations of Figure 1B represent both linear and nonlinear dependencies between RF outputs because they are computed on the squared RF outputs (see Coen-Cagli et al., 2009).

We hypothesized that neurons in V1 are sensitive to temporal dependencies and more specifically that the system reduces such dependencies by divisively normalizing neuronal responses to the present stimulus when it is redundant with past stimuli. To specify the computation required to remove the dependencies, one needs to understand how they are generated in the first place. Here, similar to recent studies of spatial contextual modulation (e.g., Coen-Cagli et al., 2012; Schwartz et al., 2009), we assumed that the dependencies in RF responses to movie sequences arise from structure that is shared between RFs across frames (e.g., due to slow fluctuations in contrast over time).

Specifically, we first considered a bank of visual RFs (Portilla & Simoncelli, 2000; Simoncelli et al., 1992, see the Methods section) covering multiple orientations at subsequent time steps. We modeled the dependencies between RFs across frames as the multiplication between a shared global variable (linking together RF responses across time) and a set of local hidden variables (each representing structure local to an RF at a single point in time; Andrews & Mallows, 1974; Wainwright & Simoncelli, 2000). As is common in such modeling approaches, we then assumed that visual cortical neurons invert the generative model (Dayan & Abbott, 2001) to estimate the local variables and so remove the redundancy induced by the global variable. Because of the multiplicative interaction we assumed above, the inversion amounts to a form of divisive normalization of the current RF response by other RF responses (Coen-Cagli et al., 2009, 2012; see the Methods section), in this case, the recent past.

However, the assumption that there are always dependencies between RFs over time is too restrictive, as suggested by the reduced dependencies in the carousel movie (Figure 1B). In general, frames from natural movies are rarely either wholly dependent or independent but more often lie somewhere between the two extremes. A model attempting to reduce the dependencies between frames must first be able to quantify the degree of dependency between input frames and then reduce them accordingly, suggesting a flexible divisive normalization (see the Methods section). Briefly, the model response was determined in two steps: First, we computed the probability that the RF responses in the present are statistically coordinated with any group of RFs in the past; second, we divisively normalized RF responses in the present by the past RF responses only to the degree that they were inferred to be dependent (Figure 1C). Importantly, the parameters that determined the prior probability of dependence for the model were optimized to match RF dependencies in an ensemble of natural movies (see the Methods section).

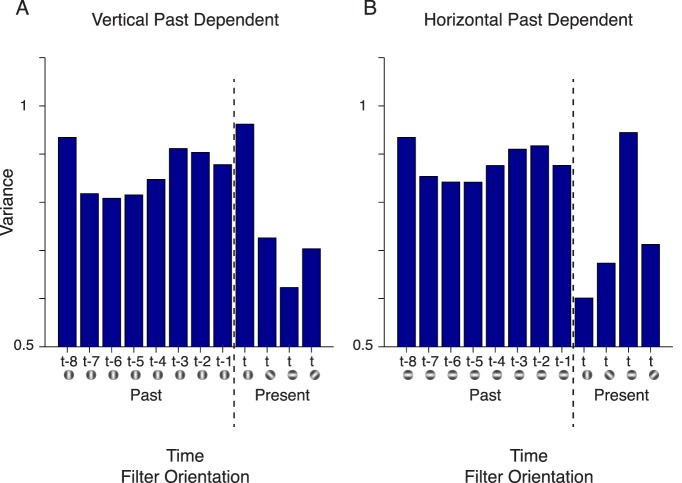

To interpret what the model learns about the movie ensemble and to gain some intuition about the model behavior, it is useful to analyze the learned covariance matrices (see Equation 3). The covariance matrices reflect the typical patterns of temporal dependence in the model. Each covariance characterizes the statistics of the subset of movie sequences that are best explained by the corresponding model component. For instance, the bars in Figure 3A are the learned variances for the model component in which the present filters and past vertical filters are dependent. The learned variance of the present vertical filter is similar to the past vertical filters, whereas the other present orientations have lower variance. This learning reflects a form of similarity metric between past and present orientations, whereby the variance is high when the past vertical orientation matched the present vertical orientation. Similarly, a complementary pattern was learned for the model component in which present filters and past horizontal filters are dependent (Figure 3B). This time, the variance is high when the past horizontal orientation matched the present horizontal orientation. A similar trend occurs with respect to the covariance (off diagonal) elements of the matrix. Overall, for given experimental adaptation and test stimuli, this results in the highest probability of dependency when the past and present stimulus orientations are matched. This in turn enables the stimulus-specific orientation selectivity in the divisive normalization.

Figure 3.

Learned variances in the multiorientation model. (A) Model component in which vertical past filters and present filter are dependent. The ordinate represents the learned variance of the Gaussian latent variables corresponding to a vertical filter in the past (left of the dotted line) and four filter orientations in the present (right of the dotted line). The top row of the labels on the abscissa refers to the temporal position of the frames relative to the present frame (t); for example, one frame in the past is t-1. The icons below each temporal position depict the filter orientation. (B) Same as for (A) but for the model component in which horizontal past filters and present filters are dependent.

Comparing model simulations to experimental data

In the next part of the Results, we simulate adaptation experiments on our model. A specific prediction of our framework is that visual adaptation results in strong divisive suppression of cortical activity only when the visual inputs are inferred statistically dependent but not otherwise. This inference relies on the prior expectation (set by exposure to the natural environment) that visual inputs are often, but not always, dependent over short time spans. Furthermore, we suggest that long-term adaptation effects could be described as a consequence of updating such prior expectations.

Tuning curve adaptation reflects sensitivity to inputs' statistical similarity

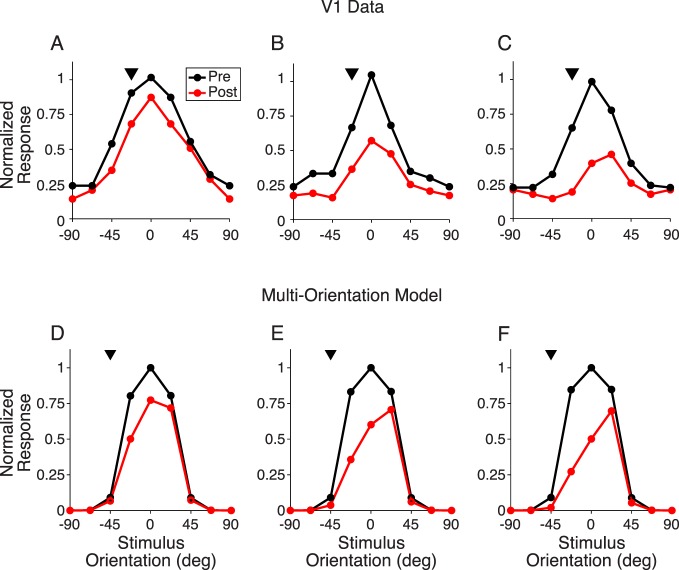

We first tested whether the specific model prediction for short-term adaptation, namely, that the effects are contingent on the inferred statistical dependency of the inputs, can account for experimental orientation adaptation data (Figure 4A, D). We specifically considered classical experiments on suppressive and repulsive changes in tuning curves that have been documented in multiple labs, even for short timescales of adaptation of hundreds of milliseconds (Dragoi et al., 2002; Felsen et al., 2002; Müller et al., 1999; Patterson et al., 2013). Here we show that our model can replicate the main qualitative result of tuning curve suppression and repulsion. In the Discussion section, we further address studies that have found strengthening of effects with adaptation time.

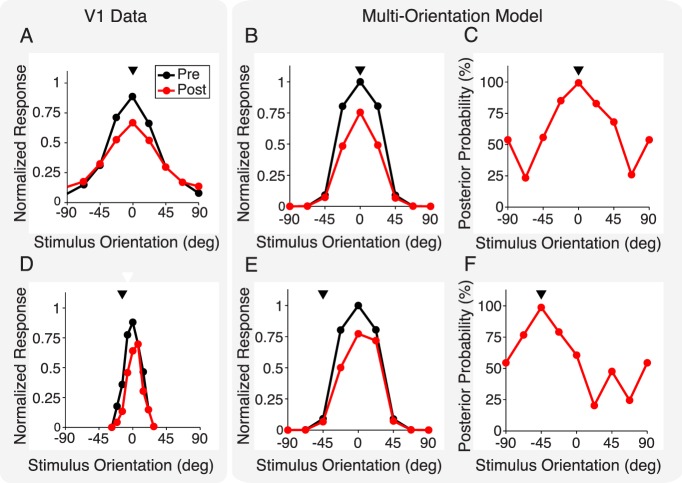

Figure 4.

Effects of adaptation on neuronal response tuning curves. (A) Average orientation tuning curve responses in V1 data for pre (black line) and post (red line) adaptation. The neuron's preferred orientation in the data (aligned to zero for visualization) was between 0° and 15° away from the adapter orientation (arrowhead). Adapted from Wissig and Kohn (2012). (B) Model prediction of neuronal response pre- and postadaptation, for a neuron that prefers a stimulus of 0° and was adapted to a stimulus of that orientation. (C) Inferred posterior probability, for a 0° normalization pool, that past and present stimuli are dependent, for the model neuron in (B). Note that the tuning curves of the inferred probability peak at the orientation of the adapter (arrowhead). (D) Same as (A) but for an adapter 14° away from preferred orientation, resulting in repulsion, namely, a shift of the tuning curve peak away from the adapter. Adapted from Müller et al. (1999). (E, F) Same as (B, C) but for an adapter 45° away from the model neuron's preferred orientation. (B, E) Overall suppression strength in the model was controlled by a free parameter (Methods), which we set here to match the suppression level in (A) when both adapter and test have an orientation of 0°.

We computed model responses to experimental stimuli consisting of short sequences of oriented gratings. To simulate orientation adaptation and its influence on tuning curves in the model, we considered stimulus sequences analogous to experiments on changes in tuning curves. The gratings' orientation was fixed for the past frames (adapter) and varied on each trial of the present frame (test) to measure the effects of adaptation on the neuron's tuning curve.

Figure 4A illustrates the typical effect of response suppression when an adapter is matched to the orientation preference of the neuron and the tuning curve amplitude is reduced by adaptation (experimental data plotted from Wissig & Kohn, 2012; suppression also occurs for shorter timescales of adaptation, as documented in the summary figure 2 of Patterson et al., 2013). The model reproduced this suppressive effect (Figure 4B). This can be understood by considering the inferred probability of dependence and its influence on the divisive normalization. The probability inferred by the model increased with the similarity between adapter and test orientations (Figure 4C), resulting in large response suppression around the peak of the tuning curve and weak or no suppression toward the tails (Figure 4B).

We then considered the case in which the adapter orientation does not match the preferred orientation of the neuron. Experimentally, in this case, the tuning curve is both reduced in amplitude and shifted away from the adapter, an effect termed repulsion (Figure 4D; experimental data plotted from Müller et al., 1999; see also repulsion in other data across a range of timescales and adapting orientations on the flank in Dragoi et al., 2000, 2002; Felsen et al., 2002; Patterson et al., 2013; Wissig & Kohn, 2012). Note that the model is limited in predicting tuning curves to adapter orientations matched to one of four filter orientations detailed in the Methods, thus, the difference in adapter orientation between the plotted experimental data of Müller et al. (1999) and modeling predictions (14° in Figure 4D and 45° in Figure 4E). The repulsion could be explained qualitatively by our model (Figure 4E), because the dependence probability (and therefore the degree to which normalization was engaged) was determined by the match between test and adapter. To understand the model behavior, first recall that the model uses four normalization pools each with a different orientation tuning. The adapter drives most strongly the normalization pool with matching preferred orientation (0° in Figure 4B; 45° in Figure 4E), regardless of the test stimulus. However, the normalization signal computed by such pools is used to normalize the neural response only to the degree that test and adapter are inferred dependent. Because the probability of dependence is highest when the adapting and test stimuli are matched in orientation (Figure 4F), adaptation is stronger when test stimuli are closer to the adapter. Our modeling framework thus provides a normative explanation from movie statistics for stimulus-specific adaptation (Solomon & Kohn, 2014).

Long-term adaptation and updating the model priors

We have focused so far on some classical adaptation experiments that examined how visual cortical responses to test stimuli are influenced by repeated exposure to an individual stimulus over a short period of time, on the scale of hundreds of milliseconds. In real life, we are constantly exposed to stimuli, and it is likely that the cortex is sensitive to different timescales through different mechanisms. Here we consider that the visual cortex keeps track of the changing environmental statistics by updating its prior knowledge about the environment. Specifically, we study how prolonged exposure to visual stimuli affects model responses by updating the prior probability of dependence. We first describe how we extend our model to capture longer-term adaptation and then apply it to shifts in contrast response curves with adaptation and to other recent data (Benucci et al., 2013). In the Discussion section, we also consider the implications of our model updating on capturing stronger changes in tuning curve suppression and repulsion with longer adaptation time.

In our model thus far, for any short sequence of eight adapting frames, the probability of dependence is obtained by combining the prior probability learned from natural movies with the evidence provided by the adapting and test frames (Figure 2A). This short-term model, which captures dependencies only over a small number of frames in the past, is unable to reproduce effects that require longer timescales of adaptation. We posit that on longer timescales of adaptation, the system is no longer just tuned to the statistics of natural movies but rather updates its model based on the current statistics of the experimental stimuli. We therefore extended the model and allowed it to update the prior probability of dependency as each new set of stimuli was presented (Figure 2B).

Contrast response following long-term adaptation

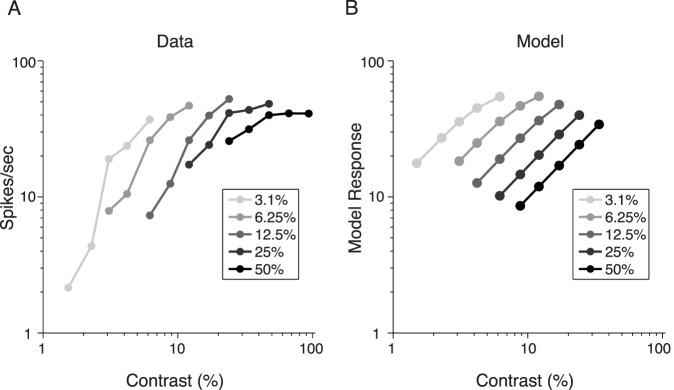

We next tested our model's ability to account for effects of contrast adaptation. To simulate the effects seen in contrast adaptation, we held the orientation of the grating fixed while varying the contrast of the test and adapter gratings. Figure 5A depicts the experimental findings for contrast adaptation that is typically measured on the order of seconds, namely, that increasing the contrast of the adapter shifts the contrast response curve down and to the right (Albrecht et al., 1984; Ohzawa et al., 1985), thereby reducing the contrast sensitivity of neurons.

Figure 5.

Contrast response functions after adaptation. (A) Neuronal response, in spikes per second, of a complex V1 neuron after adaptation to gratings of various contrasts for 80 s. Each line corresponds to a different grating contrast as indicated by the legend. After adaptation, the neurons were presented with test gratings of contrasts ranging from 1.5% to 94%. Adapted from Ohzawa et al. (1985). (B) Model predictions for the same paradigm as used to generate the experimental results in (A).

To replicate this paradigm with our model, we repeated the adapting contrast for 30 frames, which for our movies approximates as 1 s. As each new stimulus was presented, the model updated its prior probability of dependency, based on the posterior inferred from the previous stimuli (Figure 2B). The model is able to generate similar qualitative results to the experimental data (Figure 5B) because of the role contrast plays in divisive normalization as well as the updating of the prior probability of dependency. As the adapting contrast increases, so too does the magnitude of the normalization signal (as expected by divisive normalization frameworks). This is due to a high probability of dependency between the adapting and test stimuli in our model following the adaptation. The repeated exposure of the model to the adapting contrast results in a prior probability heavily weighted toward the dependent regime, so a high-contrast past is inferred to be dependent with any contrast in the present. Note that the slope of the curve is different between the model and neuron because of the choice of prior in the GSM model (Rayleigh for computational tractability), which influences the exponent of the normalization term and therefore the slope of the contrast response function.

Without this updating in the model, when there is a significant difference (at least fourfold) between the adapter and test contrasts, there is a decreased predicted probability of dependence between the past and the present. This can lead to a situation in which a moderate contrast adapter may facilitate a low-contrast test on short timescales in our model. We return to this in the Discussion section.

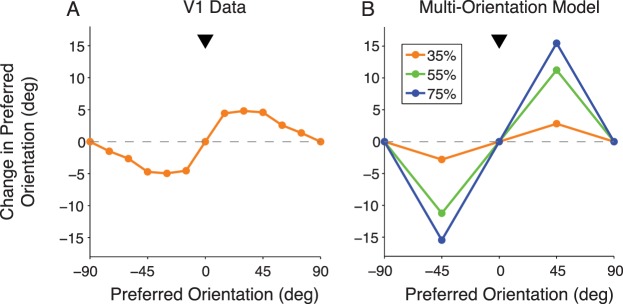

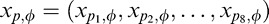

Population-level response equalization with longer-term adaptation

We next considered some recent data (Benucci et al., 2013) in which a longer-term adaptation paradigm has been used experimentally to test adaptation to a distribution of environmental statistics, rather than to a single stimulus. Benucci et al. (2013) considered an ensemble of gratings of different orientations, presented over several seconds. Each orientation was presented at random times with equal frequency except for one orientation (the biased stimulus orientation), which was shown with a greater frequency. To measure the effects on V1 populations, Benucci et al. (2013) measured the tuning curves for each group of neurons sharing a specific orientation preference. When the stimuli were drawn from a distribution biased to a single orientation, the adapted responses still reproduced the classical effects of tuning curve suppression and repulsion (Figure 6A).

Figure 6.

Effect of bias in the stimulus ensemble on shifts in tuning curve peak. (A) V1 neural responses after adaptation to stimuli that are shown more frequently at 0° (arrowhead; 35% bias) than at other orientations. Each point represents the average change in preferred orientation across all neurons with preadaptation preference indicated in the abscissa. Data adapted from Benucci et al. (2013). (B) Model predictions for three different frequency biases (indicated in the inset).

We first verified that for the biased stimuli, as for the classical adaptation paradigm (which may be seen as an extreme 100% bias case, because a single orientation is presented 100% of the time and all other orientations are not presented at all), our model learns to update the prior probability of dependence over time, leading to an increased probability for the normalization group whose orientation preference matches the biased orientation in the stimulus ensemble. We next confirmed that our model achieves both repulsion and suppression with this paradigm. In addition, the model is able to predict how the strength of the repulsive shift is affected by the degree of bias to a single orientation (Figure 6B). This is a prediction of the model that has yet to be tested experimentally.

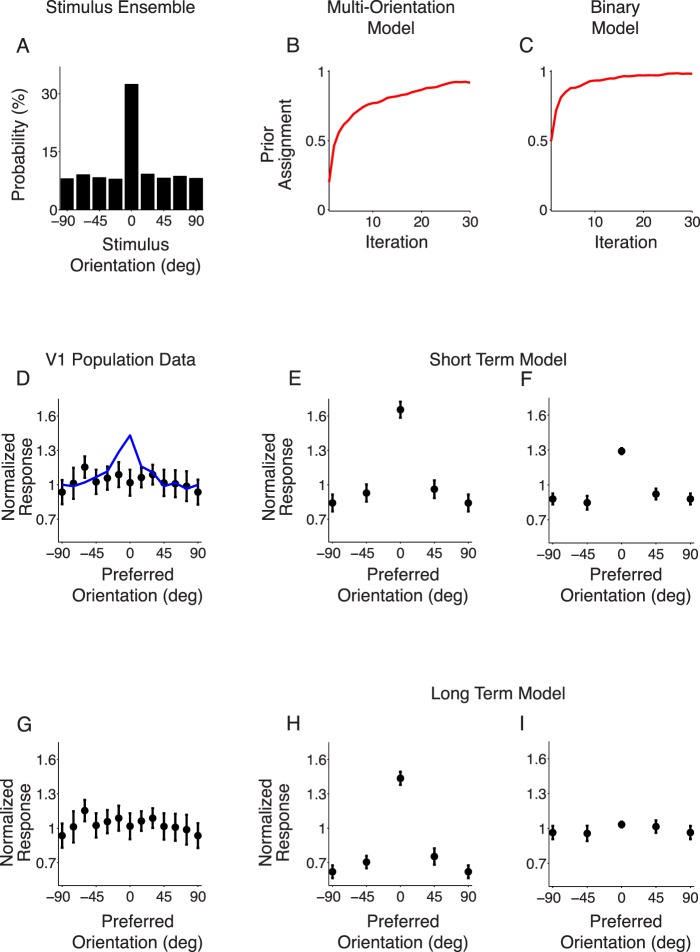

The experimental paradigm described above offers a richer set of data beyond the classical suppression and repulsion. In this paradigm, a stimulus of a specific orientation (the adaptive stimulus) is shown with a greater frequency than other orientations. One of the main experimental findings of Benucci et al. (2013) was that the effects of adaptation on tuning curves compensate exactly for the overrepresented stimulus. The time average of population responses to stimuli drawn from the distribution biased to a single orientation was not significantly different from that of stimuli drawn from the uniform distribution, a phenomenon that has been termed equalization (Benucci et al., 2013; Figure 7G). Stated differently, on one hand, the biased orientation was presented more frequently than the other orientations, but on the other hand, the response of neurons preferring the biased orientation was suppressed more strongly than neurons preferring other orientations. These two effects counterbalanced each other, such that the average response over the entire stimulus ensemble did not differ between neurons with different orientation preferences.

Figure 7.

Neuronal response equalization. (A) The probability of presentation for different stimulus orientations. Zero degrees is shown 35% of the time; the others are shown less than 10% of the time. (B, C) The prior probability of dependence for the biased orientation as a function of iteration number for the two models. (D) Experimental finding showing V1 population responses to gratings drawn from the biased distribution, normalized by the mean responses to gratings drawn from the uniform distribution. Error bars represent ±1 SD. The blue line is a prediction of normalized response from a model without adaptation. Adapted from Benucci et al. (2013). (E, F) Population responses of the short-term model to the biased stimulus ensemble, normalized to the uniform ensemble as in (D). We simulated one experiment by presenting the stimulus for 300 frames (roughly 10 s), averaging the responses and normalizing by the uniform distribution. We then repeated the experiment six times; the error bars represent ±1 SD across repeated experiments, similar to (D). (G) V1 population responses replotted from (D). (H, I) Same as (E, F) but for the long-term model outlined in Figure 2B. (E, F, H, I) Overall suppression strength in the model was controlled by a free parameter (Methods), which we set here to match the suppression level in Benucci et al. (2013) when both adapter and test have an orientation of 0°.

To simulate the equalization experiment, we created grating stimulus ensembles drawn from a biased distribution (Figure 7A). We then exposed the model to both the biased distribution and the uniform distribution. As expected from Benucci et al. (2013; Figure 7D, blue curve), using the short-term model to predict equalization failed to adequately suppress the biased orientation. As a result, the response of neurons preferring the biased orientation was much larger than neurons with different preferences, as illustrated by the central peak in Figure 7E.

We therefore asked whether the long-term model could account for population-level equalization. We started from the parameters learned initially on the natural movie ensemble (approximately a flat prior probability of dependence across orientations) and updated the prior as the biased stimulus ensemble was presented. As shown in Figure 7B, the prior correctly increased for the biased orientation. The prior for the orthogonal orientation decreased, whereas it did not change as much for the intermediate orientations (not shown). This updated prior led to stronger suppression overall (compare Figure 7H to the short-term model, Figure 7E). Nonetheless, the model was unable to equalize population responses, as illustrated by the central peak in Figure 7H.

To understand this failure of the model, we must first note that the findings in Benucci et al. (2013) have been described as arising from a combination of stimulus-specific and neuronal-specific adaptation. First, the responses to test stimuli that are matched to the biased orientation are more strongly suppressed than to stimuli with different orientations; this effect is consistent with sensitivity to the statistical similarity of the past and present inputs. Furthermore, regardless of the test stimulus, the responses of neurons whose preference matches the biased orientation are more strongly suppressed than those of other neurons; this effect has been ascribed to neuronal fatigue (i.e., a decrease in responsivity for neurons that are stimulated more often or more strongly).

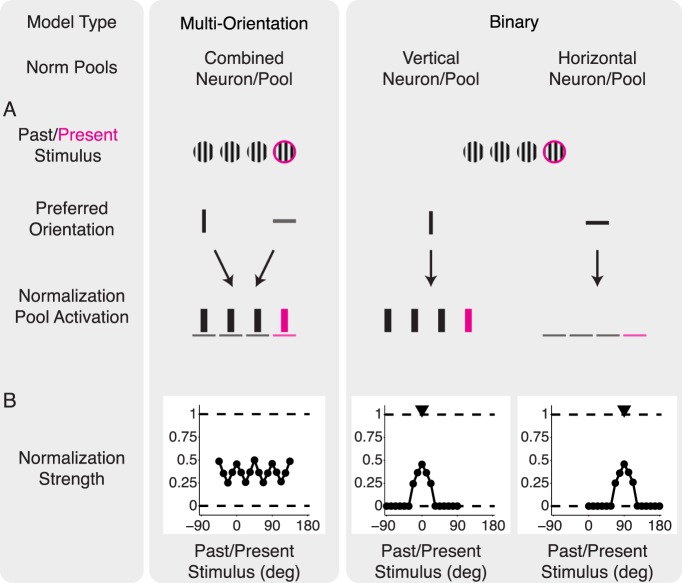

Equalization via neuronal-specific normalization model

The model we have considered thus far, which we have denoted the multiorientation model, lacks the second component of neuronal-specific adaptation and thus cannot capture equalization. More specifically, the model uses four normalization pools, each comprising a set of past RFs with a given orientation (Figure 8A, left). When a short movie sequence is presented, the model computes the probability of dependence between the present RFs and the past RFs of each of the normalization pools. Subsequently, the response of the model neuron is normalized by each of these pools according to the estimated probabilities. The resulting normalization signal depends only on the properties of the stimulus and is identical for all RFs in the present, regardless of their preferred orientation (Figure 8B, left). For example, if both the adapter and test stimuli are vertical, then both a neuron with preferred vertical orientation and a neuron with preferred horizontal orientation will be suppressed divisively by the vertical normalization pool (Figure 8A, left). As a consequence, even with the biased stimulus ensemble, model neurons tuned to the biased orientation are not suppressed more strongly than other neurons, and population responses cannot be equalized (Figure 7H).

Figure 8.

Comparison of normalization signals in the binary model and multiorientation model. We include only two filter orientations for illustration purposes, although the models use four orientations as detailed in the Methods. (A) Both models were exposed to a single set of past (black) and present (magenta) vertical stimuli (top row). We consider, for each model, two model neurons with vertical and horizontal preferred orientation (middle row). Bottom row: Two normalization pools are illustrated, with different orientation preferences. Bar thickness represents a cartoon of the strength of the output of the filters in each normalization pool. In the multiorientation model (left), both neurons are normalized by the most active normalization pool. In the binary model (right), each neuron has its private normalization pool, and therefore the normalization signal is stronger for the vertical neuron. (B) Normalization strength, which is the amount of suppression of the postadaptation response relative to the preadaptation response, , for each normalization pool at a wide range of past/present stimuli orientations. The multiorientation model (left) shows consistently high normalization strength across a wide range of orientations. In the binary model (right), only the ranges around the preferred orientations (arrowheads) show substantial normalization. The unevenness in the multiorientation model curve is due to using only four neuronal orientations, as detailed in the Methods.

, for each normalization pool at a wide range of past/present stimuli orientations. The multiorientation model (left) shows consistently high normalization strength across a wide range of orientations. In the binary model (right), only the ranges around the preferred orientations (arrowheads) show substantial normalization. The unevenness in the multiorientation model curve is due to using only four neuronal orientations, as detailed in the Methods.

We therefore considered a different choice of divisive normalization pools, which we denote the binary model (see the Methods section for details). In this version, each model neuron has a private normalization pool with an orientation that is matched to its preferred orientation (Figure 8A, right). The divisive normalization signal for each neuron is still weighted by the statistical similarity between past and present stimuli, but it is tuned only to the neuron's preferred orientation. Therefore, each neuron in this model has a different tuning of the normalization signal (Figure 8B, right). For example, if both the adapter and test stimuli are vertical, this will result in strong suppression for a vertical neuron but not for a horizontal neuron (Figure 8A, right). This binary model implements a neuronal-specific normalization.

We then tested whether the binary model with the biased stimulus ensemble could account for population-level equalization. We started from the prior probability of dependence learned initially on the natural movie ensemble. In this case, each neuron has its own private normalization pool. First, we computed the averaged responses to the biased stimulus ensemble for the short-term model (i.e., without updating the prior). Figure 7F shows that, in this model, the difference in the average responses across neurons was smaller than in the multiorientation model (Figure 7E). This is because the neuron whose preference matched the biased orientation was driven more strongly by the stimuli, but also suppressed more strongly by the context, as explained above. However, the population responses were not precisely equalized.

We then considered the long-term version of the binary model. When we updated the prior to reflect the biased ensemble, the prior correctly increased for the neuron whose preference matched the biased orientation (Figure 7C). As a result of the prior updating, suppression increased for the neuron whose preference was matched to the biased orientation more than for the other neurons (not shown); this relative increase in suppression reflected the stimulus ensemble statistics and led to equalization of population responses (illustrated by the lack of a central peak in Figure 7I).

To further demonstrate that equalization was due to the correct updating of the prior, we also considered stimulus ensembles with different amounts of bias. We found that ensembles with larger bias led to higher values for the updated prior of dependence reflecting precisely the ensemble bias level (Supplementary Figure S1D, E); this in turn led to stronger relative suppression for the matched neuron, and therefore equalization was maintained for a range of bias levels (Supplementary Figure S1A, B). However, when the bias became too large, the updated prior saturated (i.e., it reached a value of 1; Supplementary Figure S1F), and therefore the relative suppression could not be further increased, leading to a breakdown of equalization (Supplementary Figure S1C) similar to the experimental observation of Benucci et al. (2013). Changes in contrast follow the same response trend as changes in bias. Increasing contrast generally leads to larger probability of dependence and therefore stronger suppression, as we have seen in the spatial domain (Coen-Cagli et al., 2012).

Although the binary model with an updating prior led to population-level equalization, we found that it could not account for tuning curve repulsion (Supplementary Figure S2E). This is because the binary model captures neuronal-specific but not stimulus-specific adaptation, because of the choice of the normalization pool and how the statistical similarity between adapter and target is computed. In particular, for the binary model, the inferred probability of dependence is determined by how similar both the adapter and test stimulus are to the neuron's preferred orientation. For example, in Supplementary Figure S2E, F, we considered a neuron tuned for vertical orientation (0°) and used an adapter fixed at 45°; in this case, regardless of the test stimulus, the inferred probability was close to 0, because the adapter was largely different from the neuron's preference (Supplementary Figure S2F), and therefore test stimuli at +45° or −45° did not lead to different responses (Supplementary Figure S2E).

In summary, our modeling framework explained both short-term adaptation effects on tuning curves and longer-term adaptation effects on contrast and population responses, as sensitivity of the visual cortex to the statistics of the visual environment on different timescales. However, each set of effects could be captured only by a particular implementation of the model (and a respective normalization signal that is either stimulus specific or neuronal specific), an issue that we further address in the Discussion.

Discussion

We have considered the link between movie statistics, divisive normalization models, and adaptation phenomena in primary visual cortex. Our approach was to learn the parameters of an interpretive model of adaptation based on scene statistics, an approach more prevalently used in the spatial context domain (Coen-Cagli et al., 2012; Rao & Ballard, 1999; Schwartz & Simoncelli, 2001; Spratling, 2012). Although descriptive models address what neural circuits do and mechanistic models describe how they do it, interpretive models focus on why the neural systems operate in the way they do (Dayan & Abbott, 2001). Descriptive and mechanistic models typically rely on data or circuitry, respectively, as the basis for their construction. Interpretive models (which may be built on top of descriptive or mechanistic approaches) use computational principles, such as efficient coding or Bayesian inference, as their foundation. Here we assume that when confronted with sensory stimuli over time, the brain attempts to reduce the dependency in the visual inputs. This is implemented in our model by performing what amounts to Bayesian inference based on its prior belief about the world and the likelihood for the current stimulus. In our modeling framework, inference amounts to a generalized form of divisive normalization.

Here we have focused on the implications of this scene statistics approach for adaptation. Many models of adaptation are descriptive in nature; they describe observed effects using a parameterized function that can be fit to data. Descriptive models of adaptation have included divisive normalization, a ubiquitous computation in neural systems (Carandini & Heeger, 2012; Dhruv, Tailby, Sokol, & Lennie, 2011; Heeger, 1992). There have also been other models of adaptation that are more mechanistic in nature (Cortes et al., 2012; Teich & Qian, 2003). Our model does not challenge descriptive or mechanistic models of temporal contextual effects but rather incorporates some of their aspects, such as divisive normalization, and provides a principled explanation for why cortex performs such computation.