Abstract

Fearful faces are believed to be prioritized in visual perception. However, it is unclear whether the processing of low-level facial features alone can facilitate such prioritization or whether higher-level mechanisms also contribute. We examined potential biases for fearful face perception at the levels of perceptual decision-making and perceptual confidence. We controlled for lower-level visual processing capacity by titrating luminance contrasts of backward masks, and the emotional intensity of fearful, angry and happy faces. Under these conditions, participants showed liberal biases in perceiving a fearful face, in both detection and discrimination tasks. This effect was stronger among individuals with reduced density in dorsolateral prefrontal cortex, a region linked to perceptual decision-making. Moreover, participants reported higher confidence when they accurately perceived a fearful face, suggesting that fearful faces may have privileged access to consciousness. Together, the results suggest that mechanisms in the prefrontal cortex contribute to making fearful face perception special.

Keywords: fearful face perception, perceptual decision-making, metacognition, dorsolateral prefrontal cortex (DLPFC), voxel-based morphometry (VBM)

Introduction

Many studies suggest that fearful face perception is special, in that a fearful face is perceived more easily or more quickly than faces with neutral or other emotional expressions. For instance, some studies have shown that fearful faces were more likely to be perceived when attention was directed away (e.g. attentional blink; Milders et al., 2006; Stein et al., 2009). Other studies have shown that fearful faces are more resistant to suppression by visual noise or dichoptic suppression (Yang et al., 2007; Amting et al., 2010; Stein et al., 2010, 2014; Stienen and de Gelder, 2011).

Yet, it is unclear whether such prioritized perception of fearful faces is merely due to lower-level visual processing. Although previous studies carefully controlled for low-level physical features such as luminance contrast and spatial frequency, bottom-up processing of local features such as the increased eye white exposure may be sufficient to explain why fearful faces are easier to see. For instance, Yang et al. (2007) showed that fearful faces resisted continuous flash suppression better than other faces. Importantly, however, the effect was preserved even when the faces were inverted. These results seem to be compatible with the interpretation that it is local features, rather than Gestalt facial processing, that account for the prioritized perception of fearful faces.

On the other hand, it is also clear that high-level mechanisms contribute to visual perception in general. For example, it has been shown that mechanisms in the prefrontal cortex contribute to our perception by forming perceptual decisions, that is, by statistically evaluating the most likely identity of the perceived object given the accumulating sensory evidence encoded in lower sensory areas (Gold and Shadlen, 2007). This highlights the importance of examining high-level processing in any attempt to thoroughly understand the mechanisms underlying visual perception. Yet, while low-level mechanisms have been the focus of previous studies (Yang et al., 2007), the contribution of high-level mechanisms to fearful face perception has rarely been investigated. We speculated that these high-level mechanisms may also contribute to prioritizing fearful face perception, by biasing perceptual decisions in favor of fearful faces.

Another high-level mechanism involved in visual perception is metacognition, with which we monitor our ongoing perceptual decisions and derive a level of subjective confidence when we are likely to be correct. It has been shown that fearful faces are more likely to reach conscious perception (Yang et al., 2007; Amting et al., 2010; Stein et al., 2010, 2014; Stienen and de Gelder, 2011), which in turn has been linked to metacognition (Lau and Rosenthal, 2011; Ko and Lau, 2012). Thus, we also speculated that fearful faces may have a prioritized access to the metacognitive system, such that fearful faces may be perceived with higher confidence.

The possibility that high-level mechanisms contribute to prioritizing fearful face perception is not incompatible with low-level accounts. Given that fearful faces could forecast approaching dangers in the environment, there may be a survival advantage in prioritizing perception of fearful faces at both the higher and lower levels.

Therefore, in this study, we examined potential biases for fearful face perception at the levels of perceptual decision-making and metacognition. To selectively examine biases due to high-level mechanisms, we carefully controlled for lower-level visual processing sensitivity by titrating the luminance contrasts of backward masks, as well as the emotional intensity expressed in fearful, angry and happy faces. We also used voxel-based morphometry (VBM) to examine the involvement of dorsolateral prefrontal cortex (DLPFC), which plays an important role in both perceptual decision-making (Kim and Shadlen, 1999; Leon and Shadlen, 1999; Heekeren et al., 2006; Philiastides et al., 2011) and metacognition (Lau and Passingham, 2006; Lau and Rosenthal, 2011; Fleming et al., 2012).

Methods

Participants

Forty-one students (17 males, mean age 23.2) from Columbia University participated in this study. All participants were recruited to complete two sessions conducted on two separate days, once with the male image set and once with the female image set (order counterbalanced; see Stimuli for details). However, only 24 participants completed both sessions. Of the remaining 17 participants, 13 were tested only with the female face image set and 4 were tested only with the male face image set. Data collection was stopped once the number of participants who completed both sessions met the sample size, which was predetermined on the basis of previously reported, similar psychophysics experiments (Koizumi et al., 2015). For 24 of the 41 participants (14 completed both image sets, 2 completed only the male image set and 8 completed only the female image set), we had access to structural MRI images of their brains (10 males, mean age 23.7) from another experiment not reported here; their data were therefore further used in the VBM analysis. All participants received $10–15 per hour of participation. Participants completed a written informed consent form prior to participation. The study was approved by Columbia University’s Committee for the Protection of Human Subjects.

Stimuli

We used the NimStim stimulus set (Tottenham et al., 2009) to select the images of fearful, angry, happy and neutral faces, posed by one male and one female model (model numbers 34M and 07F) [for demonstration in Figure 1, another model (03F) with publication permission was used]. Only images with an open mouth were selected so that participants could not perform the tasks solely based on whether the mouth was open in that image. The images were morphed from neutral to fearful, angry and happy expressions of the same model using the Norrkross MorphX software version 2.11. Each morph sequence (e.g. neutral to fear) had 99 intervals, producing a continuum of 100 images with a gradual increment of emotional intensity. The morphed images were converted into gray scale, and their luminance contrast, spatial frequency spectrum and overall luminosity were equated across the entire image set with the SHINE toolbox (Willenbockel et al., 2010) implemented in Matlab. The image contrast was 30% of the original images. The size of the image was 8.4° × 6.5° of visual angle at a viewing distance of 75 cm.

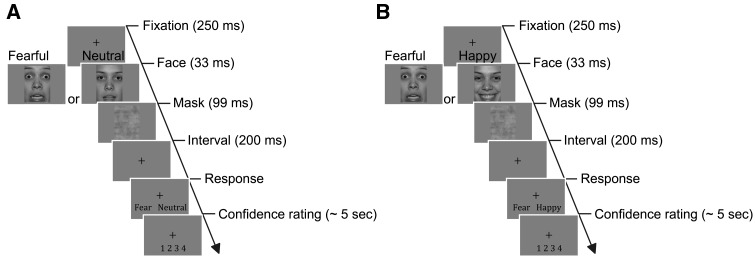

Fig. 1.

Schematic depiction of a trial sequence for (A) the Detection Tasks and (B) the Discrimination Tasks. (A) An example of a trial in the Detection Task, where either an emotional face (fearful in this case) or neutral face was presented. (B) An example trial in the Discrimination Task (fearful/happy in this example). For visualization purposes, the face images here are presented with the maximum emotional intensity (i.e. 100%) and high contrast; in the experiment these parameters were titrated to achieve near-threshold performance levels.

The face image was followed by a mask (see Experimental Design, Figure 1) to manipulate task difficulty; the mask was not meant to completely abolish awareness of the face. The mask images were created in Matlab by scrambling the phase of the to-be-masked face image from that trial while maintaining its other physical properties (e.g. luminosity and spatial frequency spectrum).
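The phase-scrambling step can be illustrated with a minimal sketch. This is not the authors' Matlab code: the function name `phase_scramble`, the use of NumPy and the random stand-in image are assumptions made for illustration. It keeps the Fourier amplitude spectrum of an image and randomizes only the phases.

```python
import numpy as np

def phase_scramble(image, rng):
    """Return a phase-scrambled copy of a 2-D grayscale image.

    The Fourier amplitude spectrum (and hence the mean luminance and the
    spatial-frequency content) is kept; only the phases are randomized.
    """
    spectrum = np.fft.fft2(image)
    # Use the phases of a real-valued noise image so that the added phase
    # offsets are conjugate-symmetric and the result stays real-valued.
    noise_phase = np.angle(np.fft.fft2(rng.random(image.shape)))
    noise_phase[0, 0] = 0.0  # leave the DC term (mean luminance) untouched
    scrambled = np.abs(spectrum) * np.exp(1j * (np.angle(spectrum) + noise_phase))
    return np.fft.ifft2(scrambled).real

rng = np.random.default_rng(0)   # fixed seed, for reproducibility only
face = rng.random((64, 48))      # hypothetical stand-in for a face image
mask = phase_scramble(face, rng)
```

Pixel values of the scrambled image can fall outside the original range, so a real pipeline would likely rescale or clip them before display.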

Experimental design

Detection calibration

Participants first went through Detection Calibration, in which they performed fearful, angry and happy face detection tasks, in orders counterbalanced across participants. The aim of calibration was to identify a threshold-level intensity for each emotion so that the participants would perform equally well (i.e. same sensitivity) in detecting faces with three different emotions. This allowed us to assess detection biases while making sure that such biases were not due to differences in the emotional intensity between the faces. During calibration, we titrated the emotional intensity of the target face (see Stimuli earlier) with the Quest threshold estimation procedure (Watson and Pelli, 1983), so that the participants were expected to perform at an accuracy of about 75% in a 2-interval forced-choice (2IFC) detection task (details later). Each task had 16 practice trials and 96 calibration trials. The calibration trials consisted of two randomly interleaved Quest sequences of 48 trials each. Each Quest sequence independently estimated the threshold emotional intensity, and the average of the two estimates was used in the following Discrimination Calibration and experimental tasks. The mean calibrated morph intensities for fearful, angry and happy faces were M = 48.63 ± s.d. 27.21, M = 51.38 ± 27.29 and M = 40.23 ± 19.50, respectively. A one-way analysis of variance (ANOVA) revealed a significant main effect of Emotion [F(2, 39) = 6.88, P = 0.003, partial η2 = 0.26]: the calibrated morph intensity was higher for an angry face (P = 0.002, Bonferroni corrected) and tended to be higher for a fearful face (P = 0.06), relative to a happy face.

On each trial, one neutral face and one target emotional face (fearful, happy or angry, in different blocks) were presented consecutively in random order. A trial started with a face (33 ms) followed by a mask (99 ms). After a blank (750 ms), a second face (33 ms) was followed by another mask (99 ms). The mask contrast was matched with the contrast of the face image and was kept constant throughout Detection Calibration. To avoid overlap between the locations of the two faces, the location of the first face was jittered from the center of the screen in a random direction by a Euclidean distance of 1.2°, and the location of the second face was jittered by the same distance in a different direction. Immediately after the second mask, the key assignment appeared in the lower portion of the screen. Participants used the 1- or 2-key to report the perceived sequence of faces, i.e. whether the emotional face came first or the neutral face came first. The key assignment was counterbalanced across participants. When a response was registered or a 5 s period elapsed from the offset of the second mask, a fixation cross (0.35°) was presented for 250 ms, followed by the next trial.
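The logic of the adaptive titration can be sketched as a Bayesian update of a posterior over candidate thresholds. This is a simplified illustration and not the Quest implementation of Watson and Pelli (1983): the psychometric function, its `spread`, the prior, the lapse allowance and the response lists are all assumptions, and the two sequences are run one after the other rather than interleaved.

```python
from statistics import NormalDist

_PHI = NormalDist().cdf
GRID = [float(t) for t in range(1, 101)]   # candidate thresholds (morph %, assumed)

def p_correct(intensity, threshold, spread=10.0):
    """2IFC psychometric function that equals 0.75 at intensity == threshold."""
    p = 0.5 + 0.5 * _PHI((intensity - threshold) / spread)
    return min(p, 0.99)                    # small lapse allowance (assumed)

def make_prior(center=50.5, sd=25.0):
    """Discretized Gaussian prior over the threshold grid."""
    weights = [NormalDist(center, sd).pdf(t) for t in GRID]
    total = sum(weights)
    return [w / total for w in weights]

def update(posterior, intensity, correct):
    """Bayes update of the threshold posterior after one trial."""
    like = [p_correct(intensity, t) if correct else 1.0 - p_correct(intensity, t)
            for t in GRID]
    unnorm = [p * l for p, l in zip(posterior, like)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def estimate(posterior):
    """Posterior-mean threshold; also used as the next test intensity."""
    return sum(t * p for t, p in zip(GRID, posterior))

def run_sequence(responses):
    """Run one sequence from a list of True/False (correct/incorrect) responses."""
    posterior = make_prior()
    for correct in responses:
        posterior = update(posterior, estimate(posterior), correct)
    return estimate(posterior)

# Two sequences with made-up responses; their estimates are then averaged.
seq_a = run_sequence([True, True, False, True, True, False, True, True])
seq_b = run_sequence([True, False, True, True, True, True, False, True])
threshold = (seq_a + seq_b) / 2
```

Correct responses shift the posterior toward lower thresholds and incorrect responses toward higher ones, so the tested intensity converges on the level that yields about 75% correct under the assumed psychometric function.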

Discrimination calibration

With the emotional intensity levels determined in Detection Calibration, participants went through Discrimination Calibration in which they performed three tasks to discriminate fearful/angry, fearful/happy and happy/angry emotion pairs, in orders counterbalanced across participants. The aim of this additional calibration was to identify a threshold contrast for the backward mask for each emotion pair, so that the participants would discriminate each pair with similar accuracy. The reason is that even when each emotional face is similarly detectable, discrimination between each face pair may still show different sensitivities. The luminance contrast of the backward mask was titrated with the Quest threshold estimation procedure (Watson and Pelli, 1983) to achieve an accuracy of 75% in a 2IFC task. The procedure was similar to Detection Calibration. In each calibration task, a total of 96 trials were divided into two randomly interleaved Quest sequences of 48 trials. Each sequence independently estimated the threshold mask contrast, and the average of the two estimates was used in the experimental Discrimination Tasks.

The mean calibrated Michelson contrasts of the backward mask in the discrimination tasks with fearful/angry, fearful/happy and happy/angry pairs were M = 34.06 ± s.d. 13.60, M = 26.96 ± 13.82 and M = 34.62 ± 13.78, respectively. A one-way ANOVA revealed a significant main effect of Emotion [F(2, 39) = 5.65, P = 0.007, partial η2 = 0.23]: the mask contrast was significantly lower for the fearful/happy pair than for the fearful/angry pair and the happy/angry pair (P = 0.033, P = 0.019, respectively).

Detection tasks

With the estimated threshold intensity for each emotion, participants went through three Detection Tasks (fearful/neutral, angry/neutral and happy/neutral) and three Discrimination Tasks (fearful/angry, fearful/happy and happy/angry), in orders counterbalanced across participants. A mask with the same contrast as the face images was used in the Detection Tasks, and the mask contrasts estimated in Discrimination Calibration were used in the Discrimination Tasks. In Detection Tasks (Figure 1A), participants were presented with either a neutral face or an emotional face expressing a fearful, angry or happy emotion. Each trial started with a face (33 ms) followed by a mask (99 ms). Immediately after the mask, a key assignment was shown in the lower portion of the screen. Participants used the 1- or 2-key to indicate which of the two face images (e.g. fearful or neutral) was perceived. The key assignment was counterbalanced across participants. At 200 ms after the response, a key assignment for confidence rating was shown in the lower portion of the screen. Participants rated how confident they were that their response was accurate. They used the 1- to 4-keys on a keyboard to indicate from lowest to highest confidence. When confidence was rated or 5 s elapsed from the mask offset, a fixation cross (0.35° of visual angle) was presented for 250 ms, followed by the next trial. The location was matched for the face and mask images within a trial but was jittered by a distance of 1.2° in a random direction across trials.

Discrimination tasks

The procedure for the Discrimination Tasks (Figure 1B) was similar to that of the Detection Tasks. On each trial, participants were presented with one of the face images from the emotion pair in that task (e.g. fearful/happy). They indicated their perceptual responses (e.g. fearful) and confidence levels in the same manner as in the Detection Tasks.

Analyses

Behavioral analyses

To assess perceptual sensitivity, d’a was calculated for the Detection Tasks and Discrimination Tasks with standard signal detection theory (SDT) methods (Macmillan and Creelman, 2005). We used d’a, rather than the more commonly used d’, because d’a is adjusted for the unequal variances of the internal response distributions for the target (e.g. fearful face) and noise (e.g. neutral face), which are often observed in detection tasks (Macmillan and Creelman, 2005). The criterion measure, c, was also calculated for the Detection Tasks and Discrimination Tasks with SDT methods (Macmillan and Creelman, 2005). In the subsequent analyses, performance with the female and male image sets was combined for the participants who completed the experiment with both image sets, as the two were generally similar to each other (Supplementary Tables S1–S3) unless otherwise stated in Results.
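As an illustration of these measures, the sketch below computes d’a and c from a hit rate and a false alarm rate using the textbook formulas d’a = sqrt(2 / (1 + s²)) · [z(H) − s · z(F)] and c = −0.5 · [z(H) + z(F)], where s is the z-ROC slope. Whether the authors used exactly this variant of c is an assumption, and the example rates are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

z = NormalDist().inv_cdf   # inverse of the standard normal CDF

def d_prime_a(hit_rate, fa_rate, slope=1.0):
    """Unequal-variance sensitivity d'a; `slope` is the z-ROC slope s."""
    return sqrt(2.0 / (1.0 + slope ** 2)) * (z(hit_rate) - slope * z(fa_rate))

def criterion_c(hit_rate, fa_rate):
    """Standard criterion c; negative values indicate a liberal bias."""
    return -0.5 * (z(hit_rate) + z(fa_rate))

# Hypothetical rates for two observers with the same sensitivity (d' of about 1):
neutral = (d_prime_a(0.6915, 0.3085), criterion_c(0.6915, 0.3085))
liberal = (d_prime_a(0.8413, 0.5000), criterion_c(0.8413, 0.5000))
```

With a slope of 1 the expression reduces to the familiar d’ = z(H) − z(F). The two hypothetical observers have matched sensitivity, but the second has a negative (liberal) criterion, which is the pattern the calibration procedure is designed to isolate.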

To examine metacognitive performance in the Discrimination Tasks, we calculated meta-d’ for each response type (e.g. response of perceiving a fearful face), which quantifies how well confidence ratings track perceptual accuracy over trials (Maniscalco and Lau, 2012, 2014), with code implemented in Matlab (http://www.columbia.edu/~bsm2105/type2sdt/fit_rs_meta_d_MLE.m). Meta-d’ was not calculated for the Detection Tasks, given that some methodological issues remain to be solved for that calculation (Maniscalco and Lau, 2014), namely that the equal-variance assumption is unlikely to be met in detection tasks, where the variances are typically unequal as mentioned earlier.

VBM analyses

Structural brain images were preprocessed with SPM8 (http://www.fil.ion.ucl.ac.uk/spm) in a similar manner to previous studies (Fleming et al., 2010; McCurdy et al., 2013): the T1 scan images were segmented into gray matter, white matter and cerebrospinal fluid. To enhance the accuracy of intersubject alignment, the gray matter images were aligned and warped to a template which was iteratively modified with the VBM diffeomorphic anatomical registration through exponentiated Lie algebra (DARTEL) algorithm (Ashburner, 2007). This DARTEL template was registered to Montreal Neurological Institute stereotactic space, and the gray matter images were modulated while their tissue volumes were preserved. A Gaussian kernel of 8 mm full width at half-maximum was used to smooth the images.

Given our a priori hypotheses, we selected regions of interest (ROIs) in DLPFC and Amygdala (Figure 4A). DLPFC was selected based on previous literature showing the brain sites important for perceptual decision-making (Heekeren et al., 2006; Philiastides et al., 2011). Amygdala was selected based on the literature showing its primary role in fearful face perception (Morris et al., 1996; Adolphs, 2008; Fusar-Poli et al., 2009). The ROI for Amygdala was defined using wfu PickAtlas Tool (http://fmri.wfubmc.edu/software/PickAtlas). For the localization of DLPFC, masks covering Brodmann’s areas (BA) 9 and 46 were created separately for the left and right hemispheres, using wfu PickAtlas Tool. Gray matter density within each ROI was calculated and extracted using SPM8 and Matlab (7.10.0). The calculated values were adjusted for the effects of total brain volume, gender and age and were entered into the subsequent analyses.

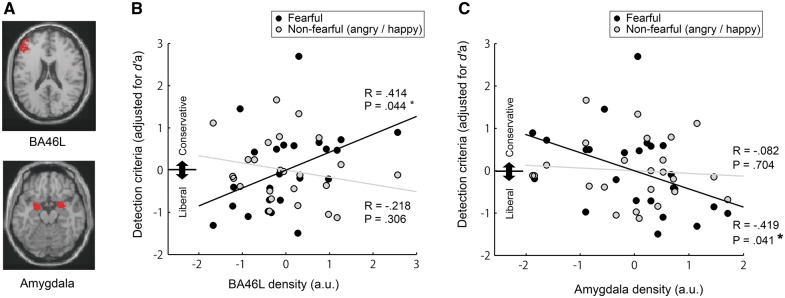

Fig. 4.

The correlations of detection criteria with the gray matter density in BA46L (DLPFC) as well as in Amygdala. (A) ROIs for BA46L and Amygdala. (B) Reduced BA46L density was related to more liberal criteria (i.e. smaller value), particularly for fearful face detection (i.e. bias to report perceiving a fearful face). Meanwhile, (C) increased Amygdala density was related to more liberal criteria in fearful face detection. (*P < 0.05).

Results

Detection tasks

First, we analyzed perceptual sensitivity, d’a (Figure 2A), in the three Detection Tasks with fearful, angry and happy faces. A one-way ANOVA with Emotion (fearful, angry and happy) as a within-subject factor showed no significant main effect [F(2, 39) = 2.32, P = 0.11, partial η2 = 0.11], indicating that detection d’a did not differ across the tasks. This means that perceptual sensitivity was successfully equated in Detection Calibration.

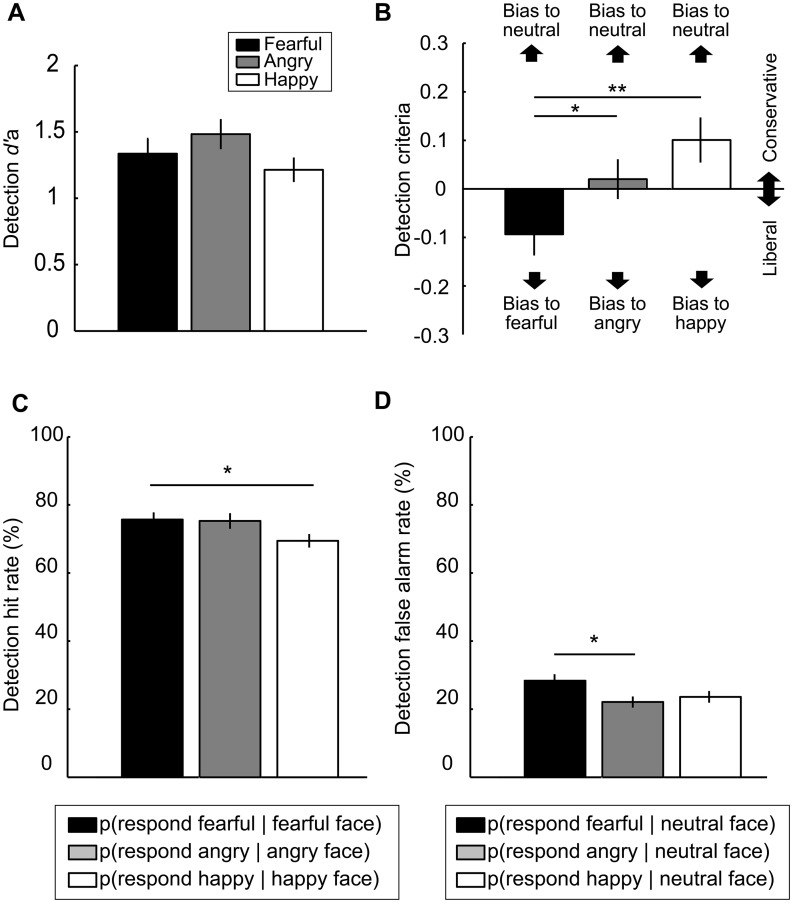

Fig. 2.

Results from Detection Tasks. (A) Detection d’a was similar across the three Detection Tasks (fearful, angry and happy). (B) The criterion for detecting a fearful face was significantly more liberal (i.e. smaller value) than the criteria for detecting an angry or happy face. (C) Hit rate was higher when detecting a fearful face than a happy face. (D) FAR was significantly higher when detecting a fearful face than an angry face. (**P < 0.01, *P < 0.05).

For detection criteria (Figure 2B), a one-way ANOVA showed a significant main effect of Emotion [F(2, 39) = 7.05, P = 0.002, partial η2 = 0.27]: the criterion in the fearful face Detection Task was more liberal (i.e. smaller value) than in the angry face Detection Task (P = 0.04) and the happy face Detection Task (P = 0.002). This liberal bias in fearful face detection was also apparent in the analyses of hit rate (HR) and false alarm rate (FAR) (Figure 2C and D, respectively). For HR, a one-way ANOVA revealed a significant main effect of Emotion [F(2, 39) = 4.73, P = 0.01, partial η2 = 0.20], because HR was higher in detecting a fearful face than a happy face (P = 0.02).

Likewise, for FAR, there was a significant main effect of Emotion [F(2, 39) = 4.68, P = 0.02, partial η2 = 0.19], because FAR was higher in detecting a fearful face than in detecting an angry face (P = 0.01).

Taken together, these results show that participants were more likely to report perceiving a ‘fearful’ face in the context of fearful face detection, regardless of whether a fearful face was physically presented.

Given one earlier study (Rahnev et al., 2011), one could suggest a somewhat trivializing account for the currently observed liberal bias in fearful face detection: Rahnev et al. (2011) showed that liberal criteria can be due to a large trial-by-trial variance of the internal perceptual response. If we assume the detection criterion is constant across task conditions, when the variance of internal response is large, this could mean that the response may frequently cross the detection criterion, leading to many ‘yes’ responses. Thus the observed liberal criterion for fearful face detection may be because fearful faces were associated with higher variance for the internal perceptual response.

However, analyses of the internal response variability did not support this account (Rahnev et al., 2011). Specifically, we examined the degree to which the variance of the target distribution (emotional faces) differed from the variance of the noise distribution (neutral faces) in each Detection Task. The ratio of the two variances is reflected by the slope of the standardized receiver operating characteristic (z-ROC) curve (Macmillan and Creelman, 2005). Thus, a slope value smaller than 1 indicates a larger variance for the signal than for the noise distribution. A one-way ANOVA on the estimated slope revealed a marginally significant main effect of Emotion [F(2, 39) = 2.70, P = 0.08]. This effect arose because the slope was relatively larger (i.e. closer to 1, where slope = 1 indicates equal variance), rather than smaller, in the fearful face Detection Task (M = 0.93 ± 0.29) compared with the Detection Tasks with happy faces (M = 0.83 ± 0.34) and angry faces (M = 0.89 ± 0.24), although the differences were not statistically significant (P = 0.75 and 0.49, respectively). These results suggest that the internal response for a fearful face had a relatively smaller variance, rather than a larger variance, compared with the responses for happy and angry faces. A simple account based on differences in the variance of the internal response between the face emotions is thus unlikely.
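To make the link between the z-ROC slope and the spread of the internal distributions concrete, the following sketch fits a least-squares line to z-transformed cumulative hit and false alarm rates. The observer parameters and criteria are hypothetical, and the fitting method is an assumption; the authors' estimation procedure may differ. Under Gaussian SDT the slope equals the ratio of the noise to the signal standard deviation.

```python
from statistics import NormalDist

_N = NormalDist()

def zroc_slope(hit_rates, fa_rates):
    """Least-squares slope of z(hit rate) against z(false alarm rate)."""
    zh = [_N.inv_cdf(h) for h in hit_rates]
    zf = [_N.inv_cdf(f) for f in fa_rates]
    mean_h, mean_f = sum(zh) / len(zh), sum(zf) / len(zf)
    sxy = sum((f - mean_f) * (h - mean_h) for f, h in zip(zf, zh))
    sxx = sum((f - mean_f) ** 2 for f in zf)
    return sxy / sxx

# Hypothetical observer: noise ~ N(0, 1), signal ~ N(1.5, 1.25), i.e. the
# signal distribution is the more variable one. Cumulative rates are taken
# at three confidence criteria.
criteria = [0.0, 0.75, 1.5]
signal = NormalDist(1.5, 1.25)
hit_rates = [1.0 - signal.cdf(c) for c in criteria]
fa_rates = [1.0 - _N.cdf(c) for c in criteria]
slope = zroc_slope(hit_rates, fa_rates)   # sd_noise / sd_signal = 1 / 1.25
```

In this example the slope falls below 1 because the signal distribution is wider than the noise distribution, which is the direction reported for all three Detection Tasks.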

Although the slope (i.e. the degree of unequal variance) differed across the Detection Tasks, the slope was significantly smaller than 1 in all three tasks (P < 0.05), where slope = 1 indicates the equality of variance between the signal and noise. This result means that the variance of the internal response was larger for the signal (e.g. fearful face) than for the noise (i.e. neutral face) in all three tasks, which is a typically observed trend in detection tasks (Macmillan and Creelman, 2005). That all of the current Detection Tasks indeed had unequal variance between the signal and noise highlights the need to adopt d’a, rather than d’, as a measure of sensitivity in our data set (Macmillan and Creelman, 2005).

To further ensure that the liberal bias in fearful face perception was not due to differences in variance for the internal responses for the different face emotions, we examined criteria in fearful face perception in the Discrimination Tasks. As we will report later, the variances between the two stimuli categories were generally equal in the Discrimination tasks.

As expected from the purpose of calibration to modulate the perceptual sensitivity, calibrated morphing intensity of emotions showed correlation with perceptual sensitivity (d’a) in the Detection tasks. Higher intensity of a happy face was positively correlated with d’a in the Detection task for a happy face (R = 0.57, P = 0.004), and higher intensity of an angry face was positively correlated with d’a in the Detection task for an angry face (R = 0.66, P < 0.001). Additionally, d’a in the angry Detection task was positively correlated with the intensity of a fearful face (R = 0.49, P = 0.016). Meanwhile, the calibrated morphing intensity did not correlate with the criteria (P > 0.16).

Discrimination tasks

For Discrimination d’a (Figure 3A), a one-way ANOVA with Emotion Pair as a within-subject factor showed a significant main effect [F(2, 39) = 7.03, P = 0.002, partial η2 = 0.27]. This was because d’a was significantly lower in the happy/angry Discrimination Task than in the fearful/happy Discrimination Task (Bonferroni adjusted, P = 0.002). Meanwhile, d’a in the fearful/happy Discrimination Task did not significantly differ from that in the fearful/angry Discrimination Task (P = 0.80). There was no difference in d’a between the happy/angry and fearful/angry Discrimination Tasks (P = 0.18).

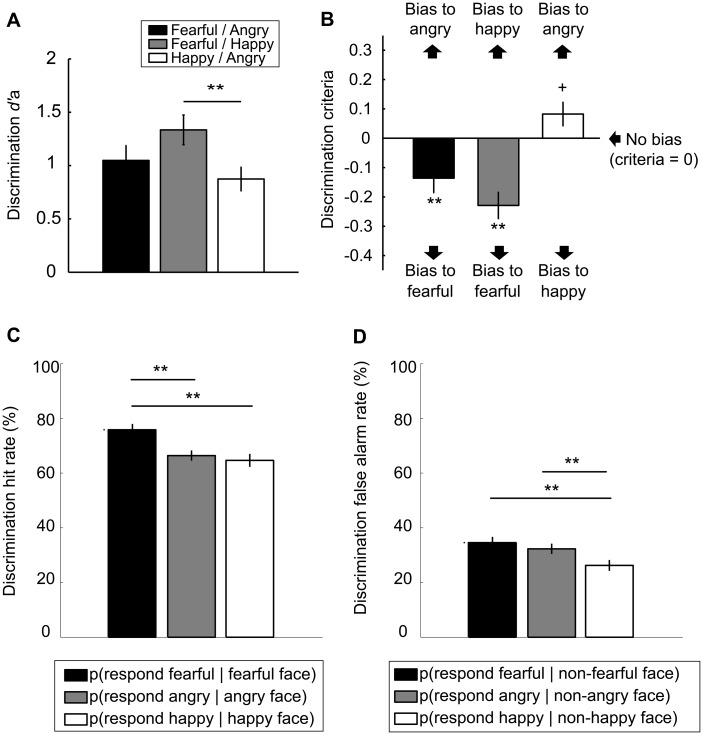

Fig. 3.

Results from the Discrimination Tasks. (A) Discrimination d’a was similar across the three tasks with different emotion pairs (fearful/angry, fearful/happy, happy/angry) except that it was lower for the happy/angry Discrimination Task than for the fearful/happy Discrimination Task. (B) Criteria for the Discrimination Tasks involving a fearful face (i.e. fearful/angry, fearful/happy) consistently showed a bias to report perceiving a fearful face. (C) Hit rate and (D) FAR also demonstrated that participants tended to report perceiving a fearful face when they were presented with a fearful face (i.e. higher HR) as well as when they were presented with a non-fearful (happy/angry) face (i.e. higher FAR). (**P < 0.01, +P < 0.10).

For discrimination criteria (Figure 3B), another one-way ANOVA showed a main effect of Emotion Pair [F(2, 39) = 7.03, P = 0.002, partial η2 = 0.27]. To further examine this main effect, we analyzed whether the mean criterion in each Discrimination Task significantly differed from 0, where criterion = 0 corresponds to no bias (i.e. equally likely to make the two alternative responses in a given task, such as ‘fearful’ and ‘angry’). This analysis revealed that participants showed a significant bias to report perceiving a fearful face (i.e. criteria smaller than 0 in Figure 3B) in both Discrimination Tasks that involved a fearful face (fearful/angry pair, P = 0.007; fearful/happy pair, P < 0.001). Meanwhile, the analysis showed a marginally significant bias to report perceiving a ‘happy’ face in the happy/angry Discrimination Task (P = 0.052). Although the results for discrimination criteria were qualitatively similar between the male and female image sets, the bias was predominantly observed with the male image set (Supplementary Table S2). To further demonstrate the observed bias toward fearful face perception, HR and FAR (Figure 3C and D, respectively) were averaged across the three Discrimination Tasks. Here, HR for perception of a fearful face was calculated as the proportion of fearful face responses when a fearful face was presented. Similarly, HR for perception of an angry and a happy face was calculated as the proportion of angry or happy face responses when an angry or happy face was presented, respectively. That is,

HRfearful = P(respond fearful | fearful face).

HRangry = P(respond angry | angry face).

HRhappy = P(respond happy | happy face).

Likewise, FAR for perception of a fearful, angry and happy face was each calculated as

FARfearful = P(respond fearful | non-fearful face).

FARangry = P(respond angry | non-angry face).

FARhappy = P(respond happy | non-happy face).
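These definitions can be sketched in code as follows. The trial data are made up, and the choice to compute the rates within each task and then average over the tasks whose pair includes the emotion is an assumption about how the averaging was done.

```python
def hr_far(trials, emotion):
    """Hit and false alarm rates for reporting `emotion` in one task.

    `trials` is a list of (presented, reported) label pairs.
    """
    target = [resp for stim, resp in trials if stim == emotion]
    other = [resp for stim, resp in trials if stim != emotion]
    hr = sum(resp == emotion for resp in target) / len(target)
    far = sum(resp == emotion for resp in other) / len(other)
    return hr, far

def mean_hr_far(tasks, emotion):
    """Average HR and FAR over the tasks whose emotion pair includes `emotion`."""
    rates = [hr_far(trials, emotion) for pair, trials in tasks.items()
             if emotion in pair]
    hrs, fars = zip(*rates)
    return sum(hrs) / len(hrs), sum(fars) / len(fars)

# Made-up trials, for illustration only.
tasks = {
    ("fearful", "angry"): [("fearful", "fearful"), ("fearful", "fearful"),
                           ("angry", "fearful"), ("angry", "angry")],
    ("fearful", "happy"): [("fearful", "fearful"), ("fearful", "happy"),
                           ("happy", "happy"), ("happy", "happy")],
    ("happy", "angry"): [("happy", "happy"), ("angry", "happy"),
                         ("angry", "angry"), ("happy", "angry")],
}
hr_fearful, far_fearful = mean_hr_far(tasks, "fearful")
```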

A one-way ANOVA on HR revealed a significant main effect of Emotion [F(2, 39) = 14.80, P < 0.001, partial η2 = 0.43]. Multiple comparisons revealed that HRfearful was significantly higher than HRangry and HRhappy (P < 0.01). For FAR, there was also a significant main effect of Emotion [F(2, 39) = 7.82, P = 0.001, partial η2 = 0.29], because FARfearful and FARangry were significantly higher than FARhappy (P = 0.002, P = 0.006, respectively). Collectively, these results suggest that participants were likely to report perceiving a fearful face both when a fearful face was actually presented (i.e. hit) and when an angry or happy face was presented (i.e. false alarm).

Although d’a was relatively higher for the fearful/happy Discrimination Task, this is unlikely to explain the results for criteria, as the liberal bias in perceiving a fearful face was similarly observed in the fearful/happy and the fearful/angry Discrimination Tasks.

Unlike in the Detection Tasks, the slope of the ROC curves in each Discrimination Task did not statistically differ from 1, where slope = 1 corresponds to the equality of variance (P > 0.40).

The calibrated contrast of the backward mask correlated with sensitivity (d’a) in some of the Discrimination Tasks. The mask contrast for the happy/angry Discrimination Task was negatively correlated with its d’a (R = −0.75, P < 0.001). Similarly, the mask contrast for the fearful/happy task showed a negative correlation with d’a in the fearful/happy Discrimination Task as well as in the fearful/angry Discrimination Task (R = −0.51, P = 0.012; R = −0.50, P = 0.013). The mask contrast in the fearful/angry Discrimination Task was also negatively correlated with its discrimination d’a (R = −0.701, P < 0.001). The mask contrast did not correlate with the Discrimination criteria. The calibrated morphing intensity of facial emotion did not correlate with Discrimination d’a or criteria, except that the morphing intensity of a happy face showed a positive correlation with the criteria in the fearful/happy Discrimination Task (R = 0.55, P = 0.005), indicating that participants with a stronger calibrated intensity of a happy face were more liberal in reporting a happy face.

Voxel-based morphometry

The earlier analyses revealed a liberal bias to report perceiving a fearful face in the Detection Tasks as well as in the Discrimination Tasks. Next, we examined whether such bias would correlate with the brain anatomy related to higher-level cognitive processing. To do so, we analyzed whether liberal criteria in fearful face perception correlate with individual differences in the gray matter density of DLPFC, which is one important region for perceptual decision-making (Heekeren et al., 2006; Philiastides et al., 2011). The correlations of perceptual bias with left and right DLPFC (BA9 and 46) were tested separately, given previous literature suggesting distinctive cognitive functions of DLPFC between the hemispheres (Kaller et al., 2011; Barbey et al., 2013). We also analyzed whether such liberal criteria would correlate with the gray matter density of bilateral Amygdala, which is a critical site for fearful face perception (Morris et al., 1996; Adolphs, 2008; Fusar-Poli et al., 2009). In the subsequent analyses, we controlled for perceptual sensitivity (d’a) so as to specifically examine the neural correlates of criteria, by first removing the effects of sensitivity in regression analyses. Specifically, we regressed out d’a from the criteria in the corresponding task. That is, we regressed out d’a in the fearful face Detection Task from the criteria in the corresponding fearful face Detection Task. Likewise, we regressed out d’a in each of the Detection Tasks with an angry and a happy face from the criteria in the corresponding Detection Tasks, and we then averaged the residual criterion values between the two Detection Tasks.
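The regress-then-correlate step can be sketched as below. The numbers are made up, the helper names `residualize` and `pearson_r` are hypothetical, and a simple linear regression with a single predictor is assumed.

```python
def residualize(y, x):
    """Residuals of y after a simple linear regression on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return [b - (my + slope * (a - mx)) for a, b in zip(x, y)]

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

# Made-up values for five participants, for illustration only.
d_a = [1.1, 0.9, 1.4, 1.0, 1.3]
criterion = [-0.30, 0.10, -0.45, 0.05, -0.20]
density = [0.42, 0.51, 0.40, 0.49, 0.47]

criterion_resid = residualize(criterion, d_a)   # criterion with d'a removed
r = pearson_r(density, criterion_resid)
```

By construction the residual criteria are uncorrelated with d’a, so any remaining correlation with gray matter density cannot be attributed to linear differences in sensitivity.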

First, we examined the structural correlates of criteria in the Detection Tasks in left DLPFC. As Figure 4B shows, reduced DLPFC (BA46L) density was significantly related to more liberal criteria (i.e. <0) in detecting a fearful face (R = 0.414, P = 0.044). In contrast, BA46L density did not correlate with the criteria in detecting a non-fearful (angry or happy) face (R = −0.218, P = 0.306), which was the case even when the criterion results were analyzed separately for an angry face (R = −0.01, P = 0.95) and a happy face (R = −0.35, P = 0.10). The two correlations, one with the fearful face detection criteria and the other with the non-fearful face detection criteria, differed significantly from each other [t(21) = −3.10, P = 0.005], as revealed by Williams’s t-test (Williams, 1959; Weaver and Wuensch, 2013). This means that participants with reduced DLPFC (BA46L) density were more likely to report perceiving a fearful face, while they were less likely to report perceiving an angry or happy face. Yet, the correlation between BA46L and liberal criteria in detecting a fearful face should be treated as a tentative result, given that it would not survive a correction for multiple comparisons (i.e. the α threshold would be adjusted to 0.025 when compensating for testing both BA46L and BA9L). BA9L did not correlate significantly with the criteria in detecting either a fearful face or a non-fearful face (R = 0.24, P = 0.27; R = −0.04, P = 0.85, respectively).
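For readers unfamiliar with the comparison of two dependent correlations that share one variable (here, regional density), the following is a minimal sketch of the Williams t statistic in its commonly cited form, with N − 3 degrees of freedom. The function name and the input values are hypothetical; in particular, the correlation between the two criterion measures (`r23`) is not reported in the text, so the example does not attempt to reproduce the reported t value.

```python
import math

def williams_t(r12, r13, r23, n):
    """Williams's t for comparing dependent correlations r12 and r13.

    Variable 1 is shared (e.g. density); r23 is the correlation between
    the two non-shared variables. Returns (t, degrees_of_freedom).
    """
    # Determinant of the 3x3 correlation matrix.
    det = 1 - r12**2 - r13**2 - r23**2 + 2 * r12 * r13 * r23
    r_bar = (r12 + r13) / 2
    numerator = (n - 1) * (1 + r23)
    denominator = 2 * ((n - 1) / (n - 3)) * det + r_bar**2 * (1 - r23) ** 3
    t = (r12 - r13) * math.sqrt(numerator / denominator)
    return t, n - 3

# Hypothetical correlations for a sample of 24 participants (illustration only).
t_value, df = williams_t(r12=0.5, r13=0.2, r23=0.3, n=24)
print(round(t_value, 3), df)
```

The resulting t would then be compared against a t distribution with the returned degrees of freedom to obtain a two-tailed P value.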

In right DLPFC, both BA46R and BA9R showed results numerically similar to those of BA46L, yet these were not statistically significant.

Meanwhile, Amygdala density correlated with the fearful face detection criteria in the opposite direction to DLPFC density (Figure 4C). That is, higher Amygdala density, rather than lower density, was related to more liberal criteria for detecting a fearful face (R = −0.419, P = 0.041). Amygdala density did not correlate with criteria for detecting non-fearful faces (R = −0.082, P = 0.704), which was the case even when the results were analyzed separately for an angry and a happy face (R = −0.24, P = 0.26; R = 0.11, P = 0.60, respectively). The two correlations of Amygdala density, one with the criteria for fearful faces and the other with the criteria for non-fearful faces, did not differ significantly from each other [t(21) = 1.38, P = 0.181].

The correlations of detection criteria with BA46L and Amygdala density reported above were qualitatively similar even when d’a was not controlled for: lower BA46L density was related to a bias toward detecting a fearful face (R = 0.32, P = 0.13), whereas this was not the case for detecting non-fearful faces (R = −0.23, P = 0.28). Meanwhile, there was a trend for higher Amygdala density to be related to a bias toward perceiving a fearful face (R = −0.36, P = 0.09), although there was no such correlation between Amygdala density and a bias toward perceiving non-fearful faces (R = −0.07, P = 0.74).

The same correlation analyses for the criteria in the Discrimination Tasks (Supplementary Figure S1) showed results similar to those in the Detection Tasks (Figure 4), although the results were not statistically significant.

The densities of BA46L and Amygdala were not related to the calibrated stimulus properties, as shown by the lack of correlation with the morphing intensity of facial emotions (P > 0.69) as well as with the calibrated contrast of the backward mask (P > 0.38). This argues against the possibility that the aforementioned correlations between the brain anatomical differences and the fearful face detection criteria are explained by differences in the stimulus properties determined through calibration.

Metacognitive performance

In addition to the bias at the level of perceptual decision-making (i.e. criteria) described earlier, we examined potential biases in fearful face perception at the level of metacognition. We analyzed confidence ratings as well as metacognitive sensitivity, which was measured as response-specific meta-d’ for the response of perceiving a fearful face and for the response of perceiving a non-fearful (happy/angry) face (Maniscalco and Lau, 2014). Meta-d’ is a signal detection theoretic measure of metacognitive sensitivity that quantifies how well confidence ratings track perceptual accuracy (Maniscalco and Lau, 2012), such that meta-d’ becomes larger if confidence is rated higher after a correct than after an incorrect perceptual response. Although meta-d’ quantifies metacognitive sensitivity independently of perceptual sensitivity (d’), meta-d’ is calculated on the same scale as d’, enabling an intuitive interpretation of meta-d’ in relation to d’ (Maniscalco and Lau, 2012). Because the analysis of meta-d’ is not straightforward when the variances of the two stimulus distributions are unequal (Maniscalco and Lau, 2014), we focused on the Discrimination Tasks, where the equal variance assumption held, as reported earlier.
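As a point of reference for the scale on which meta-d’ is expressed, the sketch below computes type 1 sensitivity (d’) and criterion (c) under the equal-variance signal detection model from a hit rate and a false alarm rate. It is not the meta-d’ estimation procedure of Maniscalco and Lau, which fits a model to confidence data; the function name and the example rates are hypothetical.

```python
from statistics import NormalDist

def dprime_and_criterion(hit_rate, fa_rate):
    """Equal-variance SDT: d' = z(H) - z(F), c = -(z(H) + z(F)) / 2.

    Rates must lie strictly between 0 and 1, because the inverse
    normal CDF is undefined at exactly 0 or 1.
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)

# Hypothetical rates for "fearful" responses (illustration only).
d_prime, criterion = dprime_and_criterion(hit_rate=0.80, fa_rate=0.40)

# A negative criterion corresponds to a liberal bias toward that response.
print(round(d_prime, 2), round(criterion, 2))
```

With these conventions, an observer who reports the target response more often at a fixed sensitivity has a lower (more liberal) criterion, which is the sense in which criteria below zero are described as liberal.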

First, for confidence ratings, a one-way ANOVA with a within-subject factor of Responded emotion revealed a significant main effect [F(2, 39) = 3.48, P = 0.04, partial η2 = 0.15]. This main effect arose because confidence ratings were higher when participants reported perceiving a fearful face than when they reported perceiving an angry or happy face, although the differences were only marginally significant (P = 0.07, P = 0.08, respectively) (Figure 5A, left).

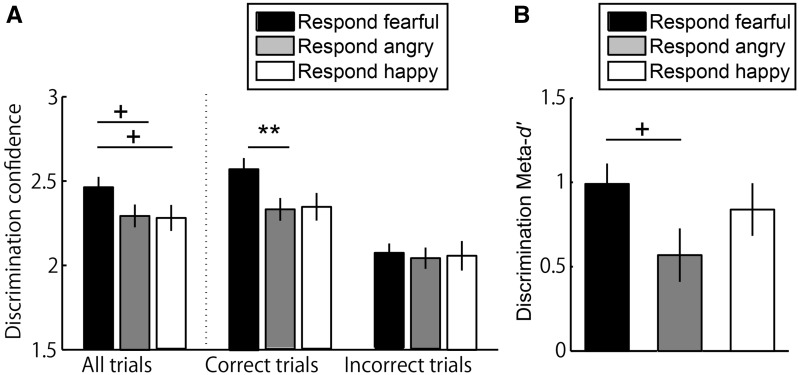

Fig. 5.

Enhanced metacognitive performance for fearful face discrimination. (A) When participants reported perceiving a fearful face, they tended to rate their confidence to be higher, compared with when they reported perceiving a non-fearful (either angry or happy) face. More specifically, this elevated confidence for fearful face perception was observed only when such perception was correct but not when incorrect. (B) Metacognitive sensitivity measured as meta-d’ tended to be higher when participants responded perceiving a fearful face than an angry face. (**P < 0.01, +P < 0.10).

This elevated confidence for fearful face perception depended on perceptual accuracy (Figure 5A, right), as revealed by a significant interaction between Perceptual Accuracy (correct/incorrect) and Responded emotion (fearful/angry/happy) in a repeated measures ANOVA [F(2, 39) = 12.92, P < 0.001]. Participants reported higher confidence when they accurately perceived a fearful face (i.e. reported perceiving a fearful face when such a face was actually presented) than when they accurately perceived an angry face (P = 0.002, Bonferroni corrected). They also reported higher confidence upon accurate perception of a fearful face than of a happy face, yet this difference was not significant (P = 0.29). There was no such difference in confidence ratings when perception was incorrect. This interaction suggests that metacognitive sensitivity was higher when a fearful face was perceived than when a non-fearful angry face was perceived, in that confidence ratings better tracked perceptual accuracy. Accordingly, meta-d’ differed significantly across the three emotions (main effect of Emotion in a one-way ANOVA [F(2, 39) = 3.26, P = 0.049, partial η2 = 0.14]), because meta-d’ tended to be higher for the response of fearful face perception than for the response of angry face perception (Figure 5B) (P = 0.073).

Discussion

It has been shown that fearful face perception is prioritized in that such faces are often perceived more easily or quickly (Milders et al., 2006; Phelps et al., 2006; Yang et al., 2007; Stein et al., 2009, 2010, 2014; Amting et al., 2010; Stienen and de Gelder, 2011). Yet, it was unclear whether such prioritized perception of fearful faces was merely due to bottom-up processing of low-level physical features (Yang et al., 2007). Alternatively, it could also be the case that the emotional intensity expressed in the fearful faces used in previous studies was stronger than that in other emotions; the actors might have expressed fear in a way that was more salient than the way they expressed other emotions. This study addressed this issue explicitly by matching perceptual sensitivity via titration of the emotional intensity in the face stimuli. We showed that fearful face perception is biased at the levels of perceptual decision-making and metacognition.

Specifically, participants showed liberal criteria in perceiving a fearful face, in both Detection Tasks and Discrimination Tasks. In addition, participants reported higher confidence when they accurately discriminated a fearful face than an angry or happy face. As we carefully controlled for the low-level processing capacity of face images, the observed biases are likely to arise from higher-level mechanisms rather than low-level visual processing. The involvement of higher-level mechanisms was further supported by the result that the liberal bias in perceiving a fearful face was stronger among individuals with reduced gray matter density in DLPFC, which is involved in higher-level cognitive functions such as perceptual decision-making (Heekeren et al., 2006; Philiastides et al., 2011). These results are in agreement with the view that visual perception of emotionally salient visual stimuli involves multiple processing pathways (Tamietto et al., 2015), including the prefrontal cortex (Morris et al., 1996; Adolphs, 2008; Fusar-Poli et al., 2009; Pessoa and Adolphs, 2010).

In addition to its role in the accumulation of sensory evidence in perceptual decision-making, the DLPFC has also been linked to emotional regulation (cf. Ochsner et al., 2002), and more specifically to fearful face processing (Bishop et al., 2004). For instance, one study showed greater activity in DLPFC (BA8/9) when a fearful face was suppressed in binocular rivalry (Amting et al., 2010). This is congruent with the current result in suggesting that less involvement of DLPFC can lead to a higher likelihood of fearful face perception, although our interpretation is tentative because our structural brain analysis does not allow us to speak directly to the physiological mechanisms. Although further studies should directly examine the exact role of DLPFC in biasing fearful face perception, one possibility is that it reflects prior expectation about the likelihood of fearful face occurrence, as it has been shown that prior expectation relates to perceptual bias as well as to DLPFC activity (Rahnev et al., 2011).

Nevertheless, given the role of DLPFC in a variety of cognitive control functions (Passingham and Wise, 2012), there could be alternative accounts of the correlation between DLPFC and the bias in fearful face perception. For instance, as DLPFC is related to the inhibition of irrelevant information (Suzuki and Gottlieb, 2013), its density may correlate with participants’ tendency to selectively focus on a single diagnostic feature for fearful face perception, such as an open mouth. However, this possibility seems unlikely because it would predict that DLPFC density correlates with the performance of stimulus calibration (e.g. focusing more locally on the mouth would improve calibration performance, such as a lowered morphing intensity for a fearful face), which was shown not to be the case (see Results).

It is well known that criteria can reflect response strategy independently of perception (Macmillan and Creelman, 2005). Given this, one trivial interpretation would be that participants merely reported perceiving a fearful face more often, even though they did not actually perceive one. However, it is unclear why participants would do so, given that the task instructions offered no particular benefit for such a strategy. Importantly, criteria can also reflect differences in perception itself, including perceptual shifts driven by visual illusions (Witt et al., 2015). Likewise, another study observed liberal criteria for detection in peripheral vision, and the authors argued that this reflects an inflated subjective sense of perception in the periphery (Solovey et al., 2015). Perhaps the current results likewise reflect biases at the perceptual level. This interpretation is also congruent with the results on metacognitive bias, especially because perceptual confidence and metacognition have been argued to reflect conscious awareness (Lau and Rosenthal, 2011).

Another limitation of this study is that we used the face images of only two models. We chose to use such a small image set in order to carefully match perceptual sensitivity (d’a) across the tasks, which was necessary to control for lower-level visual processing. If we had used a larger image set, it would have been difficult to control for the variance of the fearful face signal, as well as to match perceptual sensitivity carefully. However, although we used face images of only two models, liberal criteria in fearful face perception did not differ between the models, in both Detection Tasks and Discrimination Tasks [i.e. interaction of Emotion or Emotion Pair with Model; F(1, 63) = 1.03, P = 0.32; F(1, 63) = 0.15, P = 0.70, respectively], suggesting that these results may generalize. Yet, given that the face images were tightly calibrated prior to the Detection and Discrimination tasks, participants may have learned some strategies specific to the characteristics of the face images used (e.g. mouth configuration). Moreover, such learning may have taken place particularly for the fearful faces, given that a fearful face contains unique local features such as an open mouth, and that the emotion of fear generally facilitates learning (LeDoux, 1996). However, this learning effect is unlikely to explain the liberal bias in fearful face detection because such bias involves not only enhanced perception of a fearful face but also increased false alarms in perceiving non-fearful faces as fearful. The increase in false alarms cannot be explained by potentially learned strategies specific to the fearful faces. Nevertheless, given that a small image set is certainly a limitation of this study, future studies may examine the generalizability of our findings with different image sets.

To conclude, future work would benefit from addressing the current limitations by using fMRI, with which one could directly examine the neurophysiological mechanisms underlying the observed biases, as well as by using a wider variety of face images to further ensure the generalizability of the results. Yet, this study presents an initial step toward uncovering mechanisms as high up as the prefrontal cortex that bias the perception of fearful faces, particularly at the levels of perceptual decision-making and metacognition.

Acknowledgements

The authors thank Ellen Tedeschi for assisting with the collection of brain images and Brian Maniscalco for advising on the analyses of metacognitive performance.

Funding

A.K. was supported by the US-Japan Brain Research Cooperation Program (National Institute for Physiological Sciences, Japan) during the early phase of the project, as well as by the Japan Society for the Promotion of Science (JSPS) (Tokyo, Japan) during the late phase of the project. This work was supported by the National Institute of Neurological Disorders and Stroke of the National Institutes of Health (Grant No. R01NS088628 to H.L.).

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

References

- Adolphs R. (2008). Fear, faces, and the human amygdala. Current Opinion in Neurobiology, 18(2), 166–72.

- Amting J.M., Greening S.G., Mitchell D.G. (2010). Multiple mechanisms of consciousness: the neural correlates of emotional awareness. Journal of Neuroscience, 30(30), 10039–47.

- Ashburner J. (2007). A fast diffeomorphic image registration algorithm. Neuroimage, 38(1), 95–113.

- Barbey A.K., Koenigs M., Grafman J. (2013). Dorsolateral prefrontal contributions to human working memory. Cortex; a Journal Devoted to the Study of the Nervous System and Behavior, 49(5), 1195–205.

- Bishop S., Duncan J., Brett M., Lawrence A.D. (2004). Prefrontal cortical function and anxiety: controlling attention to threat-related stimuli. Nature Neuroscience, 7(2), 184–8.

- Fleming S.M., Huijgen J., Dolan R.J. (2012). Prefrontal contributions to metacognition in perceptual decision making. Journal of Neuroscience, 32(18), 6117–25.

- Fleming S.M., Weil R.S., Nagy Z., Dolan R.J., Rees G. (2010). Relating introspective accuracy to individual differences in brain structure. Science, 329(5998), 1541–3.

- Fusar-Poli P., Placentino A., Carletti F., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry & Neuroscience, 34(6), 418–32.

- Gold J.I., Shadlen M.N. (2007). The neural basis of decision making. The Annual Review of Neuroscience, 30, 535–74.

- Heekeren H.R., Marrett S., Ruff D.A., Bandettini P.A., Ungerleider L.G. (2006). Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proceedings of the National Academy of Sciences of the United States of America, 103(26), 10023–8.

- Kaller C.P., Rahm B., Spreer J., Weiller C., Unterrainer J.M. (2011). Dissociable contributions of left and right dorsolateral prefrontal cortex in planning. Cerebral Cortex, 21(2), 307–17.

- Kim J.N., Shadlen M.N. (1999). Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neuroscience, 2(2), 176–85.

- Ko Y., Lau H. (2012). A detection theoretic explanation of blindsight suggests a link between conscious perception and metacognition. Philosophical Transactions of the Royal Society of London. Series B, 367(1594), 1401–11.

- Koizumi A., Maniscalco B., Lau H. (2015). Does perceptual confidence facilitate cognitive control? Attention, Perception, & Psychophysics, 77(4), 1295–306.

- Lau H., Rosenthal D. (2011). Empirical support for higher-order theories of conscious awareness. Trends in Cognitive Sciences, 15(8), 365–73.

- Lau H.C., Passingham R.E. (2006). Relative blindsight in normal observers and the neural correlate of visual consciousness. Proceedings of the National Academy of Sciences of the United States of America, 103(49), 18763–8.

- LeDoux J.E. (1996). The Emotional Brain. New York: Simon and Schuster.

- Leon M.I., Shadlen M.N. (1999). Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron, 24(2), 415–25.

- Macmillan N.A., Creelman C.D. (2005). Detection Theory: A User’s Guide, 2nd edn. Mahwah (NJ): Lawrence Erlbaum Associates.

- Maniscalco B., Lau H. (2012). A signal detection theoretic approach for estimating metacognitive sensitivity from confidence ratings. Consciousness and Cognition, 21(1), 422–30.

- Maniscalco B., Lau H. (2014). Signal detection theory analysis of type 1 and type 2 data: meta-d’, response-specific meta-d’, and the unequal variance SDT model. In Fleming S.M., Frith C.D., editors. The Cognitive Neuroscience of Metacognition, 25–66, Springer.

- McCurdy L.Y., Maniscalco B., Metcalfe J., Liu K.Y., de Lange F.P., Lau H. (2013). Anatomical coupling between distinct metacognitive systems for memory and visual perception. The Journal of Neuroscience, 33(5), 1897–906.

- Milders M., Sahraie A., Logan S., Donnellon N. (2006). Awareness of faces is modulated by their emotional meaning. Emotion, 6(1), 10–7.

- Morris J.S., Frith C.D., Perrett D.I., et al. (1996). A differential neural response in the human amygdala to fearful and happy facial expressions. Nature, 383(6603), 812–5.

- Ochsner K.N., Bunge S.A., Gross J.J., Gabrieli J.D. (2002). Rethinking feelings: an FMRI study of the cognitive regulation of emotion. Journal of Cognitive Neuroscience, 14(8), 1215–29.

- Passingham R.E., Wise S.P. (2012). The Neurobiology of the Prefrontal Cortex: Anatomy, Evolution, and the Origin of Insight. New York, NY: Oxford University Press.

- Pessoa L., Adolphs R. (2010). Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nature Reviews Neuroscience, 11(11), 773–83.

- Phelps E.A., Ling S., Carrasco M. (2006). Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychological Science, 17(4), 292–9.

- Philiastides M.G., Auksztulewicz R., Heekeren H.R., Blankenburg F. (2011). Causal role of dorsolateral prefrontal cortex in human perceptual decision making. Current Biology, 21(11), 980–3.

- Rahnev D., Lau H., de Lange F.P. (2011). Prior expectation modulates the interaction between sensory and prefrontal regions in the human brain. Journal of Neuroscience, 31(29), 10741–8.

- Solovey G., Graney G.G., Lau H. (2015). A decisional account of subjective inflation of visual perception at the periphery. Attention, Perception & Psychophysics, 77(1), 258–71.

- Stein T., Peelen M.V., Funk J., Seidl K.N. (2010). The fearful-face advantage is modulated by task demands: evidence from the attentional blink. Emotion, 10(1), 136–40.

- Stein T., Seymour K., Hebart M.N., Sterzer P. (2014). Rapid fear detection relies on high spatial frequencies. Psychological Science, 25(2), 566–74.

- Stein T., Zwickel J., Ritter J., Kitzmantel M., Schneider W.X. (2009). The effect of fearful faces on the attentional blink is task dependent. Psychonomic Bulletin & Review, 16(1), 104–9.

- Stienen B.M., de Gelder B. (2011). Fear modulates visual awareness similarly for facial and bodily expressions. Frontiers in Human Neuroscience, 5, 132.

- Suzuki M., Gottlieb J. (2013). Distinct neural mechanisms of distractor suppression in the frontal and parietal lobe. Nature Neuroscience, 16(1), 98–104.

- Tamietto M., Cauda F., Celeghin A., et al. (2015). Once you feel it, you see it: insula and sensory-motor contribution to visual awareness for fearful bodies in parietal neglect. Cortex, 62, 56–72.

- Tottenham N., Tanaka J.W., Leon A.C., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research, 168(3), 242–9.

- Watson A.B., Pelli D.G. (1983). QUEST: a Bayesian adaptive psychometric method. Perception & Psychophysics, 33(2), 113–20.

- Weaver B., Wuensch K.L. (2013). SPSS and SAS programs for comparing Pearson correlations and OLS regression coefficients. Behavior Research Methods, 45(3), 880–95.

- Willenbockel V., Sadr J., Fiset D., Horne G.O., Gosselin F., Tanaka J.W. (2010). Controlling low-level image properties: the SHINE toolbox. Behavior Research Methods, 42(3), 671–84.

- Williams E.J. (1959). The comparison of regression variables. Journal of the Royal Statistical Society Series B-Statistical Methodology, 21(2), 396–9.

- Witt J.K., Taylor J.E.T., Sugovic M., Wixted J.T. (2015). Signal detection measures cannot distinguish perceptual biases from response biases. Perception, 44(3), 289–300.

- Yang E., Zald D.H., Blake R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion, 7(4), 882–6.
