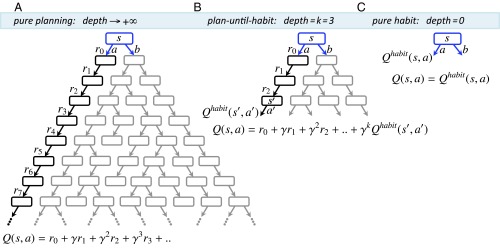

Fig. 1.

Schematic of the algorithm in an example decision problem (see SI Appendix for the general formal algorithm). Assume an individual has a “mental model” of the reward and transition consequent on taking each action at each state in the environment. The value of taking action $a$ at the current state $s$ is denoted by $Q(s, a)$ and is defined as the sum of rewards (temporally discounted by a factor of $\gamma$ per step) that are expected to be received upon performing that action. $Q(s, a)$ can be estimated in different ways. (A) “Planning” involves simulating the tree of future states and actions to arbitrary depths ($k$) and summing up all of the expected discounted consequences, given a behavioral policy. (B) An intermediate form of control (i.e., plan-until-habit) involves limited-depth forward simulations (to a fixed depth $k$ in our example) to foresee the expected consequences of actions up to that depth (i.e., up to state $s_k$). The sum of those foreseen consequences ($r_0 + \gamma r_1 + \dots + \gamma^{k-1} r_{k-1}$) is then added to the cached habitual assessment [$\gamma^k Q^{\text{habit}}(s_k, a_k)$] of the consequences of the remaining choices starting from the deepest explicitly foreseen states ($s_k$). (C) At the other end of the depth-of-planning spectrum, “habitual control” avoids planning ($k = 0$) by relying instead on estimates that are cached from previous experience. These cached values are updated based on rewards obtained when making a choice.
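The three regimes in the caption can be illustrated with a minimal sketch. It is not the formal algorithm from the SI Appendix. It assumes a deterministic mental model, a greedy behavioral policy, and a toy four-state chain; the names `plan_until_habit`, `chain_model`, and `q_habit` are hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

State = int
Action = int
# Hypothetical deterministic "mental model": (state, action) -> (reward, next_state).
# A next_state of None marks the end of the episode.
Model = Callable[[State, Action], Tuple[float, Optional[State]]]


def plan_until_habit(
    state: State,
    action: Action,
    depth: int,
    model: Model,
    q_habit: Dict[Tuple[State, Action], float],
    actions: Sequence[Action],
    gamma: float,
) -> float:
    """Estimate Q(state, action) by simulating `depth` steps, then using cached values.

    depth == 0 is purely habitual control; a depth at least as large as the
    remaining horizon amounts to full planning. Assumes a greedy policy.
    """
    if depth == 0:
        # Habitual assessment: read the cached value (0.0 if nothing is cached).
        return q_habit.get((state, action), 0.0)
    reward, next_state = model(state, action)
    if next_state is None:
        # Terminal transition: no further consequences to foresee.
        return reward
    # Greedy choice among the actions available at the next state.
    best_next = max(
        plan_until_habit(next_state, a, depth - 1, model, q_habit, actions, gamma)
        for a in actions
    )
    return reward + gamma * best_next


def chain_model(state: State, action: Action) -> Tuple[float, Optional[State]]:
    """Toy chain 0 -> 1 -> 2 -> 3 -> end; action 1 pays 1.0, action 0 pays 0.0."""
    reward = 1.0 if action == 1 else 0.0
    next_state = state + 1 if state < 3 else None
    return reward, next_state


ACTIONS = (0, 1)
GAMMA = 0.5
# Assumed cached habit values: only one state-action pair has been learned.
q_habit = {(2, 1): 4.0}

for k in range(5):
    q = plan_until_habit(0, 1, k, chain_model, q_habit, ACTIONS, GAMMA)
    print(f"depth k={k}: Q(s0, a=1) = {q}")
```

With depth 2, the estimate combines two simulated rewards with the discounted cached value, $1 + 0.5 \cdot 1 + 0.5^2 \cdot 4 = 2.5$, mirroring panel B. With depth 4 the cache is never consulted and the result is the fully planned value, as in panel A.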