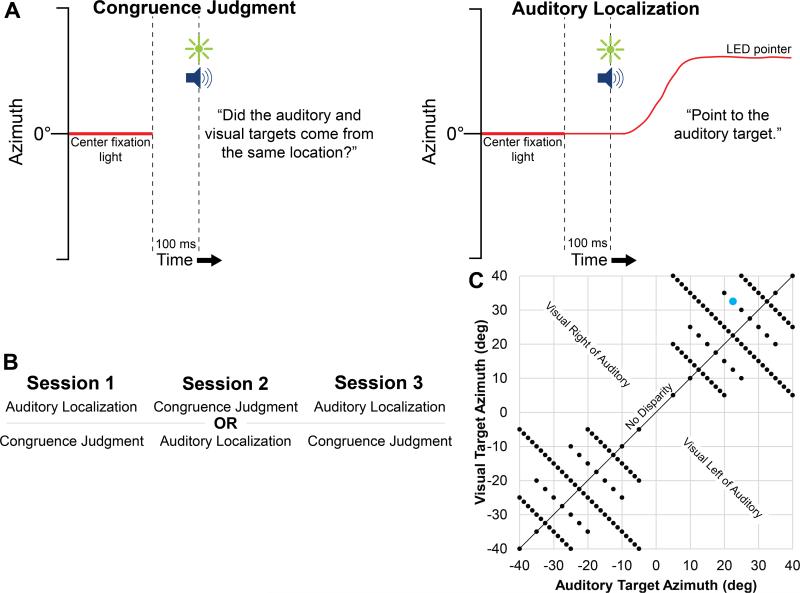

Fig. 1.

Experimental protocol. A, Trial timeline for both tasks. Between trials, subjects looked at the center fixation light and maintained fixation until after target presentation. At the start of a trial, the center fixation light was extinguished, and 100 ms later an auditory and a visual target were presented from independent locations to produce a disparity in azimuth between them. After target presentation, subjects either indicated whether the targets came from the same location (Congruence Judgment) or pointed an LED pointer at the auditory target (Auditory Localization). B, Task sequence across experimental sessions. Subjects were randomly assigned to perform either the auditory localization or the congruence judgment task in sessions 1 and 3, and performed the other task in session 2. C, Target array for both tasks. Auditory and visual target pairs were distributed in azimuth as shown, with each dot representing one pair of auditory and visual target locations. Location pairs were selected from the array in a pseudorandom order, and each pair was presented once. Audio-visual disparity ranged from 0° (i.e., no disparity, black line) to 35° (visual target at ±5° and auditory target at ±40°, or vice versa) in azimuth. The highlighted point indicates the example target locations in panel A.
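The disparity measure and the once-each pseudorandom presentation described in panel C can be illustrated with a minimal sketch. It is not the authors' stimulus code: the names `EXAMPLE_PAIRS`, `disparity_deg`, and `pseudorandom_order` are hypothetical, the seed is arbitrary, and only the 35° extreme pairs are taken from the caption. The zero-disparity pair is a placeholder, since the full target array is defined only in the figure.

```python
import random

# Illustrative (auditory, visual) azimuth pairs in degrees.
# Assumption: only the 35-degree extremes (+/-40 with +/-5) come from the
# caption; the zero-disparity pair (5, 5) is a placeholder, not a known
# member of the actual target array.
EXAMPLE_PAIRS = [(40, 5), (-40, -5), (5, 40), (-5, -40), (5, 5)]


def disparity_deg(auditory_az, visual_az):
    """Unsigned audio-visual disparity in azimuth, in degrees."""
    return abs(auditory_az - visual_az)


def pseudorandom_order(pairs, seed):
    """Return the pairs in a seeded shuffled order, each appearing once."""
    rng = random.Random(seed)  # seeded generator makes the order reproducible
    order = list(pairs)        # copy so the input list is left unchanged
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for auditory_az, visual_az in pseudorandom_order(EXAMPLE_PAIRS, seed=1):
        d = disparity_deg(auditory_az, visual_az)
        print(f"auditory {auditory_az:+d} deg, visual {visual_az:+d} deg, disparity {d} deg")
```

Shuffling a copy of the list, rather than sampling with replacement, is what guarantees that every location pair is presented exactly once per session.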