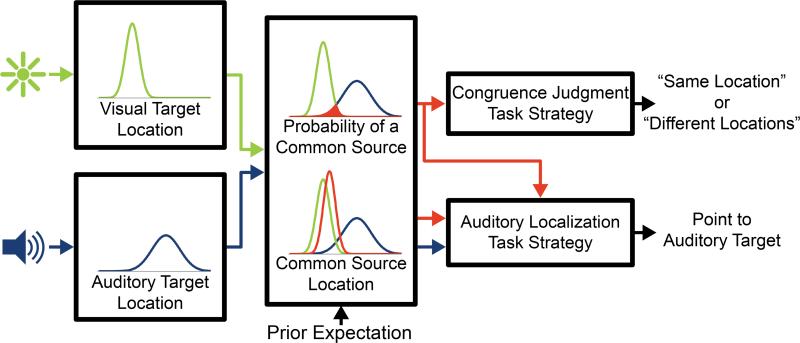

Fig. 2.

Audio-visual spatial integration model. Auditory and visual target locations are encoded by their respective sensory pathways according to each pathway's accuracy and precision. The encoded locations are combined with a prior expectation to compute both the common-source location (a weighted sum of the encoded target locations) and the probability that the two targets originated from a common source. At this point, the model diverges by task: the auditory localization task depends on both the common-source location and the common-source probability, whereas the congruence judgment task depends on the common-source probability alone. Because the estimated model parameters are decoupled from the task, this structure allows performance to be compared directly across tasks.
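The pipeline in Fig. 2 can be sketched in code. This is a minimal illustration only, assuming a standard Gaussian Bayesian causal-inference formulation: Gaussian encoding noise in each modality, a Gaussian spatial prior, a prior probability of a common source, model averaging for the auditory localization read-out, and a fixed 0.5 criterion for the congruence judgment. None of these distributional choices, the parameter names (`sigma_a`, `sigma_v`, `mu_p`, `sigma_p`, `p_common`), or the function names come from the caption; all are hypothetical. Pathway bias (accuracy) is assumed to be already reflected in the encoded locations passed in.

```python
import math
from dataclasses import dataclass


@dataclass
class Params:
    """Hypothetical parameter set; names are illustrative, not from the source."""
    sigma_a: float   # auditory encoding noise SD (precision)
    sigma_v: float   # visual encoding noise SD (precision)
    mu_p: float      # mean of the assumed Gaussian spatial prior
    sigma_p: float   # SD of the assumed Gaussian spatial prior
    p_common: float  # assumed prior probability of a common source


def common_source_probability(x_a: float, x_v: float, p: Params) -> float:
    """Posterior probability that encoded locations x_a and x_v share one source."""
    va, vv, vp = p.sigma_a ** 2, p.sigma_v ** 2, p.sigma_p ** 2
    # Likelihood under one shared source (C = 1), with the source location
    # integrated out against the Gaussian prior.
    det1 = va * vv + va * vp + vv * vp
    quad1 = ((x_a - x_v) ** 2 * vp
             + (x_a - p.mu_p) ** 2 * vv
             + (x_v - p.mu_p) ** 2 * va) / det1
    like1 = math.exp(-0.5 * quad1) / (2 * math.pi * math.sqrt(det1))
    # Likelihood under two independent sources (C = 2).
    quad2 = (x_a - p.mu_p) ** 2 / (va + vp) + (x_v - p.mu_p) ** 2 / (vv + vp)
    like2 = math.exp(-0.5 * quad2) / (2 * math.pi * math.sqrt((va + vp) * (vv + vp)))
    num = like1 * p.p_common
    return num / (num + like2 * (1 - p.p_common))


def common_source_location(x_a: float, x_v: float, p: Params) -> float:
    """Reliability-weighted sum of the encoded locations and the prior mean."""
    wa, wv, wp = 1 / p.sigma_a ** 2, 1 / p.sigma_v ** 2, 1 / p.sigma_p ** 2
    return (wa * x_a + wv * x_v + wp * p.mu_p) / (wa + wv + wp)


def auditory_localization(x_a: float, x_v: float, p: Params) -> float:
    """Uses common-source location AND probability (model averaging assumed)."""
    pc = common_source_probability(x_a, x_v, p)
    wa, wp = 1 / p.sigma_a ** 2, 1 / p.sigma_p ** 2
    auditory_only = (wa * x_a + wp * p.mu_p) / (wa + wp)  # estimate if sources differ
    return pc * common_source_location(x_a, x_v, p) + (1 - pc) * auditory_only


def congruence_judgment(x_a: float, x_v: float, p: Params,
                        criterion: float = 0.5) -> bool:
    """Uses common-source probability alone; True means 'common source'."""
    return common_source_probability(x_a, x_v, p) > criterion
```

For example, with `Params(sigma_a=8.0, sigma_v=2.0, mu_p=0.0, sigma_p=20.0, p_common=0.5)`, an auditory target encoded at 10 and a visual target encoded at 5 give a common-source probability of roughly 0.71, so the congruence judgment reports a common source and the auditory estimate is pulled toward the more precise visual location. Because both task read-outs share the same parameters, fitting them jointly is what lets the parameters stay decoupled from task.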