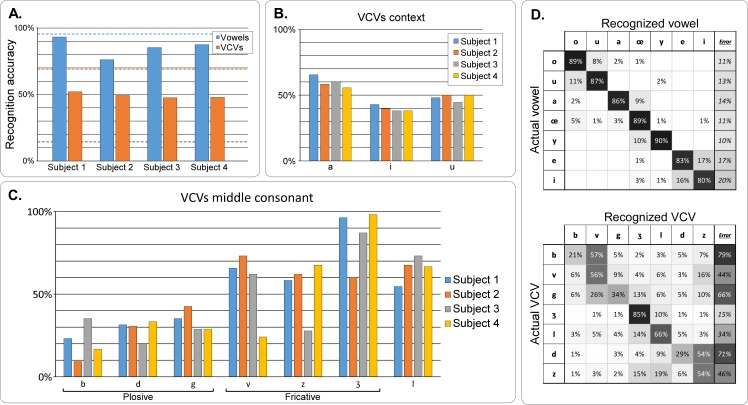

Fig 11. Results of the subjective listening test for real-time articulatory synthesis.

A–Recognition accuracy for vowels and consonants, for each subject. The grey dashed line shows the chance level, while the blue and orange dashed lines show the corresponding recognition accuracy for the offline articulatory synthesis, for vowels and consonants respectively (on the same subsets of phones). B–Recognition accuracy for the VCVs by vowel context, for each subject. C–Recognition accuracy for the VCVs, by consonant and for each subject. D–Confusion matrices for vowels (left) and consonants from VCVs in /a/ context (right). Rows correspond to the ground truth, while columns correspond to the subjects' answers. The last column indicates the number of errors made on each phone. Cells are colored by their values, while text color is for readability only.