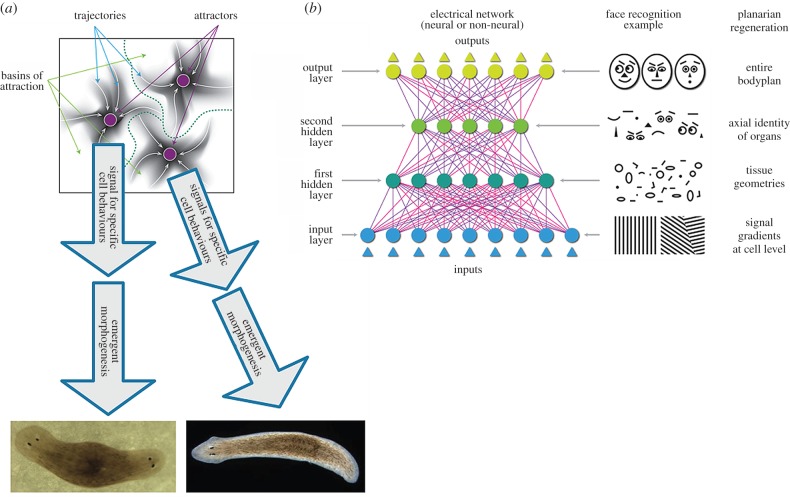

Figure 5.

Representation of multiple levels within bioelectric (neural-like) networks. (a) Neural networks of different kinds (e.g. deep nets, attractor nets) are able to learn internal representations of external entities directly from (unannotated) input data, at multiple levels, as they become tuned to the statistics of their inputs during learning [47]. For example, generative neural networks trained to reproduce at their output the same figures (e.g. faces, objects or numbers) they receive as input often learn simpler-to-more-complex object or face features at increasingly higher hierarchical layers of the network, in ways that are (sometimes) biologically realistic [118,119]. Learning proceeds differently depending on the specific neural network architecture. For example, basins of attraction in the state space of attractor neural networks can represent specific memories [65]. If somatic tissues undergoing remodelling can be represented by similar frameworks, then it is possible that stable states in the network can represent specific patterning outcomes (like the one- or two-headed flatworms that result from editing of bioelectric networks in vivo [120–122]). (b) One advantage of neural network architectures is that they provide a proof of principle of how collections of cells can represent higher-level topological information; shown here are analogies between information representation in an ANN during a face recognition task and in flatworm regeneration. Neural network layers represent increasingly abstract features of the input, in well-understood ways. The functioning of specific neural network architectures such as attractor, generative or deep nets (or others [123–125]) may give clues as to how collections of somatic cells can learn and store memories about tissues, organs and entire body plan layouts.
The current state of the art in the field of developmental bioelectricity is that it is known, at the cellular level, how resting potentials are transduced into downstream gene cascades, as well as which transcriptional and epigenetic targets are sensitive to change in developmental bioelectrical signals [126–128]. What is largely missing, however (and may be provided by a network approach or other possible conceptualizations), is a quantitative understanding of how the global dynamics of bioelectric circuits make decisions that orchestrate large numbers of individual cells, spread out over considerable anatomical distances, towards specific pattern outcomes. The mechanisms by which bioelectrics and chromatin state interact at the single-cell level will be increasingly clarified by straightforward reductive analysis. The more difficult, major advances in prediction and control will require a systems-level model of pattern memory and encoding implemented in somatic bioelectrical networks whose outputs are signals that control growth and form. Images in panels (a) and (b) drawn by Jeremy Guay of Peregrine Creative.
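
The idea in panel (a) that basins of attraction can act as memories can be illustrated with a toy Hopfield-style attractor network. The following is a minimal sketch only, not a model taken from this paper or from the cited work: the function names (`train`, `step`, `recall`), the Hebbian learning rule, the synchronous update and the two 8-unit patterns are all assumptions chosen for illustration, and the patterns are arbitrary vectors with no biological meaning. It shows a perturbed state relaxing back to the stored pattern whose basin it lies in.

```python
# Toy Hopfield-style attractor network (illustrative assumption, not the authors' model).

def train(patterns):
    """Hebbian weights: W[i][j] = (1/N) * sum over patterns of p[i]*p[j], zero diagonal."""
    n = len(patterns[0])
    return [[0.0 if i == j else sum(p[i] * p[j] for p in patterns) / n
             for j in range(n)] for i in range(n)]

def step(weights, state):
    """One synchronous update: each unit takes the sign of its weighted input."""
    return [1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
            for row in weights]

def recall(weights, state, max_steps=20):
    """Iterate until the state stops changing (a fixed point) or max_steps is reached."""
    for _ in range(max_steps):
        new_state = step(weights, state)
        if new_state == state:
            break
        state = new_state
    return state

# Two arbitrary, mutually orthogonal +/-1 patterns standing in for stored "memories".
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
WEIGHTS = train([PATTERN_A, PATTERN_B])

# Perturb PATTERN_A by flipping its first unit, then let the network relax.
perturbed = [-PATTERN_A[0]] + PATTERN_A[1:]
recovered = recall(WEIGHTS, perturbed)
print(recovered == PATTERN_A)  # True
```

In this sketch each stored pattern is a stable state of the dynamics, and nearby states fall back into it. This is the sense in which the caption suggests that stable states of a network could stand for distinct patterning outcomes.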