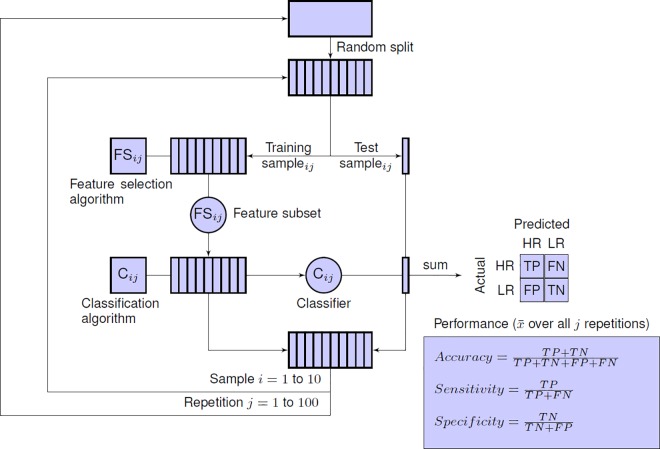

Fig 2. Experimental test protocol based on repeated cross-validation with internal feature selection.

The entire dataset is randomly divided into 10 subsets. One subset (10% of all subjects) is set aside as the test sample, and the remaining nine subsets (90% of all subjects) form the training sample. A feature selection algorithm is applied to the training sample to select a subset of n features. Using only this feature subset, a classification algorithm is trained on the training sample, producing a parametrized classifier. This classifier is then used to classify the subjects in the test sample, and the predicted labels are compared with the actual group (HR or LR) of the test subjects. These steps are repeated 10 times, each time holding out a different subset as the test sample. After one complete run of 10-fold cross-validation, every subject in the dataset has been tested exactly once. Training and test subjects are still kept strictly separate, because feature selection and classifier training only ever see the training sample. To reduce the variance of the cross-validated performance estimate, the whole process is repeated 100 times with different random initial splits of the dataset. The final estimate of the expected predictive performance is the average of the cross-validation performance over all 100 repetitions. This estimate represents the expected prediction accuracy of the final model, that is, the model we would deploy in practice: the classifier built from the entire dataset using feature selection method m to select n features.
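The protocol can be sketched in plain Python to make the nesting explicit: feature selection runs inside each fold on the training subjects only, and the final model is refit on all subjects. This is a minimal illustration under stated assumptions, not the authors' implementation. The legend does not specify feature selection method m or the classifier, so the sketch substitutes a hypothetical mean-difference filter (`select_features`) and a nearest-centroid classifier; all function names are illustrative, labels are assumed to be coded 0/1, and per-run accuracy is assumed to be the fraction of correctly classified subjects pooled over the 10 folds.

```python
import random
from statistics import mean


def select_features(X, y, n):
    """Hypothetical stand-in for method m: rank features by the absolute
    difference of class means (labels 0/1) and keep the top n."""
    scores = []
    for j in range(len(X[0])):
        pos = [x[j] for x, lab in zip(X, y) if lab == 1]
        neg = [x[j] for x, lab in zip(X, y) if lab == 0]
        scores.append((abs(mean(pos) - mean(neg)), j))
    scores.sort(reverse=True)
    return [j for _, j in scores[:n]]


def fit_nearest_centroid(X, y, feats):
    """Hypothetical classifier: one centroid per class over the selected features."""
    centroids = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [mean(r[j] for r in rows) for j in feats]
    return feats, centroids


def predict(model, x):
    """Assign x to the class whose centroid is closest (squared Euclidean)."""
    feats, centroids = model

    def dist(label):
        return sum((x[j] - c) ** 2 for j, c in zip(feats, centroids[label]))

    return min(centroids, key=dist)


def k_fold_indices(n_subjects, k, rng):
    """Randomly partition subject indices into k disjoint folds."""
    idx = list(range(n_subjects))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]


def repeated_cv_accuracy(X, y, n, k=10, repeats=100, seed=0):
    """Average k-fold CV accuracy over `repeats` random initial splits."""
    rng = random.Random(seed)
    run_accuracies = []
    for _ in range(repeats):
        correct = 0
        for test_idx in k_fold_indices(len(X), k, rng):
            held_out = set(test_idx)
            train_idx = [i for i in range(len(X)) if i not in held_out]
            X_tr = [X[i] for i in train_idx]
            y_tr = [y[i] for i in train_idx]
            # Feature selection sees training subjects only (no test leakage).
            feats = select_features(X_tr, y_tr, n)
            model = fit_nearest_centroid(X_tr, y_tr, feats)
            correct += sum(predict(model, X[i]) == y[i] for i in test_idx)
        # Each subject is tested exactly once per run, so divide by N.
        run_accuracies.append(correct / len(X))
    return mean(run_accuracies)


def final_model(X, y, n):
    """The deployable model: select n features and train on the full dataset."""
    feats = select_features(X, y, n)
    return fit_nearest_centroid(X, y, feats)


if __name__ == "__main__":
    rng = random.Random(1)
    y = [0] * 20 + [1] * 20
    # Synthetic data: feature 0 separates the classes, features 1-4 are noise.
    X = [[(1.0 if lab else -1.0) + rng.gauss(0, 0.3)]
         + [rng.gauss(0, 1) for _ in range(4)] for lab in y]
    print(repeated_cv_accuracy(X, y, n=1))
```

Keeping `select_features` inside the fold loop is the essential design choice here: selecting features once on the whole dataset and then cross-validating would let test subjects influence which features are chosen and typically inflates the accuracy estimate. The fold-level estimate describes the procedure, while `final_model` is what would actually be deployed.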