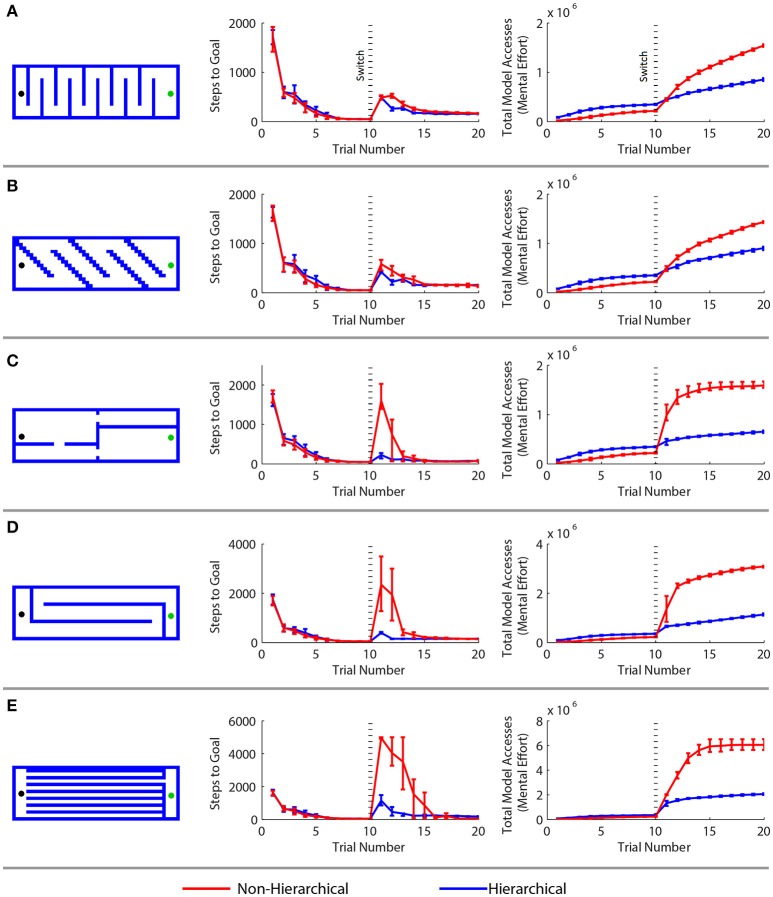

Figure 5.

Comparison of a standard model-based reinforcement learning algorithm with the hierarchical approach in a spatial navigation and adaptation task. (A–E) Results for five different adaptation tasks. Each panel is arranged as follows. Left: the new maze boundaries that the agents had to learn after being trained in an open arena for 10 trials. Center: the mean number of steps needed to reach the goal in each trial; the first 10 trials correspond to the open arena, the next 10 to the new maze. Right: the cumulative cognitive effort expended, measured as the mean cumulative sum of model accesses performed. Error bars show the 2.5th and 97.5th percentiles.