Abstract

An adaptive treatment strategy (ATS) is an outcome-guided algorithm that allows personalized treatment of complex diseases based on patients’ disease status and treatment history. Conditions such as AIDS, depression, and cancer usually require several stages of treatment due to the chronic, multifactorial nature of illness progression and management. Sequential multiple assignment randomized (SMAR) designs permit simultaneous inference about multiple ATSs, where patients are sequentially randomized to treatments at different stages depending upon response status. The purpose of this article is to develop a sample size formula that ensures adequate power for comparing two or more ATSs. Based on a Wald-type statistic for comparing multiple ATSs with a continuous endpoint, we develop a sample size formula and show via simulation studies that it maintains the nominal power. The proposed formula does not apply to designs with time-to-event endpoints, but it will be useful to practitioners designing SMAR trials to compare adaptive treatment strategies.

Keywords: sample size, power, Sequential Multiple Assignment Randomized Trial (SMART), Adaptive Treatment Strategy (ATS)

1. Introduction

An adaptive treatment strategy (ATS) is an outcome-guided algorithm that allows personalized treatment [1] of complex diseases based on disease status (response, recurrence, remission, relapse) and intermediate treatment history. Complex diseases such as AIDS, depression, and cancer usually involve several stages of treatment due to dynamic disease progression. For instance, a patient with depression may benefit if she initiates treatment with citalopram (CIT). Depending on response, she may remain on CIT or switch to or add another medication or psychosocial treatment during the next phase of treatment [2]. In principle, a clinician monitors a depressed patient and decides on interventions at different time points based on the patient’s clinical status. Availability of multiple treatment options at each stage of treatment, various possibilities for the duration of each stage, and various responses that can be achieved through different stages of therapy could lead to a multitude of adaptive treatment strategies. Examples of treatment strategies for a patient with moderate depression include [2]:

Treat with CIT for 6–8 weeks; if response is not achieved with CIT, augment with cognitive behavioral therapy (CBT) for 8 weeks, otherwise continue with CIT for another 8 weeks.

Treat with CIT for 6–8 weeks; if response is not achieved with CIT, switch to CBT for 8 weeks, otherwise switch to BUS (buspirone) for another 8 weeks.

Treat with CIT for 6–8 weeks; if response is not achieved with CIT, switch to CBT for 8 weeks, otherwise switch to BUP-SR (bupropion sustained release) for another 8 weeks.

Treat with CIT for 6–8 weeks; if response is not achieved with CIT, switch to SERT (sertraline) for 8 weeks, otherwise switch to CBT for another 8 weeks.

ATSs are often compared via sequential multiple assignment randomized (SMAR) designs [3, 4, 5]. SMAR trials are useful for comparing ATSs because different ATSs can be tested within the same experimental design, and procedures for inference about ATSs from such trials are well established. However, design issues such as sample size and power have not been adequately addressed, perhaps because of the challenges posed by the adaptive and sequential nature of SMAR designs. Nevertheless, a few articles have addressed the development of sample size formulas for SMAR designs.

Murphy [5] provides a sample size formula to test the equality of two strategies that do not share the same initial treatment, so that data from the two groups of patients following these strategies are statistically independent. Feng and Wahed [6] constructed a sample size formula for censored survival outcomes to test the equality of point-wise survival probabilities under two ATSs that have the same initial treatment but different second-stage treatments. They also proposed another formula, based on a weighted log-rank test, for the equality of survival curves under two strategies with different initial treatments [7]. Recently, Li and Murphy [8] presented a sample size formula for survival data that relaxes the assumptions set forth by Feng and Wahed [6, 7]. Oetting et al. [9] establish four sample size formulas, of which only two are relevant to adaptive treatment strategies. One of them (referred to as #3 in their chapter) concerns testing the equality of a pair of strategy means. The other (referred to as #4 in their chapter) is developed with the goal of finding the best strategy, as opposed to testing hypotheses comparing multiple strategies. Dawson and Lavori [10] also devised a sample size formula for the nested structure of successive SMAR randomizations when the outcome is continuous, extending the usual t-test sample size formula to SMAR trials. Using a semi-parametric approach, their formula includes stage-specific variance inflation factors (VIFs) and marginal outcome variances. Because of the between-strategy covariance, one cannot compare a pair of strategy means that share the same initial treatment simply by pooling the VIFs and marginal outcome variances across stages. As a remedy, Dawson and Lavori [11] proposed a conservative approach that adjusts the sample size formula using the VIF.
The caveat with their approach is that it is difficult to apply: it involves cumbersome computation of all stage-specific VIFs and of the coefficients of determination from regressing the final outcome on previous states. A more recent simulation study by Ko and Wahed [13] examined the power for detecting differences between multiple strategy means for arbitrary sample sizes in a two-stage SMAR design.

Most of the work related to sample sizes in SMAR trials is either confined to two-strategy comparisons [3, 7] or requires assumptions about population parameters that are difficult to ascertain in multi-strategy comparison settings (e.g. VIFs and stage-specific variances). The goal of this paper is to provide sample size formulas for a variety of SMAR designs in order to test specific alternative hypotheses related to continuous outcomes. Specifically, we consider three SMAR designs that are being used in various disease areas. The parameters that need to be specified in advance correspond to well-defined subgroups in the patient population and are therefore relatively simple to specify. We verify the sample size formulas through simulation experiments.

2. Set-up

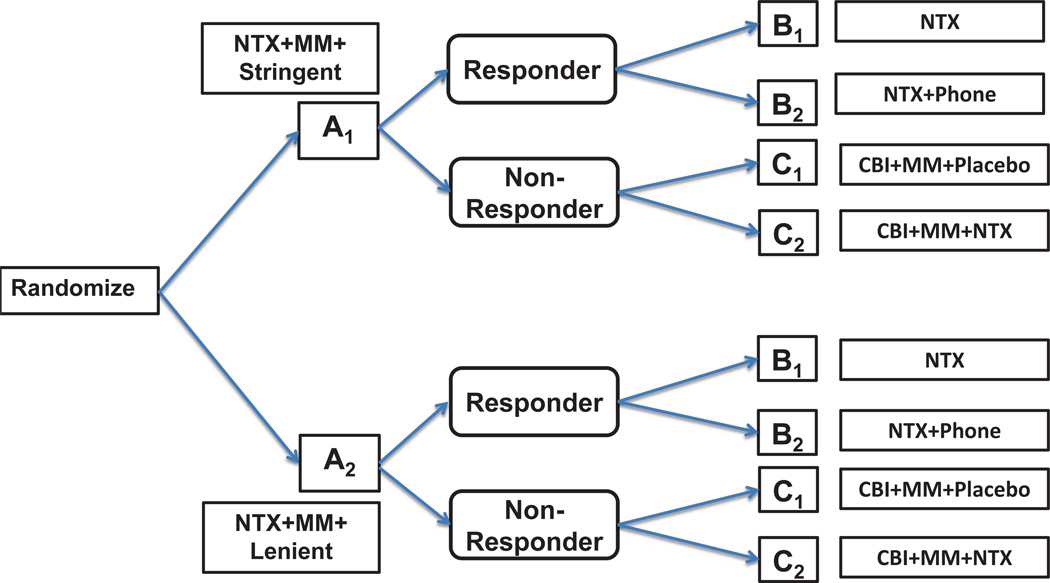

We consider three two-stage SMAR designs, displayed in Figures 1, 2 and 3. In the first design, n subjects are randomized to two initial treatments Aj, j = 1, 2. Subjects who respond to their initial treatment are then randomized to second-stage treatments Bk, k = 1, 2; non-responders are randomized to Cl, l = 1, 2.

Figure 1.

Design 1. At entry, patients are randomized to initial treatments A1 and A2. If a patient responds to the initial treatment she is randomized to either B1 or B2, otherwise the patient is randomized to either C1 or C2.

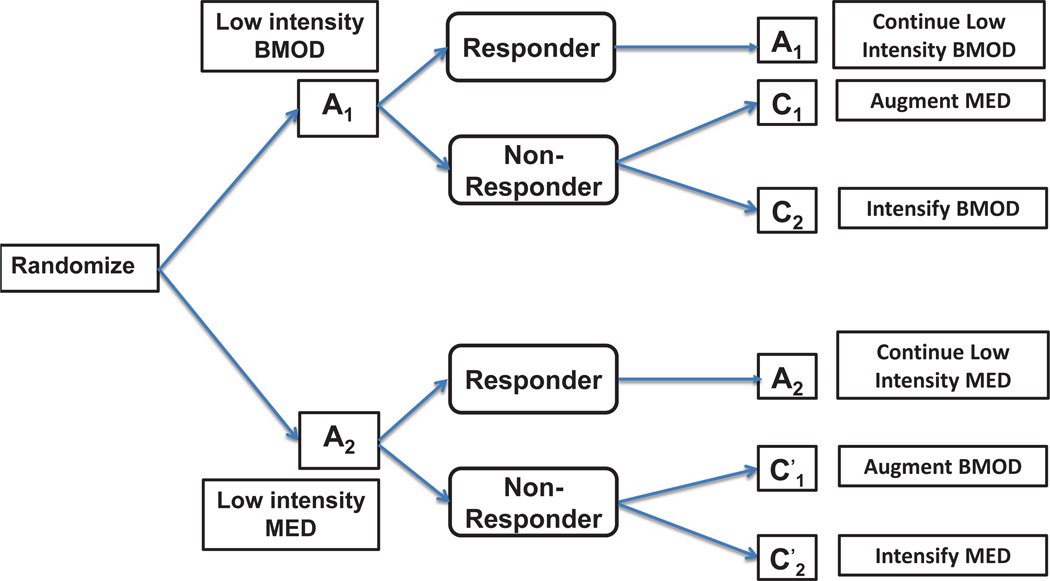

Figure 2.

Design 2. At entry, patients are randomized to initial treatments A1 and A2. If a patient responds to the initial treatment she stays on the same initial treatment; otherwise the patient is re-randomized to subsequent treatments: C1 or C2 if she does not respond to A1, or C′1 or C′2 if she does not respond to A2.

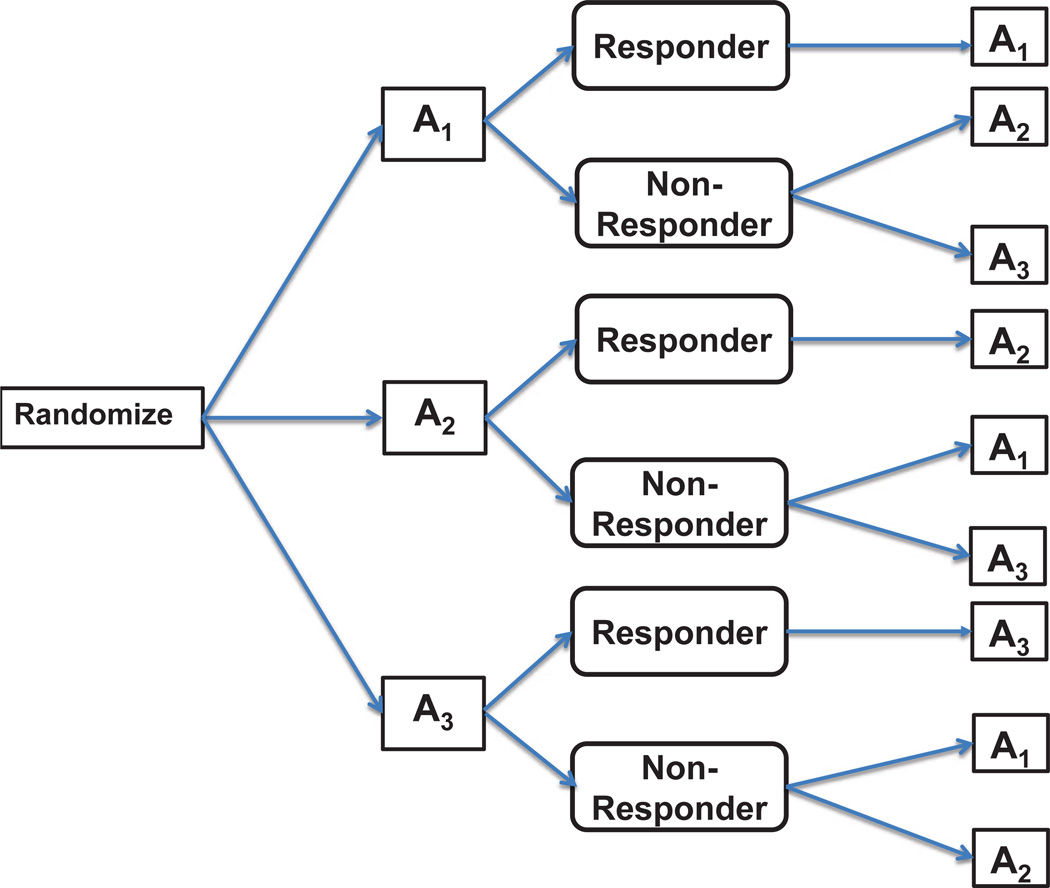

Figure 3.

Design 3. At entry, patients are randomized to initial treatments A1, A2 and A3. If a patient responds to the initial treatment she stays on the same initial treatment, otherwise the patient is re-randomized to subsequent treatments: A2 or A3 if she does not respond to A1; A1 or A3 if she does not respond to A2; A1 or A2 if she does not respond to A3.

We use the design of Lei et al. [1] for alcohol-dependence interventions as an example to explain the first design (Figure 1). All patients receive “NTX+MM” as their initial treatment (NTX = naltrexone, MM = medical management). Patients are then randomized to two groups according to how the intermediate response to “NTX+MM” is ascertained. In one group, referred to as A1, the response criterion is stringent (5+ days of heavy drinking), whereas in the other group, hereafter referred to as A2, the criterion is lenient (2+ days of heavy drinking). Following eight weeks of treatment, participants are randomized to second-line treatments depending on their response status. Responders were re-randomized to one of two maintenance treatments, “NTX” (B1) or “NTX+Phone” (B2); non-responders were re-randomized to either “CBI+MM+Placebo” (C1) or “CBI+MM+NTX” (C2), where CBI = combined behavioral intervention. At the end of the study, the primary outcome (defined as the “percent of heavy drinking days” over the last two months of the study) was obtained.

The above design allows inference about eight possible ATSs, namely AjBkCl, j, k, l = 1, 2, where AjBkCl stands for “treat with Aj, followed by Bk if the patient responds or by Cl if not”. For example, one might want to test the equality of all strategy means, H0 : μ111 = μ112 = μ121 = μ122 = μ211 = μ212 = μ221 = μ222, where μjkl is the mean response under strategy AjBkCl, j, k, l = 1, 2, against the alternative that at least one pair differs. Testing the equality of any combination of treatment strategies (e.g. pairwise comparisons) may also be of interest. In the sequel we consider the sample sizes required to test a variety of treatment strategy comparisons with adequate statistical power.

The second design was used by Pelham et al. [14] in an Attention Deficit Hyperactivity Disorder (ADHD) clinical trial (Figure 2). This trial treated children with ADHD using behavioral and pharmacological interventions during stage 1. In the first stage, participants were randomized to low intensity “behavioral modification (low intensity BMOD)” (A1) or low intensity “psychostimulant drug (low intensity MED)” (A2). Behavioral modification consists of school-based, weekend and at-home activity sessions. A child’s response to the first-line treatment is assessed using the Impairment Rating Scale (IRS) and an individualized list of target behaviors (ITB). The IRS is a comprehensive measure of improvement in social performance, while the ITB is a child-specific monitor of social performance. IRS and ITB are “tailoring” variables that determine response status and randomization to the second-stage treatments. Based on these tailoring variables, participants who responded to the first-stage treatment remained on the same treatment, whereas non-responders were re-randomized. Children who did not respond to low intensity BMOD (A1) were re-randomized to either intensified BMOD (C1) or BMOD augmented with MED (C2). Children who did not respond to low intensity MED (A2) were re-randomized to either intensified MED (C′1) or MED augmented with BMOD (C′2).

Thus, if a patient responds to A1 she stays on A1; otherwise she is randomized to C1 or C2. Similarly, if a patient responds to A2 she stays on A2; otherwise she is randomized to either C′1 or C′2. Formally, there are four possible treatment strategies for this design, namely A1C1, A1C2, A2C′1 and A2C′2, where, for example, A1C1 stands for “treat with A1; if there is no response to A1, treat with C1”. It might be of interest to test the equality of all four strategy means, H0 : μ11 = μ12 = μ21 = μ22, where μ1l and μ2l are the mean responses for the populations following strategies A1Cl and A2C′l, respectively, for l = 1, 2.

The third design considered is described in Thall et al. [16] (Figure 3). Patients received one of three initial treatments, A1, A2 or A3, at the first randomization. If a patient initially assigned to A1 responded, she would remain on A1 during the second stage; otherwise she would be randomized to A2 or A3. Similarly, a patient who responded to initial treatment A2 would continue A2 in the second stage and would otherwise be randomized to A1 or A3, and patients not responding to A3 would be re-randomized to A1 or A2. The six possible strategies for Design 3 are AjAl, j, l = 1, 2, 3, j ≠ l, where AjAl is defined as “treat with Aj, followed by Al if the patient does not respond”. The null hypothesis of equal strategy means is H0 : μ12 = μ13 = μ21 = μ23 = μ31 = μ32, where μjl is the mean response under strategy AjAl, j ≠ l, j, l = 1, 2, 3.
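As a quick check on the bookkeeping, the strategies of the three designs can be enumerated programmatically. The Python sketch below lists them and counts the pairwise comparisons they imply (28, 6 and 15 for Designs 1, 2 and 3, the counts used for the pairwise analyses later on). The string labels are illustrative only; in particular, C'1 and C'2 are hypothetical names for the second-stage options offered to A2 non-responders in Design 2.

```python
from itertools import combinations, product


def design1_strategies():
    """Design 1: A_j, then B_k for responders or C_l for non-responders."""
    return [f"A{j}B{k}C{l}" for j, k, l in product((1, 2), repeat=3)]


def design2_strategies():
    """Design 2: responders stay on A_j; non-responders get one of two options.

    C'1 and C'2 are hypothetical labels for the A2 non-responder options.
    """
    return ["A1C1", "A1C2", "A2C'1", "A2C'2"]


def design3_strategies():
    """Design 3: A_j, then a different A_l (l != j) for non-responders."""
    return [f"A{j}A{l}" for j, l in product((1, 2, 3), repeat=2) if j != l]


for name, strategies in [("Design 1", design1_strategies()),
                         ("Design 2", design2_strategies()),
                         ("Design 3", design3_strategies())]:
    n_pairs = len(list(combinations(strategies, 2)))
    print(f"{name}: {len(strategies)} strategies, {n_pairs} pairwise comparisons")
```

The ordering produced by `product` (A1B1C1, A1B1C2, ...) matches the ordering of the mean vector μ used for Design 1.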

For all three designs, we develop a sample size formula to detect meaningful differences between strategy means. The derivation and discussion of the sample size and variance formulas in Section 3 are based on Design 1; the formulas apply to Designs 2 and 3 with slight adjustments, as outlined in Section 4.

3. Comparing Multiple Treatment Strategies

The goal of this paper is to derive a sample size formula for a test that detects differences in strategy means from SMAR designs with a continuous endpoint. To this end, we introduce the following notation. Let Rj be the counterfactual response indicator for Aj, j = 1, 2 (Rj = 1 if the individual would respond to Aj, 0 otherwise); let Y(AjBk) be the counterfactual outcome of an individual had he/she received Aj, responded, and then received Bk; similarly, let Y(AjCl) be the counterfactual outcome had he/she received Aj, not responded, and then received Cl. Based on these three counterfactual quantities, the outcome under strategy AjBkCl, Y(AjBkCl), can be written as

$$Y(A_jB_kC_l) = R_j\,Y(A_jB_k) + (1 - R_j)\,Y(A_jC_l). \qquad (1)$$

To clarify the distinction between observed and unobserved quantities, consider a patient who received A1, responded, and then received B1: for this patient {R2, Y(A1B2), Y(A2B1), Y(A2B2)} are all unobservable, and only Y(A1B1) is observed (see the consistency assumption below). As described in Section 2, we are interested in estimating μjkl = E{Y(AjBkCl)}. Conditioning on Rj, μjkl can be expressed as

$$\mu_{jkl} = \pi_j\,\mu_{A_jB_k} + (1 - \pi_j)\,\mu_{A_jC_l}, \qquad (2)$$

where πj is the response rate to the first-stage treatment Aj, μAjBk = E{Y(AjBk)} is the subgroup mean of the population receiving Aj followed by Bk, and μAjCl = E{Y(AjCl)} is the subgroup mean of the population receiving Aj followed by Cl. Our sample size formula is based on Wald-type test statistics, so we need an estimator of the strategy means together with its variance and covariance expressions. We rely on normalized inverse probability weighting (IPWN; Ko and Wahed, 2012) to construct consistent estimators of the strategy means. Although this paper focuses on a continuous endpoint, the formulas developed apply equally to designs with a binary endpoint.
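Equation (2) is a response-rate-weighted average of two subgroup means. A minimal Python sketch, assuming the subgroup means listed in the Table 1 caption (μAjB1 = μAjC2 = 15, μAjC1 = 20, μAjB2 = 22) and the response rates of Table 1, Scenario 2 (π1 = 0.2, π2 = 0.5); the function names are hypothetical:

```python
def strategy_mean(pi_j, mu_resp, mu_nonresp):
    """Equation (2): mu_jkl = pi_j * mu_{AjBk} + (1 - pi_j) * mu_{AjCl}."""
    return pi_j * mu_resp + (1.0 - pi_j) * mu_nonresp


def design1_means(pi, mu_B, mu_C):
    """All eight Design 1 strategy means, keyed by (j, k, l).

    pi[j]      : response rate to A_j
    mu_B[j][k] : mean of Y(A_j B_k) among responders
    mu_C[j][l] : mean of Y(A_j C_l) among non-responders
    Keys are 1-based to match the A_j, B_k, C_l labels in the text.
    """
    return {(j, k, l): strategy_mean(pi[j], mu_B[j][k], mu_C[j][l])
            for j in (1, 2) for k in (1, 2) for l in (1, 2)}


mu_B = {1: {1: 15.0, 2: 22.0}, 2: {1: 15.0, 2: 22.0}}
mu_C = {1: {1: 20.0, 2: 15.0}, 2: {1: 20.0, 2: 15.0}}
means = design1_means({1: 0.2, 2: 0.5}, mu_B, mu_C)
for key, value in sorted(means.items()):
    print("mu_%d%d%d = %.1f" % (*key, value))
```

The printed values can be compared with the Scenario 2 strategy means listed under Table 1.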

Consider Design 1 described in Section 2 (Figure 1). In contrast to the counterfactual variables defined above, the observed data for this design consist of n i.i.d. (independent and identically distributed) patient records with the following components: Xji = 1 if the ith patient is randomized to Aj and 0 otherwise; Yi is the observed outcome for the ith patient; Ri is the indicator for initial response, with Ri = 1 if the ith patient responded to initial therapy and 0 otherwise; Zki is the indicator for receiving Bk, i.e. Zki = 1 if subject i is randomized to receive Bk after responding to the first-stage treatment and 0 otherwise; and an analogous indicator records whether a non-responder is randomized to receive Cl. We make the usual assumptions of causal inference to construct consistent estimators for μjkl [15]. They are:

- A1. Consistency: A patient’s counterfactual outcome under the observed intervention (exposure) agrees with the observed outcome. In the SMAR trial considered here,

$$R_i = X_{1i}R_{1i} + X_{2i}R_{2i} \qquad (3)$$

and

$$Y_i = \sum_{j=1}^{2} X_{ji}\Big[R_i\sum_{k=1}^{2} Z_{ki}\,Y_i(A_jB_k) + (1-R_i)\sum_{l=1}^{2}\mathbb{1}\{\text{subject } i \text{ is randomized to } C_l\}\,Y_i(A_jC_l)\Big], \qquad (4)$$

where R1i and R2i are indicators for counterfactual response to A1 and A2, respectively. The consistency assumption (CA) allows us to connect counterfactual and observed data.

- A2. Sequential Randomization Assumption: The probability of a particular treatment allocation at stage k does not depend on the counterfactual outcomes, given the observed data up to but not including the stage k randomization. This assumption holds because treatments are assigned randomly at each stage.

- A3. Positivity: There is a non-zero probability of receiving any level of intervention for every combination of values of interventions.

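Under these assumptions, the normalized inverse probability weighted (IPWN) estimator of the strategy mean μjkl is given by

$$\hat\mu_{jkl} = \frac{\sum_{i=1}^{n} W_{jkli}\,Y_i}{\sum_{i=1}^{n} W_{jkli}}, \qquad (5)$$

where $W_{jkli} = X_{ji}\big[R_iZ_{ki}/P_k + (1-R_i)\,\mathbb{1}\{\text{subject } i \text{ is randomized to } C_l\}/Q_l\big]$, Xji is the assignment indicator for first-stage treatment Aj, and Pk and Ql are the probabilities of second-stage treatment assignment for responders and non-responders, respectively.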
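To make the weighting in Equation (5) concrete, here is a small Python sketch on a hand-built data set. The `Record` layout (arm, response, second-stage option, outcome) is a hypothetical representation chosen for illustration; the weight follows W = Xj[R·1{Bk}/Pk + (1 − R)·1{Cl}/Ql], so only patients whose observed path is consistent with strategy AjBkCl contribute.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Record:
    """One patient in a Design 1 trial (hypothetical record layout)."""
    arm: int         # j such that X_ji = 1
    responded: bool  # R_i
    second: int      # k if the patient responded (B_k), l otherwise (C_l)
    y: float         # observed outcome Y_i


def ipwn_estimate(data: List[Record], j: int, k: int, l: int,
                  P: Dict[int, float], Q: Dict[int, float]) -> Optional[float]:
    """Normalized IPW estimate of mu_jkl in the form of Equation (5).

    Returns None when no patient is consistent with strategy A_j B_k C_l.
    """
    num = den = 0.0
    for rec in data:
        if rec.arm != j:
            continue  # X_ji = 0, so W_jkli = 0
        if rec.responded:
            w = 1.0 / P[k] if rec.second == k else 0.0
        else:
            w = 1.0 / Q[l] if rec.second == l else 0.0
        num += w * rec.y
        den += w
    return num / den if den > 0 else None


data = [
    Record(arm=1, responded=True, second=1, y=10.0),   # A1, responder, B1
    Record(arm=1, responded=False, second=1, y=20.0),  # A1, non-responder, C1
    Record(arm=1, responded=True, second=2, y=100.0),  # A1, responder, B2
    Record(arm=2, responded=True, second=1, y=50.0),   # A2, responder, B1
]
half = {1: 0.5, 2: 0.5}
print(ipwn_estimate(data, 1, 1, 1, half, half))  # uses the first two records only
```

Dividing by the sum of the weights rather than by n is what makes the estimator "normalized": with unequal Pk or Ql the responder and non-responder contributions are rebalanced automatically.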

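Estimator (5) is similar to that in Ko and Wahed (2012) (Section 3.3), except that it treats the Stage 1 group sample sizes as random rather than fixed. This is more reasonable because the Stage 1 group sizes are determined through randomization. The IPWN estimator μ̂jkl defined in Equation (5) is consistent and asymptotically normal, as we now show. We can write

$$\hat\mu_{jkl} - \mu_{jkl} = \frac{n^{-1}\sum_{i=1}^{n} W_{jkli}\,(Y_i - \mu_{jkl})}{n^{-1}\sum_{i=1}^{n} W_{jkli}}.$$

By the weak law of large numbers, $n^{-1}\sum_{i=1}^{n} W_{jkli} \xrightarrow{p} \kappa_j^{-1}$, where κj is the inverse of the randomization probability to Aj (i.e., $\kappa_j = 1/\Pr(X_{ji} = 1)$). This follows from the fact that the Wjkli’s are i.i.d. random variables with expectation $E(W_{jkli}) = \kappa_j^{-1}$. Also, by the central limit theorem, $n^{-1/2}\sum_{i=1}^{n} W_{jkli}(Y_i - \mu_{jkl}) \xrightarrow{d} N(0, \sigma^2_{jkl}/\kappa_j^2)$, where $\sigma^2_{jkl}$ is given in Equation (7) below. Therefore, by Slutsky’s theorem, $\sqrt{n}(\hat\mu_{jkl} - \mu_{jkl})$ is asymptotically equivalent in distribution to $\kappa_j\, n^{-1/2}\sum_{i=1}^{n} W_{jkli}(Y_i - \mu_{jkl})$, which is asymptotically distributed as $N(0, \sigma^2_{jkl})$. It can also be shown that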

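$$\sqrt{n}\,(\hat\mu_{jkl} - \mu_{jkl}) = \frac{1}{\sqrt{n}}\sum_{i=1}^{n}\psi_{jkli} + o_p(1), \qquad (6)$$

where ψjkli = κjWjkli(Yi − μjkl) is the influence function of the estimator and op(1) is a term that converges to zero in probability. Therefore, the asymptotic variance of $\sqrt{n}\,\hat\mu_{jkl}$ is

$$\sigma^2_{jkl} = \kappa_j\left[\frac{\pi_j}{P_k}\left\{\sigma^2_{A_jB_k} + (\mu_{A_jB_k} - \mu_{jkl})^2\right\} + \frac{1 - \pi_j}{Q_l}\left\{\sigma^2_{A_jC_l} + (\mu_{A_jC_l} - \mu_{jkl})^2\right\}\right], \qquad (7)$$

where $\sigma^2_{A_jB_k}$ and $\sigma^2_{A_jC_l}$ are the variances of the outcome in the populations of patients who received the treatment sequences AjBk and AjCl, respectively, and μAjBk and μAjCl are defined as before. Details of the derivation of the variance of a strategy mean and of the covariance between two strategy means are given in Appendix B.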
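The variance expression is easy to evaluate once the subgroup parameters are specified. The Python sketch below assumes the form σ²jkl = κj[πj/Pk{σ²AjBk + (μAjBk − μjkl)²} + (1 − πj)/Ql{σ²AjCl + (μAjCl − μjkl)²}], which follows from the influence function ψjkli = κjWjkli(Yi − μjkl). The unit subgroup variances in the usage lines are assumptions for illustration only.

```python
def asymptotic_variance(kappa_j, pi_j, P_k, Q_l, mu_B, var_B, mu_C, var_C):
    """sigma^2_jkl, the asymptotic variance of sqrt(n) * mu_hat_jkl.

    kappa_j     : 1 / P(randomized to A_j)
    pi_j        : response rate to A_j
    P_k, Q_l    : second-stage randomization probabilities (responders, non-responders)
    mu_B, var_B : mean and variance of Y(A_j B_k) among responders
    mu_C, var_C : mean and variance of Y(A_j C_l) among non-responders
    """
    mu = pi_j * mu_B + (1.0 - pi_j) * mu_C  # strategy mean, Equation (2)
    responders = pi_j / P_k * (var_B + (mu_B - mu) ** 2)
    non_responders = (1.0 - pi_j) / Q_l * (var_C + (mu_C - mu) ** 2)
    return kappa_j * (responders + non_responders)


# Design 1, strategy A1B1C1: 1:1 randomization (kappa = 2), pi = P = Q = 0.5,
# subgroup means from the Table 1 caption, unit subgroup variances (assumed).
print(asymptotic_variance(2.0, 0.5, 0.5, 0.5, 15.0, 1.0, 20.0, 1.0))
# Design 2 analogue: responders are not re-randomized, so P_k = 1.
print(asymptotic_variance(2.0, 0.5, 1.0, 0.5, 15.0, 1.0, 20.0, 1.0))
```

Note that the squared deviations of the subgroup means from the strategy mean contribute to the variance even when the within-subgroup variances are small, so strategies whose responder and non-responder paths differ sharply need more patients.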

Overall Sample Size

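The hypothesis of interest is whether the strategy means differ. The null hypothesis H0 : μ111 = μ112 = μ121 = μ122 = μ211 = μ212 = μ221 = μ222 can be written as the linear hypothesis H0 : Cμ = 0, where

$$C = \begin{pmatrix} 1 & -1 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 1 & -1 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 1 & -1 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 & -1 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 1 & -1 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 1 & -1 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 1 & -1 \end{pmatrix}$$

(any 7 × 8 matrix of full row rank whose rows each sum to zero yields the same test statistic) and μ = [μ111, μ112, μ121, μ122, μ211, μ212, μ221, μ222]T. Under the null hypothesis, the statistic nμ̂TCT[CΣ̂CT]−1Cμ̂ asymptotically follows a central chi-square distribution with 7 degrees of freedom, the number of rows of the contrast matrix C. Here μ̂ and Σ̂ denote the estimated mean vector and covariance matrix,

$$\hat\mu = [\hat\mu_{111}, \hat\mu_{112}, \hat\mu_{121}, \hat\mu_{122}, \hat\mu_{211}, \hat\mu_{212}, \hat\mu_{221}, \hat\mu_{222}]^T, \qquad \hat\Sigma = \big[\hat\sigma_{jkl,j'k'l'}\big]_{8\times 8},$$

where μ̂jkl is defined in Equation (5), and each diagonal element σ̂²jkl is obtained by substituting estimates of the parameters on the RHS of Equation (7); the off-diagonal covariances are estimated analogously from the expressions in Appendix B. For example,

$$\hat\sigma^2_{111} = \kappa_1\left[\frac{\hat\pi_1}{P_1}\left\{\hat\sigma^2_{A_1B_1} + (\hat\mu_{A_1B_1} - \hat\mu_{111})^2\right\} + \frac{1 - \hat\pi_1}{Q_1}\left\{\hat\sigma^2_{A_1C_1} + (\hat\mu_{A_1C_1} - \hat\mu_{111})^2\right\}\right],$$

where π̂1 is the observed response rate among patients randomized to A1, and μ̂A1B1, σ̂²A1B1 and μ̂A1C1, σ̂²A1C1 are the sample means and variances of the outcome among patients who received A1 followed by B1 and A1 followed by C1, respectively.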

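Under the alternative hypothesis, the test statistic approximately follows a non-central chi-square distribution with the same degrees of freedom and non-centrality parameter

$$\lambda = n\,\mu^T C^T \left(C\Sigma C^T\right)^{-1} C\mu,$$

where μ and Σ are the true mean vector and covariance matrix. A straightforward rearrangement then gives the sample size formula

$$n = \frac{\lambda}{\mu^T C^T \left(C\Sigma C^T\right)^{-1} C\mu}. \qquad (8)$$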
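Equation (8) only requires the quadratic form μTCT(CΣCT)−1Cμ, which the tables later report as the effect size. A dependency-free Python sketch, assuming a successive-differences contrast matrix and a small hand-rolled linear solver (both illustrative choices, not prescribed by the formula):

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def successive_contrasts(m):
    """(m - 1) x m contrast matrix whose rows are e_r - e_(r+1)."""
    return [[1.0 if c == r else -1.0 if c == r + 1 else 0.0 for c in range(m)]
            for r in range(m - 1)]


def effect_size(mu, Sigma, C):
    """Quadratic form mu' C' (C Sigma C')^{-1} C mu (denominator of Equation (8))."""
    m = len(mu)
    Cmu = [sum(C[r][a] * mu[a] for a in range(m)) for r in range(len(C))]
    CS = [[sum(C[r][a] * Sigma[a][b] for a in range(m)) for b in range(m)]
          for r in range(len(C))]
    CSCt = [[sum(CS[r][b] * C[s][b] for b in range(m)) for s in range(len(C))]
            for r in range(len(C))]
    return sum(u * v for u, v in zip(Cmu, solve(CSCt, Cmu)))


def sample_size(lam, mu, Sigma, C):
    """Equation (8), rounded up to the next whole patient."""
    return math.ceil(lam / effect_size(mu, Sigma, C))


# Toy check: two independent strategies with unit variances and a mean gap of 1.
identity = [[1.0, 0.0], [0.0, 1.0]]
print(effect_size([1.0, 0.0], identity, successive_contrasts(2)))
print(sample_size(7.85, [1.0, 0.0], identity, successive_contrasts(2)))
```

With the effect sizes reported in Table 1, the same arithmetic gives ⌈14.35/0.206⌉ = 70, the sample size in the first row of Scenario 1. Because the quadratic form is invariant to the choice of full-rank contrast matrix, `successive_contrasts` can be swapped for any other valid C.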

To use the sample size formula in Equation (8) for a given power, note that the power of the Wald test is the probability of rejecting the null hypothesis, i.e., the probability that the test statistic exceeds the critical value. Thus,

$$1 - \beta = \Pr\left\{\chi^2_7(\lambda) > \chi^2_{7,1-\alpha}\right\}, \qquad (9)$$

where χ²7(λ) denotes a non-central chi-square random variable with 7 degrees of freedom and non-centrality parameter λ, α is the significance level of the test, and χ²7,1−α is the 100(1 − α)th percentile of the central χ² distribution with 7 degrees of freedom. For a given power and α, we can solve Equation (9) for λ. Having obtained λ, the sample size needed to achieve the given power is obtained by plugging the strategy means under the alternative hypothesis and their assumed variance-covariance matrix into Equation (8).
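Solving Equation (9) for λ only needs the central and non-central chi-square distribution functions. The sketch below avoids external libraries by writing the non-central chi-square as a Poisson(λ/2) mixture of central chi-squares and using the series for the regularized lower incomplete gamma function; bisection is an illustrative choice of root finder. In practice a statistics library (for example SciPy's `ncx2`) would normally be used instead.

```python
import math


def chi2_cdf(x, df):
    """Central chi-square CDF via the series for the regularized lower incomplete gamma."""
    if x <= 0:
        return 0.0
    a, z = df / 2.0, x / 2.0
    term = math.exp(a * math.log(z) - z - math.lgamma(a + 1.0))  # n = 0 term
    total, n = term, 0
    while term > 1e-15 * total:
        n += 1
        term *= z / (a + n)
        total += term
    return min(total, 1.0)


def ncx2_sf(x, df, lam, tol=1e-12):
    """P(chi2_df(lam) > x), written as a Poisson(lam/2) mixture of central chi-squares."""
    half = lam / 2.0
    weight = math.exp(-half)  # Poisson probability at i = 0
    cdf, used, i = 0.0, 0.0, 0
    while used < 1.0 - tol and i < 1000:
        cdf += weight * chi2_cdf(x, df + 2 * i)
        used += weight
        i += 1
        weight *= half / i
    return 1.0 - cdf


def bisect(f, lo, hi, iters=200):
    """Root of an increasing function f on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def chi2_quantile(p, df):
    """Inverse of the central chi-square CDF, found by bisection."""
    return bisect(lambda x: chi2_cdf(x, df) - p, 0.0, 200.0)


def noncentrality_for_power(df, alpha=0.05, power=0.80):
    """Solve Equation (9), P{chi2_df(lam) > chi2_{df,1-alpha}} = power, for lam."""
    crit = chi2_quantile(1.0 - alpha, df)
    return bisect(lambda lam: ncx2_sf(crit, df, lam) - power, 0.0, 100.0)


print(round(chi2_quantile(0.95, 7), 3))      # critical value with 7 df
print(round(noncentrality_for_power(7), 2))  # compare with lambda = 14.35 in the text
```

For one degree of freedom the solution should reduce to the familiar (z0.975 + z0.80)² ≈ 7.85, which is a convenient sanity check of the mixture representation.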

Knowledge of the subgroup means and variances in the population allows computation of the covariance terms. Suppose that the investigator wants to compare eight treatment strategies by testing the null hypothesis H0 : μ111 = μ112 = μ121 = μ122 = μ211 = μ212 = μ221 = μ222 against the alternative that at least one pair is different. From prior knowledge in the research area, the investigator expects patients who receive A1 or A2 and then either respond and receive B1 or do not respond and receive C2 to have mean response 15 (μA1B1 = μA2B1 = μA1C2 = μA2C2 = 15), non-responders who receive C1 to have mean response μA1C1 = μA2C1 = 20, and responders who receive B2 to have mean response μA1B2 = μA2B2 = 22. The variation of responses within these groups is expected to be and , j, k, l = 1, 2.

Then, assuming a 50% response rate in both the A1 and A2 arms (π1 = π2 = 0.5) and equal randomization probabilities (P1 = P2 = Q1 = Q2 = 0.5), we obtain μ111 = π1μA1B1 + (1 − π1)μA1C1 = 17.5, μ112 = π1μA1B1 + (1 − π1)μA1C2 = 15.0, μ121 = π1μA1B2 + (1 − π1)μA1C1 = 21.0, μ122 = π1μA1B2 + (1 − π1)μA1C2 = 18.5, μ211 = π2μA2B1 + (1 − π2)μA2C1 = 17.5, μ212 = π2μA2B1 + (1 − π2)μA2C2 = 15.0, μ221 = π2μA2B2 + (1 − π2)μA2C1 = 21.0 and μ222 = π2μA2B2 + (1 − π2)μA2C2 = 18.5; the entries of Σ then follow from Equation (7) and the covariance expressions in Appendix B.

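Using the contrast matrix C defined above, we then compute Cμ, CΣCT and the quadratic form μTCT(CΣCT)−1Cμ.

Now, if the investigator wants to power the study at 80% with α = 0.05, we solve

$$\Pr\left\{\chi^2_7(\lambda) > \chi^2_{7,0.95}\right\} = 0.80, \qquad \chi^2_{7,0.95} \approx 14.07,$$

to obtain λ = 14.35. The required sample size is then n = 14.35/{μTCT(CΣCT)−1Cμ}, rounded up to the next integer.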

4. Powering Pairwise Comparisons

Above we developed a sample size formula for a global test that provides evidence that at least one pair of strategy means differs. It is then natural to focus on pairwise comparisons and ask which strategy means differ. A popular two-sample test is the t-test; however, the usual t-test sample size formula does not apply directly because the estimated strategy means are not independent. When strategies share a first-stage treatment, a pairwise comparison should account for the between-strategy covariance in the traditional t-test-based sample size formula. Suppose we want the sample size for a test that rejects a false null hypothesis at a pre-specified significance level (α) with a given power. For instance, Design 1 has 8 strategies and hence 28 pairwise comparisons. A generic pairwise comparison is

$$H_0: \mu_{jkl} = \mu_{j'k'l'} \quad \text{versus} \quad H_1: \mu_{jkl} \neq \mu_{j'k'l'}.$$

Each test requires a different sample size to detect the corresponding pairwise difference. To control the type I error, a Bonferroni correction can be used: for two-sided tests, the significance level for each hypothesis is α/g, where g is the total number of pairwise comparisons. We compute the sample size for each pairwise comparison and then select the maximum, which powers every test to identify differences between strategy means. The sample size formula that accounts for the dependence among strategy means is

$$n = \frac{\left(z_{1-\alpha/(2g)} + z_{1-\beta}\right)^2\left(\sigma^2_{jkl} + \sigma^2_{j'k'l'} - 2\sigma_{jkl,j'k'l'}\right)}{\left(\mu_{jkl} - \mu_{j'k'l'}\right)^2}, \qquad (10)$$

where zp denotes the pth quantile of the standard normal distribution; σ²jkl, σ²j′k′l′ and σjkl,j′k′l′ are obtained using Equations (7) and (11); and μjkl and μj′k′l′ are the strategy means under the alternative hypothesis. When the two strategies do not share the same initial treatment, the between-strategy covariance is zero and formula (10) reduces to the one for an independent two-sample test.
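A Python sketch of the pairwise calculation, assuming the normal-quantile form of Equation (10) written above and using the standard-library `statistics.NormalDist`. The helper names are hypothetical. The ratios printed at the end compare Bonferroni multipliers for different numbers of comparisons; they appear consistent with the ratios between the adjusted and unadjusted columns of Tables 4 and 5 (e.g. 532/345 and 941/690).

```python
import math
from statistics import NormalDist


def bonferroni_multiplier(alpha=0.05, power=0.80, g=1):
    """(z_{1 - alpha/(2g)} + z_{1 - beta})^2 for a two-sided Bonferroni test."""
    z = NormalDist().inv_cdf
    return (z(1.0 - alpha / (2 * g)) + z(power)) ** 2


def pairwise_sample_size(delta, var1, var2, cov12, alpha=0.05, power=0.80, g=1):
    """Sample size for one pairwise comparison, following Equation (10).

    delta      : mu_jkl - mu_j'k'l' under the alternative
    var1, var2 : asymptotic variances sigma^2_jkl and sigma^2_j'k'l'
    cov12      : asymptotic covariance (0 when the initial treatments differ)
    g          : number of comparisons covered by the Bonferroni correction
    """
    var_diff = var1 + var2 - 2.0 * cov12
    return math.ceil(bonferroni_multiplier(alpha, power, g) * var_diff / delta ** 2)


# Inflation from correcting for all 6 comparisons in Design 2 (compare Table 4).
print(bonferroni_multiplier(g=6) / bonferroni_multiplier(g=1))
# Inflation for all 15 comparisons vs. 3 of interest in Design 3 (compare Table 5).
print(bonferroni_multiplier(g=15) / bonferroni_multiplier(g=3))
```

A positive covariance between strategies that share an initial treatment shrinks the variance of the difference, so ignoring it (as an independent two-sample formula would) overstates the required sample size for such pairs.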

Equation (10) has a more general use than it might first appear. For example, suppose that before designing the trial, researchers focus on g1 ≤ g specific pairwise comparisons. Then the sample size can be calculated using a significance level of α/g1 for each of these g1 comparisons. Since the variance-covariance formula depends on the randomization probabilities, the researcher could also choose randomization probabilities that allocate more observations to the strategies of interest. The other (g − g1) pairwise comparisons would remain unpowered but could still provide valuable information for future studies.

The methods described so far (in Section 3 and above) are explained via Design 1; however, the formulas also apply to Designs 2 and 3. For example, in Design 2 there is no second-stage randomization for responders, so we make the following simple modifications. Let Y(Aj) be the counterfactual outcome for those who received Aj and responded, and let μAj and σ²Aj be the mean and variance of Y(Aj), j = 1, 2. As mentioned in Section 2, there are only four treatment strategies here, namely A1C1, A1C2, A2C′1 and A2C′2. Therefore, the mean vector is μ = (μ11, μ12, μ21, μ22)T where, for example, μ11 = π1μA1 + (1 − π1)μA1C1. Here μA1C1 denotes the mean of the population who receive A1 as the initial treatment and C1 as the second-stage treatment. Similarly, the covariance matrix is

$$\Sigma = \begin{pmatrix} \sigma^2_{11} & \sigma_{11,12} & 0 & 0\\ \sigma_{11,12} & \sigma^2_{12} & 0 & 0\\ 0 & 0 & \sigma^2_{21} & \sigma_{21,22}\\ 0 & 0 & \sigma_{21,22} & \sigma^2_{22} \end{pmatrix},$$

where σ²jl is the asymptotic variance of √n μ̂jl, σj1,j2 is the asymptotic covariance between the two strategies that share the initial treatment Aj, and strategies that start with different initial treatments have zero covariance.

These formulas are obtained from the variance and covariance formulas for Design 1. For example, σ²11 is the same as the RHS of Equation (7) with j = 1, k = 1, l = 1, P1 = 1, μA1B1 = μA1 and σ²A1B1 = σ²A1. The required sample size for testing the null hypothesis H0: μ11 = μ12 = μ21 = μ22 at level α with power 1 − β, against an alternative specified by the subgroup means μA1, μA1C1, μA1C2, μA2, μA2C′1 and μA2C′2, is then given by formula (8) with

$$C = \begin{pmatrix} 1 & -1 & 0 & 0\\ 0 & 1 & -1 & 0\\ 0 & 0 & 1 & -1 \end{pmatrix},$$

and λ, the non-centrality parameter, given by the solution to the equation

$$\Pr\left\{\chi^2_3(\lambda) > \chi^2_{3,1-\alpha}\right\} = 1 - \beta.$$

Appropriate modifications can be used for Design 3 in a similar manner.
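For Design 2 the only change to the strategy means is that the responder subgroup mean becomes μAj. A short Python sketch, assuming the Table 2 subgroup means and, for the two second-stage options offered to A2 non-responders, the means 22 and 15 given in Section 5; the function name is hypothetical:

```python
def design2_means(pi1, pi2, mu_A1, mu_A2, mu_A1_non, mu_A2_non):
    """Strategy means (mu_11, mu_12, mu_21, mu_22) for Design 2.

    Responders stay on A_j, so mu_jl = pi_j * mu_Aj + (1 - pi_j) * m_jl, where
    m_jl is the mean of the l-th second-stage option for A_j non-responders.
    This is Equation (2) with the responder subgroup mean replaced by mu_Aj.
    """
    means = []
    for pi, mu_resp, non in ((pi1, mu_A1, mu_A1_non), (pi2, mu_A2, mu_A2_non)):
        means.extend(pi * mu_resp + (1.0 - pi) * m for m in non)
    return tuple(means)


scenario1 = design2_means(0.5, 0.5, 15.0, 17.0, (20.0, 15.0), (22.0, 15.0))
scenario4 = design2_means(0.7, 0.2, 15.0, 17.0, (20.0, 15.0), (22.0, 15.0))
print(scenario1)
print(scenario4)
print(len(scenario1) - 1, "degrees of freedom for the global test")
```

The outputs can be checked against the Scenario 1 and Scenario 4 means listed under Table 2; the global test for four strategies has three degrees of freedom, matching the three-row contrast matrix.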

5. Simulation Study and Results

To evaluate the performance of the overall sample size formula, we conducted simulations to check whether the empirical power for detecting the alternative hypothesis is close to the nominal power. Tables 1, 2 and 3 present four scenarios for each of the three designs, obtained by varying the nominal power, the response rates, and the probabilities of second-stage treatment assignment for responders (Pk) and non-responders (Ql). For each subject, Yi(AjBk) and Yi(AjCl) were generated from normal distributions with means μAjBk and μAjCl and variances σ²AjBk and σ²AjCl, respectively, for j, k, l = 1, 2. Correspondingly, in Designs 2 and 3, Yi(Aj) was generated for each individual from a normal distribution with mean μAj. The indicator Xji was generated from a Bernoulli distribution with probability 0.5. The second-stage assignment indicators Zki for responders and the corresponding indicators for receiving Cl among non-responders were generated from Bernoulli distributions with probabilities Pk and Ql, respectively. The response status Ri was generated from a Bernoulli distribution with probability (response rate) π1 for treatment A1, π2 for treatment A2 and, where applicable (Design 3), π3 for treatment A3. For Design 1, the outcome Yi was then generated using Equation (4). For Design 2, we used the same equation except that Yi(A1B1) and Yi(A2B1) are replaced by Yi(A1) and Yi(A2), respectively; a similar modification was made for Design 3. For each design and each scenario, we generated 10,000 Monte Carlo samples.
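The data-generating steps above can be sketched for Design 1 as follows. This is an illustrative Python sketch, not the code used for the reported simulations: it assumes a common outcome SD of 1 for every subgroup, and, because only the outcome on the path actually received is observed (Equation (4)), it draws that outcome directly rather than generating every counterfactual first, which gives the same distribution of the observed data.

```python
import random


def simulate_design1(n, pi, mu_B, mu_C, sd=1.0, P1=0.5, Q1=0.5, seed=None):
    """Generate one hypothetical Design 1 trial.

    pi[j], mu_B[j][k] and mu_C[j][l] use 1-based keys as in the text. A common
    outcome SD `sd` is assumed for every subgroup (illustrative only).
    Returns (arm, responded, second, y) tuples, where `second` is k or l.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        j = 1 if rng.random() < 0.5 else 2    # X_1i ~ Bernoulli(0.5)
        responded = rng.random() < pi[j]       # R_i ~ Bernoulli(pi_j)
        if responded:
            k = 1 if rng.random() < P1 else 2  # Z_1i ~ Bernoulli(P_1)
            y = rng.gauss(mu_B[j][k], sd)      # observed Y_i = Y_i(A_j B_k)
            data.append((j, True, k, y))
        else:
            l = 1 if rng.random() < Q1 else 2  # C_1 with probability Q_1
            y = rng.gauss(mu_C[j][l], sd)      # observed Y_i = Y_i(A_j C_l)
            data.append((j, False, l, y))
    return data


def ipwn(data, j, k, l, P, Q):
    """Normalized IPW estimate of mu_jkl (same weights as Equation (5))."""
    num = den = 0.0
    for arm, responded, second, y in data:
        if arm == j and second == (k if responded else l):
            w = 1.0 / (P[k] if responded else Q[l])
            num += w * y
            den += w
    return num / den


mu_B = {1: {1: 15.0, 2: 22.0}, 2: {1: 15.0, 2: 22.0}}
mu_C = {1: {1: 20.0, 2: 15.0}, 2: {1: 20.0, 2: 15.0}}
trial = simulate_design1(20000, {1: 0.5, 2: 0.5}, mu_B, mu_C, seed=2024)
half = {1: 0.5, 2: 0.5}
print(round(ipwn(trial, 1, 1, 1, half, half), 2))  # true mu_111 = 17.5
print(round(ipwn(trial, 2, 2, 1, half, half), 2))  # true mu_221 = 21.0
```

Repeating this generation many times at the sample size from Equation (8), and recording how often the Wald statistic exceeds the χ² critical value, yields the empirical power reported in the tables.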

Table 1.

Sample size computation and simulation of empirical power (# replications=10000) for Design 1 where Q1 = 0.5, subgroup means: μAjB1 = μAjC2 = 15, μAjC1 = 20, μAjB2 = 22; subgroup variances: , for j, k, l = 1, 2. Hypothesis of interest is H0 : μ111=μ112=μ121=μ122=μ211=μ212=μ221=μ222; α = 0.05.

| Scenario | π1 | π2 | P1 | Nominal power | Overall sample size | Empirical power | Effect size (Mahalanobis distance) |
|---|---|---|---|---|---|---|---|
| 1 | 0.5 | 0.5 | 0.5 | 0.8 | 70 | 0.84 | 0.206 |
|  | 0.5 | 0.5 | 0.7 | 0.8 | 79 | 0.85 | 0.182 |
|  | 0.5 | 0.5 | 0.5 | 0.9 | 89 | 0.92 | 0.206 |
|  | 0.5 | 0.5 | 0.8 | 0.9 | 120 | 0.92 | 0.152 |
| 2 | 0.2 | 0.5 | 0.5 | 0.8 | 83 | 0.82 | 0.172 |
|  | 0.2 | 0.5 | 0.7 | 0.8 | 92 | 0.83 | 0.156 |
|  | 0.2 | 0.5 | 0.5 | 0.9 | 106 | 0.9 | 0.172 |
|  | 0.2 | 0.5 | 0.8 | 0.9 | 134 | 0.92 | 0.136 |
| 3 | 0.7 | 0.5 | 0.5 | 0.8 | 62 | 0.85 | 0.231 |
|  | 0.7 | 0.5 | 0.7 | 0.8 | 71 | 0.85 | 0.201 |
|  | 0.7 | 0.5 | 0.5 | 0.9 | 79 | 0.92 | 0.231 |
|  | 0.7 | 0.5 | 0.7 | 0.9 | 91 | 0.92 | 0.201 |
| 4 | 0.2 | 0.7 | 0.5 | 0.8 | 72 | 0.84 | 0.198 |
|  | 0.2 | 0.7 | 0.7 | 0.8 | 82 | 0.84 | 0.176 |
|  | 0.2 | 0.7 | 0.5 | 0.9 | 92 | 0.91 | 0.198 |
|  | 0.2 | 0.7 | 0.7 | 0.9 | 104 | 0.92 | 0.176 |

Alternative is true with means: Scenario 1: μ111 = 17.5, μ112 = 15.0, μ121 = 21.0, μ122 = 18.5, μ211 = 17.5, μ212 = 15.0, μ221 = 21.0, μ222 = 18.5

Scenario 2: μ111 = 19.0, μ112 = 15.0, μ121 = 20.4, μ122 = 16.4, μ211 = 17.5, μ212 = 15.0, μ221 = 21.0, μ222 = 18.5

Scenario 3: μ111 = 16.5, μ112 = 15.0, μ121 = 21.4, μ122 = 19.9, μ211 = 17.5, μ212 = 15.0, μ221 = 21.0, μ222 = 18.5

Scenario 4: μ111 = 19.0, μ112 = 15.0, μ121 = 20.4, μ122 = 16.4, μ211 = 16.5, μ212 = 15.0, μ221 = 21.4, μ222 = 19.9

Table 2.

Sample size computation and simulation of empirical power (# replications=10000) for Design 2 where subgroup means: μA1 = 15, μA2 = 17, μA1C1 = 20, μA1C2 = 15, μA2C′1 = 22, μA2C′2 = 15; subgroup variances: for j, k, l = 1, 2. Hypothesis of interest is H0 : μ11=μ12=μ21=μ22.

| Scenario | π1 | π2 | Q1 | Nominal power | Overall sample size | Empirical power | Effect size (Mahalanobis distance) |
|---|---|---|---|---|---|---|---|
| 1 | 0.5 | 0.5 | 0.5 | 0.8 | 142 | 0.81 | 0.077 |
|  | 0.5 | 0.5 | 0.7 | 0.8 | 156 | 0.82 | 0.069 |
|  | 0.5 | 0.5 | 0.5 | 0.9 | 185 | 0.91 | 0.077 |
|  | 0.5 | 0.5 | 0.9 | 0.9 | 344 | 0.92 | 0.041 |
| 2 | 0.2 | 0.5 | 0.5 | 0.8 | 130 | 0.81 | 0.084 |
|  | 0.2 | 0.5 | 0.7 | 0.8 | 144 | 0.82 | 0.071 |
|  | 0.2 | 0.5 | 0.5 | 0.9 | 169 | 0.91 | 0.084 |
|  | 0.2 | 0.5 | 0.9 | 0.9 | 448 | 0.92 | 0.032 |
| 3 | 0.7 | 0.5 | 0.5 | 0.8 | 143 | 0.82 | 0.076 |
|  | 0.7 | 0.5 | 0.7 | 0.8 | 143 | 0.83 | 0.076 |
|  | 0.7 | 0.5 | 0.5 | 0.9 | 186 | 0.91 | 0.076 |
|  | 0.7 | 0.5 | 0.9 | 0.9 | 241 | 0.93 | 0.059 |
| 4 | 0.7 | 0.2 | 0.5 | 0.8 | 94 | 0.82 | 0.116 |
|  | 0.7 | 0.2 | 0.7 | 0.8 | 88 | 0.84 | 0.123 |
|  | 0.7 | 0.2 | 0.5 | 0.9 | 122 | 0.9 | 0.116 |
|  | 0.7 | 0.2 | 0.9 | 0.9 | 131 | 0.94 | 0.108 |

Alternative is true with means: Scenario 1: μ11 = 17.5, μ12 = 15.0, μ21 = 19.5, μ22 = 16.0

Scenario 2: μ11 = 19.0, μ12 = 15.0, μ21 = 19.5, μ22 = 16.0

Scenario 3: μ11 = 16.5, μ12 = 15.0, μ21 = 19.5, μ22 = 16.0

Scenario 4: μ11 = 16.5, μ12 = 15.0, μ21 = 21.0, μ22 = 15.4

Table 3.

Sample size computation and simulation of empirical power (# replications=10000) for Design 3 where subgroup means: μA1 = 15, μA2 = 17, μA3 = 19, μA1A3 = μA2A3 = μA3A2 = 15, μA1A2 = 20, μA2A1 = 22, μA3A1 = 24; subgroup variances: for j ≠ l; j, l = 1, 2, 3. Response rate for induction treatment A3 is assumed to be 50%. Hypothesis of interest is H0 : μ12 = μ13 = μ21 = μ23 = μ31 = μ32.

| Scenario | π1 | π2 | Nominal power | Overall sample size | Empirical power | Effect size (Mahalanobis distance) |
|---|---|---|---|---|---|---|
| 1 | 0.5 | 0.5 | 0.8 | 108 | 0.83 | 0.119 |
|  | 0.2 | 0.5 | 0.8 | 111 | 0.83 | 0.116 |
|  | 0.5 | 0.5 | 0.9 | 139 | 0.91 | 0.119 |
|  | 0.2 | 0.5 | 0.9 | 142 | 0.91 | 0.116 |
| 2 | 0.2 | 0.2 | 0.8 | 95 | 0.84 | 0.135 |
|  | 0.2 | 0.6 | 0.8 | 116 | 0.84 | 0.110 |
|  | 0.2 | 0.2 | 0.9 | 122 | 0.92 | 0.135 |
|  | 0.2 | 0.6 | 0.9 | 149 | 0.91 | 0.110 |
| 3 | 0.3 | 0.5 | 0.8 | 111 | 0.83 | 0.116 |
|  | 0.3 | 0.6 | 0.8 | 116 | 0.82 | 0.110 |
|  | 0.3 | 0.5 | 0.9 | 142 | 0.91 | 0.116 |
|  | 0.3 | 0.6 | 0.9 | 149 | 0.92 | 0.110 |
| 4 | 0.4 | 0.5 | 0.8 | 110 | 0.83 | 0.117 |
|  | 0.4 | 0.6 | 0.8 | 115 | 0.82 | 0.111 |
|  | 0.4 | 0.5 | 0.9 | 141 | 0.92 | 0.117 |
|  | 0.4 | 0.6 | 0.9 | 148 | 0.91 | 0.111 |

Alternative is true with means: Scenario 1: μ12 = 17.5, μ13 = 15.0, μ21 = 19.5, μ23 = 16.0, μ31 = 21.5, μ32 = 17.0

Scenario 2: μ12 = 19.0, μ13 = 15.0, μ21 = 21.0, μ23 = 15.4, μ31 = 21.5, μ32 = 17.0

Scenario 3: μ12 = 18.5, μ13 = 15.0, μ21 = 19.5, μ23 = 16.0, μ31 = 21.5, μ32 = 17.0

Scenario 4: μ12 = 18.0, μ13 = 15.0, μ21 = 19.5, μ23 = 16.0, μ31 = 21.5, μ32 = 17.0

Tables 1, 2 and 3 demonstrate sample size computations for different scenarios under assumed values of the population parameters, and Tables 4 and 5 show pairwise sample size computations for Designs 2 and 3. Design 1 assumes subgroup means μAjB1 = μAjC2 = 15, μAjC1 = 20, μAjB2 = 22; subgroup variances: , for j, k, l = 1, 2. The subgroup variances are assumed to be the same for all designs considered. Depending on the design and scenario, the response proportions πj are taken from the values 0.2, 0.3, 0.5, 0.6 and 0.7. Similarly, depending on the design, the probability of treatment assignment for responders, P1, is assumed to be 0.5, 0.7, 0.9 or 1, and the probability for non-responders, Q1 (= 1 − Q2), is assumed to be 0.5, 0.7 or 0.9. Design 2 assumes subgroup means μA1 = 15, μA2 = 17, μA1C1 = 20 and μA1C2 = 15, with means 22 and 15 for the two second-stage options offered to A2 non-responders. Design 3 assumes subgroup means μA1 = 15, μA2 = 17, μA3 = 19, μA1A3 = μA2A3 = μA3A2 = 15, μA1A2 = 20, μA2A1 = 22 and μA3A1 = 24. The parameter values were chosen following those of Ko and Wahed [13]. The strategy means differ across the scenarios in each table. In each scenario, having obtained the appropriate sample size from our formula, we evaluate the power of the Wald test to reject the null hypothesis of no difference when the strategies have different means. Effect sizes are common measures in psychology and other disciplines, where they are useful for calculating and interpreting power. Reporting effect sizes conveys the magnitude of experimental effects and guards against over-interpreting results that are significant merely because of a large sample size [12]. The effect size is computed using the Mahalanobis distance (MD), here the quadratic form μTCT(CΣCT)−1Cμ in the denominator of Equation (8), so that the overall sample size is λ divided by the effect size. One useful property of the MD is that it takes into account the correlation in the data.

Table 4.

Pairwise sample size computation for Design 2. Subgroup means: μA1 = 15, μA2 = 17, μA1C1 = 20, μA1C2 = 15, ; subgroup variances: for j, k, l = 1, 2. Here π1=0.5, π2=0.5, Q1=0.5, and power=0.8.

| Hypothesis | Sample Size, Adjusted for All Pairwise Comparisons | Sample Size, Not Corrected for Multiple Comparisons | δi |
|---|---|---|---|
| H1 : μ11−μ12=δ1 | 532 | 345 | 2.5 |
| H2 : μ11−μ21=δ2 | 1107 | 717 | −2.0 |
| H3 : μ11−μ22=δ3 | 1882 | 1220 | 1.5 |
| H4 : μ12−μ21=δ4 | 207 | 134 | −4.5 |
| H5 : μ12−μ22=δ5 | 4008 | 2598 | −1.0 |
| H6 : μ21−μ22=δ6 | 280 | 181 | 3.5 |

Table 5.

Pairwise sample size computation for Design 3. Subgroup means: μA1 = 15, μA2 = 17, μA3 = 19, μA1A3 = μA2A3 = μA3A2 = 15, μA1A2 = 20, μA2A1 = 22, μA3A1 = 24; subgroup variances: for j ≠ l; j, l = 1, 2, 3. Here π1 = 0.5, π2 = 0.5, π3 = 0.5, Q1 = 0.5, and power = 0.8. The second column gives the sample size that powers all fifteen pairwise comparisons simultaneously, whereas the third column assumes that only three of the fifteen hypotheses are of interest.

| Hypothesis | Sample Size, Adjusted for All 15 Pairwise Comparisons | Sample Size, Partially Adjusted (3 Comparisons) | δi |
|---|---|---|---|
| H1 : μ12−μ13=δ1 | 941 | 690 | 2.5 |
| H2 : μ12−μ21=δ2 | 1955 | 1435 | −2.0 |
| H3 : μ12−μ23=δ3 | 3326 | 2441 | 1.5 |
| H4 : μ12−μ31=δ4 | 489 | 359 | −4.0 |
| H5 : μ12−μ32=δ5 | 30704 | 22535 | 0.5 |
| H6 : μ13−μ21=δ6 | 366 | 269 | −4.5 |
| H7 : μ13−μ23=δ7 | 7082 | 5198 | −1.0 |
| H8 : μ13−μ31=δ8 | 176 | 129 | −6.5 |
| H9 : μ13−μ32=δ9 | 1819 | 1335 | −2.0 |
| H10 : μ21−μ23=δ10 | 494 | 362 | 3.5 |
| H11 : μ21−μ31=δ11 | 1955 | 1435 | −2.0 |
| H12 : μ21−μ32=δ12 | 1228 | 901 | 2.5 |
| H13 : μ23−μ31=δ13 | 247 | 182 | −5.5 |
| H14 : μ23−μ32=δ14 | 7339 | 5386 | −1.0 |
| H15 : μ31−μ32=δ15 | 314 | 230 | 4.5 |

The first row of Scenario 1 in Table 1 assumes strategy means μ111 = 17.5, μ112 = 15, μ121 = 21, μ122 = 18.5, μ211 = 17.5, μ212 = 15, μ221 = 21, μ222 = 18.5, with response rates π1 = π2 = 0.5 and P1 = Q1 = 0.5. Seventy subjects are required to detect the resulting effect size of 0.21 with 80% power at α = 0.05. The empirical power is 85%, slightly above the nominal 80% used to compute the sample size. Row 3 of the same scenario shows an empirical power of 92%, close to the nominal 90%. Similar patterns hold for all rows in Scenarios 2, 3 and 4. Comparing the scenarios from Scenario 4 to Scenario 1, the empirical power increases slightly as P1 increases.
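Equation (2) expresses each strategy mean as a mixture of the responder and non-responder subgroup means, μjkl = πj μAjBk + (1 − πj) μAjCl. The short sketch below (the function name is ours, for illustration) reproduces the Design 1 strategy means quoted above from the Design 1 subgroup means with π1 = π2 = 0.5.

```python
from itertools import product


def strategy_mean(pi_j: float, mu_resp: float, mu_nonresp: float) -> float:
    """Equation (2): responders (prob. pi_j) follow B_k, non-responders follow C_l."""
    return pi_j * mu_resp + (1.0 - pi_j) * mu_nonresp


# Design 1 subgroup means, identical for A1 and A2 (j = 1, 2)
mu_B = {1: 15.0, 2: 22.0}   # mu_{A_j B_k}
mu_C = {1: 20.0, 2: 15.0}   # mu_{A_j C_l}
pi = {1: 0.5, 2: 0.5}       # response rates pi_1, pi_2

means = {(j, k, l): strategy_mean(pi[j], mu_B[k], mu_C[l])
         for j, k, l in product((1, 2), repeat=3)}
for (j, k, l), value in means.items():
    print(f"mu_{j}{k}{l} = {value}")
```

Because the A1 and A2 subgroup means coincide in Design 1, strategies that differ only in the first-stage treatment have equal means (for example, μ111 = μ211 = 17.5).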

The first row of Scenario 1 in Design 2 (Table 2) assumes strategy means μ11 = 17.5, μ12 = 15, μ21 = 19.5, μ22 = 16, with response rates π1 = π2 = 0.5 and Q1 = 0.5. In this case, 142 subjects are required to detect the resulting effect size of 0.08 with 80% power at α = 0.05. The empirical power is 81%, very close to the nominal 80% used to compute the sample size. Row 4 of Scenario 3 shows an empirical power of 93%, slightly above the nominal 90%. Across the response rates considered, the empirical power in each case of Scenarios 1 to 3 nearly attains the nominal power, indicating that the sample sizes calculated for Design 2 provide adequate power to detect differences among the four strategy means.
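In Design 2 responders are not re-randomized (they stay on Aj, i.e. P1 = 1), so each strategy is indexed by (j, l) and its mean is μjl = πj μAjB1 + (1 − πj) μAjCl. The sketch below, assuming μA2C2 = 15 from the statement μAjC2 = 15, recovers the four Design 2 strategy means quoted above and the pairwise differences δ1, ..., δ6 listed in Table 4.

```python
from itertools import combinations

pi = {1: 0.5, 2: 0.5}                   # response rates to A1, A2
mu_resp = {1: 15.0, 2: 17.0}            # mu_{A_j B_1}: responders stay on A_j
mu_salv = {(1, 1): 20.0, (1, 2): 15.0,  # mu_{A_j C_l}: non-responders get C_l
           (2, 1): 22.0, (2, 2): 15.0}

# Strategy (j, l): start on A_j, stay on A_j if responding, else switch to C_l
means = {(j, l): pi[j] * mu_resp[j] + (1 - pi[j]) * mu_salv[(j, l)]
         for (j, l) in sorted(mu_salv)}
# Pairs in the order H1, ..., H6 of Table 4
deltas = {(a, b): means[a] - means[b] for a, b in combinations(sorted(means), 2)}
for (a, b), d in deltas.items():
    print(f"mu_{a[0]}{a[1]} - mu_{b[0]}{b[1]} = {d}")
```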

The first row of Scenario 1 in Design 3 (Table 3) assumes strategy means μ12 = 17.5, μ13 = 15, μ21 = 19.5, μ23 = 16, μ31 = 21.5, μ32 = 17, with response rates π1 = π2 = π3 = 0.5. A sample of 108 subjects is required to detect the resulting effect size of 0.12 with 80% power at α = 0.05. The empirical power is 83%, slightly above the nominal 80% used to compute the sample size. For small changes in the response rates, the sample size sometimes changes only slightly or not at all. For example, row 4 of Scenarios 2 and 3 has the same sample size (149), even though π1 changes from 0.2 to 0.3.
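Design 3 has three first-stage treatments, and non-responders to Aj switch to one of the other two, which gives six strategies (j, l) with j ≠ l and fifteen pairwise hypotheses. The sketch below enumerates them. The salvage means μAjAl are an assumption of this sketch: we back-solve them from the Design 3 strategy means quoted above (for example, 17.5 = 0.5 × 15 + 0.5 × 20 gives μA1A2 = 20), and the resulting differences match the δi column of Table 5.

```python
from itertools import combinations, permutations

pi = {1: 0.5, 2: 0.5, 3: 0.5}           # response rates to A1, A2, A3
mu_resp = {1: 15.0, 2: 17.0, 3: 19.0}    # responders stay on A_j
# Hypothetical salvage means mu_{A_j A_l} (non-responders to A_j switch to A_l),
# back-solved from the Design 3 strategy means quoted in the text.
mu_salv = {(1, 2): 20.0, (1, 3): 15.0, (2, 1): 22.0,
           (2, 3): 15.0, (3, 1): 24.0, (3, 2): 15.0}

strategies = sorted(permutations((1, 2, 3), 2))  # (j, l) with j != l: six strategies
means = {(j, l): pi[j] * mu_resp[j] + (1 - pi[j]) * mu_salv[(j, l)]
         for j, l in strategies}
pairs = list(combinations(strategies, 2))        # fifteen pairs, ordered as H1..H15
deltas = [means[a] - means[b] for a, b in pairs]

for (a, b), d in zip(pairs, deltas):
    print(f"mu_{a[0]}{a[1]} - mu_{b[0]}{b[1]} = {d}")
```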

In many clinical trials, the overall hypothesis is not of primary interest; rather, some or all of the pairwise comparisons are. To show how the sample size of a SMAR trial is determined in such cases, the second column of Table 4 gives the sample sizes required for Design 2 when all six pairwise comparisons are powered simultaneously, and the third column gives the sample sizes when each test is powered individually, without correction for multiple comparisons. For example, under the setting of Table 4, Design 2 requires 4008 patients (the maximum of the second column) to power all pairwise comparisons. However, if the interest is in powering only the single hypothesis H0 : μ11 = μ12, leaving the other pairs as exploratory, the trial could be conducted with as few as 345 patients. Similarly, Table 5 gives the sample sizes for Design 3 when all fifteen pairwise comparisons are powered simultaneously (Column 2) and when only three pairwise comparisons are considered (Column 3). From Column 2, Design 3 requires 30,704 patients (the maximum of the sample sizes) to power all pairwise comparisons. If instead the interest is in powering only three pairwise hypotheses, such as H0 : μ12 = μ13, H0 : μ12 = μ21, and H0 : μ12 = μ23, the trial would require 2,441 patients, the largest of the corresponding Column 3 entries. If the interest is only in the three pairs H4, H6, and H8, the required sample size is n = 359.
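Two pieces of arithmetic sit behind these tables. First, as stated above, a trial powered for a set of hypotheses needs the largest per-hypothesis sample size in that set. Second, the columns differ by nearly constant ratios (532/345 ≈ 1.54 in Table 4, 941/690 ≈ 1.36 in Table 5). These ratios appear consistent with a Bonferroni split of α = 0.05 over six versus one, and fifteen versus three, two-sided tests under a normal approximation; that reading is our inference, not a statement from the text. The helper names below are hypothetical.

```python
from statistics import NormalDist


def z(p: float) -> float:
    """Standard normal quantile."""
    return NormalDist().inv_cdf(p)


def bonferroni_inflation(m: int, m_ref: int = 1, alpha: float = 0.05,
                         power: float = 0.8) -> float:
    """Ratio n(m) / n(m_ref) of normal-approximation sample sizes when a
    two-sided level-alpha test is Bonferroni-split over m (vs. m_ref) hypotheses.
    The variance and the difference delta cancel, so only z-quantiles matter."""
    zb = z(power)
    return ((z(1 - alpha / (2 * m)) + zb) / (z(1 - alpha / (2 * m_ref)) + zb)) ** 2


def required_n(per_hypothesis: dict, subset) -> int:
    """Powering every hypothesis in `subset` needs the largest sample size among them."""
    return max(per_hypothesis[h] for h in subset)


# Column 3 of Table 5 (Design 3, partially adjusted for three comparisons)
table5_col3 = {"H1": 690, "H2": 1435, "H3": 2441, "H4": 359, "H5": 22535,
               "H6": 269, "H7": 5198, "H8": 129, "H9": 1335, "H10": 362,
               "H11": 1435, "H12": 901, "H13": 182, "H14": 5386, "H15": 230}

print(required_n(table5_col3, ["H1", "H2", "H3"]))  # 2441
print(required_n(table5_col3, ["H4", "H6", "H8"]))  # 359
print(round(bonferroni_inflation(6), 3), round(532 / 345, 3))
print(round(bonferroni_inflation(15, m_ref=3), 3), round(941 / 690, 3))
```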

The outcomes in the above simulation scenarios were generated from a normal distribution. To assess the sensitivity of our formula to non-normal responses, we also generated data from logistic (symmetric) and gamma (skewed) distributions and calculated the empirical power at the sample size given by Equation (8). Specifically, we selected one row from each scenario of Tables 1 to 3 and regenerated the data from logistic and gamma distributions with the same subpopulation means and variances, keeping all other parameters unchanged. From each table, we selected the first row for Scenarios 1 and 3 and the fourth row for Scenarios 2 and 4, so Table 6 has twelve rows in total. In general, the nominal power is maintained and is consistent across the three distributions, suggesting that our sample size formula is robust to misspecification of the outcome distribution, at least for the distributions considered here.
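One way to generate non-normal outcomes with a prescribed subgroup mean μ and variance σ² is moment matching: a gamma distribution with shape μ²/σ² and scale σ²/μ, and a logistic distribution with location μ and scale σ√3/π. The text does not say how its gamma and logistic distributions were parameterized, so this is a minimal sketch under that assumption, and the variance of 25 in the usage lines is a hypothetical value because the subgroup variances are not reproduced in this section.

```python
import math
import random
from statistics import fmean, variance


def gamma_outcome(rng: random.Random, mean: float, var: float) -> float:
    """Gamma draw with the given mean and variance (shape = mean^2/var, scale = var/mean)."""
    return rng.gammavariate(mean * mean / var, var / mean)


def logistic_outcome(rng: random.Random, mean: float, var: float) -> float:
    """Logistic draw via the inverse CDF; scale s satisfies var = s^2 * pi^2 / 3."""
    s = math.sqrt(3.0 * var) / math.pi
    u = rng.random()
    while u == 0.0:  # random() lies in [0, 1); avoid log(0)
        u = rng.random()
    return mean + s * math.log(u / (1.0 - u))


# Usage: subgroup with mean 15 (as for A1 responders) and a hypothetical variance of 25
rng = random.Random(2024)
gamma_draws = [gamma_outcome(rng, 15.0, 25.0) for _ in range(100_000)]
logistic_draws = [logistic_outcome(rng, 15.0, 25.0) for _ in range(100_000)]
print(round(fmean(gamma_draws), 2), round(variance(gamma_draws), 2))
print(round(fmean(logistic_draws), 2), round(variance(logistic_draws), 2))
```

Matching the first two moments keeps the inputs to the sample size formula unchanged, so any drift in empirical power reflects only the shape of the outcome distribution.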

Table 6.

Robustness of the Sample Size Formula against misspecification of outcome distributions. For Design 1, the following parameter values were considered: Q1 = 0.5; subgroup means μAjB1 = μAjC2 = 15, μAjC1 = 20, μAjB2 = 22; subgroup variances: , for j, k, l = 1, 2. The hypothesis tested is H0 : μ111=μ112=μ121=μ122=μ211=μ212=μ221=μ222; α = 0.05. For Design 2, the following parameter values were considered: P1 = 1; subgroup means μA1 = 15, μA2 = 17, μA1C1 = 20, μA1C2 = 15, ; subgroup variances: for j, k, l = 1, 2. The hypothesis tested is H0 : μ11=μ12=μ21=μ22. For Design 3, the following parameter values were considered: P1 = 1; subgroup means μA1 = 15, μA2 = 17, μA3 = 19, μA1A3 = μA2A3 = μA3A2 = 15, μA1A2 = 20, μA2A1 = 22, μA3A1 = 24; subgroup variances: for j ≠ l; j, l = 1, 2, 3. The response rate to induction treatment A3 is assumed to be 50%. The hypothesis tested is H0 : μ12=μ13=μ21=μ23=μ31=μ32.

| Design | Scenario | π1 | π2 | P1 | Q1 | Nominal Power | Overall Sample Size | Empirical Power: Normal | Empirical Power: Gamma | Empirical Power: Logistic |
|---|---|---|---|---|---|---|---|---|---|---|
| Design 1 | 1 | 0.5 | 0.5 | 0.5 | 0.5 | 0.8 | 70 | 0.84 | 0.86 | 0.86 |
| | 2 | 0.2 | 0.5 | 0.8 | 0.5 | 0.9 | 134 | 0.92 | 0.93 | 0.93 |
| | 3 | 0.7 | 0.5 | 0.5 | 0.5 | 0.8 | 62 | 0.85 | 0.86 | 0.87 |
| | 4 | 0.2 | 0.7 | 0.7 | 0.5 | 0.9 | 104 | 0.92 | 0.92 | 0.92 |
| Design 2 | 1 | 0.5 | 0.5 | - | 0.5 | 0.8 | 142 | 0.81 | 0.83 | 0.82 |
| | 2 | 0.2 | 0.5 | - | 0.9 | 0.9 | 448 | 0.92 | 0.91 | 0.91 |
| | 3 | 0.7 | 0.5 | - | 0.5 | 0.8 | 143 | 0.82 | 0.85 | 0.83 |
| | 4 | 0.7 | 0.2 | - | 0.9 | 0.9 | 131 | 0.94 | 0.96 | 0.94 |
| Design 3 | 1 | 0.5 | 0.5 | - | 0.5 | 0.8 | 108 | 0.83 | 0.86 | 0.85 |
| | 2 | 0.2 | 0.6 | - | 0.5 | 0.9 | 149 | 0.91 | 0.93 | 0.92 |
| | 3 | 0.3 | 0.5 | - | 0.5 | 0.8 | 111 | 0.83 | 0.86 | 0.85 |
| | 4 | 0.4 | 0.6 | - | 0.5 | 0.9 | 148 | 0.91 | 0.93 | 0.92 |

6. Discussion

Complex multi-stage diseases require multi-stage treatment decisions that depend on the response to prior-stage treatments. SMAR designs provide efficient and unbiased inference for comparing such staged strategies. We presented a sample size formula, applicable to various SMAR designs, that ensures adequately powered comparisons of these treatment strategies. The usual design randomizes responders (or non-responders) among the available treatments. A slight variation is a design in which responders (or non-responders) are not randomized further in the second stage; Designs 2 and 3 are examples. In Design 2, only the non-responders are randomized, to C1 or C2 and or respectively depending on whether they received A1 or A2 in the first stage, while responders stay on their first-stage treatment. Equivalently, responders are assigned with probability 1 to the treatment they received in the first stage. This design yields four strategies, and we computed the sample size required to detect differences among them. In Design 3, each patient is randomized among a set of treatments (A1, A2, A3) in the first stage, and the assigned treatment is continued until it fails due to disease worsening. The patient is then re-randomized among the same first-stage treatments, excluding the treatment received initially. This design has six strategies of interest. Our simulations show how to compute the sample size for this design and indicate that the formula maintains the nominal power across a range of scenarios and under normal, gamma and logistic outcome distributions.

In contrast to our formula, Murphy's [5] formula does not apply to designs powering comparisons among more than two strategies, or to designs comparing strategies that share the same initial treatment, commonly referred to as shared-path strategies [17] or overlapping strategies [10]. Moreover, Murphy's formula requires specifying the variance of the response under the strategies being compared, although the effect sizes can be specified in standard-deviation units of the mean difference by assuming equal variance across strategies.

Dawson and Lavori [10, 11] provide a sample size formula for comparing pairs of overlapping or non-overlapping treatment strategies based on semiparametric efficient variances. The formula requires one to specify the variance of the response under each strategy and a variance inflation factor, the latter depending on the coefficients of determination from the regression of the counterfactual strategy response on stage-specific states. Correctly specifying such quantities is difficult, if not impossible, in the absence of a similar SMAR trial. However, when these quantities are correctly specified, Dawson and Lavori's formula yields smaller sample sizes than those of Murphy [5] or those provided here. One advantage of both the Murphy [5] and the Dawson and Lavori [10, 11] formulas over our method is that they can be applied to compare strategies from SMAR trials with more than two stages. However, like Murphy's formula, Dawson and Lavori's formula focuses on comparing pairs of treatment strategies.

Relative to Dawson and Lavori [10], the simplicity of our procedure (even in two-stage SMAR settings) lies in the parameters that must be specified. Our sample size formula requires only the subgroup means and variances for patients following the different treatment paths. These parameters are usually available from observational studies or from stage-specific non-SMAR trials. For example, many cancer clinical trials compare frontline treatments (e.g., Estey et al. [18]). Even though such trials end once recruitment is complete and the primary endpoint has been observed, or once the trial period ends, patients are often followed further, and medication information (the salvage treatments used) is collected for patients who become resistant to frontline therapy or whose disease progresses. Salvage treatment information is often collected only for safety purposes; however, it allows researchers to estimate subgroup means and variances according to the salvage therapies received within each frontline treatment. Mental health research, by its very nature, investigates sequences of treatments, so the means and variances of responses under particular treatment sequences are likely to be available from observational studies or electronic medical records. Fortunately, existing SMAR trials in mental health, such as STAR*D [2] and CATIE [19], can provide useful subgroup information for designing future trials.

Although the methods of Murphy [5] and Dawson and Lavori [10] require fewer unknown quantities to be specified than our formula, our parameters are simply means and variances of the response within subpopulations. These parameters can generally be obtained from pilot studies, non-SMAR trials or observational studies, and are therefore less likely to be misspecified than the parameters in the methods of Murphy [5] and Dawson and Lavori [10]. Moreover, our focus is on comparing multiple treatment strategies, for which specifying an effect size does not necessarily reduce the number of unknown parameters.

The sample size formula of Oetting et al. [9] for comparing two strategies is derived under the assumption that the response rates are the same for the two first-stage treatments. Although a sensitivity analysis of this assumption was carried out in simulation, the assumption may not be reasonable in practice. Finally, our formula does not address finding an optimal treatment strategy, a separate problem addressed by Oetting et al. [9].

We use the Mahalanobis distance as an effect size measure to verify that the required sample size decreases as the distance among the strategy means increases. Unlike standard effect size measures, the Mahalanobis distance has no benchmark values indicating small, moderate or large effects; it should be treated simply as a distance among multiple strategy means, standardized for their variability.

Future research could investigate sample size formulas for general k-stage designs, with emphasis on a specific and meaningful number of strategies. Missing data is another design concern in SMAR trials that needs to be addressed.

Acknowledgments

The authors thank the reviewers and the Associate Editor for their constructive suggestions, which substantially improved the manuscript. This research was supported in part by National Institute of Mental Health Grant P30 MH090333.

Appendix A: Influence function for μ̂jkl

By Equation (6), μ̂jkl satisfies . Expanding with respect to μjkl,

This implies,

and

Now , and hence μ̂jkl − μjkl is op(1), while √n(μ̂jkl − μjkl) is bounded in probability (Op(1)) because it converges in distribution to a normal distribution by the central limit theorem. Therefore, the second term on the right is op(1).
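Because several displays of this appendix did not survive, the block below sketches the generic form of the argument under an explicit assumption: that Equation (6) is a weighted estimating equation with nonnegative weights W_{jkl,i} whose sample mean converges in probability to 1. The specific weight definition is not reproduced here, so this is an illustration of the standard expansion, not a restatement of the original equations.

```latex
\sum_{i=1}^{n} W_{jkl,i}\,(Y_i - \hat\mu_{jkl}) = 0
\;\Longrightarrow\;
\sqrt{n}\,(\hat\mu_{jkl} - \mu_{jkl})
  = \frac{n^{-1/2}\sum_{i=1}^{n} W_{jkl,i}\,(Y_i - \mu_{jkl})}
         {n^{-1}\sum_{i=1}^{n} W_{jkl,i}}
  = n^{-1/2}\sum_{i=1}^{n} \psi_{jkl,i} + o_p(1),
\qquad
\psi_{jkl,i} = W_{jkl,i}\,(Y_i - \mu_{jkl}).
```

The last equality uses that the numerator is O_p(1) by the central limit theorem and the denominator tends to 1, so their discrepancy is O_p(1) times o_p(1); the asymptotic variance of μ̂jkl is then var(ψjkl,i), the quantity evaluated in Appendix B.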

Appendix B: Variance and covariance of strategy means

Following Ko and Wahed [13], the variance formula in Equation (7) is derived as follows: . This variance can be expressed in terms of subgroup-specific population parameters. For example, consider . In this case, the weight is defined as , and therefore, since the indicator variables X1i, Ri, Z1i and take values 0 or 1; the term disappears because a patient can be only a responder or a non-responder. Then, . Under assumptions (A1)–(A3), a series of conditional expectations shows that,

Consequently, the asymptotic variance of μ̂111 is given by,

Estimators that share the same first-stage treatment are correlated because they use a common group of observations. Consider and .

To derive the covariance between strategy means and , we note that similar to is distributionally equivalent to . Therefore, the asymptotic covariance of and is given by,

Since , we can further simplify the above as,

Since, from Equation (2), (μA1B1 − μ111) = μA1B1 − π1μA1B1 − (1 − π1)μA1C1 = (1 − π1)(μA1B1 − μA1C1) and (μA1B1 − μ112) = (1 − π1)(μA1B1 − μA1C2), it follows that the asymptotic covariance of μ̂111 and μ̂112 is given by

| (11) |

A similar derivation can be used to compute the other covariances. Let Σ = var(ψi) denote the variance-covariance matrix, where ψi is the vector of the eight influence functions ψjkli, j, k, l = 1, 2. Equation (7) and its analogues give the diagonal elements of Σ, and Equation (11) and its analogues give the off-diagonal elements.

References

1. Lei H, Nahum-Shani I, Lynch K, Oslin D, Murphy SA. A “SMART” design for building individualized treatment sequences. Annual Review of Clinical Psychology. 2012;8:14.1–14.28. doi: 10.1146/annurev-clinpsy-032511-143152.

2. Rush AJ, Fava M, Wisniewski SR, Lavori PW, Trivedi MH, Sackeim HA. Sequenced treatment alternatives to relieve depression (STAR*D): rationale and design. Control Clin. Trials. 2004;25:119–142. doi: 10.1016/s0197-2456(03)00112-0.

3. Lavori PW, Dawson R, Rush AJ. Flexible treatment strategies in chronic disease: clinical and research implications. Biol. Psychiatry. 2000;48:605–614. doi: 10.1016/s0006-3223(00)00946-x.

4. Lavori PW, Dawson R. Dynamic treatment regimes: practical design considerations. Clin. Trials. 2004;1:9–20. doi: 10.1191/1740774s04cn002oa.

5. Murphy SA. An Experimental Design for the Development of Adaptive Treatment Strategies. Statistics in Medicine. 2005;24:1455–1481. doi: 10.1002/sim.2022.

6. Feng W, Wahed AS. A supremum log rank test for comparing adaptive treatment strategies and corresponding sample size formula. Biometrika. 2008;95(3):695–707.

7. Feng W, Wahed AS. Sample Size for Two-Stage Studies with Maintenance Therapy. Statistics in Medicine. 2009;28:2028–2041. doi: 10.1002/sim.3593.

8. Li Z, Murphy SA. Sample size formulae for two-stage randomized trials with survival outcomes. Biometrika. 2011;98(3):503–518. doi: 10.1093/biomet/asr019.

9. Oetting AI, Levy JA, Weiss RD, Murphy SA. Statistical methodology for a SMART design in the development of adaptive treatment strategies. In: Shrout PE, editor. Causality and Psychopathology: Finding the Determinants of Disorders and their Cures. Arlington, VA: American Psychiatric Publishing; 2011. pp. 179–205.

10. Dawson R, Lavori PW. Sample Size calculations for Evaluating Treatment Policies in Multi-Stage Design. Clin. Trials. 2010;7:643–652. doi: 10.1177/1740774510376418.

11. Dawson R, Lavori PW. Efficient design and inference for multistage randomized trials of individualized treatment policies. Biostatistics. 2012;13(1):142–152. doi: 10.1093/biostatistics/kxr016.

12. Dwyer JH. Analysis of variance and the magnitude of effects: A general approach. Psychological Bulletin. 1974;81(10):731–737.

13. Ko JH, Wahed AS. Up-front vs. Sequential Randomizations for Inference on Adaptive Treatment Strategies. Statistics in Medicine. 2012;31(9):812–830. doi: 10.1002/sim.4473.

14. Pelham WE, Fabiano GA. Evidence-based psychosocial treatments for attention deficit/hyperactivity disorder. J. Clin. Child Adolesc. Psychol. 2008;37:184–214. doi: 10.1080/15374410701818681.

15. Robins JM. Causal inference from complex longitudinal data. In: Berkane M, editor. Latent Variable Modeling and Applications to Causality. New York, NY: Springer; 1997. pp. 69–117.

16. Thall PF, Wooten LK, Logothetis CJ, Millikan RE, Tannir NM. Bayesian and frequentist two-stage treatment strategies based on sequential failure times subject to interval censoring. Statistics in Medicine. 2007;26:4687–4702. doi: 10.1002/sim.2894.

17. Kidwell K, Wahed AS. Weighted log-rank statistic to compare shared-path adaptive treatment strategies. Biostatistics. 2013;14(2):299–312. doi: 10.1093/biostatistics/kxs042.

18. Estey EH, Thall PF, Pierce S, Cortes J, Beran M, Kantarjian H, Keating MJ, Andreeff M, Freireich E. Randomized phase II study of fludarabine + cytosine arabinoside + idarubicin ± all-trans retinoic acid ± granulocyte colony-stimulating factor in poor prognosis newly diagnosed acute myeloid leukemia and myelodysplastic syndrome. Blood. 1999;93(8):2478–2484.

19. Schneider LS, Tariot PN, Lyketsos CG, Dagerman KS, Davis SM, Hsiao JK, Ismail MS, Lebowitz BD, Ryan JM, Stroup TS, Sultzer DL, Weintraub D, Lieberman JA. National Institute of Mental Health clinical antipsychotic trials of intervention effectiveness (CATIE), Alzheimer disease trial methodology. American Journal of Geriatric Psychiatry. 2001;9(4):346–360.