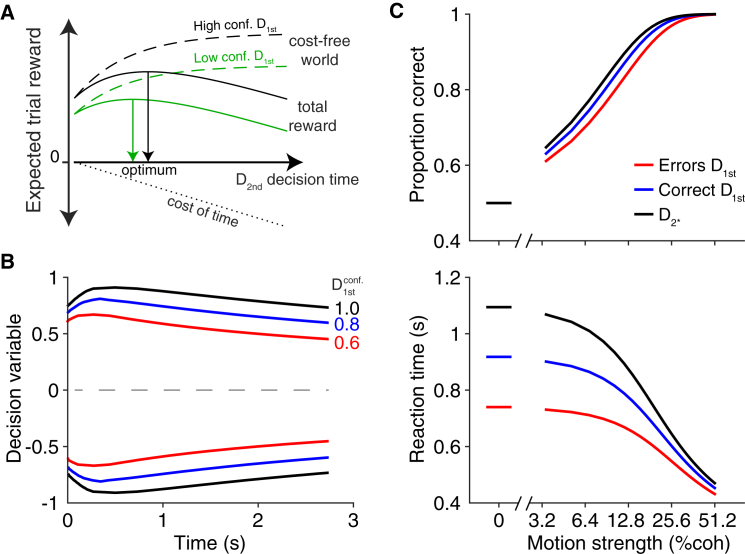

Figure 7. Normative Model for D2nd

(A) Schematic illustration of the impetus to change strategy on the second decision, based on confidence in the first. The expected reward after the D1st decision increases monotonically with viewing duration (dashed lines) and is lower when D1st confidence is low (compare black and green dashed lines). With a cost on time (dotted black line), the total reward (solid lines) has a maximum corresponding to the optimal decision time, which is longer following high-confidence D1st decisions. Although this schematic provides an intuition for our results, we used dynamic programming to derive the optimal solution that maximizes reward rate.

(B) Optimal time-dependent bounds from dynamic programming show that the bound height for D2nd increases with D1st confidence.

(C) Model simulation of accuracy and reaction time for second decisions using the optimal bounds in (B). For comparison with Figure 3, the three levels are analogous to a D2∗ decision (black: full confidence of 1.0), a correct D1st (blue: high confidence of 0.8), and an error on D1st (red: low confidence of 0.6).
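
The intuition in (A) can be reproduced with a minimal numerical sketch. This is not the dynamic-programming solution used for (B) and (C). The saturating accuracy curve, the time constant `tau`, the linear time cost `cost_per_sec`, and the scaling of expected reward by D1st confidence are all illustrative assumptions, chosen only to show that the optimal viewing time grows with confidence in the first decision.

```python
import math

# Illustrative assumptions (not taken from the paper):
#   p(t) = 1 - 0.5 * exp(-t / tau)   probability that the second decision is correct
#   expected reward = conf_first * p(t)   (dashed lines in the schematic)
#   time cost       = cost_per_sec * t    (dotted line in the schematic)

def total_reward(t, conf_first, tau=0.5, cost_per_sec=0.2):
    """Expected reward minus time cost (solid lines in the schematic)."""
    p_correct = 1.0 - 0.5 * math.exp(-t / tau)
    return conf_first * p_correct - cost_per_sec * t

def optimal_viewing_time(conf_first, tau=0.5, cost_per_sec=0.2):
    """Viewing time that maximizes total_reward, from setting its derivative to zero."""
    ratio = conf_first * 0.5 / (tau * cost_per_sec)
    # If the marginal reward never exceeds the marginal cost, deciding at once is best.
    return tau * math.log(ratio) if ratio > 1.0 else 0.0

for conf in (0.6, 0.8, 1.0):
    t_opt = optimal_viewing_time(conf)
    print(f"confidence {conf:.1f}: optimal time {t_opt:.3f} s, "
          f"total reward {total_reward(t_opt, conf):.3f}")
```

Under these assumptions the maximum of the total reward shifts to longer viewing times as D1st confidence rises, which is the qualitative pattern the schematic is meant to convey.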