Abstract

Purpose

Since 2004, the Clinical Faculty Scholars Program (CFSP) at the University of Colorado Anschutz Medical Campus has provided intensive interdisciplinary mentoring and structured training for early-career clinical faculty from multiple disciplines conducting patient-oriented clinical and outcomes research. This study evaluated the two-year program’s effects by comparing grant outcomes for CFSP participants and a matched comparison cohort of other junior faculty.

Method

Using 2000–2011 institutional grant and employment data, a cohort of 25 scholars was matched to a cohort of 125 comparison faculty (using time in rank and pre-period grant dollars awarded). A quasi-experimental difference-in-differences design was used to identify the CFSP effect on grant outcomes. Grant outcomes were measured by counts and dollars of grant proposals and awards as principal investigator. Outcomes were compared within cohorts over time (pre- vs post-period) and across cohorts.

Results

From pre- to post-period, mean annual counts and dollars of grant awards increased significantly for both cohorts, but mean annual dollars increased significantly more for the CFSP than for the comparison cohort (delta $83,427 vs. $27,343, P < .01). Mean annual counts of grant proposals also increased significantly more for the CFSP than for the comparison cohort: 0.42 to 2.34 (delta 1.91) vs. 0.77 to 1.07 (delta 0.30), P < .01.

Conclusions

Institutional investment in mentored research training for junior faculty provided significant grant award gains that began after 1 year of CFSP participation and persisted over time. The CFSP is a financially sustainable program with effects that are predictable, significant, and enduring.

Mentoring is understood to increase professional success for early-career faculty, especially junior clinical faculty seeking careers that include research.1–8 At university-based academic health centers (AHCs), research training programs can generally be accessed by these faculty only after they have been awarded funding (e.g., an institutional career development award [CDA])5,7 or have enrolled in degree-granting programs.4 A few published case studies have focused on mentoring for junior faculty scholarship, typically programs for clinicians or clinician–educators.9–12 Additional studies have assessed the ability of training programs to enhance mentoring skills of the mentors of junior faculty.13,14 A gap remains in the literature, as well as in practice, regarding ways for AHCs to develop junior faculty in grantsmanship to aid their academic persistence, that is, their retention and progression in rank at their institution.15 Model programs, such as the Robert Wood Johnson Foundation Clinical Scholars program, exist at the national level for a small number of faculty.16 Nevertheless, thousands of junior faculty begin their appointments at AHCs planning careers that will include externally funded research. Attrition in the early-career faculty ranks indicates that many talented and well-trained clinicians and scientists who seek these careers are not retained at AHCs, in part due to their inability to achieve external funding.15,17–19

As clinical and outcomes researchers at a university-based AHC, the University of Colorado Anschutz Medical Campus (CU-Anschutz), we identified a local career development gap for junior faculty who wanted to become leaders in patient-oriented research by achieving external funding. These junior faculty often floundered in clinical departments that were laboratory-science oriented or did not have a strong track record of funded research, and thus they lacked research mentors in their departments. We therefore developed a program for these faculty that includes intensive mentoring as well as structured training.

Since 2004, the Clinical Faculty Scholars Program (CFSP) at CU-Anschutz has provided a ready-made research mentoring team to help rotating cohorts of scholars build tailored mentorship teams to support the goal of achieving external funding—most commonly CDAs or exploratory/developmental research awards. This faculty-led, interdisciplinary initiative requires departmental buy-in through significant sponsorship commitments for scholars and is financially self-sustaining. In brief, the CFSP trains junior faculty for grant productivity and academic success by providing targeted research skills training as well as mentoring by senior faculty and peers in order to build a support network and foster an institutional culture of mentoring.

In this report, we describe the CFSP and a quantitative evaluation of its effect on grant outcomes. We measure grant outcomes using objective institutional data on grant proposals and grant awards, observe program participants over time to assess persistence in outcomes, and incorporate a comparison cohort to control for confounders of experience and selection bias. We also present an existing theoretical model to characterize the mechanism that underpins grant success and persistence after failure.

Method

The CFSP “intervention” is a two-year program that includes intensive mentoring, research training, and peer feedback. The scholars meet regularly with their program mentors and participate in weekly work-in-progress sessions and monthly skill-building workshops. Annual evaluations of the CFSP are conducted for continuous quality improvement, using surveys and focus groups that yield important information about program mechanisms.

For this study, we sought objective metrics of success pertaining to a major goal—grant productivity—so we focused on grant proposals and grant awards. Because the CFSP accepts a limited number of applicants (which could cause selection bias), we sought a comparison group. We worked with the CU-Anschutz administration to conduct this evaluation of CFSP grant outcomes using employment and grant data for the period January 2000–September 2011. The Colorado Multiple Institutional Review Board approved this work as program evaluation and not human subject research.

CFSP development and structure

After CU-Anschutz competed for but was not awarded a Robert Wood Johnson Foundation Clinical Scholars site, a core group of faculty decided to seek internal infrastructure funding to develop a local version of the successful national program. The CFSP was founded in the CU-Anschutz School of Medicine in 2004 with three years of start-up funding provided by Dean Richard Krugman’s strategic research infrastructure initiative. This funding paid for program director/mentor time and subsidized sponsorship fees; the subsidization decreased each year over the three-year period while recruitment ramped up and positive outcomes began to be demonstrated, and sponsorship fees were increased to cover costs.

In 2008 the CFSP became a cornerstone program in the Education, Training, and Career Development Core of the Colorado Clinical and Translational Sciences Institute (CCTSI) in order to obtain direct access to CCTSI resources for scholars and to expand recruitment beyond the medical school to include the CU-Anschutz College of Nursing, the Colorado School of Public Health, and the Skaggs School of Pharmacy and Pharmaceutical Sciences. The CCTSI provides partial fee scholarships for underrepresented minority (URM) faculty and for non-medicine faculty. This fee relief helps departments that might otherwise be unable to sponsor faculty and encourages participation by URM faculty (all women to date).

The CFSP costs for each scholar are (1) training fees of $22,000 per year ($20,000 in fiscal years 2004–2013) paid by the scholar’s sponsor/department/division to cover 10–15% of the program directors’ time and (2) at least 50% protected time with salary support for the scholar’s own research, funded or unfunded, during the two years of enrollment. In order to give some relief for repeat sponsorship, quantity discounts are available when a department sponsors three or more scholars at once, as has happened with the general internal medicine and cardiology divisions of the Department of Medicine.

The CFSP target population is early-career faculty with an interest in clinical translational research that is outcomes-based and patient-centered (i.e., the whole, live human). We accept 4–6 scholars per year, for a total of 10–12 scholars at any time. We have accepted faculty from many disciplines of medicine (e.g., internal medicine subspecialties, pediatrics, neurology, emergency medicine, obstetrics/gynecology, and surgery) as well as disciplines outside medicine (e.g., epidemiology, law, decision sciences, nursing, and medical anthropology). A typical scholar is an early-career clinician–scientist with research training who has attempted grant proposals—often proposals for CDAs—but has had limited success and faces at least one gap in mentorship. The minimum research training requirement for scholars is a research-based fellowship; an in-progress or completed master’s degree is more desirable, and a PhD is ideal. For breaks in participation (e.g., maternity/paternity leave), the program clock is paused to allow two full years.

Each scholar is assigned a primary senior mentor from among the program directors (A.L., A.P., A.G.) and meets individually with this mentor at least once a month. All scholars consult on research design and biostatistics with another program director (D.F.), who is a biostatistician. We recently added a program director who is a qualitative methodologist and provides similar functions. The mentor assignments are made to balance workload and interest in the area; there is a slight preference to avoid matching scholars with content experts because the primary senior mentors are intended to act more as career mentors. Each scholar also meets with each program director at least once annually. Scholars’ non-CFSP mentors, such as scientific or content-area mentors and departmental sponsors who are not paid by the CFSP, are asked to attend one work-in-progress session annually when their mentee presents.

Throughout the calendar year, the CFSP participants and program directors gather for weekly work-in-progress sessions. During each session, two scholars have 45 minutes each to present a scholarly or career issue for feedback. Each scholar presents roughly monthly (8–10 times per year). In 2006 we added monthly 2-hour workshops on research strategies to help scholars build needed skills. The workshops are run by program directors or guest experts and focus on skills related to grantsmanship (e.g., effective letters of support, specific aims, budgets and justifications), technical writing (e.g., clarity, organization, story-telling), and practical career development matters (e.g., managing and negotiating money, hiring and firing, lab management, strengths-based goal setting). These skills are the “hidden curriculum” for academic success that is not provided by disciplinary training.15,17

The CFSP’s scholar-to-scholar peer mentoring component allows reality-testing regarding workload arrangements, time management, and writing strategies, and helps scholars find collaborators or resources.20 As scholars are commonly submitting proposals for the same kind of grant mechanisms, this peer learning is valuable and yet not often available to a junior faculty member isolated in a home department or division. The cohort of scholars in program year 2 naturally moves into a leadership role for the scholars in program year 1. Year 2 scholars are trained as peer mentors during the work-in-progress sessions, where they are expected to take the lead in asking questions and giving feedback to the presenting scholars before the program directors weigh in. This regular mentoring practice reinforces learning by using the student as the teacher. At the most basic level, this feature of the CFSP model reflects Bandura’s theory of reciprocal learning.21 In addition, the norms of how to be collegial and productive modeled by mentors and fellow scholars deliver tacit and explicit messages of “how things are done here.” We tried, and rejected, formal peer-mentor assignments for mentoring outside of program sessions. Instead, we have encouraged and seen such peer-mentoring grow organically around topic areas. Small groups of former scholars hold their own work-in-progress sessions around campus and invite current scholars to join them.

Study sample: CFSP and comparison cohorts

We sought to evaluate the effect of CFSP participation on grant outcomes. Because selection into the program requires planning, applying, and negotiating for program sponsorship (tuition fees and protected time) there could be a selection bias toward success. To adjust for this selection, we sought to create a matched comparison cohort from the universe of junior faculty at CU-Anschutz using grant awards observable in the CU-Anschutz system and employment records.

All faculty in the CFSP and comparison cohorts were assistant professors appointed in the full-time regular (tenure-eligible) faculty line because clinical faculty appointments (i.e., clinical practice series) rarely have expectations for grant productivity. All faculty had hire or appointment start dates in the period January 1, 2000–September 23, 2011. Agreed-upon or actual protected time for research was not observable. Most faculty in the CFSP cohort were new hires to the AHC, so we assumed that there would be some extent of protected time in the first 2–3 years of employment for all regular faculty recruits. Potential comparison faculty were drawn from a list of all assistant professors at CU-Anschutz with hire/appointment dates in the study period, which was provided to us by the AHC’s human resources department for the purposes of program evaluation.

Data for all grant proposals submitted during the period January 1, 2000–September 23, 2011 through the CU-Anschutz central administrative system by scholars and eligible comparison faculty as principal investigator (PI) were extracted by the Office of Grants and Contracts (P.J. was director at that time) and provided to us for the purposes of program evaluation. Grant data, including the amount proposed (grant proposal) and the amount awarded (grant award, if any) were extracted to assist in removing duplicates, as the raw record extract typically contained multiple records per grant and sometimes multiple names per investigator (due to misspellings, name variants or changes in last name). To remove duplicate records, the list was reduced to all unique combinations of PI name, process date (i.e., date of proposal submission), routing number, and project title. The remaining list contained 32,584 grants for all junior faculty members in the initial pool of CFSP and eligible comparison faculty.
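The deduplication rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure the evaluation team ran: the field names and the three sample records are hypothetical, and only exact repeats of the four-field key are collapsed. Reconciling misspelled or changed PI names, which the text mentions, would need a separate name-mapping step that is not shown.

```python
from datetime import date

# Hypothetical raw extract; field names are illustrative, not the institution's schema.
raw_records = [
    {"pi_name": "Doe, Jane", "process_date": date(2006, 3, 1),
     "routing_number": "R-0001", "project_title": "Asthma outcomes pilot",
     "amount_proposed": 150_000, "amount_awarded": 0},
    # Exact repeat of the first record (same grant listed twice in the extract).
    {"pi_name": "Doe, Jane", "process_date": date(2006, 3, 1),
     "routing_number": "R-0001", "project_title": "Asthma outcomes pilot",
     "amount_proposed": 150_000, "amount_awarded": 0},
    {"pi_name": "Doe, Jane", "process_date": date(2007, 10, 15),
     "routing_number": "R-0042", "project_title": "Asthma outcomes cohort",
     "amount_proposed": 600_000, "amount_awarded": 600_000},
]


def deduplicate(records):
    """Keep the first record for each unique (PI, date, routing number, title) key."""
    seen = set()
    unique = []
    for record in records:
        key = (record["pi_name"], record["process_date"],
               record["routing_number"], record["project_title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


grants = deduplicate(raw_records)
print(len(raw_records), "raw records ->", len(grants), "unique grants")
```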

For the CFSP cohort, we selected the earliest 25 program participants to allow for sufficient follow-up observation through September 23, 2011. This cohort included the scholars who began the 2-year program on the CFSP’s initiation date of July 1, 2004, through those who started the program on July 1, 2009. (Scholars who started the program on July 1, 2010, or later were excluded as having insufficient time for follow-up observation.) The average time observed for the 25 scholars included in the CFSP cohort was 6 years, comprising an average of 1.5 years before entry into the CFSP, 2 years in the CFSP, and 2.5 years after completing the CFSP. To anchor pre- and post-CFSP study periods for each scholar, an index date was created at the program midpoint. For example, a scholar in the 2009 CFSP cohort would begin the program on July 1, 2009, have an index date (midpoint) of July 1, 2010, and end the program on June 30, 2011. We defined the pre-period to include CFSP year 1 to allow for exposure to training and because new faculty hires were not likely to have observable grant data in the CU-Anschutz system prior to their CFSP start date. After one year in the CFSP, most scholars had submitted grant proposals and some had received grant awards; therefore we started the post-period at the beginning of program year 2. For the CFSP cohort, the pre-period averaged 2.5 years and the post-period averaged 3.5 years.
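As a rough sketch of how the index date splits each scholar's observation window, the Python below computes the program midpoint and the lengths of the pre- and post-periods. The function name, the use of the hire date as the start of observation, and the example hire date are assumptions made for illustration; the program start date and the end of observation (September 23, 2011) come from the text.

```python
from datetime import date

OBSERVATION_END = date(2011, 9, 23)  # last date covered by the grant data


def study_periods(program_start, hire_date, observation_end=OBSERVATION_END):
    """Return (index_date, pre_years, post_years) for one scholar.

    The index date is the program midpoint, one year after program start.
    The pre-period runs from the hire date (assumed start of observation)
    to the index date; the post-period runs from the index date onward.
    """
    index_date = date(program_start.year + 1, program_start.month, program_start.day)
    pre_years = (index_date - hire_date).days / 365.25
    post_years = (observation_end - index_date).days / 365.25
    return index_date, pre_years, post_years


# Scholar in the 2009 cohort; the hire date here is hypothetical.
index_date, pre_years, post_years = study_periods(date(2009, 7, 1), date(2009, 1, 1))
print(index_date, round(pre_years, 2), round(post_years, 2))
```

Placing program year 1 in the pre-period, as the text describes, means the pre-period is never shorter than one year for a scholar who starts the program at hire.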

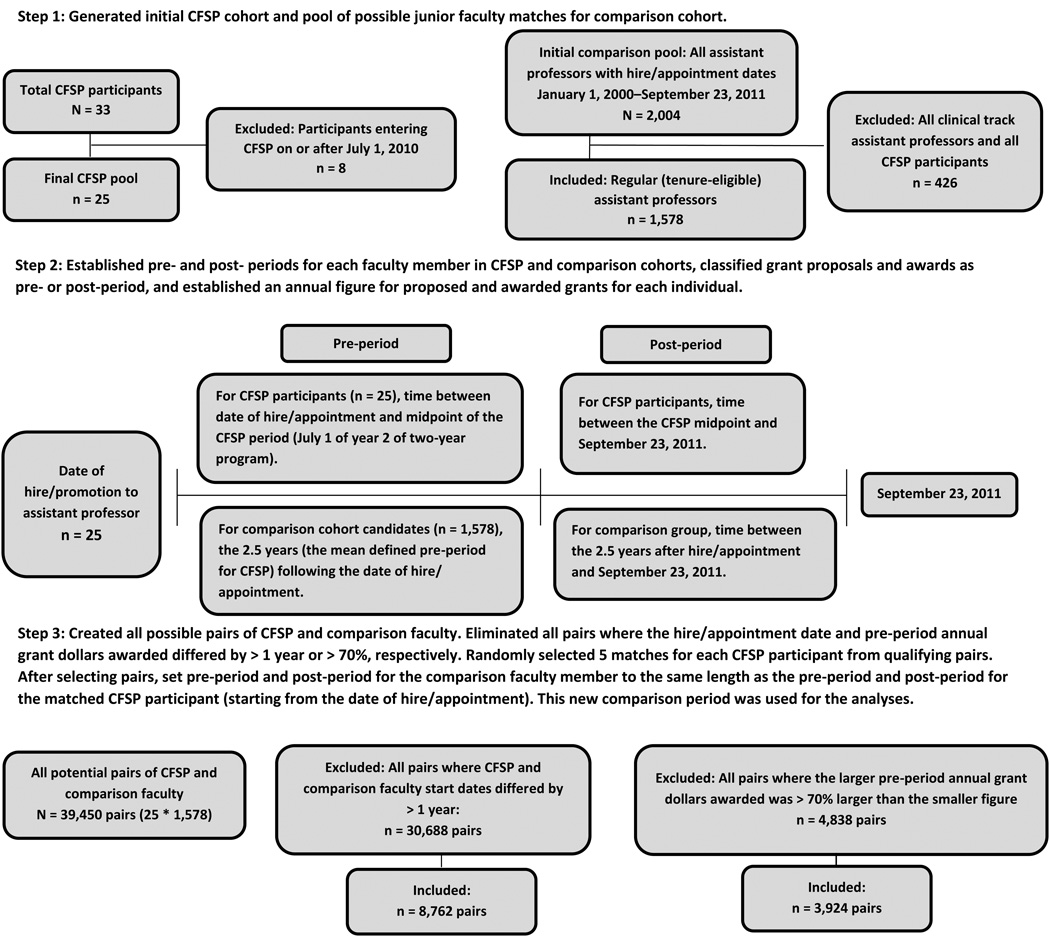

To qualify potential matches between the 25 faculty in the CFSP cohort and the eligible comparison faculty, we used two key variables: (1) time in rank on campus (using hire/appointment date) and (2) dollars awarded in grants as PI during the period prior to the CFSP midpoint or an equivalent period from the hire/appointment date for the comparison faculty. Grant outcomes were annualized as the total value of grant awards per period. Total amounts of multi-year awards were credited to the year that the grant proposal was processed at CU-Anschutz. We selected five matches for each CFSP scholar for a total of 125 faculty in the comparison cohort. The steps taken to define the pre- and post-periods and index dates for scholars, to identify possible matches from the eligible comparison faculty using the key variables, to randomly select qualified matches, and then to apply pre- and post-periods to those matches are presented in Figure 1. For additional details on the matching process and an example, see Supplemental Digital Appendix 1 at [LWW INSERT LINK].

Figure 1.

Steps taken to create the Clinical Faculty Scholars Program (CFSP) cohort and the matched comparison faculty cohort used in this study. The final CFSP cohort included 25 scholars and the matched comparison cohort included 125 early-career faculty. For additional details and an example, see Supplemental Digital Appendix 1 at [LWW INSERT LINK].
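The matching steps can be illustrated with a small Python sketch. The qualification thresholds (a one-year hire-date window and a $10,000 difference in annualized pre-period awards), the field names, the sample pool, and matching without replacement are all assumptions made for this example; the criteria actually used are described in the supplemental appendix, which is not reproduced here.

```python
import random
from datetime import date


def qualifies(scholar, candidate, max_hire_gap_days=365, max_dollar_gap=10_000):
    """Hypothetical rule: similar time in rank and similar pre-period award dollars."""
    hire_gap = abs((candidate["hire_date"] - scholar["hire_date"]).days)
    dollar_gap = abs(candidate["pre_dollars_per_year"] - scholar["pre_dollars_per_year"])
    return hire_gap <= max_hire_gap_days and dollar_gap <= max_dollar_gap


def select_matches(scholar, pool, used_ids, k=5, rng=None):
    """Randomly pick k qualified comparison faculty who are not already matched."""
    rng = rng or random.Random(0)  # fixed seed keeps the example reproducible
    eligible = [c for c in pool if c["id"] not in used_ids and qualifies(scholar, c)]
    if len(eligible) < k:
        raise ValueError("fewer than k qualified matches for this scholar")
    chosen = rng.sample(eligible, k)
    used_ids.update(c["id"] for c in chosen)
    return chosen


scholar = {"id": "S1", "hire_date": date(2005, 7, 1), "pre_dollars_per_year": 0}
pool = [
    {"id": f"C{i}", "hire_date": date(2005, 1 + i, 1), "pre_dollars_per_year": 0}
    for i in range(1, 7)
] + [
    {"id": "C7", "hire_date": date(2001, 1, 1), "pre_dollars_per_year": 0},        # hired much earlier
    {"id": "C8", "hire_date": date(2005, 7, 1), "pre_dollars_per_year": 250_000},  # far more prior funding
]

used = set()
matches = select_matches(scholar, pool, used)
print(sorted(m["id"] for m in matches))
```

Each selected match would then inherit the index date and post-period length of its paired scholar, as the text describes.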

Evaluation design: Difference in differences

We evaluated grant outcomes using a difference-in-differences approach: changes in counts and dollars of grant proposals and awards were compared over time within cohorts (pre- to post-period, so each cohort was its own control), and then the differences in these changes were calculated across the CFSP and comparison cohorts. Percentages of faculty with any grant awards and the total count and dollars of grant proposals and grant awards were compared using parametric tests (t-tests) and non-parametric tests (chi-square, Wilcoxon rank sum). Two-tailed P < .05 was considered statistically significant. Sensitivity analyses were performed but are not presented because the findings were robust to each alternative specification: parametric t-tests, the full sample instead of the matched sample, and maximum follow-up for the comparison faculty (through September 23, 2011) instead of matched follow-up (i.e., a post-period of the same length as that of the paired scholar). Subanalyses by grant mechanism were performed on CDA grants (e.g., NIH K-series awards or awards from organizations such as the American Heart Association) to explore the CFSP’s effect on this grant type, given the importance of this mechanism to junior faculty who seek protected time to launch their research. SAS software version 9.3 (SAS Institute, Cary, North Carolina) was used for data management and analyses (P.H., D.F., A.L.).
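The difference-in-differences calculation itself is simple arithmetic, sketched below in Python. The per-faculty values are invented for illustration and are not study data, and the function name is hypothetical. Significance testing of the deltas (the study used Wilcoxon rank-sum tests in SAS) is omitted.

```python
from statistics import mean


def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Return (mean treated delta, mean control delta, difference in differences).

    Each argument is a list of per-person annual rates, in the same person order
    for the pre- and post-period lists of a cohort.
    """
    treated_deltas = [post - pre for pre, post in zip(treated_pre, treated_post)]
    control_deltas = [post - pre for pre, post in zip(control_pre, control_post)]
    treated_delta = mean(treated_deltas)
    control_delta = mean(control_deltas)
    return treated_delta, control_delta, treated_delta - control_delta


# Invented annual award counts per person (not study data).
scholars_pre, scholars_post = [0.0, 0.0, 1.0], [1.0, 2.0, 1.5]
comparison_pre, comparison_post = [0.0, 1.0, 0.0, 0.0], [0.0, 1.5, 0.0, 0.5]

treated_delta, control_delta, did = difference_in_differences(
    scholars_pre, scholars_post, comparison_pre, comparison_post
)
print(round(treated_delta, 2), round(control_delta, 2), round(did, 2))
```

Subtracting the comparison cohort's change removes the part of the scholars' change that would be expected from time in rank alone, which is the purpose of the design.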

Results

Table 1 presents outcomes for pre- and post-period grant productivity metrics for the CFSP and comparison cohorts: grant awards and grant proposals described by unduplicated counts and total dollars (direct plus indirect costs). For faculty in the CFSP and comparison cohorts, in the pre-period the average annual number of grant awards was < 1 (median 0), with a non-significant difference between cohorts. In dollar terms, these awards averaged approximately $22,000 per year for the CFSP cohort and $27,000 per year for the comparison cohort. The patterns for grant proposals in the pre-period were the same as for grant awards: numerically similar with non-significant differences, with the comparison cohort having a slightly higher annual average number of proposals and a higher annual average amount proposed.

Table 1.

Annual Grant Productivity of Faculty in Clinical Faculty Scholars Program (CFSP) Cohort and Comparison Cohort of Junior Faculty by Period, University of Colorado Anschutz Medical Campus (CU-Anschutz), 2000–2011<sup>a</sup>

| Outcome | CFSP cohort (n = 25): pre-period | CFSP cohort: post-period | CFSP cohort: pre v. post, P<sup>b</sup> | Comparison cohort (n = 125): pre-period | Comparison cohort: post-period | Comparison cohort: pre v. post, P<sup>b</sup> | Between cohorts: pre v. pre, P<sup>c</sup> | Between cohorts: post v. post or Δ v. Δ, P<sup>c</sup> |
|---|---|---|---|---|---|---|---|---|
| Grant awards: mean, median (range) | | | | | | | | |
| Count per year | 0.20, 0.00 (0.00 to 1.50) | 1.15, 0.97 (0.00 to 3.12) | < .01 | 0.30, 0.00 (0.00 to 5.00) | 0.49, 0.00 (0.00 to 7.44) | .04 | .88 | < .01 |
| Delta (Δ) count per year | 0.94, 0.95 (−0.53 to 2.8) | | | 0.18, 0.00 (−1.59 to 7.44) | | | | < .01 |
| Dollars per year | $21,580, $0 ($0 to $255,998) | $105,008, $74,567 ($0 to $405,601) | < .01 | $26,742, $0 ($0 to $451,616) | $53,716, $0 ($0 to $969,419) | .02 | .99 | < .01 |
| Delta (Δ) dollars per year | $83,427, $57,585 (−$150,904 to $340,834) | | | $27,343, $0 (−$149,153 to $821,473) | | | | < .01 |
| Grant proposals: mean, median (range) | | | | | | | | |
| Count per year | 0.42, 0.00 (0.00 to 2.40) | 2.34, 2.16 (0.00 to 6.00) | < .01 | 0.77, 0.00 (0.00 to 6.50) | 1.07, 0.00 (0.00 to 9.36) | .02 | .83 | < .01 |
| Delta (Δ) count per year | 1.91, 2.16 (−0.22 to 4.29) | | | 0.30, 0.00 (−5.43 to 8.76) | | | | < .01 |
| Dollars per year | $50,032, $0 ($0 to $78,309) | $289,986, $263,955 ($116,302 to $355,734) | < .01 | $88,312, $0 ($0 to $54,000) | $170,574, $0 ($0 to $159,653) | < .01 | .95 | < .01 |
| Delta (Δ) dollars per year | $239,954, $168,569 ($40,490 to $271,933) | | | $82,262, $0 ($0 to $84,390) | | | | < .01 |
| No. years observed: mean, median (range) | 2.46, 1.75 (0.92 to 7.08) | 3.49, 3.17 (1.17 to 6.17) | | 2.46, 1.75 (0.92 to 7.08) | 3.49, 3.17 (1.17 to 6.17) | | | |
| Rates: % (no. awards/no. proposals) | | | | | | | | |
| Grant success rate | 46.9 (15/32) | 52.9 (91/172) | .53 | 42.5 (134/315) | 51.7 (275/532) | .01 | .64 | .78 |
| CDAs as proportion of total grant awards<sup>d</sup> | 0.0 (0/15) | 13.2 (12/91) | .21 | 7.5 (10/134) | 6.2 (17/275) | .62 | .60 | .03 |
| CDAs as proportion of total grant proposals<sup>d</sup> | 12.5 (4/32) | 18.0 (31/172) | .45 | 10.8 (34/315) | 6.8 (36/532) | .04 | .77 | < .01 |

Abbreviations: CDA indicates career development award.

<sup>a</sup>The faculty in the CFSP cohort (n = 25) entered the CFSP in the 2004–2009 program cohorts; the early-career faculty in the comparison cohort were matched 5:1 to CFSP faculty based on time in rank using date of hire/appointment and pre-period grant awards. Analyses of grant outcomes used data observable in the CU-Anschutz central administrative system, with grant proposal and grant award dates from January 1, 2000, through September 23, 2011, for grants on which the faculty served as principal investigator. For descriptions of the pre- and post-periods, cohort matching process, and data sources, see the Method section and Figure 1.

<sup>b</sup>Wilcoxon non-parametric tests or chi-square tests over time (between periods, within cohort).

<sup>c</sup>Wilcoxon non-parametric tests or chi-square tests between cohorts (within period or delta).

<sup>d</sup>NIH K-series awards or other CDAs.

Post-period grant metrics were calculated first as post-period annual rates and separately as change rates (delta pre- to post-period) for each cohort. The post-period counts and dollars of grant awards increased significantly in both the CFSP and comparison cohorts compared with the pre-period levels. Comparing the within-cohort deltas, the increase in the dollar value of annual awards was significantly larger for the CFSP cohort than for the comparison cohort (mean increase $83,427 vs. $27,343, P < .01). The same pattern emerged for average counts of awards: the CFSP cohort post-period count was roughly 1 award per person per year higher than in the pre-period (mean 0.20 vs. 1.15, P < .01), whereas the comparison cohort count increased by about 0.2 awards per person per year (mean 0.30 vs. 0.49, P = .04), a significant difference in differences (pre–post changes across the CFSP cohort vs. comparison cohort, delta 0.94 vs. 0.18, P < .01). Likewise for grant proposals, the CFSP cohort count increased from a mean of 0.42 per person per year to a mean of 2.34 (delta 1.91, P < .01), whereas the comparison cohort count increased from a mean of 0.77 to a mean of 1.07 (delta 0.30, P = .02). Thus, the CFSP cohort proposal count increased significantly more over time than the comparison cohort count (P < .01). Total dollars proposed increased in a similar pattern for the CFSP cohort relative to the comparison cohort ($239,954 vs. $82,262, P < .01).

Table 1 also reports grant success rates as conversion from proposals to awards (grants awarded out of grants proposed) across the two periods. Measured success rates in the pre-period were not significantly different for the CFSP and comparison cohorts (46.9% [15/32] vs. 42.5% [134/315], P = .64). From the pre- to post-period, the success rate rose non-significantly in the CFSP cohort (46.9% to 52.9%, P = .53) and significantly in the comparison cohort (42.5% to 51.7%, P = .01), and the post-period rates did not differ significantly between cohorts (P = .78). For CDAs, in the pre-period the two cohorts were non-significantly different: CDAs represented none (0/15) of the scholars’ pre-period grant awards and 12.5% (4/32) of their pre-period grant proposals. In the comparison cohort, CDAs represented 7.5% (10/134) of pre-period grant awards and 10.8% (34/315) of pre-period grant proposals. In the post-period, 18.0% (31/172) of the grants proposed by scholars were CDAs compared with 6.8% (36/532) of the grants proposed by comparison faculty (P < .01). CDAs were also a significantly higher proportion of total post-period awards to scholars, representing 13.2% (12/91) of grant awards to the CFSP cohort versus 6.2% (17/275) of grant awards to the comparison cohort (P = .03).
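For readers who want to check the between-cohort success-rate comparisons, the Python sketch below runs a Pearson chi-square test on a 2 × 2 table of awards and unfunded proposals. It assumes no continuity correction, which the article does not specify; under that assumption the two comparisons shown give P values that round to the .64 and .78 reported in Table 1. The function name is hypothetical.

```python
import math


def chi_square_2x2(successes_a, total_a, successes_b, total_b):
    """Pearson chi-square test (1 df, no continuity correction) for two proportions."""
    a, b = successes_a, total_a - successes_a   # cohort A: awarded, not awarded
    c, d = successes_b, total_b - successes_b   # cohort B: awarded, not awarded
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Pre-period success rates: CFSP 15/32 vs. comparison 134/315.
chi2_pre, p_pre = chi_square_2x2(15, 32, 134, 315)
# Post-period success rates: CFSP 91/172 vs. comparison 275/532.
chi2_post, p_post = chi_square_2x2(91, 172, 275, 532)
print(round(p_pre, 2), round(p_post, 2))
```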

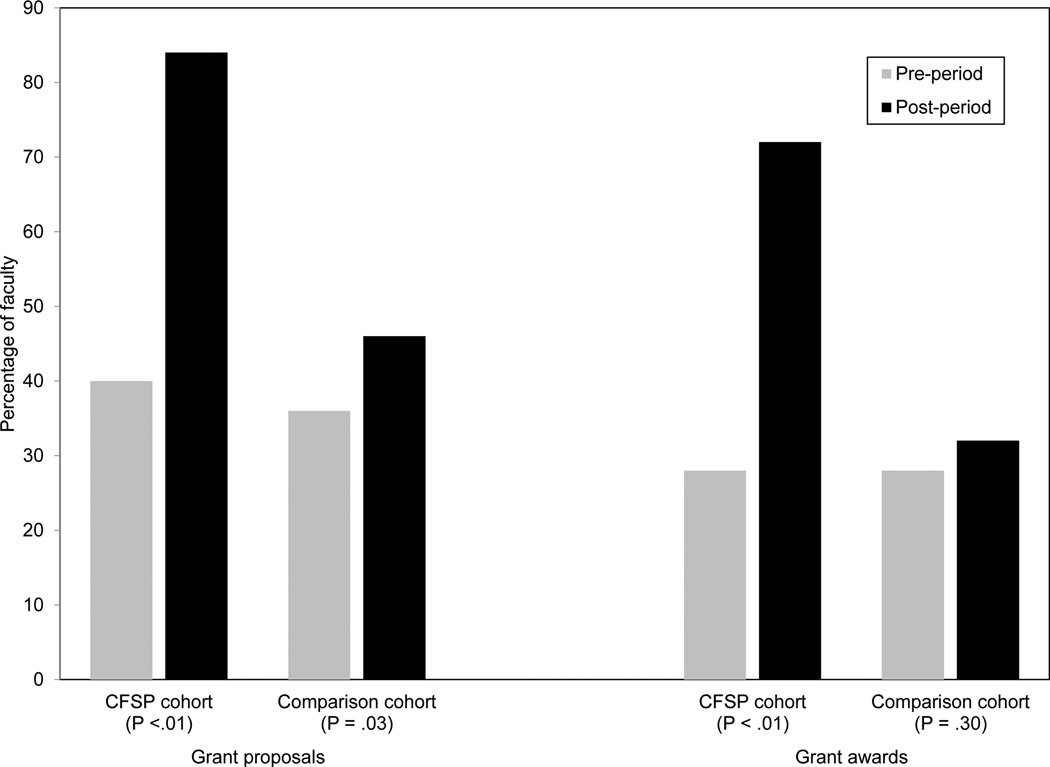

Figure 2 illustrates the proportion of each cohort that had at least 1 grant proposal or award, by period. The pre-period proportions of CFSP and comparison faculty with grant awards did not differ (both 28.0%; 7/25 and 35/125, respectively; pre-period grant dollars awarded were a match criterion). The post-period, however, showed a significant increase in the proportion of CFSP faculty with grant awards to 72.0% (18/25; P < .01) and a non-significant increase in the comparison cohort to 32.0% (40/125; P = .30) (difference-in-differences P < .01). There was a similar pattern for grant proposals in the post-period, with greater increases for the scholars versus the comparison faculty.

Figure 2.

Percentage of faculty in the Clinical Faculty Scholars Program (CFSP) cohort (n = 25) and comparison cohort (n = 125) with at least one grant proposal or grant award, by period. For descriptions of the pre- and post-periods, cohort matching process, and data sources, see the Method section.

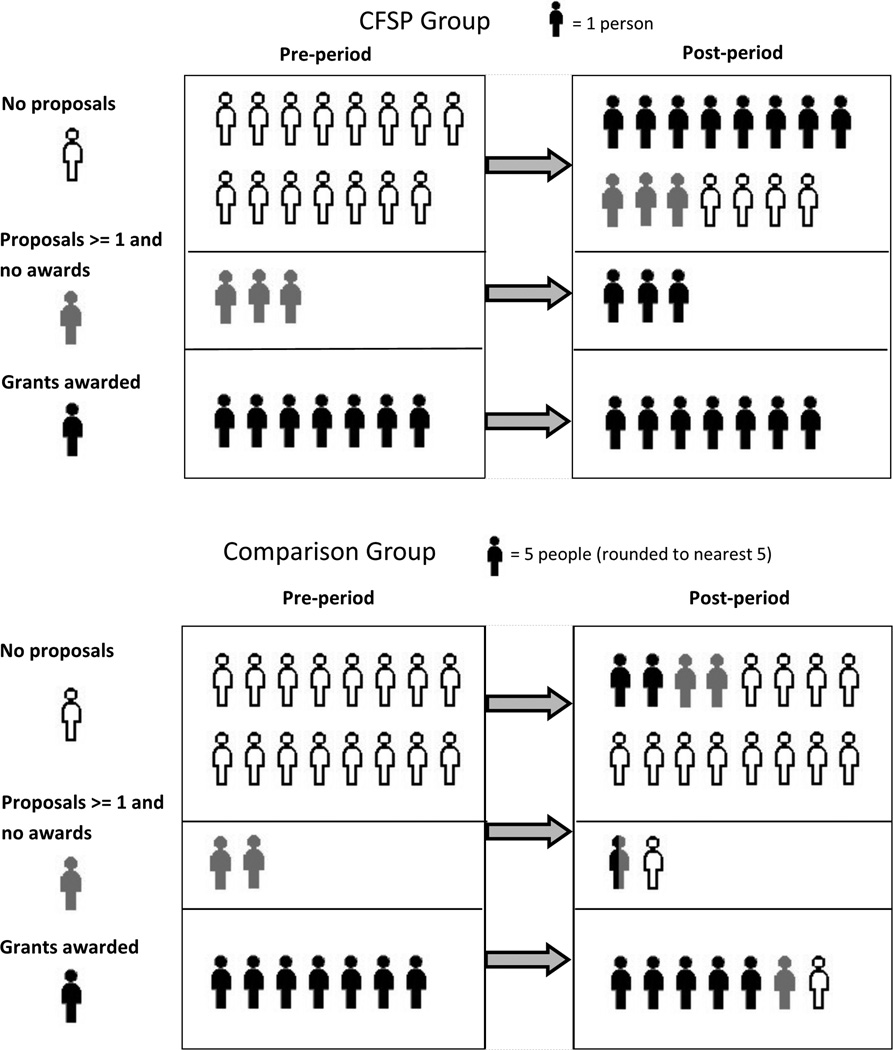

Figure 3 illustrates grant proposals, grant awards, and persistence by cohort. On the basis of pre-period grant activity, we divided the faculty in each cohort into three mutually exclusive categories: faculty with grants awarded, faculty with unfunded proposals (i.e., at least 1 proposal but no awards), and faculty with no proposals. In the pre-period, as reported above, the proportions of faculty with grants awarded were balanced: 28.0% (7/25) of scholars and 28.0% (35/125) of comparison faculty. Similarly, 40.0% (10/25) of scholars and 36.0% (45/125) of the comparison faculty had submitted at least 1 proposal (difference not significant, P = .70).

Figure 3.

Grant proposals, grant awards, and persistence, by period: Clinical Faculty Scholars Program (CFSP) cohort (n = 25) and comparison faculty cohort (n = 125). For descriptions of the pre- and post-periods, cohort matching process, and data sources, see the Method section.

Of the 60.0% (15/25) of scholars who did not submit a grant proposal in the pre-period, 73.3% (11/15) submitted proposals in the post-period (Figure 3). A similar proportion of comparison faculty did not submit proposals in the pre-period (64.0%, 80/125); in the post-period, 25.0% (20/80) of these faculty submitted proposals. (Four [16.0%] of the scholars and 60 [48.0%] of the comparison faculty had zero proposals in both periods.) All 10 scholars who submitted proposals in the pre-period (of whom 3 were not awarded and 7 were awarded grants in the pre-period) held awards in the post-period. Of the 10 comparison faculty with unfunded proposals in the pre-period, 50.0% (5/10) submitted proposals again in the post-period (of whom 2 were not awarded and 3 were awarded grants).

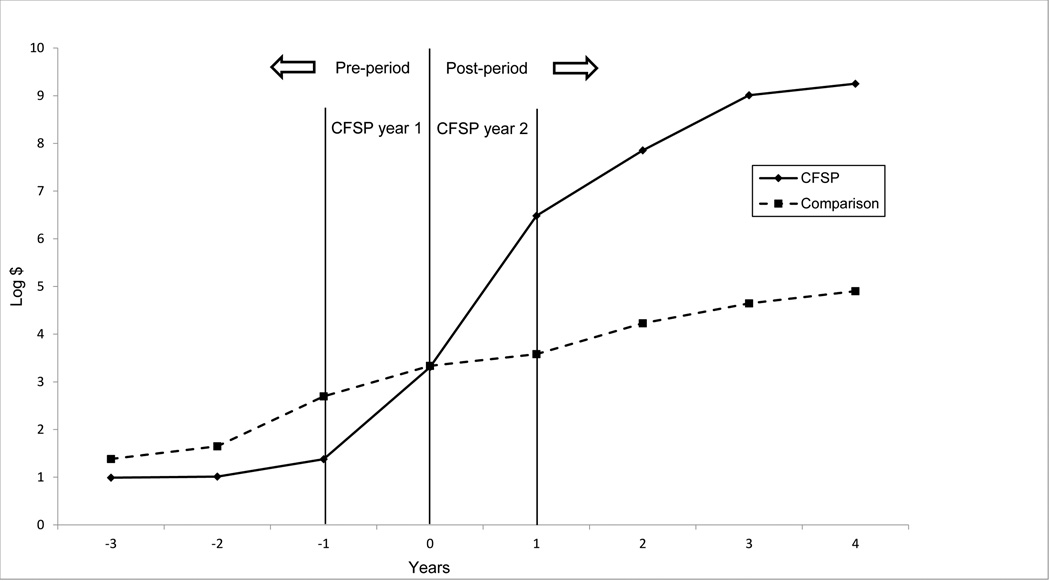

Figure 4 plots average grant award values over time by cohort. Mean dollars were transformed to a logarithmic scale because of dispersion and skew of the dollars, and $1 was added to every value so the zeroes would be determinate. The timeline was anchored on 0 as the midpoint between the first and second years in the CFSP or the matched equivalent period. The figure captures participation in proposals (non-zero tries for grants), the dollar amounts proposed, and the cumulative nature of the proposals and awards over time (Price × Quantity). The two cohorts started out at the leftmost point with low cumulative grant dollars at the time of hire/appointment, with the comparison cohort non-significantly higher than the CFSP cohort. During CFSP year 1, cumulative dollars awarded began to converge for the cohorts. In CFSP year 2, the cohorts reversed position as the comparison cohort’s cumulative award value increased nonsignificantly while the CFSP cohort’s cumulative award value continuously increased at a higher rate throughout the post-period. By the end of the observation period, and despite no significant gain in overall grant success rate, the CFSP cohort showed higher dollars awarded and higher counts of submitted proposals than those of the comparison cohort.

Figure 4.

Mean cumulative dollars awarded by year, plotted as log (cumulative dollars awarded + $1), Clinical Faculty Scholars Program (CFSP) cohort (n = 25) versus comparison faculty cohort (n = 125). The CFSP is a two-year program. For these calculations, time = 0 was set as the midpoint of the CFSP or matched equivalent period. For descriptions of the pre- and post-periods, cohort matching process, data sources, and calculations, see the Method section.
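The transformation behind Figure 4 can be sketched as follows. The article does not state the logarithm base or whether the mean was taken before or after the transformation, so this example assumes base 10 and takes the log of the cohort mean of cumulative dollars plus $1. The award values and the function name are invented for illustration.

```python
import math
from itertools import accumulate


def log_mean_cumulative(yearly_awards_by_person):
    """Return log10(mean cumulative dollars + 1) for each year on the timeline.

    yearly_awards_by_person holds one list per faculty member, with dollars
    awarded in each year, aligned on the same timeline.
    """
    cumulative = [list(accumulate(person)) for person in yearly_awards_by_person]
    n_people = len(cumulative)
    n_years = len(cumulative[0])
    mean_by_year = [
        sum(person[year] for person in cumulative) / n_people
        for year in range(n_years)
    ]
    # Adding $1 keeps the logarithm defined when no dollars have been awarded yet.
    return [math.log10(value + 1) for value in mean_by_year]


# Invented cohort of two people over three years (not study data).
cohort = [
    [0, 0, 199_998],  # first award arrives in year 3
    [0, 0, 0],        # no awards
]
curve = log_mean_cumulative(cohort)
print([round(point, 2) for point in curve])
```

Because the series is cumulative, the curve can only stay flat or rise, so a steeper slope indicates faster accumulation of award dollars.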

Discussion

This evaluation of the CFSP, a faculty development program with intensive interdisciplinary senior and peer mentorship and research strategies/skills training for early-career faculty, provides evidence of success in grant productivity. We used objective grant metrics from administrative records, a matched comparison cohort, and a quasi-experimental difference-in-differences design to separate the effects of the CFSP from the effects of time. The CFSP cohort showed significant increases in participation in grant proposals (i.e., more faculty submitting proposals for funding) and in persistence (i.e., more faculty who had tried and failed continued to try and subsequently were successful). These gains accumulated into significantly higher award dollars over time than in the matched comparison cohort. The comparison faculty (a selected, matched sample of “everybody else” of the same rank who had similar baseline awards) converted grant proposals to awards to some extent from the pre- to post-period, but comparison faculty who did not have awards in the pre-period generally did not submit or continue to submit in the post-period.

The comparison group is a key feature of this study. A potential criticism of the observed success of the CFSP cohort is that the program “cherry-picks” faculty, so that selection rather than the program explains the success; that is, CFSP faculty would be successful with or without the program. Because we recruit scholars from a small pool of junior faculty who are seeking careers in patient-centered clinical or outcomes research and because the program requires a departmental sponsor, we did not have a large pool of unsuccessful applicants to use as a comparison group. (We generally turn down 0–4 applicants annually.) Instead, we used objective criteria to attempt to control for pre-period grant success, which would be the best predictor of future grant success. Grant data were linked to human resource records to create a similarly experienced comparison cohort by also selecting on job titles and hire/appointment dates. This allowed us to exclude clinical assistant professors, who would not likely have grantsmanship as a job expectation, and to use hire/appointment start dates to anchor the observation periods.

Outcome measures of grant productivity were chosen as objective criteria that would indicate program success for the individual scholar and the departmental sponsor. Direct, monetary program value could also be assessed in terms of return on investment (ROI), with the dollar cost for tuition weighed against gains in grant funding. To calculate ROI, gains were calculated as the difference between cohorts in post-period total average award values, as follows: CFSP cohort total post-period award dollars per person minus the same figure for the comparison cohort = $369,018 – $272,022 = gain of $96,996 per person. Costs were tuition fees of $40,000 per scholar over the two-year program. We excluded the salary offset because those funds are spent for full-time tenure-eligible faculty irrespective of program participation and vary widely due to numerous unobservable factors not associated with program participation. This yielded an ROI of 142%, or ((96,996 – 40,000)/40,000) = 1.42, which could be interpreted as indicating that each dollar invested in the scholar’s CFSP tuition returns $2.42 in additional grant funding, a net gain of $1.42 after the invested dollar is recovered, more than doubling the investment. Although this ROI is only one measure of value, it suggests the CFSP provides an excellent value for the money in terms of extramural funding gains alone. It should be noted that this ROI likely underestimates the program’s value by ignoring direct and indirect effects such as collaborative awards, retention, satisfaction, engagement, workplace quality, culture, and recruitment, which we did not monetize.
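The ROI arithmetic can be checked with a short sketch. The dollar figures are those given in the text; the function and variable names are hypothetical and chosen only for illustration.

```python
def return_on_investment(gain, cost):
    """Net ROI: gain beyond the amount invested, per dollar invested."""
    return (gain - cost) / cost


# Post-period total award dollars per person, as stated in the text.
cfsp_post_award_per_person = 369_018
comparison_post_award_per_person = 272_022

# Tuition per scholar over the two-year program, as stated in the text.
tuition_cost = 40_000

# Gain attributed to the program: the between-cohort difference.
gain_per_person = cfsp_post_award_per_person - comparison_post_award_per_person

net_roi = return_on_investment(gain_per_person, tuition_cost)
gross_return_per_dollar = gain_per_person / tuition_cost

print(f"Gain per person: ${gain_per_person:,}")
print(f"Net ROI: {net_roi:.2f}")
print(f"Gross return per tuition dollar: ${gross_return_per_dollar:.2f}")
```

The net figure comes to about 1.42 and the gross figure to about 2.42; the two differ by exactly one because the net figure subtracts the dollar originally invested.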

There is evidence suggesting the CFSP has recruitment value. Across our campus, the program has become a tool for recruiting junior faculty interested in clinical and outcomes research. Over the past several years, we have observed that many scholars begin the CFSP on the first program start date (July 1) following their hire/appointment at the AHC. The CFSP provides an answer for departments to a recruit’s question of “What will you provide to help me be successful if I come here?” CFSP tuition fees and protected time have been built into letters of offer for use if and when the newly hired faculty member is accepted by the program. CFSP directors have joined recruitment interviews and have connected applicants to the program. In addition, there is evidence of workplace quality and culture value: CFSP directors, other senior faculty, and former scholars have established structured research career development programs inspired by the CFSP, such as the CCTSI Colorado Mentoring Training program (CO-Mentor, a structured program for pairs of junior and senior faculty), the Scientist Training and Intensive Mentoring in Emergency Medicine (EM-STIM) program, and the Surgical/Subspecialty Clinical Outcomes Research (SCORE) program. These and other CFSP-inspired programs are disseminating mentoring skills and building research capacity in a range of departments/divisions, such as OB/gynecology, gastroenterology, cardiology, pediatrics, medicine, and emergency medicine.

What is the mechanism of action for the CFSP? This evaluation did not explore that question, but the theory whose predictions seem closest is Bandura’s social cognitive theory and its core concept of a person’s belief in his or her own capabilities (i.e., perceived self-efficacy).22 The theory predicts that self-efficacy develops in four ways, each of which appeared consistent with what we saw in this evaluation: (1) mastery experiences, including failed tries, a normalization of the failure, and experience overcoming failure through perseverance; (2) social modeling, by seeing similar others succeed by perseverance; (3) social persuasion, whereby people are persuaded to believe in themselves and the payoff to perseverance; and (4) management of physical and emotional states (e.g., reducing anxiety or depression) and correcting misreading of emotional states (e.g., depression over rejection or anxiety over uncertainty).22 These aspects match our observations in this study and several years of program evaluations using annual surveys and focus group reports (data not shown).

From these sources and our own experiences in the program, we suspect that a handful of key elements play an important role in the CFSP effects: the “instant” mentoring team, a cadre of like-minded peers, and a place to belong. This program anchors new junior faculty members on campus, helps them make quick connections to colleagues and resources, and gives them a safe space to ask questions and share their challenges and successes. As a multidisciplinary group, the CFSP cohort resembles a study section review group more closely than a topically focused group. We have observed that when a scholar is able to write a grant proposal so that the whole group can understand the aims, that scholar typically goes on to be funded. Sometimes the ability to write and speak to content-area specialists, but not to educated non-specialists, acts as a barrier to getting grants funded.

Another key feature of the CFSP is the social network and norming that scholars provide to each other as peer mentors. Each cohort overlaps with others, so scholars have weekly interactions with about 15 other junior faculty members by the time they complete the CFSP (i.e., their own cohort plus the cohorts before and after theirs). Further, all CFSP alumni can be tapped as a network for any scholar; across campus, they act as an identifiable and active network of like-minded faculty with some shared career goals, enhancing scholars’ attachment to the institution. A formal example of this is Lean-In-CU, a Lean In Circle affinity group established by CFSP alumni to promote the success of women in medicine and science.23 These social dimensions have been shown to contribute to academic persistence15 and therefore advancement in rank (academic career success).

Limitations

Despite the strengths of this evaluation, there were limitations. The key dependent variables were grant proposals and grant awards with the faculty member as PI. Awards as PI are a critical metric of success and independence; the role of PI is one standard of excellence as it typically represents an independent line of inquiry awarded based on experience, expertise, and peer review. This metric is limited, however, by its inability to reflect multiple PI or co-investigator status, which may be significant scientifically and financially. For example, institutional K awards (K12/KL2) would be linked to the program PI and not to the junior faculty grantee/recipient despite the fact that a K12 award would cover a majority of release time for the junior faculty grantee’s research and bring with it resources, additional training, and mentorship. We were able to observe this type of funding only for the scholars through the CFSP’s annual reporting, and it served as a substantial source of early funding for these junior faculty members. However, it was not included in the grant metrics for this study because we had no way to obtain similar data for the comparison faculty. In addition, over time, as the emphasis of external funding shifts toward team-based science, the inability to observe the grant awards on which a scholar is co-investigator (institutional and national data sources record only the PI) may obscure important trends in the grant proposals submitted by early-career faculty.

Another limitation is that we were able to observe only grant proposals submitted through the CU-Anschutz administrative authority. Our study did not include grant proposals submitted through university affiliates such as the Veterans Administration, Denver Health, or Kaiser Permanente Colorado, because we had no way to obtain these records for the comparison cohort. It should be noted, however, that although all these limitations affect the absolute levels of reported grants, their effect on relative levels is minimized because these data underestimate grant productivity in both groups. There is no reason to expect that we would systematically miss more unmeasured grants in one cohort than in the other, particularly as all regular (tenure-eligible) faculty have academic appointments and deliver care in a variety of university or academic affiliate settings.

Administrative grant and employment records used in this study posed additional limitations: We had no access to individual or organizational variables such as gender, race/ethnicity, research training, years since training, department, start-up package, in-kind research resources (e.g., laboratory or administrative access), or protected time for research. Available data allowed matching on observable grant experience by using pre-period awarded dollars. This criterion was chosen in order to find non-CFSP faculty for whom there was some available time to submit proposals and some support from the department through which the grant was routed, presumably such that the comparison faculty had received PI awards on a similar scale to the CFSP faculty. Nevertheless, whenever decisions were made on matching, the choices favored the comparison group; any resulting (non-significant) disadvantage fell on the CFSP faculty.

Conclusions

In summary, the CFSP is an innovative faculty-led program that is financially sustainable and enhances junior faculty grant productivity over multiple years. Furthermore, the success of the CFSP supports its strategic contribution to the continued growth of the AHC’s research portfolio, which is particularly important at a time when well-established senior researchers are progressing toward retirement. Bringing in talented faculty to grow the research enterprise is an expensive strategy, especially if they are not prepared to persevere to obtain grant funding. Developing the capability of people starting their careers and already on the faculty is both cost-effective and humane. We have shown that, with the right resources, junior faculty from a wide range of disciplines can be trained for extramural grant success and that the resulting productivity is observable on average after one year of this training and grows over time. Persistence in grantsmanship by scholars has kept talented faculty researchers at CU-Anschutz flourishing academically, thus enhancing our AHC’s mission. Although the findings of this single-institution evaluation may not generalize, the CFSP’s principles may assist other AHCs as they work to build the medical science workforce.

Acknowledgments

The authors wish to thank Lisa Affleck, University of Colorado Office of Human Resources, for providing database queries and assistance with analytic data source creation; John Steiner, MD, MPH, founding director of the Clinical Faculty Scholars Program (CFSP) and the Education, Training, and Career Development (ETCD) Core of the Colorado Clinical Translational Sciences Institute (CCTSI) for creative vision and leadership in mentored research training; and Marc Moss, MD, current ETCD Core director for supporting the CFSP and CFSP-inspired structured mentoring training programs as central to the “educational pipeline” for clinical translational science.

Funding/Support: The Clinical Faculty Scholars Program is supported in part by NIH/NCATS Colorado CTSA grant UL1 RR025780. Content in this manuscript is the sole responsibility of the authors and does not necessarily represent official NIH views. This evaluation was unfunded.

Footnotes

Supplemental digital content for this article is available at [LWW INSERT LINK].

Other disclosures: None reported.

Ethical approval: This program evaluation was designed such that it did not meet criteria for human subject research, and was certified as such by the Colorado Multiple Institutional Review Board (protocol 15–1819, October 5, 2015).

Contributor Information

Anne M. Libby, Department of Emergency Medicine, University of Colorado School of Medicine, Anschutz Medical Campus, Aurora, Colorado.

Patrick W. Hosokawa, Adult and Child Consortium for Outcomes Research and Dissemination Sciences (ACCORDS), University of Colorado Anschutz Medical Campus, Aurora, Colorado.

Diane L. Fairclough, Department of Biostatistics, Colorado School of Public Health, University of Colorado Anschutz Medical Campus, Aurora, Colorado.

Allan V. Prochazka, Department of Medicine, University of Colorado School of Medicine, Anschutz Medical Campus, Aurora, Colorado and Department of Veterans Affairs.

Pamela J. Jones, Department of Pediatrics, School of Medicine, University of Colorado Anschutz Medical Campus, Aurora, Colorado.

Adit A. Ginde, Department of Emergency Medicine, University of Colorado School of Medicine, Anschutz Medical Campus, Aurora, Colorado.

References

- 1. Bakken LL. An evaluation plan to assess the process and outcomes of a learner-centered training program for clinical research. Medical Teacher. 2002;24(2):162–168. doi: 10.1080/014215902201252.

- 2. Blixen CE, Papp KK, Hull AL, Rudick RA, Bramstedt KA. Developing a mentorship program for clinical researchers. Journal of Continuing Education in the Health Professions. 2007;27(2):86–93. doi: 10.1002/chp.105.

- 3. Colon-Emeric CS, Bowlby L, Svetkey L. Establishing faculty needs and priorities for peer-mentoring groups using a nominal group technique. Medical Teacher. 2012;34(8):631–634. doi: 10.3109/0142159X.2012.669084.

- 4. DeCastro R, Sambuco D, Ubel PA, Stewart A, Jagsi R. Mentor networks in academic medicine: Moving beyond a dyadic conception of mentoring for junior faculty researchers. Academic Medicine. 2013;88(4):488–496. doi: 10.1097/ACM.0b013e318285d302.

- 5. Guise J-M, Nagel JD, Regensteiner JG; Building Interdisciplinary Research Careers in Women’s Health Directors. Best practices and pearls in interdisciplinary mentoring from Building Interdisciplinary Research Careers in Women’s Health Directors. Journal of Womens Health. 2012;21(11):1114–1127. doi: 10.1089/jwh.2012.3788.

- 6. Steiner JF. Promoting mentorship in translational research: Should we hope for Athena or train Mentor? Academic Medicine. 2014;89(5):702–704. doi: 10.1097/ACM.0000000000000205.

- 7. Tillman RE, Jang S, Abedin Z, Richards BF, Spaeth-Rublee B, Pincus HA. Policies, activities, and structures supporting research mentoring: A national survey of academic health centers with Clinical and Translational Science Awards. Academic Medicine. 2013;88(1):90–96. doi: 10.1097/ACM.0b013e3182772b94.

- 8. Tsen LC, Borus JF, Nadelson CC, Seely EW, Haas A, Fuhlbrigge AL. The development, implementation, and assessment of an innovative faculty mentoring leadership program. Academic Medicine. 2012;87(12):1757–1761. doi: 10.1097/ACM.0b013e3182712cff.

- 9. Shaw KB, Mist S, Dixon MW, et al. The Oregon Center for Complementary and Alternative Medicine career development program: Innovation in research training for complementary and alternative medicine. Teaching and Learning in Medicine. 2003;15(1):45–51. doi: 10.1207/S15328015TLM1501_09.

- 10. Bertram A, Yeh HC, Bass EB, Brancati F, Levine D, Cofrancesco J. How we developed the GIM clinician-educator mentoring and scholarship program to assist faculty with promotion and scholarly work. Medical Teacher. 2015;37(2):131–135. doi: 10.3109/0142159X.2014.911269.

- 11. Coates WC, Love JN, Santen SA, et al. Faculty development in medical education research: A cooperative model. Academic Medicine. 2010;85(5):829–836. doi: 10.1097/ACM.0b013e3181d737bc.

- 12. Herbert JL, Borson S, Phelan EA, Belza B, Cochrane BB. Consultancies: A model for interdisciplinary training and mentoring of junior faculty investigators. Academic Medicine. 2011;86(7):866–871. doi: 10.1097/ACM.0b013e31821ddad0.

- 13. Johnson MO, Subak LL, Brown JS, Lee KA, Feldman MD. An innovative program to train health sciences researchers to be effective clinical and translational research mentors. Academic Medicine. 2010;85(3):484–489. doi: 10.1097/ACM.0b013e3181cccd12.

- 14. Pfund C, House SC, Asquith P, et al. Training mentors of clinical and translational research scholars: A randomized controlled trial. Academic Medicine. 2014;89(5):774–782. doi: 10.1097/ACM.0000000000000218.

- 15. Manson SM. Personal journeys, professional paths: Persistence in navigating the crossroads of a research career. American Journal of Public Health. 2009;99:S20–S25. doi: 10.2105/AJPH.2007.133603.

- 16. Parker S. Robert Wood Johnson Foundation Physician Faculty Scholars Program, 2005–2012. Princeton, New Jersey: Robert Wood Johnson Foundation. http://www.rwjf.org/en/library/research/2012/01/robert-wood-johnson-foundation-physician-faculty-scholars-progra.html. Accessed April 28, 2016.

- 17. Beckerle MC, Reed KL, Scott RP, et al. Medical faculty development: A modern-day odyssey. Science Translational Medicine. 2011;3(104):104cm131. doi: 10.1126/scitranslmed.3002763.

- 18. Rubio DM, Primack BA, Switzer GE, Bryce CL, Seltzer DL, Kapoor WN. A comprehensive career-success model for physician-scientists. Academic Medicine. 2011;86:1571–1576. doi: 10.1097/ACM.0b013e31823592fd.

- 19. Marsh JD, Todd RF 3rd. Training and sustaining physician scientists: What is success? American Journal of Medicine. 2015;128(4):431–436. doi: 10.1016/j.amjmed.2014.12.015.

- 20. Lord JA, Mourtzanos E, McLaren K, Murray SB, Kimmel RJ, Cowley DS. A peer mentoring group for junior clinician educators: Four years' experience. Academic Medicine. 2012;87(3):378–383. doi: 10.1097/ACM.0b013e3182441615.

- 21. Bandura A. Social Learning Theory. Morristown, NJ: General Learning Press; 1971.

- 22. Bandura A. On the functional properties of perceived self-efficacy revisited. Journal of Management. 2012;38(1):9–44.

- 23. Betz M, Libby AM. Lean In CU. Lean In Circles. http://leanincircles.org/circle/lean-in-cu. Accessed April 26, 2016.
