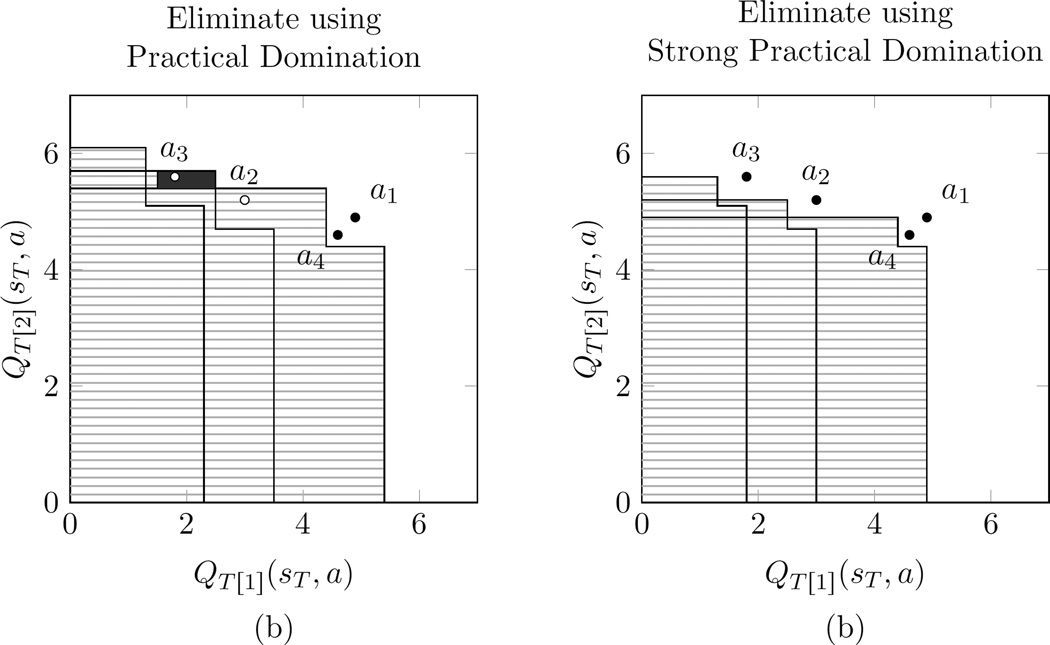

Figure 4. Comparison of rules for eliminating actions. In this simple example, suppose the Q-vectors $\big(Q_T^{[1]}(s_T, a), Q_T^{[2]}(s_T, a)\big)$ are $(4.9, 4.9)$, $(3, 5.2)$, $(1.8, 5.6)$, and $(4.6, 4.6)$ for $a_1$, $a_2$, $a_3$, and $a_4$, respectively, and suppose $\Delta_1 = \Delta_2 = 0.5$. Figure 4(a): Under the Practical Domination rule, action $a_4$ is not eliminated by $a_1$ because it is not much worse according to either basis reward, as judged by $\Delta_1$ and $\Delta_2$. Action $a_2$ is eliminated by $a_1$: although it is slightly better than $a_1$ according to basis reward 2, it is much worse according to basis reward 1. Similarly, $a_3$ is eliminated by $a_2$. Note the small solid rectangle to the left of $a_2$: points in this region (including $a_3$) are dominated by $a_2$ but not by $a_1$. Thus $a_1$ dominates $a_2$ and $a_2$ dominates $a_3$, yet $a_1$ does not dominate $a_3$. This non-transitivity shows that Practical Domination is not a partial order. Figure 4(b): Under Strong Practical Domination, which is a partial order, no actions are eliminated, and there are no regions of non-transitivity.
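As a sanity check on the numbers in the caption, the Python sketch below applies a Practical Domination test to the four Q-vectors. The rule it encodes is an assumption inferred from this caption, not quoted from the paper's formal definition. Under this rule, action $b$ eliminates action $a$ when $b$ beats $a$ by more than $\Delta_k$ on at least one basis reward $k$, and $a$ never beats $b$ by more than $\Delta_j$ on any basis reward $j$. Strong Practical Domination is not modeled, because its definition does not appear here. The helper names `practically_dominates` and `eliminated` are hypothetical.

```python
from itertools import permutations

# Q-vectors (Q_T^[1](s_T, a), Q_T^[2](s_T, a)) from the Figure 4 example.
Q = {
    "a1": (4.9, 4.9),
    "a2": (3.0, 5.2),
    "a3": (1.8, 5.6),
    "a4": (4.6, 4.6),
}
DELTA = (0.5, 0.5)  # tolerances Delta_1, Delta_2


def practically_dominates(b, a, delta=DELTA):
    """Return True if Q-vector b eliminates Q-vector a.

    ASSUMED rule (inferred from the caption, not the paper's definition):
    b is better than a by more than Delta_k on at least one basis reward,
    and a is never better than b by more than Delta_j on any basis reward.
    """
    much_better = any(qb - qa > d for qb, qa, d in zip(b, a, delta))
    never_much_worse = all(qa - qb <= d for qb, qa, d in zip(b, a, delta))
    return much_better and never_much_worse


def eliminated(q, delta=DELTA):
    """Map each eliminated action name to the names of actions that eliminate it."""
    result = {}
    for name_b, name_a in permutations(q, 2):
        if practically_dominates(q[name_b], q[name_a], delta):
            result.setdefault(name_a, []).append(name_b)
    return result


print(eliminated(Q))  # {'a2': ['a1'], 'a3': ['a2']}

# Non-transitivity: a1 eliminates a2 and a2 eliminates a3,
# yet a1 does not eliminate a3.
print(practically_dominates(Q["a1"], Q["a2"]))  # True
print(practically_dominates(Q["a2"], Q["a3"]))  # True
print(practically_dominates(Q["a1"], Q["a3"]))  # False
```

Under this assumed rule, only $a_1$ and $a_4$ survive. The same rule also produces the caption's small rectangle: the points dominated by $a_2$ but not by $a_1$ satisfy $x < 2.5$ and $5.4 < y \le 5.7$, a region that contains $a_3 = (1.8, 5.6)$.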