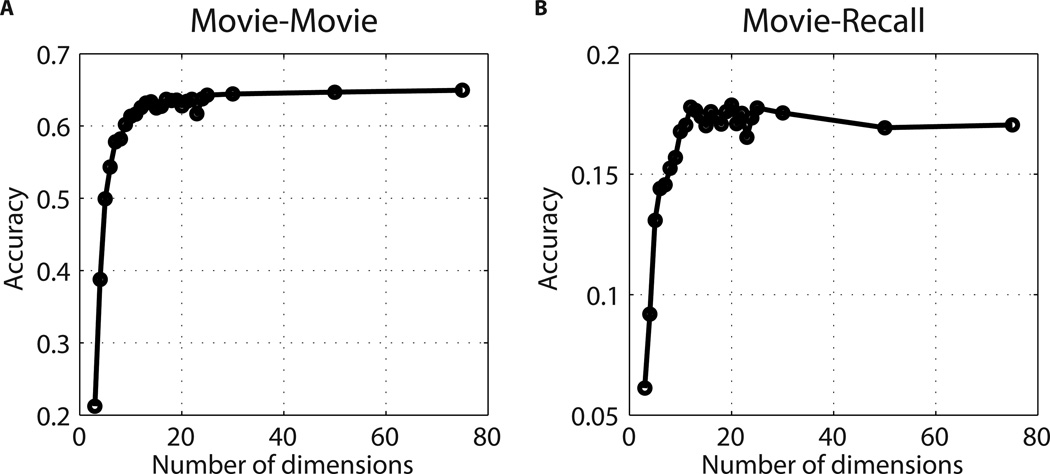

Figure 5. Dimensionality of shared patterns.

To quantify the number of distinct dimensions of the spatial patterns that are shared across brains and can contribute to the classification of neural responses, we used the Shared Response Model (SRM). This algorithm operates over a series of data vectors (in this case, multiple participants’ brain data) and finds a common representational space of lower dimensionality. Using SRM in the PMC region, we asked two questions: when the data are reduced to k dimensions, how does this affect scene-level classification across brains, and how many dimensions generalize from movie to recall? A) Results when using the movie data in the PMC region (movie-movie). Classification accuracy improves as the number of dimensions k increases; it starts to plateau around k = 15 but is still rising at k = 50. (Chance level: 0.04.) B) Results when training SRM on the movie data and then classifying recall scenes across participants in the PMC region (recall-recall). Classification accuracy improves as the number of dimensions increases, reaching its maximum at k = 12. Note that there could be additional shared dimensions, unique to the recall data, that would not be accessible via these analyses.
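The logic of this analysis can be illustrated with a minimal sketch, which is not the pipeline used for the figure. It assumes a simple deterministic SRM variant (alternating orthogonal Procrustes updates), a leave-one-participant-out correlation classifier, and synthetic data in place of fMRI data. The function names `fit_srm`, `classify_scenes`, and `run_demo`, and all sizes and noise levels, are hypothetical choices made for illustration.

```python
import numpy as np

def fit_srm(data, k, n_iter=20, seed=0):
    """Deterministic SRM sketch (an assumption, not the published estimator).

    data: list of (voxels_i, samples) arrays, one per participant.
    Returns per-participant orthonormal bases (voxels_i, k) and the
    shared response (k, samples).
    """
    rng = np.random.default_rng(seed)
    # Start from random orthonormal bases, one per participant.
    bases = [np.linalg.qr(rng.standard_normal((x.shape[0], k)))[0] for x in data]
    for _ in range(n_iter):
        # Shared response: average of the participants' projected data.
        shared = np.mean([w.T @ x for w, x in zip(bases, data)], axis=0)
        # Basis update: orthogonal Procrustes solution for each participant.
        new_bases = []
        for x in data:
            u, _, vt = np.linalg.svd(x @ shared.T, full_matrices=False)
            new_bases.append(u @ vt)
        bases = new_bases
    shared = np.mean([w.T @ x for w, x in zip(bases, data)], axis=0)
    return bases, shared

def classify_scenes(projected):
    """Leave-one-participant-out scene classification in the shared space.

    projected: list of (k, scenes) arrays with k >= 3. Each scene pattern of
    the held-out participant is assigned to the scene of the others' average
    with which it correlates most strongly.
    """
    accuracies = []
    for i, held_out in enumerate(projected):
        others = np.mean([p for j, p in enumerate(projected) if j != i], axis=0)
        n_scenes = others.shape[1]
        # Rows of the cross-correlation block: held-out scenes; columns: others' scenes.
        corr = np.corrcoef(held_out.T, others.T)[:n_scenes, n_scenes:]
        accuracies.append(np.mean(np.argmax(corr, axis=1) == np.arange(n_scenes)))
    return float(np.mean(accuracies))

def run_demo(ks=(3, 5, 10), n_subj=4, n_vox=50, n_train=200,
             n_scenes=25, n_latent=10, noise=0.1, seed=0):
    """Synthetic stand-in: fit on 'training' data, classify held-out 'scenes'."""
    rng = np.random.default_rng(seed)
    # Each synthetic participant mixes the same latent signal differently.
    mix = [np.linalg.qr(rng.standard_normal((n_vox, n_latent)))[0] for _ in range(n_subj)]
    z_train = rng.standard_normal((n_latent, n_train))
    z_test = rng.standard_normal((n_latent, n_scenes))
    train = [a @ z_train + noise * rng.standard_normal((n_vox, n_train)) for a in mix]
    test = [a @ z_test + noise * rng.standard_normal((n_vox, n_scenes)) for a in mix]
    results = {}
    for k in ks:
        bases, _ = fit_srm(train, k, seed=seed)
        # Project held-out data with the bases learned from training data.
        projected = [w.T @ y for w, y in zip(bases, test)]
        results[k] = classify_scenes(projected)
    return results

if __name__ == "__main__":
    for k, acc in run_demo().items():
        print(f"k={k}: accuracy={acc:.2f}")
```

In this sketch the bases are learned from one dataset and then applied unchanged to held-out data, which mirrors the idea in panel B of training on movie data and testing on recall. Sweeping `ks` shows how accuracy depends on the number of retained dimensions; the numbers it produces describe only the synthetic data.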