Abstract

Human adults automatically mimic others' emotional expressions, which is believed to contribute to sharing emotions with others. Although this behaviour appears fundamental to social reciprocity, little is known about its developmental process. Therefore, we examined whether infants show automatic facial mimicry in response to others' emotional expressions. Facial electromyographic activity over the corrugator supercilii (brow) and zygomaticus major (cheek) of four- to five-month-old infants was measured while they viewed dynamic clips presenting audiovisual, visual and auditory emotions. The audiovisual bimodal emotion stimuli were a display of a laughing/crying facial expression with an emotionally congruent vocalization, whereas the visual/auditory unimodal emotion stimuli displayed those emotional faces/vocalizations paired with a neutral vocalization/face, respectively. Increased activation of the corrugator supercilii muscle in response to audiovisual cries and of the zygomaticus major in response to audiovisual laughter was observed between 500 and 1000 ms after stimulus onset, which suggests rapid facial mimicry. By contrast, neither visual nor auditory unimodal emotion stimuli activated the infants' corresponding muscles. These results indicate that automatic facial mimicry is present as early as five months of age when multimodal emotional information is available.

Keywords: facial mimicry, EMG, infants, emotion

1. Introduction

Humans often spontaneously and unconsciously match their behaviours to those of others. In particular, the matching of facial expressions, often termed facial mimicry, serves various social functions that support smooth social interaction. Facial mimicry has often been assessed by measuring facial electromyographic (EMG) activity during observation of facial expressions, which enables the detection of subtle, visually imperceptible reactions [1,2]. For example, observation of negative facial expressions (anger, sadness or crying) activates the observer's corrugator supercilii (brow), whereas observation of positive facial expressions (happiness, smiling, laughter) activates the observer's zygomaticus major (cheek). This distinct pattern of facial reactions to different emotions can be observed as soon as 500 ms after exposure to facial stimuli [3–5]. Interestingly, such responses can be evoked across modalities. For instance, people activate the facial muscles involved in expressing a certain emotion even when they see others' emotional bodily gestures or hear their voices [6–9]. These findings suggest that facial mimicry reflects a multimodal re-enactment of one's own sensory, motor and affective experiences, which occurs in response to signals in any modality (i.e. embodied emotion theory) [10]. Moreover, a similar response can be observed even when participants are unaware of the stimulus owing to a short presentation time [11,12] or when participants are affected by cortical blindness [13]. These findings further suggest that facial mimicry of emotional stimuli involves subconsciously controlled processes.

The automatic nature of mimicry raises the important question of whether the system for facial mimicry is innate or acquired later. Some researchers have argued that there is an inborn connection between ‘seeing’ and ‘doing’, known as a sensory–motor coupling system [14–16]. This view is supported by findings that new-born infants mimic the orofacial actions of others, a phenomenon referred to as neonatal imitation [17] (see a review by Simpson et al. [16]). However, evidence for this appears very limited, because only a few actions (e.g. tongue protrusion; mouth opening) were reliably mimicked and several studies failed to replicate the results [18–22]. In particular, few reports exist of new-born infants mimicking emotional facial expressions (but see [23]). Neonatal imitation is thus likely to be a specific reaction to a particular condition, which should be differentiated from general facial mimicry; facial mimicry in reaction to emotional expressions may not be present from birth and may instead be acquired postnatally.

This raises an important question: when and how does facial mimicry emerge during postnatal development? Previous studies reported that mothers' emotional expressions induce similar categories of facial expressions in three-month-old infants [24–26]. However, this behaviour seems to be present only when emotional expressions are displayed by the infant's mother, and not by strangers [26]. Therefore, it is still unclear to what extent these responses can be considered equivalent to the automatic and rapid facial reactions that have been investigated in adults. To address this question, EMG measurement is useful, as it allows even subtle responses of facial muscles to be analysed objectively and with high temporal resolution. Nevertheless, no study has examined EMG reactions to facial emotions in children younger than 3 years [27]. This study therefore examined young infants' facial EMG activities in response to others' emotional expressions. We focused particularly on four- to five-month-olds because it has been suggested that infants start to discriminate several emotions around this age [28,29], and we speculated that automatic motor responses to others' emotions would become differentiated following a developmental sequence similar to that of emotion recognition.

We also investigated how infants react to different modalities of emotional display. Past studies on emotion recognition have proposed that infants first become able to discriminate emotions from audiovisual bimodal stimuli, and that this ability later expands to unimodal auditory and visual stimuli [28,29]. We speculated that a consistent developmental sequence might be present in the mimicry domain, in which four- to five-month-old infants would demonstrate facial mimicry to audiovisual emotional displays, but might not do so to unimodal stimuli. We therefore measured infants' EMG activities over the corrugator supercilii and zygomaticus major muscles while they viewed adults dynamically expressing crying, laughing and neutral emotions in audiovisual, visual and auditory modalities. To capture the infant's attention equally across conditions, stimuli of every modality condition involved both visual and auditory information, but the conditions were differentiated by the modalities that convey ‘emotional’ information. That is, while the audiovisual bimodal emotion stimuli were a display of a laughing/crying facial expression with an emotionally congruent vocalization, the visual/auditory unimodal emotion stimuli displayed emotional faces or vocalizations paired with a neutral vocalization or face, respectively. Audiovisual physical synchrony, regardless of emotional content, is known to affect the speed or threshold of perception [30–33]. We therefore employed audiovisual asynchronous stimuli (that were emotionally congruent) in the AV condition, which allowed us to compare the three conditions fairly: they differed in the modality conveying emotional information, but not in their physical and temporal synchrony.

2. Material and methods

(a). Participants

The final sample consisted of 15 full-term four- to five-month-old infants (nine males and six females, mean age = 154.6 days; standard deviation (s.d.) = 10.0 days; ranging from 140 to 169 days). An additional 25 infants were recruited, but were excluded from the analysis for the following reasons. Sixteen of them did not provide the minimum dataset, which required at least one trial from each of the seven experimental conditions, because they cried before (mostly during electrode attachment) or while viewing the stimulus clips; three infants had frequent body movements during the EMG recordings, which made data processing difficult; the other six infants were excluded owing to experimental mistakes.

(b). Apparatus

Video clips were presented on a 23-inch monitor with a resolution of 1920 × 1080 pixels (ColorEdge CS230, Eizo, Japan), which was placed at a distance of approximately 40 cm from the infant sitting on an experimenter's lap. Sounds were presented by a pair of speakers placed behind both sides of the monitor. A video camera that was concealed from the infants was mounted on the top edge of the monitor. EMG activity was recorded by a bioamplifier, PolymateII AP2516 (Teac), which was also hidden from the infant's view.

(c). Stimuli and procedure

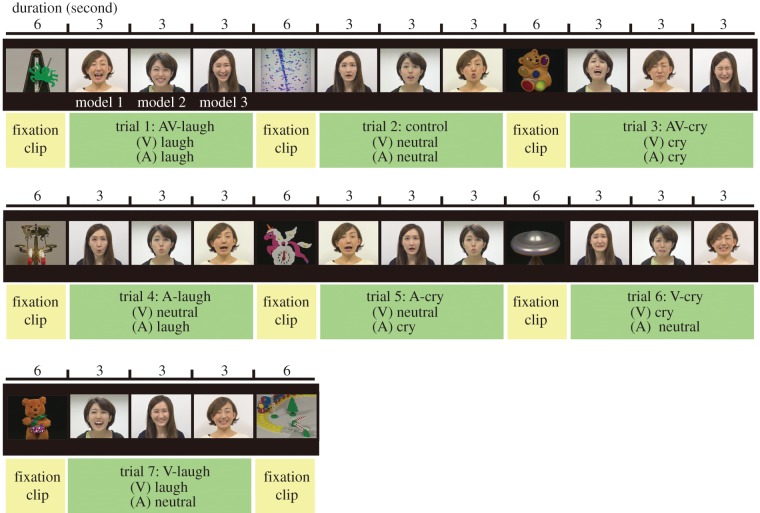

Infants viewed a series of video clips while their facial EMG activities over the left corrugator supercilii and zygomaticus major were recorded. The video consisted of three stimulus sets. A schema of the presentation during a stimulus set is shown in figure 1. Each stimulus set contained seven trials, which presented all stimulus conditions: 2 emotion-types (laughing and crying) × 3 emotion-modalities (audiovisual, AV; visual, V; auditory, A) and one additional control condition. Modalities refer to those related to emotion; that is, all stimuli contained both visual and auditory information, but in the unimodal (V and A) emotion conditions, an emotional facial expression or vocalization was paired with a neutral vocalization or facial expression, respectively. The AV condition had an emotionally consistent facial expression and vocalization, and the control condition involved a neutral facial expression with a neutral vocalization in which models made vocalizations such as ‘ah-ah’, ‘uh-uh’ or ‘oh-oh’. During production of the clips, facial expressions and vocalizations were recorded separately (i.e. they were not extracted from a common source) and integrated afterwards, so that visual and auditory information were not completely synchronized with each other, even for the stimuli in the AV condition. This manipulation allowed us to compare the AV, V and A conditions fairly: they differed in the modality conveying emotional information, but not in their physical and temporal synchrony. Each trial included three consecutive presentations of stimuli of the same condition, performed by three different female models, with each presentation lasting 3 s. The presentation order of the three models was determined randomly across trials. Before each trial, a 6 s fixation clip was presented to capture the infant's attention. The fixation clips were selected from a commercially released DVD, Baby Mozart (The Walt Disney Co.), which presented various images of brightly coloured toys or visually captivating objects with classical music. Thus, each stimulus set lasted 111 s and almost 5.5 minutes were required to present the three stimulus sets. However, the experiment was terminated immediately if the infant became distracted or fussy. Data from infants who did not complete at least one trial in each of the seven conditions were excluded from the analysis.

Figure 1.

A schema of the stimulus video clip. A stimulus set involving seven experimental conditions, each of which consisted of a 6 s fixation clip, followed by the three 3 s clips presented by different models.

Infants were seated on an experimenter's lap; their arms were held by the experimenter. During stimulus presentation, the experimenter looked not at the monitor but at the wall just above it, and she did not react to any of the infant's behaviour. Parents were seated at some distance behind the infant and were not visible to the infant.

(d). Electromyographic recording and analysis

To measure facial surface muscle activity, gold active electrodes were attached over the left corrugator supercilii and zygomaticus major muscles, following the guidelines by Fridlund & Cacioppo [34]. Ground electrodes were placed on the forehead. Activity over each muscle was continuously recorded at a sampling rate of 1000 Hz with a 60 Hz notch filter.

Raw signals were filtered offline (bandpass: 10–400 Hz) and rectified. The signals were then screened in the following manner. First, the recorded videotapes were checked, and the trials during which infants were not attending to the stimuli were removed. Next, to remove extreme changes in activity caused by body movements, blinks or overt smiles, samples deviating by more than 3 s.d. from the mean of the bandpass-filtered signal were detected and removed, together with the 100 points (100 ms) before and after each detected sample. This procedure allowed us to analyse only very reliable data, and the data thus reflected relatively subtle muscle activation. The averaged signals from 250 to 1250 ms before the stimulus onset (during the presentation of the fixation stimulus) were calculated for each fixation clip, and the mean value of these was used as the baseline activity. Activities during stimulus presentation were expressed as percentage changes with respect to this baseline (see electronic supplementary material, figure S1 for the waveforms represented by the percentage of baseline activity during stimulus presentation). To focus on the time course of the muscle activation after the stimulus onset, reactions to the clips with the three different models and those in different stimulus sets (if available) were combined, and the averaged activity during the presentation of the 3 s clip was obtained for each condition. Finally, for the purpose of analysis, the activity percentages in each condition were binned by averaging the data within each 500 ms interval after the stimulus onset, which provided six time-windows (0–500, 500–1000, … 2500–3000 ms post-stimulus), in accordance with the earlier notion that any distinct muscle response to the stimuli was expected to be detectable after 500 ms of exposure [5].
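The screening, baseline-normalization and time-window steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the input is assumed to be an already bandpass-filtered trace sampled at 1000 Hz, the baseline is computed per trial rather than averaged across fixation clips, and all function names (`screen_and_rectify`, `percent_of_baseline`, `window_means`) are hypothetical.

```python
from statistics import mean, pstdev

FS = 1000  # sampling rate in Hz (one sample per millisecond)


def screen_and_rectify(filtered, n_sd=3.0, pad=100):
    """Rectify a bandpass-filtered trace and mark artefacts as None.

    Samples deviating more than n_sd s.d. from the mean of the filtered
    trace are rejected together with `pad` samples on either side.
    """
    mu, sd = mean(filtered), pstdev(filtered)
    rectified = [abs(x) for x in filtered]
    for i, x in enumerate(filtered):
        if abs(x - mu) > n_sd * sd:
            lo, hi = max(0, i - pad), min(len(filtered), i + pad + 1)
            rectified[lo:hi] = [None] * (hi - lo)
    return rectified


def mean_ignoring_rejected(values):
    """Mean of the samples that survived screening (None if none did)."""
    kept = [v for v in values if v is not None]
    return mean(kept) if kept else None


def percent_of_baseline(trial, onset, fs=FS):
    """Express post-onset activity as a percentage of the pre-onset baseline.

    Assumption: the baseline is the mean of this trial's samples from
    1250 ms to 250 ms before stimulus onset.
    """
    start = onset - 1250 * fs // 1000
    stop = onset - 250 * fs // 1000
    baseline = mean_ignoring_rejected(trial[start:stop])
    return [None if v is None else 100.0 * v / baseline for v in trial[onset:]]


def window_means(post_onset, fs=FS, width_ms=500, n_windows=6):
    """Average the post-onset trace within consecutive 500 ms windows."""
    step = width_ms * fs // 1000
    return [mean_ignoring_rejected(post_onset[k * step:(k + 1) * step])
            for k in range(n_windows)]


# Synthetic trial: 1250 ms of fixation followed by a 3 s clip, with doubled
# amplitude 500-1000 ms after onset and one movement-like spike.
onset = 1250
filtered = [(2.0 if 1750 <= i < 2250 else 1.0) * (1 if i % 2 == 0 else -1)
            for i in range(4250)]
filtered[3000] = 50.0
trial = screen_and_rectify(filtered)
print(window_means(percent_of_baseline(trial, onset)))
```

In this synthetic example the spike and its 100 ms margins are discarded, so only the 500–1000 ms window departs from 100% of baseline.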

The Kolmogorov–Smirnov normality test revealed that the data were not normally distributed. Therefore, we applied a non-parametric statistical method (Wilcoxon signed-rank test) for each paired comparison of the emotion types (cry versus laughter, cry versus control and laughter versus control; table 1) at each of the six time-windows. The problem of multiple comparisons (increased probability of type I error) was corrected for by using the Bonferroni correction (significance level: p < 0.05/18).
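As an illustration of this kind of test, the sketch below implements a paired Wilcoxon signed-rank test with a Bonferroni-adjusted threshold. It rests on several assumptions that the text does not confirm: it uses the normal approximation without tie or continuity correction (the reported p-values may instead be exact), and it computes the effect size as r = |Z|/√N, a common convention that appears roughly consistent with the reported values (e.g. Z = 3.2 and r = 0.82 with 15 infants). The function names are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (Z, two-sided p, r) for paired samples x and y.

    Normal approximation; zero differences are dropped and tied
    magnitudes receive their average rank.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the normal curve
    r = abs(z) / math.sqrt(len(x))  # assumed effect-size convention
    return z, p, r


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold after Bonferroni correction."""
    return alpha / n_comparisons


# Hypothetical data: percentage-of-baseline activity for 15 infants in one
# time-window, emotion condition versus control.
emotion = [118, 131, 109, 142, 125, 137, 112, 128, 150, 121, 133, 115, 146, 124, 139]
control = [101, 104, 98, 107, 100, 103, 99, 102, 110, 97, 105, 96, 108, 95, 106]
z, p, r = wilcoxon_signed_rank(emotion, control)
print(round(z, 2), p < bonferroni_alpha(0.05, 18), round(r, 2))
```

With 18 comparisons the per-comparison threshold is 0.05/18 ≈ 0.0028, which is why only the largest effects in table 1 reach significance.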

3. Results

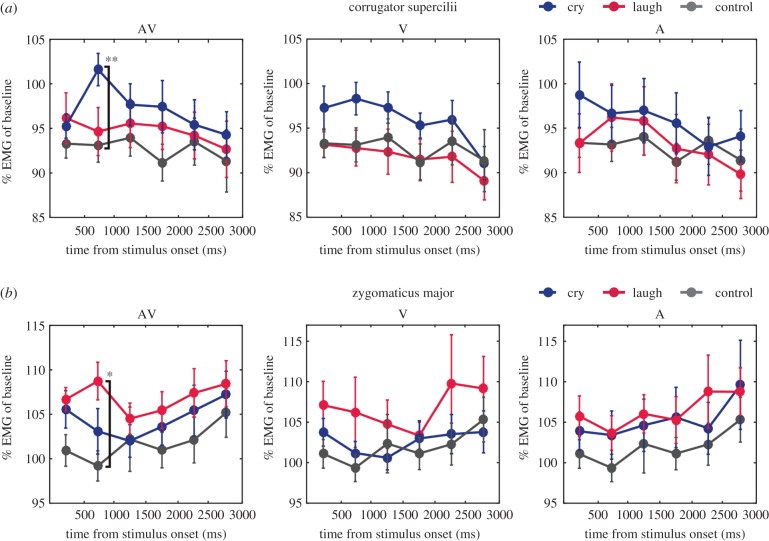

To examine the facial mimicry responses of infants to presented emotions, we analysed whether specific activation of the corrugator supercilii (brow) in response to crying and of the zygomaticus major (cheek) in response to laughter was observed in each of the audiovisual, visual and auditory emotion-modality conditions. Muscle activities in each 500 ms time-window after stimulus onset are shown in figure 2.

Figure 2.

EMG activity of the (a) corrugator supercilii and (b) zygomaticus major for each modality condition. Mean muscle activity (percentage of baseline) for each 500 ms time-window after stimulus onset. The error bar represents the standard error across participants. Asterisks represent statistically significant differences according to the Wilcoxon signed-rank tests with Bonferroni correction: **p < 0.01/18; *p < 0.05/18.

In the AV condition, the corrugator supercilii muscle showed markedly increased activity at 500–1000 ms after stimulus onset in response to crying (left panel in figure 2a). This increase in corrugator supercilii activity was not clearly observed in either the V or the A condition. Wilcoxon signed-rank tests confirmed that responses of the corrugator supercilii to crying were significantly larger than those to control stimuli (Z = 3.2, p = 0.0004, r = 0.82) in the 500–1000 ms time-window. In the V and A conditions, no significant differences in EMG activity across emotions were found in any time-window (see table 1 for statistical results).

Table 1.

Statistical results of the Wilcoxon signed-rank tests in each AV/V/A condition for (a) corrugator supercilii and (b) zygomaticus major. Statistically significant results after Bonferroni correction (p < 0.05/18) are shown in italics.

| modality | time from stimulus onset (ms) | cry versus laugh: Z | cry versus laugh: p | cry versus laugh: r | cry versus neutral: Z | cry versus neutral: p | cry versus neutral: r | laugh versus neutral: Z | laugh versus neutral: p | laugh versus neutral: r |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) corrugator supercilii | | | | | | | | | | |
| AV | 0–500 | 0.3 | 0.8 | 0.1 | 1.2 | 0.3 | 0.3 | 0.9 | 0.4 | 0.2 |
| AV | 500–1000 | 2.4 | 0.01 | 0.6 | *3.2* | *0.0004* | *0.8* | 0.9 | 0.4 | 0.2 |
| AV | 1000–1500 | 0.7 | 0.5 | 0.2 | 1.5 | 0.2 | 0.4 | 0.6 | 0.6 | 0.2 |
| AV | 1500–2000 | 1.1 | 0.3 | 0.3 | 1.8 | 0.07 | 0.5 | 1.9 | 0.06 | 0.5 |
| AV | 2000–2500 | 0.4 | 0.7 | 0.1 | 0.3 | 0.8 | 0.1 | 0.5 | 0.6 | 0.1 |
| AV | 2500–3000 | 0.6 | 0.6 | 0.2 | 0.6 | 0.6 | 0.1 | 0.6 | 0.6 | 0.1 |
| V | 0–500 | 1.0 | 0.3 | 0.3 | 1.4 | 0.2 | 0.4 | 0.1 | 1.0 | 0.01 |
| V | 500–1000 | 2.2 | 0.03 | 0.6 | 1.7 | 0.09 | 0.4 | 0.3 | 0.8 | 0.1 |
| V | 1000–1500 | 1.7 | 0.1 | 0.4 | 1.6 | 0.1 | 0.4 | 0.3 | 0.8 | 0.1 |
| V | 1500–2000 | 2.1 | 0.04 | 0.5 | 2.4 | 0.01 | 0.6 | 0.1 | 1.0 | 0.01 |
| V | 2000–2500 | 1.2 | 0.2 | 0.3 | 0.2 | 0.9 | 0.04 | 0.5 | 0.6 | 0.1 |
| V | 2500–3000 | 0.7 | 0.5 | 0.2 | 0.2 | 0.9 | 0.04 | 0.6 | 0.6 | 0.2 |
| A | 0–500 | 1.7 | 0.09 | 0.4 | 1.3 | 0.2 | 0.3 | 0.4 | 0.7 | 0.1 |
| A | 500–1000 | 0.5 | 0.6 | 0.1 | 1.0 | 0.4 | 0.2 | 0.3 | 0.8 | 0.09 |
| A | 1000–1500 | 0.6 | 0.6 | 0.2 | 0.8 | 0.5 | 0.2 | 0.6 | 0.6 | 0.1 |
| A | 1500–2000 | 1.0 | 0.3 | 0.3 | 1.6 | 0.1 | 0.4 | 0.6 | 0.6 | 0.1 |
| A | 2000–2500 | 0.8 | 0.5 | 0.2 | 0.2 | 0.9 | 0.04 | 0.3 | 0.8 | 0.07 |
| A | 2500–3000 | 1.9 | 0.06 | 0.5 | 1.5 | 0.2 | 0.4 | 1.1 | 0.3 | 0.3 |
| (b) zygomaticus major | | | | | | | | | | |
| AV | 0–500 | 1.1 | 0.3 | 0.3 | 1.0 | 0.3 | 0.3 | 2.3 | 0.02 | 0.6 |
| AV | 500–1000 | 2.4 | 0.01 | 0.6 | 1.0 | 0.4 | 0.2 | *3.0* | *0.001* | *0.8* |
| AV | 1000–1500 | 1.1 | 0.3 | 0.3 | 0.5 | 0.6 | 0.1 | 1.8 | 0.07 | 0.5 |
| AV | 1500–2000 | 0.5 | 0.7 | 0.1 | 0.6 | 0.6 | 0.1 | 2.4 | 0.01 | 0.6 |
| AV | 2000–2500 | 0.7 | 0.5 | 0.2 | 0.9 | 0.4 | 0.2 | 1.3 | 0.2 | 0.3 |
| AV | 2500–3000 | 0.9 | 0.4 | 0.2 | 0.1 | 0.9 | 0.03 | 1.5 | 0.1 | 0.4 |
| V | 0–500 | 0.9 | 0.9 | 0.03 | 0.1 | 0.1 | 0.4 | 0.1 | 0.07 | 0.5 |
| V | 500–1000 | 0.7 | 0.8 | 0.09 | 0.1 | 0.1 | 0.4 | 0.1 | 0.2 | 0.4 |
| V | 1000–1500 | 0.4 | 0.4 | 0.2 | 0.6 | 0.6 | 0.1 | 0.1 | 0.08 | 0.5 |
| V | 1500–2000 | 0.6 | 0.7 | 0.1 | 0.5 | 0.6 | 0.2 | 0.6 | 0.6 | 0.1 |
| V | 2000–2500 | 0.9 | 0.9 | 0.04 | 0.5 | 0.5 | 0.2 | 0.3 | 0.3 | 0.3 |
| V | 2500–3000 | 0.5 | 0.5 | 0.2 | 0.5 | 0.5 | 0.2 | 0.08 | 0.08 | 0.5 |
| A | 0–500 | 1.4 | 0.2 | 0.4 | 0.9 | 0.4 | 0.2 | 1.3 | 0.2 | 0.3 |
| A | 500–1000 | 0.4 | 0.7 | 0.1 | 0.5 | 0.6 | 0.1 | 1.4 | 0.2 | 0.4 |
| A | 1000–1500 | 1.1 | 0.3 | 0.3 | 1.2 | 0.3 | 0.3 | 1.4 | 0.2 | 0.4 |
| A | 1500–2000 | 1.1 | 0.3 | 0.3 | 0.6 | 0.6 | 0.2 | 1.0 | 0.3 | 0.3 |
| A | 2000–2500 | 1.9 | 0.06 | 0.5 | 0.1 | 0.9 | 0.03 | 1.2 | 0.2 | 0.3 |
| A | 2500–3000 | 1.0 | 0.3 | 0.3 | 0.4 | 0.7 | 0.1 | 1.0 | 0.4 | 0.2 |

The zygomaticus major muscle also showed greater activity in response to laughter, compared with crying and control stimuli at 500–1000 ms after stimulus onset in the AV condition (left panel in figure 2b). Wilcoxon signed-rank tests revealed a greater activation of the zygomaticus major in response to laughter than to control stimuli in the 500–1000 ms time-window (Z = 3.0, p = 0.001, r = 0.78). In the V and A conditions, no significant differences in EMG activity across emotions were found in any time-windows (table 1).

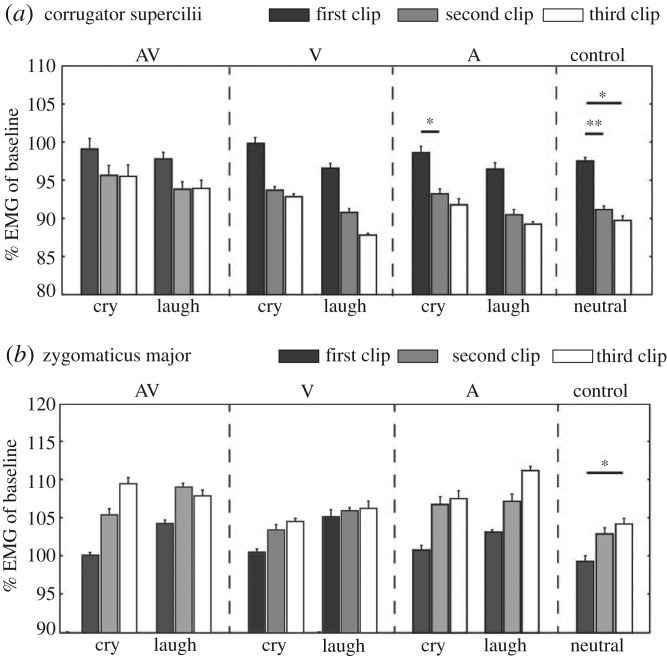

Finally, we observed that activity changes with repeated presentation of stimulus clips were clearly different between the two muscles: the activity of the corrugator supercilii decreased with repeated presentation of the stimulus clip, whereas the activity of the zygomaticus major increased with repetition (figure 3; electronic supplementary material, figure S1). Wilcoxon signed-rank tests with the Bonferroni correction (significance level: p < 0.05/9) revealed a significant decrease in the activity of the corrugator supercilii with repeated presentation in the auditory-cry condition (first clip > second clip: Z = 2.7, p = 0.004, r = 0.70) and the control (neutral) condition (first clip > second clip: Z = 3.1, p = 0.001, r = 0.81; first clip > third clip: Z = 2.9, p = 0.002, r = 0.75). This tendency was not statistically significant for other conditions (see table 2 for details). A significant increase in the activity of the zygomaticus major was found only for the control condition (first clip < third clip: Z = 2.8, p = 0.003, r = 0.72). Because this tendency was observed consistently for all emotions within each muscle, although many of the comparisons did not reach statistical significance, the activity pattern related to habituation is more likely to depend on the characteristics of the muscle than on the emotions to which the muscle reacts.

Figure 3.

Changes in muscle activity with repeated presentation of stimulus clips for (a) corrugator supercilii and (b) zygomaticus major. Mean muscle activity (percentage of baseline) for the first/second/third clip in a trial. The error bar represents the standard error across participants. Asterisks represent statistically significant differences according to the Wilcoxon signed-rank tests with Bonferroni correction: **p < 0.01/9; *p < 0.05/9.

Table 2.

Statistical results of the Wilcoxon signed-rank tests for the effect of repetition in (a) corrugator supercilii and (b) zygomaticus major. Statistically significant results after Bonferroni correction (p < 0.05/9) are shown in italics.

| modality | emotion | first versus second: Z | first versus second: p | first versus second: r | first versus third: Z | first versus third: p | first versus third: r | second versus third: Z | second versus third: p | second versus third: r |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) corrugator supercilii | | | | | | | | | | |
| AV | cry | 2.0 | 0.05 | 0.5 | 1.6 | 0.1 | 0.4 | 0.2 | 0.9 | 0.04 |
| AV | laugh | 2.1 | 0.04 | 0.5 | 1.7 | 0.09 | 0.4 | 0.03 | 1.0 | 0.01 |
| V | cry | 2.2 | 0.02 | 0.6 | 2.4 | 0.01 | 0.6 | 0.4 | 0.7 | 0.1 |
| V | laugh | 2.4 | 0.02 | 0.6 | 2.1 | 0.04 | 0.5 | 1.7 | 0.09 | 0.4 |
| A | cry | *2.7* | *0.004* | *0.7* | 0.8 | 0.5 | 0.2 | 0.4 | 0.8 | 0.09 |
| A | laugh | 2.0 | 0.04 | 0.5 | 2.3 | 0.02 | 0.6 | 0.5 | 0.6 | 0.1 |
| control | neutral | *3.1* | *0.001* | *0.8* | *2.9* | *0.002* | *0.8* | 1.3 | 0.2 | 0.3 |
| (b) zygomaticus major | | | | | | | | | | |
| AV | cry | 1.9 | 0.06 | 0.5 | 2.0 | 0.04 | 0.5 | 1.7 | 0.1 | 0.4 |
| AV | laugh | 0.8 | 0.5 | 0.2 | 0.8 | 0.4 | 0.2 | 0.2 | 0.9 | 0.06 |
| V | cry | 1.4 | 0.2 | 0.4 | 1.2 | 0.2 | 0.3 | 1.3 | 0.2 | 0.3 |
| V | laugh | 0.2 | 0.9 | 0.1 | 0.6 | 0.6 | 0.2 | 0.9 | 0.4 | 0.2 |
| A | cry | 2.0 | 0.05 | 0.5 | 1.8 | 0.08 | 0.5 | 0.8 | 0.5 | 0.2 |
| A | laugh | 1.0 | 0.3 | 0.3 | 1.7 | 0.09 | 0.4 | 1.6 | 0.1 | 0.4 |
| control | neutral | 1.8 | 0.1 | 0.5 | *2.8* | *0.003* | *0.7* | 1.5 | 0.2 | 0.4 |

4. Discussion

This study investigated whether four- to five-month-old infants show facial mimicry in response to audiovisual, visual or auditory emotions in others. Recordings were made of the infants' facial EMG activities over the corrugator supercilii (brow; a muscle involved in crying) and the zygomaticus major (cheek; a muscle involved in laughter) while the infants viewed the stimuli. The results showed increased activation of the corrugator supercilii in response to audiovisual crying, and of the zygomaticus major in response to audiovisual laughing, between 500 and 1000 ms after the stimulus onset. Consistent with the findings of previous studies with adults [4,5] and children [27,35–37], infants' muscle activity in response to audiovisual emotional displays emerged and peaked between 500 and 1000 ms after the stimulus onset. Therefore, the current results indicate that automatic facial mimicry is present as early as five months of age when multimodal emotional information is available.

One might argue that infants merely showed appropriate emotional responses to the stimuli, rather than mimicking the facial movements they saw. In adults, previous studies have suggested that facial mimicry involves both sensory–motor matching processes and emotional processes [38–40]; thus, mimicry could be considered in part a consequence of emotional responses. In this study, we observed the phenomenon of mimicry in infants, but at this point the underlying mechanisms remain unclear (i.e. to what extent those two processes are recruited in the infant's mimicry responses). Nevertheless, it is likely that both processes are interactively linked and play an important role in the development of higher social cognition.

While clear evidence of facial mimicry of audiovisual emotional stimuli was found, four- to five-month-old infants did not produce automatic facial reactions to unimodal visual and auditory emotional signals. Adults show facial EMG reactions to both unimodal facial expressions (visual only) [4–6,41,42] and emotional vocalization (auditory only) [6]. A recent study showed that even three-year-old children show mimicking responses to unimodal facial expressions [27]. In contrast to those previous findings in adults and children, our results revealed that four- to five-month-old infants do not show mimicking responses towards unimodal emotional faces and vocalizations. These findings, together with the clear mimicking responses to audiovisual bimodal emotions, suggest that four- to five-month-old infants have started to construct a system eliciting mimicry, but that at this stage the system has matured only to the point at which motor responses are triggered when multimodal emotional information is provided.

Previous developmental studies of emotion recognition reported that emotion recognition is initially promoted in naturalistic, multimodal conditions and that this ability is later extended to auditory and visual unimodal conditions [28,29]. For example, a study by Flom et al. [29] revealed that four-month-old infants discriminate happy, sad and angry emotions through audiovisual bimodal stimulations, but that sensitivity to auditory stimuli emerges at five months and that to visual stimuli emerges at seven months. This notion is also supported by event-related potential studies [43]. In this study, we found that four- to five-month-old infants show a clear mimicry response only to bimodally presented emotions, but not to unimodal emotional signals, which is consistent with the initial stage of the development of emotion recognition in infants. These findings support the possibility that facial mimicry and emotion recognition develop in tandem in early infancy.

If facial mimicry emerges during postnatal development, reciprocal mimicry in social interactions and Hebbian associative learning may explain how it is generated [44–50]. Infants spontaneously and involuntarily produce several facial expressions during their early development. For example, they begin to smile in social contexts at around two to three months [51]. Facial expressions in infants often induce adults around them to produce similar expressions [52,53]; this, in turn, provides infants with visual input that links their motor output to personal emotional experiences. Co-occurrence of perception, action and emotional experiences forms a network across these channels. This loop shapes the system that enables us to automatically produce facial actions and emotions congruent with those expressed by others, which enables infants to develop the ability to recognize others' emotional expressions. Recent studies have revealed that infants' motor resonance was recruited during observation of others' goal-directed actions, depending on the infant's capacity to produce the same action [54,55]. This suggests that infants' own simultaneous sensory and motor experience gradually shapes the sensory–motor coupling network during early development and facilitates the understanding of others' actions. Similar developmental processes might exist in the domain of facial expressions, in which infants express, observe and understand facial expressions, though facial actions are radically different from other actions in that we usually do not see our own facial actions, and in that they are deeply linked with emotional processing. In future studies, it would be important to clarify the details of the emergence and the development of facial emotional mimicry by focusing on multiple developmental stages and to directly investigate the links between facial mimicry and emotion recognition in early development. Furthermore, clarifying the neural mechanisms underlying mimicry development (e.g. Hebbian learning) will provide important insights into how humans construct basic mechanisms that further facilitate higher social cognition, such as empathy or theory of mind, during early development.

Finally, several limitations of this study should be acknowledged. First, despite our best efforts to collect more data, given the difficulty of testing infants, the analysis was conducted on a relatively small dataset. Partly related to that, owing to the non-normal distribution of the collected data, non-parametric methods with relatively conservative adjustment were employed for the statistical analyses. While this provided strong evidence for the positive result (i.e. presence of mimicry in the AV condition), it may have increased the risk of type II errors for the null results (i.e. absence of mimicry in the V and A conditions). Further investigations with larger samples are needed for a more conclusive answer regarding the modality-dependent response differences. Second, the stimuli used in this study had several limitations. Most notably, they lacked some ecological validity. For the bimodal emotion condition, we used audiovisual asynchronous stimuli that were emotionally congruent. Although previous studies have shown that temporal synchrony between face and voice is not imperative for infants to detect common emotions across modalities at five months of age [28,29], it seems important for four-month-old infants [29]. Thus, the use of audiovisual asynchronous stimuli might have weakened the infants' responses in this study, though we still observed clear mimicking responses in this condition. On the other hand, in the unimodal emotion conditions, we presented an emotional signal in the target modality with neutral cues in the other modality. Although many studies testing infants' sensitivity to auditory emotions present auditory stimuli paired with neutral facial expressions [29,56,57], there remains the possibility that these unnatural stimuli prevented infants from producing natural responses. In addition, this manipulation made it difficult to compare the results fairly with previous findings of mimicry for unimodal stimuli, which have mostly focused on adults, as those studies usually do not present neutral signals in the untargeted modality. Furthermore, we had only female models in our stimuli, which may have prevented us from determining whether the facial mimicry response is a general mechanism regardless of the person who presents the emotions, or whether there are particular effects induced by the characteristics of models, such as sex or degree of familiarity. Moreover, we used different videos as the fixation clip in each trial in order to keep infants engaged. This procedure introduced variance in muscle activity during the fixation clip across trials and reduced the validity of the baseline. In future studies, it will be important to confirm whether the same results can be obtained by using more natural stimuli, such as non-manipulated, completely synchronous audiovisual stimuli and auditory-only or visual-only unimodal stimuli, as well as using more varied models, including males or persons familiar to the infants, and employing better-controlled procedures that are also compatible with an infant-friendly task. Finally and most importantly, because this study did not test infants younger than four months of age, we could not determine the earliest age at which mimicry first emerges. Although one unpublished study has reported absence of mimicry measured by EMG among three-month-old infants [58], testing younger infants with the same paradigm would allow us to assess the developmental course of mimicry in a direct manner. Thus, further investigations are needed to understand the whole developmental trajectory of facial mimicry in infants and its links to various social cognitive abilities.

Overall, this study investigated automatic facial mimicry in infants in response to others' emotional displays (laughter and crying) by measuring facial EMG activity. We found that four- to five-month-old infants showed clear facial EMG reactions to dynamic audiovisual presentations of emotion. However, they did not show similar reactions to unimodal (auditory or visual) emotional stimuli. These results suggest that automatic facial mimicry is present in infants as young as five months when multimodal emotional information is provided, whereas responses to unimodal emotions probably develop later.

Acknowledgements

We thank Kazuko Nakatani for her support in recruiting participants and collecting data. We are grateful to the models who cooperated in creating the stimuli and to all of the families who kindly and generously gave their time to participate in this study.

Ethics

This study received approval from the institutional ethics committee, and we adhered to the Declaration of Helsinki. The parents of all participants gave written informed consent for their child's participation in the study.

Data accessibility

Raw data are available from the Dryad Digital Repository [59].

Authors' contributions

T.I. and T.N. designed the study; T.I. performed experiments; T.I. and T.N. analysed the data and drafted the manuscript. Both authors gave final approval for publication.

Competing interests

We have no competing interests.

Funding

This work was supported by KAKENHI grants no. A15J000670 to T.I. and no. 25700014 to T.N. from the Japan Society for the Promotion of Science.

References

- 1. Dimberg U. 1997. Facial EMG: indicator of rapid emotional reactions. Int. J. Psychophysiol. 25, 52–53. (doi:10.1016/S0167-8760(97)85483-9)

- 2. Tassinary L. 2007. The skeletomotor system: surface electromyography. In Handbook of psychophysiology (eds Cacioppo JT, Tassinary LG, Berntson GG), pp. 267–299. Cambridge, UK: Cambridge University Press.

- 3. Dimberg U, Thunberg M, Grunedal S. 2002. Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn. Emot. 16, 449–471. (doi:10.1080/02699930143000356)

- 4. Dimberg U. 1997. Facial reactions: rapidly evoked emotional responses. J. Psychophysiol. 11, 115–123.

- 5. Dimberg U, Thunberg M. 1998. Rapid facial reactions to emotional facial expressions. Scand. J. Psychol. 39, 39–45. (doi:10.1111/1467-9450.00054)

- 6. Sestito M, et al. 2013. Facial reactions in response to dynamic emotional stimuli in different modalities in patients suffering from schizophrenia: a behavioral and EMG study. Front. Hum. Neurosci. 7, 368. (doi:10.3389/fnhum.2013.00368)

- 7. Magnée MJM, Stekelenburg JJ, Kemner C, de Gelder B. 2007. Similar facial electromyographic responses to faces, voices, and body expressions. Neuroreport 18, 369–372. (doi:10.1097/WNR.0b013e32801776e6)

- 8. Hietanen JK, Surakka V, Linnankoski I. 1998. Facial electromyographic responses to vocal affect expressions. Psychophysiology 35, 530–536. (doi:10.1017/S0048577298970445)

- 9. Verona E, Patrick CJ, Curtin JJ, Bradley MM, Lang PJ. 2004. Psychopathy and physiological response to emotionally evocative sounds. J. Abnorm. Psychol. 113, 99–108. (doi:10.1037/0021-843X.113.1.99)

- 10. Niedenthal PM. 2007. Embodying emotion. Science 316, 1002–1005. (doi:10.1126/science.1136930)

- 11. Dimberg U, Thunberg M, Elmehed K. 2000. Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. (doi:10.1111/1467-9280.00221)

- 12. Mathersul D, McDonald S, Rushby JA. 2013. Automatic facial responses to briefly presented emotional stimuli in autism spectrum disorder. Biol. Psychol. 94, 397–407. (doi:10.1016/j.biopsycho.2013.08.004)

- 13. Tamietto M, Castelli L, Vighetti S, Perozzo P, Geminiani G, Weiskrantz L, de Gelder B. 2009. Unseen facial and bodily expressions trigger fast emotional reactions. Proc. Natl Acad. Sci. USA 106, 17 661–17 666. (doi:10.1073/pnas.0908994106)

- 14. Lepage JF, Théoret H. 2007. The mirror neuron system: grasping others’ actions from birth? Dev. Sci. 10, 513–523. (doi:10.1111/j.1467-7687.2007.00631.x)

- 15. Meltzoff AN, Decety J. 2003. What imitation tells us about social cognition: a rapprochement between developmental psychology and cognitive neuroscience. Phil. Trans. R. Soc. Lond. B 358, 491–500. (doi:10.1098/rstb.2002.1261)

- 16. Simpson EA, Murray L, Paukner A, Ferrari PF. 2014. The mirror neuron system as revealed through neonatal imitation: presence from birth, predictive power and evidence of plasticity. Phil. Trans. R. Soc. B 369, 20130289. (doi:10.1098/rstb.2013.0289)

- 17. Meltzoff AN, Moore MK. 1977. Imitation of facial and manual gestures by human neonates. Science 198, 75–78. (doi:10.1126/science.198.4312.75)

- 18. Anisfeld M. 1991. Neonatal imitation. Dev. Rev. 11, 60–97. (doi:10.1016/0273-2297(91)90003-7)

- 19. Anisfeld M. 1996. Only tongue protrusion modeling is matched by neonates. Dev. Rev. 16, 149–161. (doi:10.1006/drev.1996.0006)

- 20. Ray E, Heyes C. 2011. Imitation in infancy: the wealth of the stimulus. Dev. Sci. 14, 92–105. (doi:10.1111/j.1467-7687.2010.00961.x)

- 21. Jones SS. 2009. The development of imitation in infancy. Phil. Trans. R. Soc. B 364, 2325–2335. (doi:10.1098/rstb.2009.0045)

- 22. Oostenbroek J, Suddendorf T, Nielsen M, Redshaw J, Kennedy-Costantini S, Davis J, Clark S, Slaughter V. 2016. Comprehensive longitudinal study challenges the existence of neonatal imitation in humans. Curr. Biol. 26, 1334–1338. (doi:10.1016/j.cub.2016.03.047)

- 23. Field TM, Woodson R, Greenberg R, Cohen D. 1982. Discrimination and imitation of facial expression by neonates. Science 218, 179–181. (doi:10.1126/science.7123230)

- 24. Termine NT, Izard CE. 1988. Infants’ responses to their mothers’ expressions of joy and sadness. Dev. Psychol. 24, 223–229. (doi:10.1037/0012-1649.24.2.223)

- 25. Haviland JM, Lelwica M. 1987. The induced affect response: 10-week-old infants’ responses to three emotion expressions. Dev. Psychol. 23, 97–104. (doi:10.1037/0012-1649.23.1.97)

- 26. Kahana-Kalman R, Walker-Andrews AS. 2001. The role of person familiarity in young infants’ perception of emotional expressions. Child Dev. 72, 352–369. (doi:10.1111/1467-8624.00283)

- 27. Geangu E, Quadrelli E, Conte S, Croci E, Turati C. 2016. Three-year-olds’ rapid facial electromyographic responses to emotional facial expressions and body postures. J. Exp. Child Psychol. 144, 1–14. (doi:10.1016/j.jecp.2015.11.001)

- 28. Walker-Andrews AS. 1997. Infants’ perception of expressive behaviors: differentiation of multimodal information. Psychol. Bull. 121, 437–456. (doi:10.1037/0033-2909.121.3.437)

- 29. Flom R, Bahrick LE. 2007. The development of infant discrimination of affect in multimodal and unimodal stimulation: the role of intersensory redundancy. Dev. Psychol. 43, 238–252. (doi:10.1037/0012-1649.43.1.238)

- 30. Grant KW, Seitz P-F. 2000. The use of visible speech cues for improving auditory detection of spoken sentences. J. Acoust. Soc. Am. 108, 1197–1208. (doi:10.1121/1.1288668)

- 31. Foxton JM, Riviere L-D, Barone P. 2010. Cross-modal facilitation in speech prosody. Cognition 115, 71–78. (doi:10.1016/j.cognition.2009.11.009)

- 32. Eramudugolla R, Henderson R, Mattingley JB. 2011. Effects of audio-visual integration on the detection of masked speech and non-speech sounds. Brain Cogn. 75, 60–66. (doi:10.1016/j.bandc.2010.09.005)

- 33. Paris T, Kim J, Davis C. 2013. Visual speech form influences the speed of auditory speech processing. Brain Lang. 126, 350–356. (doi:10.1016/j.bandl.2013.06.008)

- 34. Fridlund AJ, Cacioppo JT. 1986. Guidelines for human electromyographic research. Psychophysiology 23, 567–589. (doi:10.1111/j.1469-8986.1986.tb00676.x)

- 35. Beall PM, Moody EJ, McIntosh DN, Hepburn SL, Reed CL. 2008. Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. J. Exp. Child Psychol. 101, 206–223. (doi:10.1016/j.jecp.2008.04.004)

- 36. Oberman LM, Winkielman P, Ramachandran VS. 2009. Slow echo: facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev. Sci. 12, 510–520. (doi:10.1111/j.1467-7687.2008.00796.x)

- 37. Rozga A, King TZ, Vuduc RW, Robins DL. 2013. Undifferentiated facial electromyography responses to dynamic, audio-visual emotion displays in individuals with autism spectrum disorders. Dev. Sci. 16, 499–514. (doi:10.1111/desc.12062)

- 38. Moody EJ, McIntosh DN, Mann LJ, Weisser KR. 2007. More than mere mimicry? The influence of emotion on rapid facial reactions to faces. Emotion 7, 447–457. (doi:10.1037/1528-3542.7.2.447)

- 39. Likowski KU, Mühlberger A, Gerdes AB, Wieser MJ, Pauli P, Weyers P. 2012. Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Front. Hum. Neurosci. 6, 214. (doi:10.3389/fnhum.2012.00214)

- 40. Moody EJ, McIntosh DN. 2011. Mimicry of dynamic emotional and motor-only stimuli. Soc. Psychol. Pers. Sci. 2, 679–686. (doi:10.1177/1948550611406741)

- 41. Sato W, Fujimura T, Suzuki N. 2008. Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. (doi:10.1016/j.ijpsycho.2008.06.001)

- 42. Rymarczyk K, Biele C, Grabowska A, Majczynski H. 2011. EMG activity in response to static and dynamic facial expressions. Int. J. Psychophysiol. 79, 330–333. (doi:10.1016/j.ijpsycho.2010.11.001)

- 43. Grossmann T. 2010. The development of emotion perception in face and voice during infancy. Restor. Neurol. Neurosci. 28, 219–236. (doi:10.3233/RNN-2010-0499)

- 44. Brass M, Heyes C. 2005. Imitation: is cognitive neuroscience solving the correspondence problem? Trends Cogn. Sci. 9, 489–495. (doi:10.1016/j.tics.2005.08.007)

- 45. Keysers C, Gazzola V. 2006. Towards a unifying neural theory of social cognition. Prog. Brain Res. 156, 379–401. (doi:10.1016/S0079-6123(06)56021-2)

- 46. Keysers C, Perrett DI. 2004. Demystifying social cognition: a Hebbian perspective. Trends Cogn. Sci. 8, 501–507. (doi:10.1016/j.tics.2004.09.005)

- 47. Cook R, Bird G, Catmur C, Press C, Heyes C. 2014. Mirror neurons: from origin to function. Behav. Brain Sci. 37, 177–192. (doi:10.1017/S0140525X13000903)

- 48. Del Giudice M, Manera V, Keysers C. 2009. Programmed to learn? The ontogeny of mirror neurons. Dev. Sci. 12, 350–363. (doi:10.1111/j.1467-7687.2008.00783.x)

- 49. Keysers C, Gazzola V. 2014. Hebbian learning and predictive mirror neurons for actions, sensations and emotions. Phil. Trans. R. Soc. B 369, 20130175. (doi:10.1098/rstb.2013.0175)

- 50. Iacoboni M. 2009. Imitation, empathy, and mirror neurons. Annu. Rev. Psychol. 60, 653–670. (doi:10.1146/annurev.psych.60.110707.163604)

- 51. Sroufe LA, Waters E. 1976. The ontogenesis of smiling and laughter: a perspective on the organization of development in infancy. Psychol. Rev. 83, 173–189. (doi:10.1037/0033-295X.83.3.173)

- 52. Malatesta CZ, Haviland JM. 1982. Learning display rules: the socialization of emotion expression in infancy. Child Dev. 53, 991–1003. (doi:10.1111/j.1467-8624.1982.tb01363.x)

- 53. Nadel J. 2002. Imitation and imitation recognition: functional use in preverbal infants and nonverbal children with autism. In The imitative mind: development, evolution and brain bases (eds AN Meltzoff, W Prinz), pp. 42–62. Cambridge, UK: Cambridge University Press.

- 54. Natale E, Senna I, Bolognini N, Quadrelli E, Addabbo M, Macchi Cassia V, Turati C. 2014. Predicting others’ intention involves motor resonance: EMG evidence from 6- and 9-month-old infants. Dev. Cogn. Neurosci. 7, 23–29. (doi:10.1016/j.dcn.2013.10.004)

- 55. Turati C, Natale E, Bolognini N, Senna I, Picozzi M, Longhi E, Cassia VM. 2013. The early development of human mirror mechanisms: evidence from electromyographic recordings at 3 and 6 months. Dev. Sci. 16, 793–800. (doi:10.1111/desc.12066)

- 56. Walker-Andrews AS, Lennon E. 1991. Infants’ discrimination of vocal expressions: contributions of auditory and visual information. Infant Behav. Dev. 14, 131–142. (doi:10.1016/0163-6383(91)90001-9)

- 57. Horowitz FD. 1975. Visual attention, auditory stimulation, and language discrimination in young infants. Monogr. Soc. Res. Child Dev. 39, 105–115.

- 58. Datyner AC, Richmond JL, Henry JD. 2013. The development of empathy in infancy: insights from the rapid facial mimicry response. In Front. Hum. Neurosci. Conf. Abstract: ACNS-2013 Australasian Cognitive Neuroscience Society Conference.

- 59. Isomura T, Nakano T. 2016. Data from: Automatic facial mimicry in response to dynamic emotional stimuli in five-month-old infants. Dryad Digital Repository.
