Abstract

Homeostatic processes that provide negative feedback to regulate neuronal firing rates are essential for normal brain function. Indeed, multiple parameters of individual neurons, including the scale of afferent synapse strengths and the densities of specific ion channels, have been observed to change on homeostatic time scales to oppose the effects of chronic changes in synaptic input. This raises the question of whether these processes are controlled by a single slow feedback variable or multiple slow variables. A single homeostatic process providing negative feedback to a neuron’s firing rate naturally maintains a stable homeostatic equilibrium with a characteristic mean firing rate; but the conditions under which multiple slow feedbacks produce a stable homeostatic equilibrium have not yet been explored. Here we study a highly general model of homeostatic firing rate control in which two slow variables provide negative feedback to drive a firing rate toward two different target rates. Using dynamical systems techniques, we show that such a control system can be used to stably maintain a neuron’s characteristic firing rate mean and variance in the face of perturbations, and we derive conditions under which this happens. We also derive expressions that clarify the relationship between the homeostatic firing rate targets and the resulting stable firing rate mean and variance. We provide specific examples of neuronal systems that can be effectively regulated by dual homeostasis. One of these examples is a recurrent excitatory network, which a dual feedback system can robustly tune to serve as an integrator.

Keywords: Homeostasis, Dynamical systems, Stability, Integrator, Synaptic scaling, Averaging

Introduction

Homeostasis, the collection of slow feedback processes by which a living organism counteracts the effects of external perturbations to maintain a viable state, is a topic of great interest to biologists [1, 2]. The brain in particular requires a precise balance of numerous state variables to remain properly operational, so it is no surprise that multiple homeostatic processes have been identified in neural tissues [3]. Some of these processes appear to act at the level of individual neurons to maintain a desirable rate of spiking. When chronic changes in input statistics dramatically lower a neuron’s firing rate, multiple slow changes take place that each act to increase the firing rate again, including the collective scaling of afferent synapses [4, 5] and the adjustment of intrinsic neuronal excitability through adding and removing ion channels [5–7]. These changes suggest the existence of multiple independent slowly-adapting variables that each integrate firing rate over time and provide negative feedback.

Here we undertake an analytical investigation of the dynamics of homeostasis via two independent slow mechanisms (“dual homeostasis”). Our focus on dual homeostasis is partially motivated by the rough breakdown of firing rate homeostatic mechanisms into two categories, synaptic and intrinsic. In our analytical work, we maintain sufficient generality to describe a broad class of firing rate control mechanisms, but we illustrate our results using examples in which homeostasis is governed by one synaptic mechanism acting multiplicatively on neuronal inputs and one intrinsic mechanism acting additively to increase or decrease neuronal excitability. We limit our scope to dual homeostasis to allow us to derive strong analytical results.

It is not immediately clear that dual homeostasis should even be possible. When two variables independently provide negative feedback to drive the same signal toward different targets, one possible outcome is “wind-up” [2], where each variable perpetually ramps up or down in competition with the other to drive the signal toward its own target.

In a recent publication [8], we perform numerical simulations of dual homeostasis (intrinsic and synaptic) in biophysically detailed neurons. We show empirically that this dual homeostasis is stable across a broad swath of parameter space and that it serves to restore not only a characteristic mean firing rate but also a characteristic firing rate variance after perturbations.

Here, we demonstrate analytically that stable homeostasis occurs in a broad family of dual control systems. Further, we find that dual homeostatic control naturally preserves both the mean and the variance of the firing rate, a task impossible for a homeostatic system with a single slow feedback mechanism. We identify broad conditions under which a dually homeostatic neuron possesses a stable homeostatic fixed point, and we derive estimates of the characteristic firing rate mean and variance maintained by homeostasis in terms of homeostatic parameters. We use rate-based neurons and Poisson-spiking neurons for illustration, but our main result is sufficiently general to apply to any model neuron.

One specific application in which such a control system could play an essential role is in tuning a recurrent excitatory network to serve as an integrator. This task is generally considered one that requires biologically implausible precise calibration of multiple parameters [9, 10] and is not well understood (though various solutions to the fine tuning problem have been proposed in [11–14]). In [8], we show empirically that a heterogeneous network of dually homeostatic neurons can tune itself to serve as an integrator. Here, we demonstrate analytically in a simple model of a recurrent excitatory network that integrating behavior can be stabilized by single-cell dual homeostasis and that this stability is robust to the homeostatic parameters of the neurons in the network.

In Sect. 2, we introduce our generalized model of dual homeostasis with the simple but informative example of synaptic/intrinsic firing rate control, and we discuss the reasons that stable homeostatic control is possible for this system. In Sect. 3, we pose a highly general mathematical model of dual homeostatic control. We derive an estimate of the firing rate mean and variance that characterize the fixed points in a given dual homeostatic control system and conditions under which these fixed points are stable. In Sect. 4, we give further specific examples that are encompassed by our general result. In Sect. 5, we use our results to explore dual homeostasis as a strategy for integrator tuning in recurrent excitatory networks. In Sect. 6, we summarize and discuss our results.

Preliminary Examples

Throughout this manuscript, we consider a homeostatic neuronal firing rate control system with slow homeostatic control variables that serve as parameters for neuronal dynamics. Each of these variables represents a biological mechanism that provides slow negative feedback in response to a more rapidly varying neuronal firing rate r. In this section, we focus on an example in which the two control variables are (1) a factor g describing the collective scaling of the strengths of afferent synapses and (2) the neuron’s “excitability” x, which represents a horizontal shift in the mapping from input current to firing rate. An increase in x can be understood as an increase in excitability (or a decrease in firing threshold) and might be implemented in vivo by a change in ion channel density as suggested in [7]. The choice of x and g as homeostatic control variables is motivated by the observation that synaptic scaling and homeostasis of intrinsic excitability operate concurrently in mammalian cortex [5]. We write this dual control system in the form

$$
\tau_x \dot{x} = f_x(r_x) - f_x(r), \qquad \tau_g \dot{g} = g\,\bigl[f_g(r_g) - f_g(r)\bigr], \tag{1}
$$

where r is a neuronal firing rate, $r_x$ and $r_g$ are the “target firing rates” of the two homeostatic mechanisms, $f_x$ and $f_g$ are increasing functions describing the effect of deviations from the target rates on the two control variables, and $\tau_x$ and $\tau_g$ are time constants assumed to be long on the time scale of variation of r. An extra factor of g multiplies the second ODE because g acts as a multiplier and must remain nonnegative. As a result, g increases/decreases exponentially (or increases/decreases linearly) if the firing rate r is below/above the target rate $r_g$. This extra g multiplier is not essential for any of the results we derive here.

In general, r may represent the firing rate of any type of model neuron, or a correlate of firing rate such as calcium concentration. Likewise, the target rates $r_x$ and $r_g$ may represent firing rates or calcium levels at which the corresponding homeostatic mechanisms equilibrate. We assume that r changes quickly relative to $\tau_x$ and $\tau_g$ and that r is “distribution-ergodic.” This term is defined precisely in the next section; intuitively, it means that over a sufficiently long time, r behaves like a series of independent samples from a stationary distribution. This allows us to approximate the right-hand sides in (1) by averages over the distributions of $f_x(r)$ and $f_g(r)$. We will use $\langle\,\cdot\,\rangle$ to represent the mean of a stationary distribution. Since the dynamics of the firing rate depend on the control variables x and g, the distributions we consider here also depend on these variables. Averaging (1) over r, we can write

$$
\tau_x \dot{x} = f_x(r_x) - \langle f_x(r)\rangle, \qquad \tau_g \dot{g} = g\,\bigl[f_g(r_g) - \langle f_g(r)\rangle\bigr]. \tag{2}
$$

In this section, we assume that the neuron is a standard linear firing rate unit with time constant $\tau_r$ receiving synaptic input $I(t)$. This input is scaled by synaptic strength g, and the neuron’s response is additively shifted by the excitability x. Thus, the firing rate is described by the equation

$$
\tau_r \dot{r} = -r + x + g\,I(t). \tag{3}
$$

In a later section, we will consider a similar system with spiking dynamics.

Constant Input

First, let us assume that $I(t)$ is equal to a constant ϕ. In this case, r assumes its asymptotic value $x + g\phi$ on time scale $\tau_r$ and closely tracks this value as g and x change slowly. Thus, we have $\langle f_x(r)\rangle = f_x(x + g\phi)$ and $\langle f_g(r)\rangle = f_g(x + g\phi)$. To find the x-nullcline (the set of points where $\dot{x} = 0$), we set $\dot{x} = 0$ in (2). Since $f_x$ is increasing, it is invertible over its range, so we find that the x-nullcline in the phase plane consists of the set $\{(x, g) : x + g\phi = r_x\}$. Similarly, the g-nullcline is the line $\{(x, g) : x + g\phi = r_g\}$, plus the set $\{g = 0\}$. Fixed points of this ODE are precisely the intersections of the nullclines. We are interested primarily in fixed points with $g > 0$, so we ignore the set $\{g = 0\}$. Representative vector fields, nullclines, and trajectories for (2) under constant input are illustrated in Fig. 1.

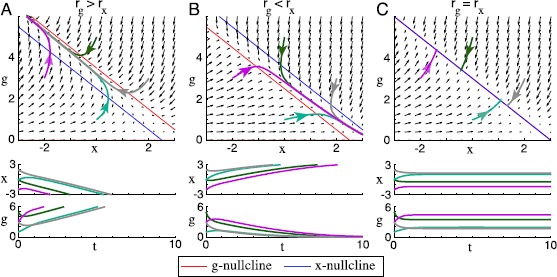

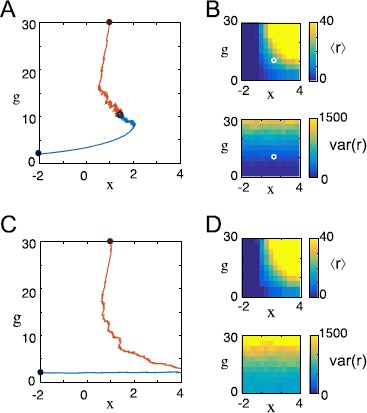

Fig. 1.

In neurons with constant firing rate, dual homeostasis fails to converge on a set point. A firing rate unit receives constant input $I(t) = \phi$. It is controlled by the homeostatic variables x (intrinsic homeostasis) and g (synaptic homeostasis) as described by (2) with $f_x(r) = r$ and $f_g(r) = r^2$. Other parameters are listed in Appendix 2. Vector fields of the control system are illustrated with arrows in the phase plane. The x- and g-nullclines are plotted with sample trajectories in the phase plane (above), and these sample trajectories are plotted over time (below). (A) If the target firing rate of the excitability-modifying homeostatic mechanism is lower than the target firing rate of the synaptic scaling mechanism ($r_x < r_g$), then g increases and x decreases without bound. This phenomenon is called “controller wind-up.” (B) If $r_x > r_g$, then $g \to 0$, i.e., all afferent synapses are eliminated. (C) If $r_x = r_g$, then the nullclines lie on top of each other, creating a one-parameter set of fixed points that collectively attract all control system trajectories.

From the nullcline equations, it is clear that if $r_x \neq r_g$, there are no fixed points with $g > 0$. If $r_x < r_g$, g increases and x decreases without bound (Fig. 1A); if $r_x > r_g$, then g goes to zero (Fig. 1B). Intuitively, this is because the two mechanisms are playing tug-of-war over the firing rate, each ramping up (or down) in a fruitless effort to bring the firing rate to its own target. In control theory, this phenomenon is called “wind-up.”
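The tug-of-war described above can be reproduced with a few lines of numerical integration. The following is a minimal sketch, not the simulation code behind Fig. 1: it assumes the averaged control system with $f_x(r) = r$ and $f_g(r) = r^2$, a constant input ϕ so that the rate sits at $x + g\phi$, and hypothetical parameter values chosen only for illustration.

```python
import numpy as np

def simulate_constant_input(r_x, r_g, phi, tau_x=10.0, tau_g=20.0,
                            x0=0.0, g0=1.0, dt=0.01, n_steps=20_000):
    """Forward-Euler integration of the averaged control system under
    constant input, assuming f_x(r) = r and f_g(r) = r**2 (illustrative)."""
    x, g = x0, g0
    xs, gs = np.empty(n_steps), np.empty(n_steps)
    for i in range(n_steps):
        r = x + g * phi                     # rate tracks its asymptotic value
        dx = (r_x - r) / tau_x              # intrinsic mechanism
        dg = g * (r_g**2 - r**2) / tau_g    # synaptic mechanism (extra factor g)
        x, g = x + dt * dx, g + dt * dg
        xs[i], gs[i] = x, g
    return xs, gs

# Hypothetical targets with r_x < r_g: the rate settles between the two
# targets, so x keeps falling while g keeps rising (wind-up).
xs, gs = simulate_constant_input(r_x=3.0, r_g=5.0, phi=1.0)
half = len(gs) // 2
print("g at mid-run and end:", gs[half], gs[-1])
print("x at mid-run and end:", xs[half], xs[-1])
```

In this sketch neither variable settles: once the rate is pinned between the two targets, each mechanism keeps pushing in its own direction.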

In the (degenerate) case $r_x = r_g$, the nullclines overlap perfectly, forming a line of fixed points (Fig. 1C). This situation is undesirable because it leaves an extra degree of freedom: homeostasis has no unique fixed point, so the neuron could reach a set point with any synaptic strength, including arbitrarily strong or weak synapses, depending on initial conditions. Further, this state is destroyed by any perturbation to the target rates, so it could not be easily sustained in a biological system.

These results might lead us to believe that a control system consisting of two homeostatic control mechanisms cannot drive a neuron’s firing rate toward a single stable set-point. However, we shall find that this is only because we have posed the problem in the context of a perfectly constant input $I(t)$. When $I(t)$ varies, the resulting picture is very different.

Varying Input

We now consider an input $I(t)$ that is not constant at ϕ, but instead fluctuates randomly around ϕ. One simple example is $I(t) = \phi + \sigma\xi(t)$, where $\xi(t)$ is white noise with unit variance. In this case, r is an Ornstein-Uhlenbeck (OU) process and is described by the stochastic differential equation

$$
\tau_r\,dr = (x + g\phi - r)\,dt + g\sigma\,dW_t, \tag{4}
$$

where $W_t$ is a standard Wiener process. An OU process approaches a stationary Gaussian distribution from any initial condition after time $t \gg \tau_r$. In this case, this distribution has mean $x + g\phi$ and variance $g^2\sigma^2/(2\tau_r)$.
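The stationary statistics quoted above can be checked numerically. The sketch below is illustrative only: it assumes the rate obeys $\tau_r\,dr = (x + g\phi - r)\,dt + g\sigma\,dW_t$, integrates it with the Euler-Maruyama scheme, and compares the sample mean and variance with $x + g\phi$ and $g^2\sigma^2/(2\tau_r)$. The function name and all parameter values are hypothetical.

```python
import numpy as np

def simulate_ou_rate(x, g, phi, sigma, tau_r, dt=1e-3, n_steps=400_000, seed=0):
    """Euler-Maruyama integration of the assumed rate equation
    tau_r dr = (x + g*phi - r) dt + g*sigma dW."""
    rng = np.random.default_rng(seed)
    dW = rng.standard_normal(n_steps) * np.sqrt(dt)   # Wiener increments
    r = np.empty(n_steps)
    r_now = x + g * phi                                # start at the stationary mean
    for i in range(n_steps):
        drift = (x + g * phi - r_now) * dt
        r_now += (drift + g * sigma * dW[i]) / tau_r
        r[i] = r_now
    return r

# Hypothetical parameter values, chosen only for illustration.
x, g, phi, sigma, tau_r = 1.0, 2.0, 1.5, 0.5, 0.1
r = simulate_ou_rate(x, g, phi, sigma, tau_r)
predicted_mean = x + g * phi
predicted_var = g**2 * sigma**2 / (2 * tau_r)
print("mean: simulated", r.mean(), "predicted", predicted_mean)
print("variance: simulated", r.var(), "predicted", predicted_var)
```

Because the estimate comes from a finite, discretized sample path, the simulated values should be close to, but not exactly equal to, the predictions.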

Why does the introduction of variations in $I(t)$ change the situation at all? This is closely connected with the basic insight that the mean value of a function f over some distribution of arguments r, written $\langle f(r)\rangle$, may not be the same as the function f applied to the mean of the arguments, written $f(\langle r\rangle)$. The mean value of $f(r)$ is affected by the spread of the distribution of r and by the curvature of f. Only linear (affine) functions f have the property that $\langle f(r)\rangle = f(\langle r\rangle)$ for all distributions of r.
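A tiny numerical example makes this point concrete. The sketch below uses three hypothetical firing-rate samples and compares the mean of f(r) with f of the mean, first for a quadratic f and then for an affine one; the helper name is introduced here for illustration only.

```python
import numpy as np

def mean_of_f_and_f_of_mean(samples, f):
    """Return (<f(r)>, f(<r>)) for a finite set of samples."""
    samples = np.asarray(samples, dtype=float)
    return f(samples).mean(), f(samples.mean())

rates = np.array([2.0, 4.0, 6.0])   # hypothetical samples with mean 4

# Quadratic f: the two quantities differ by the variance of the samples.
avg_sq, sq_avg = mean_of_f_and_f_of_mean(rates, lambda r: r**2)
gap = avg_sq - sq_avg

# Affine f: the two quantities coincide for any distribution.
avg_lin, lin_avg = mean_of_f_and_f_of_mean(rates, lambda r: 3.0 * r + 1.0)

print("quadratic gap:", gap, "sample variance:", rates.var())
print("affine:", avg_lin, lin_avg)
```

For the quadratic case the gap is exactly the variance, which is why a quadratic control function lets a homeostatic mechanism sense the spread of the firing rate and not only its mean.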

As a consequence, “satisfying” both homeostatic mechanisms may not require the condition $r_x = r_g$. The value of ẋ averaged over time may be zero even when the average rate $\langle r\rangle$ is not exactly $r_x$, and the average value of ġ may be zero when $\langle r\rangle$ is not exactly $r_g$. The conditions required to satisfy each mechanism depend on the entire distribution of r, including the mean $\langle r\rangle$ and the variance $\langle r^2\rangle - \langle r\rangle^2$. As long as the control variables can adjust both of these quantities, the system has two degrees of freedom and therefore may satisfy the two fixed-point equations nondegenerately, that is, at a single isolated fixed point.

Example 1

Rate model with linear and quadratic feedback

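In order to see the influence of input variation on the control system more clearly, we explore the case in which $f_x(r) = r$ and $f_g(r) = r^2$. Substituting into equation (2) to describe the averaged dynamics of x and g, we have

$$
\tau_x \dot{x} = r_x - \langle r\rangle, \qquad \tau_g \dot{g} = g\,\bigl(r_g^2 - \langle r^2\rangle\bigr).
$$

For the OU process r, we have $\langle r\rangle = x + g\phi$ and $\langle r^2\rangle = (x + g\phi)^2 + g^2\sigma^2/(2\tau_r)$, so

$$
\tau_x \dot{x} = r_x - (x + g\phi), \qquad \tau_g \dot{g} = g\left(r_g^2 - (x + g\phi)^2 - \frac{g^2\sigma^2}{2\tau_r}\right). \tag{5}
$$

A vector field, nullclines, and trajectories for this system are plotted for $\sigma = 0$ (constant input) in Fig. 1. The same system with $\sigma > 0$ (varying input) is represented in Fig. 2.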

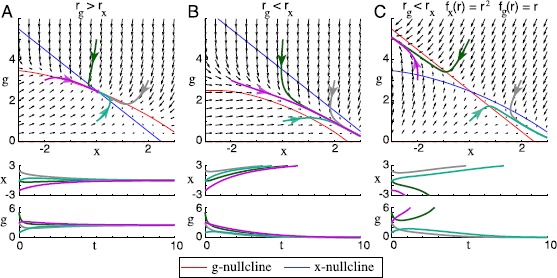

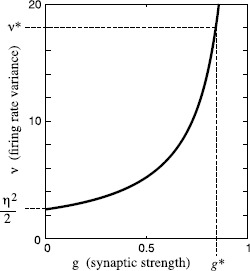

Fig. 2.

In neurons with fluctuating firing rate, dual homeostasis is effective under certain conditions. A firing rate unit receives a variable synaptic input $I(t) = \phi + \sigma\xi(t)$. The parameters are listed in Appendix 2. Vector fields of equation (5) in the phase plane are illustrated with arrows. The x- and g-nullclines are plotted with sample trajectories in the phase plane (above), and these sample trajectories are plotted over time (below). (A) If the target firing rate of the intrinsic homeostatic mechanism is lower than the target firing rate of the synaptic scaling mechanism ($r_x < r_g$), then the nullclines cross, and all trajectories are attracted to the fixed point at their intersection. (B) If $r_x > r_g$, then the nullclines do not cross, and g goes to zero. (C) If $r_x > r_g$ and $f_x$ is exchanged with $f_g$ (so that $f_x(r) = r^2$ and $f_g(r) = r$, with the time constants exchanged as well), then the nullclines do cross, but the resulting fixed point is unstable.

The fixed points of this ODE can be studied using basic dynamical systems methods. Setting $\dot{x} = \dot{g} = 0$, we find that this equation has fixed points at $(x, g) = (r_x, 0)$ and $(x, g) = (r_x - \phi g_\pm,\ g_\pm)$, where $g_\pm = \pm\sqrt{2\tau_r (r_g^2 - r_x^2)}/\sigma$. We are not interested in the first fixed point because it has $g = 0$. Of the other two fixed points, we are interested only in the one with nonnegative g, which we denote $(x^*, g^*)$. This fixed point exists with $g^* > 0$ if and only if the term under the square root is positive, that is (for nonnegative target rates), if and only if $r_g > r_x$. It is asymptotically stable (i.e., attracts trajectories from all initial conditions in its neighborhood) if the Jacobian of the ODE at the fixed point has two eigenvalues with negative real part. If one or more eigenvalues have positive real part, then it is unstable. At this fixed point, the Jacobian is

$$
J = \begin{pmatrix} -\dfrac{1}{\tau_x} & -\dfrac{\phi}{\tau_x} \\[2ex] -\dfrac{2 g^* r_x}{\tau_g} & -\dfrac{g^*}{\tau_g}\left(2 r_x \phi + \dfrac{g^*\sigma^2}{\tau_r}\right) \end{pmatrix},
$$

and it is easy to check that both eigenvalues have negative real part when $r_x$ and ϕ are nonnegative: the trace is negative, and the determinant, $(g^*)^2\sigma^2/(\tau_r\tau_x\tau_g)$, is positive. We conclude that this (averaged) system possesses a stable homeostatic set-point if and only if $r_g > r_x$. At such a fixed point, the firing rate has mean $\mu = r_x$ and variance $\nu = r_g^2 - r_x^2$. Conversely, given any firing rate mean μ and variance ν, we can choose targets to stabilize the neuron with this firing rate mean and variance by setting $r_x = \mu$ and $r_g = \sqrt{\mu^2 + \nu}$. Note that these expressions do not depend on ϕ or σ, the parameters of the input $I(t)$. Thus, if ϕ or σ changes, then the homeostatic control system will return the neuron to the same characteristic mean and variance.
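The fixed-point and eigenvalue calculation can also be carried out numerically. The sketch below is a hedged illustration rather than the authors' code: it assumes the averaged system has mean $x + g\phi$ and variance $g^2\sigma^2/(2\tau_r)$ with linear control of x and quadratic control of g, and it uses hypothetical parameter values and function names.

```python
import numpy as np

def averaged_rhs(state, r_x, r_g, phi, sigma, tau_r, tau_x, tau_g):
    """Right-hand side of the assumed averaged system for (x, g)."""
    x, g = state
    mean = x + g * phi
    var = g**2 * sigma**2 / (2.0 * tau_r)
    dx = (r_x - mean) / tau_x
    dg = g * (r_g**2 - mean**2 - var) / tau_g
    return np.array([dx, dg])

def positive_fixed_point(r_x, r_g, phi, sigma, tau_r, **_unused):
    """Fixed point with g > 0; requires r_g > r_x >= 0."""
    g_star = np.sqrt(2.0 * tau_r * (r_g**2 - r_x**2)) / sigma
    return np.array([r_x - g_star * phi, g_star])

def numerical_jacobian(state, params, h=1e-6):
    """Central-difference Jacobian of averaged_rhs at `state`."""
    J = np.empty((2, 2))
    for j in range(2):
        step = np.zeros(2)
        step[j] = h
        J[:, j] = (averaged_rhs(state + step, **params)
                   - averaged_rhs(state - step, **params)) / (2.0 * h)
    return J

# Hypothetical parameter values, chosen only for illustration.
params = dict(r_x=3.0, r_g=5.0, phi=1.0, sigma=1.0,
              tau_r=0.5, tau_x=10.0, tau_g=20.0)
fp = positive_fixed_point(**params)
J = numerical_jacobian(fp, params)
eigvals = np.linalg.eigvals(J)
print("fixed point (x*, g*):", fp)
print("eigenvalues:", eigvals)
```

With these values the fixed point has firing rate mean 3 and variance 16, matching $r_x$ and $r_g^2 - r_x^2$, and both eigenvalues have negative real part.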

In Fig. 2A, $r_x < r_g$. In this case, a stable set point exists, and it is evident that it is reached by the following process:

- If the mean firing rate is below $r_x$, then g and x increase, both acting to increase the mean firing rate until it is in the neighborhood of $r_x$. If the mean firing rate is above the targets, then g and x both decrease to lower the mean firing rate to near $r_g$.
- If g is now small, then the firing rate variance is small, and the second moment $\langle r^2\rangle$ is close to $\langle r\rangle^2$. Once $\langle r\rangle$ slightly exceeds $r_x$, the averaged control system has $\dot{x} < 0$, but $\langle r^2\rangle$ is still less than $r_g^2$, so $\dot{g} > 0$. Alternatively, if g is large, then the variance is large, so the second moment $\langle r^2\rangle$ exceeds $r_g^2$, whereas $\langle r\rangle$ is still below $r_x$; ġ is negative, and ẋ is positive. g slowly seeks out the intermediate point between these extremes, where the variance of r makes up the difference between $r_x^2$ and $r_g^2$. As it does so, x changes in the opposite direction to keep the mean firing rate near $r_x$.

In Fig. 2B, it is evident that when $r_x > r_g$, no such equilibrium exists. In Fig. 2C, we show that if we exchange $f_x$ with $f_g$ and $\tau_x$ with $\tau_g$ such that g is linearly controlled and x is quadratically controlled, then an equilibrium exists for $r_x > r_g$, but it is not stable. These observations suggest that certain general conditions must be met for there to exist a stable equilibrium in a dually homeostatic system. We explore these conditions in the next section.

Note that if only x were dynamic but not g, the firing rate variance would be fixed at $g^2\sigma^2/(2\tau_r)$, and therefore the variance at equilibrium would be sensitive to changes in σ, the standard deviation of the input current. If only g were dynamic but not x, the firing rate mean and variance could both change over the course of g-homeostasis, but the two would be inseparably linked: using the expressions above for firing rate mean μ and variance ν, we can see that no matter how g changed we would always have $\nu = \sigma^2(\mu - x)^2/(2\tau_r\phi^2)$. Thus, the neuron could only maintain a characteristic firing rate mean and variance if they satisfied this constraint.

Analysis

Now we shall consider the general case in which two control variables a and b evolve according to arbitrary control functions $f_a$ and $f_b$ and control the distribution of a neuron’s firing rate r. We make the dependence of this distribution on a and b explicit by writing the distribution of r as $P(r; a, b)$. We pose two questions about this model. First, what fixed points exist for a given control system, and what characterizes these fixed points? Second, under what circumstances are these fixed points stable?

In this section, we answer these questions under the simplifying assumption that $f_a''$ and $f_b''$ are constant on any domain where $P(r; a, b) > 0$. In Appendices 1 and 2, we show that our results persist qualitatively for nonconstant $f_a''$ and $f_b''$.

In Theorem 1, we write expressions for the firing rate mean and variance that characterize any fixed point $(a^*, b^*)$. From this result we find that the difference between the two target firing rates plays a key role in establishing the characteristic variance at a control system fixed point.

In Theorem 2, we present a general condition that ensures that a fixed point of the averaged control system is stable. This condition takes the form of a relationship between the convexity of the control functions and the derivatives of the first and second moments of $P$ with respect to a and b.

Definitions

Consider a pair of homeostatic variables a and b whose instantaneous rates of change are functions of a firing rate variable r:

$$
\tau_a \dot{a} = \epsilon\,\bigl[f_a(r_a) - f_a(r)\bigr], \qquad \tau_b \dot{b} = \epsilon\,\bigl[f_b(r_b) - f_b(r)\bigr], \tag{6}
$$

where $\epsilon \ll 1$ is a multiplier separating the fast time scale of firing rate variation and the slow time scale of homeostasis, $\tau_a$ and $\tau_b$ are homeostatic time constants (in units of slow time), $r_a$ and $r_b$ are the target firing rates of the two homeostatic mechanisms, and $f_a$ and $f_b$ are smooth increasing bounded functions with bounded derivatives. Note that we have introduced the small parameter ϵ representing the separation of the time scales of homeostasis and firing rate dynamics rather than assuming that $\tau_a$ and $\tau_b$ are large. This form is sufficiently general to encompass a wide range of different feedback strategies.

Remark 1

In order to describe the evolution of a homeostatic variable a that acts multiplicatively and must remain positive (e.g., the synaptic scaling multiplier g used in many of our examples), we can instead set $\tau_a \dot{a} = \epsilon\,a\,[f_a(r_a) - f_a(r)]$. We can then put this system into the general form above by replacing a with $\tilde{a} = \ln a$, whose evolution is described by the ODE $\tau_a \dot{\tilde{a}} = \epsilon\,[f_a(r_a) - f_a(r)]$.

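We assume that, for fixed a and b, the firing rate $r(t; a, b)$ (written as a function of time and control variables) is distribution-ergodic with stationary firing rate distribution $P(r; a, b)$, that is, $\lim_{T\to\infty} \frac{1}{T}\int_0^T f\bigl(r(t; a, b)\bigr)\,dt = \int f(r)\,P(r; a, b)\,dr$ with probability 1 for all integrable functions f. For brevity of notation, we let $\langle\,\cdot\,\rangle$ denote the expected value of a function of r over the stationary distribution (or, equivalently, the time average of this function over an infinitely long time window), given a control system state $(a, b)$. Let $\mu(a, b)$ and $\nu(a, b)$ denote the mean and variance of $P(r; a, b)$, respectively.

Averaging (6) over the invariant distribution, we arrive at the “averaged equations”:

$$
\tau_a \dot{a} = \epsilon\,\bigl[f_a(r_a) - \langle f_a(r)\rangle\bigr], \qquad \tau_b \dot{b} = \epsilon\,\bigl[f_b(r_b) - \langle f_b(r)\rangle\bigr]. \tag{7}
$$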

We use the averaged equations to study the behavior of the unaveraged system (6). Since r may be constantly fluctuating, a and b may continue to fluctuate even once a fixed point of the averaged system has been reached, so we cannot expect stable fixed points in the classical sense. Instead, we define a weaker form of stability.

We shall call a point $(a^*, b^*)$ “stable in the small-ϵ limit” if there exists a continuous increasing function α with $\alpha(0) = 0$ and an $\epsilon_0 > 0$ such that for all $\epsilon < \epsilon_0$, the trajectory initialized at $(a^*, b^*)$ remains within a radius-$\alpha(\epsilon)$ ball centered at $(a^*, b^*)$ for all time with probability 1. Intuitively, a point is stable in the small-ϵ limit if trajectories become trapped in a ball around that point, and the ball is smaller when homeostasis is slower.

Lemma 1

Any exponentially stable fixed point of the averaged system (7) is a stable fixed point of the original system (6) in the small-ϵ limit.

Proof

This follows from Theorem 10.5 in [15]. □

Main Results

Fixed Points

Given a homeostatic control state $(a, b)$, it is straightforward to find the target firing rates that make that state a fixed point in terms of the average values of $f_a(r)$ and $f_b(r)$. By setting $\dot{a} = \dot{b} = 0$ in (7) we find that $r_a = f_a^{-1}\bigl(\langle f_a(r)\rangle\bigr)$ and $r_b = f_b^{-1}\bigl(\langle f_b(r)\rangle\bigr)$. (These expressions are well defined because $f_a$ is increasing and hence invertible, and $\langle f_a(r)\rangle$ must fall within the range of $f_a$; likewise for b.)

Given a pair of target firing rates $r_a$ and $r_b$ and functions $f_a$ and $f_b$, we can ask what states $(a, b)$ become fixed points of the averaged system. We shall answer this question in order to show that (1) when $f_a''$ and $f_b''$ are constant, the fixed points are exactly the points at which $P$ attains a certain characteristic mean and variance, (2) the relative convexities of the control functions determine whether $r_a$ or $r_b$ must be larger for fixed points to exist, and (3) fixed points with high firing rate variance are achieved by setting $r_a$ far from $r_b$.

Theorem 1

Consider a dual control system as described in Sect. 3.1 with target firing rates and and control functions and . Let , , and . We consider a domain of control system states on which each distribution has constant and on its support. The fixed points of the averaged control system in this domain are exactly the points at which the mean is and the variance is , where we define

We will henceforth call $\mu^*$ and $\nu^*$ the “characteristic” mean and variance of any neuron regulated by this control system.

Remark 2

Note that this result is a/b symmetric. If a and b are swapped, then the signs of , , and k reverse; however, since these terms all occur in pairs, this reversal leaves the expressions for and unchanged.

Remark 3

In Appendix 1, we show that this result persists in some sense for nonconstant $f_a''$ and $f_b''$. Specifically, if variation in $f_a''$ and $f_b''$ over the appropriate domain is small, the mean and variance at any fixed point are close to $\mu^*$ and $\nu^*$, and every point at which the mean is $\mu^*$ and the variance is $\nu^*$ is close to a fixed point.

Proof

We abbreviate as μ and as ν. Since and have constant second derivatives on the domain of interest, we can write

Taking the expected values of both sides, we have

A simple calculation gives us , so we can write

At a fixed point of the averaged control system with firing rate mean and variance , we have , or

| 8 |

Deriving a similar expression by expanding around , we have

| 9 |

Multiplying (8) by and (9) by and taking the difference, the terms cancel, leaving

Solving for , we have

or

where . Taking the difference of (8) and (9), we get

or, substituting for and solving for ,

□
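As a hedged worked illustration of what the theorem asserts (in a parametrization introduced here, not the notation of the theorem), suppose each control function is exactly quadratic on the relevant domain, $f_i(r) = \alpha_i + \beta_i r + \gamma_i r^2$ for $i \in \{a, b\}$, so that $f_i'' = 2\gamma_i$ is constant. The coefficients $\alpha_i$, $\beta_i$, $\gamma_i$ are assumptions made for this sketch. Writing μ and ν for the firing rate mean and variance, the fixed-point conditions $f_i(r_i) = \langle f_i(r)\rangle$ can then be solved in closed form:

```latex
\begin{align*}
\langle f_i(r)\rangle &= \alpha_i + \beta_i\,\mu + \gamma_i\,(\mu^2 + \nu), \\
f_i(r_i) = \langle f_i(r)\rangle
  &\iff \beta_i\,(r_i - \mu) + \gamma_i\,(r_i^2 - \mu^2) = \gamma_i\,\nu,
  \qquad i \in \{a, b\}.
\end{align*}
% Eliminate \nu: multiply the a-equation by \gamma_b and the b-equation
% by \gamma_a, then subtract. The \mu^2 terms cancel as well.
\begin{align*}
\mu^* &= \frac{\gamma_a\beta_b\,r_b - \gamma_b\beta_a\,r_a
              + \gamma_a\gamma_b\,(r_b^2 - r_a^2)}
             {\gamma_a\beta_b - \gamma_b\beta_a}
  \qquad (\gamma_a\beta_b \neq \gamma_b\beta_a), \\
\nu^* &= \frac{\beta_b\,(r_b - \mu^*)}{\gamma_b} + r_b^2 - (\mu^*)^2
  \qquad (\gamma_b \neq 0).
\end{align*}
% Check against Example 1 (linear control of a, quadratic control of b):
% \beta_a = 1, \gamma_a = 0, \beta_b = 0, \gamma_b = 1 gives
% \mu^* = r_a and \nu^* = r_b^2 - r_a^2.
```

In this special case the characteristic mean and variance depend only on the targets and the control-function coefficients, not on how the firing rate distribution depends on a and b, which is the content of the theorem.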

Given the parameters of the control system (including a pair of target firing rates), this theorem shows that achieving a specific firing rate mean and variance is necessary and sufficient for the time-averaged control system to reach a fixed point. If $P$ (the distribution of the firing rate as a function of a and b) changes, as it might as a result of changes in the statistics of neuronal input, then the new fixed points will be the new points at which this firing rate mean and variance are achieved. Conversely, given a desirable firing rate mean and variance, we could tune the parameters of the control system to make these the characteristic mean and variance of the neuron at control system fixed points.

Whether any fixed point actually exists depends on whether the characteristic firing rate mean and variance demanded by Theorem 1 can be achieved by the neuron, that is, fall within the range of $\mu(a, b)$ and $\nu(a, b)$. If the mapping from $(a, b)$ to $(\mu, \nu)$ is not degenerate, then there exists a nondegenerate (two-parameter) set of reachable values of μ and ν for which control system fixed points exist. In the degenerate case that neither μ nor ν depends on b, the set of reachable values of μ and ν is a degenerate one-parameter family in a two-dimensional space. This corresponds to the case of a single-mechanism control system. In this case, a control system possesses a fixed point with a given firing rate mean and variance only if they are chosen in a particular relationship to each other. A perturbation to neuronal parameters would displace this one-parameter family in the $(\mu, \nu)$-space, likely making the preperturbation firing rate mean and variance unrecoverable.

We now prove a corollary giving a simpler form of Theorem 1, which holds if is sufficiently small.

Corollary 1

Given and , let . If and are chosen such that and are sufficiently small, then the characteristic mean and variance given in Theorem 1 are arbitrarily well approximated by

Proof

For k defined in Theorem 1, we can write

so that if is sufficiently small, then k is arbitrarily close to . This gives us an approximation of . We can also use it to write

All of these terms are bounded in norm by multiples of either or , so this expression is arbitrarily small. This gives us an approximation for . □

The range of for which this result holds is determined by and , measures of the convexities of the control functions. Informally, we say that this corollary holds if is “small on the scale of the convexity of the control functions.”

From the corollary we draw two important conclusions that hold while remains small on the scale of the convexity of the control functions:

Since a negative firing rate variance can never be achieved by the control system, there can only be a fixed point if and take the same sign.

Increasing $|r_a - r_b|$ causes a proportionate increase in the control system’s characteristic firing rate variance.

Fixed Point Stability

Next, we address the question of whether a fixed point of the averaged control system is stable. We again make the simplifying assumption that $f_a''$ and $f_b''$ are constant and then drop this assumption in Appendix 2.

Theorem 2

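Let $(a^*, b^*)$ denote a fixed point of the averaged control system described above. We assume the following:

1. The functions μ and ν are differentiable at $(a^*, b^*)$.

2. In the averaged equations (7), $\partial\dot{a}/\partial a$ and $\partial\dot{b}/\partial b$ are negative at $(a^*, b^*)$, that is, on average, a and b provide negative feedback to r near $(a^*, b^*)$.

3. For $(a, b)$ in a neighborhood of $(a^*, b^*)$, $f_a''$ and $f_b''$ are constant on any domain of r where $P(r; a, b) > 0$.

Let $\mu^*$ and $\nu^*$ denote the firing rate mean and variance at this fixed point. Below, all derivatives of μ and ν with respect to a and b are evaluated at $(a^*, b^*)$.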

The fixed point of the averaged system is stable if

| 10 |

Remark 4

Note that this result is a/b symmetric: if a and b are swapped, then the signs of both terms reverse and these sign changes cancel, leaving the stability condition unchanged.

Proof

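A fixed point of the averaged system is exponentially stable if the Jacobian

$$
J = \begin{pmatrix} \partial\dot{a}/\partial a & \partial\dot{a}/\partial b \\ \partial\dot{b}/\partial a & \partial\dot{b}/\partial b \end{pmatrix}
$$

evaluated at $(a^*, b^*)$ has two eigenvalues with negative real part. By Assumption 2, the Jacobian of the dual control system at $(a^*, b^*)$ has negative trace. A $2 \times 2$ matrix has two eigenvalues with negative real part if it has a negative trace and positive determinant. Therefore, the fixed point of the averaged control system is exponentially stable if

$$
\det J > 0
$$

at $(a^*, b^*)$.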

Below, we abbreviate as μ and as ν. In order to write useful expressions for the terms in , we Taylor-expand about μ out to second order, writing . We similarly expand and average these expressions at to rewrite the averaged control equations:

Differentiating these expressions and evaluating them at , we calculate the terms in :

| 11 |

where all derivatives are evaluated at . We have assumed that over the support of , so we can write

Likewise for the other three terms , , and . Calculating the determinant of J and canceling like terms, we have

| 12 |

| 13 |

Thus, is exponentially stable if

or, equivalently, if

□
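The structure of this argument can be summarized in a short derivation. The following is a hedged sketch under Assumption 3 (constant second derivatives), written in shorthand introduced here ($\partial_a$, $\partial_b$ for partial derivatives with respect to the control variables, and an index $i, j \in \{a, b\}$); it is intended as a reading aid and may differ in form from the expressions used in the proof. The positive factor ϵ is omitted because it only rescales time.

```latex
% Averaged dynamics with f_i'' constant on the support of the rate distribution:
%   \tau_i \, \dot{i} = f_i(r_i) - f_i(\mu) - \tfrac{1}{2} f_i'' \, \nu .
\begin{align*}
J_{ij} &= -\frac{1}{\tau_i}\Bigl( f_i'(\mu)\,\partial_j\mu
          + \tfrac{1}{2} f_i''\,\partial_j\nu \Bigr),
  \qquad i, j \in \{a, b\}, \\[1ex]
\det J &= \frac{1}{2\,\tau_a\tau_b}\,
  \bigl( f_a'(\mu^*)\,f_b'' - f_a''\,f_b'(\mu^*) \bigr)\,
  \bigl( \partial_a\mu\,\partial_b\nu - \partial_b\mu\,\partial_a\nu \bigr).
\end{align*}
% The cross terms proportional to f_a' f_b' and to f_a'' f_b'' cancel in the
% determinant, leaving a product of a "convexity" factor and a
% "mean/variance sensitivity" factor.
```

Given a negative trace, the determinant is positive exactly when these two factors share a sign, and swapping a and b flips the sign of both factors, consistent with the symmetry noted in Remark 4.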

Remark 5

In Appendix 2, we drop the assumption that $f_a''$ and $f_b''$ are constant over the range of r and derive a sufficient condition for stability of the form

| 14 |

for a Δ that is close to zero if $f_a''$ and $f_b''$ do not vary too widely over most of the range of r.

Remark 6

A result similar to Theorem 2 could be proven for a system with a single slow homeostatic feedback. The limitation of such a system would be in reachability. As the single homeostatic variable a changed, the firing rate mean μ and variance ν could reach only a one-parameter family of values in the $(\mu, \nu)$-space. Thus, most mean/variance pairs would be unreachable. A perturbation to neuronal parameters would displace this one-parameter family in the $(\mu, \nu)$-space, likely making the original mean/variance pair unreachable. Thus, a single homeostatic feedback could only succeed in recovering its original firing rate mean and variance after perturbation in special cases.

By Lemma 1, fixed points of the averaged system that satisfy the criteria for stability under Theorem 2 are stable in the small-ϵ limit for the full, un-averaged control system.

Further Single-Cell Examples

We will focus on examples in which the two homeostatic variables are excitability x and synaptic strength g, respectively, as discussed before.

The generality of the main results above (which require that $f_a''$ and $f_b''$ be constant) allows us to investigate a range of different models of firing rate dynamics even if we do not have an explicit expression for the rate distribution P. We only need to know the dependence of the firing rate mean μ and variance ν on the control variables to use Theorem 1 to identify control system fixed points and to use Theorem 2 to determine whether those fixed points are stable.

In the more general case addressed in Appendix 2, where the second derivatives are not assumed to be constant, the left side of (10) must be positive and sufficiently large to guarantee the existence of a stable fixed point, where the lower bound Δ for “sufficiently large” is close to zero if $f_a''$ and $f_b''$ are nearly constant over most of the distribution $P$. We will further discuss the simpler case, but all of our analysis can be applied to the more general case by replacing “positive” with “sufficiently large.”

Returning to Example 1

We can use Theorem 2 to generalize the results for the dually controlled OU process from Sect. 2. We consider the rate model described by the differential equation

| 15 |

where is any second-order stationary process, that is, a process with stationary mean and stationary autocovariance function , both independent of t. We assume that r is ergodic. Let and denote the characteristic firing rate mean and variance determined from the control system parameters using Theorem 1.

This firing rate process is the output of a stationary linear filter applied to a second-order stationary process, so according to standard results, we have and , where . Thus, as long as , there exists a fixed point of the control system at

At this fixed point, we have , , , and . This gives us , and the conditions for Theorem 2 are met if is positive.

Figure 3 shows simulation results for this system under conditions sufficient for stability. Note that if the statistics of the input change, the fixed point changes so that the system maintains its characteristic firing rate mean and variance at equilibrium. In Fig. 4A and Fig. 4B, we alter this system by increasing ϵ and by giving correlations on long time scales, respectively. In both these cases, trajectories fluctuate widely about the fixed point of the averaged system but remain within a bounded neighborhood, consistent with the idea of stability in the small-ϵ limit.
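The recovery of the characteristic mean and variance after a change in input statistics can be illustrated with a small deterministic sketch of an averaged control system. Everything model-specific here is an assumption made for illustration, not the parameterization of Appendix 3: we take μ = x + gϕ and ν = g²σ²/(2τ_r) (the stationary variance of an OU rate driven by white noise of intensity gσ), control functions f_x(r) = r and f_g(r) = r², and short, hypothetical time constants so that plain Euler stepping converges quickly.

```python
def run_averaged(phi, sigma, x0, g0, r_x=20.0, r_g=24.0, tau_r=0.1,
                 tau_x=1.0, tau_g=100.0, dt=0.01, steps=40000):
    """Euler-integrate an assumed averaged control system.

    Assumptions (illustrative, not taken from the paper):
    mu = x + g*phi, nu = g**2 * sigma**2 / (2*tau_r),
    f_x(r) = r, f_g(r) = r**2.
    """
    x, g = x0, g0
    for _ in range(steps):
        mu = x + g * phi
        nu = g ** 2 * sigma ** 2 / (2.0 * tau_r)
        dx = (r_x - mu) / tau_x                    # <f_x(r)> = mu
        dg = (r_g ** 2 - (mu ** 2 + nu)) / tau_g   # <f_g(r)> = mu**2 + nu
        x, g = x + dt * dx, g + dt * dg
    mu = x + g * phi
    nu = g ** 2 * sigma ** 2 / (2.0 * tau_r)
    return x, g, mu, nu

before = run_averaged(phi=1.0, sigma=1.0, x0=0.0, g0=1.0)
# Perturb the input statistics and continue from the old fixed point.
after = run_averaged(phi=3.0, sigma=2.0, x0=before[0], g0=before[1])
print("before: x=%.3f g=%.3f mu=%.3f nu=%.3f" % before)
print("after:  x=%.3f g=%.3f mu=%.3f nu=%.3f" % after)
```

In this sketch the homeostatic state (x, g) settles at a different point after the input mean and standard deviation are changed, while the firing rate mean and variance return to the same values, which is the qualitative behavior described for Fig. 3.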

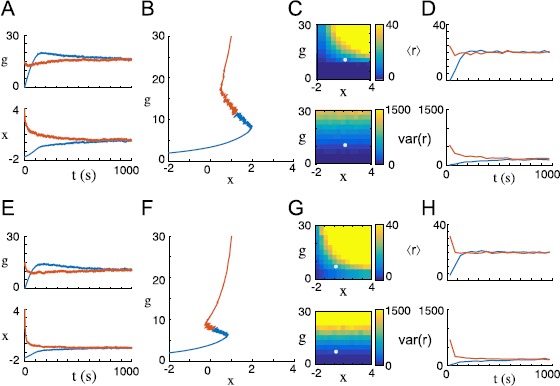

Fig. 3.

Intrinsic/synaptic dual homeostasis recovers original mean and variance after perturbation in a simulated firing rate model. Firing rate r is described by equation (15) with parameter values listed in Appendix 3. Input current is set to , where is white noise with unit variance. In the top row of figures, and ; in the bottom row of figures, and . (A) x and g trajectories plotted over time from two different initial conditions (orange and blue) as a fixed point is reached. (B) The same trajectories plotted in x/g phase space. (C) Mean firing rate (above) and firing rate variance (below) are calculated as a function of homeostatic state and represented by color in x/g parameter space. The fixed point is marked in white. (D) Mean firing rate (above) and firing rate variance (below) are plotted over time for both initial conditions. (E)-(H) When the mean and variance of the input current are increased, the system seeks out a new homeostatic fixed point. Note in G and H that, in spite of the new input statistics, a fixed point is reached with the same firing rate mean and variance

Fig. 4.

Convergence of intrinsic/synaptic dual homeostasis is compromised by short homeostatic time constants and temporally correlated noise. Firing rate r is described by equation (15) with parameter values listed in Appendix 3. (A)-(B) The system simulated for Fig. 1A-D is modified by reducing homeostatic time constants by a factor of 50: we set and . Trajectories enter and remain within a large neighborhood of the fixed point observed in Fig. 1A, but fluctuate randomly within that neighborhood. By Lemma 1 these trajectories converge in the small-ϵ limit, so this neighborhood represents the ball of radius that traps all trajectories and shrinks to zero as . (C)-(D) The system described in Fig. 1A is modified by introducing long temporal correlations into the time course of the input current: is an Ornstein-Uhlenbeck process described by the SDE , where ξ is white noise with unit variance and . Again, trajectories enter and remain within a large neighborhood of the fixed point observed in Fig. 1A but fluctuate randomly within that neighborhood

The slopes and can be understood as measures of the strength of the homeostatic response to deviations from the target firing rate, and the second derivatives and can be understood as measures of the asymmetry of this response for upward and downward rate deflections. If x and g are rescaled to set , then Theorem 2 predicts that dual homeostasis stabilizes a fixed point with a given characteristic mean and variance if , that is, if the (signed) difference between the effects of positive rate deflections and negative rate deflections is greater for the synaptic mechanism than for the intrinsic mechanism.
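The role of the second derivatives can be checked numerically on a concrete case. The sketch below is an illustration under stated assumptions rather than the derivation behind Theorem 2: it takes μ = x + gϕ and ν = c·g² for a hypothetical positive constant c, uses control functions with slopes f_x′(μ), f_g′(μ) and constant second derivatives k_x, k_g, and evaluates the trace and determinant of the Jacobian of an averaged system in which ẋ and ġ are proportional to minus the averaged control functions.

```python
def jacobian_trace_det(slope_x, k_x, slope_g, k_g, g, phi, c,
                       tau_x=1.0, tau_g=1.0):
    """Trace and determinant of the assumed averaged-system Jacobian.

    slope_x, slope_g: f_x'(mu) and f_g'(mu) at the fixed point.
    k_x, k_g: constant second derivatives of f_x and f_g.
    Model assumptions: mu = x + g*phi, nu = c*g**2,
    <f(r)> = f(mu) + 0.5*k*nu.
    """
    # d<f>/dx = f'(mu) * dmu/dx, with dmu/dx = 1 and dnu/dx = 0.
    # d<f>/dg = f'(mu) * phi + 0.5*k * dnu/dg, with dnu/dg = 2*c*g.
    j11 = -slope_x / tau_x
    j12 = -(slope_x * phi + k_x * c * g) / tau_x
    j21 = -slope_g / tau_g
    j22 = -(slope_g * phi + k_g * c * g) / tau_g
    return j11 + j22, j11 * j22 - j12 * j21

def is_stable(*args, **kwargs):
    """A 2x2 linearization is stable iff trace < 0 and determinant > 0."""
    trace, det = jacobian_trace_det(*args, **kwargs)
    return trace < 0 and det > 0

# Equal slopes; only the curvatures differ.
print(is_stable(1.0, 0.0, 1.0, 0.5, g=2.0, phi=1.0, c=5.0))  # k_g > k_x
print(is_stable(1.0, 0.5, 1.0, 0.0, g=2.0, phi=1.0, c=5.0))  # k_g < k_x
```

Under these assumptions the determinant is proportional to c·g·(f_x′·k_g − f_g′·k_x), so with equal positive slopes its sign is set by whether the synaptic control function is more convex than the intrinsic one.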

Example 2

Intrinsic noise

In Example 1, we assumed that all of the rate fluctuation was due to fluctuating synaptic input. If we introduce an intrinsic source of noise (e.g., channel noise), then the picture becomes slightly more complicated. We set

| 16 |

where is unit-variance white noise independent of , and η sets the magnitude of the noise. The same calculations as before show that the conditions for stability under Theorem 2 are met at any fixed point for . But now the firing rate variance includes the noise variance: . Under Theorem 1, a fixed point only exists if control system parameters are chosen to establish a characteristic variance of . This neuron cannot be stabilized with variance less than because a variance that low cannot be achieved by the inherently noisy neuron.

In Fig. 5, we show the behavior of this system when (the mean and variance necessary for a fixed point are in the ranges of μ and ν) and when (the necessary variance is not in the range of ν).
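The reachability constraint can be made concrete with a short sketch. The scaling used here is an assumption chosen for illustration and may differ from equation (16): we suppose μ = x + gϕ and ν = (g²σ² + η²)/(2τ_r), so that the intrinsic noise contributes a floor η²/(2τ_r) to the variance that no value of g can remove.

```python
import math

def noisy_fixed_point(mu_star, nu_star, phi, sigma, eta, tau_r=0.1):
    """Return (x, g) reaching (mu_star, nu_star), or None if unreachable.

    Assumed (hypothetical) model:
    nu(g) = (g*sigma)**2 / (2*tau_r) + eta**2 / (2*tau_r),
    mu(x, g) = x + g*phi.
    """
    noise_floor = eta ** 2 / (2.0 * tau_r)
    if nu_star <= noise_floor:
        return None  # a variance this low cannot be reached for any g > 0
    g = math.sqrt((nu_star - noise_floor) * 2.0 * tau_r) / sigma
    x = mu_star - g * phi
    return x, g

print(noisy_fixed_point(20.0, 176.0, phi=1.0, sigma=1.0, eta=1.0))   # weak noise
print(noisy_fixed_point(20.0, 176.0, phi=1.0, sigma=1.0, eta=10.0))  # strong noise
```

With weak intrinsic noise the function returns a fixed point with slightly smaller g than in the noise-free case. With strong noise the floor exceeds the characteristic variance and no fixed point exists, which corresponds to the non-convergent case shown in Fig. 5.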

Fig. 5.

Dual homeostasis tolerates some intrinsic firing rate noise but fails to converge if noise is sufficiently strong. The dynamics of the firing rate r are modeled by an intrinsically noisy OU process described by equation (16) with parameter values listed in Appendix 3. (A) Intrinsic noise amplitude is set to . Dual homeostasis converges on a fixed point near the fixed point of the corresponding system with no intrinsic noise, illustrated in Fig. 1A-D. (B) Firing rate mean and variance are calculated and displayed as functions of x and g in x/g parameter space. The fixed point of the system is marked in white. (C) Intrinsic noise amplitude is set to . Dual homeostasis fails to converge: x winds up without bound, and g winds down toward zero. (D) Note that, due to intrinsic noise, the firing rate variance everywhere in parameter space is larger than the characteristic variance reached at equilibrium in B. Thus, the characteristic variance of this system at equilibrium is unreachable, and dual homeostasis does not converge

Example 3

Poisson-spiking neuron with calcium-like firing rate sensor

In some biological neurons, firing rate controls homeostasis via intracellular calcium concentration [4]. Intracellular calcium increases at each spike and decays slowly between spikes, and it activates signaling pathways that cause homeostatic changes. Our dual homeostasis framework is general enough to describe such a system. We let ρ represent the concentration of some correlate of firing rate, such as intracellular calcium, and use it in place of firing rate r. We model neuronal spiking as a Poisson process with rate , where is a stationary synaptic input. We let ρ increase instantaneously by δ at each spike and decay exponentially with time constant between spikes. We assume that ρ is ergodic.

We show in Appendix 4 that, after sufficient time, assumes a stationary distribution with mean and variance , where ϕ is the stationary mean of , and C is a positive constant determined by the stationary autocovariance of . Thus, we calculate , , , and , and we find that . As in Examples 1 and 2, we conclude that the conditions for stability under Theorem 2 are met if .

Note that the conditions for stability in this model are the same as the conditions in the firing rate models. In [8], we show the same result empirically for biophysically detailed model neurons. What all these models have in common is that changes in g significantly affect the firing rate variance in the same direction, whereas x controls mainly the firing rate mean and has little or no effect on the variance. These results suggest that is a general, model-independent condition for stability of synaptic/intrinsic dual homeostasis. In Appendix 2, where control function second derivatives are not assumed to be constant, this condition is replaced by the condition of sufficiently large .

As in Example 2, not all mean/variance pairs can be achieved by the control system: no matter how small g is, we still have due to the inherently noisy nature of Poisson spiking, which acts as a restriction on the range of ν. We also must have , so the range of μ is constrained to . If and are chosen such that the characteristic firing rate mean and variance defined in Theorem 1 obey these inequalities, then there exists a control system state at which Theorem 1 is satisfied and which is therefore a fixed point.
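The variance floor imposed by Poisson spiking can be seen in a small simulation of the sensor variable at a constant spike rate, which corresponds to the limit in which input fluctuations no longer contribute. The sketch below is illustrative only: the names `rate`, `delta`, and `tau` and their values are hypothetical, and spikes are drawn as Bernoulli events per time step, which approximates a Poisson process for small steps. For constant rate, Campbell's theorem gives a stationary mean of rate·δ·τ and a variance of rate·δ²·τ/2, so the variance cannot fall below δ/2 times the mean.

```python
import math
import random

def simulate_sensor(rate, delta, tau, dt=0.001, t_max=1000.0, seed=0):
    """Simulate a calcium-like sensor driven by constant-rate spiking.

    Spikes are Bernoulli events with probability rate*dt per step
    (a small-dt approximation of a Poisson process). rho jumps by
    delta at each spike and decays exponentially with time constant tau.
    Returns the time-averaged mean and variance after a burn-in period.
    """
    rng = random.Random(seed)
    decay = math.exp(-dt / tau)
    p_spike = rate * dt
    n_steps = int(t_max / dt)
    burn_in = int(10 * tau / dt)
    rho, total, total_sq, count = 0.0, 0.0, 0.0, 0
    for step in range(n_steps):
        rho *= decay
        if rng.random() < p_spike:
            rho += delta
        if step >= burn_in:
            total += rho
            total_sq += rho * rho
            count += 1
    mean = total / count
    return mean, total_sq / count - mean ** 2

rate, delta, tau = 20.0, 1.0, 0.5
mean, var = simulate_sensor(rate, delta, tau)
print("simulated:", mean, var)
print("Campbell: ", rate * delta * tau, rate * delta ** 2 * tau / 2)
```

The simulated values should lie close to the Campbell predictions, up to sampling error and the small bias of the Bernoulli approximation.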

Recurrent Networks and Integration

A recurrent excitatory network has been shown to operate as an integrator when neuronal excitability and connection strength are appropriately tuned [9, 10]. Such a network can maintain a range of different firing rates indefinitely by providing excitatory feedback that perfectly counteracts the natural decay of the population firing rate. When input causes the network to transition from one level of activity to another, the firing rate of the network represents the cumulative effect of this input over history. Thus, the input is “integrated.”

Below, we show that the parameter values that make such a network an integrator can be stably maintained through dual homeostasis as described before. Importantly, we also show that an integrator network made stable by dual homeostasis is robust to variations in control system parameters and (as in the previous examples) unaffected by changes in input mean and variance. In this section, we build intuition for this phenomenon by investigating a simple example network consisting of one self-excitatory firing rate unit, which may be taken to represent the activity of a homogeneous recurrent network. In Appendix 5, we perform similar analysis for N rate-model neurons with heterogeneous parameters. In this case, we do not prove stability, but we do demonstrate that if any neuron’s characteristic variance is sufficiently high, then the network is arbitrarily close to an integrator at any fixed point of the control system.

We consider a single firing rate unit described by the equation

where η is the level of intrinsic noise, is a second-order stationary synaptic input with mean ϕ and autocovariance , and is a white noise process with unit variance. (For simplicity, we have rescaled time to set the time constant to 1.) Let denote the expected value of r at time t. Taking the expected values of both sides of the equation, we have

Let μ denote the expected value of r once it has reached a stationary distribution. Setting , we calculate

| 17 |

Let denote the deviation of r from m at time t: . From the equations above we have

| 18 |

If we set , then the s-dependence drops out of the right side, and we have

In this extreme case, s acts as a perfect integrator of its noise and its input fluctuations, that is, as a noisy integrator. For g close to 1, the mean-reversion tendency of s is weak, so on short time scales, s acts like a noisy integrator.
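A noise-free sketch makes the distinction between the two regimes concrete. It keeps only the effective decay rate (1 − g) discussed above and drives the unit with brief pulses. The input form, pulse times, and step size are assumptions chosen for illustration.

```python
def pulse_response(g, pulse_times=(1.0, 2.0, 3.0), t_end=6.0, dt=0.001):
    """Euler-integrate ds/dt = -(1 - g)*s + u(t) for unit-area pulses u.

    This noise-free form is an assumed simplification that retains only
    the effective decay rate (1 - g) of the deviation s.
    """
    pulse_steps = {round(t / dt) for t in pulse_times}
    s = 0.0
    for step in range(round(t_end / dt)):
        u = 1.0 / dt if step in pulse_steps else 0.0  # one-step pulse of area 1
        s += dt * (-(1.0 - g) * s + u)
    return s

print(pulse_response(g=1.0))  # pulses accumulate
print(pulse_response(g=0.5))  # pulses decay between and after inputs
```

With g = 1 the final value equals the number of pulses delivered, so the unit stores the integral of its input. With g = 0.5 each pulse decays exponentially and little of it remains by the end of the run.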

Next, we write a differential equation for the variance of r. From (18) we write

Squaring both sides out to and taking the expected value, we have

where C is a positive constant depending on and as in the previous section. Let denote the expected variance of r when it has reached a stationary distribution. At this stationary distribution, we have , so

or

| 19 |

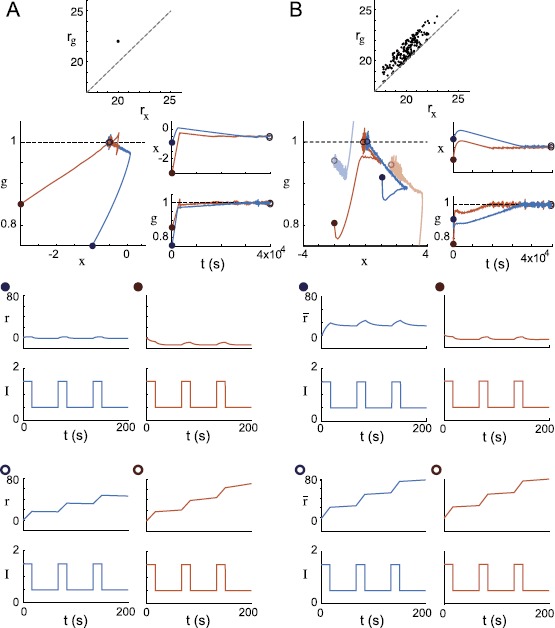

This relation between g and ν is plotted in Fig. 6. The right side of this equation is at and increases with g until it asymptotes to infinity at . So, given , there exists exactly one at which a firing rate variance of is achieved. The larger the characteristic variance, the closer will be to unity. As discussed before, the firing rate is a good integrator on short time scales if g is close to unity. So, given a sufficiently large characteristic variance , the system’s only potentially stable state in the range will allow it to act as a good integrator on short time scales. The larger , the more widely can be varied while still remaining sufficiently large to make close to unity. So, if target firing rates are chosen to make the characteristic variance sufficiently large, then an integrator achieved in this way is robust to variation in characteristic variance (and unaffected by variation in ).
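The way a large characteristic variance pins g near unity can be sketched numerically. The variance function below is an assumed stand-in for equation (19), valid for white-noise input scaled by g plus intrinsic noise; the paper's expression involves a constant C that depends on the input autocovariance and is not reproduced here. The stand-in shares the stated properties: it equals the noise-only value at g = 0, increases with g, and diverges as g approaches 1.

```python
def variance(g, sigma=1.0, eta=1.0):
    """Assumed stand-in for equation (19): white input scaled by g plus intrinsic noise."""
    return (g ** 2 * sigma ** 2 + eta ** 2) / (2.0 * (1.0 - g))

def synaptic_strength_for(nu_star, sigma=1.0, eta=1.0, tol=1e-12):
    """Bisect for the unique g in [0, 1) with variance(g) == nu_star."""
    if nu_star < variance(0.0, sigma, eta):
        raise ValueError("characteristic variance below the noise floor")
    lo, hi = 0.0, 1.0  # variance(lo) <= nu_star; variance diverges as g -> 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if variance(mid, sigma, eta) <= nu_star:
            lo = mid
        else:
            hi = mid
    return lo

for nu_star in (1.0, 10.0, 100.0, 1000.0):
    print(nu_star, synaptic_strength_for(nu_star))
```

Because the variance diverges at g = 1, increasing the characteristic variance by successive factors of ten moves the solution ever closer to unity, and a wide range of large characteristic variances maps onto a narrow range of g.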

Fig. 6.

Firing rate variance ν vs. synaptic strength g in an excitatory recurrent network. Equation (19) is plotted with and . When synaptic strength is zero, all firing rate variance is due to noise, so . As synaptic strength increases, firing rate variance increases. As synaptic strength approaches unity, recurrent excitation acts to reinforce variations in firing rate, and variance asymptotes to ∞. If target firing rates are set such that the characteristic firing rate variance is large, then the synaptic strength at a control system fixed point must be close to unity, making the network an integrator of its inputs

We can use (17) and (19) to calculate

so as in the previous examples, the conditions for Theorem 2 are met, and stability of any fixed point is guaranteed if .

In short, if and target rates are chosen to create a sufficiently large characteristic variance , then dual homeostasis of intrinsic excitability and synaptic strength stabilizes a recurrent excitatory network in a state such that the network mean firing rate acts as a near-perfect integrator of inputs shared by the population. The corollary to Theorem 1 tells us that, to first approximation, the characteristic variance is proportionate to the difference between the target rates, and a large characteristic variance is achieved by setting the homeostatic target rates far apart from each other. The integration behavior created in this way is robust to variation in the characteristic mean and variance, and therefore robust to the choice of target firing rates.

This effect can be intuitively understood by noting that as a network gets closer to being a perfect integrator (i.e., as g approaches 1), fluctuations in firing rate are reinforced by the resulting fluctuations in excitation. As a result, the tendency to revert to a mean rate grows weaker and the firing rate variance increases toward infinity. (In a perfect integrator, a perturbed firing rate never reverts to a mean, so the variance of the firing rate is effectively infinite.) Thus, the network can attain a large variance by tuning g to be sufficiently close to 1. If this large variance is the characteristic variance of the control system, then there is a fixed point of the dual control system at this value of g.

In some sense, this behavior is an artifact of the model used—perfect integration is only possible if the feedback perfectly counters the decay of the firing rate over a range of different rates, which is possible in this model because rate increases linearly with feedback and feedback increases linearly with rate. However, such a balance is also achievable with nonlinear rate/feedback relationships if they are locally linear over the relevant range of firing rates. In particular, if the firing rate is a sigmoidal function of input and the eigenvalue of firing rate dynamics near a fixed point is near zero, the upper and lower rate limits act to control runaway firing rates while the system acts as an integrator in the neighborhood of the fixed point. In [8], we show that a recurrent network of biophysically detailed neurons with sigmoidal activation curves can be robustly tuned by dual homeostasis to act as an integrator.

In Appendix 5, we show that integration behavior also occurs at set points in networks of heterogeneous dually homeostatic neurons if one or more of them have a sufficiently large characteristic variance. If only one neuron’s characteristic variance is large, the afferent synapse strength to that neuron grows until that neuron gives itself enough feedback to act as a single-neuron integrator as described before. But if many characteristic variances are large, then all synapse strengths remain biophysically reasonable, and many neurons participate in integration, as might be expected in a true biological integrator network.

In Fig. 7, we show simulation results for homogeneous and heterogeneous recurrent networks with target firing rates set to create sufficiently large characteristic firing rate variances. In addition to corroborating our analytical results, these simulations provide empirical evidence that the fixed points of heterogeneous networks are stable under similar conditions to those guaranteeing the stability of the single self-excitatory rate unit discussed before.

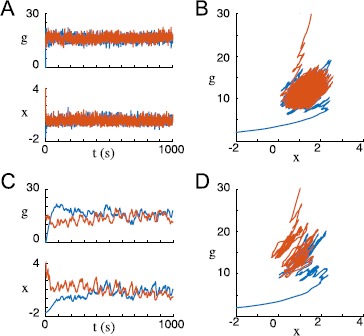

Fig. 7.

Dual homeostasis creates integrators from a single recurrently excitatory neuron and a heterogeneous excitatory network. (A) Dual homeostasis tunes a single neuron with a recurrent excitatory connection to function as an integrator from two different initial conditions (orange and blue). First row: the target rates and are plotted as a point in space. Second row: the resulting x and g trajectories are plotted in phase space and over time. Third row: before dual homeostasis, the neuron is tested for integrator-like behavior by injecting pulsatile input . The firing rate r returns to a baseline after each pulse. Fourth row: after dual homeostasis, firing rate r increases at each pulse and retains its approximate value from one pulse to the next. This neuron is an integrator: its firing rate at any time represents an integral of the pulse history. (B) Analogous plots for a heterogeneous recurrently excitatory network of 200 neurons initialized from two initial conditions (orange and blue). Top row: target firing rate pairs of all neurons are plotted in space. Note that is always chosen to be greater than . Second row: average values of g and x across the network are plotted in phase space and over time. In phase space, a representative trajectory of a single neuron in the network for each of the two simulation runs is also plotted in lighter colors. Note that these trajectories are significantly removed from the average trajectories. Third row: both times the network is initialized, the average network rate r̅ does not act as an integrator for pulsatile input. Fourth row: after dual homeostasis, the average network rate acts as an integrator

Discussion

This mathematical work is motivated by the observation that the mean firing rates of neurons are restored after chronic changes in input statistics and that this firing rate regulation is mediated by multiple slow biophysical changes [3, 5]. We explore the possibility that these changes represent the action of multiple independent slow negative feedback (“homeostatic”) mechanisms, each with its own constant “target firing rate” at which it reaches equilibrium. Specifically, we focus on a model in which the firing of an unspecified model neuron is regulated by two slow homeostatic feedbacks, which may correspond to afferent synapse strength and intrinsic excitability or any two other neuronal parameters.

In a previous work [8], we showed in numerical simulations that a pair of homeostatic mechanisms regulating a single biophysically detailed neuron can stably maintain a characteristic firing rate mean and variance for that neuron. Here, we have analytically derived mathematical conditions sufficient for any model neuron exhibiting such dual homeostasis to exhibit this behavior. Importantly, the homeostatic system reaches a fixed point when the firing rate mean and variance reach characteristic values determined by homeostasis parameters, so the mean and variance at equilibrium are independent of the details of the neuron model, including stimulus statistics. Thus, this effect can restore a characteristic firing rate mean after major changes in the neuron’s stimulus statistics, as has been observed in vivo, while at the same time restoring a characteristic firing rate variance.

In Theorem 1, we have provided expressions for the characteristic firing rate mean and variance established by a specific set of homeostatic parameters. They show that when the separation between the target rates and is appropriately small, the relative convexities of the functions and (by which the firing rate exerts its influence on the homeostatic variables) determine which target rate must be larger for a fixed point to exist. When a fixed point does exist, the characteristic firing rate variance at the fixed point is proportional to the difference between and .

In Theorem 2, we find that any fixed point of our dual homeostatic control system is stable if a specific expression is positive. This expression reflects the mutual influences of firing rate on the homeostatic control system and of control system on the firing rate mean and variance.

Both these theorems are proven under the simplifying assumption that and are constant. However, in Appendices 1 and 2, we drop this assumption and find that qualitatively similar results hold as long as these second derivatives do not vary too widely. In particular, stability is guaranteed if the expression in Theorem 2 exceeds a certain positive bound that is close to zero if and are nearly constant across most of the range of variation of the firing rate.

We have explored the implications of our results for a system with slow homeostatic regulation of intrinsic neuronal “excitability” x (an additive horizontal shift in the firing rate curve) and afferent synapse strength g. From the corollary to Theorem 1 we find that (to first approximation) stable firing rate regulation requires . Using Theorem 2, we show that for rate-based neuron models and Poisson-spiking models, stable firing rate regulation is achieved when the is sufficiently concave-up relative to .

We predict that these conditions on relative concavity and relative target firing rates should be met by any neuron with independent intrinsic and synaptic mechanisms as its primary sources of homeostatic regulation. Experimental verification of these conditions would suggest that our analysis accurately describes the interaction of a pair of homeostatic mechanisms; experimental contradiction of these conditions would suggest that the control process regulating the neuron could not be accurately described by two independent homeostatic mechanisms providing simple negative feedback to firing rate.

Our results have special implications for neuronal integrators that maintain a steady firing rate through recurrent excitation. We have found that the precise additive and multiplicative tuning necessary to maintain the delicate balance of excitatory feedback can be performed by a dual control system within the framework we study here if the target firing rates are set far enough apart to achieve a large characteristic firing rate variance. The integrator maintained by dual homeostasis is robust to variation of its target firing rates. This occurs because the circuit is best able to achieve a large firing rate variance when it is tuned to eliminate any bias toward a particular firing rate, exactly the condition necessary for integration. This robust integrator tuning scheme should be considered in the ongoing experimental effort to understand processes of integration in the brain.

Appendix 1: Generalization of Theorem 1

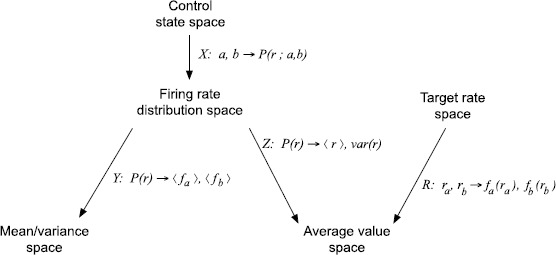

Here we present the sense in which the results of Theorem 1 persist for functions and with nonconstant second derivatives. The mappings defined below are illustrated in Fig. A1.

Fig. A1.

Schematic of mappings defined in Appendix 1

Let X denote the map from a pair to the resulting firing rate distribution (with density function ). Let Y denote the map from a distribution to its mean and variance . Let Z denote the map from a distribution to the average values of and over that distribution. Let R denote the map from a pair of firing rate targets to , which are the average values of and necessary for ȧ and ḃ to average to zero.

For a given set of target rates, the inverse image of under Z, written , is the set of all r distributions for which ȧ and ḃ average to zero. is the set of pairs that are fixed points of the averaged control system. is the set of all pairs that give rise to distributions with mean μ and variance ν. Theorem 1, which holds if and are constant, can be written as

where and are as defined in Theorem 1.

, , and the maps R and Z all change continuously in response to perturbations in and in the space. Therefore, if and are sufficiently close to constants across their domains, the points in are arbitrarily close to points in and vice versa. In other words, the fixed points of the control system all give rise to firing rate mean and variance arbitrarily close to , and the control system states that give rise to r distributions with firing rate mean and variance of are arbitrarily close to fixed points.

Appendix 2: Generalization of Theorem 2

Here we present the sense in which the results of Theorem 2 persist for functions and with nonconstant second derivatives.

The Jacobian of the averaged control system is continuous with respect to perturbations to and in . Therefore, if the determinant of the Jacobian is negative (i.e., a fixed point of the averaged system is stable) for functions and with constant second derivatives, then when these functions are perturbed in , the Jacobian is negative at the perturbed fixed point (see the previous appendix).

The persistence of fixed point stability for nonconstant and can be expressed as a generalization of Theorem 2. Rather than requiring an expression to be positive, this generalized theorem requires that the same expression exceed some nonnegative lower bound, which is close to zero if and are sufficiently close to constants over most of the support of the distribution of firing rates r.

Theorem 3

Let denote a fixed point of the averaged control system described before. We assume the following:

The functions μ and ν are differentiable at .

and are negative at , that is, on average, a and b provide negative feedback to r near .

Let and denote the firing rate mean and variance at this fixed point. Below, all derivatives with respect to a and b are evaluated at .

We define

and

The fixed point of the averaged system is stable if

| 20 |

Remark 7

is the maximal deviation of from on the ball of radius D. is the amount of variance attributable to probability mass on that ball. Note that .

Proof

We abbreviate as μ and as ν. From (11) we can use the intermediate value theorem to write

where depends on r and μ. Integrating, we have

| 21 |

We let

We now define a simpler matrix J̃ that approximates J:

where , and likewise for the other three terms in J̃. This matrix is identical to the matrix J under the assumption of constant and . Therefore, from (13) we have

We will estimate how closely the determinant of J̃ approximates that of J. From (21) we write out the expected value as an integral over a probability distribution:

Given any radius , we can split the integral into two parts, one on a ball of radius D about and one outside that ball:

Applying the intermediate value theorem to both integrals, we have

for some and some . By the definition of ,

We compare this quantity to :

This bound holds for all . Taking an infimum over all D, we have

| 22 |

Using this bound (and the equivalent bound for the other three terms of ), we set bounds on how closely the determinant of J̃ approximates the determinant of J:

From (22) we have

Substituting the definitions of , , etc., and simplifying, we get

Thus, we are guaranteed that if , that is, if

or, equivalently, if

□

Appendix 3: Simulation Parameters

Simulation parameters are given in Tables 1-3.

Table 2.

| Parameter | Meaning | Value |
|---|---|---|
| dt | Euler timestep | 0.01 s |
| | x homeostatic time constant | 500 s |
| | g homeostatic time constant | 50,000 s |
| | firing rate time constant | 0.1 s |
| | x homeostasis target firing rate | 20 |
| | g homeostasis target firing rate | 24 |
| | x homeostasis control function | |
| | g homeostasis control function | |

Table 1.

Parameter values in Figs. 1-2 (unless otherwise specified). Parameter values that differed between Figs. 1 and 2 are separated by a slash. The Matlab function ode45 was used for numerical integration of the averaged control system.

| Parameter | Meaning | Value |
|---|---|---|
| ϕ | Mean input current | 1 |
| σ | Input current variance | 0 / 0.001 |
| | x homeostatic time constant | 1 |
| | g homeostatic time constant | |
| | x homeostasis target firing rate | 2.5 |
| | g homeostasis target firing rate | 3.5 |
| | x homeostasis control function | |
| | g homeostasis control function | |

Table 3.

Parameter values in Fig. 7. Parameters that differed between Fig. 7A and Fig. 7B are separated by a slash. In Fig. 7B, for each neuron, ζ is chosen from a normal distribution with mean zero and variance 1, and γ is chosen from a uniform distribution on

| Parameter | Meaning | Value |
|---|---|---|
| N | Number of neurons in network | 1 / 200 |
| dt | Euler timestep | 0.01 s / 0.05 s |
| | x homeostatic time constant | / 5,000 s |
| | g homeostatic time constant | / |
| | firing rate time constant | 1 s |
| | x homeostasis target firing rate | 20 / 20 + ζ |
| | g homeostasis target firing rate | 21 / |
| | x homeostasis control function | |
| | g homeostasis control function | |

Appendix 4: Calcium Process Mean and Variance

Here we derive the mean and variance of a process that consists of exponentially decaying impulses arriving as a Poisson process with a rate that varies according to a stationary random input process . This process is designed to emulate the dynamics of intracellular calcium, a common sensor of neuronal firing rate, which increases sharply at each spike and decays slowly between spikes.

Let be a second-order stationary process, that is, a process for which the mean over instantiations is a constant that does not depend on t, and the delay-w autocovariance is a function that does not depend on t. Let ρ be a positive quantity initialized at zero at that increases by δ at each event of a Poisson process with rate and decays exponentially with time constant between events.

We can write

where , and is a Poisson random measure with mean measure [16]. Note that this process is doubly stochastic: is a random process, and is a random measure depending on a specific instantiation of . We will write to signify the expected value over instantiations of , and to signify the expected value given a specific instance of . According to [17], the nth moment of this process can be written

where

and

Thus, for the first moment of , we have

| 23 |

The second moment is given by

Setting , we have

where . Substituting from (23), we have

Appendix 5: Recurrent Networks with Heterogeneity

Here we show that some of our results on homogeneous recurrent excitatory networks generalize to heterogeneous networks in which each neuron has its own dual control system, target rates, and level of intrinsic noise. In particular, we show that if the neurons in such a network have target firing rates that set at least one of the characteristic variances sufficiently high, then when the combined control system reaches a fixed point, the network behaves like an integrator on short time scales. This condition is robust to variation in individual neuronal parameters, including target firing rates. Since the combined control system of a heterogeneous network with N neurons is 2N-dimensional rather than two-dimensional, we do not attempt to prove the stability of fixed points here. However, we have verified the stability of the behavior described below in simulations (data not shown, code available online).

We consider a collection of N neurons with firing rates connected all-to-all by excitatory synapses. Each neuron n has its own homeostatic variables and , its own extrinsic second-order stationary synaptic input with mean and autocovariance , its own intrinsic noise (where is a white noise process with unit variance), a second-order stationary synaptic input with mean and autocovariance shared by all neurons, and excitatory synaptic input from all other neurons, which together determine the firing rate as in the rate models above. Each neuron n has its own target firing rates and . Each neuron n delivers an additional excitatory synaptic input to each other neuron.

We write the rate model for neuron n in the form

Here we have rescaled time in order to ignore the time constant . We can take expected values of both sides to describe the expected firing rate at time t, :

| 24 |

Let denote the deviation of rate n from its expected value: . Taking the difference of the last two equations, we have

| 25 |

Let denote the average network firing rate at time t. The expected value of is . Let denote the deviation of from its expected value. Taking the average over n in (25), we have

| 26 |

Let . For , is a mean-zero OU process. If we set , then the term drops out, and we can solve for :

| 27 |

In this case, the mean network firing rate acts as a perfect integrator of all of the network’s input fluctuations and noise. The closer g̅ is to 1, the more closely the mean network firing rate acts like an integrator on short time scales.
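The integrator behavior can be illustrated with a minimal numerical sketch. It assumes, purely for illustration, that the deviation of the mean network rate obeys a linear equation with leak rate (1 - g̅) and an additive drive; this reduced form, the function name `simulate_mean_rate_deviation`, and the pulse input are assumptions made here and are not taken from the derivation above.

```python
import numpy as np

def simulate_mean_rate_deviation(g_bar, drive, dt=1e-3):
    """Euler-integrate d(rho)/dt = -(1 - g_bar) * rho + drive(t).

    rho stands in for the deviation of the mean network rate (assumed form).
    """
    drive = np.asarray(drive, dtype=float)
    rho = np.zeros(len(drive) + 1)
    for k, u in enumerate(drive):
        rho[k + 1] = rho[k] + dt * (-(1.0 - g_bar) * rho[k] + u)
    return rho

dt = 1e-3
pulse = np.zeros(5000)
pulse[:1000] = 1.0  # unit drive for one time unit, then silence

perfect = simulate_mean_rate_deviation(1.0, pulse, dt)  # leak term vanishes
leaky = simulate_mean_rate_deviation(0.5, pulse, dt)    # leak rate 0.5

print("g_bar = 1.0: value after pulse", perfect[1000], "final value", perfect[-1])
print("g_bar = 0.5: value after pulse", leaky[1000], "final value", leaky[-1])
```

With g̅ = 1 the trace holds the accumulated input after the pulse ends, whereas with g̅ = 0.5 it decays back toward zero. Values of g̅ just below 1 give a slow decay that is hard to distinguish from perfect integration on short time scales.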

For , the vector of rates is a linearly filtered second-order stationary random process and therefore approaches a stationary mean and variance. Using (25), we write a recursive equation for the covariance of and out to :

where and are constants depending on the input autocovariance functions, and is 1 if and zero if . Once all rates have reached a stationary joint distribution, we should have , so from the last equation we have

Thus, the stationary covariances are related by the equation

| 28 |

Summing this expression over n and m, we have

| 29 |

We set . Solving for Ω, we have

| 30 |

We sum over m in (28) and write the result in terms of Ω:

| 31 |

We set and solve for :

| 32 |

Finally, from (28) we have

| 33 |

where g is the vector of all .

Suppose that target firing rates are set such that each neuron n has characteristic variance . Then at any fixed point of the combined control system, we have for all n. It is easy to check that is at , that it increases with any with respect to any on the domain , and that it asymptotes to infinity as g̅ approaches 1. Thus, for sufficiently large , the constraint can be solved on the domain, and any solution must be close to the hypersurface g̅ = 1. As discussed before, the system acts as an integrator on short time scales when g̅ is close to 1; thus, if any one or more of the characteristic variances are sufficiently large, then at a fixed point of the combined control system, the mean network firing rate acts as an integrator on short time scales.
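A one-dimensional stand-in may help make this argument concrete. The sketch below does not use the network variance expression derived above; it assumes instead a scalar Ornstein-Uhlenbeck process with leak rate (1 - g̅) and noise amplitude sigma, whose stationary variance is sigma^2 / (2(1 - g̅)). The function names and parameter values are hypothetical.

```python
def stationary_variance(g_bar, sigma=1.0):
    """Stationary variance of dx = -(1 - g_bar) x dt + sigma dW (needs g_bar < 1)."""
    if not g_bar < 1.0:
        raise ValueError("a stationary variance exists only for g_bar < 1")
    return sigma**2 / (2.0 * (1.0 - g_bar))

def gain_for_variance(target_variance, sigma=1.0):
    """Invert stationary_variance: the gain g_bar that yields the target variance."""
    if target_variance < stationary_variance(0.0, sigma):
        raise ValueError("target variance lies below the value at g_bar = 0")
    return 1.0 - sigma**2 / (2.0 * target_variance)

# Larger target variances force the gain toward 1 (the integrator regime).
for v in (0.5, 5.0, 50.0, 500.0):
    print(f"target variance {v:7.1f} -> g_bar = {gain_for_variance(v):.4f}")
```

In this toy setting the variance is finite at g̅ = 0, increases with g̅, and diverges as g̅ approaches 1, so a sufficiently large target variance can only be met by a gain close to 1.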

We can intuitively see that if only one neuron, neuron n, has a high characteristic variance, then it acts like the single-neuron integrator as approaches N, and neuron n dominates the global feedback signal. This scenario is not particularly biophysically interesting. However, if many neurons have high characteristic variance, then no individual neuron will dominate the feedback signal. In the special case that all neuron parameters are identical, a symmetry argument shows that will be close to 1 for all n. No single synapse is strong enough to make a single neuron an integrator, but the fluctuations in network firing rate cause correlated fluctuations in the individual firing rates, which collectively reinforce the network rate fluctuations, causing the network as a whole to act as an integrator.

By the corollary of Theorem 1, the characteristic variance of a neuron is proportionate to the difference between target firing rates as long as this difference remains small on the scale of the convexity of and ; so if and are small, then a large characteristic variance can be achieved by setting target firing rates sufficiently far apart, and perturbations to this difference cause proportionate perturbations in characteristic variance. If the characteristic variances are sufficiently large to make the network an integrator, then they will remain sufficiently large even in the face of such perturbations. We conclude that a network integrator stabilized by dual homeostasis is robust to perturbations in the target firing rates and .

Footnotes

Declarations

Code mentioned in the manuscript will be made available on Figshare.

Competing Interests

The authors declare that they have no competing interests.

Authors’ Contributions

The main idea of this paper was proposed by PM. JC prepared the manuscript initially and performed all the steps of the proofs in this research. All authors read and approved the final manuscript.

Contributor Information

Jonathan Cannon, Email: cannon@brandeis.edu.

Paul Miller, Email: pmiller@brandeis.edu.

References

- 1. Cannon WB. Physiological regulation of normal states: some tentative postulates concerning biological homeostasis. In: Langley LL, editor. Homeostasis: origins of the concept. Stroudsburg: Dowden, Hutchinson and Ross; 1973.

- 2. O’Leary T, Wyllie DJA. Neuronal homeostasis: time for a change? J Physiol. 2011;589(20):4811–4826. doi: 10.1113/jphysiol.2011.210179.

- 3. Desai NS. Homeostatic plasticity in the CNS: synaptic and intrinsic forms. J Physiol (Paris) 2004;97:391–402. doi: 10.1016/j.jphysparis.2004.01.005.

- 4. Turrigiano GG. The self-tuning neuron: synaptic scaling of excitatory synapses. Cell. 2008;135(3):422–435. doi: 10.1016/j.cell.2008.10.008.

- 5. Maffei A, Turrigiano GG. Multiple modes of network homeostasis in visual cortical layer 2/3. J Neurosci. 2008;28(17):4377–4384. doi: 10.1523/JNEUROSCI.5298-07.2008.

- 6. Turrigiano G, Abbott LF, Marder E. Activity-dependent changes in the intrinsic properties of cultured neurons. Science. 1994;264(5161):974–977. doi: 10.1126/science.8178157.

- 7. Desai NS, Rutherford LC, Turrigiano GG. Plasticity in the intrinsic excitability of cortical pyramidal neurons. Nat Neurosci. 1999;2(6):515–520. doi: 10.1038/9165.

- 8. Cannon J, Miller P. Synaptic and intrinsic homeostasis cooperate to optimize single neuron response properties and tune integrator circuits. J Neurophysiol. 2016;116(5):2004–2022. doi: 10.1152/jn.00253.2016.

- 9. Cannon SC, Robinson DA, Shamma S. A proposed neural network for the integrator of the oculomotor system. Biol Cybern. 1983;49(2):127–136. doi: 10.1007/BF00320393.

- 10. Seung HS, Lee DD, Reis BY, Tank DW. Stability of the memory of eye position in a recurrent network of conductance-based model neurons. Neuron. 2000;26:259–271. doi: 10.1016/S0896-6273(00)81155-1.

- 11. Major G, Baker R, Aksay E, Seung HS, Tank DW. Plasticity and tuning of the time course of analog persistent firing in a neural integrator. Proc Natl Acad Sci USA. 2004;101(20):7745–7750. doi: 10.1073/pnas.0401992101.

- 12. Lim S, Goldman MS. Balanced cortical microcircuitry for maintaining information in working memory. Nat Neurosci. 2013;16(9):1306–1314. doi: 10.1038/nn.3492.

- 13. Goldman MS. Robust persistent neural activity in a model integrator with multiple hysteretic dendrites per neuron. Cereb Cortex. 2003;13(11):1185–1195. doi: 10.1093/cercor/bhg095.

- 14. Koulakov AA, Raghavachari S, Kepecs A, Lisman JE. Model for a robust neural integrator. Nat Neurosci. 2002;5(8):775–782. doi: 10.1038/nn893.

- 15. Khalil HK. Nonlinear systems. 3rd ed. New York: Prentice Hall; 2002.

- 16. Çınlar E. Probability and stochastics. New York: Springer; 2011.

- 17. Bassan B, Bona E. Moments of stochastic processes governed by Poisson random measures. Comment Math Univ Carol. 1990;31(2):337–343.