Abstract

How do the hippocampus and amygdala interact with thalamocortical systems to regulate cognitive and cognitive-emotional learning? Why do lesions of thalamus, amygdala, hippocampus, and cortex have differential effects depending on the phase of learning when they occur? In particular, why is the hippocampus typically needed for trace conditioning, but not delay conditioning, and what do the exceptions reveal? Why do amygdala lesions made before or immediately after training decelerate conditioning while those made later do not? Why do thalamic or sensory cortical lesions degrade trace conditioning more than delay conditioning? Why do hippocampal lesions during trace conditioning experiments degrade recent but not temporally remote learning? Why do orbitofrontal cortical lesions degrade temporally remote but not recent or post-lesion learning? How is temporally graded amnesia caused by ablation of prefrontal cortex after memory consolidation? How are attention and consciousness linked during conditioning? How do neurotrophins, notably brain-derived neurotrophic factor (BDNF), influence memory formation and consolidation? Is there a common output path for learned performance? A neural model proposes a unified answer to these questions that overcomes problems of alternative memory models.

Keywords: Cognitive-emotional learning, Conditioning, Memory consolidation, Amnesia, Hippocampus, Amygdala, Pontine nuclei, Adaptive timing, Time cells, BDNF

Overview and scope

The roles and interactions of amygdala, hippocampus, thalamus, and neocortex in cognitive and cognitive-emotional learning, memory, and consciousness have been extensively investigated through experimental and clinical studies (Berger & Thompson, 1978; Clark, Manns, & Squire, 2001; Frankland & Bontempi, 2005; Kim, Clark, & Thompson, 1995; Lee & Kim, 2004; Mauk & Thompson, 1987; Moustafa et al., 2013; Port, Romano, Steinmetz, Mikhail, & Patterson, 1986; Powell & Churchwell, 2002; Smith, 1968; Takehara, Kawahara, & Krino, 2003). This article develops a neural model aimed at providing a unified explanation of challenging data about how these brain regions interact during normal learning, and how lesions may cause specific learning and behavioral deficits, including amnesia. The model also makes testable predictions that can be used to evaluate its explanations. The most relevant experiments use the paradigm of classical conditioning, notably delay conditioning and trace conditioning during the eyeblink conditioning task that is often used to explicate basic properties of associative learning. Earlier versions of this work were briefly presented in Franklin and Grossberg (2005, 2008).

Eyeblink conditioning has been extensively studied because it has disclosed behavioral, neurophysiological, and anatomical information about the learning and memory processes related to adaptively timed, conditioned responses to aversive stimuli, as measured by eyelid movements in mice (Chen et al., 1995), rats (Clark, Broadbent, Zola, & Squire, 2002; Neufeld & Mintz, 2001; Schmajuk, Lam, & Christiansen, 1994), monkeys (Clark & Zola, 1998), and humans (Clark, Manns, & Squire, 2001; Solomon et al., 1990), and by the timing and amplitude of the nictitating membrane reflex (NMR), which involves a nictitating membrane that covers the eye like an eyelid in cats (Norman et al., 1974), rabbits (Berger & Thompson, 1978; Christian & Thompson, 2003; McLaughlin, Skaggs, Churchwell, & Powell, 2002; Port, Mikhail, & Patterson, 1985; Port et al., 1986; Powell & Churchwell, 2002; Powell, Skaggs, Churchwell, & McLaughlin, 2001; Solomon et al., 1990), and other animals. Eyeblink/NMR conditioning data will herein be used to help formulate and answer basic questions about associative learning, adaptive timing, and memory consolidation.

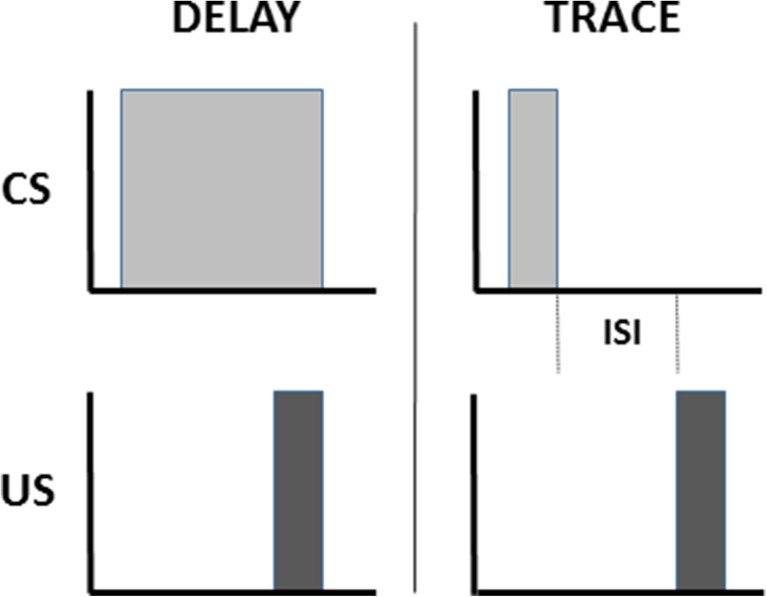

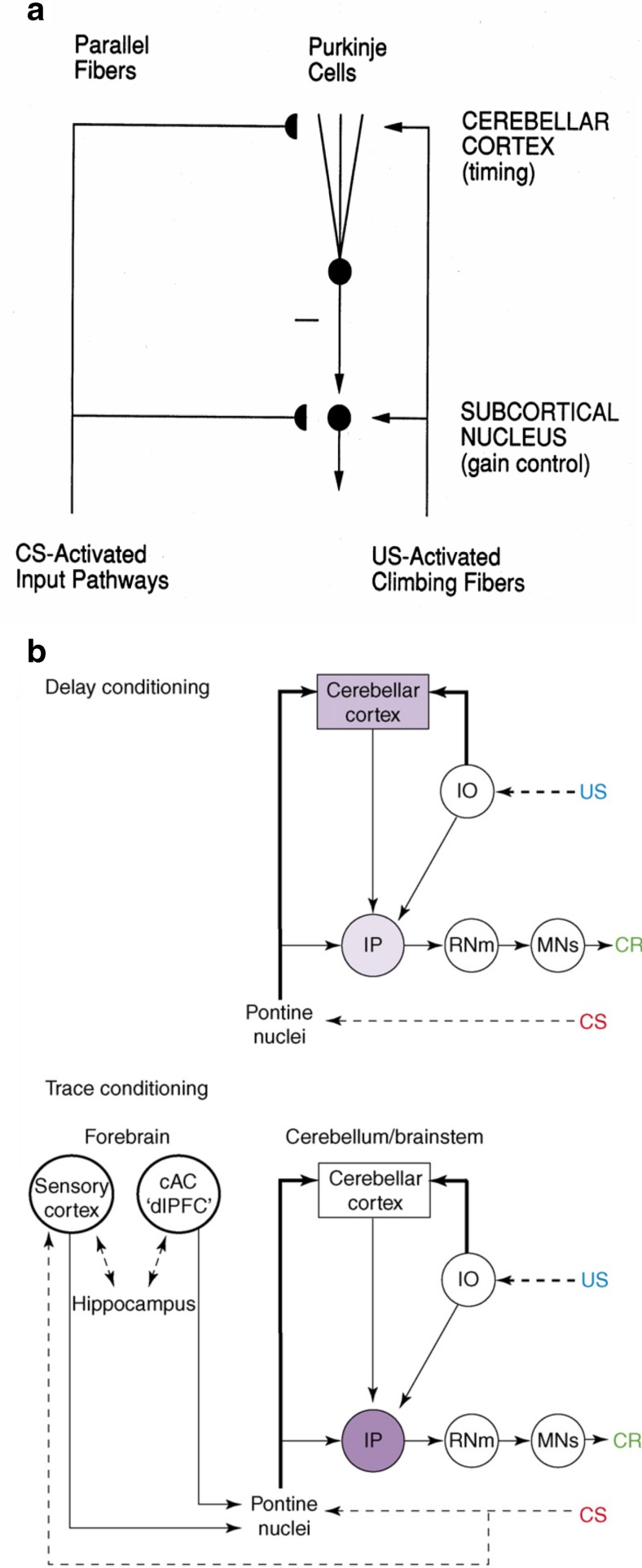

Classical conditioning involves learning associations between objects or events. Eyeblink conditioning associates a neutral event, such as a tone or a light, called the conditioned stimulus (CS), with an emotionally-charged, reflex-inducing event, such as a puff of air to the eye or a shock to the periorbital area, called the unconditioned stimulus (US). Delay conditioning occurs when the stimulus events temporally overlap so that the subject learns to make a conditioned response (CR) in anticipation of the US (Fig. 1). Trace conditioning involves a temporal gap between CS offset and US onset such that a CS-activated memory trace is required during the inter-stimulus interval (ISI) in order to establish an adaptively timed association between CS and US that leads to a successful CR (Pavlov, 1927).

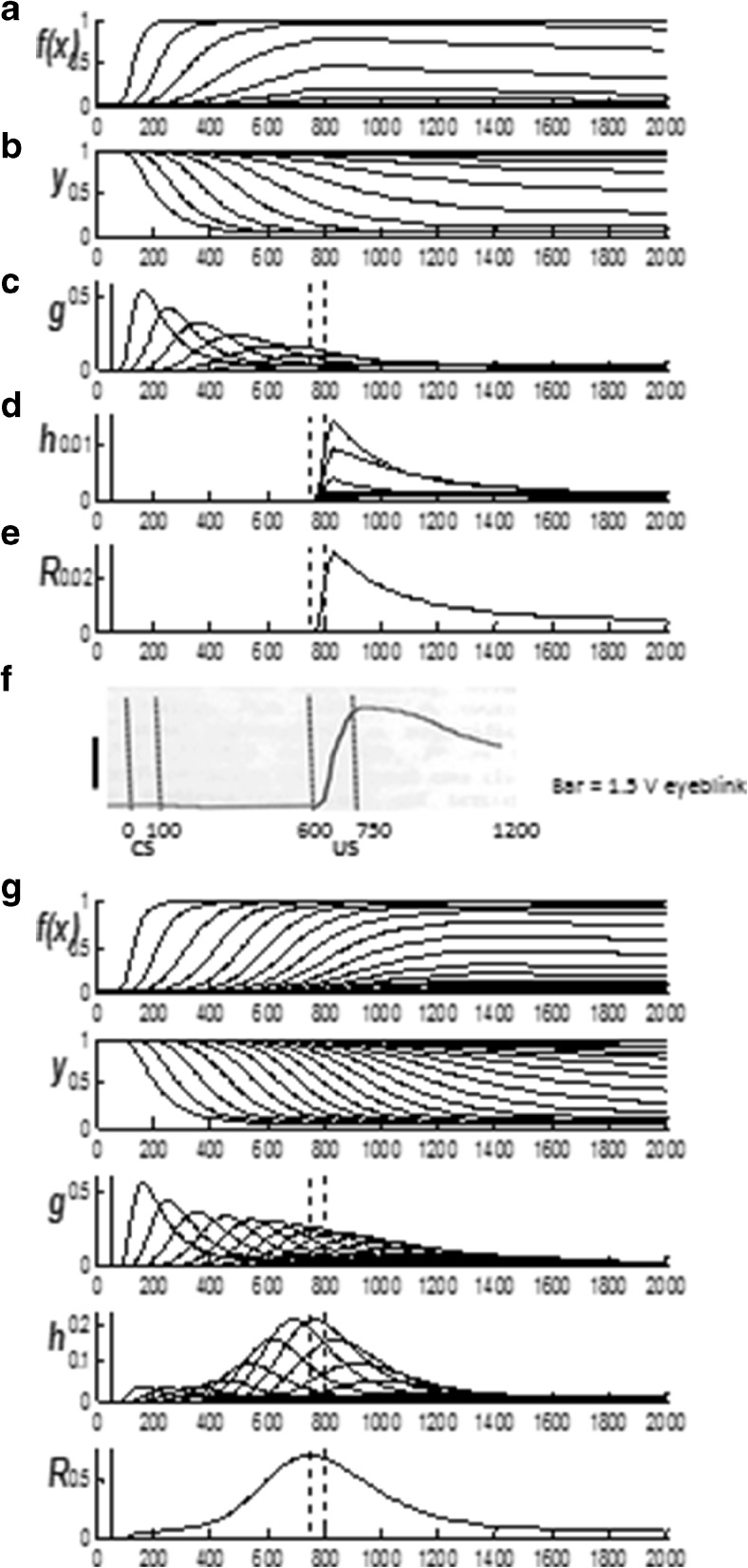

Fig. 1.

Eyeblink conditioning associates a neutral event, called the conditioned stimulus (CS), with an emotionally-charged, reflex-inducing event, called the unconditioned stimulus (US). Delay conditioning occurs when the stimulus events temporally overlap. Trace conditioning involves a temporal gap between CS offset and US onset such that a CS-activated memory trace is required during the inter-stimulus interval (ISI) in order to establish an association between CS and US. After either normal delay or trace conditioning, with a range of stimulus durations and ISIs, a conditioned response (CR) is performed in anticipation of the US.
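The distinction between the two paradigms depends only on the relative timing of CS offset and US onset, which can be made concrete with a minimal sketch. The function names, the millisecond units, and the example timings below are illustrative assumptions and are not taken from any of the cited experiments.

```python
def classify_paradigm(cs_onset_ms, cs_offset_ms, us_onset_ms):
    """Label a trial 'delay' or 'trace' from its stimulus timing (all in ms)."""
    if not cs_onset_ms < cs_offset_ms:
        raise ValueError("CS offset must follow CS onset")
    if us_onset_ms <= cs_onset_ms:
        raise ValueError("US onset must follow CS onset")
    # A positive stimulus-free gap between CS offset and US onset means trace.
    # Abutting stimuli (gap of exactly zero) are treated as delay here, by assumption.
    return "trace" if us_onset_ms > cs_offset_ms else "delay"


def stimulus_free_gap_ms(cs_offset_ms, us_onset_ms):
    """Interval a CS-activated memory trace must bridge; zero when stimuli overlap."""
    return max(0, us_onset_ms - cs_offset_ms)


# Hypothetical timings, chosen only for illustration.
DELAY_TRIAL = {"cs_onset_ms": 0, "cs_offset_ms": 400, "us_onset_ms": 350}
TRACE_TRIAL = {"cs_onset_ms": 0, "cs_offset_ms": 250, "us_onset_ms": 750}

for name, trial in (("delay example", DELAY_TRIAL), ("trace example", TRACE_TRIAL)):
    gap = stimulus_free_gap_ms(trial["cs_offset_ms"], trial["us_onset_ms"])
    print(name, classify_paradigm(**trial), gap)
```

In the hypothetical trace trial, the CS ends before the US begins, so any association must be formed across the stimulus-free gap; in the delay trial the gap is zero because the stimuli overlap.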

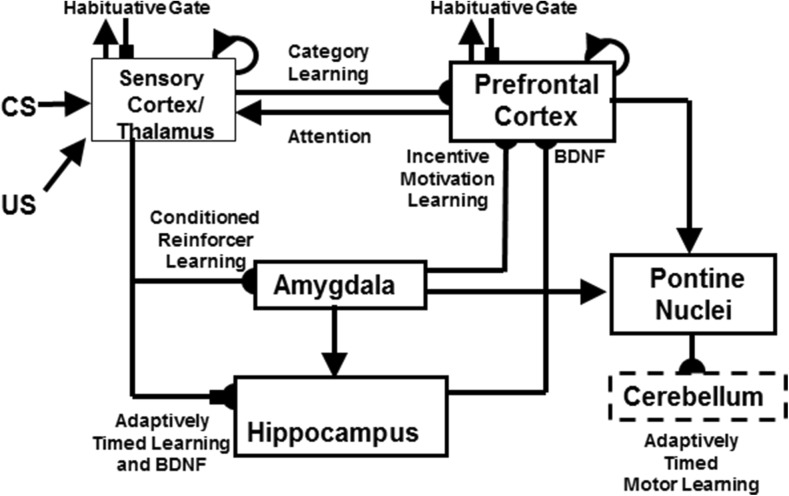

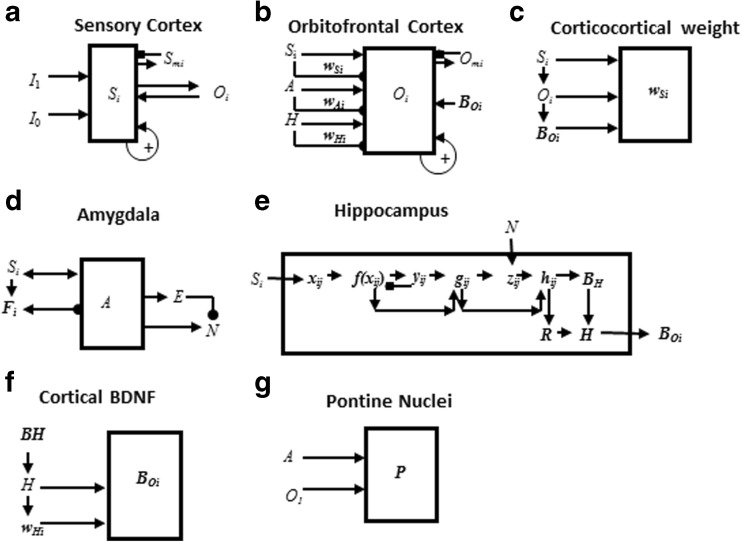

Multiple brain areas are involved in eyeblink conditioning. Many of these regions, and their interactions, are simulated in the current neural model (Fig. 2). Sensory input comes into the cortex, and the model, by way of the thalamus. Since the US is an aversive stimulus, the amygdala is involved (Büchel, Dolan, Armony, & Friston, 1999; Lee & Kim, 2004). The hippocampus plays a role in new learning, in general (Frankland & Bontempi, 2005; Kim, Clark, & Thompson, 1995; Takehara et al., 2003) and in adaptively timed learning, in particular (Büchel et al., 1999; Green & Woodruff-Pak, 2000; Kaneko & Thompson, 1997; Port et al., 1986; Smith, 1968). The prefrontal cortex plays an essential role in the consolidation of long-term memory (Frankland & Bontempi, 2005; Takehara, Kawahara, & Krino, 2003; Winocur, Moscovitch, & Bontempi, 2010). Lesions of the amygdala, hippocampus, thalamus, and neocortex have different effects depending on the phase of learning when they occur.

Fig. 2.

The neurotrophic START, or nSTART, macrocircuit is formed from parallel and interconnected networks that support both delay and trace conditioning. Connectivity between thalamus and sensory cortex includes pathways from the amygdala and hippocampus, as does connectivity between sensory cortex and prefrontal cortex, specifically orbitofrontal cortex. These circuits are homologous. Hence the current model lumps the thalamus and sensory cortex together and simulates only sensory cortical dynamics. Multiple types of learning and neurotrophic mechanisms of memory consolidation cooperate in these circuits to generate adaptively timed responses. Connections from sensory cortex to orbitofrontal cortex support category learning. Reciprocal connections from orbitofrontal cortex to sensory cortex support attention. Habituative transmitter gates modulate excitatory conductances at all processing stages. Connections from sensory cortex to amygdala support conditioned reinforcer learning. Connections from amygdala to orbitofrontal cortex support incentive motivation learning. Hippocampal adaptive timing and brain-derived neurotrophic factor (BDNF) bridge temporal delays between conditioned stimulus (CS) offset and unconditioned stimulus (US) onset during trace conditioning acquisition. BDNF also supports long-term memory consolidation within sensory cortex to hippocampal pathways and from hippocampal to orbitofrontal pathways. The pontine nuclei serve as a final common pathway for reading out conditioned responses. Cerebellar dynamics are not simulated in nSTART. Key: arrowhead = excitatory synapse; hemidisc = adaptive weight; square = habituative transmitter gate; square followed by a hemidisc = habituative transmitter gate followed by an adaptive weight

In particular, the model clarifies why the hippocampus is needed for trace conditioning, but not delay conditioning (Büchel et al., 1999; Frankland & Bontempi, 2005; Green & Woodruff-Pak, 2000; Kaneko & Thompson, 1997; Kim, Clark, & Thompson, 1995; Port et al., 1986; Takehara, Kawahara, & Krino, 2003); why thalamic lesions retard the acquisition of trace conditioning (Powell & Churchwell, 2002), but have less of a statistically significant effect on delay conditioning (Buchanan & Thompson, 1990); why early but not late amygdala lesions degrade both delay conditioning (Lee & Kim, 2004) and trace conditioning (Büchel et al., 1999); why hippocampal lesions degrade recent but not temporally remote trace conditioning (Kim et al., 1995; Takehara et al., 2003); why, in delay conditioning, such lesions typically have no negative impact on CR performance, although this finding may vary with experimental preparation and CR success criteria (Berger, 1984; Chen et al., 1995; Lee & Kim, 2004; Port, 1985; Shors, 1992; Moustafa et al., 2013); why cortical lesions degrade temporally remote but not recent trace conditioning, but have no impact on the acquisition of delay conditioning (Frankland & Bontempi, 2005; Kronforst-Collins & Disterhoft, 1998; McLaughlin et al., 2002; Takehara et al., 2003; see also Oakley & Steele Russell, 1972; Yeo, Hardiman, Moore, & Steele Russell, 1984); how temporally-graded amnesia may be caused by ablation of the medial prefrontal cortex after memory consolidation (Simon, Knuckley, Churchwell, & Powell, 2005; Takehara et al., 2003; Weible, McEchron, & Disterhoft, 2000); how attention and consciousness are linked during delay and trace conditioning (Clark, Manns, & Squire, 2002; Clark & Squire, 1998, 2010); and how neurotrophins, notably brain-derived neurotrophic factor (BDNF), influence memory formation and consolidation (Kokaia et al., 1993; Tyler et al., 2002).

The article does not attempt to explain all aspects of memory consolidation, although its proposed explanations may help to do so in future studies. One reason for this is that the prefrontal cortex and hippocampus, which figure prominently in model explanations, carry out multiple functions (see section ‘Clinical relevance of BDNF’). The model only attempts to explain how an interacting subset of these mechanisms contribute to conditioning and memory consolidation. Not considered, for example, are sequence-dependent learning, which depends on prefrontal working memories and list chunking dynamics (cf. compatible models for such processes in Grossberg & Kazerounian, 2016; Grossberg & Pearson, 2008; and Silver et al., 2011), or spatial navigation, which depends upon entorhinal grid cells and hippocampal place cells (cf. compatible models in Grossberg & Pilly, 2014; Pilly & Grossberg, 2012). In addition, the model does not attempt to simulate properties such as hippocampal replay, which require an analysis of sequence-dependent learning, including spatial navigation, for their consideration, or finer neurophysiological properties such as the role of sleep, sharp wave ripples, and spindles in memory consolidation (see Albouy, King, Maquet, & Doyon, 2013, for a review).

Data about brain activity during sleep provide further evidence about learning processes that support memory consolidation. These processes begin with awake experience and may continue during sleep, when there are no external stimuli that support learning (Kali & Dayan, 2004; Wilson, 2002). The activity generated during waking in the hippocampus is reproduced in sequence during rapid eye movement (REM) sleep with the same time scale as the original experiences, lasting tens of seconds to minutes (Louie & Wilson, 2001), or is compressed during slow-wave sleep (Nádasdy et al., 1999). During sleep, slow waves appear to be initiated in hippocampal CA3 (Siapas, Lubenov, & Wilson, 2005; Wilson & McNaughton, 1994), and hippocampal place cells tend to fire as though neuronal states were being played back in their previously experienced sequence as part of the memory consolidation process (Ji & Wilson, 2007; Qin, McNaughton, Skaggs, & Barnes, 1997; Skaggs & McNaughton, 1996; Steriade, 1999; Wilson & McNaughton, 1994). Relevant to the nSTART analysis are the facts that, during sleep, the interaction of hippocampal cells with cortex leads to neurotrophic expression (Hobson & Pace-Schott, 2002; Monteggia et al., 2004), and that similar sequential, self-organizing ensembles that are based on experience may also exist in various areas of the neocortex (Ji & Wilson, 2007; Maquet et al., 2000; cf. Deadwyler, West, & Robinson, 1981; Schoenbaum & Eichenbaum, 1995). With the nSTART analyses of neurotrophically-modulated memory consolidation as a foundation, these sleep- and sequence-dependent processes, which require substantial additional model development, can be more easily understood.

Unifying three basic competences

The model reconciles three basic behavioral competences. Its explanatory power is illustrated by the fact that these basic competences are self-evident, but the above data properties are not. All three competences involve the brain’s ability to adaptively time its learning processes in a task-appropriate manner.

First, the brain needs to pay attention quickly to salient events, both positive and negative. However, such a rapid attention shift to focus on a salient event creates the risk of prematurely responding to that event, or of prematurely resetting and shifting the attentional focus to a different event before the response to that event could be fully executed. As explained below, this fast motivated attention pathway includes the amygdala. These potential problems of a fast motivated attention shift are alleviated by the second and third competences.

Second, the brain needs to be able to adaptively time and maintain motivated attention on a salient event until an appropriate response is executed. The ability to maintain motivated attention for an adaptively timed interval on the salient event involves the hippocampus, notably its dentate-CA3 region (Berger, Clark, & Thompson, 1980). Recent data have further developed this theme through the discovery of hippocampal “time cells” (Kraus et al., 2013; MacDonald et al., 2011).

Third, the brain needs to be able to adaptively time and execute an appropriate response to the salient event. The ability to execute an adaptively timed behavioral response always involves the cerebellum (Christian & Thompson, 2003; Fiala, Grossberg, & Bullock, 1996; Green & Woodruff-Pak, 2000; Ito, 1984). When the timing contingencies involve a relatively long trace conditioning ISI, or the onset of the US in delay conditioning is sufficiently delayed, then the hippocampus may also be required due to higher cognitive demand (Beylin, Gandhi, Wood, Talk, Matzel, & Shors, 2001).

How the brain may realize these three competences, along with data supporting these hypotheses, has been described in articles about the Spectrally Timed Adaptive Resonance Theory (START) model of Grossberg & Merrill (1992, 1996). A variation of the START model in which several of its mechanisms are out of balance is called the Imbalanced START, or iSTART, model that has been used to describe possible neural mechanisms of autism (Grossberg & Seidman, 2006). START mechanisms have also been used to offer mechanistic explanations of various symptoms of schizophrenia (Grossberg, 2000b). The current neurotrophic START, or nSTART, model builds upon this foundation. The nSTART model further develops the START model to refine the anatomical interactions that are described in START, to clarify how adaptively timed learning and memory consolidation depend upon neurotrophins acting within several of these anatomical interactions, and to explain using this expanded model how various brain lesions to areas involved in eyeblink conditioning may cause abnormal learning and memory.

nSTART model of adaptively timed eyeblink conditioning

Neural pathways that support the conditioned eyeblink response involve various hierarchical and parallel circuits (Thompson, 1988; Woodruff-Pak & Steinmetz, 2000a, 2000b). The nSTART macrocircuit (Fig. 2) simulates key processes that exist within the wider network that supports the eyeblink response in vivo and highlights circuitry required for adaptively timed trace conditioning. Thalamus and sensory cortex are lumped into one sensory cortical representation for representational simplicity. However, the exposition of the model and its output pathways will require discussion of independent thalamocortical and corticocortical pathways. Different experimental manipulations affect brain regions like the thalamus, cortex, amygdala, and hippocampus in different ways. Our model computer simulations illustrate these differences. In addition, it is important to explain how these several individual responses of different brain regions contribute to a final common path, the activity of which covaries with observed conditioned responses. Outputs from these brain regions meet directly or indirectly at the pontine nucleus, the final common bridge to the cerebellum, which generates the CR (Freeman & Muckler, 2003; Kalmbach et al., 2009a, b; Siegel et al., 2012; Woodruff-Pak & Disterhoft, 2007). Simulations of how the model pontine nucleus responds to the aggregate effect of all the other brain regions are thus also provided. The internal dynamics of the cerebellum are not, however, simulated in the nSTART model; but see Fiala, Grossberg, and Bullock (1996) for a detailed cerebellar learning model that simulates how Ca++ can modulate mGluR dynamics to adaptively time responses across long ISIs.

Normal and amnesic delay conditioning and trace conditioning

The ability to associatively learn what subset of earlier events predicts, or causes, later consequences, and what event combinations are not predictive, is a critical survival competence in normal adaptive behavior. In this section, data are highlighted that describe the differences between the normal and abnormal acquisition and retention of associative learning relative to the specific role of interactions among the processing areas in nSTART’s functional anatomy; notably, interactions between sensory cortex and thalamus, prefrontal cortex, amygdala, and hippocampus. See ‘Methods,’ for an exposition of design principles and heuristic modeling concepts that go into the nSTART model; ‘Model description,’ for a non-technical exposition of the model processes and their interactions; ‘Results,’ for model simulations of data; ‘Discussion,’ for a general summary; and ‘Mathematical Equations and Parameters,’ for a complete summary of the model mechanisms.

Lesion data show that delay conditioning requires the cerebellum but does not need the hippocampus to acquire an adaptively timed conditioned response. Studies of hippocampal lesions in rats, rabbits, and humans reveal that, if a lesion occurs before delay conditioning (Daum, Schugens, Breitenstein, Topka, & Spieker, 1996; Ivkovich & Thompson, 1997; Schmaltz & Theios, 1972; Solomon & Moore, 1975; Weiskrantz & Warrington, 1979), or any time after delay conditioning (Akase, Alkon, & Disterhoft, 1989; Orr & Berger, 1985; Port et al., 1986), the subject can still acquire or retain a CR. Depending on the performance criteria, sometimes the acquisition is reported as facilitated (Berger, 1984; Chen et al., 1995; Lee & Kim, 2004; Port, 1985; Shors, 1992).

Lee and Kim (2004) presented electromyography (EMG) data showing that amygdala lesions in rats decelerated delay conditioning if made prior to training, but not if made post-training, while hippocampal lesions accelerated delay conditioning if made prior to training. They found a time-limited role of the amygdala similar to the time-limited role of the hippocampus: The amygdala is more active during early acquisition than later. In addition, they found that the amygdala without the hippocampus is not sufficient for trace conditioning. During functional magnetic resonance imaging (fMRI) studies of human trace conditioning, Büchel et al. (1999) also found decreases in amygdala responses over time. They cited other fMRI studies that found robust hippocampal activity in trace conditioning, but not delay conditioning, to underscore their hypothesis that, while the amygdala may contribute to trace conditioning, the hippocampus is required. Chau and Galvez (2012) discussed the likelihood of the same time-limited involvement of the amygdala in trace eyeblink conditioning.

Holland and Gallagher (1999) reviewed literature describing the role of the amygdala as either modulatory or required, depending on specific connections with other brain systems, for normal “functions often characterized as attention, reinforcement and representation” (p. 66). Aggleton and Saunders (2000) described the amygdala in terms of four functional systems (accessory olfactory, main olfactory, autonomic, and frontotemporal). In the macaque monkey, ten interconnected cytotonic areas were defined within the amygdala, with 15 types of cortical inputs and 17 types of cortical projections, and 22 types of subcortical inputs from the amygdala and 15 types of subcortical projections to the amygdala (their Figs. 1.2–1.7, pp. 4–9). Given this complexity, the data are mixed about whether the amygdala is required for acquisition, or retention after consolidation, depending on the cause (cytotoxin, acid or electronic burning, cutting), target area, and degree of lesion, as well as the strength of the US, learning paradigm, and specific task (Blair, Sotres-Bayon, Moiya, & LeDoux, 2005; Cahill & McGaugh, 1990; Everitt, Cardinal, Hall, Parkinson, & Robbins, 2000; Kapp, Wilson, Pascoe, Supple, & Whalen, 1990; Killcross, Everitt, & Robbins, 1997; Lehmann, Treit, & Parent, 2000; Medina, Repa, Mauk, & LeDoux, 2002; Neufeld & Mintz, 2001; Oswald, Maddox, Tisdale, & Powell, 2010; Vazdarjanova & McGaugh, 1998). In fact, "…aversive eyeblink conditioning…survives lesions of either the central or basolateral parts of the amygdala" (Thompson et al., 1987). Additionally, such lesions have been found not to prevent Pavlovian appetitive conditioning or other types of appetitively-based learning (McGaugh, 2002, p. 456).

These inconsistencies among the data may exist due to the contributions from multiple pathways that support emotion. For example, within the MOTIVATOR model extension of the CogEM model (see below), hypothalamic and related internal homeostatic and drive circuits may function without amygdala (Dranias et al., 2008). The nSTART model only incorporates an afferent cortical connection from the amygdala to represent incentive motivational learning signals. Within the cortex, however, the excitatory inputs from both the amygdala and hippocampus are modulated by the strength of thalamocortical signals.

A clear pattern emerges from comparing various data that disclose essential functions of the hippocampus, functions that are qualitatively simulated in nSTART. The hippocampus has been studied with regard to the acquisition of trace eyeblink conditioning, and the adaptive timing of conditioned responses (Berger, Laham, & Thompson, 1980; Mauk & Ruiz, 1992; Schmaltz & Theios, 1972; Sears & Steinmetz, 1990; Woodruff-Pak, 1993; Woodruff-Pak & Disterhoft, 2007). If a hippocampal lesion or other system disruption occurs before trace conditioning acquisition (Ivkovich & Thompson, 1997; Kaneko & Thompson, 1997; Weiss & Thompson, 1991b; Woodruff-Pak, 2001), or shortly thereafter (Kim et al., 1995; Moyer, Deyo, & Disterhoft, 1990; Takehara et al., 2003), the CR is not obtained or retained. Trace conditioning is impaired by pre-acquisition hippocampal lesions created during laboratory experimentation on animals (Anagnostaras, Maren, & Fanselow, 1999; Berry & Thompson, 1979; Garrud et al., 1984; James, Hardiman, & Yeo, 1987; Kim et al., 1995; Orr & Berger, 1985; Schmajuk, Lam, & Christiansen, 1994; Schmaltz & Theios, 1972; Solomon & Moore, 1975), and in humans with amnesia (Clark & Squire, 1998; Gabrieli et al., 1995; McGlinchey-Berroth, Carrillo, Gabrieli, Brawn, & Disterhoft, 1997), Alzheimer’s disease, or age-related deficits (Little, Lipsitt, & Rovee-Collier, 1984; Solomon et al., 1990; Weiss & Thompson, 1991a; Woodruff-Pak, 2001).

The data show that, during trace conditioning, there is successful post-acquisition performance of the CR only if the hippocampal lesion occurs after a critical period of hippocampal support of memory consolidation within the neocortex (Kim et al., 1995; Takashima et al., 2009; Takehara et al., 2003). Data from in vitro cell preparations also support the time-limited role of the hippocampus in new learning that is simulated in nSTART: activity in hippocampal CA1 and CA3 pyramidal neurons peaked 24 h after conditioning was completed and decayed back to baseline within 14 days (Thompson, Moyer, & Disterhoft, 1996). The effect of early versus late hippocampal lesions is challenging to explain since no overt training occurs after conditioning during the period before hippocampal ablation.

After consolidation due to hippocampal involvement is accomplished, thalamocortical signals in conjunction with the cerebellum determine the timed execution of the CR during performance (Gabreil, Sparenborg, & Stolar, 1987; Sosina, 1992). Indeed, “…there are two memory circuitries for trace conditioning. One involves the hippocampus and the cerebellum and mediates recently acquired memory; the other involves the mPFC and the cerebellum and mediates remotely acquired memory” (Takehara et al., 2003, p. 9904; see also Berger, Weikart, Basset, & Orr, 1986; O'Reilly et al., 2010). nSTART qualitatively models these data as follows: after the consolidation of memory, when there is no need for hippocampus, nSTART models the cortical connections to the pontine nuclei that serve to elicit conditioned responses by way of the cerebellum (Siegel, Kalmbach, Chitwood, & Mauk, 2012; Woodruff-Pak & Disterhoft, 2007).

Based on the extent and timing of hippocampal damage, learning impairments range from needing more training trials than normal in order to learn successfully, through persistent response-timing difficulties, to the inability to learn and form new memories. The nSTART model explains the need for the hippocampus during trace conditioning in terms of how the hippocampus supports strengthening of partially conditioned thalamocortical and corticocortical connections during memory consolidation (see Fig. 2). The hippocampus has this ability because it includes circuits that can bridge the temporal gaps between CS and US during trace conditioning, unlike the amygdala, and can learn to adaptively time these temporal gaps in its responses, as originally simulated in the START model (Grossberg & Merrill, 1992, 1996; Grossberg & Schmajuk, 1989). The current nSTART model extends this analysis using mechanisms of endogenous hippocampal activation and BDNF modulation (see below) to explain the time-limited role of the hippocampus in terms of its support of the consolidation of new learning into long-term memories. This hypothesis is elaborated and contrasted with alternative models of memory consolidation below (‘Multiple hippocampal functions: Space, time, novelty, consolidation, and episodic learning’).
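How a population of cells can bridge a temporal gap and learn its duration may be illustrated with a deliberately simplified sketch. It is not the START or nSTART spectral timing equations, which are given in the cited articles. It assumes instead a hypothetical bank of delay-tuned units with Gaussian response profiles, loosely analogous to time cells, whose read-out weights grow in proportion to each unit's activity at the moment the US arrives. All names, the Gaussian tuning, the fixed tuning width, and the parameter values are assumptions made for illustration only.

```python
import math


def unit_response(t_ms, preferred_delay_ms, width_ms=100.0):
    """Activity of one delay-tuned unit at time t after CS onset (assumed Gaussian)."""
    return math.exp(-((t_ms - preferred_delay_ms) ** 2) / (2.0 * width_ms ** 2))


def train_weights(preferred_delays, us_delay_ms, n_trials=20, rate=0.05):
    """On each trial, strengthen each weight by the unit's activity at US arrival."""
    weights = [0.0] * len(preferred_delays)
    for _ in range(n_trials):
        for j, delay in enumerate(preferred_delays):
            weights[j] += rate * unit_response(us_delay_ms, delay)
    return weights


def readout(t_ms, preferred_delays, weights):
    """Weighted population output at time t after CS onset."""
    return sum(w * unit_response(t_ms, d) for w, d in zip(weights, preferred_delays))


def peak_time(preferred_delays, weights, t_max_ms=1500, step_ms=10):
    """Time after CS onset at which the population output is largest."""
    times = range(0, t_max_ms + 1, step_ms)
    return max(times, key=lambda t: readout(t, preferred_delays, weights))


# Hypothetical bank of ten units preferring delays of 100, 200, ..., 1000 ms.
PREFERRED_DELAYS = [100.0 * k for k in range(1, 11)]
WEIGHTS = train_weights(PREFERRED_DELAYS, us_delay_ms=500.0)
PEAK = peak_time(PREFERRED_DELAYS, WEIGHTS)
print("population output peaks near", PEAK, "ms after CS onset")
```

Under these assumptions, units whose preferred delay lies near the CS-to-US delay acquire the largest weights, so the summed output after training peaks close to the expected time of the US even though no single unit was built for that delay.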

Conditioning and consciousness

Several studies of humans have described a link between consciousness and conditioning. Early work interpreted conscious awareness as another class of conditioned responses (Grant, 1973; Hilgard, Campbell, & Sears, 1937; Kimble, 1962; McAllister & McAllister, 1958). More recently, it was found that, while amnesic patients with hippocampal damage acquired delay conditioning at a normal rate, they failed to acquire trace conditioning (Clark & Squire, 1998). These experimenters postulated that normal humans acquire trace conditioning because they have intact declarative or episodic memory and, therefore, can demonstrate conscious knowledge of a temporal relationship between CS and US: “trace conditioning requires the acquisition and retention of conscious knowledge” (p. 79). They did not, however, discuss mechanisms underlying this ability, save mentioning that the neocortex probably represents temporal relationships between stimuli and “would require the hippocampus and related structures to work conjointly with the neocortex” (p. 79).

Other studies have also demonstrated a link between consciousness and conditioning (Gabrieli et al., 1995; McGlinchey-Berroth, Brawn, & Disterhoft, 1999; McGlinchey-Berroth et al., 1997) and described an essential role for awareness in declarative learning, but no necessary role in non-declarative or procedural learning, as illustrated by experimental findings related to trace and delay conditioning, respectively (Manns, Clark, & Squire, 2000; Papka, Ivry, & Woodruff-Pak, 1997). For example, trace conditioning is facilitated by conscious awareness in normal control subjects while delay conditioning is not, whereas amnesics with bilateral hippocampal lesions perform at a success rate similar to unaware controls for both delay and trace conditioning (Clark, Manns, & Squire, 2001). Amnesics were found to be unaware of experimental contingencies, and poor performers on trace conditioning (Clark & Squire, 1998). Thus, the link between adaptive timing, attention, awareness, and consciousness has been experimentally established within the trace conditioning paradigm. The nSTART model traces the link between consciousness and conditioning to the role of hippocampus in supporting a sustained cognitive-emotional resonance that underlies motivated attention, consolidation of long-term memory, core consciousness, and "the feeling of what happens" (Damasio, 1999).

Brain-derived neurotrophic factor (BDNF) in memory formation and consolidation

Memory consolidation, a process that supports an enduring memory of new learning, has been extensively studied (McGaugh, 2000, 2002; Mehta, 2007; Nadel & Bohbot, 2001; Takehara, Kawahara, & Krino, 2003; Squire & Alverez, 1995; Takashima, 2009; Thompson, Moyer, & Disterhoft, 1996; Tyler et al., 2002). These data show time-limited involvement of the limbic system, and long-term involvement of the neocortex. The question of what sort of process occurs during the period that actively strengthens memory, even when there is no explicit practice, has been linked to the action of neurotrophins (Zang et al., 2007), especially BDNF, a complex class of proteins that have important effects on learning and memory (Heldt, Stanek, Chhatwal, & Ressler, 2007; Hu & Russek, 2008; Monteggia et al., 2004; Purves, 1988; Rattiner, Davis, & Ressler, 2005; Schuman, 1999; Thoenen, 1995; Tyler, Alonso, Bramham, & Pozzo-Miller, 2002). Postsynaptically, neurotrophins enhance responsiveness of target synapses (Kang & Schuman, 1995; Kohara, Kitamura, Morishima, & Tsumoto, 2001) and allow for quicker processing (Knipper et al., 1993; Lessman, 1998). Presynaptically, they act as retrograde messengers (Davis & Murphy, 1994; Ganguly, Koss, & Poo, 2000) coming from a target cell population back to excitatory source cells and increasing the flow of transmitter from the source cell population to generate a positive feedback loop between the source and the target cells (Schinder, Berninger, & Poo, 2000), as also occurs in some neural models of learning and memory search (e.g., Carpenter & Grossberg, 1990). BDNF has also been interpreted as an essential component of long-term potentiation (LTP) in normal cell processing (Chen, Kolbeck, Barde, Bonhoeffer, & Kossel, 1999; Korte et al., 1995; Phillips et al., 1990). The functional involvement of existing BDNF receptors is critical in early LTP (up to 1 h) during the acquisition phase of learning the CR, whereas continued activation of the slowly decaying late phase LTP signal (3+ h) requires new protein synthesis and gene expression. Rossato et al. (2009) have shown that hippocampal dopamine and the ventral tegmental area provide a temporally sensitive trigger for the expression of BDNF that is essential for long-term consolidation of memory related to reinforcement learning.

The BDNF response to a particular stimulus event may vary from microseconds (initial acquisition) to several days or weeks (long-term memory consolidation); thus, neurotrophins have a role whether the phase of learning is one of initial synaptic enhancement or long-term memory consolidation (Kang, Welcher, Shelton, & Schuman, 1997; Schuman, 1999; Singer, 1999). Furthermore, BDNF blockade shows that BDNF is essential for memory development at different phases of memory formation (Kang et al., 1997), and during all ages of an individual (Cabelli, Hohn, & Shatz, 1995; Tokuka, Saito, Yorifugi, Kishimoto, & Hisanaga, 2000). As nSTART qualitatively simulates, neurotrophins are thus required for both the initial acquisition of a memory and for its ongoing maintenance as memory consolidates.

BDNF is heavily expressed in the hippocampus as well as in the neocortex, where neurotrophins figure largely in activity-dependent development and plasticity, not only to build new bridges as needed, but also to inhibit and dismantle old synaptic bridges. A process of competition among axons during the development of nerve connections (Bonhoeffer, 1996; Tucker, Meyer, & Barde, 2001; van Ooyen & Willshaw, 1999; see review in Tyler et al., 2002) exists in both young and mature animals (Phillips, Hains, Laramee, Rosenthal, & Winslow, 1990). BDNF also maintains cortical circuitry for long-term memory that may be shaped by various BDNF-independent factors during and after consolidation (Gorski, Zeiler, Tamowski, & Jones, 2003).

The nSTART model hypothesizes how BDNF may amplify and temporally extend activity-based signals within the hippocampus and the neocortex that facilitate endogenous strengthening of memory without further explicit learning. In particular, memory consolidation may be mechanistically achieved by means of a sustained cascade of BDNF expression beginning in the hippocampus and spreading to the cortex (Buzsáki & Chrobak, 2005; Cousens & Otto, 1998; Hobson & Pace-Schott, 2002; Monteggia et al., 2004; Nádasdy, Hirase, Czurkó, Csicsvari, & Buzsáki, 1999; Smythe, Colom, & Bland, 1992; Staubli & Lynch, 1987; Vertes, Hoover, & Di Prisco, 2004), which is modeled in nSTART by the maintained activity level of hippocampal and cortical BDNF after conditioning trials end (see Fig. 2).

Hippocampal bursting activity is not the only bursting activity that drives consolidation. Long-term activity-dependent consolidation of new learning is also supported by the synchronization of thalamocortical interactions in response to thalamic or cortical inputs (Llinas, Ribary, Joliot, & Wang, 1994; Steriade, 1999). Thalamic bursting neurons may lead to synaptic modifications in cortex, and cortex can in turn influence thalamic oscillations (Sherman & Guillery, 2003; Steriade, 1999). Thalamocortical resonance has been described as a basis for temporal binding and consciousness in increasingly specific models over the years. These models simulate how specific and nonspecific thalamic nuclei interact with the reticular nucleus and multiple stages of laminar cortical circuitry (Buzsáki, Llinás, Singer, Berthoz, & Christen, 1994; Engel, Fries, & Singer, 2001; Grossberg, 1980, 2003, 2007; Grossberg & Versace, 2008; Pollen, 1999; Yazdanbakhsh & Grossberg, 2004). nSTART qualitatively explains consolidation without including bursting phenomena, although oscillatory dynamics of this kind arise naturally in finer spiking versions of rate-based models such as nSTART (Grossberg & Versace, 2008; Palma, Grossberg, & Versace, 2012a, 2012b).

The nSTART model focuses on amygdala and hippocampal interactions with thalamus and neocortex during conditioning (Fig. 2). The model proposes that the hippocampus supports thalamo-cortical and cortico-cortical category learning that becomes well established during memory consolidation through its endogenous (bursting) activity (Siapas, Lubenov, & Wilson, 2005; Sosina, 1992) that is supported by neurotrophin mediators (Destexhe, Contreras & Steriade, 1998). nSTART proposes that thalamo-cortical sustained activity is maintained through the combination of two mechanisms: the level of cortical BDNF activity, and the strength of the learned thalamo-cortical adaptive weights, or long-term memory (LTM) traces that were strengthened by the memory consolidation process. This proposal is consistent with trace conditioning data showing that, after consolidation, when the hippocampus is no longer required for performance of CRs, the medial prefrontal cortex takes on a critical role for performance of the CR in reaction to the associated thalamic sensory input. Here, the etiology of retrograde amnesia is understood as a failure to retain memory, rather than as a failure of adaptive timing (Takehara et al., 2003).

Methods

From CogEM to nSTART

The nSTART model synthesizes and extends key principles, mechanisms, and properties of three previously published brain models of conditioning and behavior. These three models describe aspects of:

How the brain learns to categorize objects and events in the world (Carpenter & Grossberg, 1987, 1991, 1993; Grossberg, 1976a, 1976b, 1980, 1982, 1984, 1987, 1999, 2013; Raizada & Grossberg, 2003); this is described within Adaptive Resonance Theory, or ART;

How the brain learns the emotional meanings of such events through cognitive-emotional interactions, notably rewarding and punishing experiences, and how the brain determines which events are motivationally predictive, as during attentional blocking and unblocking (Dranias, Grossberg, & Bullock, 2008; Grossberg, 1971, 1972a, 1972b, 1980, 1982, 1984, 2000b; Grossberg, Bullock, & Dranias, 2008; Grossberg & Gutowski, 1987; Grossberg & Levine, 1987; Grossberg & Schmajuk, 1987); this is described within the Cognitive-Emotional-Motor, or CogEM, model; and

How the brain learns to adaptively time the attention that is paid to motivationally important events, and when to respond to these events, in a context-appropriate manner (Fiala, Grossberg, & Bullock, 1996; Grossberg & Merrill, 1992, 1996; Grossberg & Paine, 2000; Grossberg & Schmajuk, 1989); this is described within the START model.

All three component models have been mathematically and computationally characterized elsewhere in order to explain behavioral and brain data about normal and abnormal behaviors. The principles and mechanisms that these models employ have thus been independently validated through their ability to explain a wide range of data. nSTART builds on this foundation to explain data about conditioning and memory consolidation, as it is affected by early and late amygdala, hippocampal, and cortical lesions, as well as BDNF expression in the hippocampus and cortex. The exposition in this section heuristically states the main modeling concepts and mechanisms before building upon them to mathematically realize the current model advances and synthesis.

The simulated data properties emerge from interactions of several brain regions for which processes evolve on multiple time scales, interacting in multiple nonlinear feedback loops. In order to simulate these data, the model incorporates only those network interactions that are rate-limiting in generating the targeted data. More detailed models of the relevant brain regions that are consistent with the model interactions simulated herein are described below, and provide a guide to future studies aimed at incorporating a broader range of functional competences.

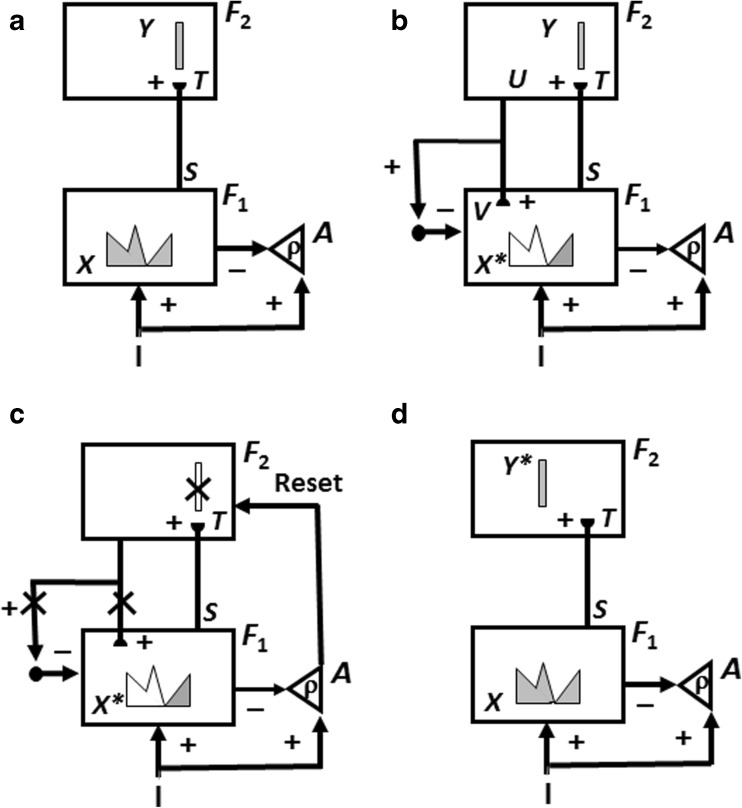

Adaptive resonance theory

The first model upon which nSTART builds is called Adaptive Resonance Theory, or ART. ART is reviewed because a key process in nSTART is a form of category learning, and also because nSTART simulates a cognitive-emotional resonance that is essential for explaining its targeted data. ART proposes how the brain can rapidly learn to attend, recognize, and predict new objects and events without catastrophically forgetting memories of previously learned objects and events. This is accomplished through an attentive matching process between the feature patterns that are created by stimulus-driven bottom-up adaptive filters, and learned top-down expectations (Fig. 3). The top-down expectations, acting by themselves, can also prime the brain to anticipate future bottom-up feature patterns with which they will be matched.

Fig. 3.

How ART searches for and learns a new recognition category using cycles of match-induced resonance and mismatch-induced reset. Active cells are shaded gray; inhibited cells are not shaded. (a) Input pattern I is instated across feature detectors at level F 1 as an activity pattern X, at the same time that it generates excitatory signals to the orienting system A with a gain ρ that is called the vigilance parameter. Activity pattern X generates inhibitory signals to the orienting system A as it generates a bottom-up input pattern S to the category level F 2. A dynamic balance within A between excitatory inputs from I and inhibitory inputs from S keeps A quiet. The bottom-up signals in S are multiplied by learned adaptive weights to form the input pattern T to F 2. The inputs T are contrast-enhanced and normalized within F 2 by recurrent lateral inhibitory signals that obey the membrane equations of neurophysiology, otherwise called shunting interactions. This competition leads to selection and activation of a small number of cells within F 2 that receive the largest inputs. In this figure, a winner-take-all category is chosen, represented by a single cell (population). The chosen cells represent the category Y that codes for the feature pattern at F 1. (b) The category activity Y generates top-down signals U that are multiplied by adaptive weights to form a prototype, or critical feature pattern, V that encodes the expectation that the active F 2 category has learned for what feature pattern to expect at F 1. This top-down expectation input V is added at F 1 cells. If V mismatches I at F 1, then a new STM activity pattern X* (the gray pattern), is selected at cells where the patterns match well enough. In other words, X* is active at I features that are confirmed by V. Mismatched features (white area) are inhibited. When X changes to X*, total inhibition decreases from F 1 to A. 
(c) If inhibition decreases sufficiently, A releases a nonspecific arousal burst to F 2; that is, “novel events are arousing”. Within the orienting system A, a vigilance parameter ρ determines how bad a match will be tolerated before a burst of nonspecific arousal is triggered. This arousal burst triggers a memory search for a better-matching category, as follows: Arousal resets F 2 by inhibiting Y. (d) After Y is inhibited, X is reinstated and Y stays inhibited as X activates a different winner-take-all category Y* at F 2. Search continues until a better matching, or novel, category is selected. When search ends, an attentive resonance triggers learning of the attended data in adaptive weights within both the bottom-up and top-down pathways. As learning stabilizes, inputs I can activate their globally best-matching categories directly through the adaptive filter, without activating the orienting system [Adapted with permission from Carpenter and Grossberg (1987)]

In nSTART, it is assumed that each CS and US is familiar and has already undergone category learning before the current simulations begin. The CS and US inputs to sensory cortex in the nSTART macrocircuit are assumed to be processed as learned object categories (Fig. 2). nSTART models a second stage of category learning from an object category in sensory cortex to an object-value category in orbitofrontal cortex. In general, each object category can become associated with more than one object-value category, so the same sensory cue can learn to generate different conditioned responses in response to learning with different reinforcers. It does this by learning to generate different responses when different value categories are active. These adaptive connections are thus, in general, one-to-many. Conceptually, the two stages of learning, at the object category stage and the object-value category stage, can be interpreted as a coordinated category learning process through which the orbitofrontal cortex categorizes objects and their motivational significance (Barbas, 1995, 2007; Rolls, 1998, 2000). The current model simulates such conditioning with only a single type of reinforcer. Strengthening the connection from object category to object-value category represents a simplified form of this category learning process in the current model simulations. One-to-many learning from an object category to multiple object-value categories is simulated in Chang, Grossberg, and Cao (2014).

As in other ART models, a top-down expectation pathway also exists from the orbitofrontal cortex to the sensory cortex. It provides top-down attentive modulation of sensory cortical activity, and is part of the cortico-cortico-amygdalar-hippocampal resonance that develops in the model during learning. This cognitive-emotional resonance, which plays a key role in the current model and its simulations, as well as its precursors in the START and iSTART models, is the main reason that nSTART is considered to be part of the family of ART models. Indeed, Grossberg (2016) summarizes an emerging classification of brain resonances that support conscious seeing, hearing, feeling, and knowing that includes this cognitive-emotional resonance.

nSTART explains how this cognitive-emotional resonance is sustained through time by adaptively-timed hippocampal feedback signals (Fig. 2). This hippocampal feedback plays a critical role in the model’s explanation of data about memory consolidation, and its ability to explain how the brain bridges the temporal gap between stimuli that occur in experimental paradigms like trace conditioning. Consolidation is complete within nSTART when the hippocampus is no longer needed to further strengthen the category memory that is activated by the CS. Finally, the role of the hippocampus in sustaining the cognitive-emotional resonances helps to explain the experimentally reported link between conditioning and consciousness (Clark & Squire, 1998).

In a complete ART model, when a sufficiently good match occurs between a bottom-up input pattern and an active top-down expectation, the system locks into a resonant state that focuses attention on the matched features and drives learning to incorporate them into the learned category; hence the term adaptive resonance. ART also predicts that all conscious states are resonant states, and the Grossberg (2016) classification of resonances contributes to clarifying their diverse functions throughout the brain. Such an adaptive resonance is one of the key mechanisms whereby ART ensures that memories are dynamically buffered against catastrophic forgetting. As noted above, a simplified form of this attentive matching process is included in nSTART in order to explain the cognitive-emotional resonances that support memory consolidation and the link between conditioning and consciousness.

In addition to the attentive resonant state itself, a hypothesis testing, or memory search, process in response to unexpected events helps to discover predictive recognition categories with which to learn about novel environments, and to switch attention to new inputs within a known environment. This hypothesis testing process is not simulated herein because the object categories that are activated in response to the CS and US stimuli are assumed to already have been learned, and unexpected events are minimized in the kinds of highly controlled delay and trace conditioning experiments that are the focus of the current study.

For the same reason, another mechanism that is important during hypothesis testing is not included in nSTART. The degree of match between bottom-up and top-down signal patterns that is required for resonance, sustained attention, and learning to occur is set by a vigilance parameter (Carpenter & Grossberg, 1987) (see ρ in Fig. 3a). Vigilance may be increased by predictive errors, and controls whether a particular learned category will represent concrete information, such as a particular view of a particular face, or abstract information, such as the fact that everyone has a face. Low vigilance allows the learning of general and abstract recognition categories, whereas high vigilance forces the learning of specific and concrete categories. The current simulations do not need to vary the degree of abstractness of the categories to be learned, so vigilance control has been omitted for simplicity.
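The vigilance criterion can be written compactly in the notation of Fig. 3. The following is only a sketch based on the caption's description of the orienting system A, which receives excitation from the input I with gain ρ and inhibition from the matched feature pattern X*. The notation |·| for total activity summed across feature-level cells is an assumption introduced here for illustration:

$$
\rho\,|I| - |X^{*}| > 0 \;\Rightarrow\; \text{arousal burst and reset}, \qquad \frac{|X^{*}|}{|I|} \ge \rho \;\Rightarrow\; \text{resonance}.
$$

Read this way, raising ρ demands that a larger fraction of the input features be confirmed by the top-down expectation, which is why high vigilance favors specific, concrete categories and low vigilance favors general, abstract ones.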

A sufficiently large mismatch indicates that the selected category does not represent the input data well enough, and drives a memory search, or hypothesis testing, for a category that can better represent the input data. In a more complete nSTART model, hypothesis testing would enable the learning and stable memory of large numbers of thalamo-cortical and cortico-cortical recognition categories. Such a hypothesis testing process includes a novelty-sensitive orienting system A, which is predicted to include both the nonspecific thalamus and the hippocampus (Fig. 3c; Carpenter & Grossberg, 1987, 1993; Grossberg, 2013; Grossberg & Versace, 2008). In nSTART, the model hippocampus does include the crucial process of adaptively timed learning that can bridge temporal gaps of hundreds of milliseconds to support trace conditioning and memory consolidation. In a more general nSTART model that is capable of self-stabilizing its learned memories, the hippocampus would also be involved in the memory search process.

In an ART model that includes memory search, when a mismatch occurs, the orienting system is activated and generates nonspecific arousal signals to the attentional system that rapidly reset the active recognition categories that have been reading out the poorly matching top-down expectations (Fig. 3c). The cause of the mismatch is hereby removed, thereby freeing the bottom-up filter to activate a different recognition category (Fig. 3d). This cycle of mismatch, arousal, and reset can repeat, thereby initiating a memory search, or hypothesis testing cycle, for a better-matching category. If no adequate match with a recognition category exists, say because the bottom-up input represents an unfamiliar experience, then the search process automatically activates an as yet uncommitted population of cells, with which to learn a new recognition category to represent the novel information.
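The cycle of choice, match, reset, and recruitment described above can be illustrated with a minimal sketch. It assumes binary feature vectors, a winner-take-all category choice, and fast learning, in the style of early ART algorithms. The function name `art_search`, the choice parameter `alpha`, and the list-of-prototypes data structure are hypothetical conveniences, not part of nSTART, which omits memory search altogether.

```python
import numpy as np

def art_search(I, prototypes, rho, alpha=1e-3):
    """Search for a category that resonates with binary input I.

    prototypes: learned top-down expectations, one binary vector per category.
    rho: vigilance in [0, 1]. alpha: small choice parameter (assumed value).
    Returns (index of the resonating category, updated prototype list).
    """
    I = np.asarray(I, dtype=float)
    prototypes = [np.asarray(w, dtype=float) for w in prototypes]
    # Bottom-up input T_j to each committed category.
    T = [np.minimum(I, w).sum() / (alpha + w.sum()) for w in prototypes]
    reset = set()
    while len(reset) < len(prototypes):
        # Winner-take-all choice among categories that have not been reset.
        j = max((k for k in range(len(prototypes)) if k not in reset),
                key=lambda k: T[k])
        # X*: input features that the top-down expectation confirms.
        x_star = np.minimum(I, prototypes[j])
        if x_star.sum() >= rho * I.sum():
            # Good enough match: resonance, and learning of attended features.
            prototypes[j] = x_star
            return j, prototypes
        # Mismatch: the arousal burst resets this category; search continues.
        reset.add(j)
    # No committed category matches: recruit an uncommitted one.
    prototypes.append(I.copy())
    return len(prototypes) - 1, prototypes

cats = [[1, 1, 0, 0], [0, 0, 1, 1]]
low_vigilance, _ = art_search([1, 1, 1, 0], cats, rho=0.6)
high_vigilance, _ = art_search([1, 1, 1, 0], cats, rho=0.9)
print(low_vigilance, high_vigilance)
```

With low vigilance the input is absorbed into the first category, whereas with high vigilance both committed categories are reset and a new category is recruited.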

All the learning and search processes that ART predicted have received support from behavioral, ERP, anatomical, neurophysiological, and/or neuropharmacological data, which are reviewed in the ART articles listed above; see, in particular, Grossberg (2013). Indeed, the role of the hippocampus in novelty detection has been known for many years (Deadwyler, West, & Lynch, 1979; Deadwyler et al., 1981; Vinogradova, 1975). In particular, the hippocampal CA1 and CA3 regions have been shown to be involved in a process of comparison between a prior conditioned stimulus and a current stimulus by rats in a non-spatial auditory task, the continuous non-matching-to-sample task (Sakurai, 1990). During performance of the task, single unit activity was recorded from several areas: CA1 and CA3, dentate gyrus (DG), entorhinal cortex, subicular complex, motor cortex (MC), prefrontal cortex, and dorsomedial thalamus. Go and No-Go responses indicated, respectively, whether the current tone was perceived as the same as (match) or different from (non-match) the preceding tone. Since about half of the units from the MC, CA1, CA3, and DG had increments of activity immediately prior to a Go response, these regions were implicated in motor or decisional aspects of making a match response. On non-match trials, units were also found in CA1 and CA3 with activity correlated to a correct No-Go response. Corroborating the function of the hippocampus in recognition memory, but not in storing the memories themselves, Otto and Eichenbaum (1992) reported that CA1 cells compare cortical representations of current perceptual processes to previous representations stored in parahippocampal and neocortical structures to detect mismatch in an odor-guided task. They noted that “the hippocampus maintains neither active nor passive memory representations” (p. 332).

Grossberg and Versace (2008) have proposed how the nonspecific thalamus can also be activated by novel events and trigger hypothesis testing. In their Synchronous Matching ART (SMART) model, a predictive error can lead to a mismatch within the nucleus basalis of Meynert, which releases acetylcholine broadly in the neocortex, leading to an increase in vigilance and a memory search for a better matching category. Palma, Grossberg, and Versace (2012a) and Palma, Versace, and Grossberg (2012b) further model how acetylcholine-modulated processes work, and explain a wide range of data using their modeling synthesis.

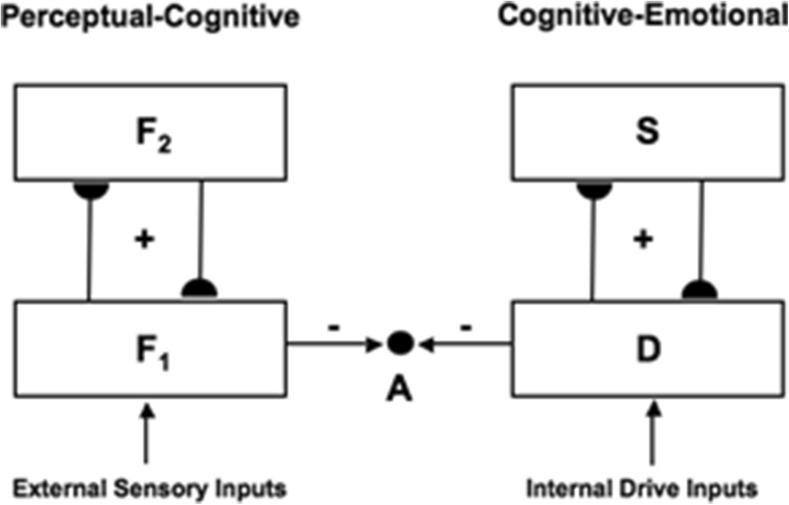

CogEM and MOTIVATOR models

Recognition categories can be activated when objects are experienced, but do not reflect the emotional or motivational value of these objects. Such a recognition category can, however, be associated through reinforcement learning with one or more drive representations, which are brain sites that represent internal drive states and emotions. Activation of a drive representation by a recognition category can trigger emotional reactions and incentive motivational feedback to recognition categories, thereby amplifying valued recognition categories with motivated attention as part of a cognitive-emotional resonance between the inferotemporal cortex, amygdala, and orbitofrontal cortex. When a recognition category is chosen in this way, it can trigger choice and release of actions that realize valued goals in a context-sensitive way.

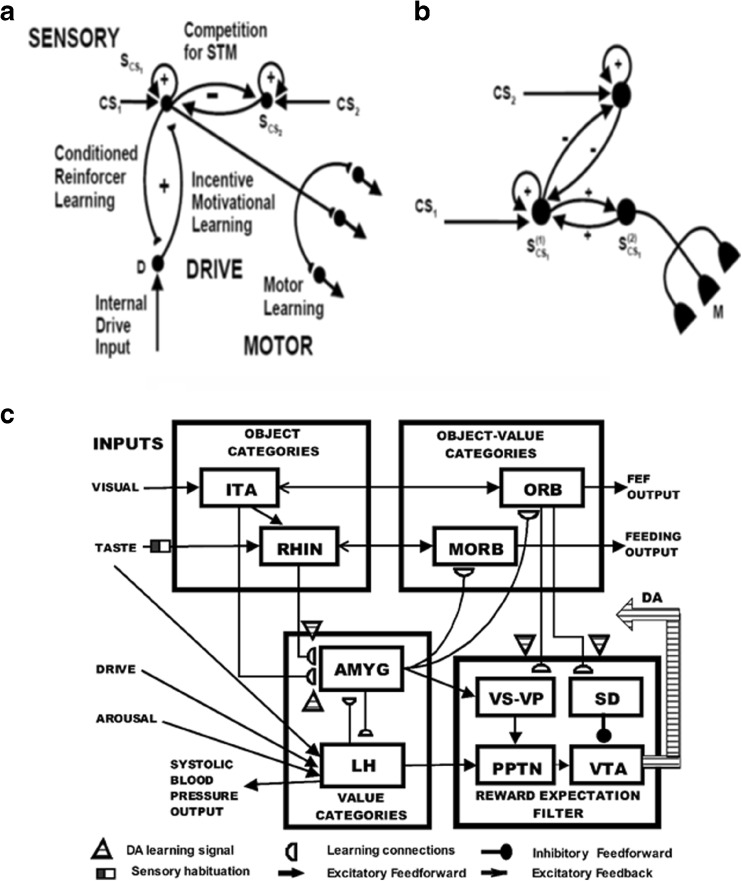

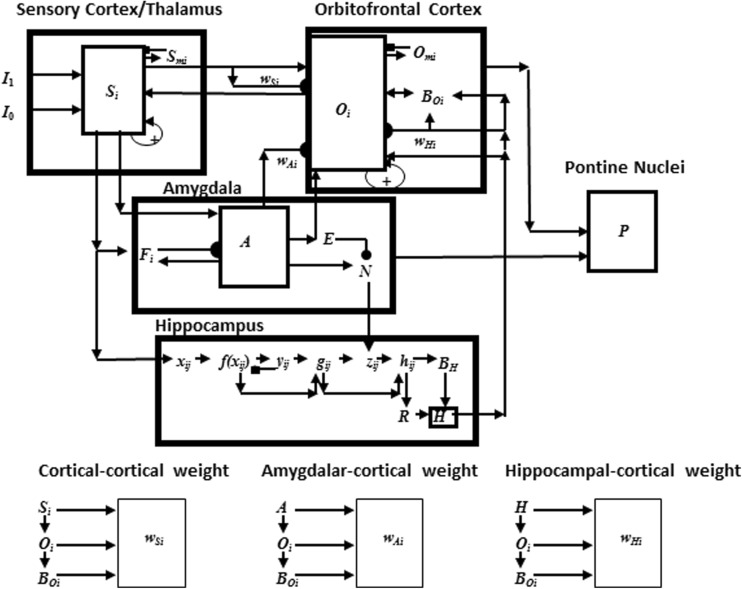

Such internal drive states and motivational decisions are incorporated into nSTART using mechanisms from the second model, called the Cognitive-Emotional-Motor, or CogEM, model. CogEM simulates the learning of cognitive-emotional associations, notably associations that link external objects and events in the world to internal feelings and emotions that give these objects and events value (Fig. 4a and b). These emotions also activate the motivational pathways that energize actions aimed at acquiring or manipulating objects or events to satisfy them.

The CogEM model clarifies interactions between two types of homologous circuits: one circuit includes interactions between the thalamus, sensory cortex, and amygdala; the other circuit includes interactions between the sensory cortex, orbitofrontal cortex, and amygdala. The nSTART model (Fig. 2) simulates cortico-cortico-amygdalar interactions. At the present level of simplification, the same activation and learning dynamics could also simulate interactions between thalamus, sensory cortices, and the amygdala. In particular, the CogEM model proposes how emotional centers of the brain, such as the amygdala, interact with sensory and prefrontal cortices – notably the orbitofrontal cortex – to generate affective states, attend to motivationally salient sensory events, and elicit motivated behaviors. Neurophysiological data provide increasing support for the predicted role of interactions between the amygdala and orbitofrontal cortex in focusing motivated attention on cell populations that can select learned responses which have previously succeeded in acquiring valued goal objects (Baxter et al., 2000; Rolls, 1998, 2000; Schoenbaum, Setlow, Saddoris, & Gallagher, 2003).

In ART, resonant states can develop within sensory and cognitive feedback loops. Resonance can also occur within CogEM circuits between sensory and cognitive representations of the external world and emotional representations of what is valued by the individual. Activating the (sensory cortex)-(amygdala)-(prefrontal cortex) feedback loop between cognitive and emotional centers is predicted to generate a cognitive-emotional resonance that can support conscious awareness of events happening in the world and how we feel about them. This resonance tends to focus attention selectively upon objects and events that promise to satisfy emotional needs. Such a resonance, when it is temporally extended to also include the hippocampus, as described below, helps to explain how trace conditioning occurs, as well as the link between conditioning and consciousness that has been experimentally reported.

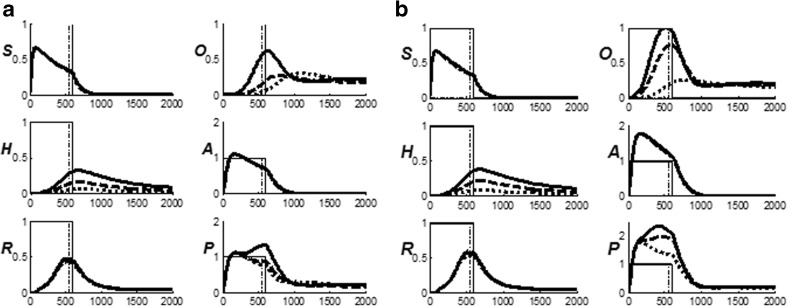

Figure 4a and b summarize the CogEM hypothesis that (at least) three types of internal representation interact during classical conditioning and other reinforcement learning paradigms: sensory cortical representations S, drive representations D, and motor representations M. These representations, and the learning that they support, are incorporated into the nSTART circuit (Fig. 2).

Fig. 4.

(a) The simplest Cognitive-Emotional-Motor (CogEM) model: Three types of interacting representations (sensory, S; drive, D; and motor, M) that control three types of learning (conditioned reinforcer, incentive motivational, and motor) help to explain many reinforcement learning data. (b) In order to work well, a sensory representation S must have (at least) two successive stages, S(1) and S(2), so that sensory events cannot release actions that are motivationally inappropriate. The two successive stages of a sensory representation S are interpreted to be in the appropriate sensory cortex (corresponds to S(1)) and the prefrontal cortex, notably the orbitofrontal cortex (corresponds to S(2)). The prefrontal stage requires motivational support from a drive representation D such as amygdala, to be fully effective, in the form of feedback from the incentive motivational learning pathway. Amygdala inputs to prefrontal cortex cause feedback from prefrontal cortex to sensory cortex that selectively amplifies and focuses attention upon motivationally relevant sensory events, and thereby “attentionally blocks” irrelevant cues. [Reprinted with permission from Grossberg and Seidman (2006).] (c) The amygdala and basal ganglia work together, embodying complementary functions, to provide motivational support, focus attention, and release contextually appropriate actions to achieve valued goals. For example, the basal ganglia substantia nigra pars compacta (SNc) releases Now Print learning signals in response to unexpected rewards or punishments, whereas the amygdala generates incentive motivational signals that support the attainment of expected valued goal objects. The MOTIVATOR model circuit diagram shows cognitive-emotional interactions between higher-order sensory cortices and an evaluative neuraxis composed of the hypothalamus, amygdala, basal ganglia, and orbitofrontal cortex [Reprinted with permission from Dranias et al. (2008)]

Sensory representations S temporarily store internal representations of sensory events in short-term and working memory. Drive representations D are sites where reinforcing and homeostatic, or drive, cues converge to activate emotional responses. Motor representations M control the read-out of actions. In particular, the S representations are thalamo-cortical or cortico-cortical representations of external events, including the object recognition categories that are learned by inferotemporal and prefrontal cortical interactions (Desimone, 1991, 1998; Gochin, Miller, Gross, & Gerstein, 1991; Harries & Perrett, 1991; Mishkin, Ungerleider, & Macko, 1983; Ungerleider & Mishkin, 1982), and that are modeled by ART. Sensory representations temporarily store internal representations of sensory events, such as conditioned stimuli (CS) and unconditioned stimuli (US), in short-term memory via recurrent on-center off-surround networks that tend to conserve their total activity while they contrast-normalize, contrast-enhance, and store their input patterns in short-term memory (Fig. 4a and b).
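The short-term storage properties of a recurrent on-center off-surround network can be illustrated with a minimal sketch. It assumes a shunting network in which each cell excites itself and inhibits all others through a faster-than-linear signal function f(x) = x², with the input pattern supplied as the initial activities after input offset. The function name `store_in_stm`, the parameter values, and the choice of signal function are illustrative assumptions and are not taken from the nSTART equations.

```python
import numpy as np

def store_in_stm(pattern, A=0.1, B=1.0, dt=0.01, steps=5000):
    """Euler-integrate a recurrent shunting on-center off-surround network.

    dx_i/dt = -A*x_i + (B - x_i)*f(x_i) - x_i * sum_{k != i} f(x_k)
    A: passive decay rate, B: upper bound on activity (assumed values).
    """
    x = np.asarray(pattern, dtype=float).copy()
    for _ in range(steps):
        f = x ** 2                          # assumed faster-than-linear signal
        on_center = (B - x) * f             # self-excitation, shunted by (B - x)
        off_surround = x * (f.sum() - f)    # inhibition from the other cells
        x += dt * (-A * x + on_center - off_surround)
    return x

stored = store_in_stm([0.2, 0.5, 0.3])
print(np.round(stored, 3), round(float(stored.sum()), 3))
```

Under these assumptions the cell with the largest initial activity is contrast-enhanced and stored, the others are suppressed, and total activity remains bounded by B. Other signal functions, such as sigmoids, would store a partially contrast-enhanced pattern rather than a single winner.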

The D representations include hypothalamic and amygdala circuits (Figs. 2 and 5) at which reinforcing and homeostatic, or drive, cues converge to generate emotional reactions and motivational decisions (Aggleton, 1993; Bower, 1981; Davis, 1994; Gloor et al., 1982; Halgren, Walter, Cherlow, & Crandall, 1978; LeDoux, 1993). The M representations include cortical and cerebellar circuits that control discrete adaptive responses (Evarts, 1973; Ito, 1984; Kalaska, Cohen, Hyde, & Prud’homme, 1989; Thompson, 1988). More complete models of the internal structure of these several types of representations have been presented elsewhere (e.g., Brown, Bullock, & Grossberg, 2004; Bullock, Cisek, & Grossberg, 1998; Carpenter & Grossberg, 1991; Contreras-Vidal, Grossberg, & Bullock, 1997; Dranias, Grossberg, & Bullock, 2008; Fiala, Grossberg, & Bullock, 1996; Gnadt & Grossberg, 2008; Grossberg, 1987; Grossberg, Bullock & Dranias, 2008; Grossberg & Merrill, 1996; Grossberg & Schmajuk, 1987; Raizada & Grossberg, 2003), and can be incorporated into future elaborations of nSTART without undermining any of the current model's conclusions.

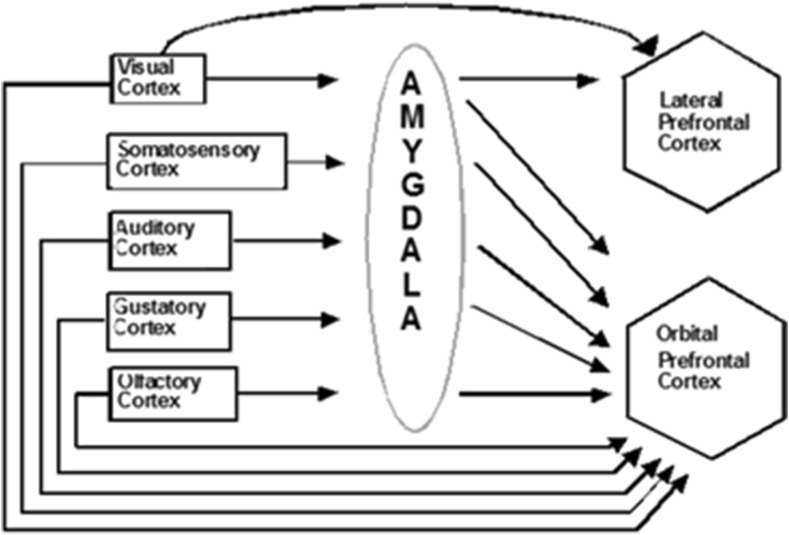

Fig. 5.

Orbital prefrontal cortex receives projections from the sensory cortices (visual, somatosensory, auditory, gustatory, and olfactory) and from the amygdala, which also receives inputs from the same sensory cortices. These anatomical stages correspond to the model CogEM stages in Fig. 4 [Reprinted with permission from Barbas (1995)]

nSTART does not incorporate the basal ganglia to simulate its targeted data, even though the basal ganglia and amygdala work together to provide motivational support, focus attention, and release contextually appropriate actions to achieve valued goals (Flores & Disterhoft, 2009). The MOTIVATOR model (Dranias et al., 2008; Grossberg et al., 2008) begins to explain how this interaction happens (Fig. 4c), notably how the amygdala and basal ganglia may play complementary roles during cognitive-emotional learning and motivated goal-oriented behaviors (Grossberg, 2000a). MOTIVATOR describes cognitive-emotional interactions between higher-order sensory cortices and an evaluative neuraxis composed of the hypothalamus, amygdala, basal ganglia, and orbitofrontal cortex. Given a conditioned stimulus (CS), the model amygdala and lateral hypothalamus interact to calculate the expected current value of the subjective outcome that the CS predicts, constrained by the current state of deprivation or satiation. As in the CogEM model, the amygdala relays the expected value information to orbitofrontal cells that receive inputs from anterior inferotemporal cells, and medial orbitofrontal cells that receive inputs from rhinal cortex. The activations of these orbitofrontal cells code the subjective values of objects. These values guide behavioral choices.

The model basal ganglia detect errors in CS-specific predictions of the value and timing of rewards. Excitatory inputs from the pedunculopontine nucleus interact with timed inhibitory inputs from model striosomes in the ventral striatum to regulate dopamine burst and dip responses from cells in the substantia nigra pars compacta and ventral tegmental area. Learning in cortical and striatal regions is strongly modulated by dopamine. The MOTIVATOR model is used to address tasks that examine food-specific satiety, Pavlovian conditioning, reinforcer devaluation, and simultaneous visual discrimination. Model simulations successfully reproduce discharge dynamics of known cell types, including signals that predict saccadic reaction times and CS-dependent changes in systolic blood pressure. In the nSTART model, these basal ganglia interactions are not needed to simulate the targeted data, hence will not be further discussed.

Even without basal ganglia dynamics, the CogEM model has successfully learned to control motivated behaviors in mobile robots (e.g., Baloch & Waxman, 1991; Chang & Gaudiano, 1998; Gaudiano & Chang, 1997; Gaudiano, Zalama, Chang, & Lopez-Coronado, 1996).

Three types of learning take place among the CogEM sensory, drive, and motor representations (Fig. 4a). Conditioned reinforcer learning enables sensory events to activate emotional reactions at drive representations. Incentive motivational learning enables emotions to generate a motivational set that biases the system to process cognitive information consistent with that emotion. Motor learning allows sensory and cognitive representations to generate actions. nSTART simulates conditioned reinforcer learning, from thalamus to amygdala or from sensory cortex to amygdala, as well as incentive motivational learning, from amygdala to sensory cortex or from amygdala to orbitofrontal cortex (Fig. 2). Instead of explicitly modeling motor learning circuits in the cerebellum, nSTART uses CR cortical and amygdala inputs to the pontine nucleus as indicators of the timing and strength of conditioned motor outputs (Freeman & Muckler, 2003; Kalmbach et al., 2009; Siegel et al., 2012; Woodruff-Pak & Disterhoft, 2007).

During classical conditioning, a CS activates its sensory representation S before the drive representation D is activated by an unconditioned stimulus (US), or by other previously conditioned reinforcer CSs. If it is appropriately timed, such pairing causes learning at the adaptive weights within the S → D pathway. The ability of the CS to subsequently activate D via this learned pathway is one of its key properties as a conditioned reinforcer. As these S → D associations are being formed, incentive motivational learning within the D → S incentive motivational pathway also occurs, due to the same pairing of CS and US. Incentive motivational learning enables an activated drive representation D to prime, or modulate, the sensory representations S of all cues, including the CSs, that have consistently been correlated with it. That is how activating D generates a “motivational set”: it primes all of the sensory and cognitive representations that have been associated with that drive in the past. These incentive motivational signals are a type of motivationally-biased attention. S → M motor, or habit, learning enables the sensorimotor maps, vectors, and gains that are involved in sensory-motor control to be adaptively calibrated, thereby enabling a CS to read out correctly calibrated movements as a CR.

Taken together, these processes control aspects of the learning and recognition of sensory and cognitive memories, which are often classified as part of the declarative memory system (Mishkin, 1982, 1993; Squire & Cohen, 1984); and the performance of learned motor skills, which are often classified as part of the procedural memory system (Gilbert & Thach, 1977; Ito, 1984; Thompson, 1988).

Once both conditioned reinforcer and incentive motivational learning have taken place, a CS can activate a (sensory cortex)-(amygdala)-(orbitofrontal cortex)-(sensory cortex) feedback circuit (Figs. 2 and 4c). This circuit supports a cognitive-emotional resonance that leads to core consciousness and “the feeling of what happens” (Damasio, 1999), while it enables the brain to rapidly focus motivated attention on motivationally salient objects and events. This is the first behavioral competence that was mentioned above in the Overview and scope section. This feedback circuit could also, however, without further processing, immediately activate motor responses, thereby leading to premature responding in many situations.

We show below that this amygdala-based process is effective during delay conditioning, where the CS and US overlap in time, but not during trace conditioning, where the CS terminates before the US begins, at least not without the benefit of the adaptively timed learning mechanisms that are described in the next section. Thus, although the CogEM model can realize the first behavioral competence that is summarized above, it cannot realize the second and third competences, which involve bridging temporal gaps between CS, US, and conditioned responses (as discussed above). Mechanisms that realize the second and third behavioral competences enable the brain to learn during trace conditioning.
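The dependence on temporal overlap can be illustrated with a minimal sketch. It is not the nSTART system of equations: the leaky-integrator sensory activity, the gated Hebbian weight rule, the function name `pair_cs_us`, and all parameter values (`tau`, `lr`, the stimulus timings) are assumptions chosen only for illustration. The sketch shows that an S → D weight that learns only while S and D are co-active grows during a delay pairing, but hardly at all during a trace pairing in which S has decayed before the US arrives.

```python
import numpy as np

def pair_cs_us(cs_on, cs_off, us_on, us_off, tau=0.05, lr=5.0, dt=0.001, t_end=1.5):
    """Return the S -> D weight after one CS-US pairing (toy model, not nSTART)."""
    s = 0.0  # sensory activity S driven by the CS (assumed leaky integrator)
    w = 0.0  # adaptive weight on the S -> D pathway
    for t in np.arange(0.0, t_end, dt):
        cs = 1.0 if cs_on <= t < cs_off else 0.0   # CS input
        d = 1.0 if us_on <= t < us_off else 0.0    # drive activity D evoked by the US
        s += dt * (-s + cs) / tau                  # S decays once the CS is off
        w += dt * lr * s * d * (1.0 - w)           # learning only while S and D are co-active
    return w

# Delay conditioning: the CS is still on when the US arrives.
w_delay = pair_cs_us(cs_on=0.0, cs_off=0.5, us_on=0.4, us_off=0.5)
# Trace conditioning: the CS ends 0.5 s before the US begins.
w_trace = pair_cs_us(cs_on=0.0, cs_off=0.1, us_on=0.6, us_off=0.7)
print(f"delay weight: {w_delay:.3f}, trace weight: {w_trace:.6f}")
```

In this toy setting the trace weight stays near zero because nothing keeps S active across the gap. That is the role the text assigns to the adaptively timed mechanisms described next.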

It is also important to acknowledge that, as reviewed above, the amygdala may have a time-limited role during aversive conditioning (Lee & Kim, 2004). As the association of eyeblink CS-US becomes more consolidated through the strengthening of direct thalamo-cortical and cortico-cortical learned associations, the role of the amygdala may become less critical.

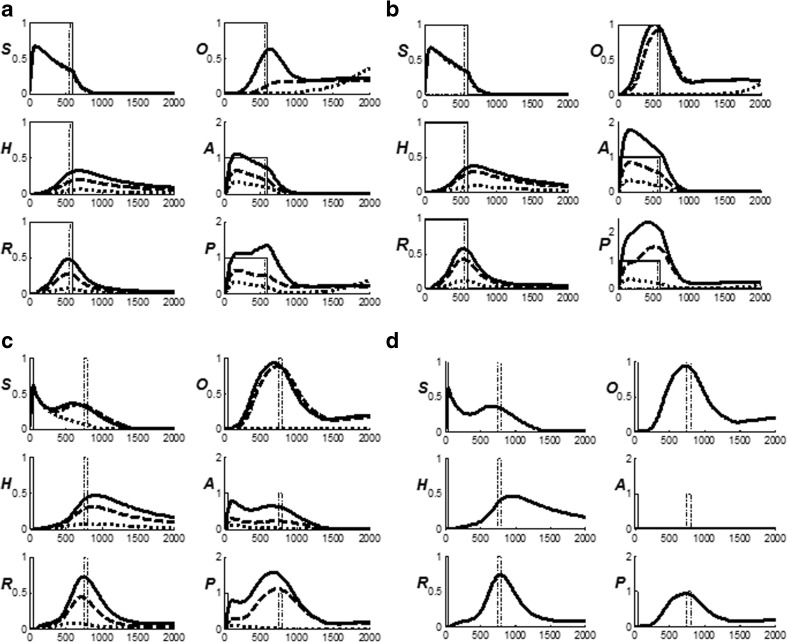

Spectral Timing model and hippocampal time cells

The third model, called the Spectral Timing model, clarifies how the brain learns adaptively timed responses in order to acquire rewards and other goal objects that are delayed in time, as occurs during trace conditioning. Spectral timing enables the model to bridge an ISI, or temporal gap, of hundreds of milliseconds, or even seconds, between the CS offset and US onset. This learning mechanism has been called spectral timing because a “spectrum” of cells respond at different, but overlapping, times and can together generate a population response for which adaptively timed cell responses become maximal at, or near, the time when the US is expected (Grossberg & Merrill, 1992, 1996; Grossberg & Schmajuk, 1989), as has been shown in neurophysiological experiments about adaptively timed conditioning in the hippocampus (Berger & Thompson, 1978; Nowak & Berger, 1992; see also Tieu et al., 1999).

Each cell in such a spectrum reaches its maximum activity at a different time. If the cell responds later, then its activity duration is broader in time, a property that is called a Weber law, or scalar timing, property (Gibbon, 1977). Recent neurophysiological data about “time cells” in the hippocampus have supported the Spectral Timing model prediction of a spectrum of cells with different peak activity times that obey a Weber law. Indeed, such a Weber law property was salient in the data of MacDonald et al. (2011), who wrote: “…the mean peak firing rate for each time cell occurred at sequential moments, and the overlap among firing periods from even these small ensembles of time cells bridges the entire delay. Notably, the spread of the firing period for each neuron increased with the peak firing time…” (p. 3). MacDonald et al. (2011) have thereby provided direct neurophysiological support for the prediction of spectral timing model cells (“small ensembles of time cells”) that obey the Weber law property (“spread of the firing period…increased with the peak firing time”).

To generate the adaptively timed population response, each cell's activity is multiplied, or gated, by an adaptive weight before the memory-gated activity adds to the population response. During conditioning, each weight is amplified or suppressed to the extent to which its activity does, or does not, overlap times at which the US occurs; that is, times around the ISI between CS and US. Learning has the effect of amplifying signals from cells whose timing matches the ISI, at least partially. Most cell activity intervals do not match the ISI perfectly. However, after such learning, the sum of the gated signals from all the cells – that is, the population response – is well-timed to the ISI, and typically peaks at or near the expected time of US onset. This sort of adaptive timing endows the nSTART model with the ability to learn associations between events that are separated in time, notably between a CS and US during trace conditioning.
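A minimal numerical sketch of this gating-and-summing scheme follows. It is an illustration under stated assumptions, not the Spectral Timing model's differential equations: each cell is given a Gaussian activity profile whose width grows in proportion to its peak time (a simple stand-in for the Weber law property), and each weight relaxes toward the cell's activity at the time of the US. The function name `spectral_population_response` and the parameters `n_cells`, `weber`, `lr`, and `n_trials` are hypothetical.

```python
import numpy as np

def spectral_population_response(isi, n_cells=40, weber=0.2, lr=0.2, n_trials=50):
    """Toy spectral timing: learn weights so the summed response peaks near the ISI."""
    t = np.linspace(0.0, 2.0, 2001)            # time since CS onset (s)
    peaks = np.linspace(0.1, 1.8, n_cells)     # assumed peak time of each cell
    widths = weber * peaks                     # Weber law: later cells respond more broadly
    # activity[j, k] is the response of cell j at time t[k] (assumed Gaussian profile)
    activity = np.exp(-0.5 * ((t[None, :] - peaks[:, None]) / widths[:, None]) ** 2)
    us_index = np.argmin(np.abs(t - isi))      # time sample at which the US arrives
    z = np.zeros(n_cells)                      # adaptive weights, one per cell
    for _ in range(n_trials):
        # each weight moves toward its cell's activity at the time of the US
        z += lr * (activity[:, us_index] - z)
    population = z @ activity                  # sum of weight-gated cell activities
    return t, population, z

t, population, z = spectral_population_response(isi=0.5)
peak_time = t[np.argmax(population)]
print(f"population response peaks at about {peak_time:.2f} s for an ISI of 0.5 s")
```

No single cell in the sketch needs to match the ISI exactly. The weighted sum is what becomes well timed, although with Weber-scaled widths its peak can fall slightly after the ISI.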

Evidence for adaptive timing has been found during many different types of reinforcement learning. For example, classical conditioning is optimal at a range of inter-stimulus intervals between the CS and US that are characteristic of the task, species, and age, and is typically attenuated at zero ISI and long ISIs. Within an operative range, learned responses are timed to match the statistics of the learning environment (e.g., Smith, 1968).

Although the amygdala has been identified as a primary site in the expression of emotion and stimulus-reward associations (Aggleton, 1993), as summarized in Figs. 2 and 5, the hippocampal formation has been implicated in the adaptively timed processing of cognitive-emotional interactions. For example, Thompson et al. (1987) distinguished two types of learning that go on during conditioning of the rabbit Nictitating Membrane Response: adaptively timed “conditioned fear” learning that is linked to the hippocampus, and adaptively timed “learning of the discrete adaptive response” that is linked to the cerebellum. In particular, neurophysiological evidence has been reported for adaptive timing in entorhinal cortex activation of hippocampal dentate and CA3 pyramidal cells (Berger & Thompson, 1978; Nowak & Berger, 1992) to which the more recently reported “time cells” presumably contribute.

Spectral timing has been used to model challenging behavioral, neurophysiological, and anatomical data about several parts of the brain: the hippocampus to maintain motivated attention on goals for an adaptively timed interval (Grossberg & Merrill, 1992, 1996; cf. Friedman, Bressler, Garner, & Ziv, 2000), the cerebellum to read out adaptively timed movements (Fiala, Grossberg, & Bullock, 1996; Ito, 1984), and the basal ganglia to release dopamine bursts and dips that drive new associative learning in multiple brain regions in response to unexpectedly timed rewards and non-rewards (Brown, Bullock, & Grossberg, 1999, 2004; Schultz, 1998; Schultz et al., 1992).

Distinguishing expected and unexpected disconfirmations

Adaptive timing is essential for animals that actively explore and learn about their environment, since rewards and other goals are often delayed in time relative to the actions that are aimed at acquiring them. The brain needs to be dynamically buffered, or protected, against reacting prematurely before a delayed reward can be received. The Spectral Timing model accomplishes this by predicting how the brain distinguishes expected non-occurrences, also called expected disconfirmations, of reward, which should not be allowed to interfere with acquiring a delayed reward, from unexpected non-occurrences, also called unexpected disconfirmations, of reward, which can trigger the usual consequences of predictive failure, including reset of working memory, attention shifts, emotional rebounds, and the release of exploratory behaviors. In the nSTART model, and the START model before it, spectral timing circuits generate adaptively timed hippocampal responses that can bridge temporal gaps between CS and US and provide motivated attention to maintain activation of the hippocampus and neocortex between those temporal gaps (Figs. 2 and 6).

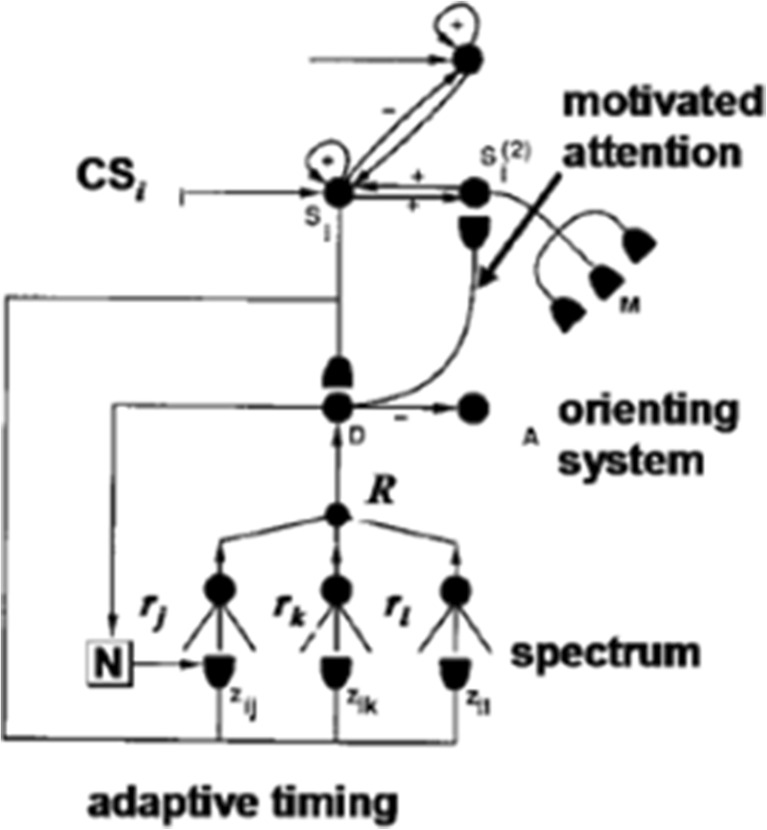

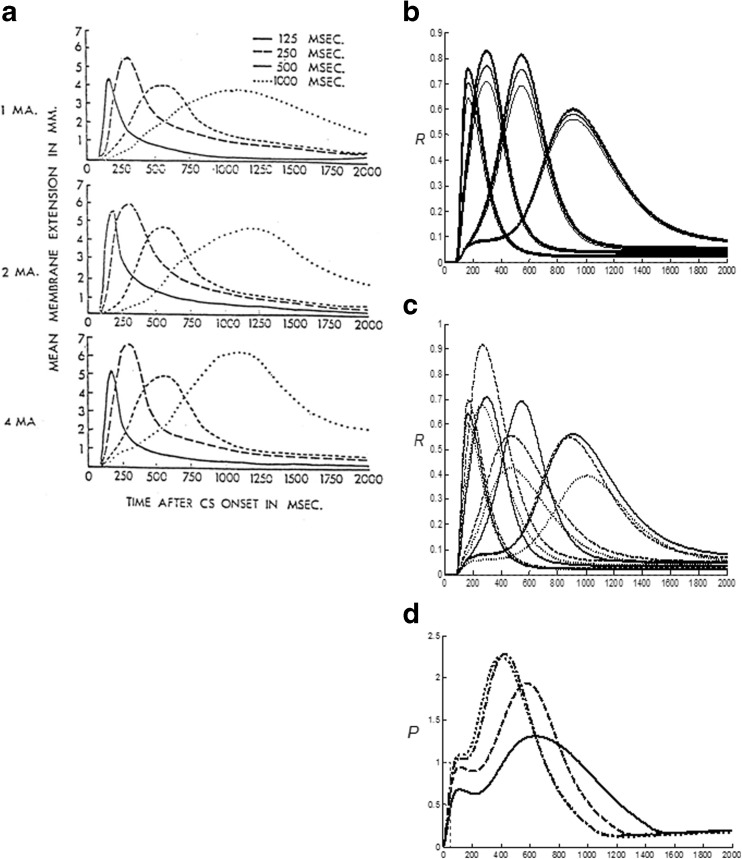

Fig. 6.

In the START model, conditioning, attention, and timing are integrated. Adaptively timed hippocampal signals R maintain motivated attention via a cortico-hippocampal-cortical feedback pathway, at the same time that they inhibit activation of orienting system circuits A via an amygdala drive representation D. The orienting system is also assumed to occur in the hippocampus. The adaptively timed signal is learned at a spectrum of cells whose activities respond at different rates $r_j$ and are gated by different adaptive weights $z_{ij}$. A transient Now Print learning signal N drives learned changes in these adaptive weights. In the nSTART model, the hippocampal feedback circuit operates in parallel to the amygdala, rather than through it [Reprinted with permission from Grossberg and Merrill (1992)].

What spares an animal from erroneously reacting to expected non-occurrences of reward as predictive failures? Why does an animal not immediately become so frustrated by the non-occurrence of such a reward that it prematurely shifts its attentional focus and releases exploratory behavior aimed at finding the desired reward somewhere else, leading to relentless exploration for immediate gratification? Alternatively, if the animal does wait, but the reward does not appear at the expected time, then how does the animal react to the unexpected non-occurrence of the reward by becoming frustrated, resetting its working memory, shifting its attention, and releasing exploratory behavior?

Any solution to this problem needs to account for the fact that the process of registering ART-like sensory matches or mismatches is not itself inhibited (Fig. 3): if the reward happened to appear earlier than expected, the animal could still perceive it and release consummatory responses. Instead, the effects of these sensory mismatches upon reinforcement, attention, and exploration are somehow inhibited, or gated off. That is, a primary role of such an adaptive timing mechanism seems to be to inhibit, or gate, the mismatch-mediated arousal process whereby a disconfirmed expectation would otherwise activate widespread signals that could activate negatively reinforcing frustrating emotional responses that drive extinction of previous consummatory behavior, reset working memory, shift attention, and release exploratory behavior.

The START model unifies networks for spectrally timed learning and the differential processing of expected versus unexpected non-occurrences, or disconfirmations (Fig. 6). In START, learning from sensory cortex to amygdala in $S_i$ → D pathways is supplemented by a parallel $S_i$ → H hippocampal pathway. This parallel pathway embodies a spectral timing circuit. The spectral timing circuit supports adaptively timed learning that can bridge temporal gaps between cues and reinforcers, as occurs during trace conditioning. As shown in Fig. 6, both of these learned pathways can generate an inhibitory output signal to the orienting system A. As described within ART (Fig. 3c), the orienting system is activated by novelty-sensitive mismatch events. Such a mismatch can trigger a burst of nonspecific arousal that is capable of resetting the currently active recognition categories that caused the mismatch, while triggering opponent emotional reactions, attention shifts, and exploratory behavioral responses. The inhibitory pathway from D to A in Fig. 6 prevents the orienting system from causing these consequences in response to expected disconfirmations, but not to unexpected disconfirmations (Grossberg & Merrill, 1992, 1996). In particular, read-out from the hippocampal adaptive timing circuit activates D which, in turn, inhibits A. At the same time, adaptively timed incentive motivational signals to the prefrontal cortex (pathway D → $S_i^{(2)}$ in Fig. 6) are supported by adaptively timed output signals from the hippocampus that help to maintain motivated attention, and a cognitive-emotional resonance for a task-appropriate duration.
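The gating logic of the D → A pathway can be sketched in a few lines. This is a deliberately simplified illustration, not the START circuit: the inhibition that D sends to the orienting system A is replaced by a hypothetical time course that stays at full strength until the expected reward time and then fades over a Weber-scaled interval. The function names `timed_inhibition` and `orienting_arousal` and the parameters `weber`, `mismatch`, and `gain` are assumptions.

```python
import numpy as np

def timed_inhibition(t, expected_time, weber=0.2):
    """Assumed D -> A inhibition: full until the expected reward time, then fading."""
    width = weber * expected_time              # fade-out interval scales with the delay
    late = np.maximum(t - expected_time, 0.0)  # time elapsed past the expected reward
    return np.exp(-0.5 * (late / width) ** 2)

def orienting_arousal(t, expected_time, mismatch=1.0, gain=1.0):
    """Arousal that a mismatch at time t releases after gating by the timed inhibition."""
    return np.maximum(mismatch - gain * timed_inhibition(t, expected_time), 0.0)

expected_time = 1.0  # learned delay between cue and reward (s), assumed
# Expected disconfirmation: the reward is absent before it is due.
early = orienting_arousal(0.5, expected_time)
# Unexpected disconfirmation: the reward is still absent well after it was due.
late = orienting_arousal(2.0, expected_time)
print(f"arousal before expected time: {early:.3f}, after: {late:.3f}")
```

The sensory mismatch itself has the same size in both cases. Only its effect on the orienting system is gated, which mirrors the requirement stated above that the registration of matches and mismatches is not itself inhibited.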