Abstract

These medication errors have occurred in health care facilities at least once. They will happen again—perhaps where you work. Through education and alertness of personnel and procedural safeguards, they can be avoided. You should consider publishing accounts of errors in your newsletters and/or presenting them at your inservice training programs.

Your assistance is required to continue this feature. The reports described here were received through the Institute for Safe Medication Practices (ISMP) Medication Errors Reporting Program. Any reports published by ISMP will be anonymous. Comments are also invited; the writers' names will be published if desired. ISMP may be contacted at the address shown below.

Errors, close calls, or hazardous conditions may be reported directly to ISMP through the ISMP Web site (www.ismp.org), by calling 800-FAIL-SAFE, or via e-mail at ismpinfo@ismp.org. ISMP guarantees the confidentiality and security of the information received and respects reporters' wishes as to the level of detail included in publications.

UNDERSTANDING HUMAN OVER-RELIANCE ON TECHNOLOGY

The implementation of information technology in medication use systems is widely accepted as a way to reduce adverse drug events by decreasing human error.1 Technology examples include computerized order entry systems, clinical decision support systems, robotic dispensing, profiled automated dispensing cabinets (ADCs), smart infusion pumps, and barcode scanning of medications during compounding, dispensing, ADC restocking, and administration. These technologies are meant to support human cognitive processes and thus have great potential to combat the shortcomings of manual medication systems and improve clinical decisions and patient outcomes. This is accomplished through precise controls, automatically generated cues and recommendations to help the user respond appropriately, prompts that promote the correct sequence of work or ensure the collection of critical information, and alerts to make the user aware of potential errors.

Information technology to support clinical decision making does not replace human activity but rather changes it, often in unintended or unanticipated ways.2 Misuse and disuse of technology (often to work around technology issues), as well as new sources of error introduced after implementation, have been well documented. Errors can also be caused by over-reliance on, and trust in, the proper functioning of technology.3 Technology can occasionally malfunction, misdirect the user, or provide incorrect information or recommendations that lead the user to change a previously correct decision or to follow a pathway that ends in an error. Over-reliance on technology can have serious consequences for patients. In a recent Safety Bulletin,4 ISMP Canada highlighted human over-reliance on technology based on its analysis of an event reported to a Canadian national reporting system. The bulletin discussed 2 related cognitive limitations: automation bias and automation complacency.

Incident Description

An elderly patient was admitted to the hospital with new-onset seizures. Admission orders included the anticonvulsant phenytoin (handwritten using the brand name Dilantin), 300 mg orally every evening. Before the pharmacy closed, a pharmacy staff member entered the Dilantin order into the pharmacy computer so that the medication could be obtained from an ADC in the patient care unit overnight. In the pharmacy computer, medication selection for order entry was performed by typing the first 3 letters of the medication name (“dil” in this case) and then choosing the desired medication name from a drop-down list. The computer list contained both generic and brand names. The staff member was interrupted while entering the order. When this task was resumed, dilTIAZem 300 mg was selected instead of Dilantin 300 mg.

On the patient care unit, the order for Dilantin had been correctly transcribed by hand onto a daily computer-generated medication administration record (MAR), which was verified against the prescriber's order and cosigned by a nurse. The nurse who obtained the medication from the unit's ADC noticed the discrepancy between the MAR and the ADC display, but accepted the information displayed on the ADC screen as correct. The patient received one dose of long-acting dilTIAZem 300 mg orally instead of the Dilantin 300 mg as ordered. The error was caught the next morning when the patient exhibited significant hypotension and bradycardia.

Automation Bias and Automation Complacency

The tendency to favor or give greater credence to information from technology (eg, an ADC display) and to ignore a manual source of information that provides contradictory information (eg, a handwritten entry on the computer-generated MAR), even if it is correct, illustrates the phenomenon of automation bias.3 Automation complacency is a closely linked, overlapping concept that refers to the monitoring of technology less frequently or with less vigilance because of a lower degree of suspicion of error and a stronger belief in its accuracy.2 End-users of a technology (eg, a nurse who relies on the ADC display that lists medications to be administered) tend to forget or ignore that information from the device may depend on data entered by a person. In other words, processes that may appear to be wholly automated are often dependent upon human input at critical points and thus require the same degree of monitoring and attention as manual processes. These 2 phenomena can affect decision making in individuals as well as in teams and offset the benefits of technology.2

Automation bias and complacency can lead to decisions that are not based on a thorough analysis of all available information but that are strongly biased toward the presumed accuracy of the technology.2 These effects are inconsequential when the technology is correct, but errors are possible when its output is misleading. An automation bias omission error takes place when users rely on the technology to inform them of a problem (eg, an excessive dose warning) but it does not; they fail to respond to a potentially critical situation because they were not prompted to do so. An automation bias commission error occurs when users make choices based on incorrect suggestions or information provided by technology.3 In the Dilantin incident described above, automation bias caused 2 errors. The first occurred when the pharmacy staff member accepted dilTIAZem as the correct drug in the pharmacy order entry system. The second occurred when the nurse identified the discrepancy between the ADC display and the MAR but trusted the ADC display over the handwritten entry on the computer-generated MAR.

In recent analyses of health-related studies on automation bias and complacency, clinicians overrode their own correct decisions in favor of erroneous advice from technology 6% to 11% of the time,3 and the risk of an incorrect decision increased by 26% when the technology output was in error.5 The rate of detecting technology failures is also low: in one study, half of all users failed to detect any of the technology failures introduced during the course of a typical work day (eg, an important alert that did not fire, or presentation of the wrong information or recommendation).2,6

Causes of Automation Bias and Complacency

Automation bias and complacency are thought to result from 3 basic human factors2,3:

In human decision-making, people have a tendency to select the pathway that requires the least cognitive effort, which often results in letting technology dictate the path. This factor is likely to play a greater role as people are faced with more complex tasks, multitasking, heavier workloads, or increasing time pressures—common phenomena in health care.

People often believe that the analytic capability of technology is superior to that of humans, which may lead to overestimating the performance of these technologies.

People may reduce their effort or shed responsibility in carrying out a task when an automated system is also performing the same function. It has been suggested that the use of technology convinces the human mind to hand over tasks and associated responsibilities to the automated system.7,8 This mental handover can reduce the vigilance that the person would demonstrate if carrying out the particular task independently.

Other conditions linked to automation bias and complacency are discussed below.

Experience. There is conflicting evidence as to the effect of experience on automation bias and complacency. Although there is evidence that reliance on technology decreases as clinicians' experience and confidence in their decisions increase, it has also been shown that increased familiarity with technology can lead to desensitization, which may cause clinicians to doubt their instincts and accept inaccurate technology-derived information.3 Thus, automation bias and complacency have been found in both naïve and expert users.2

Perceived reliability and trust in the technology. While once believed to be a general tendency to trust all technology, automation bias and complacency are now believed to be influenced by the perceived reliability of a specific technology based on the user's prior experiences with the system.2 When automation is perceived to be reliable at least 70% of the time, people are less likely to question its accuracy.9

Confidence in decisions. Just as trust in technology increases automation bias and complacency, confidence in one's own decisions reduces them: users who are confident in their own decisions are less likely to be biased.3,10,11

The use of technology is considered a high-leverage strategy to optimize clinical decision making, but only if the user's trust in the technology closely matches the reliability of the technology itself. Therefore, strategies to address errors related to automation bias and complacency focus on improving the reliability of the technology and helping clinicians assess that reliability more accurately, so that appropriate monitoring and verification strategies can be employed.

Analyze and address vulnerabilities. Conduct a proactive risk assessment (eg, failure mode and effects analysis [FMEA]) for new technologies to identify unanticipated vulnerabilities and address them before undertaking facility-wide implementation. Also encourage reporting of technology-associated risks, issues, and errors.

Limit human-computer interfaces. Organizations should continue working to enable all technologies to communicate with one another seamlessly, thereby limiting the need for manual human input, which can introduce errors.

Design the technology to reduce over-reliance. The design of technology can affect users' attention and how they regard its value and reliability. For example, an "auto-complete" function that lists drug names after the first few letters are entered has often led users to select the first, but incorrect, choice offered by the technology. Requiring at least 4 letters to be entered before a list of potential drug names is generated could reduce these types of errors. To cite another example, studies have found that providing too much on-screen detail can decrease the user's attention and care, thereby increasing automation bias.3
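The minimum-letter recommendation can be illustrated with a small sketch of a type-ahead drug search. It assumes a simple case-insensitive prefix match over a hypothetical list that mixes brand and generic names, as the pharmacy system in the incident did; the function name `suggest` and the `min_letters` parameter are illustrative and do not describe any real order entry product.

```python
# Hypothetical drug-name list mixing brand and generic names, as in the incident.
DRUG_NAMES = ["Dilantin", "dilTIAZem", "phenytoin"]


def suggest(query: str, names: list[str], min_letters: int = 4) -> list[str]:
    """Return case-insensitive prefix matches, but only after the user has
    typed at least `min_letters` characters (illustrative sketch only)."""
    q = query.strip().lower()
    if len(q) < min_letters:
        return []  # too few letters: show no list, so the user keeps typing
    return [name for name in names if name.lower().startswith(q)]


print(suggest("dil", DRUG_NAMES, min_letters=3))  # 3-letter search, as in the incident
print(suggest("dil", DRUG_NAMES))                 # 4-letter minimum: no list yet
print(suggest("dila", DRUG_NAMES))
print(suggest("dilt", DRUG_NAMES))
```

With a 3-letter threshold, "dil" returns both Dilantin and dilTIAZem side by side, which is the situation that allowed the wrong selection. With a 4-letter threshold, "dila" and "dilt" each narrow the list to a single name. A longer prefix reduces, but does not eliminate, look-alike matches, so the user must still confirm the selection.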

Provide training. Provide training about the technology used in the medication-use system to all staff who use it. Include information about the limitations of the technology, as well as previously identified gaps and opportunities for error. Allow trainees to experience automation failures during training (eg, failure of the technology to issue an important alert; discrepancies between technology entries and handwritten entries in which the handwritten entries are correct; "auto-fill" or "auto-correct" errors; incorrect calculation of body surface area because the patient's weight was entered in pounds instead of kilograms). Experiencing technology failures during training encourages critical thinking when automated systems are used, which can help reduce errors due to complacency and automation bias,3 and may increase the likelihood that trainees will recognize these failures during daily work.
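A weight entered in pounds where kilograms are expected makes a useful training scenario because the resulting error is large but not absurd. The sketch below assumes the Mosteller formula for body surface area (BSA), which the article does not specify, and a hypothetical patient who is 170 cm tall and weighs 70 kg.

```python
import math

LB_PER_KG = 2.20462  # pounds per kilogram


def bsa_mosteller(height_cm: float, weight_kg: float) -> float:
    """Body surface area in m^2 by the Mosteller formula:
    sqrt(height_cm * weight_kg / 3600)."""
    return math.sqrt(height_cm * weight_kg / 3600)


# Hypothetical patient used only for illustration.
height_cm = 170.0
weight_kg = 70.0
weight_lb = weight_kg * LB_PER_KG  # about 154 lb

correct = bsa_mosteller(height_cm, weight_kg)
# Input error: the weight in pounds is typed into a field that expects kilograms.
wrong = bsa_mosteller(height_cm, weight_lb)

print(f"correct BSA: {correct:.2f} m^2")
print(f"BSA with pounds entered as kg: {wrong:.2f} m^2")
print(f"overestimate factor: {wrong / correct:.2f}")
```

For this patient the correct BSA is about 1.82 m² and the erroneous value is about 2.70 m². Because BSA scales with the square root of weight, the error inflates the result by a factor of √2.2, or roughly 1.48, regardless of the patient's size. Any BSA-based dose would be overestimated by about 48%, a plausible-looking number that a trainee should learn to question.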

Reduce task distraction. Although easier said than done, leaders should try to ensure that people using technology can do so without interruption and are not simultaneously responsible for other tasks. Automation failures are less likely to be identified if the user is required to multitask or is otherwise distracted or rushed.2

Technology plays an important role in the design and improvement of medication systems; however, it must be viewed as a supplement to clinical judgment. Even though its use can make many aspects of the medication-use system safer, health care professionals must continue to apply their clinical knowledge and critical thinking skills when using and monitoring technology to provide optimal patient care.

ISMP thanks ISMP Canada for its generous contribution to the content for this article.

IT'S EXELAN, NOT EXELON

It's rare for the name of a drug company to be so close to the name of a drug that it leads to a medication error. But that's exactly what happened in the following case, in which the mix-up nearly resulted in an elderly patient receiving the wrong medication.

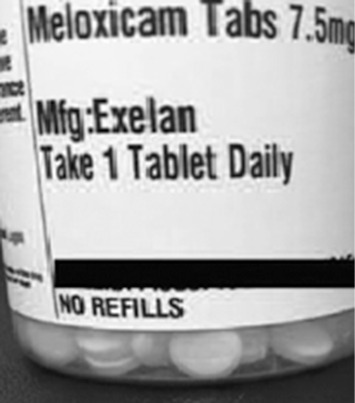

After a patient was admitted to the hospital, a family member brought in a prescription bottle that contained meloxicam, a nonsteroidal anti-inflammatory agent that the patient had been taking at home. (See Figure 1 for a photo of the prescription container label.) While developing an active medication list for the patient's physician to reconcile, a nurse inadvertently copied down the manufacturer's name, Exelan, thinking it was a brand name for meloxicam. The physician then ordered Exelon, a brand name for rivastigmine tartrate, along with the meloxicam strength and frequency: "Exelon 7.5 mg one tablet daily."

Figure 1.

Meloxicam prescription container label.

Rivastigmine tartrate is a cholinesterase inhibitor indicated for patients with mild to moderate dementia of the Alzheimer's type or mild to moderate dementia associated with Parkinson's disease. Exelon is not available as a tablet, but it is available as a patch and as capsules. When used orally, the starting dose is just 1.5 mg twice a day, with dose titration after that dose has been tolerated for 2 to 4 weeks, then further titration, again after 2 to 4 weeks, up to a maximum of 12 mg daily as tolerated. Among the capsule strengths available are 1.5 mg, 3 mg, 4.5 mg, and 6 mg, so a 7.5 mg oral dose could be ordered and dispensed as 2 capsules (6 mg and 1.5 mg, or 3 mg and 4.5 mg). Fortunately, when the order reached the pharmacy, a pharmacist noticed the unusual Exelon dose and recognized the error.
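The capsule arithmetic above explains why the 7.5 mg order did not look impossible: the listed strengths can be combined to reach it. The sketch below simply enumerates 2-capsule combinations of the strengths named in the text; it is an illustration of that arithmetic, not a dispensing rule, and the function name is hypothetical. Strengths are stored in tenths of a milligram so the sums are exact.

```python
from itertools import combinations_with_replacement

# Capsule strengths named in the text, in tenths of a milligram
# (1.5 mg -> 15) so that the arithmetic stays exact.
CAPSULE_STRENGTHS_TENTHS_MG = [15, 30, 45, 60]


def two_capsule_combinations(dose_mg: float) -> list[tuple[float, float]]:
    """Return every pair of capsule strengths (in mg) that sums to dose_mg."""
    target = round(dose_mg * 10)
    return [
        (a / 10, b / 10)
        for a, b in combinations_with_replacement(CAPSULE_STRENGTHS_TENTHS_MG, 2)
        if a + b == target
    ]


print(two_capsule_combinations(7.5))  # [(1.5, 6.0), (3.0, 4.5)]
```

Because the strengths alone do not rule out the erroneous dose, it took a pharmacist's recognition that the dose was unusual for rivastigmine to catch the mix-up.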

Perhaps the pharmacy label would have been less prone to error if the manufacturer's name had been listed farther away from the drug name. We made this recommendation to the mail order pharmacy, whose label displayed the manufacturer's name above the directions for use. This type of error also demonstrates the importance of including the drug indication in prescription communications. We also notified Novartis, Exelan, and the US Food and Drug Administration (FDA). Exelon, a Novartis product, initially received FDA approval in 2000. Exelan, the company, was incorporated in 2010. Neither ISMP nor FDA has any similar reports of mix-ups between Exelan and Exelon in its database.

CRASH CART DRUG MIX-UP

During a neonatal code, a physician asked for EPINEPHrine, but a nurse inadvertently prepared a prefilled emergency syringe of infant 4.2% sodium bicarbonate injection. Three doses of the wrong medication were given. The error was discovered after the code, when the empty packages were recognized as incorrect. The neonate's outcome is unknown at this time.

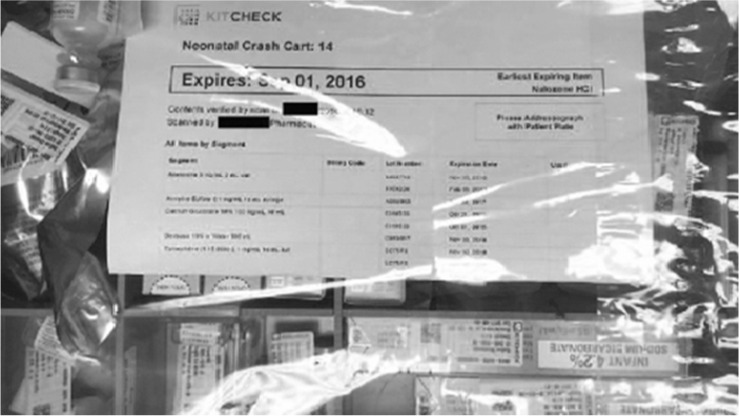

The sodium bicarbonate carton label was clearly not properly confirmed, but part of the problem may have been the way the crash cart trays were prepared: a packing slip placed inside the tray covered the EPINEPHrine carton labels (see Figure 2). Also, the sodium bicarbonate syringe labels may have been upside down from the nurse's point of view. Doses are so small during a neonatal code that more than one dose of medication might come from the same syringe, which can compound a selection error. The report we received did not specify whether this was the case or whether different prefilled syringes were used.

Figure 2.

Packing slip inside the crash cart tray that covered the EPINEPHrine carton labels.

Holding mock codes would be helpful in identifying potential problems like this. Nurses, pharmacists, and others would also become more familiar with available items in code carts, how they are stored, what they look like, and so on. During an actual code, any packing slips should be immediately removed from trays so they do not interfere with content visibility. Items in trays must be properly oriented for recognition during the code. It is also helpful for the person preparing the drugs during the code to be different from the person administering them. That gives an opportunity for the preparer to say, “Here's the EPINEPHrine 1 mg,” and then hand it off to have the person administering the medication read the label to confirm (eg, “I have in my hand EPINEPHrine 1 mg.”). It only takes a few seconds to confirm that the correct drug is in hand. Including pharmacists on code teams to help prepare the necessary medications is also an important error-reduction strategy.

RISK WITH ENTERING A “TEST ORDER”

Submitting a contrived prescription to a patient's pharmacy to determine insurance coverage has led to close calls or actual dispensing of the medication to the patient. In one reported case, when a patient contacted a clinic for a warfarin refill, the nurse noticed that both warfarin and apixaban were on the patient's medication list. Review of the notes in the electronic health record indicated that a “test script” had been sent to the pharmacy to determine the copay for apixaban. Apparently that prescription was dispensed, and the patient took both medications for about 3 days before the error was discovered. No harm occurred.

In another case, a pharmacy received an order for rivaroxaban for a patient with an active warfarin order. When the prescribing resident was notified about the therapeutic duplication, he stated that he only meant to see whether the patient's prescription would be covered by insurance.

Some prescribers may not realize that pharmacies should not check this information by processing "test" orders (doing so violates their contracts with vendors), or that the practice is dangerous because the patient may actually receive the medication. Instead, the patient, prescriber, or a hospital-assigned individual should call the insurance company or pharmacy benefits manager (PBM) to inquire about coverage.

REFERENCES

1. Mahoney CD, Berard-Collins CM, Coleman R, Amaral JF, Cotter CM. Effects of an integrated clinical information system on medication safety in a multi-hospital setting. Am J Health Syst Pharm. 2007;64(18):1969–1977.

2. Parasuraman R, Manzey DH. Complacency and bias in human use of automation: An attentional integration. Hum Factors. 2010;52(3):381–410.

3. Goddard K, Roudsari A, Wyatt JC. Automation bias: A systematic review of frequency, effect mediators, and mitigators. J Am Med Inform Assoc. 2012;19(1):121–127.

4. ISMP Canada. Understanding human over-reliance on technology. ISMP Canada Safety Bulletin. 2016;16(5):1–4.

5. Goddard K, Roudsari A, Wyatt JC. Automation bias: Empirical results assessing influencing factors. Int J Med Inform. 2014;83(5):368–375.

6. Parasuraman R, Molloy R, Singh IL. Performance consequences of automation-induced "complacency." Int J Aviat Psychol. 1993;3(1):1–23.

7. Coiera E. Technology, cognition and error. BMJ Qual Saf. 2015;24(7):417–422.

8. Mosier KL, Skitka LJ. Human decision makers and automated decision aids: Made for each other? In: Parasuraman R, Mouloua M, eds. Automation and Human Performance: Theory and Applications. Mahwah, NJ: Lawrence Erlbaum Associates; 1996:201–220.

9. Campbell EM, Sittig DF, Guappone KP, Dykstra RH, Ash JS. Overdependence on technology: An unintended adverse consequence of computerized provider order entry. AMIA Annu Symp Proc. 2007:94–98.

10. Lee JD, Moray N. Trust, control strategies and allocation of function in human-machine systems. Ergonomics. 1992;35(10):1243–1270.

11. Yeh M, Wickens CD. Display signaling in augmented reality: Effects of cue reliability and image realism on attention allocation and trust calibration. Hum Factors. 2001;43(3):355–365.