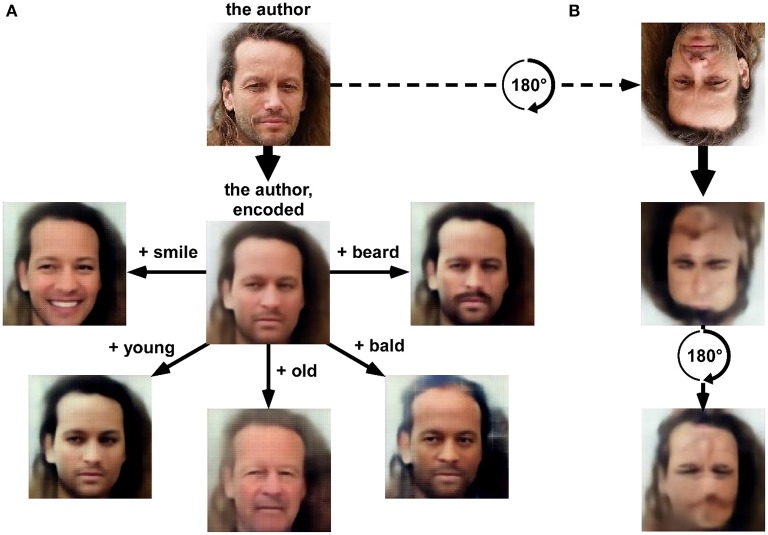

Figure 1.

A “variational auto-encoder” (VAE) deep network (13 layers) was trained using an unsupervised “generative adversarial network” procedure (VAE/GAN, Goodfellow et al., 2014; Larsen et al., 2015) on a labeled database of 202,599 celebrity faces (15 epochs). The latent space (1024-dimensional) of the resulting network provides a description of numerous facial features that could approximate face representations in the human brain. (A) A picture of the author as seen (i.e., “encoded”) by the network is rendered (i.e., “decoded”) in the center of the panel. After encoding, the latent representation can be manipulated with simple linear algebra. For example, adding a “beard vector” (obtained by subtracting the average latent description of 1000 faces having a “no-beard” label from the average latent description of 1000 faces having a “beard” label) before decoding creates a realistic image of the author with a beard. The same operation can be done (clockwise, from right) by adding average vectors reflecting the labels “bald,” “old,” “young,” or “smile.” In short, the network manipulates concepts, which it can extract from and render back into pixel-based representations. It is tempting to envision that the 1024 “hidden neurons” forming this latent space could display a pattern of stimulus selectivity comparable to that observed in certain human face-selective regions (Kanwisher et al., 1997; Tsao et al., 2006; Freiwald et al., 2009; Freiwald and Tsao, 2010). (B) Since the network (much like the human brain) was trained solely with upright faces, it inappropriately encodes an upside-down face, partly erasing important facial features (the mouth) and “hallucinating” nonexistent features (a faint nose and mouth in the forehead region). This illustrates how human-like perceptual behavior (here, the face inversion effect) can emerge from computational principles. The database used for training this network is accessible from mmlab.ie.cuhk.edu.hk/projects/CelebA.html (Liu et al., 2015).
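The latent-space arithmetic described in (A) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the network or code used for the figure: the function names `attribute_vector` and `add_attribute` are hypothetical, the trained encoder and decoder are not included, and the latent codes below are synthetic random vectors standing in for encoded faces.

```python
import numpy as np

LATENT_DIM = 1024  # latent dimensionality stated in the caption


def attribute_vector(latents, has_attribute, n_per_group=1000):
    """Mean latent code of labeled faces minus mean latent code of unlabeled faces.

    latents: array of shape (n_faces, LATENT_DIM), one encoded face per row.
    has_attribute: boolean array of shape (n_faces,), e.g. a "beard" label.
    n_per_group: at most this many faces are averaged in each group.
    """
    latents = np.asarray(latents, dtype=float)
    has_attribute = np.asarray(has_attribute, dtype=bool)
    with_attr = latents[has_attribute][:n_per_group]
    without_attr = latents[~has_attribute][:n_per_group]
    return with_attr.mean(axis=0) - without_attr.mean(axis=0)


def add_attribute(z, direction, strength=1.0):
    """Shift a single latent code along an attribute direction before decoding."""
    return z + strength * direction


# Synthetic stand-in for encoded faces (assumption: real codes would come
# from the trained encoder, which is not part of this sketch).
rng = np.random.default_rng(0)
labels = rng.random(4000) < 0.5
latents = rng.normal(size=(4000, LATENT_DIM))
latents[labels, 0] += 3.0  # plant an artificial "beard" signal on dimension 0

beard = attribute_vector(latents, labels)
z_author = rng.normal(size=LATENT_DIM)      # placeholder for the encoded picture
z_bearded = add_attribute(z_author, beard)  # this code would then be decoded
```

With a real model, `z_author` would be the encoder output for the input picture and `z_bearded` would be passed to the decoder. The `strength` parameter is an added convenience for scaling the effect; the caption describes plain addition, which corresponds to `strength=1.0`.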