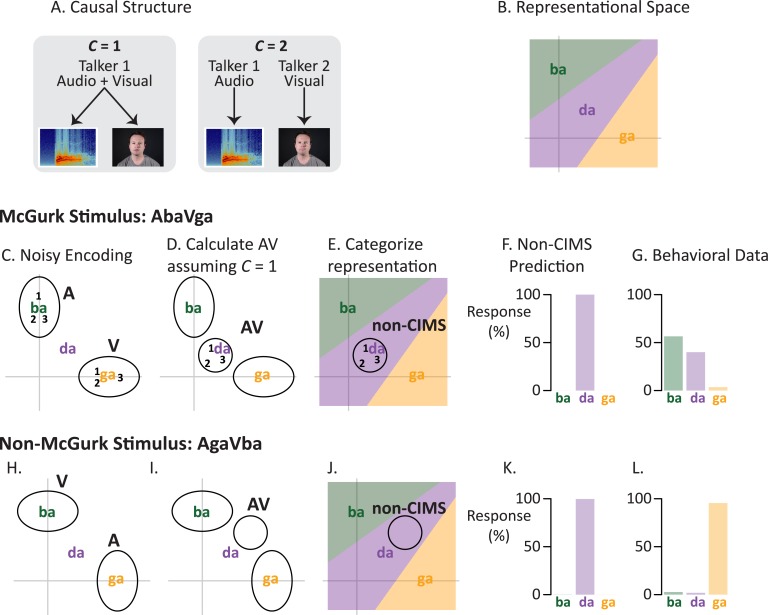

Fig 1. Modeling of multisensory speech perception without causal inference.

(A) There are two possible causal structures for a given audiovisual speech stimulus. If there is a common cause (C = 1), a single talker generates both the auditory and the visual speech. Alternatively, if there is no common cause (C = 2), two separate talkers generate the auditory and visual speech. (B) We generate multisensory representations in a two-dimensional representational space. The prototypes of the syllables “ba,” “da,” and “ga” (location of text labels) are mapped into the representational space with locations determined by pairwise confusability. The x-axis represents auditory features; the y-axis represents visual features. (C) Encoding the auditory “ba” + visual “ga” (AbaVga) McGurk stimulus. The unisensory components of the stimulus are encoded with noise that is independent across modalities. On three trials in which an identical AbaVga stimulus is presented (labeled 1, 2, 3), the encoded representations of the auditory and visual components differ because of sensory noise, although they remain centered on their prototypes (gray ellipses show the 95% probability region across all presentations). The shape of each ellipse reflects the reliability of its modality: for auditory “ba” (ellipse labeled A), the short axis lies along the auditory x-axis; for visual “ga” (ellipse labeled V), the short axis lies along the visual y-axis. (D) On each trial, the unisensory representations are integrated using Bayes’ rule to produce an integrated representation that lies between the unisensory components in representational space. Numbers show the locations of the integrated representations for the three trials in C. Because of reliability weighting, the integrated representations are closer to “ga” along the visual y-axis but closer to “ba” along the auditory x-axis (ellipse shows the 95% probability region across all presentations). (E) Without causal inference (non-CIMS), the integrated AV representation is the final representation.
On most trials, the representation lies in the “da” region of representational space (numbers and 95% probability ellipse from D). (F) A linear decision rule is applied, resulting in a model prediction of exclusively “da” percepts across trials. (G) Behavioral data from 60 subjects reporting their percept of auditory “ba” + visual “ga”. Across trials, subjects reported the “ba” percept on 57% of trials and “da” on 40% of trials. (H) Encoding the auditory “ga” + visual “ba” (AgaVba) incongruent non-McGurk stimulus. The unisensory components are encoded with modality-specific noise; the auditory “ga” ellipse has its short axis along the auditory x-axis, and the visual “ba” ellipse has its short axis along the visual y-axis. (I) Across many trials, the integrated representation (AV) is closer to “ga” along the auditory x-axis but closer to “ba” along the visual y-axis. (J) Over many trials, the integrated representation is found most often in the “da” region of representational space. (K) Across trials, the non-CIMS model predicts “da” for the non-McGurk stimulus. (L) Behavioral data from 60 subjects reporting their percept of AgaVba. Subjects reported “ga” on 96% of trials.
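The non-CIMS pipeline in panels C to F and H to K can be sketched in a few lines of Python. The pipeline encodes each modality with axis-aligned noise, fuses the two cues with Bayes' rule, and then applies a linear decision rule. This is a minimal illustration under stated assumptions, not the authors' implementation.

- The prototype coordinates in `PROTOTYPES`, the noise levels `AUDITORY_SD` and `VISUAL_SD`, and all helper names are hypothetical.
- The linear decision rule is assumed to be a nearest-prototype rule, whose category boundaries are straight lines.
- For Gaussian cues with diagonal covariances, Bayes' rule reduces to averaging each axis weighted by its precision (1/variance). The `integrate` function does exactly this.

```python
import random
from collections import Counter

# Hypothetical prototype locations in a 2-D representational space
# (x = auditory features, y = visual features). Illustrative values only,
# not the values used in the paper.
PROTOTYPES = {"ba": (0.0, 0.0), "da": (1.0, 1.0), "ga": (2.0, 2.0)}

# Hypothetical encoding noise (standard deviation per axis). Each modality is
# reliable along its own axis: auditory noise is small on x, visual noise is
# small on y, giving the elongated ellipses described in C and H.
AUDITORY_SD = (0.2, 1.0)
VISUAL_SD = (1.0, 0.2)


def encode(prototype, sd, rng):
    """Sample a noisy unisensory representation centered on a prototype."""
    return tuple(rng.gauss(mu, s) for mu, s in zip(prototype, sd))


def integrate(x_a, sd_a, x_v, sd_v):
    """Bayes-optimal (reliability-weighted) fusion of two Gaussian cues.

    With axis-aligned (diagonal) covariances, each axis is fused
    independently, weighting each cue by its precision = 1 / variance.
    """
    fused = []
    for xa, sa, xv, sv in zip(x_a, sd_a, x_v, sd_v):
        pa, pv = 1.0 / sa**2, 1.0 / sv**2
        fused.append((pa * xa + pv * xv) / (pa + pv))
    return tuple(fused)


def classify(point):
    """Nearest-prototype rule (assumed); its category boundaries are lines."""
    def sq_dist(syllable):
        return sum((p - q) ** 2 for p, q in zip(point, PROTOTYPES[syllable]))
    return min(PROTOTYPES, key=sq_dist)


def simulate(auditory, visual, n_trials=10_000, seed=1):
    """Proportion of each percept predicted by the non-CIMS model."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_trials):
        x_a = encode(PROTOTYPES[auditory], AUDITORY_SD, rng)
        x_v = encode(PROTOTYPES[visual], VISUAL_SD, rng)
        av = integrate(x_a, AUDITORY_SD, x_v, VISUAL_SD)
        counts[classify(av)] += 1  # AV is the final representation (no causal inference)
    return {s: counts[s] / n_trials for s in PROTOTYPES}


if __name__ == "__main__":
    print("AbaVga:", simulate("ba", "ga"))  # McGurk stimulus
    print("AgaVba:", simulate("ga", "ba"))  # non-McGurk stimulus
```

With these illustrative settings, both the McGurk (AbaVga) and the non-McGurk (AgaVba) stimuli are classified as “da” on nearly every trial. This mirrors the model predictions in F and K, not the behavioral data in G and L.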