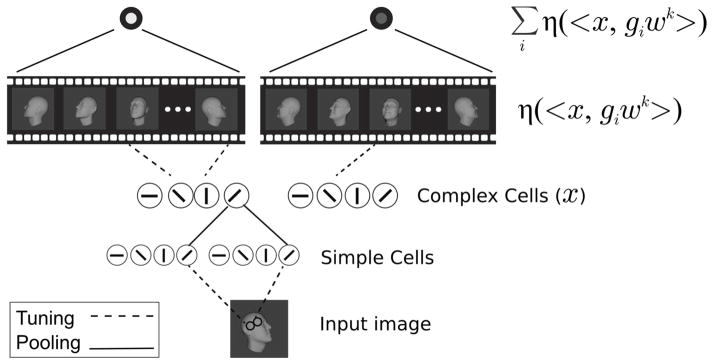

Figure 2. Illustration of the model.

Inputs are first encoded by a V1-like model. Its first layer (simple cells) corresponds to the S1 layer of the HMAX model, and its second layer (complex cells) corresponds to the C1 layer of HMAX [14]. In the view-based model, the V1-like encoding $x$ is then projected onto stored frames $g_i w_k$, where $i$ indexes orientation and $k = 1, \dots, K$ indexes the videos of transforming template faces. Finally, the last layer is computed as $\mu_k = \sum_i \eta(\langle x, g_i w_k \rangle)$. That is, the $k$th element of the output is the sum of the responses of all cells tuned to views of the $k$th template face. In the PCA model, the V1-like encoding is instead projected onto templates describing the $i$th PC of the $k$th template face's transformation video. The pooling in the final layer is then over all the PCs derived from the same identity; that is, $\mu_k$ is computed as in the view-based model, with the PC templates taking the place of the stored frames $g_i w_k$. In both the view-based and PCA models, each unit in the output layer pools over all the units in the previous layer that correspond to projections onto the same template individual's views (view-based model) or PCs (PCA model).
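As a rough illustration of the pooling step described in this caption, the following NumPy sketch computes one output per template face by summing nonlinear responses to that face's templates. Everything named here is an assumption made for illustration: the functions `pooled_signature` and `pca_templates`, the array layout `(K, I, D)`, squaring as the nonlinearity η (the caption does not specify it), and mean-centering before the SVD. It is not the authors' implementation, and the V1-like (S1/C1) encoding is assumed to have been computed already.

```python
import numpy as np


def pooled_signature(x, templates, eta=np.square):
    """Sum eta(<x, template>) over the templates of each face.

    x         : encoded input, shape (D,)
    templates : stored frames or PCs, shape (K, I, D), i.e. K template
                faces with I templates each (layout is an assumption)
    eta       : pointwise nonlinearity; squaring is a placeholder choice
    Returns an array of shape (K,), one pooled value per template face.
    """
    responses = eta(templates @ x)  # dot products, shape (K, I)
    return responses.sum(axis=1)    # pool over i for each face k


def pca_templates(frames, n_components):
    """Replace each face's frames by its leading principal components.

    frames : shape (K, I, D). Mean-centering each video before the SVD
             is an assumption of this sketch.
    Returns an array of shape (K, n_components, D).
    """
    pcs = []
    for video in frames:
        centered = video - video.mean(axis=0)
        # Rows of vt are the principal directions, strongest first.
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        pcs.append(vt[:n_components])
    return np.stack(pcs)


# View-based pooling on a tiny hand-made example (K=2, I=2, D=2).
x = np.array([1.0, 0.0])
views = np.array([[[1.0, 0.0], [0.0, 1.0]],
                  [[2.0, 0.0], [3.0, 0.0]]])
mu_view = pooled_signature(x, views)  # mu_view[0] = 1, mu_view[1] = 13

# PCA variant: same pooling, applied to PC templates instead of frames.
rng = np.random.default_rng(0)
videos = rng.normal(size=(2, 5, 4))
mu_pca = pooled_signature(rng.normal(size=4), pca_templates(videos, 3))
```

The two variants share the same pooling function and differ only in which templates are passed in, which mirrors the caption's description of the view-based and PCA models.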