Abstract

Introduction

Dissemination and implementation research training has great potential to improve the impact and reach of health-related research; however, research training needs from the end user perspective are unknown. This paper identifies and prioritizes dissemination and implementation research training needs.

Methods

A diverse sample of researchers, practitioners, and policymakers was invited to participate in Concept Mapping in 2014–2015. Phase 1 (Brainstorming) gathered participants' responses to the prompt: To improve the impact of research evidence in practice and policy settings, a skill in which researchers need more training is… The resulting statement list was edited and included in subsequent phases. Phase 2 (Sorting) asked participants to sort each statement into conceptual piles. In Phase 3 (Rating), participants rated the difficulty and importance of incorporating each statement into a training curriculum. A multidisciplinary team synthesized and interpreted the results in 2015–2016.

Results

During Brainstorming, 60 researchers and 60 practitioners/policymakers contributed 274 unique statements. Twenty-nine researchers and 16 practitioners completed sorting and rating. Nine concept clusters were identified: Communicating Research Findings, Improve Practice Partnerships, Make Research More Relevant, Strengthen Communication Skills, Develop Research Methods and Measures, Consider and Enhance Fit, Build Capacity for Research, Ensure Research is Meaningful, and Understand Multilevel Context. Although researchers and practitioners showed high agreement about importance (r=0.93) and difficulty (r=0.80), ratings differed for several clusters (e.g., Build Capacity for Research).

Conclusions

Including researcher and practitioner perspectives in competency development for dissemination and implementation research identifies skills and capacities needed to conduct and communicate contextualized, meaningful, and relevant research.

Introduction

Significant funds and effort are dedicated to intervention testing with the aim of preventing disease and improving public health. Often, health promotion and disease prevention efforts include community members as important public health partners. Unfortunately, the products of such research are not always applied to practice and policy, and therefore do not go on to impact health at the population level.1–3 Dissemination and implementation (D&I) science represents an important avenue for public health progress by enhancing the application of evidence-based interventions. Owing to the prominence of D&I research as a core function of Prevention Research Centers,4 these centers are uniquely positioned to conduct cutting-edge D&I research. The aim of D&I science is to understand how to systematically bring evidence-based policies and programs into real-world practice to promote health and prevent disease.5,6

There remains somewhat limited capacity to conduct D&I research.5 To fill this gap, training programs for researchers interested in D&I science are necessary. Training in D&I research has great potential to improve the impact and reach of the products of health-related research. Several training programs exist to build capacity for D&I research, such as the Implementation Research Institute,7 KT Canada Summer Institute on Knowledge Translation,8 Training Institute for Dissemination and Implementation Research in Health,9 Prevention and Control of Cancer Post-Doctoral Training in Implementation Science,10 Mentored Training for Dissemination and Implementation Research in Cancer (MT-DIRC),11 and University of California San Francisco's Certificate program in Implementation Science.12 Efforts have begun to develop a set of competencies to inform the curricula for these programs.8,11 However, end-user perspectives on research training needs are necessary to make training efforts more relevant to the needs of practitioners.13–15

Over the past 15 years, new perspectives have emerged on how to infuse more research into practice, including suggestions that incorporating practitioners into research evaluation (so-called “practice-based evidence”) yields research that may be more relevant to practitioners than research conducted in a purely controlled setting.14 Ideally, D&I efforts should combine evidence-based practice (i.e., prioritizing implementation of interventions shown to be effective and consistent with community preferences)13,15 with practice-based evidence (i.e., evidence developed in the real world rather than under highly controlled research conditions).14 This combination is particularly important in D&I research, in which practitioners are often key stakeholders.16 However, D&I research training programs are often developed with limited practitioner input, which can leave key gaps in competencies.

The objectives of this paper are threefold: (1) to identify ideas for improving D&I research training from the perspectives of both practitioners and researchers; (2) to use a graphical tool to allow participants to organize the ideas into concept clusters; and (3) to compare the idea clusters identified with existing D&I research competencies.

Methods

Concept Mapping was used in 2014–2015 to identify the training needs of investigators interested in D&I research. This mixed methods approach engages stakeholders in organizing ideas.17,18 Concept Mapping uses a multistage process to generate and organize ideas, in which related concepts are clustered visually and statistically.17 For the current study, both stages of data collection (Phase 1 and Phases 2 and 3) were conducted using Concept Systems Global Max.

Concept maps have been used as an evaluative tool and as an aid in program planning.17,18 Concept mapping, also known as structured conceptualization, produces visual representations of the collective thinking of a larger group.17 Concept maps are particularly useful for understanding training needs, because the method uses multivariate statistics to build maps that integrate the perspectives of diverse stakeholders and visually display a composite of respondents' input. The resulting maps can provide a structure for planning and program development, such as curriculum development.17

Concept Mapping is appropriate for evaluating gaps in current training curricula and for helping to set priorities for future curricula that address these gaps.17,18 The current research team has previously used concept maps as a research agenda-setting tool; their ease of use makes them well suited to engaging a geographically diverse audience.19,20 Others have used concept maps to outline a training curriculum.21

Phase 1

Phase 1 (Brainstorming) gathered statements. Three groups of participants were invited to contribute to Phase 1: practitioners, researchers, and policymakers. To recruit practitioners, a list of e-mail addresses was compiled from a variety of Listservs, covering public health practitioners who had previously collaborated on research projects, directors of practice-based research networks, and practitioners affiliated with the National Association of Chronic Disease Directors. In total, 294 e-mails were sent to practitioners. The list of policymakers was generated from a random sample of 20 U.S. state legislatures, from which legislators serving on a health-related committee were identified. An additional list was generated from the city council members of a random sample of 10 U.S. cities. In total, 596 policymakers were identified and e-mailed invitations to submit statements. Policymakers were oversampled because previous studies have found low response rates in this group.22 Finally, researchers were identified through the Listservs of previous D&I trainings and of D&I research networks. A total of 238 researchers were invited to participate. This study was approved by the Human Research Protection Office at Washington University in St. Louis.

Study participants were asked to respond to the focus prompt: To improve the impact of research evidence in practice and policy settings, a skill in which researchers need more training is… The list of statements contributed in Phase 1 was edited for clarity and redundancy to minimize the burden of participants in Phases 2 and 3 and to maximize the usefulness of the results.

Phases 2 and 3

The recruitment lists used in Phase 1 were used to identify participants for Phases 2 and 3. The software system limited the number of participants in these phases to 100; thus, participants were asked to reply to an initial e-mail inviting them to Phases 2 and 3. The team then created log-on information for the Concept Systems software for each responding participant.

Phase 2 (Sorting) asked participants to sort each statement into conceptual piles based on their themes or meanings. In Phase 3 (Rating), participants rated each statement based on their perception of the difficulty (On a scale of 1 to 5, with 1 being “very difficult” to 5 being “very easy,” how difficult would it be to incorporate this skill into a training curriculum for researchers?) and importance (On a scale of 1 to 5, with 1 being “not at all important” and 5 being “extremely important,” how important do you feel each skill is for a researcher to master?) of incorporating the statement into a training curriculum. A complete list of the statements included in Phases 2 and 3 is included in Appendix Table 1.
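To make the Sorting output concrete, the sketch below shows one way each participant's piles could be aggregated into a total similarity matrix of the kind used in the Statistical Analysis. It is a minimal illustration, not the Concept Systems implementation: the function name `total_similarity`, the 0-based statement indices, and the toy sorts are hypothetical, and the sketch assumes that each participant places every statement in exactly one pile.

```python
from itertools import combinations


def total_similarity(sorts, n_statements):
    """Count, for every pair of statements, how many participants put them in the same pile.

    sorts: one list of piles per participant; each pile is a list of 0-based statement indices.
    Assumes every participant places each statement in exactly one pile, so the diagonal
    equals the number of participants.
    """
    sim = [[0] * n_statements for _ in range(n_statements)]
    for piles in sorts:
        for pile in piles:
            for i in pile:
                sim[i][i] += 1
            for i, j in combinations(pile, 2):  # every unordered pair within the pile
                sim[i][j] += 1
                sim[j][i] += 1
    return sim


# Hypothetical sorts: 3 participants, 4 statements.
toy_sorts = [
    [[0, 1], [2, 3]],
    [[0, 1, 2], [3]],
    [[0], [1], [2, 3]],
]
similarity = total_similarity(toy_sorts, 4)
for row in similarity:
    print(row)
```

In this toy case, statements 0 and 1 were placed together by two of the three participants, so cell [0][1] equals 2; the matrix is symmetric by construction.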

Statistical Analysis

Multidimensional scaling and hierarchical cluster analyses were used to develop a cluster map depicting how participants' sorting organized the statements.23 Multidimensional scaling uses the square total similarity matrix (the number of participants who sorted each pair of statements into the same pile) to create a point map, generating coordinates in two dimensions for each statement. Hierarchical cluster analysis then groups the statements on the point map into clusters that reflect similar concepts.18 Solutions with different numbers of clusters were explored until the clusters were neither too broad nor too narrow. The range of issues covered by the statements and each statement's bridging value (a score calculated by Concept Systems that indicates how frequently an item was grouped with other items) were also considered in establishing the final set of clusters. Finally, the authors moved several statements out of the clusters to which they were originally assigned to improve fit. Model fit was assessed using the stress value, a measure of goodness of fit between the point map and the total similarity matrix. Higher stress values indicate a poorer representation of the data by the map, so lower values are preferred. Across a pooled sample of 69 concept maps, the average stress value was 0.28 (SD=0.04; range, 0.17–0.34).17,18
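The analysis steps described above (similarity matrix, point map, stress value, clusters) can be sketched in Python with NumPy. This is an assumption-laden illustration rather than the authors' procedure: it substitutes classical (Torgerson) multidimensional scaling for the nonmetric scaling typically used in concept mapping, computes a metric variant of Kruskal's stress-1 that compares map distances with the dissimilarities directly (rather than with fitted disparities), and uses a naive agglomerative procedure with Ward's merge criterion. Converting similarity to dissimilarity as the number of sorters minus the co-sort count is also an assumption, and all function names and data are hypothetical.

```python
import numpy as np


def classical_mds(dissim, k=2):
    """Classical (Torgerson) MDS: place items in k dimensions from a dissimilarity matrix."""
    d2 = np.asarray(dissim, dtype=float) ** 2
    n = d2.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ d2 @ centering          # double-centred squared distances
    vals, vecs = np.linalg.eigh(b)                  # eigenvalues in ascending order
    top = np.argsort(vals)[::-1][:k]                # indices of the k largest
    return vecs[:, top] * np.sqrt(np.clip(vals[top], 0.0, None))


def stress(dissim, coords):
    """Metric Kruskal stress-1 between input dissimilarities and map distances."""
    dissim = np.asarray(dissim, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    iu = np.triu_indices_from(dissim, k=1)          # each pair counted once
    return float(np.sqrt(((dissim[iu] - dist[iu]) ** 2).sum() / (dist[iu] ** 2).sum()))


def ward_clusters(coords, n_clusters):
    """Naive agglomerative clustering: repeatedly merge the pair with the smallest Ward cost."""
    clusters = [[i] for i in range(len(coords))]
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                na, nb = len(clusters[a]), len(clusters[b])
                gap = coords[clusters[a]].mean(axis=0) - coords[clusters[b]].mean(axis=0)
                cost = na * nb / (na + nb) * float(gap @ gap)  # increase in within-cluster SS
                if best is None or cost < best[0]:
                    best = (cost, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]                             # b > a, so index a stays valid
    return clusters


# Hypothetical total similarity matrix: 3 sorters, 4 statements.
n_sorters = 3
similarity = np.array([[3, 2, 1, 0],
                       [2, 3, 1, 0],
                       [1, 1, 3, 2],
                       [0, 0, 2, 3]])
dissimilarity = n_sorters - similarity             # 0 on the diagonal
points = classical_mds(dissimilarity, k=2)
map_stress = stress(dissimilarity, points)
groups = ward_clusters(points, n_clusters=2)
```

In this toy matrix, statements 0 and 1 and statements 2 and 3 were usually sorted together, so the two-cluster solution separates those pairs. As in the study, the number of clusters is chosen by the analyst rather than determined by the code.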

The difficulty and importance ratings for each cluster in the final set were determined by averaging the ratings of the statements in that cluster; these averages were calculated for the total sample and separately for researchers and practitioners. Cluster ratings for the total sample were compared using descriptive statistics. Correlations were used to compare researchers' and practitioners' difficulty and importance ratings across clusters. Individual statements were plotted by difficulty and importance, and those rated easiest and most important were considered to be in the “Go Zone.” A multidisciplinary team synthesized and interpreted the results in 2015–2016.
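The cluster-level averaging and the Go Zone classification described above can be sketched as follows. The exact rules are assumptions here: a cluster's rating is taken as the mean of its statements' mean ratings (computed once per group of raters), and the Go Zone cutoffs are the means of each axis, a common convention for Go Zone plots. The function names and toy ratings are hypothetical. Because 1 means “very difficult” and 5 means “very easy” on this study's difficulty scale, a higher difficulty score indicates an easier skill.

```python
from statistics import mean


def cluster_means(ratings, membership):
    """Average statement-level ratings within each cluster for one group of raters.

    ratings: {statement_id: [ratings from the raters in one group]}
    membership: {statement_id: cluster_name}
    Each statement's mean is taken first; those means are then averaged per cluster.
    """
    per_cluster = {}
    for sid, values in ratings.items():
        per_cluster.setdefault(membership[sid], []).append(mean(values))
    return {name: mean(vals) for name, vals in per_cluster.items()}


def go_zone(ease, importance):
    """Return statements above the mean on both ease and importance (assumed cutoffs).

    ease uses the study's difficulty scale, where 1 = very difficult and 5 = very easy.
    """
    ease_cut = mean(ease.values())
    imp_cut = mean(importance.values())
    return sorted(s for s in ease if ease[s] > ease_cut and importance[s] > imp_cut)


# Hypothetical toy data (not from the study).
toy_ratings = {"s1": [4, 5], "s2": [3, 3], "s3": [2, 4]}
toy_membership = {"s1": "A", "s2": "A", "s3": "B"}
toy_ease = {"s1": 4.2, "s2": 2.5, "s3": 3.9, "s4": 2.8}
toy_importance = {"s1": 4.4, "s2": 4.5, "s3": 3.1, "s4": 3.0}

means_by_cluster = cluster_means(toy_ratings, toy_membership)
go = go_zone(toy_ease, toy_importance)
```

Running `cluster_means` separately on researchers' and practitioners' ratings gives the per-group cluster means that the correlations compare.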

Results

During Brainstorming (Phase 1), 125 participants (Table 1) contributed 274 unique statements. An equal number of researchers (n=60) and practitioners/policymakers (n=60) contributed statements; five respondents did not select a category. Twenty-nine researchers and 16 practitioners completed the Sorting (Phase 2) and Rating (Phase 3) phases; 45 rated Question 1 and 43 rated Question 2 (Table 1). Only one policymaker participated in the Sorting/Rating phases, and was thus excluded from subsequent analyses.

Table 1. Participant Characteristics.

| Characteristics | Phase 1, n (%)ᵃ | Phases 2 and 3, n (%)ᵃ |
|---|---|---|
| Researcher, practitioner, policymaker | | |
| Researcher | 60 (50) | 29 (64) |
| Practitioner | 39 (33) | 16 (36) |
| Policymaker | 21 (18) | 0 (0) |
| Work setting | | |
| Healthcare facility (e.g., hospital, clinic, medical health center) | 20 (17) | 8 (18) |
| Research institution (e.g., university, research consulting) | 38 (32) | 16 (36) |
| Federal agency (e.g., NIH, CDC, HHS) | 2 (2) | 3 (7) |
| Local government | 2 (2) | 0 (0) |
| State government | 18 (15) | 2 (4) |
| Local health department | 14 (12) | 8 (18) |
| State health department | 12 (10) | 1 (2) |
| Community-based organization | 6 (5) | 4 (9) |
| Voluntary health organization (e.g., American Cancer Society) | 2 (2) | 0 (0) |
| Other | 6 (5) | 3 (7) |
| Highest degree attained | | |
| Associate's | 2 (2) | 1 (2) |
| Bachelor's | 9 (8) | 3 (7) |
| Master's | 40 (34) | 8 (18) |
| Medical doctorate (MD, DO) | 26 (22) | 9 (20) |
| Doctor of Philosophy (PhD) | 39 (33) | 22 (49) |
| Other doctorate (DrPH, EdD, PsyD, JD, DMD, PharmD, etc.) | 3 (3) | 2 (2) |
| Years working in current field | | |
| Range | 1–55 | 1–35 |
| Median | 10 | 10 |
| Average | 13 | 11 |

ᵃFive respondents did not provide demographic information but contributed statements.

CDC, Centers for Disease Control and Prevention; DO, Doctor of Osteopathic Medicine; DrPH, Doctor of Public Health; EdD, Doctor of Education; HHS, U.S. Department of Health and Human Services; PsyD, Doctor of Psychology; JD, Juris Doctor; DMD, Doctor of Dental Medicine; NIH, National Institutes of Health; PharmD, Doctor of Pharmacy; D&I, dissemination and implementation

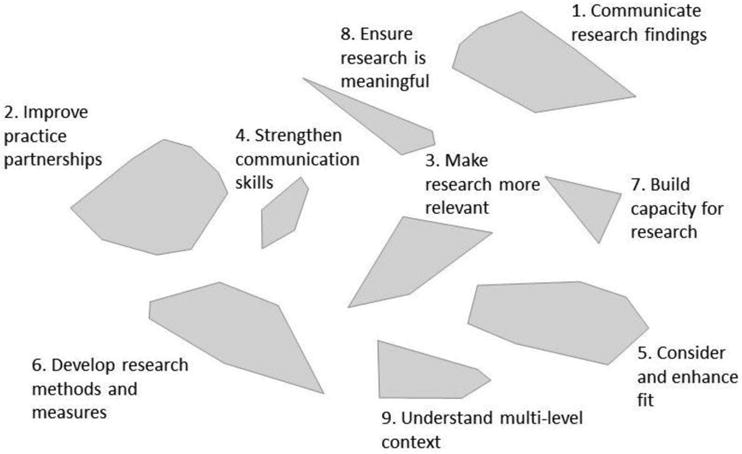

The cluster map is presented in Figure 1. Nine clusters were identified: Communicating Research Findings, Improve Practice Partnerships, Make Research More Relevant, Strengthen Communication Skills, Develop Research Methods and Measures, Consider and Enhance Fit, Build Capacity for Research, Ensure Research is Meaningful, and Understand Multilevel Context. A complete list of the statements making up each cluster is available in Appendix Table 1. The stress value for this cluster map was 0.24 after 26 iterations, which falls within the range reported for the pooled sample of 69 concept maps (0.17–0.34) and below its average of 0.28.

Figure 1.

Cluster Map: Cluster Maps spatially show how closely statements are related based on how frequently they were sorted together.

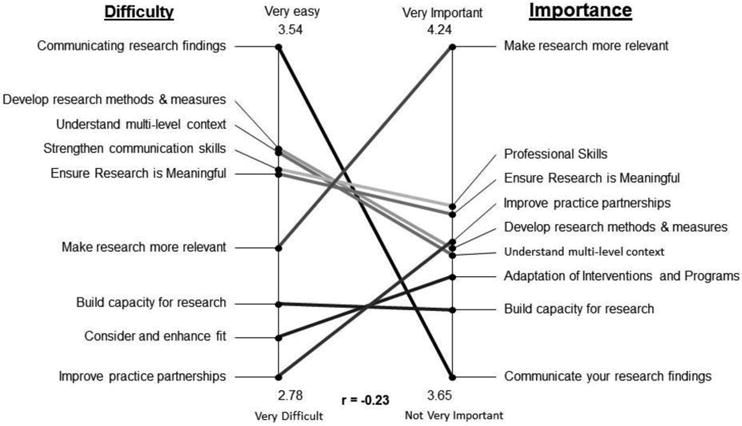

The pattern match in Figure 2 shows the difficulty and importance ratings for each cluster, as rated by the combined sample. Communicating Research Findings was rated as relatively easy to incorporate but also as relatively unimportant, whereas Make Research More Relevant was rated as somewhat more difficult but very important.

Figure 2.

Pattern Match: all researchers and practitioners.

Although researchers and practitioners showed high agreement about the importance (r=0.93) and difficulty (r=0.80) of the clusters, several clusters (e.g., Build Capacity for Research) were rated differently by the two groups. Researchers consistently rated the clusters as more important than practitioners did, though the differences were not statistically significant. On the difficulty scale, practitioners gave higher scores (i.e., rated the skills as easier to incorporate, because 5 denoted “very easy”) for six clusters (Communicating Research Findings, Improve Practice Partnerships, Make Research More Relevant, Strengthen Communication Skills, Consider and Enhance Fit, and Ensure Research is Meaningful), whereas researchers gave higher scores for the remaining three clusters; these differences were also not statistically significant.

Go Zones were used to explore statements that were ranked low for difficulty and high for importance (Appendix Figure 1). Some example Go Zone statements include: Present research results in a simple and intuitively understandable way and Conceptualizing good D&I research questions. When comparing difficulty as rated by researchers and importance as rated by practitioners, a similar pattern emerged.

Discussion

Overall, this study identified nine skills and capacities needed to conduct and communicate research that is contextualized, meaningful, and relevant (Figure 1). These could be priorities for training programs in D&I research. The inclusion of both researcher and practitioner perspectives in curriculum development for D&I training may broaden the reach, relevance, and impact of such programs. In the current study, researchers and practitioners were relatively similar in the way they ranked the importance of the statements. This may relate to the type of practitioners included in the study (i.e., those willing to participate in a research study or that are familiar with D&I research) and may also speak to the type of researchers working in D&I; perhaps these comprise a group that is more in tune with their stakeholders. Research in other fields has found greater differences between researchers and practitioners.19

Developers of training programs should consider these findings when creating curricula and developing competencies and training approaches. As an example, the clusters and statements (particularly those in the “Go Zone”) were compared with the competencies for an ongoing training program, MT-DIRC. Some of the clusters identified in the current work corresponded to very few MT-DIRC competencies. For example, only one competency mapped onto Cluster 1 (Communicating Research Findings), whose statements were dominated by ideas about how to communicate research findings. The competencies identified by Padek and colleagues11 and used to inform MT-DIRC included a section on practice-based considerations, which mapped primarily onto Cluster 2 (Improve Practice Partnerships). Many of these competencies did not capture the nuances of effective communication techniques or their importance in D&I research. Both researchers and practitioners rated statements in the Communicating Research Findings cluster as relatively easy to incorporate but of relatively low importance (Figure 2), which may explain the cluster's near absence from the previously developed set of competencies. Future research could explore why this seemingly important set of ideas was rated as being of low importance. Examining the curricula of other D&I research training programs, such as the Implementation Research Institute,7 Training Institute for Dissemination and Implementation Research in Health,9 and Prevention and Control of Cancer Post-Doctoral Training in Implementation Science,10 could show which programs best match the identified training needs. This may also allow program developers to fill gaps where one program contains competencies that others lack.

By contrast, many of the previously identified competencies aligned with statements in the clusters. The Improve Practice Partnerships cluster statements aligned with six competencies within the MT-DIRC “Practice Based Considerations” area of expertise (Appendix Table 2). The Develop Research Methods and Measures cluster contains many of the competencies outlined through previous card sort work, which are incorporated into MT-DIRC trainings (Appendix Table 2).11 Mapping these gaps can help refine and articulate the skills involved in the existing competencies. Whereas the competencies from MT-DIRC and other programs can be viewed as broader instructional goals,11 the statements identified within these clusters may serve as narrower instructional objectives.

Trainers seeking to develop D&I research training programs should aim to include competencies related to the statements identified as high priorities. These include statements rated as highly important by researchers and practitioners, with an initial focus on training needs rated as low in difficulty, such as conceptualizing research questions, presenting findings, and using frameworks. However, more-difficult competencies should also be included if they are rated as important, recognizing that they may take more time and effort to incorporate. Ensuring the inclusion of competencies rated as highly important by practitioners may bring a greater focus on creating research for the end user. One example of a competency that may be important but difficult to incorporate is Make Research More Relevant. This cluster was rated high for importance and moderate for difficulty (Figure 2). The statements in this cluster (e.g., Better identification of questions whose answers are likely to change practice and policy and Involve practitioners in research question development; Appendix Table 1 has a complete list) closely align with the concept of “designing for dissemination.”24 A designing-for-dissemination approach encourages researchers to involve dissemination partners collaboratively and early in the research process, so that issues related to external validity and D&I are incorporated in the earliest phases of intervention development.25–29 These skills may be difficult to incorporate because they are less concrete and harder to gain experience with during a training program. Evidence from a national survey of public health researchers indicates that designing for dissemination is not widely practiced in the field.24

Limitations

There are several limitations to this study. As mentioned, participants were likely those interested in research; the contact information for some invitees was obtained from lists of existing research partnerships. This may limit the generalizability of the sample, as practitioners less interested in research were not included. Additionally, although a small number of policymakers participated in the initial idea generation phase, too few participated in the Sorting and Rating phases to be included in the Phase 2 and 3 analyses. Had more policymakers been included in these later phases, the Communicating Research Findings cluster might have been rated as both more important and more difficult.30 Because concept mapping is a mixed methods approach whose initial phase is driven by qualitative research, purposive rather than random sampling was used to select participants; thus, the participants may not be representative of researchers, practitioners, and policymakers. Further, the sample size was driven by data saturation rather than by a target sample size. Reducing the 274 statements initially submitted to a list of 93 made the sorting and rating phases more manageable but may have eliminated some of the nuance between similar statements, so some specificity may have been lost. It is also not possible to know exactly what researchers and practitioners were thinking when they rated importance and difficulty, or whether their interpretations were similar within and between groups of respondents. Finally, although this study identified topics in which researchers and practitioners report that D&I researchers need additional training, it does not provide detail about how best to develop curricula.12

Conclusions

By applying a systematic, structured brainstorming process such as Concept Mapping, competencies for D&I training can be developed that are relevant to both researchers and practitioners. This exercise identified competencies rated high for importance and low for difficulty, suggesting ready opportunities for inclusion in training; examples include conceptualizing research questions, presenting findings, and using frameworks. Other competencies, such as Make Research More Relevant, were rated as important but somewhat difficult; these remain important but may take more effort and resources to address.

Supplementary Material

Table 2. Mean Difficulty and Importance Ratings for Each Cluster by Researchers and Practitioners.

| Cluster | Difficulty of incorporating skills: Researchers | Difficulty of incorporating skills: Practitioners | Importance of skill mastery: Researchers | Importance of skill mastery: Practitioners |
|---|---|---|---|---|
| Raters | 30 of 46 | 16 of 46 | 29 of 45 | 16 of 45 |
| Communicating research findings (15 statements), mean | 3.52 | 3.60 | 3.70 | 3.55 |
| Improve practice partnerships (16 statements), mean | 2.71 | 2.92 | 3.91 | 3.85 |
| Make research more relevant (8 statements), mean | 2.99 | 3.25 | 4.28 | 4.18 |
| Strengthen communication skills (4 statements), mean | 3.21 | 3.36 | 3.96 | 3.94 |
| Consider and enhance fit (10 statements), mean | 2.82 | 2.98 | 3.90 | 3.70 |
| Develop research methods and measures (21 statements), mean | 3.36 | 3.21 | 3.91 | 3.82 |
| Build capacity for research (4 statements), mean | 3.02 | 2.83 | 3.85 | 3.62 |
| Ensure research is meaningful (7 statements), mean | 3.19 | 3.35 | 3.96 | 3.91 |
| Understand multi-level context (8 statements), mean | 3.31 | 3.28 | 3.88 | 3.85 |

Difficulty was rated from 1 (“very difficult”) to 5 (“very easy”), so higher difficulty means indicate skills judged easier to incorporate; importance was rated from 1 (“not at all important”) to 5 (“extremely important”).

Acknowledgments

The authors are grateful to Ms. Nageen Mir and Drs. Karen Emmons, Matthew Kreuter, Anne Sales, and Chris Pfund, who contributed to this project.

Support for this work came from the National Cancer Institute at NIH, through the project entitled Mentored Training for Dissemination and Implementation Research in Cancer Program (5R25CA171994-02). Additional support came from the National Cancer Institute at NIH (5R01CA160327); the National Institute of Diabetes and Digestive and Kidney Diseases (Grant Number 1P30DK092950); and the Washington University Institute of Clinical and Translational Sciences grants UL1 TR000448 and KL2 TR000450 from the National Center for Advancing Translational Sciences.

Dr. Stange's time is supported in part by a Clinical Research Professorship from the American Cancer Society, as a Scholar of The Institute for Integrative Health, and through the Clinical and Translational Science Collaborative of Cleveland, UL1TR000439 from the National Center for Advancing Translational Sciences component of NIH and NIH roadmap for Medical Research.

Footnotes

No other financial disclosures were reported by the authors of this paper.

The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of NIH.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. Balas E, Boren S. Managing clinical knowledge for health care improvement. In: Bemmel J, McCray A, editors. Yearbook of Medical Informatics 2000: Patient-Centered Systems. Stuttgart, Germany: Schattauer Verlagsgesellschaft mbH; 2000. pp. 65–70.

2. Green LW, Ottoson J, Garcia C, Robert H. Diffusion theory and knowledge dissemination, utilization, and integration in public health. Annu Rev Public Health. 2009;30:151–174. doi: 10.1146/annurev.publhealth.031308.100049.

3. Woolf SH, Johnson RE. The break-even point: when medical advances are less important than improving the fidelity with which they are delivered. Ann Fam Med. 2005;3(6):545–552. doi: 10.1370/afm.406.

4. National Center for Chronic Disease Prevention and Health Promotion (NCCDPHP), editor. CDC. RFA-DP-14-001: Health Promotion and Disease Prevention Research Centers. 2013.

5. Brownson R, Colditz G, Proctor EK, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. Oxford; New York: Oxford University Press; 2012.

6. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1(1):1. doi: 10.1186/1748-5908-1-1.

7. Proctor EK, Landsverk J, Baumann AA, et al. The implementation research institute: training mental health implementation researchers in the United States. Implement Sci. 2013;8:105. doi: 10.1186/1748-5908-8-105.

8. Straus SE, Brouwers M, Johnson D, et al. Core competencies in the science and practice of knowledge translation: description of a Canadian strategic training initiative. Implement Sci. 2011;6:127. doi: 10.1186/1748-5908-6-127.

9. Meissner HI, Glasgow RE, Vinson CA, et al. The U.S. training institute for dissemination and implementation research in health. Implement Sci. 2013;8:12. doi: 10.1186/1748-5908-8-12.

10. Prevention and Control of Cancer: Post-Doctoral Training in Implementation Science (PRACCTIS). www.umassmed.edu/pracctis. Accessed February 15, 2016.

11. Padek M, Colditz G, Dobbins M, et al. Developing educational competencies for dissemination and implementation research training programs: an exploratory analysis using card sorts. Implement Sci. 2015;10:114. doi: 10.1186/s13012-015-0304-3.

12. Gonzales R, Handley MA, Ackerman S, O'Sullivan PS. A framework for training health professionals in implementation and dissemination science. Acad Med. 2012;87(3):271–278. doi: 10.1097/ACM.0b013e3182449d33.

13. Brownson RC, Baker EA, Leet TL, Gillespie KN, True WR. Evidence-Based Public Health. 2nd ed. New York: Oxford University Press; 2010. doi: 10.1093/acprof:oso/9780195397895.001.0001.

14. Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;96(3):406–409. doi: 10.2105/AJPH.2005.066035.

15. Kohatsu ND, Robinson JG, Torner JC. Evidence-based public health: an evolving concept. Am J Prev Med. 2004;27(5):417–421. doi: 10.1016/j.amepre.2004.07.019.

16. Brownson RC, Colditz GA, Dobbins M, et al. Concocting that Magic Elixir: Successful Grant Application Writing in Dissemination and Implementation Research. Clin Transl Sci. 2015;8(6):710–716. doi: 10.1111/cts.12356.

17. Rosas SR, Kane M. Quality and rigor of the concept mapping methodology: a pooled study analysis. Eval Program Plann. 2012;35(2):236–245. doi: 10.1016/j.evalprogplan.2011.10.003.

18. Kane M, Trochim WM. Concept mapping for planning and evaluation. Thousand Oaks, CA: Sage; 2007. doi: 10.4135/9781412983730.

19. Brownson R, Kelly C, Eyler A, et al. Environmental and policy approaches for promoting physical activity in the United States: A research agenda. J Phys Act Health. 2008;5(4):488–503. doi: 10.1123/jpah.5.4.488.

20. Proctor E, Luke D, Calhoun A, et al. Sustainability of evidence-based healthcare: research agenda, methodological advances, and infrastructure support. Implement Sci. 2015;10:88. doi: 10.1186/s13012-015-0274-5.

21. Chastonay P, Papart J, Laporte J, et al. Use of concept mapping to define learning objectives in a master of public health program. Teach Learn Med. 1999;11(1):21–25. doi: 10.1207/S15328015TLM1101_6.

22. Brownson RC, Dodson EA, Stamatakis KA, et al. Communicating evidence-based information on cancer prevention to state-level policy makers. J Natl Cancer Inst. 2011;103(4):306–316. doi: 10.1093/jnci/djq529.

23. Concept Systems Incorporated. www.conceptsystems.com/software/. Accessed February 3, 2016.

24. Brownson RC, Jacobs JA, Tabak RG, Hoehner CM, Stamatakis KA. Designing for dissemination among public health researchers: findings from a national survey in the United States. Am J Public Health. 2013;103(9):1693–1699. doi: 10.2105/AJPH.2012.301165.

25. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29(1):126–153. doi: 10.1177/0163278705284445.

26. National Cancer Institute. Designing for Dissemination: Conference Summary Report. Washington, DC: National Cancer Institute; Sep 19–20, 2002.

27. Owen N, Goode A, Fjeldsoe B, Sugiyama T, Eakin E. Designing for the dissemination of environmental and policy initiatives and programs for high-risk groups. In: Brownson R, Colditz G, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp. 114–127. doi: 10.1093/acprof:oso/9780199751877.003.0006.

28. Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. 2007;28:413–433. doi: 10.1146/annurev.publhealth.28.021406.144145.

29. Klesges LM, Estabrooks PA, Dzewaltowski DA, Bull SS, Glasgow RE. Beginning with the application in mind: designing and planning health behavior change interventions to enhance dissemination. Ann Behav Med. 2005;29(Suppl):66–75. doi: 10.1207/s15324796abm2902s_10.

30. Brownson RC, Dodson EA, Kerner JF, Moreland-Russell S. Framing research for state policymakers who place a priority on cancer. Cancer Causes Control. 2016;27:1035. doi: 10.1007/s10552-016-0771-0.
