Abstract

Eyes are central to face processing; however, their role in early face encoding, as reflected by the N170 ERP component, is unclear. Using eye tracking to enforce fixation on specific facial features, we found that the N170 was larger for fixation on the eyes than for fixation on the forehead, nasion, nose or mouth, which all yielded similar amplitudes. This eye sensitivity was seen in both upright and inverted faces and was lost in eyeless faces, demonstrating that it was due to the presence of eyes at the fovea. Upright eyeless faces elicited the largest N170 at nose fixation. Importantly, the N170 face inversion effect (FIE) was strongly attenuated in eyeless faces when fixation was on the eyes, was less attenuated for nose fixation, and was normal when fixation was on the mouth. These results suggest that the impact of eye removal on the N170 FIE is a function of the angular distance between the fixated feature and the eye location. We propose the Lateral Inhibition, Face Template and Eye Detector based (LIFTED) model, which accounts for all the present N170 results, including the FIE and its interaction with eye removal. Although eyes elicit the largest N170 response, reflecting the activity of an eye detector, the processing of upright faces is holistic and entails an inhibitory mechanism from neurons coding parafoveal information onto neurons coding foveal information. The LIFTED model provides a neuronal account of the holistic and featural processing involved in upright and inverted faces and offers precise predictions for further testing.

Keywords: N170, eye-tracking, inversion, holistic, eyes, faces

1. Introduction

The eyes play an important role in face perception. Eye tracking studies suggest that, regardless of task and initial fixation position, participants tend to fixate on or close to the eye region (e.g. Arizpe et al., 2012; Barton et al., 2006; Janik et al., 1978). Eyes are also the most attended feature regardless of face familiarity (Heisz & Shore, 2008), and this preference is seen in infants (Maurer, 1985), with a clear sensitivity to gaze direction already present at birth (Batki et al., 2000). The eyes seem to be the diagnostic feature used to recognize identity, several facial expressions, and gender (Dupuis-Roy et al., 2009; Schyns et al., 2007). Better expertise in face processing seems to be driven by better information extraction from the eye region (Vinette et al., 2004), a capacity that might go awry in some cases of prosopagnosia in which the eye region is not properly attended (Caldara et al., 2005). Eyes provide essential cues to others’ attention and intention through gaze perception, putting them at the core of social cognition and its impairments, as seen in Autism Spectrum Disorder (see Itier & Batty, 2009, for a review). While electrophysiological studies also point toward a special status for eyes presented in isolation (Bentin et al., 1996; Itier et al., 2007; Itier et al., 2006), their role in the earliest stages of face encoding is unclear, and the featural versus holistic nature of early face perception has puzzled cognitive neuroscientists for nearly two decades. The present paper provides new evidence supporting a particular sensitivity to eyes even in the context of the whole face, and for their role in early face encoding stages. These findings have important implications for our understanding of face perception and how it breaks down in various disorders, as well as for visual perception more generally.
A new neuronal model is proposed that accounts for all the N170 modulations reported, for the holistic processing of upright faces and for the featural processing of inverted faces.

Scalp electrophysiological studies have identified a now well-known ERP component, the N170, the earliest reliable face-sensitive component, occurring between 130 and 200 ms over occipito-temporal sites (Bentin et al., 1996). The N170 has been proposed to reflect early perceptual face encoding stages (Eimer, 2000; Sagiv & Bentin, 2001) in which features are “glued” together into a holistic facial percept. However, the N170 is even larger for eye regions presented in isolation than for upright faces (Bentin et al., 1996; Itier et al., 2007; Itier et al., 2006), and this eye sensitivity is seen as early as 4 years of age (Taylor et al., 2001a). This featural sensitivity does not extend to other facial features, as the nose and mouth elicit delayed and smaller N170s than faces (Bentin et al., 1996; Nemrodov & Itier, 2011; Taylor et al., 2001b). However, eyeless faces elicit N170s with amplitudes similar to those for normal intact faces, albeit delayed (Eimer, 1998; Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013), and this finding has been interpreted as supporting the view that the N170 reflects a holistic processing stage rather than an eye detector.

The idea that the N170 reflects holistic face processing is also supported by the inversion manipulation, which is known to disrupt the perception and recognition of faces more than those of objects (Yin, 1969). Numerous behavioural studies have shown that objects are processed mostly in a piecemeal way while faces are perceived mostly holistically (e.g. Tanaka & Farah, 1993; Tanaka & Gordon, 2011), and that inversion disrupts this holistic processing (Rossion, 2009). In ERP studies, faces presented upside down trigger delayed and, most importantly, larger N170s compared to upright faces (Bentin et al., 1996; Itier & Taylor, 2002; Rossion et al., 1999), while upside-down objects usually elicit only delayed responses (Itier et al., 2006; Kloth et al., 2013). Like objects, animal faces and impoverished human face stimuli, such as sketches or Mooney faces, also show a delayed N170 with inversion but no increase in amplitude, and sometimes even a slight amplitude reduction (de Haan et al., 2002; Itier et al., 2006; Itier et al., 2011; Latinus & Taylor, 2005; Sagiv & Bentin, 2001; Wiese et al., 2009). The amplitude increase with inversion, also termed the N170 “face inversion effect” (FIE), is thus believed to reflect the disruption of early holistic processing stages specific to human faces and has been used as a hallmark of face specificity. At the neuronal level, this increase has been explained by the recruitment, in addition to face-sensitive neurons, of object-sensitive neurons (Itier & Taylor, 2002; Rossion et al., 1999; Sadeh & Yovel, 2010; Yovel & Kanwisher, 2005), of other face-sensitive neurons tuned to the inverted orientation (Eimer et al., 2010), or of eye-sensitive neurons (Itier et al., 2007).

Itier et al. (2007) showed that, in contrast to intact faces, inversion of eyeless faces elicited a much reduced N170 FIE, and thus proposed that eyes played an important role in this early face-specific phenomenon, an idea reinforced by the replication of this finding in later studies (Itier et al., 2011; Kloth et al., 2013; Nemrodov & Itier, 2011). A model involving eye- and face-sensitive neuronal populations (Itier et al., 2007; Itier & Batty, 2009) tried to account for the N170 FIE as well as for the larger N170 response to isolated eyes that is seen regardless of eye orientation (Itier et al., 2006; Itier et al., 2007; Itier et al., 2011). According to this model, upright intact faces would activate face-sensitive neurons, which would inhibit eye-sensitive neurons in the context of a configurally correct face, a mechanism that accounts for the lack of amplitude change with upright eyeless faces. Presenting faces upside down, however, would release this inhibition, due to the disruption of holistic processing, and the N170 FIE would then reflect the co-activation of both neuronal populations. Both neuronal populations would also respond to isolated eyes, explaining the larger N170 amplitude for eyes than for upright faces.

ERP studies usually use a centrally presented cross to control fixation position. In most face studies, this central fixation is situated close to the eyes, on the nasion or on the nose. In particular, the studies that reported a larger N170 amplitude for isolated eyes or a lack of FIE for inverted eyeless faces presented the fixation cross on the nasion area (Bentin et al., 1996; Itier et al., 2007, 2011; Kloth et al., 2013; Taylor et al., 2001b). However, recent studies have reported changes in N170 amplitude as a function of fixation location on the face. In one study, fixation on the nasion and mouth yielded larger N170 amplitudes than fixation on the nose (McPartland et al., 2010), although eye tracking was not employed to ensure that participants were indeed fixating these locations during face presentation. Another study, using a gaze-contingent paradigm with eye tracking, reported the largest N170 response for fixation on the nasion when faces were presented upright and for fixation on the mouth when faces were presented inverted (Zerouali et al., 2013). This study suggested that the encoding of a face, as indexed by the N170, arises from a general upper visual field advantage rather than from sensitivity to eyes, whether the face is upright or inverted. These results raise the concern that what was taken as evidence for a specific role of the eyes in the FIE (Itier et al., 2007) might simply reflect an artefact of gaze position rather than a true eye sensitivity.

To address this concern and to further probe the role of eyes in early face encoding, we investigated whether fixation on various facial features modulated the N170 response and the N170 FIE. Crucially, in addition to intact faces, we also tested eyeless faces. This new condition allowed us to confirm the potential sensitivity to eyes (or lack thereof) and to test the hypothesis that eyes are important in driving the N170 FIE (Itier et al., 2007). Intact and eyeless faces were presented upright and inverted, with fixation on the middle of the forehead, the nasion, the left eye, the right eye, the tip of the nose, or the mouth. To ensure the correct point of gaze, eye tracking was used with fixation-contingent stimulus presentation, and any trial in which gaze left the 1.8° interest area centred on the fixation location was excluded (Fig. 1). In addition, to prevent participants from using anticipatory strategies, the fixation cross was always presented in the centre of the screen, while faces were moved around it to obtain the desired fixation position (Fig. 1). House stimuli were used as a control category to ensure that our face stimuli elicited a proper face-sensitive N170.

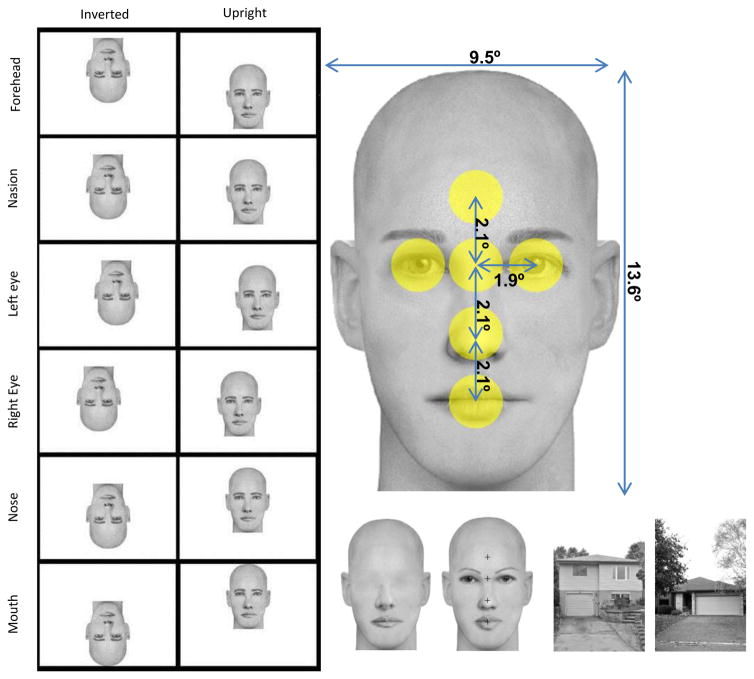

Figure 1.

Left panel: examples of one face presented at each fixation location in the inverted and upright conditions. Participants fixated the centre of the monitor (represented here by each white rectangle; images are to scale), and the face was offset so that gaze fell on one of 6 face locations: forehead, nasion, left eye, right eye, tip of the nose, and mouth. Note that eye positions are given from the viewer's perspective (i.e. the left eye is on the left of the picture). Because of this positioning, upright faces were situated almost entirely in the upper visual field when fixation was on the mouth and almost entirely in the lower visual field when fixation was on the forehead, while the opposite pattern was seen for inverted faces. Right panel, top: one face exemplar with picture size and distances between features in degrees of visual angle. The yellow circles represent the 1.8° interest areas centred on each feature that were used to reject gaze deviations in each fixation condition. Right panel, bottom: house, eyeless face and intact face examples, with fixation crosses overlaid on the intact face to indicate where fixation would occur (note that fixation crosses were never overlaid on faces in the actual experiment).

If Zerouali et al. (2013) were correct, we expected an interaction between face orientation and fixation location such that the largest N170 responses would be seen for nasion fixation with upright faces and for mouth fixation with inverted faces, reproducing the upper-lower visual field effect. This pattern should also be seen regardless of whether eyes are present or absent. Contrary to this expectation, we found that the N170 was largest when fixation was on the eyes, in both upright and inverted faces, ruling out a simple upper versus lower visual field effect and supporting a special sensitivity to eyes. Crucially, this eye sensitivity disappeared in eyeless faces, demonstrating that it was due to the presence of eyes at the fovea. In other words, the sensitivity to eyes was present over and above the classic N170 FIE. However, for eyeless faces, the inversion effect interacted with fixation location: when fixation was on the mouth, a normal inversion effect was seen, whereas the FIE was maximally reduced when fixation was around the eyes, as reported before (Itier et al., 2007). These findings suggest that eyes do play a role in the inversion effect, but only when they are at the fovea. We propose a new mechanism to explain this set of data and the N170 FIE, and we discuss the implications of these findings for understanding early face perception, in particular holistic versus featural processing.

2. Methods

2.1. Participants

Forty-one undergraduate students from the University of Waterloo (UW) were tested and received course credit for their participation. All reported normal or corrected-to-normal vision and no history of head injury or neurological disease, and none were taking any medication. All signed informed written consent, and the study was approved by the Research Ethics Board at UW. Twenty-one participants were rejected: 10 for not completing the experiment and thus having too few trials per condition, 8 for too many artefacts, also resulting in too few trials per condition, and 3 for data-transfer problems. The results from twenty-one participants were kept in the final analysis (mean age 20.0 ± 1.4 years; 7 male; 18 right-handed; 13 right-eye dominant).

2.2. Stimuli

Two categories of gray-scale face images (intact faces, eyeless faces) were presented upright and inverted at six fixation locations (forehead, nasion, left eye, right eye, nose, mouth). House stimuli were also presented in both orientations with central fixation and were used as a control object category. There were thus 26 conditions in total (6 fixation locations × 2 face categories × 2 orientations = 24 face conditions, plus houses × 2 orientations).

The faces were created using FACES™ 4.0 by IQBiometrix Inc., and eye removal was done in Adobe™ Photoshop CS5. Forty different face identities were created (20 males, 20 females) by choosing a combination of different internal features displayed at exactly the same locations within the same bald face outline. This ensured that features remained in constant relative positions in every face. It also ensured that the overall luminance across pictures was identical within a category, with only tiny local variations resulting from the use of different features (e.g. a larger mouth, smaller eyes, thinner eyebrows). A simple 180° rotation created the inverted stimuli, and all images were presented on a white background. Stimuli subtended 9.5° horizontally and 13.6° vertically of visual angle. Angular distances between fixation locations were as follows: forehead-nasion: 2.1°; nasion-nose tip: 2.1°; nose tip-mouth: 2.1°; nasion-left/right eye: 1.9°. Because faces were offset to bring the desired feature to fixation, upright and inverted faces occupied opposite positions for two fixation locations. When upright, faces were situated almost entirely in the upper visual field when fixation was on the mouth and almost entirely in the lower visual field when fixation was on the forehead. The opposite pattern was seen when faces were inverted (Fig. 1).
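Stimulus sizes above are given in degrees of visual angle; at the 70 cm viewing distance reported in the Design section, the corresponding on-screen sizes follow from size = 2·d·tan(θ/2). The sketch below is illustrative only: it assumes an extent centred on the line of sight, which is only approximately true here because faces were offset to bring a feature to fixation, and the helper names are hypothetical.

```python
import math

# Viewing distance reported in the Design section (70 cm).
VIEWING_DISTANCE_CM = 70.0


def visual_angle_to_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen extent (cm) of an object subtending angle_deg, assuming the
    object is centred on the line of sight: size = 2 * d * tan(theta / 2)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def cm_to_visual_angle(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Inverse conversion: visual angle (degrees) subtended by size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


if __name__ == "__main__":
    width_cm = visual_angle_to_cm(9.5)    # horizontal extent of the face stimuli
    height_cm = visual_angle_to_cm(13.6)  # vertical extent of the face stimuli
    spacing_cm = visual_angle_to_cm(2.1)  # e.g. nasion to nose tip
    print(f"face: {width_cm:.1f} x {height_cm:.1f} cm, feature spacing: {spacing_cm:.2f} cm")
```

Under these assumptions, a 9.5° × 13.6° face corresponds to roughly 11.6 × 16.7 cm on the screen, and 2.1° between features corresponds to roughly 2.6 cm.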

Root-mean-square (RMS) contrast and mean luminance (pixel intensity) of the pictures were calculated using custom Matlab (MathWorks, Inc.) scripts (see Table 1). Houses and intact faces were compared using independent t-tests (two-tailed, equal variances not assumed), and intact and eyeless faces were compared using paired-sample t-tests (two-tailed). Compared to house stimuli, face stimuli had greater mean luminance (t(39.2)=−11.09, p<0.001) and lower contrast (t(43.9)=44.02, p<0.001). Eyeless faces also had greater luminance (t(39)=−25.5, p<0.001) and lower contrast (t(39)=32.9, p<0.001) than intact faces, as expected given the removal of the high-contrast region that the eyes represent.
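The image statistics and the two kinds of t-tests described above can be sketched in a few lines of NumPy. This sketch is not the authors' Matlab code. In particular, it assumes that RMS contrast is the standard deviation of pixel intensities rescaled to [0, 1], which is one common definition, and the function names are hypothetical. The Welch test returns the Welch-Satterthwaite degrees of freedom, which explains the non-integer df reported for the house versus face comparisons (e.g. t(39.2)). The paired test pairs each intact face with its own eyeless version, giving df = 40 − 1 = 39.

```python
import numpy as np


def luminance_and_rms_contrast(img):
    """Mean luminance (0-255 pixel intensity) and RMS contrast of a gray-scale image.

    Assumption: RMS contrast = standard deviation of pixel intensities
    rescaled to [0, 1]; the original scripts may use another definition.
    """
    pixels = np.asarray(img, dtype=float)
    return pixels.mean(), (pixels / 255.0).std()


def welch_t(a, b):
    """Independent-samples t-test with equal variances not assumed (Welch).
    Returns t and the Welch-Satterthwaite degrees of freedom (usually non-integer)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    va, vb = a.var(ddof=1) / a.size, b.var(ddof=1) / b.size
    t = (a.mean() - b.mean()) / np.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (a.size - 1) + vb ** 2 / (b.size - 1))
    return t, df


def paired_t(a, b):
    """Paired-samples t-test, e.g. each intact face against its eyeless version."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    t = d.mean() / (d.std(ddof=1) / np.sqrt(d.size))
    return t, d.size - 1


if __name__ == "__main__":
    # Toy 8 x 8 checkerboard of black (0) and white (255) pixels.
    checker = (np.indices((8, 8)).sum(axis=0) % 2) * 255
    lum, rms = luminance_and_rms_contrast(checker)
    print(f"{lum:.1f} {rms:.2f}")
```

With the toy checkerboard, the mean luminance is 127.5 and the RMS contrast is 0.5, which is the maximum possible under this definition.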

Table 1.

Mean luminance (pixel intensity) and RMS contrast for the three categories of pictures, standard deviations in parentheses.

| Category | Mean luminance (pixel intensity) | RMS contrast |
|---|---|---|
| Houses | 171.6 (13.5) | 0.45 (0.04) |
| Intact faces | 195.3 (0.68) | 0.17 (0.01) |
| Eyeless faces | 197.7 (0.35) | 0.12 (0.01) |

2.3. Design

Participants sat 70 cm in front of a computer monitor in a dimly lit, sound-attenuated, Faraday-cage-protected booth and performed an orientation-detection task using a game controller, pressing one button for upright and another for inverted stimuli. Button order was counterbalanced across participants. Participants were asked not to move their eyes, to respond as quickly and accurately as possible, and to remain as still as possible on a chin rest. Practice trials were given prior to the actual experiment. The fixation cross was always presented centrally, and stimuli were offset so that a given feature would be fixated. The experiment was programmed with Experiment Builder (SR Research, http://sr-research.com).

A trial began with a fixation cross presented for a jittered 0–100 ms. An eye-tracking trigger then required fixation on the cross for 250 ms before the stimulus was presented for 250 ms. A white response screen was then presented for 900 ms. There were 8 blocks of 260 trials (10 pictures per condition × 26 conditions), resulting in a total of 80 trials per condition (2080 trials in total).
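The factorial design and trial counts can be checked by enumerating the conditions. In this sketch, the `make_block` helper and its shuffled trial order are hypothetical: the text gives the counts but does not describe how trials were ordered within a block.

```python
import random
from itertools import product

FACE_CATEGORIES = ["intact", "eyeless"]
ORIENTATIONS = ["upright", "inverted"]
FIXATIONS = ["forehead", "nasion", "left-eye", "right-eye", "nose", "mouth"]

# 2 face categories x 2 orientations x 6 fixation locations = 24 face conditions,
# plus houses (always fixated centrally) in 2 orientations = 26 conditions.
CONDITIONS = [(cat, ori, fix) for cat, ori, fix in product(FACE_CATEGORIES, ORIENTATIONS, FIXATIONS)]
CONDITIONS += [("house", ori, "centre") for ori in ORIENTATIONS]

N_BLOCKS = 8
TRIALS_PER_CONDITION_PER_BLOCK = 10


def make_block(rng):
    """Hypothetical block builder: every condition repeated 10 times, then shuffled.
    The shuffling is an assumption, not a documented detail of the experiment."""
    trials = [cond for cond in CONDITIONS for _ in range(TRIALS_PER_CONDITION_PER_BLOCK)]
    rng.shuffle(trials)
    return trials


if __name__ == "__main__":
    rng = random.Random(0)
    session = [make_block(rng) for _ in range(N_BLOCKS)]
    n_total = sum(len(block) for block in session)
    # conditions, trials per block, trials per condition, total trials
    print(len(CONDITIONS), len(session[0]), n_total // len(CONDITIONS), n_total)  # 26 260 80 2080
```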

2.4. Electrophysiological and Eye-tracking Recordings

The EEG was low-pass filtered at 100 Hz and recorded continuously at 516 Hz with a BioSemi ActiveTwo system from seventy-two recording sites: sixty-six channels in an electrode cap following the extended 10/20 system, plus three pairs of additional electrodes. Two pairs of electrodes, situated on the outer canthi and infra-orbital ridges, monitored horizontal and vertical eye movements; the third pair was situated over the mastoids. A Common Mode Sense (CMS) active electrode and a Driven Right Leg (DRL) passive electrode acted as ground during recording. The electrodes were average-referenced offline.

An SR Research EyeLink 1000 eye tracker sampling at 1000 Hz was used to ensure correct fixation during the entire stimulus presentation. A nine-point automatic calibration was performed on each participant's dominant eye (as determined by the Miles test) before every block. If participants failed to trigger the fixation for more than 10 seconds, a drift correction was performed. After two drift corrections, a mid-block recalibration was performed. Participants used a chin rest to help maintain a constant head position.

2.5. Data Analysis

Only correctly answered trials were used for analysis. Trials with saccades beyond the fixation area were removed from further analysis. The fixation area was defined as a circle of 1.8° of visual angle in diameter centred on the fixation cross, which ensured that the fixation areas of neighbouring features did not overlap (Fig. 1). This pre-processing step removed an average of 4.2% (±3.4 SD) of trials across the 21 participants included in the final sample.
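The fixation-area criterion can be sketched as a geometric test on gaze samples. This is a simplified illustration that checks gaze positions rather than detected saccades. It assumes that gaze samples have already been converted to centimetres on the screen, relative to the fixation cross. Eye trackers typically report screen pixels, and converting pixels to centimetres requires screen geometry that is not reported here. The function names are hypothetical. The module-level assertion checks the claim that neighbouring 1.8° circles cannot overlap: the smallest spacing between features (1.9°) exceeds the sum of two 0.9° radii.

```python
import math

VIEWING_DISTANCE_CM = 70.0
FIXATION_AREA_DIAMETER_DEG = 1.8
# Angular distances between neighbouring fixation locations (Stimuli section).
FEATURE_SPACING_DEG = {
    "forehead-nasion": 2.1,
    "nasion-nose": 2.1,
    "nose-mouth": 2.1,
    "nasion-eye": 1.9,
}

# Two circles of radius 0.9 deg cannot overlap if their centres are more than 1.8 deg apart.
assert min(FEATURE_SPACING_DEG.values()) > FIXATION_AREA_DIAMETER_DEG


def eccentricity_deg(dx_cm, dy_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Angular distance between the fixation cross (screen centre) and a gaze
    sample located dx_cm, dy_cm away from it on the screen."""
    return math.degrees(math.atan(math.hypot(dx_cm, dy_cm) / distance_cm))


def keep_trial(gaze_samples_cm, diameter_deg=FIXATION_AREA_DIAMETER_DEG):
    """True if every gaze sample recorded during stimulus presentation stayed
    inside the circular fixation area (radius = diameter / 2)."""
    radius = diameter_deg / 2.0
    return all(eccentricity_deg(dx, dy) <= radius for dx, dy in gaze_samples_cm)


if __name__ == "__main__":
    print(keep_trial([(0.0, 0.0), (0.5, 0.5)]))  # about 0.58 deg from the cross: kept
    print(keep_trial([(1.2, 0.0)]))              # about 0.98 deg from the cross: rejected
```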

All ERP data were analysed using the EEGLAB (Delorme & Makeig, 2004) and ERPLAB (http://erpinfo.org/erplab) toolboxes implemented in Matlab. ERP data were digitally band-pass filtered (0.01–30 Hz). Trials were visually inspected, and those contaminated by artefacts, including ocular movements, were rejected. After trial rejection, participants with fewer than 40 trials per condition (out of 80 initial trials) were rejected. The N170 was maximal at electrodes CB1 and CB2 and was thus measured at these sites between 120 and 220 ms post-stimulus onset using automatic peak detection. The P1 component was similarly measured at these electrodes between 50 and 150 ms and analysed to determine whether the effects reported on the N170 were face-specific.
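Window-based peak detection can be sketched as taking the most negative sample (N170, 120–220 ms) or the most positive sample (P1, 50–150 ms) within the measurement window. This is a simplified stand-in, not ERPLAB's own peak-measurement routine, which offers additional options such as local-peak criteria. The waveform in the usage example is synthetic and for illustration only. The `enough_trials` helper is a hypothetical encoding of the 40-trials-per-condition inclusion rule.

```python
import numpy as np


def peak_in_window(erp, times_ms, t_min, t_max, polarity):
    """Amplitude and latency of the most extreme sample within [t_min, t_max].

    polarity = -1 looks for a negative peak (N170), +1 for a positive peak (P1).
    """
    erp = np.asarray(erp, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)
    in_window = (times_ms >= t_min) & (times_ms <= t_max)
    segment, seg_times = erp[in_window], times_ms[in_window]
    idx = np.argmin(segment) if polarity < 0 else np.argmax(segment)
    return segment[idx], seg_times[idx]


def enough_trials(trial_counts, minimum=40):
    """Inclusion rule: at least `minimum` artefact-free trials in every condition."""
    return all(n >= minimum for n in trial_counts.values())


if __name__ == "__main__":
    fs = 516.0                                  # sampling rate reported above (Hz)
    t = np.arange(-100.0, 500.0, 1000.0 / fs)   # epoch time axis in ms
    # Synthetic waveform (illustration only): P1 near 100 ms, N170 near 160 ms.
    erp = 3 * np.exp(-((t - 100) / 15) ** 2 / 2) - 5 * np.exp(-((t - 160) / 20) ** 2 / 2)
    n170_amp, n170_lat = peak_in_window(erp, t, 120, 220, polarity=-1)
    p1_amp, p1_lat = peak_in_window(erp, t, 50, 150, polarity=+1)
    print(f"N170 {n170_amp:.2f} uV at {n170_lat:.1f} ms; P1 {p1_amp:.2f} uV at {p1_lat:.1f} ms")
```

Because the time axis is discrete, latencies are only resolved to one sample (about 1.9 ms at 516 Hz).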

Accuracy, Reaction Times (RTs), P1 and N170 amplitudes and latencies were all analysed separately using repeated measures ANOVAs. In a first step, houses (which were only fixated centrally) were compared with the intact face category when fixation was on the nasion (approximate center of the face). For this analysis, within subject factors were category (2: house, face), orientation (2: upright, inverted) and additionally for ERP components, hemisphere (2: left, right). Please note that the data for the P1 component are provided as supplementary material.

The main analyses were focused on the comparison between intact and eyeless faces using the following within-subject factors: face category (2: intact, eyeless), orientation (2: upright, inverted), fixation (6: forehead, nasion, left-eye, right-eye, nose, mouth) and hemisphere (2: left, right) for the ERP analyses. In all ANOVAs Greenhouse-Geisser adjusted degrees of freedom were used when necessary and pairwise comparisons were Bonferroni corrected. Data were analysed using SPSS Statistics 21.
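For a within-subject factor with more than two levels, such as the six fixation locations, the Greenhouse-Geisser epsilon measures departure from sphericity. The uncorrected degrees of freedom, e.g. F(5,100), are multiplied by epsilon before the p value is computed. The sketch below computes epsilon from the subjects × levels data using orthonormal Helmert contrasts, and applies a simple Bonferroni adjustment (p multiplied by the number of comparisons, capped at 1). It is a minimal illustration under these assumptions, not SPSS's implementation. The function names and the random toy data are hypothetical.

```python
import numpy as np


def greenhouse_geisser_epsilon(data):
    """Greenhouse-Geisser epsilon for one within-subject factor.

    data: array of shape (n_subjects, k_levels), e.g. N170 amplitude for the six
    fixation locations. The result lies between 1/(k-1) and 1.
    """
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    S = np.cov(data, rowvar=False)  # k x k covariance of the levels across subjects
    # Orthonormal Helmert contrasts (k x (k-1)), each orthogonal to the grand mean.
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    V = C.T @ S @ C
    return np.trace(V) ** 2 / ((k - 1) * np.trace(V @ V))


def bonferroni(p_values):
    """Bonferroni-adjusted p values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    amplitudes = rng.normal(size=(21, 6))  # toy data: 21 subjects x 6 fixations
    eps = greenhouse_geisser_epsilon(amplitudes)
    # Both degrees of freedom of F(5, 100) are scaled by epsilon.
    print(f"epsilon = {eps:.3f}; corrected df = ({5 * eps:.2f}, {100 * eps:.2f})")
```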

3. Results

3.1. Intact Faces versus Houses

3.1.1. Behavioural results

Accuracy on this orientation-detection task was high overall. Participants responded more accurately to faces than to houses (93.8% and 91.5%, respectively; effect of category, F(1,20)=5.73, p=0.027, ηp²=0.223). The category by orientation interaction (F(1,20)=12.4, p<0.005) was due to an inversion effect for faces, with higher accuracy for upright than inverted faces (95% vs. 92%, F=10.5, p<0.005), while no inversion effect was found for houses (F=1.8, p=0.195).

Responses were faster to faces than to houses (513 ms vs. 568 ms; effect of category, F(1,20)=93.15, p<0.001, ηp²=0.823), and the category by orientation interaction (F(1,20)=39.1, p<0.001, ηp²=0.662) was due to the classic inversion effect for faces (F(1,20)=13.6, p<0.001), with longer RTs for inverted than upright faces (527 ms vs. 500 ms), while no orientation effect was seen for houses (F=3.2, p=0.09).

3.1.2. N170 latency and amplitude

The N170 peaked earlier for face than for house stimuli (effect of category, F(1,20)=26.4, p<0.001, ηp²=0.57; faces: 150±2 ms, houses: 169±5 ms). Latency was delayed by inversion (effect of orientation, F(1,20)=5.4, p<0.05, ηp²=0.21), but for houses this inversion effect was seen only in the left hemisphere (hemisphere by orientation by category interaction, F(1,20)=5.4, p<0.05, ηp²=0.21).

As expected, the N170 was much larger for faces than for houses (main effect of category, F(1,20)=62.5, p<0.001, ηp²=0.84), as seen in Fig. 2. The N170 was also larger for inverted than upright stimuli (main effect of orientation, F(1,20)=107.9, p<0.001, ηp²=0.84), and this inversion effect was larger for faces than for houses (category by orientation interaction, F(1,20)=8.3, p<0.01, ηp²=0.29). The hemisphere by orientation (F(1,20)=8, p=0.01, ηp²=0.29), hemisphere by category (F(1,20)=7.4, p=0.013, ηp²=0.27) and hemisphere by orientation by category (F(1,20)=6.7, p=0.017, ηp²=0.25) interactions were all significant. These interactions reflected the lack of a hemisphere effect for houses analysed separately (F(1,20)=0.15, p=0.7), whereas intact faces yielded a larger N170 over the right than over the left hemisphere (effect of hemisphere, F(1,20)=4.4, p<0.05), an effect that was more pronounced for inverted than for upright faces (hemisphere by orientation, F(1,20)=15.3, p=0.001).
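The partial eta squared values reported alongside each F statistic can be recovered from the F value and its degrees of freedom, using ηp² = F·df_effect / (F·df_effect + df_error). This identity follows from F = (SS_effect/df_effect)/(SS_error/df_error) and ηp² = SS_effect/(SS_effect + SS_error). The helper below is a hypothetical convenience for checking reported values, not part of the original analysis pipeline.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from a reported F statistic:
    eta_p^2 = F * df_effect / (F * df_effect + df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


if __name__ == "__main__":
    print(round(partial_eta_squared(5.73, 1, 20), 3))   # category effect on accuracy: 0.223
    print(round(partial_eta_squared(12.7, 5, 100), 3))  # category x fixation, upright faces: 0.388
```

Greenhouse-Geisser correction scales both degrees of freedom by the same epsilon, so it leaves this ratio unchanged.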

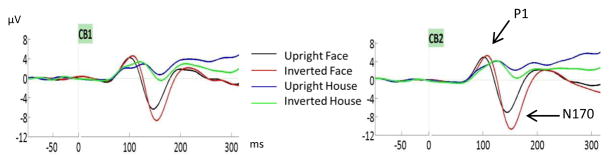

Figure 2.

P1 and N170 ERP components for intact faces at nasion fixation and for houses (fixated in the center) presented upright and inverted. Components were measured at CB1 and CB2 electrodes. Note the much larger N170 response to faces and the larger inversion effect for faces than houses.

3.2. Intact versus eyeless faces

3.2.1. Behavioural results

Accuracy was slightly impaired by inversion (main effect of orientation, F(1,20)=4.8, p<.05, ηp²=0.19) and by eye removal (main effect of stimulus category, F(1,20)=13.7, p<.001, ηp²=0.41). The orientation by category interaction (F(1,20)=10.14, p=0.005, ηp²=0.336) was due to more accurate responses for upright than for inverted intact faces (94.7% vs. 91.8%, respectively, p<0.001), while no inversion effect was seen for eyeless faces (F=0.26, p=0.616). No main effect of, or interaction with, fixation was found.

In the RT analysis, an interaction between orientation and stimulus category was also found (F(1,20)=13.56, p<0.001, ηp²=0.404). Post-hoc comparisons revealed an inversion effect for intact faces (F(1,20)=14.7, p<0.001), with faster reaction times for upright than for inverted intact faces (504 ms and 525 ms, respectively), while no inversion effect was found for eyeless faces (F=1.4, p=0.253).

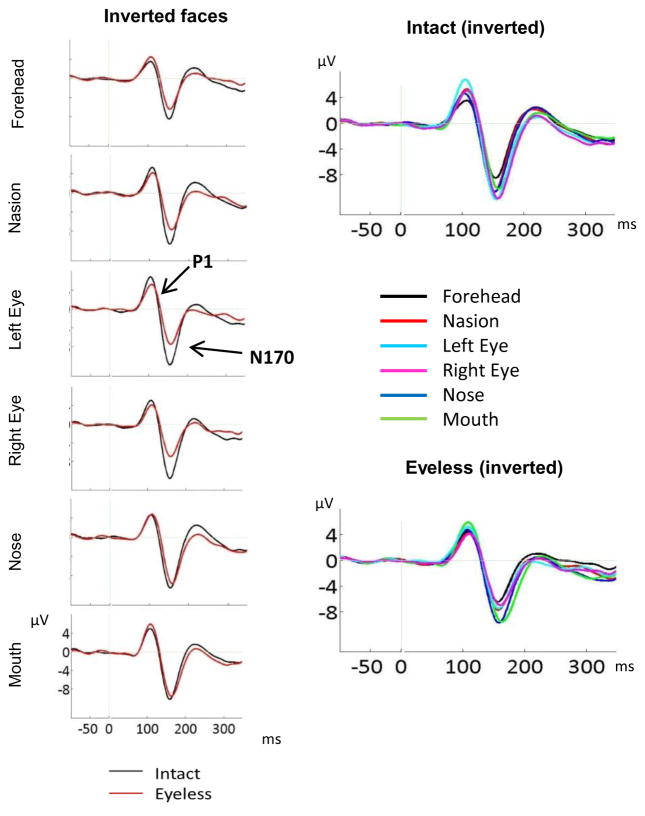

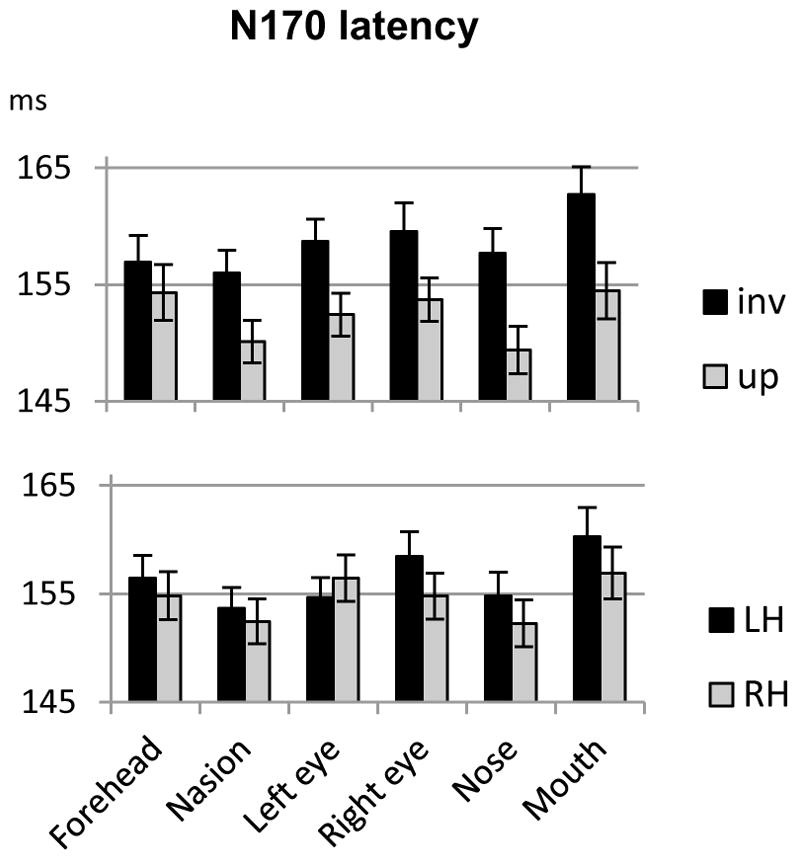

3.2.2. N170 Latency

A main effect of stimulus category (F(1,20)=47.13, p<0.001, ηp²=0.702) was due to longer latencies for eyeless than for intact faces (158 vs. 153 ms, respectively). The main effect of fixation (F(5,100)=15.6, p<0.001, ηp²=0.44) was due to shorter latencies for nose and nasion fixations than for both eye and mouth fixations (p<0.01 for all comparisons). N170 latency was also delayed by inversion (main effect of orientation, F(1,20)=59.8, p<0.001, ηp²=0.75); however, this inversion effect varied with fixation location, as reflected by an orientation by fixation interaction (F(5,100)=3.5, p<.05, ηp²=0.15). The inversion effect was significant at each fixation location analysed separately (p<0.001 for each), except at forehead fixation (Fig. 3).

Figure 3.

Mean N170 latency recorded at each fixation location for upright and inverted faces (averaged across hemispheres and face types; upper panel) and for the left and right hemispheres (LH and RH, respectively, averaged across face types and orientations; lower panel). Error bars represent standard errors of the mean.

The hemisphere by fixation interaction (F(5,100)=8.5, p<0.001, ηp²=0.29) was also significant. When each fixation was analysed separately, only fixation on the right eye yielded a significant effect of hemisphere (F(1,20)=5.2, p<0.05), due to a shorter latency over the right than over the left hemisphere. As for the P1 (see supplementary data), when fixation was on the right eye, the rest of the face was situated in the left visual hemifield, which projects to the right hemisphere, thus yielding a shorter latency over the right hemisphere. Although non-significant, the pattern was opposite for left eye fixation, with a shorter latency over the left than over the right hemisphere (Fig. 3).

3.2.3. N170 Amplitude

Apart from a non-significant main effect of hemisphere, all other main effects and interactions were significant in the omnibus ANOVA, in particular the category by orientation by fixation interaction (F(5,100)=4.04, p=0.006, ηp²=0.17), which justified follow-up analyses.

3.2.3.1. Eye saliency: upright intact versus upright eyeless faces

We first compared upright intact and eyeless faces to assess the effect of eyes, using a 2 (hemisphere) × 2 (category) × 6 (fixation) ANOVA. The main effects of category (F(1,20)=4.5, p<0.05) and fixation (F(5,100)=5, p=0.006) were significant but were modulated by a strong category by fixation interaction (F(5,100)=12.7, p<0.001, ηp²=0.39). As seen in Fig. 4, direct category comparisons at each fixation location revealed a significantly larger N170 amplitude for intact than for eyeless faces when fixation was on the left eye (F(1,20)=12.6, p=0.002) and on the right eye (F(1,20)=23.1, p<0.001), whereas amplitude was larger for eyeless than for intact faces when fixation was on the nose (F(1,20)=4.4, p<0.05). No category effect was found for forehead, nasion and mouth fixations.

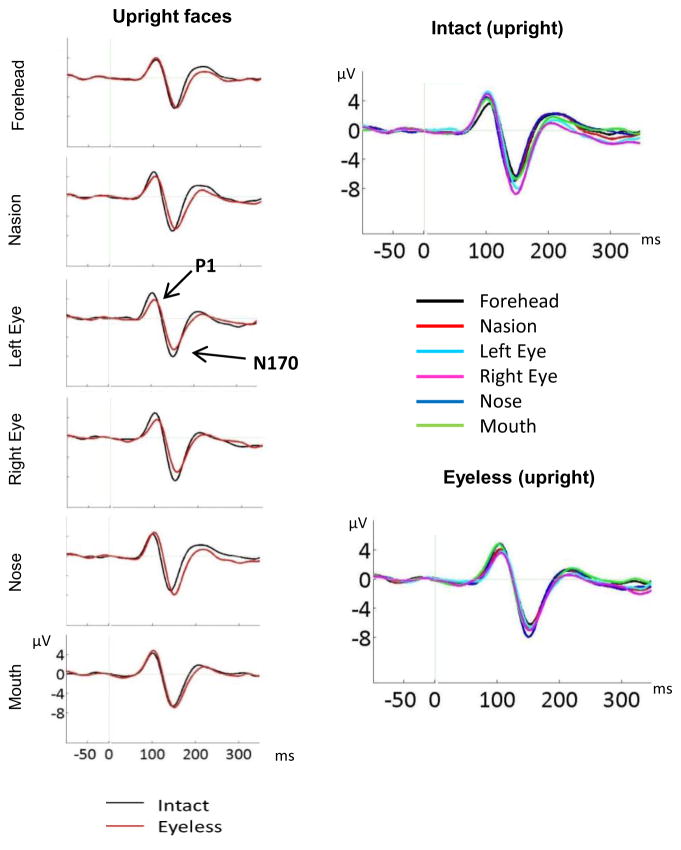

Figure 4.

Left panel: ERP components for upright intact and upright eyeless faces displayed at each fixation location (CB2 electrode). The larger P1 and N170 for intact than eyeless faces are clearly seen for eye fixations while a larger N170 for eyeless than intact faces is clearly visible for nose fixation. Right panel: N170 to intact and eyeless upright faces for all fixation locations (CB2 electrode). The larger N170 for eye fixations is clearly seen for intact faces while a larger N170 for nose fixation is clearly seen for eyeless faces.

Intact and eyeless faces were also analysed separately to assess the effect of fixation. For upright intact faces, the main effect of fixation (F(5,100)=12.69, p<0.001) was due to significantly larger amplitudes for each eye than for all other fixation locations (p values between 0.025 and 0.001; see Fig. 4). No other paired comparison was significant. Thus, the N170 was largest for eye fixations and did not differ between the other facial fixation locations.

For upright eyeless faces (Fig. 4), the effect of fixation (F(5,100)=3.6, p=0.018, ηp²=0.15) was due to the largest amplitudes being seen for nose fixation (paired comparisons significant with forehead (p=0.026), nasion (p=0.006) and right eye (p=0.019) fixations, and a trend with left eye fixation (p=0.09)). Amplitudes did not differ significantly between nose and mouth fixations, and paired comparisons between mouth and the other fixation locations were not significant either.

3.2.3.2. Inversion effect as a function of category and fixation

Given the three-way interaction between category, orientation and fixation, we assessed the effect of inversion separately for each category.

Intact faces

Intact faces analysed separately yielded a main effect of orientation (F(1,20)=79.2, p<0.001, ηp²=0.79), with larger amplitudes for inverted than for upright intact stimuli (Fig. 5). A significant main effect of fixation (F(5,100)=17.5, p<0.001, ηp²=0.47) was due to larger amplitudes for both eyes than for all other fixations (each paired comparison significant at p<0.005, except for the right eye-nose comparison, p=0.012). The orientation by fixation interaction (F(5,100)=2.7, p<0.05, ηp²=0.12) reflected a clearer pattern for upright than for inverted faces, although the effect of fixation was significant for both orientations analysed separately (upright: F(5,100)=12.69, p<0.001, ηp²=0.39; inverted: F(5,100)=13.5, p<0.001, ηp²=0.4). As reported in the preceding section, for upright intact faces, amplitudes were significantly larger for each eye than for all other fixation locations. For inverted faces, the eyes also yielded the largest amplitudes; however, the left eye-nose comparison only approached significance (p=0.07), while amplitudes for right eye fixation did not differ significantly from those for nose and nasion fixations (p=0.92 and p=0.18, respectively). In addition, forehead fixation yielded smaller amplitudes than all other fixations (p<0.05) except mouth fixation (Figs. 5 and 6).

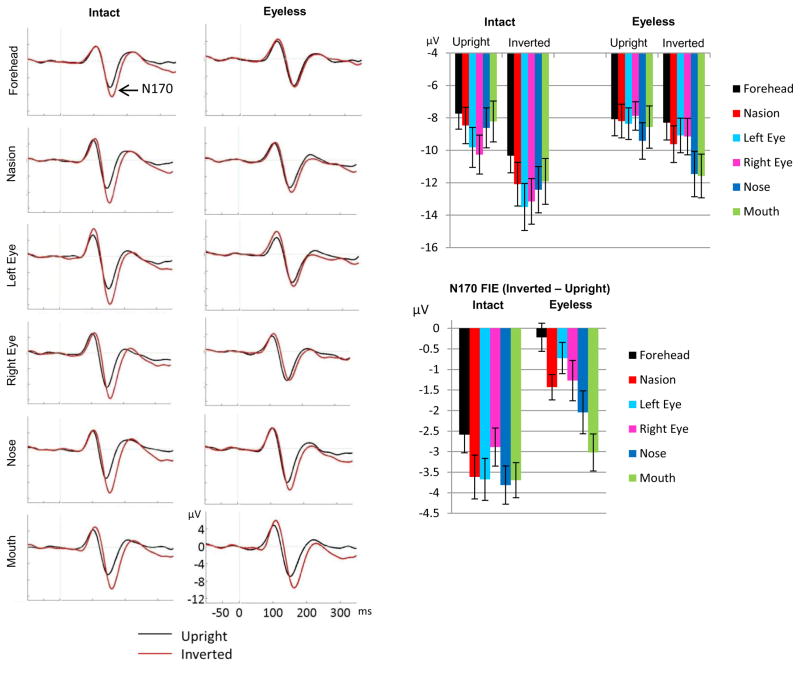

Figure 5.

N170 inversion effect displayed for intact and eyeless faces at each fixation location (left panels: ERP waveforms at the CB2 site; right panel, top: bar plots with standard errors, averaged across CB1 and CB2 electrodes; right panel, bottom: bar plots of the inverted-minus-upright N170 amplitude difference for each category, averaged across both hemispheres). A clear inversion effect is seen at all fixation locations for intact faces, and the magnitude of this FIE does not differ between fixation locations. For eyeless faces, no N170 FIE was seen for forehead fixation, a very small effect was seen for nasion and both eye fixations, and a clear effect was seen for nose and mouth fixations. The FIE at mouth fixation did not differ significantly between intact and eyeless faces.

Figure 6.

Left panel: ERP waveforms for inverted intact and inverted eyeless faces at each fixation location (site CB2). The larger N170 for intact than eyeless faces is clearly seen for forehead, nasion, and both eye fixations, whereas the N170 was identical for the two face categories at mouth fixation. Right panel: N170 to intact and eyeless inverted faces at all fixation locations (site CB2). The larger N170 for eye fixations is clearly visible for intact faces, while a larger N170 for nose and mouth fixations is clearly visible for eyeless faces.

Analysis of each fixation separately confirmed a clear effect of orientation at every fixation location (Fig. 5), with the classic larger amplitude for inverted than upright faces (forehead: F(1,20)=34.4, p<0.001; nasion: F(1,20)=46, p<0.001; left eye: F(1,20)=51.8, p<0.001; right eye: F(1,20)=38.9, p<0.001; nose: F(1,20)=67.1, p<0.001; mouth: F(1,20)=74.4, p<0.001).

The hemisphere by orientation interaction was also significant (F(1,20)=14.6, p=0.001, ηp2=0.42) and was due to a clear hemisphere effect for inverted faces (F(1,20)=5.5, p<0.05), with larger amplitudes over the right hemisphere, but no hemisphere effect for upright faces (F(1,20)=2, p=0.17). The hemisphere by fixation interaction (F(5,100)=3.4, p<0.05, ηp2=0.15) was due to an effect of hemisphere for right eye and nasion fixations (p<0.05, larger over the right hemisphere) but not for the other fixation locations.

Eyeless faces

Eyeless faces analysed separately yielded a main effect of orientation (F(1,20)=24.5, p<0.001, ηp2=0.55) and a main effect of fixation (F(5,100)=15.5, p<0.001, ηp2=0.44). These effects were modulated by a significant fixation by orientation interaction (F(5,100)=8.8, p<0.001, ηp2=0.31), reflecting no orientation effect for forehead fixation, a trend toward an orientation effect for left eye fixation (F(1,20)=3.6, p=0.071), and a clear inversion effect at the other fixation locations (nasion: F(1,20)=21.9, p<0.001; right eye: F(1,20)=6.7, p=0.017; nose: F(1,20)=15.3, p<0.001; mouth: F(1,20)=45.1, p<0.001) (Fig. 5).

The effect of fixation was significant for both upright (F(5,100)=3.6, p=0.018, ηp2=0.15) and inverted (F(5,100)=22.9, p<0.001, ηp2=0.53) eyeless faces analysed separately, but the pattern of paired comparisons differed. As reported in the preceding section on the effect of eyes, for upright eyeless faces, amplitudes were largest for nose fixation. For inverted eyeless faces, amplitudes for nose and mouth fixations did not differ significantly and were both significantly larger than amplitudes for all other fixation locations (p<0.005 for all comparisons); the amplitude for forehead fixation was also smaller than that for nasion fixation (p=0.001).

The hemisphere by fixation (F(5,100)=7.9, p<0.001, ηp2=0.28) and the hemisphere by fixation by orientation (F(5,100)=2.7, p<0.05, ηp2=0.12) interactions were also significant. When each fixation location was analysed separately, amplitudes tended to be larger over the right than the left hemisphere for right eye (p=0.07), nose (p=0.08) and mouth (p=0.08) fixations, and this hemisphere effect was more pronounced for inverted than upright stimuli for mouth (hemisphere by orientation: F(1,20)=4.8, p<0.05) and nose (hemisphere by orientation: F(1,20)=4, p=0.06) fixations.

3.2.3.3. Inversion effect and eyes: inverted intact versus inverted eyeless faces

We also assessed the effect of eye presence or absence on the N170 FIE by directly comparing inverted intact and inverted eyeless faces (Fig. 6). A strong category by fixation interaction emerged (F(5,100)=21.5, p<0.001, ηp2=0.31). Direct category comparisons at each fixation location revealed significantly larger N170 amplitudes for intact than eyeless inverted faces for all fixations except mouth fixation (effect of category for forehead (F(1,20)=36, p<0.001), nasion (F(1,20)=50, p<0.001), left eye (F(1,20)=65.1, p=0.001), right eye (F(1,20)=75.7, p<0.001), nose (F(1,20)=12.7, p<0.002), mouth (F(1,20)=2.2, p=0.15)).

Finally, because the FIE is typically defined as the difference between upright and inverted faces, and because upright faces differed between intact and eyeless faces, we also directly compared the FIE between face categories using a 2 (hemisphere) × 2 (category) × 6 (fixation) ANOVA performed on the inverted – upright N170 amplitude difference. A main effect of category was found (F(1,20)=68, p<0.001), due to an overall reduction in FIE for eyeless faces. A significant interaction between fixation and category (F(5,100)=4.05, p=0.006) confirmed the reduction in FIE for all fixations except mouth, for which only a trend was found (effect of category for forehead (F(1,20)=31.7, p<0.001); nasion (F(1,20)=21.7, p<0.001); left eye (F(1,20)=45.8, p=0.001); right eye (F(1,20)=14.5, p<0.001); nose (F(1,20)=16.9, p<0.001); mouth (F(1,20)=3.9, p=0.062)). We also compared the inverted – upright amplitude differences across fixation locations for each category. A small effect of fixation was found for intact faces (F(5,100)=2.65, p<0.05), but no paired comparison was significant (Fig. 5, bottom right panel), suggesting an overall similar FIE across fixation locations. For eyeless faces, however, the effect of fixation was highly significant (F(5,100)=8.8, p<0.001), and paired comparisons revealed a significantly smaller FIE for forehead than for nasion, nose and mouth fixations, and for left eye than for mouth fixation (all Bonferroni-corrected comparisons at p<0.05). Thus, removing the eyes reduced the FIE for all fixations except mouth fixation.
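The FIE analysed above is a per-fixation difference score (inverted minus upright N170 amplitude), and the follow-up comparisons among six fixation locations involve 15 pairs. The Python sketch below illustrates both computations under stated assumptions: the amplitude values are made up purely for illustration and are not the study's data, and the helper names are hypothetical. Because the N170 is a negative-going component, a larger inverted N170 gives a negative difference whose magnitude indexes the FIE.

```python
from itertools import combinations

FIXATIONS = ["forehead", "nasion", "left_eye", "right_eye", "nose", "mouth"]


def inversion_effect(upright_uv: dict[str, float], inverted_uv: dict[str, float]) -> dict[str, float]:
    """Inverted minus upright N170 amplitude (microvolts) at each fixation location."""
    return {fix: inverted_uv[fix] - upright_uv[fix] for fix in FIXATIONS}


def bonferroni_alpha(n_levels: int, alpha: float = 0.05) -> float:
    """Per-comparison alpha for all pairwise comparisons among n_levels conditions.

    Equivalent to multiplying each uncorrected p-value by the number of pairs.
    """
    n_pairs = len(list(combinations(range(n_levels), 2)))
    return alpha / n_pairs


# Hypothetical amplitudes for illustration only (NOT the study's data).
upright = {"forehead": -4.0, "nasion": -4.2, "left_eye": -4.3,
           "right_eye": -4.3, "nose": -4.8, "mouth": -4.1}
inverted = {"forehead": -4.1, "nasion": -5.0, "left_eye": -4.6,
            "right_eye": -4.9, "nose": -6.0, "mouth": -5.9}

fie = inversion_effect(upright, inverted)
print({fix: round(diff, 2) for fix, diff in fie.items()})
print(bonferroni_alpha(len(FIXATIONS)))  # 6 fixations -> 15 pairs -> 0.05 / 15
```

Working on difference scores removes any baseline difference between intact and eyeless upright faces, which is the motivation given above for this final analysis.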

4. Discussion

In the present study we explored the effect of fixation location on the N170 ERP component, the earliest neural marker of face perception, to test the idea of an eye detector and its possible role in driving the N170 face inversion effect. Using eye tracking to enforce fixation on the desired facial part, we found that fixation location clearly affected N170 amplitude and interfered with the face inversion effect when eyes were removed. We start by discussing our control condition, then discuss each of these effects in turn and their implications for our understanding of early face perception stages. We finish by presenting a new neuronal account of the N170 and its modulations, which can explain holistic processing in upright faces and featural processing in inverted faces.

4.1. Intact faces versus houses: “normal” N170 response

In contrast to some of our previous studies, which employed real face photographs, in this study we used artificially created facial stimuli. Various face identities were obtained using different internal facial features, but all faces had the same bald outline to keep head size constant and to prevent the hair area from creating a zone of darker contrast that could influence the N170 response. In addition, each feature was situated at the same location within the outline across identities, ensuring minimal variation in feature position within each fixation condition. We compared the N170 response to these artificial faces and to house stimuli to ensure proper face sensitivity. As reported in previous orientation discrimination tasks (e.g. Itier et al., 2006; 2007), faces were responded to more accurately and faster than houses and elicited an inversion effect, with more accurate and faster responses for upright than inverted faces, while no such inversion effects were found for houses. At the ERP level, the P1 and N170 components were delayed for houses compared to faces, and the N170 was much larger for faces than houses (Fig. 2). In addition, although an N170 inversion effect was found for houses, as is sometimes reported (e.g. Eimer, 2000), this effect was much larger for faces than houses. All these results demonstrate proper face sensitivity for our stimuli. The next step was to assess the sensitivity of the N170 to the eyes by comparing the effect of fixation location on the N170 for intact and eyeless faces.

4.2. Saliency of the eyes regardless of face orientation: ruling out simple hemifield and upper-lower visual field effects

In upright intact faces, the N170 amplitude was largest for fixation on the left and right eyes (Fig. 4–5). The amplitude did not differ significantly between the other fixation locations, namely the forehead, the nasion, the nose, and the mouth. These results contrast with those of McPartland et al. (2010), who reported a larger N170 amplitude for fixation on the nasion and mouth than for fixation on the nose. However, that study did not use an eye tracker to confirm gaze fixation, and participants might have moved their eyes slightly away from the desired fixation location.

All fixations were situated on the face midline except eye fixations. When fixation was on one eye, the rest of the face was mostly situated in the contralateral hemifield (Fig. 1). Latency analyses revealed that when fixation was on the right eye, latencies were shorter over the right hemisphere, while the opposite pattern was seen for the left eye and the left hemisphere. These small hemifield effects were seen for both P1 and N170 latencies (Fig. S1; Fig. 3) but were not consistently significant across eye fixations. Importantly, no such effects were seen for N170 amplitude. Moreover, as N170 amplitude is known to decrease with face eccentricity (Rousselet et al., 2005), we expected smaller rather than larger amplitudes for eye fixations than for midline fixations. Finally and most importantly, larger amplitudes were seen for eye fixations only when eyes were present (intact faces), despite identical positions of the face on the monitor for intact and eyeless faces. These amplitude results argue against a simple hemifield effect as the source of the present eye sensitivity.

The sensitivity for eyes was also found for inverted intact faces and was thus orientation-independent, a result arguing against the idea that eyes might yield larger amplitudes only because they are situated in the upper visual field (Zerouali et al., 2013). As clearly seen in Fig. 1, the face occupied the same upper visual field position for upright faces fixated on the mouth and for inverted faces fixated on the forehead, yet a significantly larger N170 amplitude was found for the inverted face condition (Fig. 5). Similarly, although the face occupied the same lower visual field position for upright faces fixated on the forehead and for inverted faces fixated on the mouth, a larger N170 amplitude was found for the inverted face condition. Thus, although for forehead and mouth fixations the face occupied completely opposite vertical positions when upright versus inverted, inversion effects were found in the same direction (larger for inverted than upright faces). These results rule out a simple upper-lower visual field explanation for the N170 FIE and for the eye sensitivity.

The reasons why our results differ from those reported by Zerouali et al. (2013) are unclear, although they are likely linked to differences in design and stimuli. The faces in that study subtended a larger visual angle than our stimuli, and the N170s to face locations were computed for areas of interest that were much larger than ours and of unequal sizes. For instance, the nasion location included the narrow area between the two eyes, whereas each eye area included the entire eye and the eyebrow, and the mouth area included the entire mouth. In contrast, we used an interest area of 1.8° around the fixation location, which ensured that the analysis reflected foveal information and that areas were of the same size yet non-overlapping. Future studies will have to explain how and why such differences in design yielded opposite results.

4.3. In support of the eye detector hypothesis

Rather than a simple low-level visual effect of face position, our results suggest a special sensitivity to the eye feature within the face, demonstrated here by i) the larger N170 amplitude when fixation was on the eyes compared to other fixation locations in intact faces and ii) the significant reduction in N170 amplitude for eyeless faces fixated at these eye locations. Eye sensitivity was previously demonstrated by a larger N170 for isolated eyes than for whole faces (Bentin et al., 1996; Itier et al., 2006; Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013; Taylor et al., 2001a,b). For this reason, Bentin et al. (1996) initially suggested that the N170 might reflect the activity of an eye detector. Sensitivity to eyes within the face, irrespective of its orientation, is a new finding, made possible by the use of an eye tracker and a strict analysis process.

Eimer (1998) originally argued against the eye detector hypothesis based on the finding that eyeless faces elicited N170s of similar amplitudes to intact faces. The argument was that removing eyes from a face did not modulate the N170 so the eyes could not have any specific role in eliciting the N170 component in the first place. This finding was replicated by later studies (Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013; Nemrodov & Itier, 2011). Importantly, all these studies used a central fixation which positioned participants’ gaze around the nasion area (the stimuli used by Itier and colleagues were actually slightly cropped so that eyes were exactly in the center of faces and thus fixation was on the nasion). In the present study, the lack of amplitude change between upright intact and upright eyeless faces was replicated for fixation on the nasion and was also seen for fixation on the forehead and the mouth. However, along with the new finding of larger amplitude for eye fixations, these results in fact support the eye detector hypothesis rather than going against it: the amplitude was larger for eye fixations than other fixation locations and this difference was abolished when eyes were not present. The novelty here is that to elicit larger N170 amplitudes eyes need to be in fovea. Fixating between the two eyes or close to them is not enough to trigger maximal N170 amplitude (fovea is usually defined as 1–2° of visual angle and nasion was just less than 2° away from the center of each eye).
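The claim that nasion fixation leaves each eye just outside fovea rests on converting on-screen distances into degrees of visual angle, with eccentricity = atan(offset / viewing distance) for a point offset from a fixation point that lies on the line of sight. The Python sketch below shows that conversion. The 60 cm viewing distance, the 2.0 cm nasion-to-eye offset and the default 1° foveal radius are hypothetical values chosen for illustration (the text only states that fovea spans roughly 1–2° and that the nasion was just under 2° from each eye centre), and the helper names are our own.

```python
import math


def eccentricity_deg(offset_cm: float, viewing_distance_cm: float) -> float:
    """Angular distance (degrees) between fixation and a point offset_cm away on the screen.

    Assumes the fixated point lies on the line of sight perpendicular to the screen.
    """
    return math.degrees(math.atan2(offset_cm, viewing_distance_cm))


def in_fovea(offset_cm: float, viewing_distance_cm: float, foveal_radius_deg: float = 1.0) -> bool:
    """True if the point falls within the assumed foveal radius around fixation."""
    return eccentricity_deg(offset_cm, viewing_distance_cm) <= foveal_radius_deg


# Hypothetical geometry: eye centre 2.0 cm from the nasion, viewed from 60 cm.
print(f"{eccentricity_deg(2.0, 60.0):.2f} deg from fixation")
print("eye in fovea at nasion fixation:", in_fovea(2.0, 60.0))
```

Under these assumptions the eye centre lies about 1.9° from fixation, consistent with the description of the nasion as just under 2° away and outside a 1° foveal radius.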

Is it the position of any feature in fovea that matters or the fact that the foveated feature is an eye? In the present study, fixation on the mouth or nose in intact upright faces elicited N170s of similar amplitude as fixation on the forehead or nasion where no feature was seen. These results support the idea of a special sensitivity to eyes rather than to any feature presented in fovea. They are also in line with reports of larger N170 to isolated eyes than to faces (Bentin et al., 1996; Itier et al., 2006; Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013; Taylor et al., 2001a,b) while similar presentation of isolated mouths or noses elicited smaller and delayed N170s (Bentin et al., 1996; Nemrodov & Itier, 2011; Taylor et al., 2001b). Although in previous studies isolated eye regions were presented centrally, putting fixation at the nasion, it is possible that without an eye tracker to enforce fixation as used in the present study, small deviations of gaze position occurred, putting one eye in full fovea.

Importantly, the current N170 latency results suggest a general effect of the presence of the eyes as the N170 peak was earlier for intact than eyeless faces regardless of fixation location, a finding replicating previous reports using central or nasion fixation (Eimer, 1998; Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013). This suggests a general “boost” of the signal when eyes are present in the face. Overall, these results support an early sensitivity to eyes per se, within the context of an upright face, with earliest N170 when eyes are present in the face and largest N170 when eyes are in fovea. The P1 component was also largest for eyes in intact faces and this is likely due to local contrast differences at that stage (cf. Table 1), as the eyes have a high energy density spectrum. This early sensitivity might also reflect the initial stages of the eye detection process.

4.4. N170 Face Inversion effect and eyes

We found the classic N170 face inversion effect (FIE) for intact faces with larger amplitude and delayed latency for inverted compared to upright faces (e.g. Bentin et al., 1996; Eimer, 2000; Itier & Taylor, 2002; Rossion et al., 2000). Importantly, this inversion effect was seen regardless of fixation location. In contrast the FIE for eyeless faces was dependent on fixation location. The FIE for eyeless faces was dramatically reduced compared to intact faces when fixation was on the nasion or on the usual eye location, replicating previous reports using nasion fixations (Itier et al., 2007; Itier et al., 2011; Kloth et al., 2013). This result was central to the claim that the N170 FIE was mostly driven by the presence of the eyes (Itier et al., 2007). However, the fact that the FIE was less reduced for the nose fixation and was normal for the mouth fixation (Fig. 5–6) argues against this idea. The presence of eyes is not necessary for a normal FIE to take place as long as fixation is far away from the normal eye location.

A remarkable finding was the complete lack of an inversion effect for eyeless faces when fixation was on the forehead, while a clear inversion effect was seen at that same forehead location for intact faces (Fig. 5–6). Since no feature was in fovea when fixation was on the forehead, the inversion effect at that location in intact faces was likely due to the proximity of a feature, here the eyes. Moreover, the FIE was reduced for eyeless compared to intact faces when fixation was on the nose, the feature closest to the eyes, while it was not reduced for mouth fixation, the feature farthest away from the eyes. These results suggest that the presence of eyes, even outside of fovea, affected the FIE, and that this effect was a function of the distance between the fixated area and the eye location. The farther the fixation from the eyes, the less the FIE was diminished by eye removal, with no significant decrease for the most distant feature, the mouth.

Overall, the results showed that the largest reduction in FIE for eyeless faces was seen when no feature was present in fovea. However, the direct comparison of upright and inverted eyeless faces revealed a small inversion effect even for nasion, left eye and right eye fixations, where no feature was present in fovea. This suggests that even without a feature at fixation, inversion of the rest of the face was enough to elicit a small but statistically significant N170 amplitude increase. We attribute this effect to the presence of features nearby. Thus, there seem to be two factors contributing to the FIE in this study: a general but small effect of face inversion (features in periphery), and a larger effect of the presence of a feature (here the eyes) in fovea. At the behavioural level, removing the eyes from faces completely abolished the inversion effect on hit rates and reaction times. However, it is important to acknowledge that taking the eyes out of the face distorts it, rendering eyeless faces odd-looking. It is thus possible that the general reduction in FIE with eyeless faces was in part due to this distortion and bizarreness of the stimuli, especially as both eyes were removed. Thus, in the absence of a control condition in which a feature other than the eyes (or two other features) is removed, we cannot claim that the eyes per se had an effect above and beyond the simple removal of a feature.

Itier et al. (2007) revisited

Itier et al. (2007) proposed that the N170 amplitude variations could be explained by the relative activation of both face and eye sensitive neurons depending on the stimulus presented. The first idea of this model was that upright faces trigger the response of face sensitive neurons while inverted faces, like isolated eyes, trigger the response of both eye sensitive and face sensitive neurons, explaining the larger N170 amplitude for inverted faces and eyes compared to upright faces. When eyes are removed, the eye sensitive neurons would no longer be engaged, explaining the reduction in FIE for eyeless faces. The fact that we found a normal FIE in eyeless faces when fixation was on the mouth suggests this explanation is incomplete and needs to take into account the distance from fixation to the eye location.

The second idea of the model was a possible inhibition of eye sensitive neurons by face sensitive neurons in upright intact faces due to the upright face context. While both face and eye sensitive neurons would respond to isolated eyes, only face sensitive neurons would respond to intact or eyeless faces (eye sensitive neurons being inhibited or not responsive), explaining the amplitude difference between eyes and upright faces and the lack of amplitude variation between intact and eyeless upright faces. This explanation cannot account for the larger N170 amplitude for eye fixations compared to other fixation locations within intact upright faces. It also cannot account for the intriguing finding of larger N170 amplitude for the nose fixation compared to other fixation locations in eyeless faces.

Thus, while the current findings support a special sensitivity for eyes within the face at the neuronal level, they cannot be explained by the model proposed by Itier et al. (2007). Below we propose major modifications to this model that explain the current findings and also account for holistic and featural face processing.

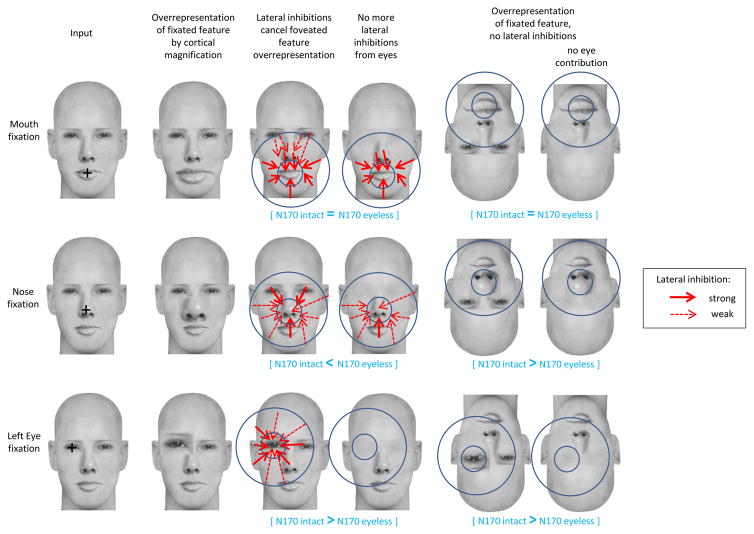

4.5. Novel neuronal account of the N170 component and its modulations: the Lateral Inhibition, Face Template and Eye Detector based (LIFTED) model

The sparse coding theory of face representation (Young & Yamane, 1992; see also Rolls & Treves, 2011) assumes that relatively small groups of neurons code for various features or parts and that the representation of a face and its identity is coded at the neuronal population level. We assume that the N170 ERP component reflects the overall activation of neuronal populations in face sensitive areas. As in monkey inferotemporal cortex, we assume that neurons in face sensitive areas are mostly responsive to face parts and their combinations, with a focus on features related to the eyes and the face outline geometry (Freiwald et al., 2009). Thus, rather than assuming only two neuron populations as in Itier et al. (2007) (face sensitive and eye sensitive neurons), we now assume that neuron assemblies code for face parts and their configuration at the population level (i.e. many neurons respond to the mouth, and many respond to the nose, eyes, eyebrows, face outline, ears, hair, etc.). As we proposed elsewhere (Nemrodov & Itier, 2011), eyes act as an anchor point from which the position and orientation of other features are coded based on an upright human face template.

Inhibition of foveated features by perifoveal features ensures holistic processing

Cortical magnification refers to the larger amount of primary visual cortex devoted to the processing of visual information within fovea compared to the amount of striate cortex devoted to the processing of visual information outside of fovea (Daniel & Whitteridge, 1961). Cortical magnification results in a stronger input from fixated features (in fovea) than non-fixated features (outside of fovea) into the higher face sensitive visual areas. This means that for an upright face, fixation on the eyes, the nose, or the mouth should yield larger N170 amplitude than fixation on an area of the face lacking features such as forehead or nasion. We found that fixation on one eye resulted in the largest N170 amplitude and we interpreted this finding as reflecting the activity of an eye detector where neurons coding for eyes are either more numerous or respond more than other neurons. In contrast, similar amplitudes were found for all other fixation locations, whether fixation was on a feature (nose and mouth) or not (forehead, nasion). We thus suggest that the coding of nose and mouth in upright intact faces was inhibited when these features were in fovea and this inhibition came from neurons coding the other face parts (outside of fovea).

When fixation was on the mouth in upright faces, the N170 was similar regardless of eye presence or absence. We thus also propose that the strength of the inhibition depends on the retinotopic distance between the feature in fovea and the peripheral, inhibiting features. When the mouth was fixated, mouth-coding neurons were inhibited mainly by neurons coding the nose and face outline, while the inhibition from eye-coding neurons might have been too weak to significantly affect the overall response (Fig. 7). This would explain why similar N170 amplitudes were found for intact and eyeless upright faces at mouth fixation (note, however, that in Fig. 5 the N170 amplitude for mouth fixation was slightly larger for eyeless than intact faces, which is in the predicted direction of a weaker inhibition of mouth-coding neurons due to eye removal). When fixation was on the nose in intact upright faces, nose-coding neurons were inhibited by neurons coding for the eyes, the mouth, and the nearby portions of the face outline. Because the eyes were close, their inhibitory effect on nose-coding neurons was likely fairly strong. When eyes were no longer present, nose-coding neurons were no longer inhibited by eye-coding neurons (Fig. 7), explaining the intriguing result of a larger N170 amplitude for eyeless than intact faces at nose fixation.
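The distance dependence proposed here can be illustrated with a toy calculation. The Python sketch below is not the LIFTED model itself (which is stated only verbally) but a minimal illustration under explicit assumptions: the feature coordinates are hypothetical, the face outline is omitted, and inhibition strength is assumed to decay exponentially with retinotopic distance with an arbitrary 1° length scale. It compares how much inhibition the eyes exert on foveated nose versus foveated mouth.

```python
import math

# Hypothetical feature centres (degrees of visual angle) for an upright face,
# x to the right and y upward; illustrative only, not the study's stimulus geometry.
FEATURES = {
    "left_eye": (-1.9, 0.0),
    "right_eye": (1.9, 0.0),
    "nose": (0.0, -2.0),
    "mouth": (0.0, -4.0),
}


def inhibition_weight(distance_deg: float, length_scale_deg: float = 1.0) -> float:
    """Assumed lateral-inhibition strength, decaying exponentially with retinotopic distance."""
    return math.exp(-distance_deg / length_scale_deg)


def inhibition_on(fixated: str, features: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Inhibition that each peripheral feature exerts on neurons coding the fixated feature."""
    fx, fy = features[fixated]
    return {
        name: inhibition_weight(math.hypot(x - fx, y - fy))
        for name, (x, y) in features.items()
        if name != fixated
    }


eyeless = {name: pos for name, pos in FEATURES.items() if "eye" not in name}

for target in ("nose", "mouth"):
    weights = inhibition_on(target, FEATURES)
    from_eyes = sum(w for name, w in weights.items() if "eye" in name)
    remaining = sum(inhibition_on(target, eyeless).values())
    print(f"{target}: inhibition from eyes {from_eyes:.3f}, left after eye removal {remaining:.3f}")
```

With any monotonically decaying weight, the eyes inhibit the foveated nose more than the foveated mouth, so removing them releases nose-coding neurons more, which is the qualitative pattern described above; the exact numbers depend entirely on the assumed geometry and length scale.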

Figure 7.

Schematic representation of how lateral inhibitions work according to the LIFTED model and the contribution of the visual information to the scalp recorded N170 ERP component. Three fixation location conditions are represented: mouth, nose and left eye. The smaller blue circles represent the fovea; the larger blue circles represent the parafovea, i.e. the approximate distance from fixation under which peripheral features contribute to the inhibition and to the overall neuronal response (N170). The overrepresentation of the fixated feature due to cortical magnification is represented as a disproportionately large feature. In upright faces, this overrepresentation is cancelled by lateral inhibitions (red arrows) from neurons coding for parafoveal features onto neurons coding for the foveated feature. The strength of the lateral inhibitions depends on the distance between the fixated and peripheral features (stronger when distances are shorter, represented by thicker arrows). In inverted faces, lateral inhibitions are no longer seen. See main text for the explanation of the N170 variations in each fixation condition.

Without an inhibition mechanism, any feature in fovea would be treated preferentially over the surrounding visual information because of cortical magnification and it would be impossible to perceive the face as a whole. We believe this inhibition of the foveated feature by peripheral features is necessary for holistic processing, the hallmark of upright face processing (Rossion, 2009). Holistic processing refers to the perceptual integration of all facial features into a whole and numerous studies have shown that it is what characterizes the processing of faces over other visual objects which are processed more featurally (Maurer et al., 2002; Rossion, 2009; Tanaka & Gordon, 2011). The mechanism we describe does precisely that. While neurons coding for most of the facial information outside of fovea contribute to the overall N170 component amplitude, they also inhibit the neurons coding the facial information at fovea. This cancels the over-representation of the visual information at fovea and ensures that all facial features are represented in one Gestalt, including features outside of fovea (Fig. 7). This inhibition mechanism allows features to be perceptually “glued” into a holistic representation.

Local lateral inhibitory connections exist in higher visual areas (Adini et al., 1997) making this inhibitory mechanism possible and inhibition has already been invoked in the face processing field for the N200 intracranial component and the N170 scalp component (Allison et al., 2002; Itier et al., 2007). Note that the eye detector anchors the percept at the eyes. This eye anchoring mechanism is supported by studies showing that the simple detection of a face is impaired by removing the eyes more so than by removing the nose or the mouth (Lewis & Edmonds, 2003). It is also in line with image classification studies showing that eyes are the first feature attended (Vinette et al., 2004). Both the eye anchoring mechanism and the holistic processing are possible based on an upright human face template (see also Rossion, 2009; and Johnson, 2005, for developmental evidence). It is noteworthy that the N170 latency was shortest overall at nose and nasion fixation locations, an effect that might reflect most efficient holistic processing around the face center of gravity (e.g. Bindemann et al., 2009) where all facial features can be best captured.

Our results support the idea of an eye detector which would be specialized in detecting eyes and would enable them to become an anchoring point for the face percept. However, to ensure holistic processing, eye-coding neurons should also be inhibited by neurons coding for the rest of the face when eyes are fixated. In fact, given their salience, eyes should arguably be the most inhibited feature. We believe this is the case and that the face outline and the other facial features actively inhibit eyes when they are fixated (Fig. 7), although the present design does not allow us to test this directly. However, fixation on eyes yielded the largest response (which is why we invoked an eye detector in the first place) in turn suggesting that this inhibition was only partial in the present study. One possibility is that the size of the stimuli we used did not allow for a full inhibition of eyes by other facial features. We proposed earlier that the strength of the lateral inhibitions depends on the retinotopic distance between the feature in fovea and the peripheral features. The distance between the eyes and the mouth was already invoked earlier to explain the lack of significant amplitude modulation for mouth fixation in eyeless faces. It is possible that with the current stimuli, the contribution of the other features (in particular the mouth) to the inhibition of fixated eyes was not strong enough to compensate fully for the eye detector activity.

The hypothesis that the strength of lateral inhibitions depends on distances between features implies that the size of the stimulus and the distance at which it is viewed will affect the inhibition process. It is possible that eyes could be completely inhibited when fixated if their distance to other features is small enough. With such stimuli (smaller than the ones used here), no N170 amplitude differences would be found between fixation locations and the activity of the eye detector would not be seen. In contrast, with larger stimuli than the present ones, even larger N170 amplitude differences should be found between eye fixations and other fixation locations. Differences might also start to appear between the other fixation locations. Thus, we propose that holistic processing depends on the distance at which a face is seen, which makes sense in real-life situations: a face seen at a distance is perceived as a small whole (maximal holistic processing), whereas for a face seen from very close, distances between features would become too great to allow for a complete holistic percept, and lateral inhibitions between features would then be cancelled. In such instances, the processing of a face would be piecemeal.
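The link between viewing distance and inhibition strength follows from the visual angle subtended by two points a fixed physical distance apart, 2 × atan(separation / (2 × viewing distance)). The Python sketch below combines that geometric fact with the same kind of assumed exponential fall-off of inhibition with angular distance; the 7 cm eye-to-mouth separation, the 1° length scale and the chosen viewing distances are hypothetical illustration values, not measurements from this study.

```python
import math


def angular_separation_deg(separation_cm: float, viewing_distance_cm: float) -> float:
    """Visual angle (degrees) subtended by two points separation_cm apart, centred on the line of sight."""
    return math.degrees(2 * math.atan(separation_cm / (2 * viewing_distance_cm)))


def inhibition_weight(distance_deg: float, length_scale_deg: float = 1.0) -> float:
    """Assumed exponential fall-off of lateral inhibition with retinotopic distance."""
    return math.exp(-distance_deg / length_scale_deg)


EYE_TO_MOUTH_CM = 7.0  # hypothetical physical eye-mouth distance on a real face

for distance_cm in (40, 100, 300):
    angle = angular_separation_deg(EYE_TO_MOUTH_CM, distance_cm)
    weight = inhibition_weight(angle)
    print(f"{distance_cm:>4} cm: eye-mouth {angle:5.2f} deg, inhibition weight {weight:.3f}")
```

As the face is viewed from farther away, the eye-mouth angle shrinks and, under this assumed fall-off, the mutual inhibition grows; up close the weight approaches zero, matching the proposed shift toward piecemeal processing.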

To summarize, we propose that the processing of an upright face is coded against a human face template representation and that an eye detector enables eyes to be detected and to become an anchor point from which the distances to other features are coded. The processing is holistic and at the neural level this is made possible by lateral inhibitions from neurons coding parafoveal information onto neurons coding foveated information, so as to cancel out the overrepresentation of foveated information that would normally arise from cortical magnification. The strengths of the lateral inhibitions depend on the angular distances between the foveated feature and the peripheral features. These properties imply that holistic processing as measured by the scalp N170 ERP component is inherently a dynamic process that depends on the size of the face and the distance at which it is seen.

The face inversion effect as a consequence of the lack of lateral inhibitions

Numerous studies have shown that when faces are upside down, holistic processing is disrupted and inverted faces are processed in a featural way (Tanaka & Farah, 1993; Rossion, 2009). At the neuronal level, and following our logic, inversion would simply cancel the lateral inhibitions described above. As a result, features in fovea would be more strongly represented than peripheral features because of cortical magnification (Fig. 7). The representation of peripheral features would also be a function of their retinotopic distance from fovea, with the most distant features not represented at all. This would explain the narrowing of the perceptual field in inverted faces, whose features have to be processed sequentially and independently because holistic processing is lost (Rossion, 2009). This featural processing requires viewers to change their face scanning pattern toward more fixations on specific features (Barton et al., 2006; Hills et al., 2012) in order to collect all the facial information relevant to the task at hand (e.g. recognizing identity). Note that the same effects would be seen with upright faces viewed too close to allow for proper holistic processing, as described earlier. The simple lack of inhibition of the fixated feature can explain all the results we obtained with inverted stimuli.

At forehead fixation, no feature was in fovea. In intact inverted faces the eyes were the closest feature, so the activation of eye-coding neurons contributed to the overall N170 signal. This explains the increase in N170 amplitude for inverted faces fixated on the forehead and the lack of such an increase in inverted eyeless faces at this fixation location. When fixation was on the eyes, nose, or mouth, the increase in N170 amplitude between upright and inverted faces reflected the activation of neurons coding for the feature in fovea (greater than usual given the feature overrepresentation due to cortical magnification) plus the weaker activation of neurons coding for nearby features, itself dependent on retinotopic distance from fixation.

As mentioned in the preceding section, we assume that eyes, just like the other features, are also inhibited when fixated in upright faces (Fig. 7). When released from inhibition by the other face features in inverted intact faces, eye-coding neurons responded more than in upright faces, yielding an increase in N170 amplitude. When eyes were no longer present in fovea, eye-coding neurons did not respond and the N170 FIE was considerably diminished in eyeless faces. However, as mentioned earlier, the N170 amplitude for inverted eyeless faces was slightly but significantly larger than for upright eyeless faces even at eye fixation locations. Although no feature was in fovea, this effect can be explained by the contribution of neurons coding for nearby features (nose, face outline), which were close enough to the eye fixation locations to contribute to the overall response. Likewise, the empty nasion location is closer to the nose than either empty eye location is, so nose-coding neurons contributed slightly more to the overall response at nasion fixation; this explains the slightly larger N170 amplitude for nasion fixation than for eye fixations in inverted eyeless faces (Fig. 5).

When fixation was on the nose, neurons coding for that feature responded. Interestingly, at nose fixation the N170 amplitude for inverted eyeless faces was slightly reduced compared to inverted intact faces (and a clear reduction of the FIE was seen). Again, this can be explained by the slight contribution, in inverted intact faces, of eye-coding neurons responding to the eyes situated in the periphery of nose fixation, a contribution that was lost when eyes were no longer present (Fig. 7). In contrast, when fixation was on the mouth, the FIE did not differ significantly between intact and eyeless faces because, in intact faces, the eyes were too far from the mouth to contribute meaningfully to the overall response, making their absence in eyeless faces inconsequential (Fig. 7). Based on the present data and stimuli, features contribute to the overall N170 response only when they lie within about 2.8° to 3.5° of visual angle from the fixated feature (2.8° being the distance between an eye and the nose, and 3.5° the distance between an eye and the mouth), i.e. when they are in parafoveal vision. Angular distances between features would increase with larger faces (or shorter viewing distances) and decrease with smaller faces (or longer viewing distances), resulting in different contributions of features to the overall response and thus in variations of N170 amplitude with stimulus size and viewing distance.
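The graded pattern described above (FIE strongly attenuated by eye removal at eye fixation, less at nose fixation, not at mouth fixation) can be illustrated with a deliberately simple toy version of the LIFTED assumptions. The Python sketch below encodes: an inverse-linear fall-off with eccentricity of the kind often used to approximate cortical magnification; an extra gain for eyes standing in for the eye detector; a distance limit beyond which features neither contribute nor inhibit; and, for upright faces only, lateral inhibition of the foveated feature that grows as other features get closer. The feature coordinates, constants and function names are all hypothetical, and the model is not fitted to the N170 data; it is an illustration under stated assumptions, not the authors' implementation.

```python
import math

# Hypothetical feature layout (x, y) in degrees of visual angle.
# Illustrative only: these are NOT the coordinates of the study's stimuli.
INTACT_FACE = {
    "left_eye": (-1.5, 1.0),
    "right_eye": (1.5, 1.0),
    "nose": (0.0, -1.0),
    "mouth": (0.0, -2.5),
}
EYELESS_FACE = {k: v for k, v in INTACT_FACE.items() if "eye" not in k}

# All constants are hypothetical, chosen only to make the sketch run.
EYE_GAIN = 1.5      # extra drive standing in for the eye detector
E2 = 1.0            # eccentricity (deg) at which magnification halves
FOVEA_RADIUS = 1.0  # features closer than this to fixation count as foveated (deg)
REACH = 3.5         # beyond this distance a feature neither contributes nor inhibits (deg)
INHIB_K = 0.5       # scales the summed lateral inhibition


def magnification(ecc):
    """Relative cortical representation, falling off inverse-linearly with eccentricity."""
    return 1.0 / (1.0 + ecc / E2)


def proximity(d):
    """Weight decreasing linearly with distance, zero at or beyond REACH."""
    return max(0.0, 1.0 - d / REACH)


def n170_like(face, fixation, upright):
    """Summed response of hypothetical feature-coding units for one fixation location."""
    total = 0.0
    for name, pos in face.items():
        ecc = math.dist(fixation, pos)
        drive = EYE_GAIN if "eye" in name else 1.0
        if ecc < FOVEA_RADIUS:
            response = drive * magnification(ecc)
            if upright:
                # Parafoveal features inhibit the foveated one, more strongly when closer.
                inhibition = INHIB_K * sum(
                    proximity(math.dist(pos, other))
                    for other_name, other in face.items() if other_name != name
                )
                response *= 1.0 - min(1.0, inhibition)
        else:
            # Parafoveal features contribute weakly; distant ones not at all.
            response = drive * magnification(ecc) * proximity(ecc)
        total += response
    return total


def inversion_effect(face, fixation):
    """Toy FIE: inverted faces are modelled as lacking the lateral inhibition."""
    return n170_like(face, fixation, upright=False) - n170_like(face, fixation, upright=True)


for fix in ("left_eye", "nose", "mouth"):
    point = INTACT_FACE[fix]
    print(fix, round(inversion_effect(INTACT_FACE, point), 3),
          round(inversion_effect(EYELESS_FACE, point), 3))
```

With these hypothetical settings, the upright response is larger at eye than at nose fixation, and removing the eyes eliminates the toy FIE at eye fixation, roughly halves it at nose fixation and leaves it unchanged at mouth fixation. The small residual FIE observed at eye fixation in eyeless faces is not captured, because the sketch contains no face outline.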

4.6. Implications of the LIFTED model for visual perception

According to the LIFTED model, upright faces are processed holistically, which entails an inhibition of the neurons coding the foveated feature by the neurons coding features situated in parafovea, so as to cancel the foveal overrepresentation that would otherwise arise from cortical magnification. The strength of the lateral inhibitions depends on feature distances (and thus on stimulus size and viewing distance). In contrast, inverted faces are processed analytically, and the N170 they elicit results from the stronger response of neurons coding the foveated feature (due to foveal overrepresentation) in addition to the weaker contribution of neurons coding features in parafovea, itself a function of the features' angular distances from fixation. With this theory, there is no need to invoke the extra recruitment of object-sensitive neurons to account for the increase in N170 amplitude with face inversion, as proposed previously (e.g. Rossion et al., 1999; Itier & Taylor, 2002; Sadeh & Yovel, 2010; Yovel & Kanwisher, 2005). The FIE can be explained simply by the cancellation of the lateral inhibitions combined with foveal magnification. In other words, the specificity of early face processing lies in the lateral inhibition mechanism, which is weak or lacking for object representation. The N170 FIE is specific to faces because the release of this inhibition results in a larger response of the neurons coding for the feature in fovea when the face is inverted than when it is upright. Faces are also unique because they possess eyes, which are detected by the proposed eye detector in order to anchor the face percept. This theory can also explain why little or no FIE is seen on the N170 elicited by objects. Objects are processed mostly featurally regardless of their orientation, so the same neuronal activation occurs in both orientations: the main contribution comes from features within fovea, with little or no lateral inhibition from neurons coding object parts situated in parafovea.

Similarly, this theory can explain why upright animal faces yielded slightly larger N170 amplitudes than upright human faces (de Haan et al., 2002; Itier et al., 2011): the inhibition mechanism underlying holistic processing might not work properly because animal faces deviate slightly from the human face template. This impaired lateral inhibition for animal faces would also explain the lack of N170 FIE for other-species faces (de Haan et al., 2002; Itier et al., 2011; Wiese et al., 2009), as upright and inverted animal faces would be processed similarly and mostly featurally. Finally, a defect in this inhibition mechanism and/or in the eye detector could also be at the root of face recognition impairments in some clinical and neuropsychological disorders. For instance, individuals with developmental or acquired prosopagnosia have been reported to process upright and inverted faces similarly (Behrmann et al., 2005; Busigny & Rossion, 2010; Duchaine et al., 2007) and to make less use of the eye region (Caldara et al., 2005). Individuals with Autism Spectrum Disorder also seem to process upright and inverted faces similarly (e.g. Webb et al., 2012) and to avoid the eyes (Klin et al., 2002; Pelphrey et al., 2002), which might be linked to their reported deficits in face recognition (see Itier & Batty, 2009).

Conclusion

The N170 amplitude was larger when fixation was on the eyes than at any other facial location; this effect was seen regardless of face orientation and was lost when eyes were removed. These results suggest a special sensitivity of this ERP component to the eyes and support the eye detector theory. Importantly, all the modulations of the N170 can be explained by lateral inhibitions of the neurons coding for the fixated feature in fovea by the neurons coding for features situated in parafovea when faces are upright, which accounts for holistic processing, and by the loss of these inhibitions with inversion, which accounts for the perceptual narrowing phenomenon and the featural processing of inverted faces. The LIFTED model assumes that the percept, whether upright or inverted, is compared to an upright human face template. Beyond further testing of this new model, outstanding questions for future studies are i) what constitutes a face template, ii) how (and when) the percept is compared to the face template, and iii) what exactly the nature of the eye detector/anchoring mechanism is.

Supplementary Material

Acknowledgments

This study was supported by grants from the Natural Sciences and Engineering Research Council of Canada (NSERC Discovery Grant #418431), the Canada Foundation for Innovation (CFI), the Ontario Research Fund (ORF) and the Canada Research Chair program to RJI.

References

- Adini Y, Sagi D, Tsodyks M. Excitatory-inhibitory network in the visual cortex: psychophysical evidence. Proceedings of the National Academy of Sciences of the United States of America. 1997;94(19):10426–31. doi: 10.1073/pnas.94.19.10426.

- Allison T, Puce A, McCarthy G. Category-sensitive excitatory and inhibitory processes in human extrastriate cortex. Journal of Neurophysiology. 2002;88(5):2864–8. doi: 10.1152/jn.00202.2002.

- Arizpe J, Kravitz DJ, Yovel G, Baker CI. Start position strongly influences fixation patterns during face processing: difficulties with eye movements as a measure of information use. PLoS ONE. 2012;7(2):e31106. doi: 10.1371/journal.pone.0031106.

- Barton JJS, Radcliffe N, Cherkasova MV, Edelman J, Intriligator JM. Information processing during face recognition: The effects of familiarity, inversion, and morphing on scanning fixations. Perception. 2006;35(8):1089–1105. doi: 10.1068/p5547.

- Batki A, Baron-Cohen S, Wheelwright S, Connellan J, Ahluwalia J. Is there an innate gaze module? Evidence from human neonates. Infant Behavior and Development. 2000;23(2):223–229.

- Behrmann M, Avidan G, Marotta JJ, Kimchi R. Detailed exploration of face-related processing in congenital prosopagnosia: 1. Behavioral findings. Journal of Cognitive Neuroscience. 2005;17(7):1130–49. doi: 10.1162/0898929054475154.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bindemann M, Scheepers C, Burton A. Viewpoint and center of gravity affect eye movements to human faces. Journal of Vision. 2009;9:1–16. doi: 10.1167/9.2.7.

- Busigny T, Rossion B. Acquired prosopagnosia abolishes the face inversion effect. Cortex. 2010;46(8):965–81. doi: 10.1016/j.cortex.2009.07.004.

- Caldara R, Schyns PG, Mayer E, Smith ML, Gosselin F, Rossion B. Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. Journal of Cognitive Neuroscience. 2005;17(10):1652–66. doi: 10.1162/089892905774597254.

- Daniel PM, Whitteridge D. The representation of the visual field on the cerebral cortex in monkeys. The Journal of Physiology. 1961;159:203–21. doi: 10.1113/jphysiol.1961.sp006803.

- de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience. 2002;14(2):199–209. doi: 10.1162/089892902317236849.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Duchaine B, Yovel G, Nakayama K. No global processing deficit in the Navon task in 14 developmental prosopagnosics. Social Cognitive and Affective Neuroscience. 2007;2(2):104–13. doi: 10.1093/scan/nsm003.

- Dupuis-Roy N, Fortin I, Fiset D, Gosselin F. Uncovering gender discrimination cues in a realistic setting. Journal of Vision. 2009;9(2):10.1–8. doi: 10.1167/9.2.10.

- Eimer M. Does the face-specific N170 component reflect the activity of a specialized eye processor? NeuroReport. 1998;9(13):2945–8. doi: 10.1097/00001756-199809140-00005.

- Eimer M. Effects of face inversion on the structural encoding and recognition of faces: Evidence from event-related brain potentials. Cognitive Brain Research. 2000;10(1–2):145–158. doi: 10.1016/S0926-6410(00)00038-0.

- Eimer M, Kiss M, Nicholas S. Response profile of the face-sensitive N170 component: a rapid adaptation study. Cerebral Cortex. 2010;20(10):2442–52. doi: 10.1093/cercor/bhp312.

- Freiwald WA, Tsao DY, Livingstone MS. A face feature space in the macaque temporal lobe. Nature Neuroscience. 2009;12(9):1187–96. doi: 10.1038/nn.2363.

- Heisz JJ, Shore DI. More efficient scanning for familiar faces. Journal of Vision. 2008;8(1):1–10. doi: 10.1167/8.1.9.

- Hills PJ, Sullivan AJ, Pake JM. Aberrant first fixations when looking at inverted faces in various poses: The result of the centre of gravity effect? British Journal of Psychology. 2012;103(4):520–538. doi: 10.1111/j.2044-8295.2011.02091.x.