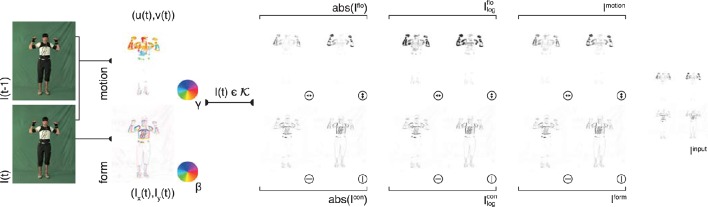

Figure 2.

Input generation. Each frame of an action sequence is transformed into a combined motion and form representation. In the top row, the optical flow estimated for two consecutive frames (first column) is displayed in the second column (direction γ color-encoded). The optical flow field is then separated into horizontal and vertical components (third column), and their absolute values are log-transformed (fourth column) and normalized (fifth column). Form representations (bottom row) are derived framewise by estimating the horizontal and vertical derivatives Ix and Iy (second column, gradient orientation with polarity β color-encoded). The resulting contrast images are then log-transformed and normalized. The form and motion representations are combined into a single feature map Iinput, which is then fed into the convolutional neural network. Image sizes are increased for better visibility. Written informed consent for the publication of exemplary images was obtained from the shown subject.
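The processing chain described in the caption can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The optical flow is assumed to be precomputed and passed in as an array, and the function names `log_normalize` and `build_input` are hypothetical. The caption does not specify several details, so the sketch makes its own choices: `log(1 + |x|)` as the log transform, division by the maximum as the normalization, finite differences for the derivatives, and channel stacking as the way the representations are combined.

```python
import numpy as np


def log_normalize(component, eps=1e-8):
    """Log-transform the absolute value of a map and scale it to [0, 1].

    Assumption: log(1 + |x|) and max-scaling; the caption only says
    "log-transformed" and "normalized".
    """
    magnitude = np.log1p(np.abs(component))
    return magnitude / (magnitude.max() + eps)


def build_input(frame, flow):
    """Combine motion and form representations into one feature map.

    frame: (H, W) grayscale image.
    flow:  (H, W, 2) precomputed optical flow, horizontal then vertical.
    Returns an (H, W, 4) array (assumed layout: channels stacked last).
    """
    frame = frame.astype(np.float64)

    # Form: np.gradient returns the derivative along rows (vertical, Iy)
    # first, then along columns (horizontal, Ix).
    i_y, i_x = np.gradient(frame)

    # Motion: split the flow field into its two components.
    u, v = flow[..., 0], flow[..., 1]

    channels = [log_normalize(c) for c in (u, v, i_x, i_y)]
    return np.stack(channels, axis=-1)
```

For a 64 × 64 frame with a matching flow field, `build_input(frame, flow)` returns a 64 × 64 × 4 array with values in [0, 1].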