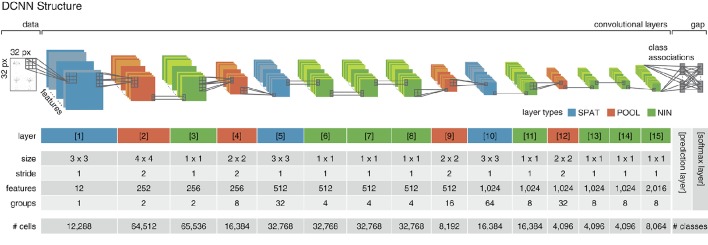

Figure 3.

Deep convolutional neural network structure. The implemented DCNN follows the structure proposed in Esser et al. (2016) and employs three different convolutional layer types (layers 1–15). Spatial layers (SPAT; colored in blue) perform a linear filtering operation by convolution. Pooling layers (POOL; colored in red) decrease the spatial dimensionality while increasing invariance and reducing the risk of overfitting. Network-in-network layers (NIN; colored in green) perform a parametric cross-channel integration (Lin et al., 2013). The proposed network consists of a data (or input) layer, 15 convolutional layers, and a prediction and a softmax layer on top. Each of the cells in the last convolutional layer (layer 15) is associated with one class of the classification problem. In the prediction layer, the average class-associated activations are derived (global average pooling, GAP), one per class, and fed into the softmax layer, where the cross-entropy error is calculated and propagated backwards through the network. The parameters used for the convolutional layers of the network are given in the central rows of the table. The last row lists the number of artificial cells per layer. The cell count in the prediction and softmax layers depends on the number of categories of the classification task (i.e., the number of actions in the dataset).
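
The prediction and softmax stages described in the caption can be illustrated with a minimal sketch. It assumes that the last convolutional layer yields one feature map per class; the function names, the toy 2×2 maps, and the target class are hypothetical and are not taken from the original implementation. The gradient shown is the standard softmax cross-entropy gradient with respect to the pooled scores, which would be the starting point for backpropagation.

```python
import math

def global_average_pool(feature_maps):
    """Reduce each class-associated map (a list of rows) to its mean activation."""
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

def softmax(scores):
    """Numerically stable softmax over the per-class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target):
    """Cross-entropy error for a single sample with an integer class label."""
    return -math.log(probs[target])

def score_gradient(probs, target):
    """Gradient of the cross-entropy error w.r.t. the pooled scores: p - one_hot."""
    return [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]

# Hypothetical output of the last convolutional layer: 3 classes, 2x2 cells each.
layer15 = [
    [[1.0, 3.0], [2.0, 2.0]],
    [[0.0, 1.0], [1.0, 0.0]],
    [[-1.0, 1.0], [0.0, 0.0]],
]

scores = global_average_pool(layer15)   # prediction layer: one value per class
probs = softmax(scores)                 # softmax layer: class probabilities
loss = cross_entropy(probs, target=0)   # error for an assumed true class 0
grad = score_gradient(probs, target=0)  # signal propagated backwards
```

Because global average pooling is a plain mean, each cell of a class map would receive the same share of that class's gradient (the score gradient divided by the number of cells in the map).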