Abstract

PURPOSE

Technology could transform routine decision making by anticipating patients’ information needs, assessing where patients are with decisions and preferences, personalizing educational experiences, facilitating patient-clinician information exchange, and supporting follow-up. This study evaluated whether patients and clinicians would use such a decision module and examined its impact on care, using 3 cancer screening decisions as test cases.

METHODS

Twelve practices with 55,453 patients using a patient portal participated in this prospective observational cohort study. Participation was open to patients who might face a cancer screening decision: women aged 40 to 49 who had not had a mammogram in 2 years, men aged 55 to 69 who had not had a prostate-specific antigen test in 2 years, and adults aged 50 to 74 overdue for colorectal cancer screening. Data sources included module responses, electronic health record data, and a postencounter survey.

RESULTS

In 1 year, one-fifth of the portal users (11,458 patients) faced a potential cancer screening decision. Among these patients, 20.6% started and 7.9% completed the decision module. Fully 47.2% of module completers shared responses with their clinician. After their next office visit, 57.8% of those surveyed thought their clinician had seen their responses, and many reported the module made their appointment more productive (40.7%), helped engage them in the decision (47.7%), broadened their knowledge (48.1%), and improved communication (37.5%).

CONCLUSIONS

Many patients face decisions that can be anticipated and proactively facilitated through technology. Although use of technology has the potential to make visits more efficient and effective, cultural, workflow, and technical changes are needed before it could be widely disseminated.

Keywords: health information technology, screening, decision making, computer-assisted, patient education, primary care, practice-based research

INTRODUCTION

Today’s patients face complex medical decisions. Clinicians traditionally support patients with decisions by giving advice during in-person visits. Unfortunately, the competing demands and time constraints associated with office visits limit the help clinicians can realistically provide.1,2 More importantly, difficult and even routine choices may entail a “decision journey”: the support required may extend over a period during which patients contemplate options, gather additional information, confer with family and friends, consider personal preferences, and address worries or concerns.3–5 These tasks go beyond what can be accomplished during an office visit.6–9

Although the components of a “good” medical decision journey have been well described,10–13 patients generally receive limited support during these journeys.14–17 Patients want to be included in decisions, yet many decisions occur with minimal patient input.17–19 When conversations do take place, they rarely include all elements needed for an informed decision.14,18,20 One proven solution is to use decision aids. Decision aids increase knowledge, reduce decisional conflict, engage patients, and reduce rates of elective invasive procedures and unnecessary testing.21–23 Yet it is difficult for clinicians to routinely use decision aids, and accordingly, they are not commonly used.24–29

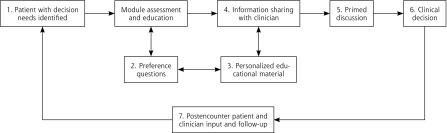

Health information technology (HIT) offers the potential to systematically automate decision-making processes outside the constraints of clinical encounters. This strategy could allow visits with clinicians to focus on the decision elements best addressed in person.30,31 HIT has the capacity to anticipate decision needs through programmed logic, deliver information and decision support before visits, collect patient-reported information, share information with the clinician about where the patient is with decisions and preferences, set a decision-making agenda, and even follow up on next steps (Figure 1).

Figure 1.

Conceptual model for engaging patients through an informed decision-making module embedded in a patient portal and electronic health record.

Note: To engage patients in their decision, the informed decision-making (IDM) module guides patients and clinicians through a series of 7 steps that can be applied to a wide range of decisions beyond the test case (cancer screening) investigated in this study. The IDM module (1) reaches patients outside the confines of an office visit to explore a potential decision by completing the module; (2) walks patients through an intake that assesses personal preferences, knowledge, and needs, and patients’ readiness to make a decision; (3) provides personalized educational material tailored to patients’ stated preferences and decision stage; (4) allows patients to share their preferences and decision needs with their clinician; (5) prompts patients and clinicians to use the reported information to make a decision; (6) guides the patient to make a choice, which can include deferring the decision; and (7) invites patients and clinicians to provide input after the encounter.

METHODS

This pragmatic observational cohort study evaluated how clinicians and patients used an integrated decision module and explored the module’s impact on care for 3 routine decision scenarios: when to start breast cancer screening, how to be screened for colorectal cancer, and whether to be screened for prostate cancer. These 3 screening topics were selected because they represent common patient decisions. Even prostate cancer screening, although not recommended for the general population, is often raised by patients and may be appropriate for a subset of men willing to risk screening harms.32 The study was reviewed by the Virginia Commonwealth University Institutional Review Board (HM14750).

Setting

Twelve primary care practices in northern Virginia participated. The practices shared 1 of 2 electronic health records (EHRs), Allscripts Enterprise or Professional. All practices used a central patient portal, called MyPreventiveCare.33 At the time of the study, 55,453 unique patients (34.5% of the practice population) had a portal account and 23,546 used the portal during the study period. Although portal users were representative of the practices, prior investigation demonstrated users were more likely to be older or have comorbidities, and were slightly less likely to be African American or Hispanic.34

Participants

Patients aged 50 to 75 years who were overdue for colorectal cancer screening,35 women aged 40 to 49 years who had not had a mammogram in 2 years, and men aged 55 to 69 years who had not obtained a prostate-specific antigen (PSA) test in 2 years were invited to complete the decision module. Patients were excluded if they had a prior diagnosis of colorectal, breast, or prostate cancer, or prior abnormal screening test results.
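The eligibility rules above are simple enough to express as code. The Python sketch below is a hypothetical illustration, not the study’s EHR query: the `Patient` fields and `screening_decisions` function are invented names, “2 years” is approximated as 730 days, colorectal overdue status is assumed to be precomputed elsewhere (the criteria in reference 35 are not reproduced), and the exclusions are assumed to remove a patient from all 3 decisions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional, Set

# Assumption: "2 years" approximated as 730 days.
TWO_YEARS = timedelta(days=730)


@dataclass
class Patient:
    # Hypothetical record shape; field names are illustrative, not the study's EHR schema.
    age: int
    sex: str                           # "F" or "M"
    last_mammogram: Optional[date]
    last_psa: Optional[date]
    colorectal_overdue: bool           # assumed precomputed by separate guideline logic
    prior_target_cancer: bool          # prior colorectal, breast, or prostate cancer
    prior_abnormal_screen: bool


def _not_within_two_years(last: Optional[date], today: date) -> bool:
    """True if the test was never done or was done more than 2 years ago."""
    return last is None or today - last > TWO_YEARS


def screening_decisions(p: Patient, today: date) -> Set[str]:
    """Return the cancer screening decisions a patient may face (hypothetical sketch)."""
    if p.prior_target_cancer or p.prior_abnormal_screen:
        return set()  # assumption: exclusions apply to every decision
    decisions: Set[str] = set()
    if 50 <= p.age <= 75 and p.colorectal_overdue:
        decisions.add("colorectal")
    if p.sex == "F" and 40 <= p.age <= 49 and _not_within_two_years(p.last_mammogram, today):
        decisions.add("breast")
    if p.sex == "M" and 55 <= p.age <= 69 and _not_within_two_years(p.last_psa, today):
        decisions.add("prostate")
    return decisions


if __name__ == "__main__":
    man = Patient(60, "M", None, date(2011, 1, 1), True, False, False)
    print(sorted(screening_decisions(man, date(2014, 3, 1))))  # ['colorectal', 'prostate']
```

A single patient can face more than 1 decision, which is why the sketch returns a set rather than a single label.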

Invitations to use the module occurred in 3 phases. In phase 1 (January 6, 2014, through February 16, 2014), eligible patients who logged into the portal were prompted to review the module. In phase 2 (February 17, 2014, through May 25, 2014), eligible patients who had a visit scheduled 2 weeks in advance were e-mailed invitations to review the module 2 weeks, 1 week, and 2 days before their visit. In phase 3 (May 26, 2014, through August 15, 2014), any eligible patient was e-mailed up to 3 weekly invitations to review the module, irrespective of appointment status.
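The phase 2 and phase 3 invitation schedules reduce to date arithmetic. In this hypothetical Python sketch, the function and constant names are illustrative. “Scheduled 2 weeks in advance” is read as a visit booked at least 14 days ahead, and stopping phase 3 invitations once the module is completed is an assumption the text does not state.

```python
from datetime import date, timedelta
from typing import List, Optional

PHASE2_OFFSETS_DAYS = (14, 7, 2)  # 2 weeks, 1 week, and 2 days before the visit
PHASE3_MAX_INVITES = 3            # up to 3 weekly invitations


def phase2_invites(visit: date, scheduled_on: date) -> List[date]:
    """Invitation dates for a visit booked at least 14 days ahead (assumption); else none."""
    if visit - scheduled_on < timedelta(days=14):
        return []
    return [visit - timedelta(days=d) for d in PHASE2_OFFSETS_DAYS]


def phase3_invites(first_flagged: date, completed_on: Optional[date] = None) -> List[date]:
    """Up to 3 weekly invitations regardless of appointments.

    Assumption: invitations stop once the patient has completed the module.
    """
    invites: List[date] = []
    for week in range(PHASE3_MAX_INVITES):
        send = first_flagged + timedelta(weeks=week)
        if completed_on is not None and completed_on <= send:
            break
        invites.append(send)
    return invites
```

Tying phase 2 invitations to the appointment date is what keeps the module session close to the visit; phase 3 trades that proximity for reach.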

Decision Module

The decision module promoted the 2012 prostate, 2009 breast, and 2008 colon cancer screening recommendations made by the US Preventive Services Task Force.32,35,36 It included 7 components (Figure 1). The system identified eligible patients through well-established programmed logic by querying the EHR and portal data.33 It flagged eligible patients when they used the portal (phase 1) or during a daily scheduled query (phases 2 and 3) and then invited patients by prompt or e-mail to complete a previsit assessment in the decision module. Seventeen interactive questions explored patients’ stage of readiness for deciding about cancer screening, information needs and fears, desired assistance, preferred format for receiving information and statistics, and planned next steps.

Based on patients’ responses, the module assembled a customized educational page that included information about topics of greatest interest to patients (eg, risk of cancer, benefits or harms of screening, screening logistics) in the format they preferred (ie, words, numbers, pictures, or stories). Questions were derived from validated instruments when possible.37–39
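One way to picture the tailoring step is as a lookup keyed by topic and format. The Python sketch below assumes a hypothetical content library with one entry per (topic, format) pair and a numeric interest score per topic; the module’s actual scoring and content are not described here, so `LIBRARY`, `build_page`, and the score scale are illustrative only.

```python
from typing import Dict, List, Tuple

TOPICS = ("risk of cancer", "benefits or harms of screening", "screening logistics")
FORMATS = ("words", "numbers", "pictures", "stories")

# Hypothetical content library: one placeholder item per (topic, format) pair.
LIBRARY: Dict[Tuple[str, str], str] = {
    (t, f): f"{t} [{f}]" for t in TOPICS for f in FORMATS
}


def build_page(interests: Dict[str, int], preferred_format: str) -> List[str]:
    """Assemble a tailored page: topics of interest, highest score first,
    each rendered in the patient's preferred format.

    `interests` maps topic -> score (0 = not interested); the scale is an assumption.
    """
    if preferred_format not in FORMATS:
        raise ValueError(f"unknown format: {preferred_format}")
    ranked = sorted(
        (t for t, score in interests.items() if score > 0 and t in TOPICS),
        key=lambda t: -interests[t],
    )
    return [LIBRARY[(t, preferred_format)] for t in ranked]
```

Topics the patient rates as uninteresting are simply omitted, so the page stays short.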

The module asked patients if they would like to discuss screening at their next office visit and if they would like a summary to be automatically forwarded to their clinician for review. The summary included whether patients had made a screening decision, the topics they wanted to discuss, their fears and worries, and their preferred level of decision control.37 Supplemental Appendix 1, available at http://www.annfammed.org/content/15/3/217/suppl/DC1/, displays screenshots of MyQuestions, educational content, and clinician summaries. The system automatically sent a postencounter questionnaire to both the patient and clinician after the office visit. The questionnaire sought feedback on the module and the events before, during, and after the clinical encounter. Nonresponders received up to 5 reminders.

The design of the module was informed by a 2-year process of patient and stakeholder engagement that included focus groups to identify important module content; iterative patient input on draft module content; cognitive and usability testing consistent with national standards40; and a patient, clinician, and health system workgroup that met quarterly for 2 years to help plan the decision module design, implementation, and interpretation of findings.41 A clinician from each practice served on the clinician workgroup and helped standardize and promote implementation at his or her office.

Outcomes

Primary outcomes included measures of how the decision module was used and its impact on decision making. We used 5 data sources: (1) the EHR (demographics, diagnoses, screening status, module eligibility); (2) the portal (health risk assessment responses, receipt of prompt or e-mail invitations); (3) the decision module (patients’ responses and paradata, ie, clicks within the decision module pertaining to use of educational materials)42,43; (4) the patient postencounter questionnaire; and (5) the clinician postencounter questionnaire (see Supplemental Appendices 2 and 3, respectively, for the questionnaires, available at http://www.annfammed.org/content/15/3/217/suppl/DC1/).

Decision module uptake—measured by the number of eligible patients who started and completed the module—was calculated by race, ethnicity, sex, preferred language, insurance type, and phase of module invitation. Measures of module use included educational content patients reviewed (paradata), whether patients elected to share responses with their clinician, and whether patients believed their clinician reviewed their forwarded summary. EHR data indicated whether patients obtained breast, colorectal, or prostate screening within 3 months of using the module.

Analysis

The analysis included all patients with a MyPreventiveCare account who met eligibility criteria. Patients were subdivided into starters and nonstarters, and starters were further subdivided into completers and noncompleters. We used χ2 tests for independent group comparisons, generalized linear mixed models (GLMMs) for dependent group comparisons, and ANOVA to compare time to follow-up between groups. We used a GLMM when comparing phase eligibility to include a patient-level random effect that accounted for patients being eligible in multiple phases. We did not adjust for other covariates. All analyses were completed using SAS version 9.4 (SAS Institute).
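As a rough illustration of the χ2 comparisons, the Python sketch below computes a Pearson χ2 test of independence for starting the module by screening decision, using the pooled counts in Table 1. It is not the authors’ SAS analysis: it ignores the phase structure that the GLMM accounted for and tests only starting, not completion. With 2 degrees of freedom the χ2 upper-tail probability has the closed form exp(-x/2), so no statistics library is needed.

```python
import math
from typing import List


def chi_square_independence(table: List[List[int]]) -> float:
    """Pearson chi-square statistic for an r x c table (no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value_df2(stat: float) -> float:
    """Upper-tail p-value of a chi-square with 2 df, which equals exp(-x/2)."""
    return math.exp(-stat / 2)


# Starters vs nonstarters by decision (colon, breast, prostate), phases combined (Table 1).
starters = [1249, 638, 468]
eligible = [6329, 3733, 1396]
table = [starters, [e - s for e, s in zip(eligible, starters)]]

stat = chi_square_independence(table)
df = (len(table) - 1) * (len(table[0]) - 1)  # (2 - 1) * (3 - 1) = 2
p = p_value_df2(stat)
```

The closed-form p-value only holds for 2 degrees of freedom; other table shapes would need a general χ2 survival function.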

RESULTS

Decision Module Use

During the nearly 1-year study period, 11,458 patients (20.7% of 55,453 unique portal users) faced a screening decision for colorectal cancer (6,329 patients), breast cancer (3,733 patients), or prostate cancer (1,396 patients) (Table 1). Prompted by the invitation, 2,355 patients started and 903 completed the module. Patients faced with a prostate cancer screening decision were most likely, while patients faced with breast cancer screening were least likely, to start and complete the module (P <.001).
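The uptake percentages follow directly from the counts. A minimal Python sketch, assuming the 3 ratios reported in Table 1 (starters of eligible, completers of starters, completers of eligible) rounded to 1 decimal place; the dictionary and function names are illustrative.

```python
from typing import Dict

# Counts from Table 1 (phases 1, 2, and 3 combined).
ELIGIBLE = {"colon": 6329, "breast": 3733, "prostate": 1396}
STARTERS = {"colon": 1249, "breast": 638, "prostate": 468}
COMPLETERS = {"colon": 489, "breast": 190, "prostate": 224}


def pct(num: int, den: int) -> float:
    """Percentage rounded to 1 decimal place."""
    return round(100 * num / den, 1)


def uptake(eligible: int, starters: int, completers: int) -> Dict[str, float]:
    """The 3 uptake ratios used in Table 1."""
    return {
        "started_of_eligible": pct(starters, eligible),
        "completed_of_starters": pct(completers, starters),
        "completed_of_eligible": pct(completers, eligible),
    }


total = uptake(sum(ELIGIBLE.values()), sum(STARTERS.values()), sum(COMPLETERS.values()))
by_decision = {k: uptake(ELIGIBLE[k], STARTERS[k], COMPLETERS[k]) for k in ELIGIBLE}
```

For the pooled totals this yields 20.6% started, 38.3% of starters completed, and 7.9% of eligible patients completed.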

Table 1.

Patients Starting and Completing the Informed Decision-Making Module by Study Phase and Cancer Screening Decision

| Module Use | Colon | Breast | Prostate | Total |
|---|---|---|---|---|
| Overall use: phases 1, 2, and 3 combinedᵃ | | | | |
| Eligible, No. | 6,329 | 3,733 | 1,396 | 11,458 |
| Starters, No. (%) | 1,249 (19.7) | 638 (17.1) | 468 (33.5) | 2,355 (20.6) |
| Completers, No. | 489 | 190 | 224 | 903 |
| Of starters, % | 39.2 | 29.8 | 47.9 | 38.3 |
| Of eligible, % | 7.7 | 5.1 | 16.0 | 7.9 |
| Phase 1: prompt on MyPreventiveCare log-inᵃ | | | | |
| Eligible, No. | 542 | 297 | 171 | 1,010 |
| Starters, No. (%) | 154 (28.4) | 70 (23.6) | 86 (50.3) | 310 (30.7) |
| Completers, No. | 39 | 12 | 21 | 72 |
| Of starters, % | 25.3 | 17.1 | 24.4 | 23.2 |
| Of eligible, % | 7.2 | 4.0 | 12.3 | 7.1 |
| Phase 2: prompt via e-mail before appointmentᵃ | | | | |
| Eligible, No. | 354 | 171 | 85 | 610 |
| Starters, No. (%) | 140 (39.5) | 53 (31.0) | 53 (62.4) | 246 (40.3) |
| Completers, No. | 75 | 19 | 35 | 129 |
| Of starters, % | 53.6 | 35.9 | 66.0 | 52.4 |
| Of eligible, % | 21.2 | 11.1 | 41.2 | 21.1 |
| Phase 3: prompt via e-mail without an appointmentᵃ | | | | |
| Eligible, No. | 5,136 | 3,220 | 1,080 | 9,436 |
| Starters, No. (%) | 469 (9.1) | 264 (8.2) | 189 (17.5) | 922 (9.8) |
| Completers, No. | 174 | 77 | 87 | 338 |
| Of starters, % | 37.1 | 29.2 | 46.0 | 36.7 |
| Of eligible, % | 3.4 | 2.4 | 8.1 | 3.6 |

ᵃ Phases 1, 2, and 3 combined: January 2, 2014, through August 15, 2014. Phase 1: January 2, 2014, through February 16, 2014. Phase 2: February 17, 2014, through May 25, 2014. Phase 3: May 26, 2014, through August 15, 2014.

Note: The difference in starting and completing the decision module was statistically different for breast vs colorectal (P =.001), breast vs prostate (P <.001), and colorectal vs prostate cancer (P <.001) screening decisions. The difference in starting and completing the decision module was statistically different for phase 1 vs phase 2 (P <.001), phase 1 vs phase 3 (P <.001), and phase 2 vs phase 3 (P <.001).

Patients eligible in phase 2 were most likely to start the module (phase 1, 2, and 3 = 30.7%, 40.3%, and 9.8%, P <.001) and complete the module (phase 1, 2, and 3 = 7.1%, 21.1%, and 3.6%, P <.001) (Table 1). Although phase 3 had the lowest percentages of starters and completers, it included the most patients and therefore yielded the largest absolute number of starters and completers. A detailed analysis of module attrition has been previously reported.44

When the module was used, paradata indicated that 291 patients (23.6%) clicked on at least 1 educational resource in the module (Supplemental Appendix 1, section 16), viewing an average of 3.5 resources per patient. The topics patients reviewed most often were options for getting screened (206 patients, 70.8%), what screening test works best (145 patients, 49.8%), problems or complications from screening (133 patients, 45.7%), and how the test is performed (120 patients, 41.2%). Among the 1,044 patients who reached the forwarding question, 493 (47.2%) indicated a desire to forward a summary to their clinician.

Characteristics of Decision Module Users

Women were less likely than men to use the module (Table 2). Within the colorectal cancer screening cohort, which included both sexes, more men started the module (P =.03). Similarly, across all phases, men were more likely than women to share a summary with their clinician (56.3% vs 37.0%, P <.001). Module use was lower for patients with no prior screening than for those previously screened (P <.001) and for patients who preferred a language other than English (P <.001). Hispanics tended to be less likely than non-Hispanics to start the module (P =.07). Factors other than socioeconomic status also appeared important. Asian patients, a group that often has high socioeconomic status,45 were least likely to start the module (P <.001), and African American patients were most likely to complete it (P <.001). Start and completion rates were higher for Medicare beneficiaries than for commercially insured patients (P <.001).

Table 2.

Use of the Informed Decision-Making Module by Demographic Characteristics

| Characteristic | Module Starters, Noncompleters | Module Starters, Completers | Module Nonstarters |
|---|---|---|---|
| Age, mean (SD), y | 53.5 (8.3) | 54.9 (8.2) | 52.4 (8.2) |
| Total, No. (%) | 1,452 (12.7) | 903 (7.9) | 9,103 (79.4) |
| Sex, No. (%) | | | |
| Male | 595 (13.9) | 472 (11.1) | 3,201 (75.0) |
| Female | 857 (11.9) | 431 (6.0) | 5,902 (82.1) |
| Race, No. (%) | | | |
| Asian | 132 (11.4) | 60 (5.2) | 966 (83.4) |
| African American | 62 (12.6) | 63 (12.8) | 368 (74.6) |
| White | 1,019 (13.7) | 618 (8.3) | 5,788 (78.0) |
| Other | 67 (11.7) | 43 (7.5) | 464 (80.8) |
| Unreported | 172 (9.5) | 119 (6.6) | 1,517 (83.9) |
| Ethnicity, No. (%) | | | |
| Hispanic | 43 (9.7) | 38 (8.5) | 364 (81.8) |
| Non-Hispanic | 1,065 (13.5) | 658 (8.4) | 6,153 (78.1) |
| Unknown | 344 (11.0) | 207 (6.6) | 2,586 (82.4) |
| Language, No. (%) | | | |
| English | 1,249 (13.3) | 770 (8.2) | 7,375 (78.5) |
| Other | 203 (9.8) | 133 (6.5) | 1,728 (83.7) |
| Insurance type, No. (%) | | | |
| Commercial | 1,304 (13.8) | 782 (8.3) | 7,349 (77.9) |
| Medicare | 92 (17.2) | 69 (12.9) | 374 (69.9) |
| Medicaid | 1 (20.0) | 0 (0) | 4 (80.0) |
| None | 55 (3.7) | 52 (3.5) | 1,376 (92.8) |
| Prior screening, No. (%) | | | |
| Yes | 538 (14.3) | 347 (9.3) | 2,861 (76.4) |
| No | 914 (11.9) | 556 (7.2) | 6,242 (80.9) |

Note: Given the large sample size, all differences across groups (noncompleters, completers, and nonstarters) were statistically significant (P <.001) with the exception of Medicaid insurance type.

Impact on Care

The next office visit occurred, on average, 50 days (range, 0–312 days) after the decision module session. The average differed by phase (phase 1 = 160 days, phase 2 = 7 days, and phase 3 = 14 days; P <.001), although some patients in each phase did not have a visit after module use. About 50% to 60% of patients and clinicians reported the clinician reviewed the forwarded summary before the visit. Patients reported that screening was discussed more often during wellness visits than during chronic or acute care visits (82.5%, 63.9%, 60.0%, respectively, P =.03) (Table 3). Patients who forwarded a summary were slightly more likely to report discussion of screening than those who did not (72.9% vs 67.7%, P =.04).

Table 3.

Satisfaction With the Informed Decision-Making Module and Reported Impact on Care

| Statement or Measure | Patient Agreement, % (n = 277)ᵃ | Clinician Agreement, % (n = 281)ᵇ |
|---|---|---|
| Doctor believed to have seen response summary at time of appointment | | |
| Yes | 57.8 | 50.0 |
| No | 21.1 | 50.0 |
| Cannot remember | 21.1 | 0 |
| Doctor discussed screening test at visit | | |
| Yes | 70.7 | 65.5 |
| No | 20.7 | 24.6 |
| Cannot remember | 8.6 | 9.9 |
| How use of module changed the conversation | | |
| Motivated patient to talk with doctor | 39.0 | 41.3 |
| Prompted doctor to talk with patient | 28.1 | 39.7 |
| Did not change anything | 47.3 | 33.3 |
| Other | 2.1 | 9.5 |
| How conversation helped patient with fears or worries ranked as most important on module | | |
| Reduced fears or worries | 80.9 | NA |
| Did not help with fears or worries | 19.1 | NA |
| Doctor recalled addressing patients’ fears or worries about cancer screening | | |
| Yes | NA | 39.0 |
| No | NA | 29.3 |
| Cannot remember | NA | 31.7 |
| Strongly/somewhat agree vs strongly/somewhat disagree regarding completion of module and forwarding of summaryᶜ | | |
| Look and layout were easy to understand | 56.0 vs 9.7 | NA |
| Took too long to complete | 34.3 vs 29.2 | NA |
| Was easy to complete | 72.2 vs 11.1 | NA |
| Helped patient with cancer screening decision | 42.6 vs 20.4 | 44.9 vs 8.2 |
| Made visit more productive | 40.7 vs 17.6 | 38.1 vs 16.3 |
| Got patient more involved with the decision | 47.7 vs 17.6 | 51.7 vs 6.1 |
| Helped to change patient’s screening plans | 22.7 vs 30.1 | 13.6 vs 17.0 |
| Improved patient-doctor communication | 37.5 vs 16.7 | 42.2 vs 12.2 |
| Improved patient’s knowledge before visit | 48.1 vs 15.7 | 45.6 vs 6.1 |
| Made the doctor more sensitive to patient’s needs | 27.3 vs 11.6 | 48.3 vs 10.2 |

NA = not applicable.

ᵃ Response rate = 44.7%.

ᵇ Response rate = 45.3%.

ᶜ Response options were strongly agree, somewhat agree, neither agree nor disagree, somewhat disagree, and strongly disagree. Values for neither agree nor disagree are not reported in table.

A sizable subset of patients and clinicians (roughly 30% to 50%) reported that the module prompted cancer screening discussions, helped patients get more involved, improved knowledge, enhanced communication, made clinicians more sensitive to patients’ concerns, and facilitated decisions (Table 3). Although only 39.0% of clinicians recalled addressing the patient’s fears or worries, 80.9% of patients said the clinician helped reduce their fears or worries.

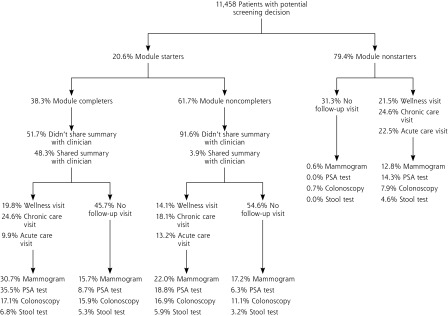

Module completion was associated with screening behavior (Figure 2): completers were more likely than nonstarters and noncompleters to be screened within 3 months (P <.001), a pattern suggesting that they had already made a screening choice, mostly to be screened. Among patients eligible for colon cancer screening, completers were more likely than nonstarters to get a colonoscopy (P <.001) or a stool test (P <.001), and relative to nonstarters, completers showed a greater increase in colonoscopies than in stool tests (P <.001).

Figure 2.

Relationship of the informed decision-making module with follow-up visits and with breast, colorectal, and prostate cancer screening.

PSA = prostate-specific antigen.

Note: Percentages of patients who received screening tests were derived from electronic health record data for a period of 3 months after completion of the decision module. Although the colorectal cancer screening rate appears low, this study included only the subset of practice patients overdue for that screening. On the basis of prior studies and practice quality program participation, about 70% of patients in the study practices have been screened for colorectal cancer.46

DISCUSSION

This pragmatic observational cohort study harnessed EHR data and patient portal capabilities to create an automated decision module that could identify patients likely to be facing common cancer screening decisions, engage and inform patients, clarify questions and fears, identify next decision steps, and improve the decision-making process. We found that practices had large decision burdens, with 1 in 5 patients facing a decision, yet only 20.6% of patients facing a decision started and 7.9% completed our decision module. Users reviewed a range of topics, and nearly one-half of patients who reached the forwarding question shared their priorities and concerns with clinicians. Both patients and clinicians reported that module completion helped with decisions: one-third to one-half reported it made appointments more productive, got patients more involved in decisions, broadened knowledge, and improved communication.

Our proposed decision module is appealing, yet a clear challenge is getting patients to use such a system. Decision support use is high in the context of clinical trials.23,25 Routine use of decision support in clinical practice, which we tried to automate in this study, is low (9% to 10% of encounters in implementation trials), however.26 Furthermore, pragmatic trials looking at patient portal use frequently demonstrate low initial uptake with increasing use over time as the portal becomes part of new standard workflows.47,48

Our findings regarding patient use by phase provide some insight into how such systems could be integrated into workflow. Linking invitations to visits (phase 2) resulted in greater response rates and less time between portal use and visits; inviting anyone with a need (phase 3) resulted in a greater absolute number of users and may have prompted needed visits. Findings further suggest some groups of patients (possibly men more than women and African Americans more than Asians) and types of decisions (prostate cancer screening more than breast cancer screening) may derive greater benefit from a decision module, but comparison trials are needed to fully understand these observations.

Most trials of traditional decision aids demonstrate reduced use of services with marginal benefit and increased use of beneficial services.23,49–52 Although Figure 2 shows decision module users had higher rates of breast and prostate cancer screening, which may not be desirable for screening services with small or zero net benefit, users self-selected, and module responses indicated a substantial proportion were already inclined to be screened. Randomized controlled trials of similar systems and trials that assess whether decisions better align with values are needed. Additionally, longer observation periods are required to capture the full increase in desired colorectal cancer screening.

This feasibility study had limitations. It involved a large population but lacked controls. It relied on surveys to gauge associations. Completers were more likely to be screened, but this self-selected sample may have been more motivated than other patients. We conducted paired postencounter surveys to elicit feedback from patients and clinicians after visits; overall response rates were 45%, and the rate of paired responses was lower.

Confirming this study’s findings in controlled trials will be important and has implications well beyond cancer screening. Health systems and payers could expand the role of portals to help patients prepare for other complex decisions. Implementing this model of using technology for decision making is likely to encounter technologic challenges,53–56 and engaging patients online and integrating the process with practice workflow may not be easy. If, however, future research confirms the benefits of this approach—more informed patients, better decisions, and wiser use of encounter time—the return on investment could offset the implementation costs and improve care.

Acknowledgment

Our practice partners at Privia Medical Group and Fairfax Family Practice Centers provided their insights and hard work; they include Broadlands Family Practice, Family Medicine of Clifton/Centreville, Fairfax Family Practice, Herndon Family Medicine, Lorton Stations Family Medicine, Prince William Family Medicine, South Riding Family Medicine, Town Center Family Medicine, and Vienna Family Medicine.

Footnotes

Conflicts of interest: authors report none.

Funding support: Funding and support for this project were provided by the Patient-Centered Outcomes Research Institute (PCORI grant no. IP2PI000516-01) and the National Center for Advancing Translational Sciences (CTSA grant no. ULTR00058).

Disclaimer: The opinions expressed in this manuscript are those of the authors and do not necessarily reflect those of the funders.

Supplementary materials: Available at http://www.AnnFamMed.org/content/15/3/217/suppl/DC1/.

References

- 1.Solberg LI. Theory vs practice: should primary care practice take on social determinants of health now? No. Ann Fam Med. 2016;14(2):102–103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Yarnall KS, Pollak KI, Østbye T, Krause KM, Michener JL. Primary care: is there enough time for prevention? Am J Public Health. 2003;93(4):635–641. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Longo DR, Ge B, Radina ME, et al. Understanding breast cancer patients’ perceptions: health information-seeking behaviour and passive information receipt. J Commun Healthc. 2009;2(2):184–206. [Google Scholar]

- 4.Longo DR, Woolf SH. Rethinking the information priorities of patients. JAMA. 2014;311(18):1857–1858. [DOI] [PubMed] [Google Scholar]

- 5.Longo DR, Schubert SL, Wright BA, LeMaster J, Williams CD, Clore JN. Health information seeking, receipt, and use in diabetes self-management. Ann Fam Med. 2010;8(4):334–340. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Légaré F, Ratté S, Gravel K, Graham ID. Barriers and facilitators to implementing shared decision-making in clinical practice: update of a systematic review of health professionals’ perceptions. Patient Educ Couns. 2008;73(3):526–535. [DOI] [PubMed] [Google Scholar]

- 7.Silveira MJ, Feudtner C. Shared medical decision making. JAMA. 2005;293(9):1058–1059. [DOI] [PubMed] [Google Scholar]

- 8.Jaén CR, Stange KC, Nutting PA. Competing demands of primary care: a model for the delivery of clinical preventive services. J Fam Pract. 1994;38(2):166–171. [PubMed] [Google Scholar]

- 9.Stange KC, Zyzanski SJ, Jaén CR, et al. Illuminating the ‘black box’. A description of 4454 patient visits to 138 family physicians. J Fam Pract. 1998;46(5):377–389. [PubMed] [Google Scholar]

- 10.Briss P, Rimer B, Reilley B, et al. ; Task Force on Community Preventive Services. Promoting informed decisions about cancer screening in communities and healthcare systems. Am J Prev Med. 2004;26(1):67–80. [DOI] [PubMed] [Google Scholar]

- 11.Braddock CH, III, Edwards KA, Hasenberg NM, Laidley TL, Levinson W. Informed decision making in outpatient practice: time to get back to basics. JAMA. 1999;282(24):2313–2320. [DOI] [PubMed] [Google Scholar]

- 12.Woolf SH. Shared decision-making: the case for letting patients decide which choice is best. J Fam Pract. 1997;45(3):205–208. [PubMed] [Google Scholar]

- 13.Gupta M. Improved health or improved decision making? The ethical goals of EBM. J Eval Clin Pract. 2011;17(5):957–963. [DOI] [PubMed] [Google Scholar]

- 14.Wunderlich T, Cooper G, Divine G, et al. Inconsistencies in patient perceptions and observer ratings of shared decision making: the case of colorectal cancer screening. Patient Educ Couns. 2010;80(3):358–363. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Shay LA, Lafata JE. Understanding patient perceptions of shared decision making. Patient Educ Couns. 2014;96(3):295–301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Arora NK, Hesse BW, Rimer BK, Viswanath K, Clayman ML, Croyle RT. Frustrated and confused: the American public rates its cancer-related information-seeking experiences. J Gen Intern Med. 2008;23(3):223–228. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hoffman RM, Lewis CL, Pignone MP, et al. Decision-making processes for breast, colorectal, and prostate cancer screening: the DECISIONS survey. Med Decis Making. 2010;30(5)(Suppl):53S–64S. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Ling BS, Trauth JM, Fine MJ, et al. Informed decision-making and colorectal cancer screening: is it occurring in primary care? Med Care. 2008;46(9)(Suppl 1):S23–S29. [DOI] [PubMed] [Google Scholar]

- 19.Nekhlyudov L, Li R, Fletcher SW. Informed decision making before initiating screening mammography: does it occur and does it make a difference? Health Expect. 2008;11(4):366–375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.McQueen A, Bartholomew LK, Greisinger AJ, et al. Behind closed doors: physician- patient discussions about colorectal cancer screening. J Gen Intern Med. 2009;24(11):1228–1235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.O’Connor AM, Bennett CL, Stacey D, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2009;(3):CD001431. [DOI] [PubMed] [Google Scholar]

- 22.Leatherman S, Warrick L. Effectiveness of decision aids: a review of the evidence. Med Care Res Rev. 2008;65(6 Suppl):79S–116S.

- 23.Stacey D, Légaré F, Col NF, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2014;(1):CD001431.

- 24.Jimbo M, Rana GK, Hawley S, et al. What is lacking in current decision aids on cancer screening? CA Cancer J Clin. 2013;63(3):193–214.

- 25.Shultz CG, Jimbo M. Decision aid use in primary care: an overview and theory-based framework. Fam Med. 2015;47(9):679–692.

- 26.Lin GA, Halley M, Rendle KA, et al. An effort to spread decision aids in five California primary care practices yielded low distribution, highlighting hurdles. Health Aff (Millwood). 2013;32(2):311–320.

- 27.Hill L, Mueller MR, Roussos S, et al. Opportunities for the use of decision aids in primary care. Fam Med. 2009;41(5):350–355.

- 28.O’Connor AM, Wennberg JE, Légaré F, et al. Toward the ‘tipping point’: decision aids and informed patient choice. Health Aff (Millwood). 2007;26(3):716–725.

- 29.Friedberg MW, Van Busum K, Wexler R, Bowen M, Schneider EC. A demonstration of shared decision making in primary care highlights barriers to adoption and potential remedies. Health Aff (Millwood). 2013;32(2):268–275.

- 30.Fowler FJ, Jr, Levin CA, Sepucha KR. Informing and involving patients to improve the quality of medical decisions. Health Aff (Millwood). 2011;30(4):699–706.

- 31.Krist AH, Beasley JW, Crosson JC, et al. Electronic health record functionality needed to better support primary care. J Am Med Inform Assoc. 2014;21(5):764–771.

- 32.Moyer VA, U.S. Preventive Services Task Force. Screening for prostate cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2012;157(2):120–134.

- 33.Krist AH, Peele E, Woolf SH, et al. Designing a patient-centered personal health record to promote preventive care. BMC Med Inform Decis Mak. 2011;11:73.

- 34.Krist AH, Woolf SH, Bello GA, et al. Engaging primary care patients to use a patient-centered personal health record. Ann Fam Med. 2014;12(5):418–426.

- 35.U.S. Preventive Services Task Force. Screening for colorectal cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2008;149(9):627–637.

- 36.U.S. Preventive Services Task Force. Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2009;151(10):716–726, W-236.

- 37.Degner LF, Sloan JA, Venkatesh P. The Control Preferences Scale. Can J Nurs Res. 1997;29(3):21–43.

- 38.O’Connor AM. Validation of a decisional conflict scale. Med Decis Making. 1995;15(1):25–30.

- 39.The Ottawa Hospital Research Institute. Ottawa Decision Support Framework (ODSF). https://decisionaid.ohri.ca/odsf.html Accessed Sep 2016.

- 40.U.S. Department of Health and Human Services. Usability.gov. A Step-by-Step Usability Guide. http://usability.gov/ Accessed Dec 2016.

- 41.Woolf SH, Zimmerman E, Haley A, Krist AH. Authentic engagement of patients and communities can transform research, practice, and policy. Health Aff (Millwood). 2016;35(4):590–594.

- 42.Sowan AK, Jenkins LS. Paradata: a new data source from web-administered measures. Comput Inform Nurs. 2010;28(6):333–342; quiz 343–344.

- 43.Couper MP, Lyberg LE. The use of paradata in survey research. 55th Session of the International Statistical Institute. The Hague, The Netherlands: http://isi.cbs.nl/iamamember/CD6-Sydney2005/ISI2005_Papers/1635.pdf. Published 2005. Accessed Dec 2016.

- 44.Hochheimer CJ, Sabo RT, Krist AH, Day T, Cyrus J, Woolf SH. Methods for evaluating respondent attrition in web-based surveys. J Med Internet Res. 2016;18(11):e301.

- 45.Cook WK, Chung C, Tseng W. Demographic and socioeconomic profiles of Asian Americans, Native Hawaiians, and Pacific Islanders. Paper presented at: Asian & Pacific Islander American Health Forum 2011; San Francisco, CA.

- 46.Krist AH, Woolf SH, Rothemich SF, et al. Interactive preventive health record to enhance delivery of recommended care: a randomized trial. Ann Fam Med. 2012;10(4):312–319.

- 47.Ralston S, Kellett N, Williams RL, Schmitt C, North CQ. Practice-based assessment of tobacco usage in southwestern primary care patients: a Research Involving Outpatient Settings Network (RIOS Net) study. J Am Board Fam Med. 2007;20(2):174–180.

- 48.Silvestre AL, Sue VM, Allen JY. If you build it, will they come? The Kaiser Permanente model of online health care. Health Aff (Millwood). 2009;28(2):334–344.

- 49.Légaré F, Ratté S, Stacey D, et al. Interventions for improving the adoption of shared decision making by healthcare professionals. Cochrane Database Syst Rev. 2010;(5):CD006732.

- 50.Ruffin MT, Fetters MD, Jimbo M. Preference-based electronic decision aid to promote colorectal cancer screening: results of a randomized controlled trial. Prev Med. 2007;45(4):267–273.

- 51.Jimbo M, Kelly-Blake K, Sen A, Hawley ST, Ruffin MT IV. Decision Aid to Technologically Enhance Shared decision making (DATES): study protocol for a randomized controlled trial. Trials. 2013;14:381.

- 52.Krist AH, Woolf SH, Johnson RE, Kerns JW. A randomized controlled trial of a web-based decision aid to promote shared decision-making for prostate cancer screening. Paper presented at: 33rd Annual Meeting of the North American Primary Care Research Group; October 16, 2005; Quebec, Canada.

- 53.Irizarry T, DeVito Dabbs A, Curran CR. Patient portals and patient engagement: a state of the science review. J Med Internet Res. 2015;17(6):e148.

- 54.Goldzweig CL, Orshansky G, Paige NM, et al. Electronic patient portals: evidence on health outcomes, satisfaction, efficiency, and attitudes: a systematic review. Ann Intern Med. 2013;159(10):677–687.

- 55.Ammenwerth E, Schnell-Inderst P, Hoerbst A. The impact of electronic patient portals on patient care: a systematic review of controlled trials. J Med Internet Res. 2012;14(6):e162.

- 56.Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc. 2007:26–30.