Abstract

Background

Automatic detection or classification of adventitious sounds is useful to assist physicians in diagnosing or monitoring diseases such as asthma, Chronic Obstructive Pulmonary Disease (COPD), and pneumonia. While computerised respiratory sound analysis, specifically for the detection or classification of adventitious sounds, has recently been the focus of an increasing number of studies, a standardised approach and comparison has not been well established.

Objective

To provide a review of existing algorithms for the detection or classification of adventitious respiratory sounds. This systematic review provides a complete summary of methods used in the literature to give a baseline for future works.

Data sources

A systematic review was conducted of English-language articles published between 1938 and 2016, identified by searching the Scopus (1938-2016) and IEEE Xplore (1984-2016) databases. Additional articles were obtained from the reference lists of the articles found. Search terms included adventitious sound detection, adventitious sound classification, abnormal respiratory sound detection, abnormal respiratory sound classification, wheeze detection, wheeze classification, crackle detection, crackle classification, rhonchi detection, rhonchi classification, stridor detection, stridor classification, pleural rub detection, pleural rub classification, squawk detection, and squawk classification.

Study selection

Articles were included only if they focused on adventitious sound detection or classification based on respiratory sounds, reported performance, and provided sufficient information for the work to be approximately repeated.

Data extraction

Investigators extracted data about the adventitious sound type analysed, approach and level of analysis, instrumentation or data source, location of sensor, amount of data obtained, data management, features, methods, and performance achieved.

Data synthesis

A total of 77 reports from the literature were included in this review. Of these, 55 (71.43%) focused on wheeze, 40 (51.95%) on crackle, 9 (11.69%) on stridor, 9 (11.69%) on rhonchi, and 18 (23.38%) on other sounds, such as pleural rub and squawk, or on the underlying pathology. Instrumentation used to collect data included microphones, stethoscopes, and accelerometers. Several studies obtained data from online repositories or from audio CDs accompanying books. Detection or classification methods ranged from empirically determined thresholds to more complex machine learning techniques. Performance reported in the surveyed works was converted to accuracy measures for data synthesis.

Limitations

Direct comparison of the performance of the surveyed works cannot be performed because each used different input data. A standard validation method has not been established, so different works use different methods and performance measure definitions.

Conclusion

A review of the literature was performed to summarise different analysis approaches, features, and methods used for the analysis. The performance of recent studies showed a high agreement with conventional non-automatic identification. This suggests that automated adventitious sound detection or classification is a promising solution to overcome the limitations of conventional auscultation and to assist in the monitoring of relevant diseases.

Introduction

Most diseases related to an obstructed or restricted respiratory system can be characterised from the sounds generated while breathing. These include asthma, COPD, and pneumonia, amongst others. Airway abnormalities can cause breathing sounds to be abnormal, for example through the absence of sounds or the presence of additional, unusual ones. The latter are referred to as adventitious sounds. An expert can perform auscultation using a stethoscope to detect abnormalities in sounds and use this information when making a diagnosis. However, the correct detection of these sounds relies on both the availability of an expert and their degree of expertise.

While computerised respiratory sound analysis, specifically for the detection or classification of adventitious sounds, has been the focus of an increasing number of studies recently, a standardised approach and comparison has not been well established. Several reviews related to automatic adventitious sound analysis have been published [1–6]. The article in [1] reviewed 49 articles, covering the type of sensor, the data set, the features, the analysis techniques, and the performance metrics used. The review categorised features into time-domain, frequency-domain, wavelet-domain, and a combination of different domains. Signal pre-processing techniques such as de-noising, resampling, and analogue pre-filtering were also presented, as well as the number of sensors and their positioning. Information on analysis type, approach, and data management was not reviewed. The conclusion of this work was that a multi-domain feature set has advantages in characterising different types of lung sounds.

A review of computerised respiratory sounds specifically in patients with COPD was done in [2]. This included a total of seven papers. The focus of this review was studies that tried to find the characteristics of adventitious sounds in COPD (wheeze, crackle, and rhonchi), including occurrence timing and the power spectrum.

The review in [3] provided information on machine learning techniques used in lung sound analysis. This covered types of analysis, sensor type, number of subjects, machine learning techniques used, and the outcome of each reference. A total of 34 studies were reviewed. The review concluded that artificial intelligence techniques are needed to improve accuracy and enable commercialisation as a product. Another review, published by the same group, provided a summary of 55 studies on computer-based respiratory sound analysis [4]. The review included analysis type, sensor used, data set used, sensor location, and method of analysis. This work provided several recommendations for sensor type, position, and the use of more advanced machine learning techniques.

The survey in [5] focused on automated wheeze detection for asthmatic patients, and provided a review on instrumentation, placement, processing methods, features used, and the outcome, of a total of 27 studies. The study recommended placing the stethoscope on the trachea as this preserves more frequency information when compared to the chest wall.

A systematic review and meta-analysis of computerised lung sound analysis to aid in the diagnosis of diseases was presented in [6]. A total of 8 articles were selected for this systematic review, which consisted of studies on wheeze, crackle, and other adventitious sounds for specific diseases such as asthma and COPD. The review included the number of subjects, age range, gender ratio, methodology, case, recording device, algorithm, and type of sounds analysed. The quality of each study was assessed using the Newcastle-Ottawa Scale (NOS), which is normally used for assessing non-randomised studies, including case-control studies. Four of the selected articles were then used for meta-analysis, which obtained an average of 80% sensitivity and 85% specificity in abnormal sound detection.
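The sensitivity and specificity figures quoted above, and the PPV and accuracy values reported by many of the surveyed studies, all derive from the four counts of a binary confusion matrix. The sketch below uses the standard textbook definitions only; the names `Metrics` and `binary_metrics` and the example counts are illustrative assumptions (the counts are chosen to reproduce 80% sensitivity and 85% specificity) and are not taken from any reviewed study.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    sensitivity: float  # TP / (TP + FN): abnormal cases correctly flagged
    specificity: float  # TN / (TN + FP): normal cases correctly passed
    ppv: float          # TP / (TP + FP): flagged cases that are truly abnormal
    accuracy: float     # (TP + TN) / all cases


def _ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or NaN when the ratio is undefined."""
    return numerator / denominator if denominator else math.nan


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Metrics:
    """Compute common detection metrics from confusion-matrix counts."""
    if min(tp, fp, tn, fn) < 0:
        raise ValueError("counts must be non-negative")
    return Metrics(
        sensitivity=_ratio(tp, tp + fn),
        specificity=_ratio(tn, tn + fp),
        ppv=_ratio(tp, tp + fp),
        accuracy=_ratio(tp + tn, tp + fp + tn + fn),
    )


# Hypothetical counts: 100 abnormal and 100 normal events.
m = binary_metrics(tp=80, fp=15, tn=85, fn=20)
print(f"Se={m.sensitivity:.3f} Sp={m.specificity:.3f} "
      f"PPV={m.ppv:.3f} Acc={m.accuracy:.3f}")
```

Because PPV and accuracy depend on the ratio of abnormal to normal cases while sensitivity and specificity do not, two studies with identical sensitivity and specificity can still report different accuracy values.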

This systematic review adds to these existing reviews by providing more thorough information in a standardised format, with more works being reviewed, and more recent developments included. The comparison of this work with the previously mentioned reviews can be seen below.

| Review | Year | Articles | Focus |
|---|---|---|---|
| [1] | 2015 | 49 | Respiratory sound analysis |
| [2] | 2015 | 7 | COPD patients |
| [3] | 2013 | 34 | Machine learning techniques in lung sound analysis |
| [4] | 2013 | 55 | Computer-based respiratory sound analysis |
| [5] | 2012 | 21 | Wheeze analysis for asthma patients |
| [6] | 2011 | 8 | Abnormal lung sound detection |
| This work | | 77 | Automatic adventitious respiratory sound analysis |

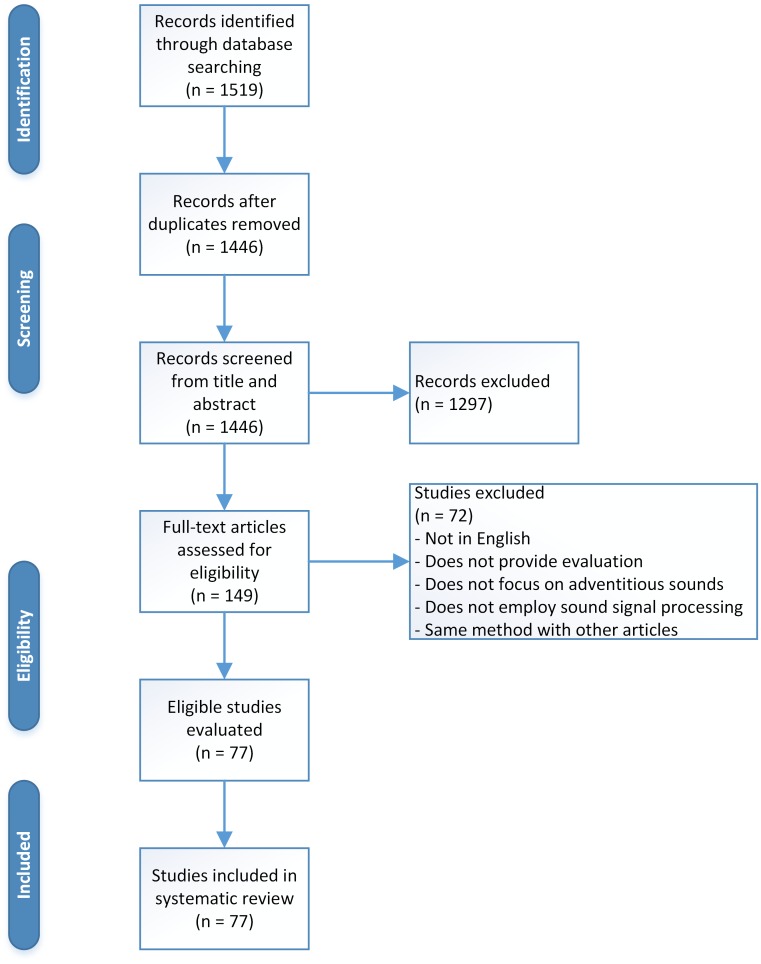

A standardised approach was used for this systematic review (Fig 1 and S1 File). Table 1 provides a summary of normal breath sounds, while adventitious sounds are summarised in Table 2. Analysis type, level, and approach to differentiate between studies are provided in Table 3. Dataset size and data management, which are important parts of the analysis, are stated in Tables 4 and 5. Furthermore, performance analysis of several studies with the same approach and purpose is given in Table 6. A discussion based on the outcome of the review is provided, as well as recommendations for future works.

Fig 1. Study selection flow diagram.

Table 1. Normal breath sounds.

| Breath Sounds | Location | Range | Pitch | Quality | Timing (I:E ratio) | Pause |
|---|---|---|---|---|---|---|
| Vesicular | Most of lung fields | 100–1,000 Hz; energy drop at 200 Hz | Low | Low-pass filtered noise-like, soft, rustling sound | During inspiration and early expiration (2:1 ratio) | Pause between different breath cycles |
| Broncho-Vesicular | Between scapulae on posterior chest and center part of anterior chest | Intermediate between Vesicular and Bronchial | Intermediate | Intermediate intensity | During both inspiration and expiration (1:1 ratio) | N/M |
| Bronchial | Large airways on chest near second and third intercostal space | Similar to Tracheal | High | Loud, hollow | During both inspiration and expiration (1:2 ratio) | Short pause between inspiration and expiration phase |
| Tracheal | Suprasternal notch on trachea | 100–5,000 Hz; energy drop at 800 Hz | High | Harsh, very loud | During both inspiration and expiration (1:1 ratio) | Distinct pause between inspiration and expiration phase |
| Mouth | Mouth | 200–2,000 Hz | N/M | White-noise-like; silent when normal | N/M | N/M |

Table 2. Types of adventitious sounds and their characteristics.

| Types | Continuity | Duration | Timing | Pitch | Quality | Cause | Disease Associated |
|---|---|---|---|---|---|---|---|
| Wheeze | Continuous | > 80 ms | Inspiratory, Mostly Expiratory, Biphasic | High (> 400 Hz) | Sibilant, Musical | Airway narrowing, airflow limitation | Asthma, COPD, Foreign body |
| Rhonchi | Continuous | > 80 ms | Inspiratory, Mostly Expiratory, Biphasic | Low (< 200 Hz) | Sibilant, Musical | Secretion in bronchi, mucosal thickening | Bronchitis, COPD |
| Stridor | Continuous | > 250 ms | Mostly Inspiratory, Expiratory, Both | High (> 500 Hz) | Sibilant, Musical | Turbulent airflow in larynx or lower bronchial tree (Upper airway obstruction) | Epiglottitis, foreign body, croup, laryngeal oedema |
| Fine Crackle | Discontinuous | ± 5 ms | Inspiratory (late) | High (650 Hz) | Non-musical, Explosive | Explosive opening of small airways | Pneumonia, Congestive heart failure, Lung fibrosis |
| Coarse Crackle | Discontinuous | ± 15 ms | Mostly Inspiratory (early), Expiratory, Both | Low (350 Hz) | Non-musical, Explosive | Air bubble in large bronchi or bronchiectatic segments | Chronic bronchitis, bronchiectasis, COPD |
| Pleural Rub | Discontinuous | > 15 ms | Biphasic | Low (< 350 Hz) | Non-Musical, Rhythmic | Pleural membranes rubbing against each other | Inflammation of lung membrane, lung tumour |
| Squawk | Continuous | ± 200 ms | Inspiratory | Low (200–300 Hz) | Short Musical and non-musical | Oscillation of peripheral airways | Hypersensitivity pneumonia, pneumonia |
| Gasp | Continuous | > 250 ms | Inspiratory | High | Whoop | Gasping for breath | Whooping cough |
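The duration and pitch figures in Table 2 can be read as rough screening rules for continuous adventitious sounds. The sketch below encodes only the wheeze, rhonchi, and stridor rows as a lookup. The function `candidate_labels` and its inputs (an event duration in milliseconds and a dominant frequency in Hz, both assumed to have been measured already) are hypothetical; because the tabulated ranges overlap, the result is a list of candidates and not the method of any reviewed study.

```python
from typing import List, NamedTuple, Optional


class Rule(NamedTuple):
    name: str
    min_duration_ms: float          # event must last longer than this
    min_freq_hz: Optional[float]    # dominant frequency must exceed this
    max_freq_hz: Optional[float]    # dominant frequency must stay below this


# Thresholds copied from the Duration and Pitch columns of Table 2.
RULES = [
    Rule("wheeze", 80.0, 400.0, None),
    Rule("rhonchi", 80.0, None, 200.0),
    Rule("stridor", 250.0, 500.0, None),
]


def candidate_labels(duration_ms: float, dominant_freq_hz: float) -> List[str]:
    """Return every continuous-sound label whose tabulated ranges match."""
    matches = []
    for rule in RULES:
        if duration_ms <= rule.min_duration_ms:
            continue
        if rule.min_freq_hz is not None and dominant_freq_hz <= rule.min_freq_hz:
            continue
        if rule.max_freq_hz is not None and dominant_freq_hz >= rule.max_freq_hz:
            continue
        matches.append(rule.name)
    return matches


print(candidate_labels(120.0, 450.0))  # matches the wheeze row only
print(candidate_labels(300.0, 600.0))  # matches both wheeze and stridor rows
print(candidate_labels(120.0, 150.0))  # matches the rhonchi row only
```

The second call shows why duration and pitch alone cannot separate wheeze from stridor; Table 2 distinguishes them further by timing and cause.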

Table 3. Sound and analysis type.

| Ref | Year | Sound Type | Approach | Level | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| W | R | C | S | E | U | SC | De | Cl | Se | Ev | Re | ||

| [37] | 2016 | • | o | o | • | • | • | • | |||||

| [38] | 2016 | • | • | • | • | ||||||||

| [39] | 2016 | • | • | • | • | • | |||||||

| [40] | 2016 | • | • | • | |||||||||

| [41] | 2016 | • | • | • | • | • | |||||||

| [42] | 2016 | o | • | • | • | ||||||||

| [43] | 2016 | • | • | • | • | • | |||||||

| [44] | 2016 | • | • | • | • | ||||||||

| [45] | 2016 | • | • | • | • | • | |||||||

| [46] | 2016 | • | • | • | • | ||||||||

| [47] | 2015 | • | o | • | • | • | • | ||||||

| [48] | 2015 | • | • | • | • | • | |||||||

| [49] | 2015 | • | • | • | • | • | |||||||

| [50] | 2015 | • | • | • | • | • | • | ||||||

| [51] | 2015 | • | • | • | |||||||||

| [52] | 2015 | • | • | • | • | • | • | • | |||||

| [53] | 2015 | • | o | • | • | ||||||||

| [54] | 2015 | • | o | • | • | • | • | ||||||

| [55] | 2015 | • | • | • | • | • | • | ||||||

| [56] | 2015 | • | • | • | • | • | |||||||

| [57] | 2015 | o | o | • | • | • | • | ||||||

| [58] | 2015 | • | • | • | |||||||||

| [59] | 2015 | • | • | • | |||||||||

| [60] | 2015 | • | • | • | • | • | • | • | |||||

| [61] | 2015 | • | • | • | |||||||||

| [62] | 2015 | • | • | • | |||||||||

| [63] | 2014 | • | • | • | • | ||||||||

| [64] | 2014 | • | • | • | • | ||||||||

| [65] | 2014 | • | • | • | • | • | |||||||

| [66] | 2014 | • | • | • | • | ||||||||

| [67] | 2014 | • | • | • | |||||||||

| [68] | 2014 | • | • | • | • | • | |||||||

| [69] | 2014 | • | • | • | • | • | |||||||

| [70] | 2014 | • | • | • | |||||||||

| [71] | 2014 | • | • | • | • | ||||||||

| [72] | 2013 | • | • | • | • | ||||||||

| [73] | 2013 | • | • | • | • | • | |||||||

| [74] | 2013 | • | • | • | • | • | |||||||

| [75] | 2013 | • | • | • | |||||||||

| [76] | 2013 | • | • | • | |||||||||

| [77] | 2013 | • | • | • | • | ||||||||

| [78] | 2012 | • | • | • | • | ||||||||

| [79] | 2012 | • | • | • | • | • | • | • | • | ||||

| [80] | 2012 | • | • | • | |||||||||

| [81] | 2012 | • | • | • | |||||||||

| [82] | 2012 | • | • | • | • | ||||||||

| [83] | 2012 | • | • | • | • | ||||||||

| [84] | 2012 | • | • | • | |||||||||

| [85] | 2012 | • | • | • | |||||||||

| [86] | 2011 | • | • | • | • | ||||||||

| [87] | 2010 | • | • | • | • | • | |||||||

| [88] | 2010 | • | • | • | |||||||||

| [89] | 2009 | • | • | • | |||||||||

| [90] | 2009 | • | • | • | |||||||||

| [91] | 2009 | • | • | • | • | • | • | • | |||||

| [92] | 2009 | • | • | • | • | ||||||||

| [93] | 2009 | • | • | • | • | • | • | • | • | ||||

| [94] | 2009 | • | • | • | |||||||||

| [95] | 2009 | • | • | • | • | ||||||||

| [96] | 2008 | • | • | • | • | • | |||||||

| [97] | 2008 | • | • | • | • | ||||||||

| [98] | 2008 | • | • | • | |||||||||

| [99] | 2007 | • | • | • | |||||||||

| [100] | 2007 | o | • | • | • | • | • | ||||||

| [101] | 2007 | • | • | • | • | ||||||||

| [102] | 2005 | • | • | • | • | ||||||||

| [103] | 2005 | • | • | • | |||||||||

| [104] | 2005 | • | • | • | |||||||||

| [105] | 2005 | • | • | • | |||||||||

| [106] | 2004 | • | • | • | • | ||||||||

| [107] | 2000 | • | • | • | • | • | • | ||||||

| [108] | 1997 | • | • | • | • | ||||||||

| [109] | 1997 | • | • | • | • | • | • | ||||||

| [110] | 1996 | • | • | • | • | ||||||||

| [111] | 1995 | • | • | • | |||||||||

| [112] | 1992 | • | • | • | • | • | |||||||

| [113] | 1984 | • | • | • | |||||||||

Symbol ‘•’ denotes focus of study in corresponding article, while ‘o’ denotes sound included in study but not as main focus

W: Wheeze, R: Rhonchi, C: Crackle, S: Stridor

E: Egophony, Squawk, or Pleural Rub

U: Unspecified CAS or DAS, SC: Sound Cause

De: Detection, Cl: Classification

Se: Segment, Ev: Event, Re: Recording

Table 4. Sensor and data source for lung sound analysis.

| Ref | Year | Data Source | # Sensor | Sensor Position | Total Data | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| Sensor | Database | Neck | Anterior | Posterior | Mouth | Multiple | ||||

| [37] | 2016 | [114]★ | - | 1 | • | 95 recordings | ||||

| [38] | 2016 | custom♦ | - | 1 | • | • | • | • | 227 recordings 171 normal 33 wheeze 19 crackle 4 w&c | |

| [39] | 2016 | [122]♦ | ATS, COPD | 1 | N/M | 112 recordings 70 wheeze 42 normal | ||||

| [40] | 2016 | [115]★ [137]♦ | - | 1 | • | 18 volunteers 9 asthmatic 9 normal 3036 segments 568 wheeze 2468 normal | ||||

| [41] | 2016 | [116]★ | - | 5 | • | • | • | 870 events 1494 segments | ||

| [42] | 2016 | [122]♦ | - | 1 | • | • | • | 20 volunteers 40 recordings 400 crackle events | ||

| [43] | 2016 | Electronic ♦ | - | 1 | • | • | • | 3120 short recordings 1560 normal 1560 abnormal | ||

| [44] | 2016 | - | [129] | N/A | 36 recordings 318 events | |||||

| [45] | 2016 | - | [124] [138] [139] | N/A | 30 volunteers 72 events | |||||

| [46] | 2016 | [140]★ | - | 14 | • | • | 600 events 200 crackle 200 normal 200 wheeze | |||

| [47] | 2015 | custom ♦ | - | 1 | • | • | • | • | 38 patients 57 recordings 28 normal 26 wheeze 3 crackle | |

| [48] | 2015 | Condenser★ analog ♦ | [128] | 1 | • | 20 recordings and additional data | ||||

| [49] | 2015 | [115]★ [137]♦ | - | 1 | • | 58 recordings | ||||

| [50] | 2015 | N/M | [127] | N/M | 45 recordings | |||||

| [51] | 2015 | N/M | 41 recordings | |||||||

| [52] | 2015 | Piezoelectric★ electronic ♦ | - | 1 | • | • | • | 130 recordings 66 patients 64 healthy 3140 events 1567 abnormal 1573 normal | ||

| [53] | 2015 | [123]■ | - | 1 | • | 45 recordings | ||||

| [54] | 2015 | [121]♦ | - | 1 | • | • | • | 12 volunteers 113 wheeze events | ||

| [55] | 2015 | - | [129] [130] | N/A | 28 recordings | |||||

| [56] | 2015 | [119]♦ [120]♦ | - | 1 | N/M | 24 recordings | ||||

| [57] | 2015 | [140]★ | - | 14 | • | • | 40 recordings 20 healthy 10 obstructive 10 restrictive | |||

| [58] | 2015 | - | [124] | N/A | 26 recordings 17 wheeze 9 normal 1188 segments 898 normal 290 wheeze | |||||

| [59] | 2015 | [140]★ | - | 14 | • | • | 7 volunteers 231 events | |||

| [60] | 2015 | Piezoelectric★ electronic ♦ | - | 1 | • | • | 230 recordings 115 normal 115 abnormal | |||

| [61] | 2015 | [117]★ | [125] [126] | 3 | • | • | • | 260 segments | ||

| [62] | 2015 | [121]♦ | - | 1 | • | • | • | 100 events | ||

| [63] | 2014 | - | [130] [131] [133] | N/A | 9 recordings Total Data N/M | |||||

| [64] | 2014 | [120]♦ | - | 1 | • | • | 60 volunteers | |||

| [65] | 2014 | [140]★ | [129] [131] [132] | 1 | • | 339 events 239 events | ||||

| [66] | 2014 | N/M | 371 recordings | |||||||

| [67] | 2014 | - | [124] | N/A | 2 recordings | |||||

| [68] | 2014 | ★ | [138] [139] | 1 | • | 30 recordings 120 events | ||||

| [69] | 2014 | - | [124] | N/A | 13 events | |||||

| [70] | 2014 | - | [124] | N/A | 68 recordings | |||||

| [71] | 2014 | - | [129] | N/A | 92 events 27 normal 31 crackle 34 wheeze | |||||

| [72] | 2013 | ★ | - | 7 | • | • | • | • | 60 volunteers 345 recordings | |

| [73] | 2013 | N/M | 6 events | |||||||

| [74] | 2013 | [119]♦ | - | 1 | • | • | • | • | 40 recordings | |

| [75] | 2013 | - | [124] | N/A | 68 recordings | |||||

| [76] | 2013 | [140]★ | - | 14 | • | • | 26 volunteers 6000 segments | |||

| [77] | 2013 | soft ♦ | - | 1 | • | 8 volunteers 59 recordings | ||||

| [78] | 2012 | [119]♦ | - | 1 | • | • | 28 recordings | |||

| [79] | 2012 | Piezoelectric★ electronic ♦ | - | 1 | • | 126 recordings 63 normal 63 abnormal 723 events 351 normal 372 abnormal | ||||

| [80] | 2012 | - | [124] | N/A | 47 recordings | |||||

| [81] | 2012 | N/M | 180 segments 98 normal 82 wheezes | |||||||

| [82] | 2012 | - | ACCP | N/A | 10 short recordings (200ms) 33 crackle events | |||||

| [83] | 2012 | N/M | 26 recordings | |||||||

| [84] | 2012 | N/M | 433 segments | |||||||

| [85] | 2012 | [140]★ | [129] [131] [132] | 1 | • | 47 recordings 689 events | ||||

| [86] | 2011 | [140]★ | [129] [131] [132] | 1 | • | 585 events | ||||

| [87] | 2010 | - | [124] | N/A | 4-7 recordings each class | |||||

| [88] | 2010 | [140]★ | - | 5 | • | • | • | 21 volunteers 393 wheeze events | ||

| [89] | 2009 | [140]★ | - | 14 | • | • | 7 volunteers 492 segments | |||

| [90] | 2009 | - | [124] | N/A | 24 recordings 2807 segments | |||||

| [91] | 2009 | Electronic ♦ | - | 1 | • | 36 recordings 360 events | ||||

| [92] | 2009 | - | [129] [132] [134] [135] | N/A | 25 recordings 9 FC 8 CC 8 Squawk 96 segments 32 FC 32 CC 32 squawk | |||||

| [93] | 2009 | Condenser★ piezoelectric ♦ | - | 1 | • | • | • | 162 volunteers 1544 events | ||

| [94] | 2009 | - | [124] | N/A | 40 events 28 recordings 112 events | |||||

| [95] | 2009 | - | [129] | N/A | 17 recordings | |||||

| [96] | 2008 | [140]★ | - | 1 | • | 65 volunteers | ||||

| [97] | 2008 | - | [125] | N/A | 40 events 21 normal 19 wheeze | |||||

| [98] | 2008 | [141]★ | [129] [132] | 1 | • | 14 volunteers 186 events 100 normal 86 wheeze | ||||

| [99] | 2007 | ECM★ [137]♦ | - | 1 | • | 30 volunteers | ||||

| [100] | 2007 | - | [129] [131] [142] | N/A | 18 recordings 5 FC 5 CC 4 normal 4 wheeze 182 crackle events | |||||

| [101] | 2007 | [141]★ | - | 5 | • | • | • | • | 13 volunteers 422 wheeze events | |

| [102] | 2005 | Electret★ | - | 2 | • | • | 57 volunteers 18 Obstructive 19 Restrictive 20 healthy | |||

| [103] | 2005 | [115]★ | - | 1 | • | 16 volunteers 12 asthmatic 4 healthy | ||||

| [104] | 2005 | ★ | - | 25 | • | • | 29 volunteers 10 healthy 19 patients | |||

| [105] | 2005 | N/M | 2 volunteers 391 events 238 CC 153 FC | |||||||

| [106] | 2004 | Piezoelectric■ | - | 1 | • | 31 volunteers 16 asthmatic 15 healthy | ||||

| [107] | 2000 | LS-60★ | [129] [131] | 2 | • | • | 2127+321 events 788+251 abnormal 1360+70 normal | |||

| [108] | 1997 | - | ACCP | N/A | 2 recordings | |||||

| [109] | 1997 | ★ | - | 2 | • | • | 69 volunteers 28 obstructive 23 restrictive 18 healthy | |||

| [110] | 1996 | N/M | • | 13 volunteers 4 healthy 9 patients 5000 segments | ||||||

| [111] | 1995 | N/M | 710 segments 375 wheeze 335 normal | |||||||

| [112] | 1992 | ★ | - | 1 | N/M | 9 patients | ||||

| [113] | 1984 | - | [136] | N/A | 147 events | |||||

★ denotes microphone, ♦ denotes stethoscope, ■ denotes accelerometer

ATS: American Thoracic Society website, COPD: COPD website

ACCP: American College of Chest Physicians teaching tape

N/A: Not Applicable, N/M: Not Mentioned

Table 5. Data, features, and methods of analysis.

| Ref | Year | Data Set | Features | Method | Performance | |||

|---|---|---|---|---|---|---|---|---|

| Training | Validation | Test | Total | |||||

| [37] | 2016 | 70 Rec, 20 W, 50 N | 25 Rec, 7 W, 18 N | 39 Rec, 10 W, 29 N | 95 Rec | Spectral features (PSD mean, harmonics) | SVM, LRM | 71.4% Se, 88.9% Sp, for SVM on validation set at Rec level |

| [38] | 2016 | 5-fold CV | 227 Rec | Denoising autoencoders | SVM | 90% Se, 64% Sp for W Rec level and 90% Se, 44% Sp for C Rec level | ||

| [39] | 2016 | N/A | 112 Rec | 112 Rec | Rule-based Seg selection, Power Ratio | Threshold | 90% Se, 90.48% Sp at Rec level | |

| [40] | 2016 | N/M | 3036 Seg | MFCC | GMM | 88.1% Se, 99.5% Sp at Seg level | ||

| [41] | 2016 | 65% | 10-fold CV | 35% | 870 Ev | Ensemble Empirical Mode Decomposition and Instantaneous Frequency | SVM | 94.2% Se, 96.1% Sp, for SVM on best iteration of test set at Ev level |

| [42] | 2016 | 10-fold CV | LOOCV | 400 Ev | Musical features, wavelet-based, teager energy, entropy | LRM | 76 ± 23% Se, 77 ± 22% PPV at Seg level | |

| [43] | 2016 | LOOCV | 3120 Rec | MFCC | HMM | Best Acc at Seg level 82.82%, average Acc of 87.7% at Rec level | ||

| [44] | 2016 | 219 Ev, 71 N, 39 FC, 39 CC, 35 mono W, 35 poly W | 40 holdout CV | 99 Ev, 31 N, 18 FC, 18 CC, 16 mono W, 16 poly W | 318 Ev | Higher Order Statistics (Cumulants) | GA + k-NN and NB | 94.6% Overall Acc on test set at Ev level |

| [45] | 2016 | LOOCV | 72 Ev | LFCC, MFCC, IMFCC, and LPCC | MLP | 97.83% best Overall Acc using MFCC at Ev level | ||

| [46] | 2016 | LOOCV | 600 Ev | Energy of High Q-Factor Wavelet coefficients | k-NN, SVM | 95.17% average Acc for SVM at Ev level | ||

| [47] | 2015 | LOOCV | 57 Rec | Peak to mean ratio, expected number of false positives | Threshold+SVM | 86% Acc on Rec level | ||

| [48] | 2015 | 20 Rec | - | Multiple sets | > 20 Rec | 13 MFCC each with first and second derivatives | k-NN | Performance of 6 different types of test reported as Acc |

| [49] | 2015 | 23 Rec, 13 W, 10 N | - | 35 Rec, 19 W, 16 N | 58 Rec | Duration, frequency range, area, power, and slope of spectrum | BPNN | 94.6% Se, 100% Sp at Rec level |

| [50] | 2015 | N/A | 45 Rec | 45 Rec | Entropy-based Features | Threshold | 99% Acc Stridor, 70% Acc W, 87% Acc C, 99% Acc N, at Rec level | |

| [51] | 2015 | 41 Rec | 41 Rec | Spectral features | GMM | 92.85% Se, 100% Sp at Rec level | ||

| [52] | 2015 | LOOCV | 130 Rec | MFCC, correlation score with other auscultation point and other Seg | HMM | Best Acc of 92.26% at Ev level and best Acc of 91% at Rec level | ||

| [53] | 2015 | 21 Rec, 5 W, 21 Non-W | 20%-80% Train Validation Set repeated 20 times | Leave-one-out CV | 45 Rec | MFCC, Kurtosis, Entropy | 2 SVM + Threshold | 97.68% Reliability (TPR.TNR) using MFCC at Seg level |

| [54] | 2015 | 10-fold CV | 113 Ev | Musical features and spectrogram signature | LRM, RF | 90.9% ± 2% Se, 99.4% ± 1% Sp for RF at Seg level | ||

| [55] | 2015 | 70% of data | 15% of data | 15% of data | 28 Rec | Averaged Power Spectrum | ANN | 97.8% Se, 100% Sp on test set at Ev level |

| [56] | 2015 | N/A | 24 Rec | 24 Rec | Fractal Dimension, CORSA criterion for Crackle | Threshold | Average Se of 89 ± 10%, PPV of 95 ± 11% at Ev level for different Rec | |

| [57] | 2015 | LOOCV | 40 Rec | AR Model | GMM, SVM | 90% best total Acc for GMM on Rec level | ||

| [58] | 2015 | LTOCV | 1188 Seg | MFCC, WPT, FT | C-Weighted SVM | 81.5 ± 10% Se, 82.6 ± 7% Sp for MFCC features on Seg level | ||

| [59] | 2015 | N/M | 231 Ev | Quartile Frequency Ratios, Mean Crossing Irregularity | SVM, k-NN, NB | 75.78% best Overall Acc for kNN at Ev level | ||

| [60] | 2015 | LOOCV | 230 Rec | MFCC | Subject adaptation HMM | 89.4% Se, 80.9% Sp at Ev level and 90.4% Se, 78.3% Sp at Rec level | ||

| [61] | 2015 | 10-fold CV | 260 Seg | Audio Spectral Envelope and Tonality Index | SVM | 93% Overall Acc at Seg level | ||

| [62] | 2015 | N/A | 100 Ev, 50 C, 50 N | 100 Ev | Mathematical morphology | Threshold | 86% Se, 92% Sp at Ev level | |

| [63] | 2014 | N/M | Delay Coordinate | Threshold | 98.39% Acc at Ev level | |||

| [64] | 2014 | 5-fold CV | 60 Vol | frequency ratio, average instantaneous frequency, eigenvalues | SVM | Individual Acc reported for all cases of one-versus-one and one-versus-all for all features at Rec level | ||

| [65] | 2014 | LOOCV | 578 Ev | Instantaneous Kurtosis, Discriminating Function, Sample Entropy | SVM | 97.7% Mean Acc (Inhale), 98.8% Mean Acc (exhale) at Ev level | ||

| [66] | 2014 | 371 Ev | 371 Rec | Centroid, time duration, slope, and area ratio of spectrum | SVM | 88.7% Se, 93.9% Sp at Rec level | ||

| [67] | 2014 | LOOCV | 2 Rec | Teager energy, wavelet, fractal dimension, empirical mode decomposition, entropy, and GARCH process | LRM | MCC of 80% at Seg level | ||

| [68] | 2014 | 5-fold CV | 120 Ev | Lacunarity, sample entropy, skewness, and kurtosis | SVM, ELM | 86.30% Se, 86.90% Sp for ELM at Ev level | ||

| [69] | 2014 | LOOCV | 13 Ev | MFCC | MLP | 100% Acc W, 75% Acc C, 80% Acc N at Ev level | ||

| [70] | 2014 | 10-fold CV | 68 Rec | MFCC | SVM, k-NN | 100% Acc N, 100% Acc AOP, 96% Acc PP for kNN at Rec level | ||

| [71] | 2014 | 60 Ev | 14 Ev | 18 Ev | 92 Ev | Wavelet packet transform | ANN | 98.89% best average Acc for Symlet-10 wavelet base at Ev level on test set |

| [72] | 2013 | 75%-25% Train Validation Set repeated 6 times | 345 Rec | Spectrogram evaluation for W, db5 Wavelet degree of similarity for C | ANN | 80% Se, 67% Sp at Rec level | ||

| [73] | 2013 | N/A | 6 Ev | 6 Ev | Time Frequency Analysis and Wavelet Packet Decomposition | Threshold | All Ws detected | |

| [74] | 2013 | N/A | 40 Rec | 40 Rec | Time Frequency Analysis | Threshold | 99.2% Se, 72.5% Sp at Ev level | |

| [75] | 2013 | 60%-40% Train Validation Set repeated 25 times | 68 Rec | MFCC | SVM | 94.11% Acc N, 92.31% Acc AOP, 88% Acc PP, for SVM at Rec level | ||

| [76] | 2013 | 2000 Seg, 1000 N, 1000 C | 2000 Seg, 1000 N, 1000 C | 2000 Seg, 1000 N, 1000 C | 6000 Seg | Time Frequency Analysis (Spectrogram), Time Scale Analysis (Wavelet) | SVM, MLP, k-NN | 97.5% Overall Acc rate for SVM using Time Frequency Analysis at Seg level |

| [77] | 2013 | N/A | 59 Rec | 59 Rec | Correlation Coefficient | Threshold | 88% Se, 94% Sp at Rec level | |

| [78] | 2012 | 10-fold CV | 28 Rec | Cortical Model | SVM | 89.44% Se, 80.50% Sp at Rec level | ||

| [79] | 2012 | LOOCV | 126 Rec, 723 Ev | Power, spectral features, and duration distribution | HMM | 88.7% Se, 91.5% Sp at Ev level and 87% Se, 81% Sp at Rec level | ||

| [80] | 2012 | N/A | 47 Rec | 47 Rec | Local similarity measure using Mutual Information, Weighted cepstral features | Threshold | High Acc for local similarity measure and separability index of 1 for weighted cepstral | |

| [81] | 2012 | N/A | 180 Seg | 180 Seg | fractional Hilbert transform | Threshold | Acc of 90.5% at Seg level | |

| [82] | 2012 | N/A | 33 C Ev | 33 Ev | fractional Hilbert transform and correlation coefficient | Threshold | Se 94.28%, PPV 97.05% at Ev level | |

| [83] | 2012 | N/A | 26 Rec, 13 N, 13 W | 26 Rec | LPC prediction error ratio | Threshold | 70.9% Se, 98.6% Sp at Ev level | |

| [84] | 2012 | N/A | 433 Seg | 433 Seg | Abnormality level | Threshold | 84.5% Acc at Seg level | |

| [85] | 2012 | 50%-50% Train Validation Set repeated 100 times | 689 Ev | Multi-scale PCA (Wavelet) | Empirical Classification | 97.3% ± 2.7% Overall Acc for N vs CAS, 98.34% Overall Acc for N vs CAS+DAS at Ev level | ||

| [86] | 2011 | LOOCV | 585 Ev | Temporal-Spectral Dominance spectrogram | k-NN | 92.4% ± 2.9% Overall Acc at Ev level | ||

| [87] | 2010 | LOOCV | 4-7 Rec Each | MFCC | GMM | 52.5% Overall Acc on validation | ||

| [88] | 2010 | N/A | 21 Vol, 393 W Ev | 393 Ev | Continuous Wavelet Transform | Mann-Whitney U Test | Significance test for features | |

| [89] | 2009 | LOOCV | 492 Seg | Kurtosis, Renyi entropy, frequency power ratio, Mean crossing irregularity | FDA | 93.5% Overall Acc at Seg level | ||

| [90] | 2009 | LOOCV | 2807 Seg | Fourier Transform, LPC, Wavelet Transform, MFCC | VQ, GMM, ANN | 94.6% Se, 91.9% Sp for GMM using MFCC at Seg level | ||

| [91] | 2009 | 180 Ev | - | 180 Ev | 360 Ev | averaged power spectrum | MLP, GAL, ISNN | Overall Acc of 98% for ISNN at Ev level |

| [92] | 2009 | 75%-25% train-test split repeated 200 times | 362 Ev | Lacunarity | Discriminant Analysis | 99.75% maximum mean Acc at Seg level | ||

| [93] | 2009 | LOOCV | 1544 Ev | MFCC | HMM | 93.2% Se, 64.8% Sp at Ev level | ||

| [94] | 2009 | 40 Ev, 20 W, 20 N | - | 28 Rec, 112 Ev, 40 W, 72 N | 152 Ev | Amplitude and Frequency of largest edge of pre-processed spectrogram | MLP | 86.1% Se, 82.5% Sp on test set at Ev level |

| [95] | 2009 | N/A | 17 Rec | 17 Rec | Entropy-based features | Threshold | 84.4% Se, 80% Sp at Rec level | |

| [96] | 2008 | 40 Vol | LOOCV | 25 Vol | 65 Vol | AR Coefficients | k-NN, Minimum Distance-based | 92% Se, 100% Sp using k-NN on test set at Rec level |

| [97] | 2008 | N/A | 40 Ev | 40 Ev | Peak selection based on time duration | Threshold | 84% Se, 86% Sp at Ev level | |

| [98] | 2008 | N/A | 186 Ev | 186 Ev | Distortion in Histogram of Sample Entropy | Threshold | 97.9% Acc Expiration, 85.3% Acc Inspiration at Ev level | |

| [99] | 2007 | N/M | 870 Ev | MFCC | GMM | Acc 94.9% at Seg level | ||

| [100] | 2007 | N/A | 18 Rec | 182 C Ev | Fractal Dimension | Threshold | 92.9% Se, 94.4% PPV at Ev level, 93.9% best Acc for classification | |

| [101] | 2007 | 3 Vol, 85 W Ev | - | 10 Vol, 337 W Ev | 422 W Ev | Peak selection based on local maxima, coexistence, continuity, grouping | Threshold | Se 95.5 ± 4.8%, Sp 93.7 ± 9.3% at Ev level on test set |

| [102] | 2005 | 50%-50% train-test Seg from same Ev split | 57 Vol | AR parameters and Cepstral Coefficients | MLP | 10-20% average misclassification error on test set at Ev level for cepstral features | ||

| [103] | 2005 | N/A | 16 Vol | 16 Vol | spectrogram image | Edge Detection | Se and Sp above 89% | |

| [104] | 2005 | 912 Seg | 114 Seg | 114 Seg | 1140 Seg | multi-variate AR model | BPNN | 80.7% Se, 84.21% Sp at Seg level on validation set |

| [105] | 2005 | 160 Ev, 80 CC, 80 FC | - | 231 Ev, 158 CC, 73 FC | 391 Ev | wavelet network | Discriminant Function | 84% and 70% Acc for FC and CC respectively on test set at Ev level |

| [106] | 2004 | N/A | 31 Vol | 31 Vol | energy | Threshold | 100% Se and Sp for a high airflow and 71% Se, 88.2% Sp for low airflow, at Ev level | |

| [107] | 2000 | 1253 Ev, 509 Ab, 744 N | repeated 5 times | 1195 Ev, 530 Ab, 665 N | 2448 Ev | averaged power spectrum | BPNN | Best Se 59%, 81% Sp for recorded sound and Se 87%, 95% Sp for CD data at Ev level for Ab vs N respiratory sound classification |

| [108] | 1997 | N/A | 2 Rec | 2 Rec | Matched wavelet | Threshold | Detection Acc of 99.8% and classification Acc of almost 100% at Seg level | |

| [109] | 1997 | LOOCV | 69 Vol | AR model, crackle parameters | k-NN, multinomial, voting | Overall Acc of 71.07% at Rec level to classify pathology | ||

| [110] | 1996 | 50%-50% training-test split | 13 Vol | Wavelet packet decomposition | LVQ (ANN Variant) | 59% Se, 24% PPV for FC, 19% Se, 6% PPV for CC, and 58% Se, 18% PPV for W at Seg level | ||

| [111] | 1995 | 242 Seg, 128 W, 114 N | - | 2 test sets: 233 Seg, 107 W, 126 N, and 235 Seg, 140 W, 95 N | 710 Seg | Power spectrum | BPNN, RBF, SOM, LVQ | Overall Acc of 93% and 96% on the two sets by using LVQ at Seg level |

| [112] | 1992 | N/A | 9 Vol | 9 Vol | Energy envelope, Crackle characteristics | Threshold, Hierarchical clustering | 100% Acc on classifying FC vs CC at Ev level | |

| [113] | 1984 | 42 Ev, 6 for each type | - | 105 Ev, 10-15 for each type | 147 Ev | LPC | Clustering (Minimum Distance) | Overall Acc of 95.24% at Ev level |

Rec: Recording, Ev: Event, Seg: Segment

W: Wheeze, FC: Fine Crackle, CC: Coarse Crackle, N: Normal, Ab: Abnormal, Vol: Volunteer

CV: Cross-Validation, Se: Sensitivity, Sp: Specificity, PPV: Positive Predictive Value, Acc: Accuracy

N/A: Not Applicable, N/M: Not Mentioned

Table 6. Accuracy percentage measure from literature.

| | WSD (%) | WED (%) | CSD (%) | WSC (%) | WEC (%) | CEC (%) |
|---|---|---|---|---|---|---|
| | 93.8 [40], 97.9 [54], 82.1 [58], 93.25 [90], 71.2 [110] | 100 [73], 85.85 [74], 85 [97], 94.6 [101], 100 [106], 79.6 [106] | 83.5 [42], 84.5 [84], 99.75 [92], 99.8 [108], 62.27 [110] | 93 [61], 90.5 [81], 93.5 [89], 94.9 [99], 93 [111], 96 [111] | 95.15 [41], 98 [46], 95.3 [55], 75.78 [59], 98.39 [63], 97.7 [65], 98.8 [65], 100 [69], 84.75 [83], 92.4 [86], 97.5 [91], 84.3 [94], 97.9 [98], 85.3 [98] | 95 [46], 98.15 [55], 89 [62], 97.5 [91] |
| accuracy range | 71.2–97.9 | 79.6–100 | 62.27–99.8 | 90.5–96 | 75.78–100 | 89–98.15 |

Objectives

The objective of this systematic review is to provide a summary of the existing literature on algorithms for the detection or classification of adventitious respiratory sounds. The review is organised as follows: A summary of normal and adventitious sound characteristics is provided first. Types of analysis performed are discussed, including the adventitious sound types analysed, approach of each analysis technique, and the level at which the analyses were performed. Instrumentation and data collection methods are also provided, including sensor type, number, and position, as well as the amount of data obtained. Several works obtained data for analysis from online repositories and book audio CD companions; these databases are listed as well. A summary of data management, features, and detection or classification methods is also presented, including the performance reported in each work. Overall, a total of 77 articles are considered. This systematic review provides a complete summary of methods used in the existing literature to give a baseline for future works.

Methods

The systematic review was performed following the recommendations of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [7]. The PRISMA checklist is provided in S1 File.

Data sources and study selection

Studies included in this review are peer-reviewed articles written in English published between 1938 and 2016. The types of study are automatic detection or classification of adventitious sounds based on sound signal processing. No age limitation was considered as an eligibility criterion. Most data in the literature was taken from both healthy volunteers and patients with pulmonary diseases. The outcomes of the studies considered were reported as a performance measure of the automatic systems developed. The types of performance measures reported depend on the approach of each study.

The references for this review were searched using the SCOPUS (1938-2016) and IEEExplore (1984-2016) databases. Additional articles were obtained from the bibliographies of articles found. The date of the last search was 1st November 2016. Electronic search terms for these databases included adventitious sound detection, adventitious sound classification, abnormal respiratory sound detection, abnormal respiratory sound classification, wheeze detection, wheeze classification, crackle detection, crackle classification, rhonchi detection, rhonchi classification, stridor detection, stridor classification, pleural rub detection, pleural rub classification, squawk detection, and squawk classification. Articles that focused on adventitious sound detection or classification based on breath sounds, with performance reported, were identified from the search results. Screening was done by selecting articles based on the title and abstract. Further selection was performed on screened articles based on the eligibility criteria.

To ascertain the validity of the review, only peer-reviewed articles that provided sufficient information to approximately reproduce the results achieved were considered. Issues related to data collection and management, which may introduce bias within each study, were identified and reviewed. Detailed information on the types of instrumentation or repository used, the total amount of data, and how the data were used is reported in the review.

Data extraction and synthesis

Data extraction was performed by the investigators on eligible articles. A data extraction form was created to obtain important information from these articles. Extracted data were summarised into tables and are further described in the Results section. Investigators extracted data about the adventitious sound type analysed, approach and level of analysis, instrumentation, location of sensor, amount of data obtained and used, data management, features, methods, and performance achieved for each study. The principal summary measure used in this systematic review is the range of accuracy achieved by the reviewed algorithms for each specific task.

A summary of normal and adventitious respiratory sounds and their characteristics is given before the review of the articles. This summary aims to provide insight into the sounds that need to be detected or classified. Limitations of conventional auscultation are discussed next. A short description of the available commercial devices for automatic respiratory sound analysis is provided. Studies on different adventitious sound types and analysis types are identified and summarised. The different instrumentation used to collect data is also identified for each reference. The methods of analysis are discussed in separate sections. These are based on the techniques used to perform the detection or classification of adventitious sounds. The performance reported in the literature is transformed to overall accuracy where possible, for data synthesis. Balanced accuracy was used when sensitivity and specificity measures were reported instead of the overall accuracy.
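The conversion to balanced accuracy can be illustrated with a minimal sketch. It assumes the usual definition of balanced accuracy as the arithmetic mean of sensitivity and specificity; the function name is hypothetical, and the input pairs are the Se and Sp values listed for references [90], [97], and [94] in the summary table.

```python
def balanced_accuracy(sensitivity: float, specificity: float) -> float:
    """Balanced accuracy in percent: the mean of sensitivity and specificity."""
    return (sensitivity + specificity) / 2.0


# (Se %, Sp %) pairs as listed for references [90], [97], and [94].
reported = {"[90]": (94.6, 91.9), "[97]": (84.0, 86.0), "[94]": (86.1, 82.5)}

for ref, (se, sp) in reported.items():
    print(ref, round(balanced_accuracy(se, sp), 2))
```

Under this definition the three pairs give 93.25, 85.0, and 84.3, which agree with the entries for the same references in Table 6.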

Results

This section provides the results of the systematic review performed. The section is organised as follows: A summary of normal and abnormal breath sounds is first given. This is followed by an outline of the limitations of conventional auscultation to underline the need for automated detection or classification of adventitious sounds. Commercial devices related to respiratory sound analysis are also discussed in this section. The results of the systematic review are subsequently presented. These include explanations of the type of analysis, instrumentation, and methods.

Review of normal and abnormal respiratory sounds

Respiratory sounds are sounds generated by the respiratory system. These can usually be heard by performing auscultation. Auscultation is generally carried out to check physical health, and it involves listening to both cardiac and respiratory sounds. Respiratory sounds heard from auscultation can be normal or abnormal. Finding abnormal respiratory sounds and differentiating them from normal sounds is important as abnormal sounds are characteristic of several serious diseases, such as asthma, COPD, and pneumonia.

Normal respiratory sounds

Normal respiratory sounds can be categorised based on the location where they are heard or generated. Depending on the auscultation location, different types of respiratory sounds have distinct characteristics such as duration, pitch, and sound quality. Normal respiratory sounds and their characteristics are briefly discussed below. A summary is also presented in Table 1.

Vesicular Sounds

Normal vesicular sounds are soft, non-musical, and can be heard on auscultation performed over most of the lung fields. Vesicular breath sounds are audible during the whole inspiration phase. However, due to the passive nature, as well as the origin, of the sounds, they can only be heard in the early expiration phase [8]. Hence vesicular sounds are longer during inspiration than during expiration. The pitch and the intensity are also higher in the inspiration phase than in expiration. While there is normally no pause between inspiration and expiration sounds in one cycle, different breath cycles are separated by a pause [8].

Vesicular sounds have a low pitch and very limited frequency range, usually with a drop in energy after around 100–200 Hz [9]. This is due to the chest wall acting like a low-pass filter on the sounds generated. The intensity of the vesicular sounds also varies depending on the part of the chest that auscultation is performed on [8].

Bronchial Sounds

Normal bronchial sounds are heard over the large airways on the chest, specifically near the second and third intercostal space. Bronchial sounds are more hollow and high-pitched compared to vesicular sounds [8]. Bronchial sounds are audible during both inspiratory and expiratory phases [10]. In contrast with vesicular sounds, because the sounds originate in larger airways, the expiratory phase sounds are normally audible for longer than the inspiratory phase ones. The intensity of expiration phase sounds is also higher than the intensity in the inspiration phase. Unlike in vesicular sounds, there is a short pause between each cycle of breathing.

Bronchial sounds contain more energy at a higher frequency bandwidth than vesicular sounds [8]. The sounds heard are usually high-pitched, loud, and tubular.

Broncho-vesicular Sounds

Broncho-vesicular sounds are normally heard on the posterior chest between the scapulae, as well as in the centre part of the anterior chest. The quality of the sound is between bronchial and vesicular sounds. They are softer than bronchial sounds but still mimic tubular sounds. The inspiratory and expiratory phases can be heard as having similar durations [11].

Tracheal Sounds

Tracheal sounds are harsh, loud, and usually have high pitch [8]. The sounds are normally heard when auscultation is performed over the trachea, specifically on the suprasternal notch. The sounds heard are usually hollow and tubular as they are generated by turbulent airflow passing through the pharynx and glottis [10]. The gap between inspiratory and expiratory phases in tracheal sounds is distinct, with both phases having a similar duration.

The energy distribution in frequency is more spread out than in the other normal sounds, with much more energy in the higher frequency components. The frequency range of normal tracheal sounds can reach up to 5,000 Hz with an energy drop usually occurring from 800 Hz [12]. The sounds heard over the trachea have a high intensity and can give more information as they are not filtered by the chest wall.

Mouth Sounds

Breath sounds heard from the mouth are produced by central airways, and caused by turbulent airflow below the glottis. Breath sounds from the mouth have a wide frequency range of 200 to 2,000 Hz [13]. The energy distribution is similar to that of white noise. For a healthy person, breath sounds from the mouth should be silent.

The comparison and summary of the types and characteristics of normal respiratory sounds can be seen in Table 1.

Different locations for auscultation provide different sound characteristics, even for normal breath sounds. This may cause automatic analysis of lung sounds to be more complex when signals are obtained from multiple locations.

Abnormal respiratory sounds

Abnormal breath sounds include the absence or reduced intensity of sounds while breathing, normal breath sounds heard in abnormal areas, as well as adventitious sounds. Adventitious sounds refer to sounds superimposed on normal breath sounds. These can be characterised based on the underlying conditions and hence be very useful in helping diagnosis. Adventitious sounds can be classified into two categories, continuous and discontinuous, based on their duration.

Continuous Adventitious Sounds

Continuous Adventitious Sounds (CAS) are abnormal sounds superimposed on normal breath sounds with durations of more than 250 ms [14]. Based on the pitch, CAS can be further categorised as high-pitched (Wheeze, Stridor, and Gasp) or low-pitched (Rhonchi and Squawk). Different types of CAS can also be distinguished by the associated condition and the cause of the sound.

Wheeze and Rhonchi

Wheeze and rhonchi are both continuous adventitious sounds which can be heard during inspiration, during expiration (most commonly), or during both phases [10]. Wheeze is a high-pitched CAS while rhonchi are low-pitched. Wheeze sounds are caused by airway narrowing, which in turn limits airflow [15], while rhonchi are related to the thickening of mucus in the larger airways [16]. According to [17], although wheeze and rhonchi belong to CAS, they do not necessarily have durations of more than 250 ms. Some have reported that wheeze and rhonchi can have minimum durations of around 80 to 100 ms.

Wheeze and rhonchi both present as sinusoid-like signals, with frequency ranges between 100 and 1,000 Hz. Wheeze is defined as a high-pitched continuous sound with a dominant frequency of at least 400 Hz, while rhonchi are low-pitched continuous sounds with a dominant frequency of at most 200 Hz [14]. Both wheeze and rhonchi are musical sounds, usually with up to three harmonic frequencies [18].

Stridor

Stridor is a type of CAS with a sibilant and musical quality, similar to wheeze. Stridor can mostly be heard in the inspiration phase although, on some occasions, it can be heard on expiration or even in both phases [10]. Unlike wheeze, stridor is generated by turbulent airflow in the larynx or bronchial tree, and is related to an upper airway obstruction. This is why stridor can be heard more clearly on the trachea, while wheezing can also be heard clearly by chest auscultation [19]. Stridor sounds are characterised by a high pitch of more than 500 Hz [10]. They are also normally harsher and louder than wheeze sounds. As a type of CAS, stridor sounds have a duration of more than 250 ms.

The differential diagnoses for stridor include epiglottitis, croup, and laryngeal oedema. All of these conditions are related to upper airway obstructions. Stridor sounds can also be heard when there is a foreign body such as a tumour in the upper airway tract.

Gasp

Inspiratory gasps are usually heard after a bout of coughing, when the patient finally tries to inhale. The whoop sound of an inspiratory gasp is caused by fast moving air through the respiratory tract. Whoop sounds typically have a high pitch and long duration, which is why inspiratory gasps are classed as CAS. The whooping sound is a pathognomonic symptom of whooping cough (pertussis) [20]. Pertussis is the only disease associated with a whooping inspiratory gasp.

Squawk

Squawks are adventitious sounds that can be heard during the inspiratory phase. The sound mixes musical and non-musical components. Squawk is also called short wheeze as the sound’s characteristics are similar to a low-pitched wheeze but with a shorter duration [8]. The pitch of squawk is normally between 200–300 Hz [10]. The sounds are generated by oscillation at the peripheral airways [21]. Squawks are usually heard in patients with hypersensitivity pneumonia, although they have also been reported several times in patients with common pneumonia [22].

Discontinuous Adventitious Sounds

Discontinuous Adventitious Sounds (DAS) are abnormal sounds superimposed on normal breath sounds with a short duration of less than 25 ms [14]. DAS can be further classified based on where the sounds are generated.

Fine Crackle

Fine crackle sounds are caused by explosive openings of the small airways. The sound is high-pitched (around 650 Hz) and has a short duration (around 5 ms) [23]. Crackle sounds are explosive and non-musical [8, 24]. Fine crackles are audible only in the late stage of the inspiratory phase. Fine crackle sounds are usually associated with pneumonia, congestive heart failure, and lung fibrosis.

Coarse Crackle

Coarse crackle sounds are generated by air bubbles in large bronchi. The sounds can be heard mostly during the early stages of inspiration, but are also audible at the expiratory stage. Coarse crackles have a low pitch, around 350 Hz, with a sound duration of around 15 ms [23]. Coarse crackle sounds can be heard in patients with chronic bronchitis, bronchiectasis, as well as COPD.

Pleural Rub

Pleural rubs are non-musical rhythmic sounds, which are categorised as DAS as the duration of each rub is around 15 ms [10]. Pleural rub sounds are caused by the rubbing of pleural membranes when breathing. The sound generated by the friction can be heard in both phases (biphasic), inspiration and expiration. Pleural rub sounds have a low pitch, normally below 350 Hz [10]. They are usually caused by inflammation of the pleural membrane [8]. A pleural tumour can also cause them [10].

Table 2 provides a summary and comparison of the different adventitious sounds. From Table 2, it can be seen how developing a classification tool for adventitious sounds is a challenging task, since there is a significant overlap between the characteristics of different sounds. In addition, typical characteristics may not be general and representative for different patients.
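The overlap between sound characteristics can be made concrete with a small sketch. It uses only the nominal bounds quoted above for three continuous sounds (wheeze: dominant frequency of at least 400 Hz; stridor: pitch above 500 Hz; rhonchi: dominant frequency of at most 200 Hz; all with durations above 250 ms). The function name is hypothetical, and treating these nominal figures as hard limits is a simplifying assumption, not a method taken from any reviewed study.

```python
from typing import List

CAS_MIN_DURATION_MS = 250.0  # nominal CAS duration bound quoted from [14]


def candidate_cas_types(dominant_hz: float, duration_ms: float) -> List[str]:
    """Return every continuous-sound label whose quoted bounds the sound satisfies."""
    if duration_ms <= CAS_MIN_DURATION_MS:
        return []
    candidates = []
    if dominant_hz >= 400.0:  # wheeze: dominant frequency of at least 400 Hz
        candidates.append("wheeze")
    if dominant_hz > 500.0:  # stridor: pitch of more than 500 Hz
        candidates.append("stridor")
    if dominant_hz <= 200.0:  # rhonchi: dominant frequency of at most 200 Hz
        candidates.append("rhonchi")
    return candidates


print(candidate_cas_types(600.0, 300.0))  # ['wheeze', 'stridor']
print(candidate_cas_types(150.0, 400.0))  # ['rhonchi']
print(candidate_cas_types(300.0, 400.0))  # []
```

A 600 Hz sound lasting 300 ms satisfies the quoted bounds for both wheeze and stridor, while a 300 Hz sound of the same kind matches none of the three, so pitch and duration alone are unlikely to separate these classes reliably.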

Auscultation

Auscultation is the medical term referring to the use of a stethoscope or other tools to listen to the sounds generated from inside the body. It is used to help diagnose a vast number of conditions. Normally, auscultation is performed to listen to lung, cardiac, abdominal, and blood vessel sounds. Most of the time, auscultation is performed on the anterior and posterior chest [25].

The stethoscope used for auscultation usually consists of two parts, a diaphragm and a bell. The diaphragm is used to listen to high-pitched sounds while the bell is for low-pitched sounds. Auscultation is recommended to be performed in a quiet environment to enable the expert to listen to the sounds clearly [8].

Drawbacks and Limitations of Conventional Auscultation

The first limitation of conventional auscultation is that it cannot be performed frequently and thus cannot provide continuous monitoring. Auscultation needs to be performed by an expert, especially when trying to detect and determine abnormal sounds. This is very limiting, for example, in the case of asthma, because symptoms such as wheezes most often occur during the night. The requirements of performing auscultation in a quiet environment, and ideally with the patient in a still position, are also very restrictive.

The number of people capable of performing auscultation is also limited. An expert on auscultation needs to have a lot of experience in order to be able to determine the types of sounds heard and decide on how this information can help in diagnosis or monitoring. Symptoms might be missed and their severity underestimated by both patients and physicians [26], resulting in proper care not being given.

Limitations of the human auditory system are also a drawback in conventional auscultation. The study in [27] advocates that conventional auscultation should not be used as a reference in research on automatic lung sound analysis. The intensity of respiratory sounds can mask the adventitious sounds, resulting in only normal sounds being heard. The varying amplitude of adventitious sounds may also cause the human ear to miss some cases where the intensity is too low to be detected.

These limitations and drawbacks hinder the effectiveness of conventional auscultation as a means of monitoring and managing symptoms. Automated lung sound analysis, specifically automatic detection and classification of adventitious sounds, could potentially overcome these limitations.

Available Automated Lung Sound Analysis Devices

Automatic lung sound analysis, aiming to overcome the limitations mentioned above, has been the recent focus of a significant amount of research, and some commercial systems for very specific applications are already on the market [25]. These include the Wheezometer [28], WHolter [29], VRI [30], LSA-2000 [31], LEOSound [32], Multichannel STG [33], STG for PC [34], and Handheld STG [35].

Wheezometer and WHolter were developed by Karmelsonix (now Respiri). Wheezometer measures the wheeze percentage using one sensor placed over the trachea. WHolter has a similar sensor and algorithm to Pulmotrack [36], but is intended for home monitoring use. The data recorded by WHolter is uploaded to a computer to be analysed. Vibration Response Imaging (VRI), developed by Deep Breeze, uses 34 or 40 sensors placed on the posterior chest. The device detects lung vibration energy and visualises it as a grayscale image. LSA-2000, by Kenzmedico, uses up to 4 sensors attached over the chest to identify interstitial pneumonia. LEOSound, developed by Heinen + Löwenstein, uses 3 sensors and can store data for wheeze and cough detection. Multichannel STG uses 14 sensors placed at multiple locations: on the posterior chest, over the trachea, and over the heart. The device is capable of counting crackles, rhonchi and wheezes. Smaller versions of STG use an electronic stethoscope coupled with either a PC (STG for PC) or a handheld device (Handheld STG).

Automated lung sound analysis devices should be easy to use, portable, and require as few sensors as possible [25]. The use of multiple sensors and bulky devices is neither suitable nor cost-effective for home monitoring purposes. All the devices listed above are typically large and complex, with the exception of the Wheezometer, which can only provide spot-checks, not continuous monitoring. WHolter is portable but works as a data logger with a separate analysis device, while STG for PC and Handheld STG use an electronic stethoscope, which is also not suitable for continuous monitoring. Thus, portable or wearable non-intrusive devices that can be used to monitor lung sounds without the help of experts are still needed.

Beyond the devices mentioned above, the development of algorithms to detect or classify lung sounds has been the focus of many research works. These works developed detection or classification methods by extracting certain features from the sounds. The detection and classification methods used vary from empirically determined thresholds to machine learning techniques. A systematic review of automatic detection or classification of adventitious sounds is presented in the next subsection.

Review of algorithms for automatic adventitious respiratory sound analysis

This section reviews published studies on the detection or classification of adventitious respiratory sounds. The review is organised as follows. The types of sound being investigated will be discussed first. This is followed by a discussion of the level at which the analysis is performed. The sensor types, number, and placement are reviewed next. Available online databases with recordings of adventitious sounds are presented. The methodology of analysis is reviewed last, including the use of the data, validation, features, and the classification or detection methods used.

Study selection

A total of 77 full-text articles were included in this systematic review. Database searches on SCOPUS and IEEExplore, as well as citation tracking, identified a total of 1519 records. Removal of duplicates and non-accessible full-text articles left 1446 articles. Out of these, 1297 articles were excluded based on title and abstract screening. The remaining 149 full-text articles were then assessed for eligibility, and 72 studies were excluded. This study selection resulted in a total of 77 eligible full-text articles, which were all included in the review. The flow diagram for this study selection can be seen in Fig 1.
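The counts in the selection flow can be checked with a few lines of arithmetic. The variable names are hypothetical; the numbers are those stated in the paragraph above.

```python
identified = 1519            # records from database search and citation tracking
after_cleanup = 1446         # after removing duplicates and non-accessible full texts
excluded_screening = 1297    # excluded on title and abstract
excluded_eligibility = 72    # excluded after full-text assessment

removed_cleanup = identified - after_cleanup
assessed_full_text = after_cleanup - excluded_screening
included = assessed_full_text - excluded_eligibility

print(removed_cleanup, assessed_full_text, included)  # 73 149 77
```

The differences reproduce the 149 full-text articles assessed and the 77 articles included, and imply that 73 records were removed as duplicates or non-accessible.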

Characteristics of studies included in this systematic review are given in Tables 3 and 4. The characteristics summarised for each work are: type of sound analysis, approach and level of analysis, instrumentation or database used to obtain data, and amount of data used in the analysis.

Types of sounds analysed

Although all eligible articles included in this review targeted adventitious sounds, different works had different specific aims. Some works investigated one type of adventitious sound and compared it with normal breath sounds; this can be performed as a detection or classification scheme. Others reported the classification of several types of adventitious sounds. There were also works that classified the underlying cause of the adventitious sounds.

Examples of the analysis performed in the published papers included: wheeze detection, wheeze classification against normal breath sounds, classification of monophonic and polyphonic wheeze, crackle detection in a recording, and classification of crackle and normal breath sounds. Beyond wheeze and crackle analysis, several works analysed combinations of adventitious sounds. Generally, the analysis was on classification tasks, such as: wheeze and rhonchi classification, classification of wheeze and crackle, wheeze and stridor classification, and other combinations. Another example was classification between sounds caused by airway obstruction and those caused by parenchymal pathology. 55 (71.43%) of the studies focused on wheeze, 40 (51.95%) on crackle, 9 (11.69%) on stridor, 9 (11.69%) on rhonchi, and 18 (23.38%) on other sounds such as pleural rub, squawk, as well as the pathology. A summary of the types of sounds analysed in each article can be seen in Table 3.
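The percentages above are each study count divided by the 77 included articles, as the short check below shows. The dictionary keys are shorthand labels chosen for this sketch.

```python
TOTAL_STUDIES = 77
counts = {"wheeze": 55, "crackle": 40, "stridor": 9, "rhonchi": 9, "other": 18}

for sound, n in counts.items():
    print(f"{sound}: {n}/{TOTAL_STUDIES} = {100 * n / TOTAL_STUDIES:.2f}%")

print(sum(counts.values()))  # 131
```

The counts sum to 131, more than the 77 included articles, which is consistent with many studies analysing more than one sound type.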

Level of analysis

There are three different levels of adventitious sound analysis that can be performed: segment, event, and recording. Several studies performed detection and classification of adventitious sounds at a segment level. For detection at the segment level, features are usually extracted on segments generated by signal windowing. Classification may also be performed at the segment level. Random segments from both adventitious and normal sounds are obtained and used to perform this classification. Unlike classification at the segment level, classification at the event level is usually done after obtaining manually isolated events of adventitious sounds and normal breath sounds. At the recording level, the task performed is usually the detection of events.
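Segment generation by signal windowing can be sketched as follows. This is a minimal illustration, not the procedure of any particular study: the function names, the frame length, and the hop size are assumptions, and RMS energy stands in for whichever feature a given work extracts per segment.

```python
import math
from typing import List, Sequence


def frame_signal(samples: Sequence[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a signal into fixed-length, possibly overlapping segments."""
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    frames = []
    # Only full-length segments are kept; a trailing partial segment is dropped.
    for start in range(0, len(samples) - frame_len + 1, hop):
        frames.append(list(samples[start:start + frame_len]))
    return frames


def rms_energy(frame: Sequence[float]) -> float:
    """Root-mean-square amplitude of one segment (an example feature)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


signal = [math.sin(2 * math.pi * 0.05 * n) for n in range(10)]
frames = frame_signal(signal, frame_len=4, hop=2)
print(len(frames))  # 4
print([round(rms_energy(f), 3) for f in frames])
```

With a hop smaller than the frame length, consecutive segments overlap, so a short event is less likely to be split across a segment boundary.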

Different levels of analysis result in different performance measures. At the segment level, one possible performance measure is to regard each segment as either true positive, true negative, false positive, or false negative. Another approach is to combine the detected segments, for example by taking a few consecutive detected segments as a positive event or by taking the mean values of extracted features. For the reported works using the event level (usually a classification task), the performance is measured from individually isolated events. Detection tasks performed at the recording level measure the performance at the event level. As for classifications performed at the recording level, the analysed recording will either be classified as containing abnormal sounds or as a normal recording. More detail on how each work in the literature performed analysis and measured the performance can be seen in Table 3.
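Two of the steps described above can be sketched in code: merging consecutive detected segments into events, and deriving performance measures from true and false positive and negative counts. The function names and the minimum run length of three segments are hypothetical choices for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def segments_to_events(flags: Sequence[int], min_run: int = 3) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, for runs of at least
    min_run consecutive positive segments."""
    events = []
    run_start = None
    for i, flag in enumerate(flags):
        if flag and run_start is None:
            run_start = i
        elif not flag and run_start is not None:
            if i - run_start >= min_run:
                events.append((run_start, i))
            run_start = None
    if run_start is not None and len(flags) - run_start >= min_run:
        events.append((run_start, len(flags)))
    return events


def confusion_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Sensitivity, specificity, PPV, and overall accuracy from the four counts."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")

    return {
        "Se": ratio(tp, tp + fn),
        "Sp": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
        "Acc": ratio(tp + tn, tp + tn + fp + fn),
    }


flags = [0, 1, 1, 1, 0, 1, 0, 1, 1, 1, 1]
print(segments_to_events(flags))  # [(1, 4), (7, 11)]
print(confusion_metrics(tp=8, tn=9, fp=1, fn=2))
```

The isolated positive segment at index 5 is discarded because it is shorter than the minimum run, which shows how segment-level and event-level counts for the same recording can differ.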

Sensor and its placement

Most research works on adventitious sound analysis used data recorded from patients in hospital. The most common sensors being used for data collection were microphones. The types of microphone mentioned were the SP0410HR5H-PB [114], KEC-2738 [115], TSD108 [116], Panasonic WM-61 [117], SONY ECM-44 BPT, and SONY ECM-77B [118]. Several articles also used microphones but without mentioning the type specifically. Electronic stethoscopes were also used by several researchers. These include the ThinkLab Rhythm:ds32a Digital Stethoscope [119], the WelchAllyn Meditron Electronic Stethoscope [120], and the Littmann 3M Electronic Stethoscope Models 4000 [121] and 3200 [122]. One paper used an accelerometer BU-3173 [123] as a sensor. Other than the sensors above, several studies stated the use of either a microphone or stethoscope without specifically mentioning the type. In total, there were 31 studies that used microphones and 21 studies that used electronic stethoscopes.

Conventional auscultation is usually performed on the anterior and posterior chest in order to obtain vesicular breath sounds. For the development of algorithms for the detection or classification of adventitious sounds, several studies used the trachea, specifically the suprasternal notch, as the location for the sensor. Mouth breath sounds were also used in one of the papers to detect wheezes.

The number of sensors used to perform the analysis varies from only one sensor up to a set of 14. In some papers, although only one sensor was used, the sensor is not kept in a fixed position but it is used to detect sounds from multiple locations, similar to performing conventional auscultation. This was generally the case when the analysis was performed using a digital stethoscope for data collection. A summary of the sensors used in each work can be seen in Table 4.

Databases

Several works used available databases as a source for analysis instead of collecting their own data. The databases used are from online repositories and from the companion audio CDs of books. The online repositories available were from R.A.L.E [124], East Tennessee State University repository [125], Littmann repository [126], and from SoundCloud [127]. The companion audio CDs used were from books such as Understanding Lung Sounds 2nd Edition [128], Understanding Lung Sounds 3rd Edition [129], Auscultation Skills: Breath and Heart Sounds [130], Fundamentals of Lung and Heart Sounds [131], Understanding Heart Sounds and Murmurs [132], Heart and Lung Sounds Reference Library [133], Secrets Heart & Lung Sounds Workshops [134], Lung Sounds: An Introduction to the Interpretation of the Auscultatory Finding [135], and The Chest: Its Signs and Sounds [136].

Breath sounds from online or book databases were taken from multiple locations, such as the chest, neck, and mouth. The sensor used for the data collection varied and included an electret microphone and accelerometer in [124], and the Littmann Digital Stethoscope in the Littmann repository [126].

Method of analysis and performance

Algorithms developed to detect or classify adventitious sounds usually involve two steps. The first step is to extract the relevant features that will be used as detection or classification variables. The second step is to use detection or classification techniques on the data, based on the features extracted. In developing a detection or classification algorithm, especially if machine learning techniques are used, it is important to take note of how the data is used to train, test, and validate the algorithm. In this section, the literature published will be discussed. The following aspects were reviewed: features extracted; classifier or detection techniques used; how the training, testing, and validation was performed; as well as the performance achieved. The section is organised based on the classifier or detection techniques used. These are empirical rule-based (such as with thresholding or peak selection), Support Vector Machine (SVM), Artificial Neural Network (ANN) variant, and other techniques such as clustering and statistical models. Table 5 is provided to summarise the review.

Empirical Rule Based Methods

A study by [62] performed crackle classification. The data used included 50 crackle events and 50 normal breath sounds. The sounds were recorded using a Littmann 3M 4000 Electronic Stethoscope at multiple positions on the chest. The classification performed was based on the mathematical morphology of a crackle event in the spectrogram. The classification achieved 86% sensitivity with a specificity of 92%.
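The sensitivity and specificity figures reported throughout this section follow the usual confusion-matrix definitions. As a minimal sketch, the snippet below computes them together with PPV and overall accuracy. The counts passed in are not reported in [62]; they are back-calculated here under the assumption that the percentages were computed over all 50 crackle events and all 50 normal sounds. The function name is illustrative only.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, PPV, accuracy) from confusion counts."""
    sensitivity = tp / (tp + fn)   # fraction of true events that were detected
    specificity = tn / (tn + fp)   # fraction of normal sounds correctly rejected
    ppv = tp / (tp + fp)           # fraction of detections that were true events
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, ppv, accuracy


# Assumed counts: 86% of 50 crackle events -> 43 detected, 7 missed;
# 92% of 50 normal sounds -> 46 correctly rejected, 4 false alarms.
sens, spec, ppv, acc = confusion_metrics(tp=43, fn=7, tn=46, fp=4)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} PPV={ppv:.3f} accuracy={acc:.2f}")
```

Under these assumed counts the overall accuracy works out to 89%, which illustrates how a sensitivity and specificity pair can be converted to a single accuracy measure when the class sizes are known.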

Wheeze classification was performed in [95]. The data used for the study was obtained from [129]. A total of 17 recordings, with 7 normal and 10 containing wheezes were used. The classification performed was to determine whether a recording was normal or contained wheezes. The feature used was extracted based on the entropy of each frame of the segmented recording. The feature set was the ratio and difference of the maximum and minimum entropy of the segments of a recording. Based on an empirical threshold, the classification was performed. The study achieved 84.4% sensitivity and 80% specificity.
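The entropy-based feature set described above can be sketched as follows. This is an illustration under stated assumptions, not the implementation from [95]: the source does not specify the entropy estimator, so a Shannon entropy over a per-frame amplitude histogram is assumed, and the frame length, bin count, threshold value, and the direction of the threshold test are all hypothetical.

```python
import math


def frame_entropy(frame, bins=16):
    """Shannon entropy (bits) of an amplitude histogram of one frame (assumed estimator)."""
    lo, hi = min(frame), max(frame)
    if hi == lo:
        return 0.0  # a constant frame carries no amplitude variability
    counts = [0] * bins
    for x in frame:
        idx = min(int((x - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1
    n = len(frame)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def entropy_features(signal, frame_len):
    """Return (ratio, difference) of the maximum and minimum frame entropy."""
    frames = [signal[i:i + frame_len]
              for i in range(0, len(signal) - frame_len + 1, frame_len)]
    entropies = [frame_entropy(f) for f in frames]
    e_max, e_min = max(entropies), min(entropies)
    ratio = e_max / e_min if e_min > 0 else float("inf")
    return ratio, e_max - e_min


def classify_recording(signal, frame_len, ratio_threshold):
    """Label a whole recording with an empirical threshold (direction is an assumption)."""
    ratio, _ = entropy_features(signal, frame_len)
    return "wheeze" if ratio > ratio_threshold else "normal"
```

In practice the threshold would be tuned empirically on labelled recordings, which is the step that makes such rule-based methods sensitive to the data set used.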

An empirical threshold was also used as a classifier by [50] to perform multi-class classification between wheeze, stridor, crackle, and normal events. This study was a continuation of [95] above. The data used for this study was obtained from both a hospital and the SoundCloud online repository, using the search term ‘lung sounds’. A total of 45 recordings were used, each containing several cycles of respiration. Similar to the algorithm in [95], entropy was extracted from the segmented recording. For the multi-class classification, two entropy-based features were extracted instead of just one as in the previous study. The entropy-based features were the difference and ratio of the maximum and minimum entropy of the segments in a recording. Similar to [95], the performance was measured by classifying a whole recording using the extracted features. The performance reported was 99% for stridor, 70% for wheeze, 87% for crackle, and 99% for normal sounds.

A finding from [63] claimed that the delay coordinate can be used as a feature to perform a classification between wheeze events and normal breath sounds, achieving 98.39% overall accuracy. The underlying reason was that the wheeze sound signal is a sinusoid while a normal breath sound is noise-like. A threshold can be found to perform the classification based on the persistent homology of delay embeddings. Another study from the same group [73] previously focused on wheeze sound detection in a recording. The data used contained 6 wheeze events in a recording which could all be detected using an energy threshold classifier on certain frequency bands and wavelet packet decomposition.

Wheeze detection was also studied by [77], with signals obtained using a stethoscope that was built using a microphone inside a chamber. The sounds were recorded from the neck. A total of 59 recordings, 25 with wheezes and 34 normal, from 8 young children were used for analysis. The feature used was the correlation coefficient, while the classifier was an empirically determined threshold. The features were extracted from each segment of a recording. Several consecutive high correlation coefficients were regarded as a wheeze event. Finally, each recording was classified as containing wheeze or being normal by using a threshold, calculated as the ratio between wheeze duration and normal respiratory duration. The performance achieved was 88% sensitivity with 94% specificity.
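The two-stage decision described above (runs of high correlation coefficients form wheeze events, then a duration ratio labels the recording) can be sketched as below. All threshold values and function names are hypothetical, durations are counted in segments, and the ratio is taken as wheeze duration divided by the remaining duration, which is one possible reading of the description in [77].

```python
def wheeze_runs(coeffs, corr_threshold, min_run):
    """Return (start, end) index pairs of runs with at least min_run high coefficients."""
    runs, start = [], None
    # A sentinel below any threshold closes a run that reaches the end of the list.
    for i, c in enumerate(list(coeffs) + [float("-inf")]):
        if c >= corr_threshold:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_run:
                runs.append((start, i))
            start = None
    return runs


def classify_recording(coeffs, corr_threshold=0.8, min_run=3, ratio_threshold=0.1):
    """Label a recording from the ratio of wheeze duration to non-wheeze duration."""
    runs = wheeze_runs(coeffs, corr_threshold, min_run)
    wheeze_len = sum(end - start for start, end in runs)
    normal_len = len(coeffs) - wheeze_len
    ratio = wheeze_len / normal_len if normal_len else float("inf")
    return ("wheeze" if ratio > ratio_threshold else "normal"), ratio
```

Requiring several consecutive high values before declaring an event suppresses isolated spikes, at the cost of missing wheezes shorter than the minimum run length.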

The study in [74] also focused on wheeze detection. The wheeze sounds were recorded using a single digital stethoscope from multiple positions. In total, 40 recordings were used for the study. The features were obtained from time-frequency analysis, with rule-based decision making, such as finding and selecting peaks based on an energy threshold, derived from the algorithm developed by [101]. The study achieved 72.5% specificity with a sensitivity of 99.2%.

Classification of CAS and DAS against normal breath sounds was carried out by [80]. 47 recordings from an online repository [124] were used. These contained 10 normal, 20 CAS, and 17 DAS recordings. There were two features analysed in this study. The first feature was a similarity measure of segments in the recording using mutual information. The second feature was a weighted cepstral feature. The study claimed a high accuracy of classification by using a threshold classifier using the first feature, while a separability index of 1 was found using the second set of features for both CAS and DAS classification.

Wheeze segment classification was performed in [81], also using a threshold-based classifier. A total of 180 segments were analysed. These contained 82 wheeze segments and 98 normal segments. The feature used in this study was the fractional Hilbert transform. The overall accuracy achieved was 90.5%. The same research group performed crackle detection in [82], also using the fractional Hilbert transform as a feature. The correlation coefficient was used as an additional feature to detect crackles. The performance achieved was a sensitivity of 94.28% and a Positive Predictive Value (PPV) of 97.05%, at the event level, on 10 short recordings with 33 crackle events.

Crackle detection was also performed in [56] by using thresholding on fractal dimension and the CORSA [143] criterion of crackle. A total of 24 recordings were used for the analysis, obtained using a stethoscope. The performance reported was an average sensitivity of 89 ± 10% and PPV of 95 ± 11%, at the event level, for different recordings.

A study in [84] also performed crackle detection using a threshold-based classifier. The feature used in this study was the abnormality level. A total of 433 segments were used in the analysis with no further detail given. The performance reported was 84.5% accuracy.

Wheeze detection was performed in [83] using the Linear Predictive Coding (LPC) prediction error ratio as a feature. A total of 26 recordings were used for analysis, with 13 of them containing wheeze sounds. By using a threshold classifier on the prediction error, 70.9% sensitivity and 98.6% specificity were achieved at the event level.

The work in [97] used peak selection based on time duration to perform wheeze detection. A total of 40 events were obtained from several databases. The only currently available database is [125]. From the 40 events, 19 of them were wheezes and 21 were normal respiratory sounds. The performance reported was 84% sensitivity and 86% specificity.

Wheeze and normal respiratory event classification was performed in [98]. Signals from 14 volunteers were recorded using one SONY ECM-77B microphone. An additional 100 normal and 86 wheeze events from [129, 132] were obtained. The classification was done using distortion in histograms of sample entropy as a feature. An accuracy of 97.9% for the expiration phase and 85.3% for the inspiration phase, at the event level, was reported.

A threshold on fractal dimension was used to detect crackle segments in [100]. A total of 18 recordings with 182 crackle events were analysed. Crackle detection achieved 92.9% sensitivity and 94.4% PPV at the event level.
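Thresholding on fractal dimension can be illustrated with a windowed estimate. The sketch below is a hedged example only: the source does not state which fractal dimension estimator was used in [56] or [100], so the Katz estimator is assumed here, computed on the curve of sample index against amplitude. The window length, hop, and threshold are hypothetical.

```python
import math


def katz_fd(window):
    """Katz fractal dimension of a waveform window (assumed estimator, needs >= 2 samples)."""
    steps = [math.hypot(1.0, window[i + 1] - window[i])
             for i in range(len(window) - 1)]
    total_length = sum(steps)                        # L: length of the curve
    n = len(steps)                                   # number of steps along the curve
    diameter = max(math.hypot(i, window[i] - window[0])
                   for i in range(1, len(window)))   # d: farthest point from the start
    return math.log10(n) / (math.log10(n) + math.log10(diameter / total_length))


def detect_segments(signal, win, hop, fd_threshold):
    """Return start indices of windows whose fractal dimension exceeds the threshold."""
    return [start
            for start in range(0, len(signal) - win + 1, hop)
            if katz_fd(signal[start:start + win]) > fd_threshold]
```

A smooth or straight waveform gives a dimension close to 1, while an abrupt, irregular transient such as a crackle raises it, which is why a simple threshold can separate the two. Because the estimate mixes time steps and amplitudes, the amplitude scaling of the signal affects the value and the threshold must be chosen accordingly.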

The work in [106] performed wheeze detection with signals obtained from 16 asthmatic patients and 15 healthy volunteers. Data were recorded using one piezoelectric microphone placed on the neck. An energy threshold was used, achieving 100% sensitivity and specificity for high airflow at the event level. Wheeze detection was also the focus of the study in [101]. Signals from 13 volunteers containing 422 wheeze events were recorded using five SONY ECM-77B microphones placed on the neck, anterior chest, and posterior chest. Data from 10 of the 13 volunteers, containing 337 wheeze events, were used as a test set. The detection was made by selecting peaks based on sets of rules. A sensitivity of 95.5 ± 4.8% and a specificity of 93.7 ± 9.3%, at the event level on the test set, were achieved.

The work in [108] addressed both crackle segment detection and classification using signals obtained from the ACCP teaching tape. The feature used for detection was the correlation between a crackle signal in the time domain and a wavelet decomposition. The crackle segment detection achieved 99.8% accuracy. Classification between fine and coarse crackles was performed on the detected crackle segments. The article claimed that the achieved accuracy was “almost” 100%.

Prior to this, [112] also performed crackle detection and classification. A threshold on energy envelope was used to detect and isolate crackle segments. The detected crackles were further classified into fine or coarse by using crackle typical characteristics such as peak frequency and time duration. The algorithm was applied to signals from 9 patients obtained using a microphone. The study claimed to achieve 100% accuracy in classifying crackles into fine or coarse.

Support Vector Machine Based Methods

The work in [37] used an SVM classifier to perform wheeze detection. The signals used were obtained with a single microphone (SP0410HR5H-PB) used to record mouth breath sounds. A total of 95 recordings were collected, with 27 of them containing wheezes. Of these, 70 recordings, 20 of them containing wheezes, were used to train the SVM classifier while the rest were used to test the classifier. A separate set of 39 recordings, 10 of them containing wheezes, was used as an additional test set. Spectral-based features were used for the classifier. The recordings were divided into segments and the features were extracted from each frame of the segmented recordings. Using this method, 71.4% sensitivity and 88.9% specificity were achieved on the validation set at the recording level. A Logistic Regression Model (LRM) classifier was also used, but the SVM achieved a better overall performance.

A study in [41] used five TSD108 microphones to obtain recordings from 30 volunteers to be used for CAS classification. In total, 870 inspiratory cycles were recorded, of which 485 contained CAS. Four of the sensors were placed on the back while one sensor was placed on the neck. From the 870 cycles, 1494 segments were obtained, with 633 of them containing CAS. A feature set based on instantaneous frequency was extracted and an SVM classifier was used. To obtain the optimal SVM parameters, 10-fold cross-validation (CV) was used on 559 of the 870 recorded cycles. The SVM model was then developed using 100 iterations of random 65%-35% splits of the 1494 segments. If at least one segment in a cycle was classified as CAS, the whole cycle was classified as CAS. The best performance obtained was a sensitivity of 94.2% and a specificity of 96.1% at the cycle level.
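The segment-to-cycle decision rule and the repeated random 65%-35% splits described for [41] can be sketched as follows. This is an illustrative outline under assumptions, not the authors' code: the data layout (pairs of cycle identifier and segment prediction), the function names, and the seeding are hypothetical, and the SVM training step itself is omitted.

```python
import random


def cycle_labels(segment_preds):
    """Map each cycle id to True if at least one of its segments was classified as CAS."""
    cycles = {}
    for cycle_id, is_cas in segment_preds:
        cycles[cycle_id] = cycles.get(cycle_id, False) or is_cas
    return cycles


def random_split(items, train_fraction=0.65, seed=None):
    """Shuffle items and split them into training and test parts."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


# Repeating the split, as in the 100 iterations described above (seeds are arbitrary).
splits = [random_split(range(1494), seed=i) for i in range(100)]
```

With 1494 segments, each split yields 971 training and 523 test segments. The "any segment" rule favours sensitivity at the cycle level, since a single positive segment is enough to flag the whole cycle.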

The study in [38] used an SVM to classify recordings, with a denoising autoencoder providing the feature set. The data for the study was recorded using a stethoscope on the neck, anterior chest, and posterior chest. A total of 227 recordings were obtained: 171 normal, 33 containing wheeze, 19 containing crackle, and 4 containing both wheeze and crackle. The performance achieved was 90% sensitivity with 64% specificity for wheeze and 90% sensitivity with 44% specificity for crackle at the recording level.

The same research group built a custom stethoscope and algorithm in [47] to perform wheeze detection. The detection scheme consisted of processing the spectrogram of each sound recording to select potential wheezes using an energy threshold, and then classifying the selected potential wheezes to obtain the final classification of the recording. The performance achieved was 86% accuracy at the recording level, taking into account the expected number of false positives.