Abstract

Emotion dysregulation is an important aspect of many psychiatric disorders. Brain-computer interface (BCI) technology could be a powerful new approach to facilitating therapeutic self-regulation of emotions. One possible BCI method would be to provide stimulus-specific feedback based on subject-specific electroencephalographic (EEG) responses to emotion-eliciting stimuli.

To assess the feasibility of this approach, we studied the relationships between emotional valence/arousal and three EEG features: amplitude of alpha activity over frontal cortex; amplitude of theta activity over frontal midline cortex; and the late positive potential over central and posterior midline areas. For each feature, we evaluated its ability to predict emotional valence/arousal on both an individual and a group basis.

Twenty healthy participants (9 men, 11 women; ages 22–68) rated each of 192 pictures from the IAPS collection in terms of valence and arousal twice (96 pictures on each of 4 days over 2 weeks). EEG was collected simultaneously and used to develop models based on canonical correlation to predict subject-specific single-trial ratings. Separate models were evaluated for the three EEG features: frontal alpha activity; frontal midline theta; and the late positive potential. In each case, these features were used to simultaneously predict both the normed ratings and the subject-specific ratings.

Models using each of the three EEG features with data from individual subjects were generally successful at predicting subjective ratings on training data, but generalization to test data was less successful. Sparse models performed better than models without regularization. The results suggest that frontal midline theta is a better candidate than frontal alpha activity or the late positive potential for use in a BCI-based paradigm designed to modify emotional reactions.

A brain-computer interface (BCI) is a system that measures central nervous system (CNS) activity and converts it into an artificial output that replaces, restores, enhances, supplements, or improves natural CNS output (Wolpaw & Wolpaw, 2012). A number of studies have been successful in training individuals to control EEG signals to replace lost communication or control (e.g., Wolpaw et al., 1991; Pfurtscheller et al., 1993; Kostov & Polak, 2000; McFarland et al., 2010). More recently there has been increased interest in developing BCI methods for improving motor, cognitive, or emotional function (e.g., Pichiorri et al., 2015; Daly & Sitaram, 2012). These efforts logically begin with studies that establish the relationships between specific EEG features and specific functions.

Most studies of the correlations between emotionally relevant stimuli and EEG features have adopted one of two approaches. One approach has examined the relationships between emotion and well-defined EEG features such as frontal asymmetry in alpha (8–13 Hz) activity (e.g., Coan & Allen, 2004; Gable & Poole, 2014), frontal midline theta (4–7 Hz) activity (e.g., Aftanas et al., 2001; Knyazev et al., 2009), and the late positive potential over central and posterior midline areas (e.g., Leite et al., 2012; Cuthbert et al., 2000). In contrast, the second approach has used machine learning methods to evaluate the relationships between emotion and classifiers that incorporate many EEG features, such as the powers in many frequency bands at many scalp locations (e.g., Liu et al., 2014; Yoon & Chung, 2013). For example, Liu et al. (2014) reported the accuracies of several classifiers that used theta, alpha, low beta, high beta and gamma band powers from 62 scalp-recording channels to predict emotion. The specific channel-frequency combinations that contained the information were not identified. However, there are notable exceptions to these trends. For example, Wang et al. (2014) evaluated several classifiers at each of 5 spectral bands.

The use of many EEG features without regard to physiological considerations could result in the inadvertent use of non-brain artifacts (i.e., signal features that are not generated by the brain). For example, emotional stimuli are known to elicit changes in muscle tension that can be detected with EMG recording (Baur et al., 2015). High-frequency activity recorded at the scalp is largely EMG activity (Pope et al., 2009), particularly at recording sites along the edge of typical scalp montages (Goncharova et al., 2003). In this regard, it is of interest that Wang et al. (2014) report that scalp-recorded gamma (30–50 Hz) activity resulted in the best classification of emotional stimuli. While EMG activity can be a useful index of emotional reactivity, if the goal is to develop a BCI-based method to improve emotional function, the focus must be on EEG features associated with emotion. EMG or other non-brain artifacts must be excluded.

This consideration suggests that BCI methods that use well-defined EEG features might be more promising. Furthermore, with this approach, the methods used for feature extraction and training could be optimized more readily. For example, signal processing for lower-frequency features such as theta activity might benefit from longer window lengths and different spatial filter designs than higher frequency features or time-domain features. In addition, learned control of such EEG features might have feature-specific consequences on emotional function.

Based on this rationale, the present study compares the ability of frontal alpha activity, frontal midline theta activity, and the late positive potential to predict emotional responses on individual trials. In addition, high-frequency features from electrodes located at the edge of the montage are evaluated to assess the ability of EMG activity to predict emotion.

Methods

Participants

The participants were 20 adults (9 men and 11 women; ages 22 to 68, median = 31). All gave informed consent for the study, which had been reviewed and approved by the New York State Department of Health Institutional Review Board.

Stimuli and Procedure

One hundred and ninety-two color pictures were selected from the International Affective Picture System (IAPS) (Lang et al., 2008) to represent a range of content as assessed by the reported norms for valence and arousal. Average valence ratings for the normed sample of the selected items ranged between 1.78 and 8.34 with a mean of 5.30. Arousal ratings ranged between 2.00 and 7.29 with a mean of 4.53. Both scales ranged from 1 to 9.

The participant sat in a reclining chair facing a 94×53-cm video screen 1.5 m away. EEG was recorded with 9-mm tin electrodes embedded in a cap (ElectroCap, Inc.) at 64 scalp locations according to the modified 10–20 system of Sharbrough et al. (1991). The electrodes were referenced to the right ear and their signals were amplified and digitized at 256 Hz by g.USB amplifiers. BCI operation and data collection were supported by the BCI2000 platform (Schalk et al., 2004; Schalk & Mellinger, 2014). Data were collected during four daily sessions spread over 1–3 weeks. Each session consisted of 8 runs lasting approximately 3 min each. In each session, the participant rated 96 pictures. Each of the 192 pictures was rated twice: once in one of the first two sessions and once in one of the last two. The participant was given the same instructions used by Lang et al. (1999), except that the numerical ratings were entered on a wireless keypad rather than with paper and pencil. During each run, the participant was presented with 12 pictures, each lasting 6 sec, after which s/he was prompted to rate the picture on an Unhappy (1) to Happy (9) scale (i.e., valence) and then on a Calm (1) to Excited (9) scale (i.e., arousal). Participants had up to 12 sec to respond and there was a variable 3–5 sec interval between trials. EEG was recorded continuously during the runs.

Offline Analysis

EEG signals from the 64 scalp electrodes were re-referenced according to a Laplacian transform with 6-cm inter-electrode spacing (McFarland et al., 1997). Three frequency-domain feature sets and one time-domain feature set were produced, each consisting of 48 features. These features were selected to represent frontal midline theta (2–7 Hz) activity, frontal alpha (8–13 Hz) activity, frontal high-frequency (30–55 Hz) activity, and the late positive potential.

For the frequency-domain features, the data were evaluated with a 64th-order autoregressive spectral analysis (McFarland and Wolpaw, 2008). Amplitudes for 1-Hz-wide spectral bands were computed for 1000-msec sliding windows that were updated every 62.5 msec and included the entire 6 sec of stimulus presentation. The theta feature set included channels AFz, F1, Fz, F2, FC1, FCz, FC2 and Cz with 1-Hz bins between 2–7 Hz. The alpha feature set included channels F5, F3, F4, F6, FC5, FC3, FC4 and FC6 with 1-Hz bins between 8–13 Hz. The frontal high-frequency feature set included channels Fp1, Fp2, AF7, AF8, F7, F8, FT7, and FT8 with 5-Hz-wide bins between 30–55 Hz.
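The windowing and feature-count arithmetic described above can be checked with a short sketch. It assumes the 256-Hz sampling rate and 6-sec presentation given in the text, that only windows lying entirely within the presentation period are counted, and that both band edges are included as 1-Hz bins (which reproduces the stated 48 features per set). The function and variable names are illustrative and are not taken from the analysis software; how amplitudes from successive windows were combined into a single trial feature is not specified in the text and is not modeled here.

```python
FS_HZ = 256          # sampling rate stated in the recording description
EPOCH_S = 6.0        # duration of each picture presentation
WINDOW_S = 1.0       # 1000-msec analysis window
STEP_S = 0.0625      # window advanced every 62.5 msec

def count_windows(fs_hz, epoch_s, window_s, step_s):
    """Number of full windows that fit inside one epoch (assumed convention)."""
    epoch_n = round(epoch_s * fs_hz)
    window_n = round(window_s * fs_hz)
    step_n = round(step_s * fs_hz)
    return (epoch_n - window_n) // step_n + 1

THETA_CHANNELS = ["AFz", "F1", "Fz", "F2", "FC1", "FCz", "FC2", "Cz"]
ALPHA_CHANNELS = ["F5", "F3", "F4", "F6", "FC5", "FC3", "FC4", "FC6"]

def feature_labels(channels, low_hz, high_hz):
    """One label per channel and 1-Hz bin, with both band edges included."""
    return [f"{ch}@{hz}Hz" for ch in channels for hz in range(low_hz, high_hz + 1)]

n_windows = count_windows(FS_HZ, EPOCH_S, WINDOW_S, STEP_S)
theta_features = feature_labels(THETA_CHANNELS, 2, 7)
alpha_features = feature_labels(ALPHA_CHANNELS, 8, 13)
```

Under these assumptions each window spans 256 samples and advances by 16 samples, and each of the theta and alpha sets contains 8 channels × 6 bins.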

The late positive potential (LPP) data set included channels Fz, FCz, Cz, CPz, Pz, and POz. These data were first baseline-corrected using the 100-msec period immediately prior to stimulus presentation. Then a 64-point running average was computed and the resulting signal was sampled once for each of 8 successive 750-msec intervals beginning with stimulus presentation.
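A minimal sketch of the LPP feature extraction for one channel and one trial is given below. It assumes a 256-Hz sampling rate, a trailing 64-point average, and that each interval is sampled at its end point; the text does not state where within each 750-msec interval the sample was taken, so that choice is an assumption, as are the function and constant names.

```python
FS_HZ = 256
BASELINE_N = round(0.100 * FS_HZ)    # 100-msec pre-stimulus baseline (26 samples)
SMOOTH_N = 64                        # 64-point running average (250 msec at 256 Hz)
INTERVAL_N = round(0.750 * FS_HZ)    # 750-msec interval (192 samples)
N_INTERVALS = 8                      # 8 x 750 msec covers the 6-sec presentation

def lpp_features(signal, onset):
    """signal: samples from one channel; onset: index of stimulus onset.

    Returns one smoothed, baseline-corrected value per 750-msec interval.
    """
    baseline = sum(signal[onset - BASELINE_N:onset]) / BASELINE_N
    corrected = [x - baseline for x in signal]
    features = []
    for k in range(1, N_INTERVALS + 1):
        end = onset + k * INTERVAL_N              # assumed sampling point: end of interval
        window = corrected[end - SMOOTH_N:end]    # trailing 64-point average
        features.append(sum(window) / SMOOTH_N)
    return features
```

With 6 channels and 8 intervals per channel, this yields the 48 time-domain features described above.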

Each data set was used to predict valence and arousal ratings by a sparse canonical correlation algorithm (Witten et al., 2009) with the R package PMA downloaded from CRAN.r-project.org. Both individual and normed ratings were included in the model since the sparse algorithm would select those features best predicted by the EEG. The subjective ratings were based on 9-point scales, which are more readily modeled with regression than classified as discrete categories, an issue we have previously discussed (e.g., McFarland and Krusienski, 2012). Data from the first two sessions served as the training set and data from the last two sessions served as the test set. Both the training set and the test set consisted of ratings on the entire set of 192 pictures. This sparse canonical algorithm is:

| (1) |

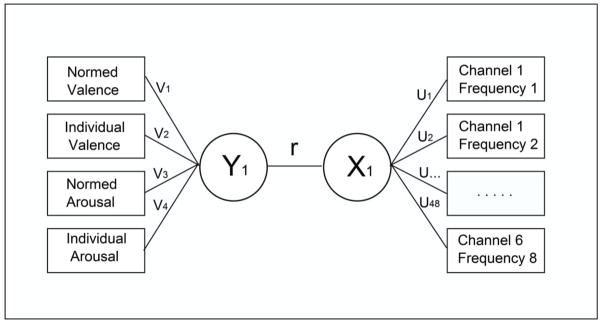

where X is the matrix of predictors (i.e., EEG features), Y is the matrix of values to be predicted (i.e., subjective ratings) and u and v are referred to as canonical variates. P1 and P2 are the sums of the absolute values for u and v respectively. The terms c1 and c2 are penalties that tend to produce sparse solutions with good correlation when appropriate values are selected. For each subject we compared models with the L1 penalty to models without a penalty. The PMA package uses a closed-form solution for u and v involving eigenvectors. In all of our analyses we evaluated the correlation between the first eigenvector of X and that of Y since these account for the most variance. We determined the values of c1 and c2 using only the training data with the k-fold cross-validation option in the PMA package using the default of 7 folds. The canonical correlation algorithm as applied here uses what is common to the EEG features to predict what is common to the rating scales. The general form of the canonical correlation model is shown in Figure 1.
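For reference, the criterion that these definitions describe can be written out as follows. This is the penalized formulation given by Witten et al. (2009), and it is offered on the assumption that Equation 1 takes this form; the unit-norm bounds on u and v belong to that formulation and are not stated in the text above.

```latex
\begin{aligned}
\max_{u,\,v}\quad & u^{\top} X^{\top} Y\, v \\
\text{subject to}\quad & \lVert u \rVert_2^2 \le 1,\quad \lVert v \rVert_2^2 \le 1,\quad
P_1(u) \le c_1,\quad P_2(v) \le c_2, \\
\text{where}\quad & P_1(u) = \sum_i \lvert u_i \rvert,\qquad P_2(v) = \sum_j \lvert v_j \rvert .
\end{aligned}
```

Smaller values of c1 and c2 force more entries of u and v to zero, which is the sense in which the penalties produce sparse solutions.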

Figure 1.

The canonical correlation model. The boxes on the left represent the observed subjective ratings, those on the right represent the observed EEG features. The circles represent latent variables: Y1 corresponds to the first canonical variable representing subjective ratings and X1 corresponds to the first canonical variable representing EEG features. The value for r represents the correlation between these latent variables.
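The model in Figure 1 can be illustrated with a simplified sketch of a first sparse canonical pair. This is not the PMA implementation: it is an alternating soft-thresholding scheme in the spirit of Witten et al. (2009), and the initialization with equal weights, the fixed number of iterations, the bisection search, and all function names are assumptions made for illustration. It assumes the columns of X and Y have been standardized and that c1 and c2 are at least 1. The PMA package's own parameterization of the penalties and its initialization may differ.

```python
import math

def soft_threshold(a, delta):
    """Shrink every entry of a toward zero by delta."""
    return [math.copysign(max(abs(x) - delta, 0.0), x) for x in a]

def unit_l2(a):
    """Scale a to unit L2 norm (an all-zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in a))
    return [x / norm for x in a] if norm > 0 else list(a)

def l1_bounded_unit(a, c):
    """Unit-L2 vector proportional to soft_threshold(a, delta), using the
    smallest delta (found by bisection) whose L1 norm does not exceed c."""
    w = unit_l2(a)
    if sum(abs(x) for x in w) <= c:
        return w
    lo, hi = 0.0, max(abs(x) for x in a)
    for _ in range(60):
        mid = (lo + hi) / 2
        if sum(abs(x) for x in unit_l2(soft_threshold(a, mid))) > c:
            lo = mid
        else:
            hi = mid
    return unit_l2(soft_threshold(a, hi))

def sparse_cca(X, Y, c1, c2, n_iter=50):
    """X: list of n rows with p EEG features; Y: list of n rows with q ratings.
    Returns weight vectors (u, v) for the first canonical pair."""
    p, q = len(X[0]), len(Y[0])
    # Cross-product matrix K = X^T Y (p x q).
    K = [[sum(x[i] * y[j] for x, y in zip(X, Y)) for j in range(q)] for i in range(p)]
    v = unit_l2([1.0] * q)  # assumed starting point
    for _ in range(n_iter):
        u = l1_bounded_unit([sum(K[i][j] * v[j] for j in range(q)) for i in range(p)], c1)
        v = l1_bounded_unit([sum(K[i][j] * u[i] for i in range(p)) for j in range(q)], c2)
    return u, v

def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)

def canonical_correlation(X, Y, u, v):
    """Correlation r between the latent variables Xu and Yv."""
    xu = [sum(xi * ui for xi, ui in zip(row, u)) for row in X]
    yv = [sum(yi * vi for yi, vi in zip(row, v)) for row in Y]
    return pearson_r(xu, yv)
```

In the design used here, the weights would be estimated from the training sessions only and `canonical_correlation` would then be applied to the test sessions with those weights held fixed.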

Results

Table 1 shows the correlations between subjective ratings for the individual participants. Correlations between individual valence ratings at time 1 (sessions 1 and 2) and time 2 (sessions 3 and 4) are all positive and highly significant, indicating that these ratings are reliable for each individual. Correlations between the normed (Lang et al., 2008) and individual valence ratings are also all positive and highly significant, indicating that our sample evaluated these pictures much like the norming sample. This same pattern was present for arousal ratings, although overall the correlations were somewhat lower and in one case not significant. Individual correlations between valence and arousal ratings were generally similar between time 1 and time 2, but highly variable across subjects. As for the normed ratings (Lang et al., 2008), almost all the individual valence/arousal correlations are negative (average: r=−0.39, p < 0.0001), but a few are significantly positive (e.g., subjects R and O) and a few are essentially non-existent (e.g., subjects L and P). In one person (subject N), the correlation between valence and arousal ratings is nearly perfect, suggesting that this subject did not treat the two scales differently. Thus, valence and arousal ratings correlate differently in different subjects.

Table 1.

Summary of correlations between ratings of valence (V) and arousal (A) for individual participants. Correlations of 0.15 or greater are significant at p < 0.05, those of 0.19 or greater at p < 0.01, and those of 0.28 or greater at p < 0.0001. V 12 is the correlation between individual valence ratings on sessions 1 and 2 and those for the same pictures on sessions 3 and 4; V 1n is the correlation between valence norms and individual valence ratings for the first two sessions; V 2n is the correlation between valence norms and individual valence ratings for the second two sessions; A 12 is the correlation between individual arousal ratings for the first two sessions and those for the second two sessions; A 1n is the correlation between arousal norms and individual arousal ratings for the first two sessions; A 2n is the correlation between arousal norms and individual arousal ratings for the second two sessions; VA 1 is the correlation between individual valence ratings and individual arousal ratings on the first two sessions; VA 2 is the correlation between individual valence ratings and individual arousal ratings on the second two sessions.

| Participant | V 12 | V 1n | V 2n | A 12 | A 1n | A 2n | VA 1 | VA 2 |
|---|---|---|---|---|---|---|---|---|
| A | 0.86 | 0.70 | 0.70 | 0.66 | 0.45 | 0.44 | −0.29 | −0.26 |
| B | 0.82 | 0.78 | 0.79 | 0.79 | 0.62 | 0.59 | −0.49 | −0.77 |
| C | 0.86 | 0.80 | 0.82 | 0.69 | 0.52 | 0.48 | −0.40 | −0.20 |
| D | 0.67 | 0.66 | 0.84 | 0.31 | 0.50 | 0.49 | −0.25 | −0.27 |
| E | 0.83 | 0.79 | 0.79 | 0.75 | 0.56 | 0.51 | −0.76 | −0.72 |
| F | 0.76 | 0.78 | 0.78 | 0.43 | 0.39 | 0.47 | −0.01 | −0.21 |
| G | 0.90 | 0.84 | 0.85 | 0.74 | 0.65 | 0.52 | −0.51 | −0.57 |
| H | 0.87 | 0.84 | 0.84 | 0.75 | 0.64 | 0.66 | −0.35 | −0.33 |
| I | 0.78 | 0.70 | 0.72 | 0.62 | 0.52 | 0.51 | −0.20 | −0.22 |
| J | 0.83 | 0.77 | 0.83 | 0.67 | 0.57 | 0.64 | −0.67 | −0.76 |
| K | 0.87 | 0.84 | 0.84 | 0.75 | 0.64 | 0.66 | −0.20 | −0.22 |
| L | 0.72 | 0.70 | 0.79 | 0.49 | 0.52 | 0.43 | −0.04 | −0.01 |
| M | 0.89 | 0.76 | 0.81 | 0.48 | 0.37 | 0.31 | 0.12 | 0.11 |
| N | 0.96 | 0.89 | 0.87 | 0.89 | 0.43 | 0.45 | −0.93 | −1.00 |
| O | 0.79 | 0.75 | 0.80 | 0.25 | −0.14 | 0.08 | 0.53 | 0.25 |
| P | 0.92 | 0.87 | 0.87 | 0.39 | 0.36 | 0.36 | −0.04 | 0.01 |
| Q | 0.90 | 0.83 | 0.86 | 0.78 | 0.61 | 0.62 | −0.40 | −0.56 |
| R | 0.76 | 0.70 | 0.79 | 0.51 | 0.31 | 0.22 | 0.42 | 0.46 |
| S | 0.92 | 0.78 | 0.78 | 0.57 | 0.47 | 0.51 | −0.37 | −0.20 |
| T | 0.59 | 0.51 | 0.75 | 0.51 | 0.46 | 0.54 | 0.08 | −0.41 |
| mean | 0.83 | 0.76 | 0.81 | 0.60 | 0.47 | 0.47 | −0.24 | −0.29 |
| sd | 0.09 | 0.09 | 0.05 | 0.17 | 0.18 | 0.15 | 0.37 | 0.36 |
| range | 0.59 to 0.96 | 0.51 to 0.89 | 0.70 to 0.87 | 0.25 to 0.89 | −0.14 to 0.65 | 0.08 to 0.66 | −0.93 to 0.53 | −1.00 to 0.46 |
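The significance thresholds quoted in the caption of Table 1 can be checked approximately with the sketch below. It assumes that each correlation is computed over the 192 pictures (n = 192) and uses the Fisher z normal approximation instead of an exact t-test, so the p-values are approximate; the function name is illustrative. The function is undefined for correlations of exactly ±1.

```python
import math

def approx_p_value(r, n):
    """Two-sided p-value for H0: rho = 0, using the Fisher z normal approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

N_PICTURES = 192  # assumed number of observations per correlation
thresholds = {r: approx_p_value(r, N_PICTURES) for r in (0.15, 0.19, 0.28)}
```

Under this approximation, each of the three tabled cutoffs falls below its stated significance level.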

The average correlations between time 1 and time 2 were 0.83 for valence and 0.60 for arousal. For time 1 (sessions 1 and 2), the average correlations with the normed values were 0.76 for valence and 0.47 for arousal. For time 2 (sessions 3 and 4), they were 0.81 for valence and 0.47 for arousal. These differences between individual and normed ratings were evaluated with repeated-measures ANOVAs and are significant for both valence (df = 2/38, F = 8.80, p < 0.0007) and arousal (df = 2/38, F = 15.29, p < 0.0001), with individual ratings showing higher reliability than their correlations with the norms, particularly for arousal ratings. Thus, individuals’ ratings were in general similar to the normed ratings, but more similar to their own ratings at a different time.

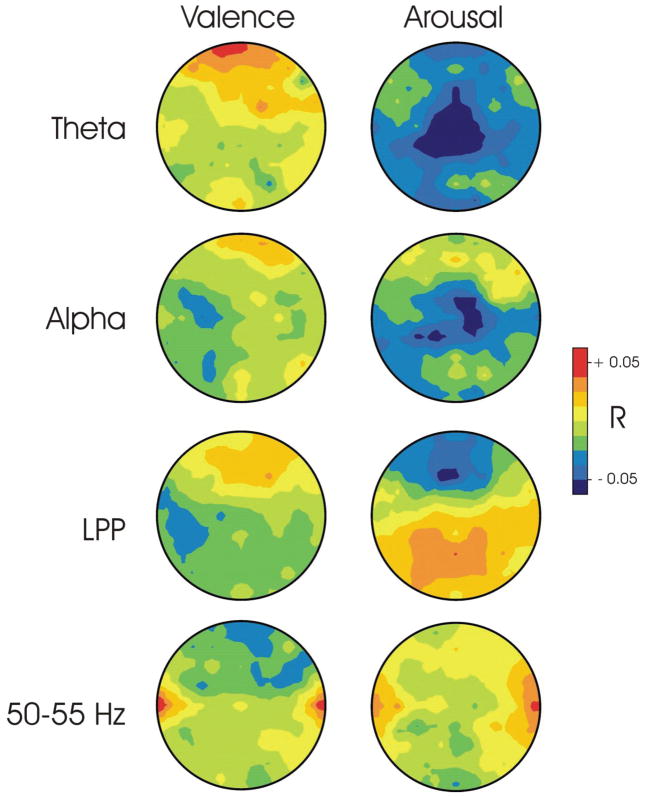

Figure 2 shows average topographies of the correlations between valence and arousal ratings and four EEG features: frontal midline theta activity, frontal alpha activity, frontal high-frequency activity, and the LPP. As Figure 2 shows, frontal theta is positively correlated with valence ratings at the most frontal sites and negatively correlated with arousal ratings over central regions. Similar trends are apparent for frontal alpha. The LPP shows a negative correlation with arousal ratings over frontal midline sites and a positive correlation with arousal ratings over more posterior midline sites. Finally, high-frequency activity shows positive correlations with both ratings over temporal sites. For each of these feature sets, correlations of all of the features used in modeling were averaged for each subject using Fisher’s z-scores. The resulting averages for each feature set were then evaluated with t-tests to determine whether the average was significantly different from zero. Only the average correlation of theta with arousal was significantly different from zero (df = 19, t = −2.62, p < 0.02). The average correlations of LPP with valence and with arousal were not significantly different from zero. For alpha, the correlations with valence and arousal averaged over left channels were not significantly different from those averaged over right channels.
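The averaging and testing steps described above can be sketched as follows. The sketch assumes that each subject's feature correlations are averaged in Fisher z space and that the per-subject averages are then entered into a one-sample t-test against zero; whether the test was run on the z values or on back-transformed r values is not stated, and the function names are illustrative.

```python
import math

def fisher_mean_r(rs):
    """Average correlations in Fisher z space and convert the mean back to r."""
    zs = [math.atanh(r) for r in rs]
    return math.tanh(sum(zs) / len(zs))

def one_sample_t(values):
    """t statistic and degrees of freedom for H0: the mean of values is zero."""
    n = len(values)
    mean = sum(values) / n
    var = sum((x - mean) ** 2 for x in values) / (n - 1)
    return mean / math.sqrt(var / n), n - 1
```

With 20 subjects, `one_sample_t` returns 19 degrees of freedom, matching the df reported for the theta-arousal test.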

Figure 2.

Topographies of average correlations between EEG features and subjective ratings (i.e., Pearson’s r averaged over all 20 subjects with Fisher’s z-transform). Theta topographies are at 5 Hz, alpha is at 11 Hz, LPP is at 750 msec and the high frequency topography is at 55 Hz.

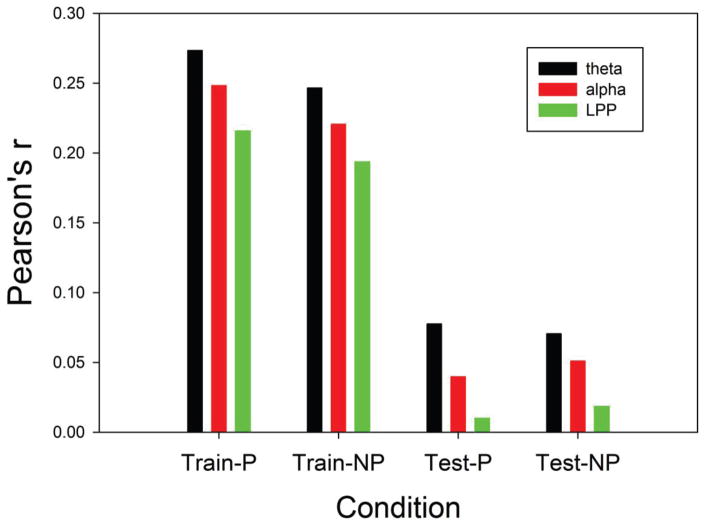

Figure 3 shows the mean of the first canonical correlation of subjective ratings (i.e., optimal weighted average of valence and arousal) for frontal theta, frontal alpha, and the LPP. These results were evaluated with an ANOVA with repeated measures on features (theta, alpha, LPP), phase (training vs test), and penalty (optimized vs none). As Figure 3 shows, the predictions for the training set were considerably higher than those for the test set (df= 1/19, F= 231.57, p < 0.0001). Also, frontal theta produced better prediction of subjective ratings than the other two features, resulting in a significant main effect for feature type (df= 2/38, F= 4.14, p<0.0237). The interaction between these two effects was not significant. The effect of penalty interacted with phase (df= 1/19, F= 9.50, p<0.0061), with the penalized models performing slightly better in the training phase.

Figure 3.

Average correlation (Pearson’s r) between the first canonical correlation of EEG features and the first canonical correlation of subjective ratings. Means for Train-P (penalized training data) and Test-P are for penalized models and those for Train-NP and Test-NP are for models without a penalty.

Table 2 shows validation sample predictions of subjective ratings by the three EEG features based on the results of sparse canonical correlation analysis. Also included are results for high-frequency frontal activity. Six of the 20 subjects had significant correlations with the validation sample models for theta, two for alpha, none for LPP, and two for high-frequency activity. Thus, the frontal theta feature produced significant validation sample results in more individuals than any other feature. While the high-frequency feature produced significant results in two individuals, the possibility that this may reflect scalp EMG activity cannot be ruled out. Note that these high-frequency features were all located along the frontal and temporal edges of the montage, areas associated with EMG activity (Goncharova et al., 2003).

Table 2.

Individual subjects’ correlations (Pearson’s r) between the first canonical correlation for subjective ratings and the first canonical correlation for EEG features

| Participant | Theta | Alpha | LPP | High Freq |
|---|---|---|---|---|
| A | −0.050 | 0.101 | 0.103 | 0.204** |
| B | 0.063 | −0.090 | 0.000 | 0.030 |
| C | −0.046 | 0.036 | 0.090 | −0.053 |
| D | 0.129 | 0.003 | −0.071 | 0.140 |
| E | 0.143* | −0.039 | −0.079 | 0.010 |
| F | −0.039 | 0.020 | 0.063 | 0.097 |
| G | 0.280*** | 0.174* | 0.023 | −0.030 |
| H | 0.039 | 0.010 | −0.050 | 0.016 |
| I | 0.060 | 0.006 | 0.033 | −0.000 |
| J | 0.209** | 0.146* | 0.020 | 0.091 |
| K | 0.111 | 0.024 | 0.073 | 0.057 |
| L | 0.276*** | 0.070 | −0.089 | −0.076 |
| M | 0.251*** | 0.113 | −0.056 | 0.410*** |
| N | −0.041 | 0.001 | 0.090 | 0.000 |
| O | 0.081 | 0.019 | 0.026 | 0.140 |
| P | −0.076 | −0.039 | 0.060 | 0.073 |
| Q | 0.156* | 0.009 | −0.079 | 0.054 |
| R | 0.070 | 0.039 | 0.054 | 0.031 |
| S | −0.090 | 0.127 | −0.050 | −0.033 |
| T | 0.024 | −0.017 | 0.037 | 0.047 |

Significance: * p < 0.05; ** p < 0.01; *** p < 0.001.

Table 3 shows validation sample predictions of subjective ratings by the three EEG features separately for valence and arousal ratings. Two of the 20 subjects had significant correlations with the validation sample models for valence and theta, two for valence and alpha and three for valence and LPP. Four of the 20 subjects had significant correlations for arousal and theta, none for arousal and alpha, and one for arousal and LPP.

Table 3.

Individual subjects’ correlations (Pearson’s r) between the first canonical correlation for each rating scale (valence and arousal separately) and the first canonical correlation for EEG features.

| Participant | Theta Valence | Theta Arousal | Alpha Valence | Alpha Arousal | LPP Valence | LPP Arousal |
|---|---|---|---|---|---|---|
| A | −0.006 | 0.000 | −0.053 | 0.113 | −0.156* | 0.103 |
| B | 0.136 | 0.011 | 0.009 | −0.107 | −0.003 | 0.000 |
| C | −0.011 | −0.033 | 0.041 | 0.134 | 0.090 | −0.013 |
| D | 0.054 | 0.199* | −0.010 | 0.001 | 0.124 | −0.144* |
| E | 0.010 | 0.141 | 0.093 | −0.034 | −0.056 | −0.077 |
| F | −0.033 | 0.037 | 0.030 | 0.070 | 0.050 | 0.120 |
| G | 0.140 | 0.280*** | 0.172* | 0.109 | 0.080 | 0.040 |
| H | 0.073 | 0.000 | 0.055 | 0.010 | −0.060 | 0.050 |
| I | 0.010 | 0.074 | 0.010 | 0.010 | 0.123 | 0.087 |
| J | 0.200** | 0.140 | 0.146* | 0.114 | 0.127 | 0.019 |
| K | 0.107 | 0.084 | 0.019 | 0.097 | −0.013 | 0.023 |
| L | 0.266*** | 0.209** | 0.080 | 0.076 | −0.091 | 0.077 |
| M | 0.009 | 0.230** | 0.114 | −0.109 | 0.040 | −0.039 |
| N | 0.001 | −0.049 | 0.015 | −0.007 | 0.090 | −0.011 |
| O | 0.110 | 0.000 | 0.071 | −0.050 | −0.180* | 0.014 |
| P | −0.083 | 0.067 | 0.018 | −0.034 | −0.144* | 0.060 |
| Q | −0.003 | 0.140 | 0.038 | 0.071 | −0.079 | −0.020 |
| R | −0.011 | 0.070 | 0.035 | 0.070 | 0.020 | 0.050 |
| S | 0.009 | −0.090 | 0.106 | 0.096 | −0.039 | −0.040 |
| T | 0.023 | 0.117 | −0.030 | 0.066 | −0.089 | 0.040 |

Significance: * p < 0.05; ** p < 0.01; *** p < 0.001.

Table 4 shows validation sample predictions of subjective ratings by alpha asymmetry scores, which were computed by subtracting amplitudes at each frequency for electrodes on the right side of the scalp from the corresponding values on the left side of the scalp. These are presented for valence, arousal, and combined ratings. Two of the 20 subjects had significant correlations with the validation sample models for combined ratings, one for the valence ratings, and two for the arousal ratings.
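The asymmetry computation can be sketched as below. The pairing of homologous left and right electrodes (F5 with F6, F3 with F4, FC5 with FC6, FC3 with FC4) is an assumption based on the frontal alpha channel list in the Methods, and the function name and data layout are illustrative.

```python
# Assumed homologous (left, right) pairs among the frontal alpha channels.
ALPHA_PAIRS = [("F5", "F6"), ("F3", "F4"), ("FC5", "FC6"), ("FC3", "FC4")]

def asymmetry_scores(amplitudes, pairs=ALPHA_PAIRS):
    """amplitudes: dict mapping channel name to a list of per-bin amplitudes.

    Returns left-minus-right differences for each pair and frequency bin.
    """
    scores = {}
    for left, right in pairs:
        scores[f"{left}-{right}"] = [
            l_amp - r_amp for l_amp, r_amp in zip(amplitudes[left], amplitudes[right])
        ]
    return scores
```

With four pairs and six 1-Hz bins between 8 and 13 Hz, this layout would give 24 asymmetry features per trial.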

Table 4.

Individual subjects’ correlations (Pearson’s r) between the first canonical correlation for subjective ratings and the first canonical correlation for alpha asymmetry scores

| Participant | Alpha Combined | Alpha Valence | Alpha Arousal |
|---|---|---|---|
| A | 0.05 | 0.04 | 0.05 |
| B | −0.01 | 0.02 | −0.01 |
| C | 0.09 | −0.08 | 0.09 |
| D | 0.11 | −0.11 | 0.10 |
| E | −0.24*** | −0.06 | −0.22** |
| F | −0.06 | −0.03 | −0.06 |
| G | 0.08 | 0.08 | 0.08 |
| H | 0.04 | 0.11 | 0.03 |
| I | 0.01 | 0.07 | −0.05 |
| J | 0.01 | −0.02 | −0.08 |
| K | −0.02 | −0.01 | −0.05 |
| L | 0.16* | 0.19** | 0.03 |
| M | 0.00 | −0.14 | −0.03 |
| N | −0.09 | 0.00 | 0.01 |
| O | 0.01 | 0.05 | 0.01 |
| P | 0.09 | −0.02 | 0.15* |
| Q | 0.01 | 0.01 | −0.03 |
| R | 0.09 | 0.09 | 0.08 |
| S | 0.09 | 0.08 | −0.05 |
| T | 0.02 | 0.04 | 0.11 |

Significance: * p < 0.05; ** p < 0.01; *** p < 0.001.

Discussion

In the present study, the participants reliably rated IAPS pictures in terms of valence and arousal as indexed by within-subject test-retest correlations. However, the relationship between valence and arousal ratings varied considerably between individuals. This high inter-individual variation in the correlations between valence and arousal ratings has also been described in a review of the IAPS by Kuppens et al. (2013), who suggest that the meaning of these scales varies between subjects. This fact motivates the use of canonical correlation in the present study, which maximizes the covariance between subjective ratings and EEG features. Since the meaning of the rating scales may vary between individuals, use of the canonical correlation with individual data ensures that the optimal relationship with EEG features will be used by the model. Using this methodology, we found that reliable prediction of emotional ratings occurred in a subset of subjects. At the same time, these correlations are not large.

Frontal midline theta produced significant validation sample results in more individuals than the other features in the present study. This finding would not have been apparent from a review of the psychophysiology literature, where effects of group averages are reported, since these analyses do not provide a way to evaluate the extent to which EEG features predict the variance in individual ratings. On the other hand, due to the complexity of interpreting multivariate models (McFarland, 2013), studies that combine many EEG features into a complex prediction algorithm do not provide a means of identifying specific EEG features with superior prediction accuracy. This is an important issue for any approach that attempts to use EEG features for practical purposes. Our concern is with identifying features that might be used to modify individuals’ emotional reactivity to specific classes of stimuli. Being able to quantify individuals’ reactivity would also be important for other uses of EEG, such as clinical diagnosis and treatment evaluation.

Only a fraction of the participants in this study generated models that generalized to new data. This is also likely to be the case in group studies that consider average results. The fraction of subjects that produce models that generalize to a significant extent probably reflects both the emotional reactivity of the subjects and the ability of current methodology to detect EEG features associated with emotion. The fact that only a fraction of subjects show moderate correlations between EEG features and ratings may account for inconsistencies in the literature. For example, anterior alpha asymmetry is observed in some studies (e.g., Gable & Poole, 2014; Kline et al., 2007) but not others (e.g., Parvaz et al., 2012; Uusberg et al., 2014). To some extent this may depend upon the reactivity of subjects (e.g., Gable & Poole, 2014; Kline et al., 2007). In the present study, only two out of twenty subjects showed a significant correlation of frontal alpha with valence ratings and the group mean laterality effect was not significant. While we did not explicitly use a difference score, the canonical correlation algorithm should have produced model weights that reflect hemispheric differences if these were indeed optimal for predicting ratings.

The reaction of individuals to emotional pictures may also vary with the nature of the material. For example, Schienle et al. (2008) report that individuals with spider phobia have more pronounced LPP responses to pictures of spiders than controls, while not differing in their response to other materials. Likewise, individuals with cocaine use disorders produced an enhanced LPP response to drug-related pictures and a blunted response to pictures considered pleasant by controls (Dunning et al., 2011). These examples illustrate the idiosyncratic nature of emotional responses to affective pictures. The IAPS collection is a heterogeneous group of images that probably elicits quite varied reactions across individuals and items. At the same time, this richness of expression is exactly what one might expect (and use for personalized medicine purposes) given the varied nature of human emotional disorders. Finally, Mauss and Robinson (2009) suggest that there are individual differences in awareness (e.g., alexithymia) and willingness (e.g., individuals high in social desirability) to report emotional states.

Two of the subjects in the present study showed significant correlations between subjective ratings and high-frequency features (30–55 Hz) located along the frontal-temporal periphery of the topography. We selected these sites and frequency ranges to represent what is most probably EMG activity (Goncharova et al., 2003). A number of previous studies have used high-frequency surface-recorded EEG activity to evaluate emotion induction by affective picture stimuli (e.g., Kang et al., 2014; Martini et al., 2012; Muller et al., 1999). EMG is one of the many recordable responses to emotional stimuli (e.g., Baur et al., 2015; Jackson et al., 2000) and represents a valid marker for emotionality. However, when the intent is to record brain activity and use it to modify function, muscle activity is simply a source of artifacts that must be recognized and ignored. This is particularly important for high-frequency scalp-recorded activity, which may be almost entirely due to EMG contamination (Pope et al., 2009). Furthermore, if the purpose is to record cranial EMG activity, there are better recording and signal processing options than are typically employed in EEG studies.

We intentionally limited the number of EEG features examined in the present study in order to avoid overfitting. In addition, the sparse canonical correlation model we used is recommended for situations involving many predictors and limited data (Wilms and Croux, 2015). However, the use of the sparse model had only a minor effect. It is likely that the large reduction in prediction of the validation data set is due to the fact that these data were collected on different days from those used for estimating model parameters. We did this intentionally so that the model would be causal. The results suggest that the relationship between emotion ratings and the EEG is not reliable in many individuals, although there may be a subset that does have a more reliable relationship between the two.

Recent interest in identification of EEG correlates of emotion is motivated by diverse interests. EEG-based emotion recognition might serve as a form of communication for the disabled (Kashihara, 2014). Alternatively, it could be used to develop a human-like human-computer interface (Liu et al., 2014), to control neural stimulation (Widge et al., 2014), or for neuromarketing (Kong et al., 2013). Each of these potential applications poses unique requirements for research and requires a fairly high rate of emotion identification to be successful. Emotion recognition could also be used to facilitate emotion regulation (Ruiz et al., 2014). Emotion regulation could potentially provide a technology for treating many different emotional disorders such as substance abuse or anxiety disorders (Zilverstand et al., in press). Emotion regulation applications might not require the same high degree of accuracy for success.

For EEG-based emotion regulation, it is necessary to identify EEG features that can be detected on single trials, are related to the target emotion, and can be controlled by the individual. The present study is concerned with the first two objectives: identifying EEG features that can be detected in single trials and are related to the target emotion. Subsequent work will evaluate the potential for training individuals to control these EEG features and the impact of this training on behavior. The present study is a first effort to identify EEG features related to emotion. Further work evaluating alternative emotion-generating protocols and alternative signal processing methods is likely to improve detection performance beyond that reported here.

Conclusion

The relationship between arousal and valence varied across individuals. The prediction of subjective ratings on training data was considerably better than was generalization to test data. The results suggest that frontal midline theta is a potential candidate for use in BCI-based modification of emotional reactions.

Acknowledgments

Support: NIH 1P41EB018783 (JRW), EB00856 (JRW & GSchalk), DA033088 (MAP), DA034954 (RZG).

References

- Aftanas LI, Varlamov AA, Pavlov SV, Makhnev VP, Reva NV. Affective picture processing: event-related synchronization within individually defined human theta band is modulated by valence dimension. Neuroscience Letters. 2001;303:115–118. doi: 10.1016/s0304-3940(01)01703-7.

- Baur R, Conzelmann A, Wieser MJ, Pauli P. Spontaneous emotion regulation: differential effects on evoked brain potentials and facial muscle activity. International Journal of Psychophysiology. 2015;96:38–48. doi: 10.1016/j.ijpsycho.2015.02.022.

- Coan JA, Allen JJB. Frontal EEG asymmetry as a moderator and mediator of emotion. Biological Psychology. 2004;67:7–49. doi: 10.1016/j.biopsycho.2004.03.002.

- Cuthbert BN, Schupp HT, Bradley MM, Birbaumer N, Lang PJ. Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biological Psychology. 2000;52:95–111. doi: 10.1016/s0301-0511(99)00044-7.

- Daly JJ, Sitaram R. BCI therapeutic applications for improving brain function. In: Wolpaw JR, Wolpaw EW, editors. Brain-Computer Interfaces: Principles and Practice. New York: Oxford University Press; 2012. pp. 351–362.

- Dunning JP, Parvaz MA, Hajcak G, Maloney T, Alia-Klein N, Woicik PA, Telang F, Wang GJ, Volkow ND, Goldstein RZ. Motivated attention to cocaine and emotional cues in abstinent and current cocaine users- an ERP study. European Journal of Neuroscience. 2011;33:1716–1723. doi: 10.1111/j.1460-9568.2011.07663.x.

- Gable PA, Poole BD. Influence of trait behavioral inhibition and behavioral approach motivation systems on the LPP and frontal asymmetry to anger pictures. Social, Cognitive and Affective Neuroscience. 2014;9:182–189. doi: 10.1093/scan/nss130.

- Goncharova II, McFarland DJ, Vaughan TM, Wolpaw JR. EMG contamination of EEG: spectral and topographical characteristics. Clinical Neurophysiology. 2003;114:1580–1593. doi: 10.1016/s1388-2457(03)00093-2.

- Jackson DC, Malmstadt JR, Larson CL, Davidson RJ. Suppression and enhancement of emotional responses to unpleasant pictures. Psychophysiology. 2000;37:515–522.

- Kang JH, Jeong JW, Kim HT, Kim SH, Kim SP. Representation of cognitive reappraisal goals in frontal gamma oscillations. PLoS One. 2014;9:e113375. doi: 10.1371/journal.pone.0113375.

- Kashihara K. A brain-computer interface for potential non-verbal facial communication based on EEG signals related to specific emotions. Frontiers in Neuroscience. 2014;8:244. doi: 10.3389/fnins.2014.00244.

- Kline JP, Blackhart GC, Williams WC. Anterior EEG asymmetries and opponent process theory. International Journal of Psychophysiology. 2007;63:302–307. doi: 10.1016/j.ijpsycho.2006.12.003.

- Knyazev GG, Slobodskoj-Plusnin JY, Bocharov AV. Event-related delta and theta synchronization during explicit and implicit emotion processing. Neuroscience. 2009;164:1588–1600. doi: 10.1016/j.neuroscience.2009.09.057.

- Kong W, Zhan X, Hu S, Vecchiato G, Babiloni F. Electronic evaluation of video commercials by impression index. Cognitive Neurodynamics. 2013;7:531–535. doi: 10.1007/s11571-013-9255-z.

- Kostov A, Polak M. Parallel man-machine training in development of EEG-based cursor control. IEEE Transactions on Rehabilitation Engineering. 2000;8:203–205. doi: 10.1109/86.847816.

- Kuppens P, Tuerlinckx F, Russell JA, Barrett LF. The relationship between valence and arousal in subjective experience. Psychological Bulletin. 2013;139:917–940. doi: 10.1037/a0030811.

- Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): Technical Manual and Affective Ratings. The Center for Research in Psychophysiology, University of Florida; Gainesville, FL: 1999.

- Leite J, Carvalho S, Galdo-Alvarez S, Alves J, Sampaio A, Goncalves OF. Affective picture modulation: valence, arousal, attention allocation and motivational significance. International Journal of Psychophysiology. 2012;83:375–381. doi: 10.1016/j.ijpsycho.2011.12.005.

- Liu YH, Wu CT, Cheng WT, Hsiao YT, Chen PM, Teng JT. Emotion recognition from single-trial EEG based on kernel Fisher’s emotion pattern and imbalanced quasiconformal kernel support vector machine. Sensors. 2014;14:13361–13388. doi: 10.3390/s140813361.

- Martini N, Menicucci D, Sebastiani L, Bedini R, Pingitore A, Vanello N, Milanesi M, Landini L, Gemignani A. The dynamics of EEG gamma responses to unpleasant visual stimuli: From local activity to functional connectivity. Neuroimage. 2012;60:922–932. doi: 10.1016/j.neuroimage.2012.01.060.

- Mauss IB, Robinson MD. Measures of emotion: A review. Cognition and Emotion. 2009;23:209–237. doi: 10.1080/02699930802204677.

- McFarland DJ. Characterizing multivariate decoding models based on correlated EEG spectral features. Clinical Neurophysiology. 2013;124:1297–1302. doi: 10.1016/j.clinph.2013.01.015.

- McFarland DJ, Krusienski DJ. BCI signal processing: feature translation. In: Wolpaw JR, Wolpaw EW, editors. Brain-Computer Interfaces: Principles and Practice. New York: Oxford University Press; 2012. pp. 147–163.

- McFarland DJ, Sarnacki WA, Wolpaw JR. Electroencephalographic (EEG) control of three-dimensional movement. Journal of Neural Engineering. 2010;7:036007. doi: 10.1088/1741-2560/7/3/036007.

- McFarland DJ, Wolpaw JR. Sensorimotor rhythm-based brain–computer interface (BCI): model order selection for autoregressive spectral analysis. Journal of Neural Engineering. 2008;5:155–162. doi: 10.1088/1741-2560/5/2/006.

- Muller MM, Keil A, Gruber T, Elbert T. Processing of affective pictures modulates right-hemisphere gamma band EEG activity. Clinical Neurophysiology. 1999;110:1913–1920. doi: 10.1016/s1388-2457(99)00151-0.

- Parvaz MA, MacNamara A, Goldstein RZ, Hajcak G. Event-related induced frontal alpha as a marker of prefrontal cortex activation during cognitive reappraisal. Cognitive Affective and Behavioral Neuroscience. 2012;12:730–740. doi: 10.3758/s13415-012-0107-9.

- Pfurtscheller G, Flotzinger D, Kalcher J. Brain-computer interface- a new communication device for handicapped persons. Journal of Microcomputer Applications. 1993;16:293–299.

- Pichiorri F, Morone G, Petti M, Toppi J, Pisotta I, Molinari M, Paolucci S, Astolfi L, Cincotti F, Mattia D. Brain-computer interface boosts motor imagery practice during stroke recovery. Annals of Neurology. 2015;77:851–865. doi: 10.1002/ana.24390.

- Pope KJ, Fitzgibbon SP, Lewis TW, Whitham EM, Willoughby JO. Relation of gamma oscillations in scalp recordings to muscular activity. Brain Topography. 2009;22:13–17. doi: 10.1007/s10548-009-0081-x.

- Ruiz S, Buyukturkoglu K, Rana M, Birbaumer N, Sitaram R. Real-time fMRI brain computer interfaces: self-regulation of single brain regions to networks. Biological Psychology. 2014;95:4–20. doi: 10.1016/j.biopsycho.2013.04.010.

- Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Transactions on Biomedical Engineering. 2004;51:1034–1043. doi: 10.1109/TBME.2004.827072.

- Schalk G, Mellinger J. A practical guide to brain-computer interfacing with BCI2000: General purpose software for brain-computer interface research, data acquisition, stimulus presentation, and brain monitoring. Springer; London: 2014.

- Sharbrough F, Chatrian CE, Lesser RP, Luders H, Nuwer M, Picton TW. American electroencephalographic society guidelines for standard electrode position nomenclature. Journal of Clinical Neurophysiology. 1991;8:200–202.

- Schienle A, Schafer A, Naumann E. Event-related brain potentials of spider phobics to disorder-relevant, generally disgusting and fear-inducing pictures. Journal of Psychophysiology. 2008;22:5–13.

- Uusberg A, Uibo H, Tiimus R, Sarapuu H, Kreegipuu K, Allik J. Approach-avoidance activation without anterior asymmetry. Frontiers in Psychology. 2014;5:192. doi: 10.3389/fpsyg.2014.00192.

- Wang XW, Nie D, Lu BL. Emotion state classification from EEG data using machine learning approach. Neurocomputing. 2014;129:94–106.

- Widge AS, Dougherty DD, Moritz CT. Affective brain-computer interfaces as enabling technology for responsive psychiatric stimulation. Brain Computer Interfaces. 2014;1:126–136. doi: 10.1080/2326263X.2014.912885.

- Wilms I, Croux C. Sparse canonical correlation analysis from a predictive point of view. Biometrical Journal. 2015;57:834–851. doi: 10.1002/bimj.201400226.

- Witten DM, Tibshirani R, Hastie T. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics. 2009;10:515–534. doi: 10.1093/biostatistics/kxp008.

- Wolpaw JR, McFarland DJ, Neat GW, Forneris CA. An EEG-based brain-computer interface for cursor control. Electroencephalography and Clinical Neurophysiology. 1991;78:252–259. doi: 10.1016/0013-4694(91)90040-b.

- Wolpaw JR, Wolpaw EW. Brain-computer interfaces: something new under the sun. In: Wolpaw JR, Wolpaw EW, editors. Brain-Computer Interfaces: Principles and Practice. New York: Oxford University Press; 2012. pp. 3–12.

- Yoon HJ, Chung SY. EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Computers in Biology and Medicine. 2013;43:2230–2237. doi: 10.1016/j.compbiomed.2013.10.017.

- Zilverstand A, Parvaz MA, Goldstein RZ. Neuroimaging cognitive reappraisal in clinical populations to define neural targets for enhancing emotion regulation. A systematic review. Neuroimage. doi: 10.1016/j.neuroimage.2016.06.009. (in press)