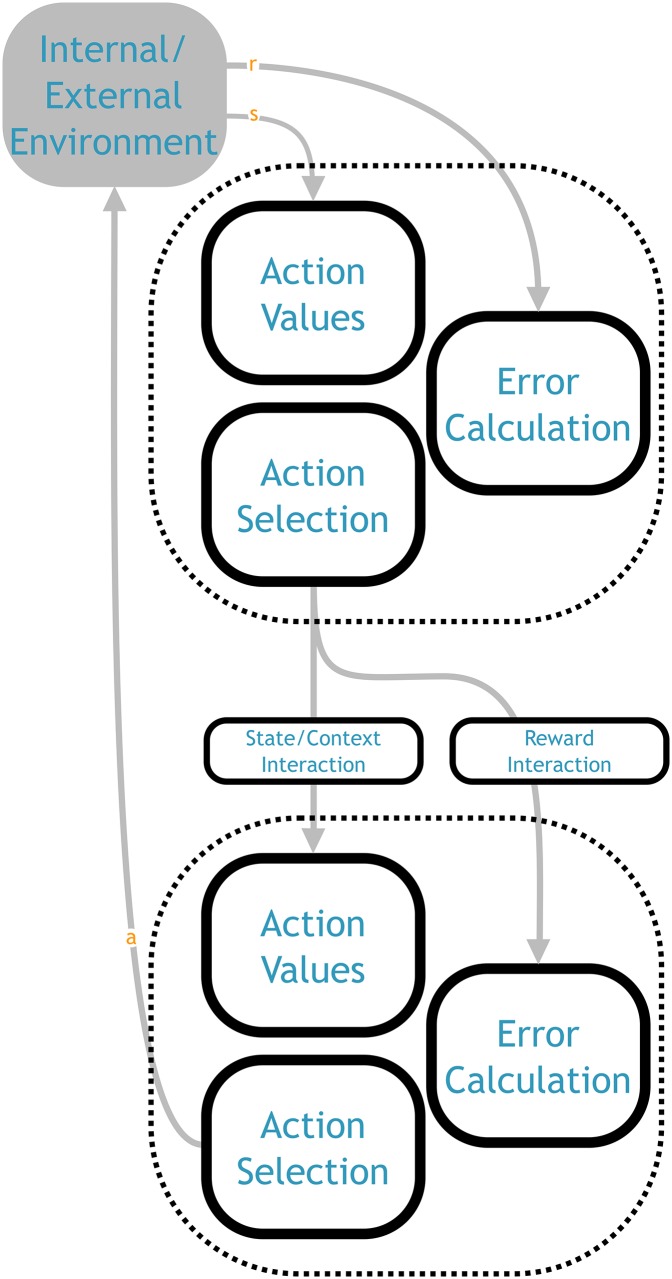

Fig 5. Hierarchical composition of the basic SMDP architecture (from Fig 1).

The output of the higher level can modify either the state input to the action values component of the lower level (a state or context interaction) or the reward input to the error calculation component of the lower level (a reward interaction). The figure shows a two-layer hierarchy, but the same approach can be repeated to any desired depth.
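To make the two interaction types concrete, the following is a minimal sketch and not the architecture's actual implementation. It assumes a tabular Q-learning stand-in for each level's action values and error calculation components. The names `Level`, `context_interaction`, and `pseudo_reward`, and the idea of treating the higher level's output as a subgoal label, are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Level:
    """One level of the hierarchy (hypothetical tabular stand-in)."""
    actions: list
    gamma: float = 0.9  # discount factor (assumed value)
    lr: float = 0.1     # learning rate (assumed value)
    q: dict = field(default_factory=lambda: defaultdict(float))

    def select(self, state):
        # Action values component: greedy choice; ties go to the first action.
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state, tau=1):
        # Error calculation component: SMDP-style TD error, where tau is the
        # number of time steps the selected action took to complete.
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        error = reward + self.gamma ** tau * best_next - self.q[(state, action)]
        self.q[(state, action)] += self.lr * error
        return error


def context_interaction(env_state, high_output):
    # State/context interaction: the higher level's output becomes part of
    # the state seen by the lower level's action values component.
    return (env_state, high_output)


def pseudo_reward(env_state, high_output):
    # Reward interaction: the higher level's output defines the reward fed to
    # the lower level's error calculation (here, 1.0 on reaching the subgoal).
    return 1.0 if env_state == high_output else 0.0


# Two-layer usage: the higher level picks a subgoal, the lower level acts on it.
high = Level(actions=["goal_A", "goal_B"])
low = Level(actions=["left", "right"])

subgoal = high.select("start")
low_state = context_interaction("s0", subgoal)
low_next = context_interaction("goal_A", subgoal)
low_error = low.update(low_state, "left", pseudo_reward("goal_A", subgoal), low_next)
```

In this sketch both interactions are applied together, but either could be used alone. Deeper hierarchies would follow the same pattern, with each level's output passed to the level beneath it.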