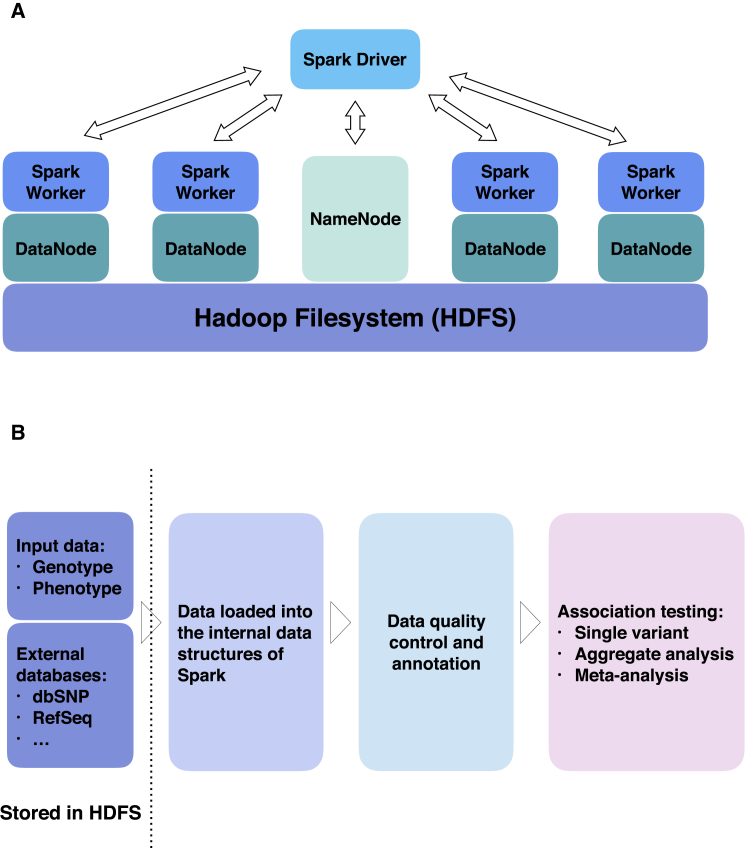

Figure 1.

Spark Architecture and SEQSpark Workflow

(A) Interaction between the Spark components (the driver and the workers) and the Hadoop Distributed File System (HDFS) components (the NameNode and the DataNodes). The NameNode is the master node and manages the file system’s metadata. A file in HDFS can be split into several blocks, which are stored on a set of slave nodes (DataNodes). The NameNode determines the mapping of blocks to DataNodes, while the DataNodes perform the read and write operations within the file system. The Spark driver communicates with the HDFS NameNode, obtains the metadata from it, and then distributes the jobs to the Spark workers.

(B) The SEQSpark workflow begins with importing the data and the databases used for annotation. The data are loaded into Spark's internal data structures. Data quality control and annotation can then be performed, followed by association testing.
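The division of labour in panel (A) can be illustrated with a toy, in-memory model: a NameNode that stores only metadata (which blocks make up a file and which DataNodes hold each block) and a driver that asks for that metadata before assigning one task per block. This is a minimal sketch, not HDFS, Spark, or SEQSpark code. The class and function names, the file name `genotypes.vcf`, the round-robin replica placement (real HDFS uses a rack-aware policy), and the assumption that each Spark worker runs on the same host as a DataNode are all illustrative simplifications.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyNameNode:
    """Hypothetical NameNode: holds metadata only, never the file contents."""
    datanodes: List[str]
    block_size: int
    replication: int = 3
    # file name -> ordered list of block IDs
    files: Dict[str, List[str]] = field(default_factory=dict)
    # block ID -> DataNodes that store a replica of that block
    block_locations: Dict[str, List[str]] = field(default_factory=dict)
    _cursor: int = 0  # round-robin start position (simplified placement)

    def add_file(self, name: str, size: int) -> List[str]:
        """Split a file of `size` bytes into blocks and map each block to DataNodes."""
        n_blocks = -(-size // self.block_size)  # ceiling division; empty file -> 0 blocks
        k = min(self.replication, len(self.datanodes))
        ids = []
        for i in range(n_blocks):
            block_id = f"{name}#blk{i}"
            # Place k replicas on consecutive DataNodes starting at the cursor.
            self.block_locations[block_id] = [
                self.datanodes[(self._cursor + j) % len(self.datanodes)] for j in range(k)
            ]
            self._cursor = (self._cursor + 1) % len(self.datanodes)
            ids.append(block_id)
        self.files[name] = ids
        return ids


def plan_tasks(namenode: ToyNameNode, filename: str, workers: List[str]) -> Dict[str, str]:
    """Hypothetical driver step: fetch block metadata from the NameNode, then assign
    one task per block, preferring the least-loaded worker that holds a local replica."""
    load = {w: 0 for w in workers}
    plan = {}
    for block_id in namenode.files[filename]:
        local = [w for w in namenode.block_locations[block_id] if w in load]
        candidates = local or workers  # fall back to any worker if no replica is local
        chosen = min(candidates, key=lambda w: load[w])
        load[chosen] += 1
        plan[block_id] = chosen
    return plan


if __name__ == "__main__":
    nn = ToyNameNode(["dn1", "dn2", "dn3", "dn4"], block_size=128, replication=3)
    nn.add_file("genotypes.vcf", 300)  # 300 bytes / 128-byte blocks -> 3 blocks
    for blk, nodes in nn.block_locations.items():
        print(blk, nodes)
    print(plan_tasks(nn, "genotypes.vcf", ["dn1", "dn2", "dn3", "dn4"]))
    # -> {'genotypes.vcf#blk0': 'dn1', 'genotypes.vcf#blk1': 'dn2', 'genotypes.vcf#blk2': 'dn3'}
```

Keeping the block-to-node map on the NameNode, separate from the data, is what lets the driver plan work without reading any file contents; the actual reads happen later on the workers, ideally against a local replica.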