Abstract

Several studies have linked codeletion of chromosome arms 1p/19q in low-grade gliomas (LGG) with positive response to treatment and longer progression-free survival. Hence, predicting 1p/19q status is crucial for effective treatment planning of LGG. In this study, we predict the 1p/19q status from MR images using convolutional neural networks (CNN), which could be a non-invasive alternative to surgical biopsy and histopathological analysis. Our method consists of three main steps: image registration, tumor segmentation, and classification of 1p/19q status using CNN. We included a total of 159 LGG with 3 image slices each who had biopsy-proven 1p/19q status (57 non-deleted and 102 codeleted) and preoperative postcontrast-T1 (T1C) and T2 images. We divided our data into training, validation, and test sets. The training data was balanced for equal class probability and was then augmented with iterations of random translational shift, rotation, and horizontal and vertical flips to increase the size of the training set. We shuffled and augmented the training data to counter overfitting in each epoch. Finally, we evaluated several configurations of a multi-scale CNN architecture until training and validation accuracies became consistent. The results of the best performing configuration on the unseen test set were 93.3% (sensitivity), 82.22% (specificity), and 87.7% (accuracy). Multi-scale CNN with their self-learning capability provides promising results for predicting 1p/19q status non-invasively based on T1C and T2 images. Predicting 1p/19q status non-invasively from MR images would allow selecting effective treatment strategies for LGG patients without the need for surgical biopsy.

Keywords: Low grade gliomas, 1p/19q codeletion, Therapy response, Convolutional neural networks

Introduction

Magnetic resonance (MR) imaging is a non-invasive medical imaging technique that provides outstanding soft tissue contrast and has become the standard imaging technique for brain tumor diagnosis [1]. Gliomas are the most frequent primary brain tumors originating in the brain [2]. World Health Organization (WHO) classifies them into four grades based on their aggressiveness. Low-grade gliomas (LGG), also named diffuse low-grade and intermediate-grade gliomas (WHO grades II and III), include oligodendrogliomas, astrocytomas, and oligoastrocytomas [3–5]. Compared to high-grade gliomas (HGG: WHO grade IV, glioblastoma), LGG are less aggressive tumors with better prognosis. A subgroup of LGG will progress to glioblastoma (HGG, grade IV), but other subgroups will progress slower or remain stable [4, 6–8]. In addition, some LGG are sensitive to therapy, and their survival ranges from 1 to 15 years [6, 8]. Presently, treatment includes observation, surgery, radiotherapy, and chemotherapy either separately or in combination [4]. Although histological grading of tumors is the gold standard for diagnosis and subsequent treatment planning, it is known that histopathological diagnosis lacks information about other tumor properties (e.g., genomic biomarkers) that can impact optimal therapy options [3]. Therefore, other tests of LGG (e.g., molecular biomarkers testing) are also obtained to improve treatment planning. Several studies [9–12] have shown that codeletion of 1p/19q chromosome arms is a strong prognostic molecular marker for positive tumor response to chemotherapy and radiotherapy in LGG and associated with longer survival. Therefore, predicting 1p/19q status is crucial for effective treatment planning of LGG.

Currently, determining 1p/19q status requires surgical biopsy typically followed by fluorescence in-situ hybridization (FISH) [13] to identify chromosomal deletion. Several studies have shown that imaging can predict 1p19q status from MR images or positron emission tomography (PET) images. Fellah et al. [9] presented univariate analysis and multivariate random forest models to determine 1p/19q status from multimodal MR images including conventional MR images, diffusion-weighted imaging (DWI), perfusion-weighted imaging (PWI), and MR spectroscopy. DWI, PWI, and MRI spectroscopy showed no significant difference between tumors with and without 1p/19q loss in their study. They concluded that inclusion of DWI, PWI, and MR spectroscopy was not useful for determining 1p/19q status compared with conventional MR images. Jansen et al. [10] presented detection of 1p/19q status from [18F] fluoroethyltyrosine-PET (FET-PET) images. They derived several biomarkers from PET images and correlated with 1p/19q status, and showed that these biomarkers do not reliably predict the status of 1p/19q in individual patients. Iwadate et al. [11] studied detection of 1p/19q codeletion from 11C–methionine PET images and concluded that 11C–methionine PET might help discriminate tumors with and without 1p/19q codeletion preoperatively. Bourdillion et al. [12] presented prediction of anaplastic transformation in grade 2 oligodendrogliomas based on MR spectroscopy and 1p/19q status. They showed that choline/creatine ratio > 2.4 was associated with the occurrence of anaplastic transformation in patients without 1p/19q codeletion. On the other hand, no anaplastic transformation was observed in patients with 1p/19q codeletion.

In this study, we present a robust and non-invasive method to predict the 1p/19q status of LGG from post-contrast T1- and T2-weighted MR images using convolutional neural networks (CNN).

Material and Methods

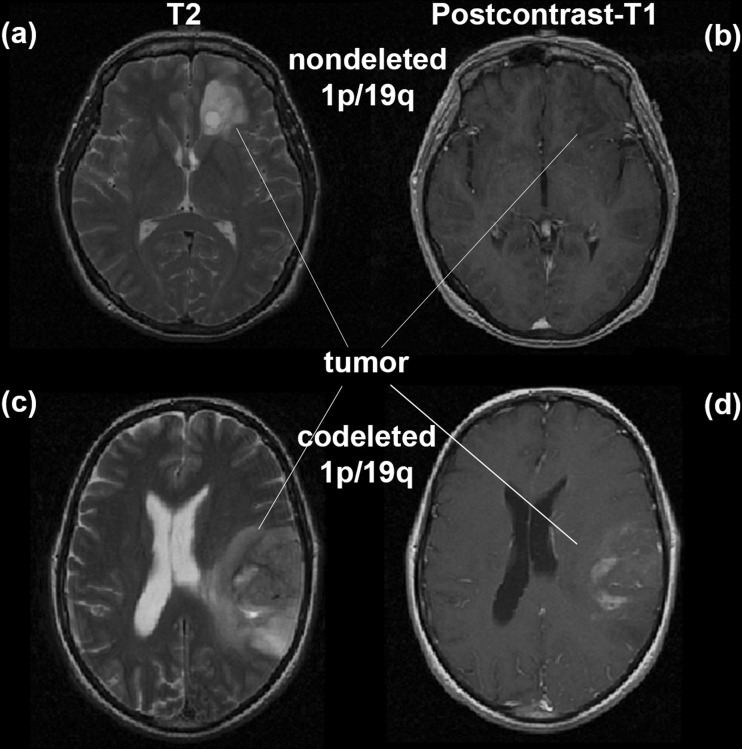

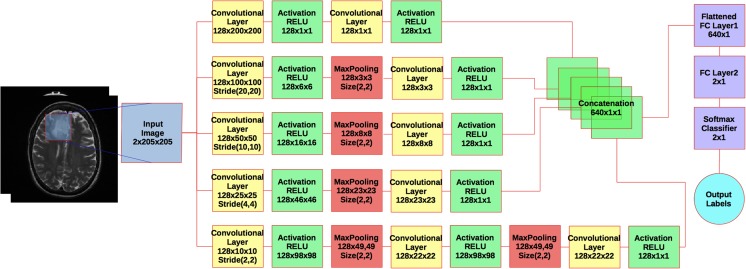

We use a combination of two commonly acquired image types, T2 and post-contrast T1-weighted images, as input to our classification algorithm. Figure 1 shows examples of LGG image characteristics in post-contrast T1- and T2-weighted images with and without 1p/19q codeletion. Our classification algorithm consists of several pre-processing steps: multi-modal image registration, tumor segmentation, data normalization, and data augmentation. After applying pre-processing steps to data, we use the segmented images to train a multi-scale CNN for prediction of 1p/19q status. A flowchart and implementation details of the multi-scale CNN are shown in Fig. 2.

Fig. 1.

An example of low-grade glioma with and without 1p/19q codeletion. Images a and b show T2 and post contrast T1 for non-deleted 1p/19q. Images c and d show T2 and post contrast T1 for codeleted 1p/19q

Fig. 2.

A flowchart of the multi-scale CNN architecture. Blue box is the input image. Yellow boxes are convolutional layers. Green boxes are rectified linear units (RELU), activations. Red Boxes are max pooling layers. Purple boxes are fully connected layers plus a softmax binary classifier. Cyan circle shows the output label

Brain Tumor Data

One hundred fifty nine (n = 159) consecutive (01 October 2002–01 August 2011) pre-operative LGG patients, with stereotactic MRI images, who had biopsy proven 1p/19q status consisting either no deletion or co-deletion, were identified from our brain tumor patient database at Mayo Clinic for this study. The data included 102 non-deleted and 57 codeleted LGG. The types of LGG were oligoastrocytoma (n = 97), oligodendrogliomas (n = 45), and astrocytomas (n = 17). In total, 477 slices (3 slices per LGG including one centered at the tumor ‘equator’ plus one slice above and below) were included for this study. Post-contrast T1- and T2-weighted images were available for all selected patients. Institutional Review Board (IRB) approval was obtained for this study, and requirement for patient consent was waived.

All images were acquired for biopsy planning purposes, and so a very consistent scanning protocol was used, including 3-mm-thick T2 images and 1-mm-thick axial spoiled-gradient recalled images all acquired at 1.5 T or 3 T on either General Electric Medical System (Waukesha, WI, USA) or Siemens Medical System (Malvern, PA, USA) scanner.

Pre-Processing

Multi-Modal Image Registration

We registered post-contrast T1-weighted images to T2-weighted images of the same patient by using the ANTs open source software library for image registration [14, 15]. We performed rigid registration using cubic b-spline interpolation and mutual information metric, which takes into account translational movements, to align our intra-patient images.

Tumor Segmentation

We used our semi-automatic LGG segmentation software to segment the tumors in 2D [16]. First, the user selects the slice where the area of the tumor appears largest, and then draws a region-of-interest (ROI) that completely encloses the tumor and some normal tissue. Second, a normal brain atlas [17] and post-contrast T1-weighted images are registered to T2-weighted images. Third, the posterior probability of each pixel/voxel belonging to normal and abnormal tissues is calculated based on information derived from the atlas and ROI. Finally, geodesic active contours [18] use the probability map of the tumor to shrink the ROI until optimal tumor boundaries are found. With that, a morphological binary dilation of five pixels is applied to make sure boundaries are included in the tumor ROI.

Data Normalization

After registration and segmentation steps, the dataset of raw MR image data is normalized to balance intensity values and narrow the region of interest. This step aims to finely tune the input information fed into the CNN. The normalization process begins with skull-stripping using Brain Extraction Tool (BET) [19] based on FSL library [20], then followed by standard scoring. Standard scores (also called z-scores) are calculated for each image by subtracting the mean of image intensities from an individual intensities and then dividing the difference by the standard deviation of the image intensities (see Eq. 1).

| 1 |

where X is image intensities, μ is mean of the image intensities, and σ is the standard deviation of the image intensities.

Data Augmentation

Using deep networks, in particular CNNs, there is a high susceptibility to overfitting. This is a direct result of the large number of network parameters relative to the number of features provided by the MR images. The number of features available from MR images may not be sufficient to provide sufficient learning and generalizability to the parameters in the network, hence, the need to increase the number of MR images. One approach to address this is through artificially augmenting the dataset using label-preserving transformations [21]. This “data augmentation” consists of generating image translations, rotations, and horizontal and vertical flipping. We apply a random combination of these transformations on each image, hence, creating “new” images. This multiplies our dataset by several fold and help reduce over-fitting.

Convolutional Neural Networks

Convolutional Neural Networks (CNN) is a type of feed-forward artificial neural network for learning a hierarchical representation of image data [22]. Unlike a regular neural network, the layers of a CNN have neurons arranged in three dimensions (width, height, and depth) and respond to a small region of the input image, called receptive field, instead of all of the neurons in a fully connected manner. Each neuron learns to detect features from a local region the input image. This allows capturing features of local structures and preserving the topology of the input image. The final output layer will reduce the full image into a single vector of class scores, arranged along the depth dimension.

The main types of layers needed to build a CNN deep learning system are: input layer, convolutional layer, activation layer, pooling layer, and fully connected layer. Most implementations have many of each type of layer, hence, the title ‘deep’learning. Each layer is described in more details.

Input layer. This layer holds the raw pixels values of the input image after applied pre-processing steps.

Convolutional layer. This layer is composed of several feature maps along the depth dimension, each corresponding to a different convolution filter. All neurons with the same spatial dimension (width and height) are connected to the same receptive filed in the input image or, generally, in the previous layer. This allows capturing a wide variety of imaging features. The depth of the layer, i.e., the number of convolution filters, defines the number of features that can be extracted from each input receptive field. Each neuron in a feature map shares exactly the same weights, which define the convolution filter. This allows reducing the number of weights, and thus increasing the generalization ability of the architecture.

Activation layer. This layer applies an activation function to each neuron in the output of the previous layer. For example, rectified linear unit (RELU) where RELU(x) = max(0,x) is the most common activation function used in CNNs architectures and fires the real value of the output and thresholds at zero. This layer does not change the size of the previous layer. It simply replaces negative values with ‘0’.

Pooling layer. Placed after an activation layer, and this layer down-samples along spatial dimensions (width and height). It selects the invariant imaging features by reducing the spatial dimension of the convolution layer. The most popular type is max pooling, which selects the maximum value of its inputs as the output, thus preserving the most prominent filter responses.

Fully connected layer. As with neural networks, this layer connects all neurons in the previous layer to this layer with a weight for each such connection. If used as the output, each output nodes represents the ‘score’ for each possible class.

To allow learning of complex relationships and to achieve a more hierarchical representation of the input image, multiple convolutional-pooling layers are stacked to form a deep architecture of multiple non-linear transformations. This allows learning a hierarchy of complex features carrying predictive power for image classification tasks.

Multi-Scale CNN Parameters

In our study, we use a CNN architecture that consists of different sizes of convolutional filters to train on our dataset (see Fig. 2). This can be considered as multi-scale CNN that learns global and local imaging features with different convolutional filter sizes and concatenates their output before the classification step. The parameters of each layer are seen in the boxes in Fig. 2. For example, the size of the first convolutional layer in the first branch is 128 × 200 × 200 and corresponds to depth of layer, 128 filters, and size of filters 200 × 200 in 2D spatial space (x, y). Default stride size is 1 px in x and y. Specific strides for each layer are shown in the boxes. Since every tumor has a different shape and size, we embedded each 2D tumor slice into standard background (zero intensity level) of size 205 × 205 px, which is the smallest that encompasses the largest tumor size in our data set.

In our architecture, probability of tumor slices belonging to each class were computed with softmax classifier and the parameters of the CNN were updated by minimizing a negative log likelihood loss function [23]. We used a stochastic gradient descend (SGD) [24] algorithm with mini batches of 32 samples in our study. The SGD algorithm with mini batches is commonly used to train neural networks on large datasets because it efficiently finds good values without high computation or memory requirements. Specifically, only a small batch of training data at a time is used at each update of weights instead of using all training samples to compute the gradient of the loss function. The learning rate was initially set to 0.001 and decreased 50% at every 50 epoch. The training was stopped when the change in validation loss smaller 0.02 for 10 consecutive epochs.

Implementation and Experiments

Implementation

Our code is based on the Keras package [25], built on top of Theano library; a Python library. Keras can leverage graphical processing units (GPUs) to accelerate the deep learning algorithms. We trained our CNN architecture on an NVIDIA GTX 970 GPU card. The training took about 10 min to 2 days depending on the amount of the used data. For example, using 30-fold augmented data as the training data at each epoch took about 2 days to train the network.

Experiments

We divided our data (n = 477 slices) into training and test sets. A total of 90 slices (45 non-deleted and 45 codeleted) were randomly selected from the data at the beginning, as a test set, and were never seen by the CNN during the training. From the remaining data (n = 387), 252 slices that were balanced for equal class probability (n = 126 codeleted +126 non-deleted) were randomly selected at each epoch for training. Twenty percent of the training set was separated as validation set (n = 68) during the training. The data augmentation was applied to the training set (n = 7560 for 30-fold augmentation) at each epoch to increase the training samples and to achieve generalization ability.

We used the performance of our CNN architecture on validation set to tune the hyper-parameters of the CNN. Since our architecture includes multi-size of convolutional filters and multi-branch CNN, it takes into account a range of values for hyperparameters in the architecture. For further tuning of hyperparameters, we first investigated the contribution of each image channel for prediction of 1p/19q status. Therefore, we experimented three configurations of our multi-scale CNN based on combinations of input images without data augmentation, i.e., T1 only (configuration 1), T2 only (configuration 2), and T1 and T2 combined (configuration 3). We selected the best configuration (configuration 3) among these based on their performance on the test set. Next, we trained the best configuration from above with multiple folds k ∈ {10, 20, 30} of data augmentation to tune the k hyper-parameter of the data augmentation. To evaluate the influence of different optimizers on learning, we compared the performances of our CNN architecture using four different optimizers: SGD, root mean square propagation (RMSprop), improved adaptive gradient algorithm (AdaDelta), and adaptive moment estimation (Adam). Configuration 4 was defined as the one using the best performing parameters and data augmentation. For each configuration, sensitivity, specificity, and accuracy were computed as follows:

sensitivity or true positive rate (TPR):

where TP is true positives, FN is false negatives specificity (SPC), or true negative rate:

where TN is true negatives, FP is false positives and accuracy (ACC):

We also compared the performance of our method to the performance of a classical machine-learning algorithm using support vector machine (SVM) classifier with greedy feature selection. Using seven selected features (from intensity-based features, local binary patterns, Gabor filters, Laplacian of Gaussian, gray-level co-occurrence matrix, and boundary sharpness), the SVM classifier was trained and tested on the same data.

Results

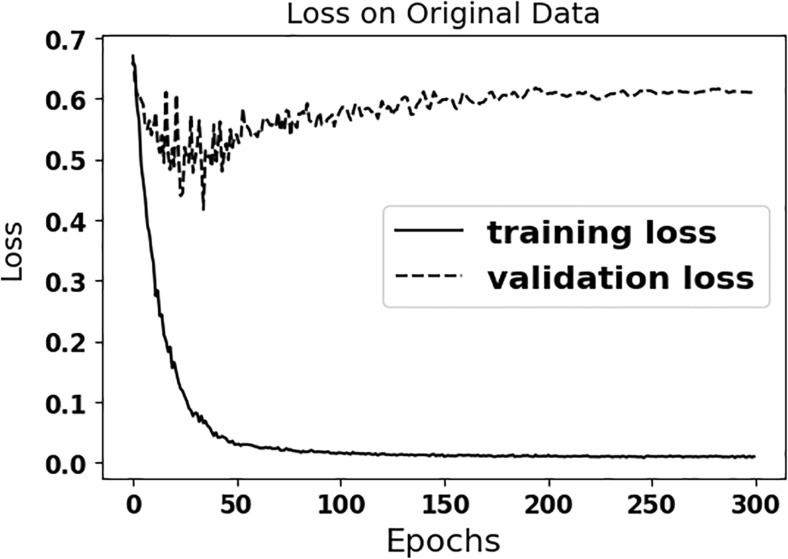

Figure 3 shows that the multi-scale CNN is overfitting to the original (limited size) training data, when data augmentation is not used, as the distance between the training and validation losses increases over time. The accuracy approaches 100% after 50 epochs for the training set and the loss gets closer to zero, but the performance of trained CNN without data augmentation remains below 80% for the test data (see Table 1). As seen in the Table 1, the performance of CNN with data augmentation (configuration 4) is better than the other configurations without data augmentation.

Fig. 3.

Loss plots are shown for the training and validation sets on the original data for configuration 4

Table 1.

Statistics for test set

| Configurations | Sensitivity | Specificity | Accuracy |

|---|---|---|---|

| 1 | 80.0% | 46.7% | 63.3% |

| 2 | 86.7% | 64.4% | 75.6% |

| 3 | 84.4% | 73.3% | 78.9% |

| 4 | 93.3% | 82.2% | 87.7% |

Table shows sensitivity, specificity, and accuracy for each configuration of multi-scale CNN for the test set. Configurations: 1 using T1C only and no augmentation (NA), 2 using T2 only and NA, 3 using T1C and T2 combined (T1 T2) and NA, 4 using T1 T2 and 30-fold AG and further training.

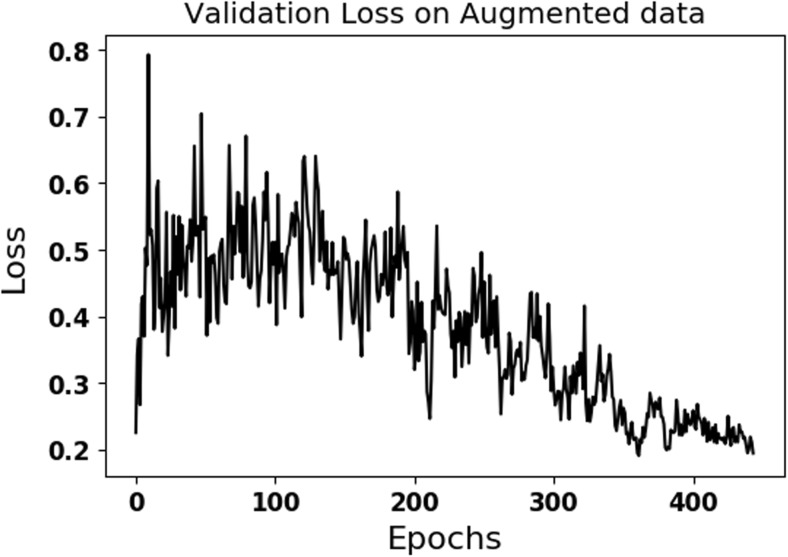

Data augmentation of original data with k=30-fold (ACC = 89.5) gave the better accuracy on the validation dataset compared to the ones using k=10-fold (ACC = 85.5) and k=20-fold (ACC = 83.3) augmentations. Compared to training on the data without augmentation, accuracy and loss in Fig. 5 fluctuate and are noisier due to variations introduced with data augmentation. The results of the SVM classifier using texture features on the test set were 80% (sensitivity), 82% (specificity), and 81% (accuracy), which are inferior than the results of multi-scale CNN (configuration 4).

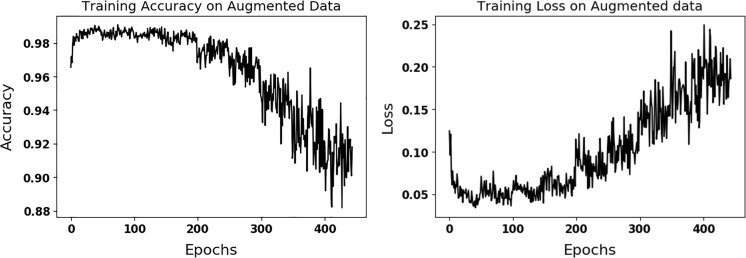

Fig. 5.

Validation loss is shown on the validation set for the best performing configuration (configuration 4) using data augmentation

Table 2 compares the performance of the best performing configuration using four different optimizers. As seen in Table 2, the influence of optimizers on learning is not much and SGD gives better performance on the test data compared to other optimizers. Figure 4 shows the performance of the best performing CNN configuration 4 on the training data. Fluctuations seen in Fig. 4 are due to randomly introduced augmented data at each epoch. Table 1 shows the performance of multi-scale CNN configurations on the test dataset. Figure 5 shows the validation loss of the best performing CNN (configuration 4) on the validation set. As seen in Figs. 4 and 5, the training and validation losses converge to same value of about 0.2 over time. Table 3 shows the confusion matrix of 1p19q status on test set for the best performing CNN (configuration 4). False negatives are higher than false positives.

Table 2.

Statistics for optimizers

| Configurations | Sensitivity | Specificity | Accuracy |

|---|---|---|---|

| SGD | 93.3% | 82.2% | 87.7% |

| RMSprop | 84.4% | 84.4% | 84.4% |

| AdaDelta | 82.2% | 84.4% | 83.3% |

| Adam | 88.8% | 82.2% | 85.5% |

The performance of the best CNN configuration using four different optimizers on the test dataset was shown.

Fig. 4.

Accuracy (left) and loss (right) plots are shown for the training of the best performing configuration (4) on the augmented data

Table 3.

Confusion matrix of classification of 1p19q status on test set for the best CNN (configuration 4)

| n = 90 | Predicted labels | ||

|---|---|---|---|

| Co-deleted | Non-deleted | ||

| Actual labels | Co-deleted | 42 | 3 |

| Non-deleted | 8 | 37 | |

Discussion

In this study, we present a robust and non-invasive method to predict 1p/19q chromosomal arm deletion from post-contrast T1- and T2-weighted MR images using multi-scale CNN approach. As mentioned in previous studies [4, 6–8], a subset of LGG are sensitive to therapy and have longer progress-free survival while others may progress to HGG. Although histologic grade is the most important factor in therapeutic decision-making, it lacks information about other tumor properties that can impact optimal therapy options and recent reports suggest genomic marker may be more predictive than histologic grade [26]. Adding other information such as 1p/19 chromosomal arms deletion, which has been associated with positive response to therapy, could help improve therapeutic decision-making.

An important challenge in applying deep learning methods to medical images is having an adequate number of data sets. Our multi-scale CNN reached to 100% sensitivity, specificity, and accuracy in training set without data augmentation (see Fig. 3), but had less than 80% specificity and accuracy for the (unseen) test set (see Table 1). This demonstrated that our algorithm did not truly learn the distinctive imaging features, but rather, overfit to the training examples. As seen in Table 1, configuration 4 using augmented data to train our multi-scale CNN approach perform better than the other configurations on the unseen test dataset. Fluctuations as seen in Fig. 4 are results of introducing new augmented training data at each epoch. As seen in the results, augmenting a randomly selected set of the original training data, which is described in the experiment section, at each epoch improves generalization ability of the multi-scale CNN and prevents overfitting. As seen in Table 1, configuration 4 yields the best performance on the test dataset. The performance of configuration 4 in the training and validation datasets is in the same order with its performance in the test dataset. This highlights the generalizability of configuration 4 and its minimal overfitting.

The misclassification rate on the test dataset is about 11%. Some part of this error might be due to the error rate introduced by the FISH test that was used to determine the 1p/19 status. Scheie et al. [13] showed that the reliability of FISH test was 95% and 87.5% for the detection of 1p and 19q deletions, respectively.

As seen in Fig. 5, the loss on validation dataset for configuration 4 stops fluctuating and stabilizes with slight changes after 400 epochs for several epochs. This means that the network is not learning much any more and had converged to a steady state. As seen in Table 2, SGD optimizer gave superior performance on the test dataset compared to other three optimizers.

As seen in our results, deep learning algorithm performs better than the classical machine-learning algorithm using SVM. As mentioned before, Fellah et al. [9] presented the first study that determined the 1p/19q status from DWI, PWI, MR spectroscopy, and conventional MR images. Their misclassification rates were 48 and 40% for using only the conventional MR images and using all multimodal images, respectively. Compared to their results, our best-performing configuration (4) shows better performance in our dataset, with 93.3% sensitivity, 82.2% specificity, and 87.7% accuracy in the unseen test dataset. Moreover, our data was evaluated in a larger dataset (159 LGG vs. 50 LGG). However, it is hard to make the direct comparison since we used different datasets. To the best of our knowledge, our study is the first in using the deep learning to predict 1p19q from MR images in LGGs.

Although the original data size was limited, artificially augmenting data helped increase the volume of our data. It may be that further performance gains will be realized with larger patient populations with more heterogeneous data. Furthermore, including weight regularization such as L1 or L2 in the network might improve generalizability of our networks. Further studies with larger patient populations are required to investigate these and confirm our current findings.

Conclusions

Our multi-scale CNN approach provides promising results for predicting 1p/19q codeletion status non-invasively, based on post-contrast T1 and T2 images. Data augmentation helps counter overfitting and provides learning the invariant patterns in the images. Our presented method could be further improved and potentially used as an alternative to surgical biopsy and pathological analysis for predicting 1p/19q codeletion status.

Acknowledgements

This work was supported by National Institutes of Health 1U01CA160045.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that there is no conflict of interest.

References

- 1.DeAngelis LM. Brain tumors. N Engl J Med. 2001;344(2):114–23. doi: 10.1056/NEJM200101113440207. [DOI] [PubMed] [Google Scholar]

- 2.Cha S. Update on brain tumor imaging: from anatomy to physiology. AJNR Am J Neuroradiol. 2006;27(3):475–87. [PMC free article] [PubMed] [Google Scholar]

- 3.Kleihues P, Burger PC, Scheithauer BW. The new WHO classification of brain tumours. Brain Pathol. 1993;3(3):255–68. doi: 10.1111/j.1750-3639.1993.tb00752.x. [DOI] [PubMed] [Google Scholar]

- 4.Network TCGAR Comprehensive, integrative genomic analysis of diffuse lower-grade gliomas. New England Journal of Medicine. 2015;372(26):2481–98. doi: 10.1056/NEJMoa1402121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Louis DN, Ohgaki H, Wiestler OD, Cavenee WK, Burger PC, Jouvet A, et al. The 2007 WHO classification of Tumours of the central nervous system. Acta Neuropathologica. 2007;114(2):97–109. doi: 10.1007/s00401-007-0243-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.van den Bent MJ, Brandes AA, Taphoorn MJ, Kros JM, Kouwenhoven MC, Delattre JY, et al. Adjuvant procarbazine, lomustine, and vincristine chemotherapy in newly diagnosed anaplastic oligodendroglioma: long-term follow-up of EORTC brain tumor group study 26951. Journal of clinical oncology : official journal of the American Society of Clinical Oncology. 2013;31(3):344–50. doi: 10.1200/JCO.2012.43.2229. [DOI] [PubMed] [Google Scholar]

- 7.van den Bent MJ. Practice changing mature results of RTOG study 9802: another positive PCV trial makes adjuvant chemotherapy part of standard of care in low-grade glioma. Neuro Oncol. 2014;16(12):1570–4. doi: 10.1093/neuonc/nou297. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Macdonald DR, Gaspar LE, Cairncross JG. Successful chemotherapy for newly diagnosed aggressive oligodendroglioma. Annals of neurology. 1990;27(5):573–4. doi: 10.1002/ana.410270519. [DOI] [PubMed] [Google Scholar]

- 9.Fellah S, Caudal D, De Paula AM, Dory-Lautrec P, Figarella-Branger D, Chinot O, et al. Multimodal MR imaging (diffusion, perfusion, and spectroscopy): is it possible to distinguish Oligodendroglial tumor grade and 1p/19q Codeletion in the Pretherapeutic diagnosis? Am J Neuroradiol. 2013;34(7):1326–33. doi: 10.3174/ajnr.A3352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Jansen NL, Schwartz C, Graute V, Eigenbrod S, Lutz J, Egensperger R, et al. Prediction of oligodendroglial histology and LOH 1p/19q using dynamic [18F]FET-PET imaging in intracranial WHO grade II and III gliomas. Neuro-Oncology. 2012;14(12):1473–80. doi: 10.1093/neuonc/nos259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Iwadate Y, Shinozaki N, Matsutani T, Uchino Y, Saeki N: Molecular imaging of 1p/19q deletion in oligodendroglial tumours with 11C–methionine positron emission tomography. Journal of Neurology, Neurosurgery & Psychiatry, 2016. [DOI] [PMC free article] [PubMed]

- 12.Bourdillon P, Hlaihel C, Guyotat J, Guillotton L, Honnorat J, Ducray F, et al. Prediction of anaplastic transformation in low-grade oligodendrogliomas based on magnetic resonance spectroscopy and 1p/19q codeletion status. J Neuro-Oncol. 2015;122(3):529–37. doi: 10.1007/s11060-015-1737-x. [DOI] [PubMed] [Google Scholar]

- 13.Scheie D, Andresen PA, Cvancarova M, Bo AS, Helseth E, Skullerud K, et al. Fluorescence in situ hybridization (FISH) on touch preparations: a reliable method for detecting loss of heterozygosity at 1p and 19q in oligodendroglial tumors. The American journal of surgical pathology. 2006;30(7):828–37. doi: 10.1097/01.pas.0000213250.44822.2e. [DOI] [PubMed] [Google Scholar]

- 14.Avants BB, Tustison NJ, Song G, Cook PA, Klein A, Gee JC. A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage. 2011;54(3):2033–44. doi: 10.1016/j.neuroimage.2010.09.025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Avants BB, Tustison NJ, Stauffer M, Song G, Wu B, Gee JC. The insight toolkit image registration framework. Front Neuroinform. 2014;8:44. doi: 10.3389/fninf.2014.00044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Akkus Z, Sedlar J, Coufalova L, Korfiatis P, Kline TL, Warner JD, et al. Semi-automated segmentation of pre-operative low grade gliomas in magnetic resonance imaging. Cancer Imaging. 2015;15:12. doi: 10.1186/s40644-015-0047-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Rohlfing T, Zahr NM, Sullivan EV, Pfefferbaum A. The SRI24 Multi-channel atlas of normal adult human brain structure. Human brain mapping. 2010;31(5):798–819. doi: 10.1002/hbm.20906. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Marquez-Neila P, Baumela L, Alvarez L. A morphological approach to curvature-based evolution of curves and surfaces. IEEE Trans Pattern Anal Mach Intell. 2014;36(1):2–17. doi: 10.1109/TPAMI.2013.106. [DOI] [PubMed] [Google Scholar]

- 19.Smith SM. Fast robust automated brain extraction. Human Brain Mapping. 2002;17(3):143–55. doi: 10.1002/hbm.10062. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Jenkinson M, Beckmann CF, Behrens TE, Woolrich MW, Smith SM. Fsl. Neuroimage. 2012;62(2):782–90. doi: 10.1016/j.neuroimage.2011.09.015. [DOI] [PubMed] [Google Scholar]

- 21.Krizhevsky A, Ilya Sutskever, and Hinton GE: Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 2012.

- 22.Lecun Y, Bottou L, Bengio Y, Haffner P: Gradient-based learning applied to document recognition. Proceedings of the IEEE 86(11):2278–324, 1998.

- 23.Ypsilantis PP, Siddique M, Sohn HM, Davies A, Cook G, Goh V, et al.: Predicting response to neoadjuvant chemotherapy with PET imaging using convolutional neural networks. PLoS ONE 10(9), 2015 [DOI] [PMC free article] [PubMed]

- 24.Tsuruoka Y, Ji T, Ananiadou S: Stochastic gradient descent training for L1-regularized log-linear models with cumulative penalty. Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP Association for Computational Linguistics p. 477–85, 2009.

- 25.Keras CF. Github (https://github.com/fchollet/keras), 2015.

- 26.Eckel-Passow JE, Lachance DH, Molinaro AM, Walsh KM, Decker PA, Sicotte H, et al. Glioma groups based on 1p/19q, IDH, and TERT promoter mutations in tumors. New England Journal of Medicine. 2015;372(26):2499–508. doi: 10.1056/NEJMoa1407279. [DOI] [PMC free article] [PubMed] [Google Scholar]