Abstract

Background

Chronic disease patients are often affected by low computer and health literacy, which can limit their ability to benefit from access to online health information.

Objective

To estimate reliability and confirm model specifications for eHealth Literacy Scale (eHEALS) scores among chronic disease patients using Classical Test Theory (CTT) and Item Response Theory (IRT) techniques.

Methods

A stratified sample of Black/African American (N = 341) and Caucasian (N = 343) adults with chronic disease completed an online survey that included the eHEALS. Item discrimination was explored using corrected item-total (bivariate) correlations, and internal consistency using Cronbach’s alpha. A categorical confirmatory factor analysis tested a one-factor structure of eHEALS scores. Item characteristic curves, infit/outfit statistics, the omega coefficient, and item reliability and separation estimates were computed.

Results

A one-factor structure of eHEALS was supported by statistically significant standardized item loadings, acceptable model fit indices (CFI/TLI > 0.90), and 70% of variance explained by the first factor. Average theta increased across item response categories, and there was evidence of acceptable reliability (ω = 0.94; item reliability = 0.89; item separation = 8.54).

Conclusion

eHEALS scores are a valid and reliable measure of self-reported eHealth literacy among Internet-using chronic disease patients.

Practice implications

Providers can use eHEALS to help identify patients’ eHealth literacy skills.

Keywords: eHEALS, eHealth literacy, Internal structure, Validity, Chronic disease

1. Introduction

In the evolving technological era, patients now have online access to personal healthcare information, as well as the ability to communicate with peers and providers through the Internet and mobile devices. Although a rising number of patients living with chronic disease are accessing online health information [1], research suggests many of these patients lack the skills to effectively navigate and identify relevant health information [2]. Individuals who live with chronic disease are considered unique online health information seekers because they search for specialized and sometimes sophisticated health information to learn as much as possible about their condition [3]. Chronic disease patients tend to be older [4], are disproportionately affected by low socioeconomic status [5], experience poor health-related outcomes [6], and report low computer and health literacy [7]. As such, it is critical to understand the knowledge and self-efficacy that chronic disease patients from diverse backgrounds have regarding their ability to access online health information.

eHealth literacy is defined as an individual’s capability to locate, comprehend, and evaluate online health information to make informed health decisions [8]. Chronic disease patients who lack adequate eHealth literacy skills often feel overwhelmed, confused, and frightened when searching for health information online [9], which can lead them to resist using the Internet as a source of health information. Healthcare providers who are aware of their patients’ eHealth literacy skills can better recommend relevant health information resources or train patients to use technology-based resources [9]. Improving patients’ skills in using Internet-based health resources, such as interactive discussion forums and secure messaging platforms [10], may enhance their health-related knowledge, their self-efficacy, and their ability to self-manage their chronic condition(s).

A central theoretical construct of eHealth literacy is self-efficacy, an individual’s belief in his or her own ability to succeed in specific situations or to accomplish a specified task [11]. High self-efficacy and eHealth literacy predict engagement in health-promoting behaviors, such as chronic disease self-management [8,12]. Measuring health seekers’ self-efficacy to successfully locate, understand, and act on web-based health information is particularly important for patients living with chronic disease, because these populations often experience barriers to healthcare access, age-related cognitive decline, and physical immobility [13,14].

Currently, the only instrument specifically designed to measure eHealth literacy is the eHealth Literacy Scale (eHEALS). eHEALS was developed to measure an individual’s confidence in their ability to locate and evaluate health information from static webpages, and to inform the development and recommendation of health programs that align with a patient’s perceived eHealth literacy skill level [15]. Although eHEALS has been used to assess eHealth literacy among patients living with chronic disease [16,17], there is limited evidence about the reliability and validity of eHEALS scores within this population.

van der Vaart and colleagues [18] adapted the original English version of eHEALS into Dutch and reported adequate validity and reliability evidence for eHEALS scores among patients with rheumatic disease (N = 189). However, eHEALS scores correlated only weakly with Internet use, which raised questions about the validity of eHEALS for measuring eHealth literacy among English-speaking patients living with chronic disease [19]. Moreover, that sample was limited to patients living with a single type of chronic disease, and its size did not meet sample-size recommendations for confirmatory factor analysis (CFA) [20]. Given these limitations in previous research administering the eHEALS in chronic disease populations, the purpose of this study was to explore the one-factor structure and reliability of eHEALS scores among a diverse sample of US chronic disease patients who report using the Internet to find health information. This study goes beyond classical measurement approaches [21,22] by applying a higher-level Item Response Theory (IRT) technique to explore the reliability and internal structure of responses to eHEALS items.

2. Methods

2.1. Sample and procedures

Participants were recruited through an opt-in Qualtrics Panel to complete a web-based survey. Participants who completed the survey received a cash-value reward credited to their Qualtrics account. Institutional Review Board approval was obtained prior to data collection. Participants were included in the main analyses if they reported (1) using the Internet or e-mail at least once in the past 12 months and (2) currently living with at least one chronic disease.

2.2. Measures

2.2.1. eHealth literacy

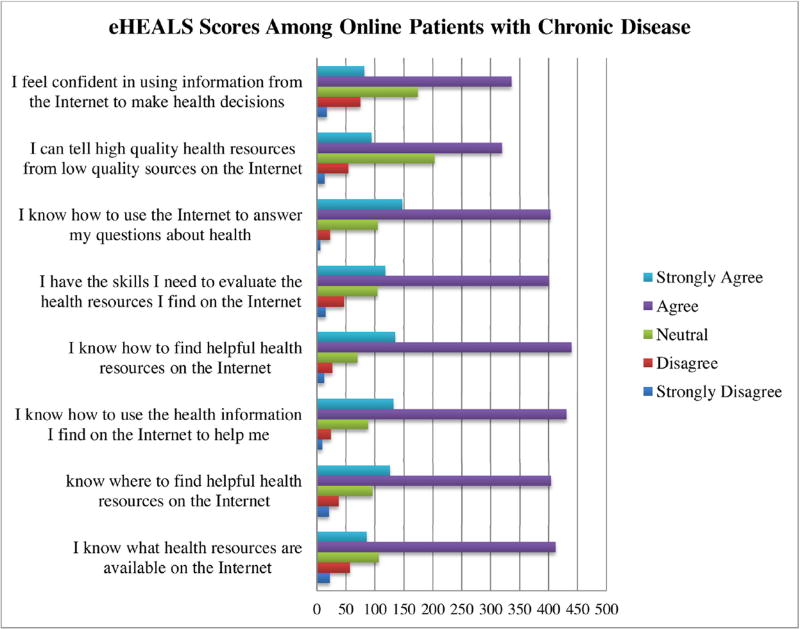

eHealth literacy was measured using the English version of the eHEALS [15], which consists of 8 items rated on a 5-point Likert-type scale ranging from 1 (strongly disagree) to 5 (strongly agree); Fig. 1 shows responses to each item. The items measure an individual’s knowledge of health information resources on the Internet and, more specifically, perceived confidence in the ability to find, evaluate, and use this health information to make informed health decisions [15]. Total eHEALS scores range from 8 to 40, with higher scores indicating greater perceived eHealth literacy.
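As a minimal sketch of the scoring rule described above, the hypothetical Python helper below sums the eight item responses into a total. It assumes complete data coded 1–5; the source does not state how missing responses were handled, so the sketch simply rejects incomplete or out-of-range input rather than imputing anything.

```python
def eheals_total(responses):
    """Sum eight eHEALS item responses (each coded 1-5) into a total score of 8-40.

    Hypothetical helper: missing-data handling is not described in the source,
    so this sketch rejects incomplete or out-of-range input.
    """
    if len(responses) != 8:
        raise ValueError("eHEALS has exactly 8 items")
    for r in responses:
        if r not in (1, 2, 3, 4, 5):
            raise ValueError(f"response out of range 1-5: {r!r}")
    return sum(responses)


print(eheals_total([4] * 8))                         # 32
print(eheals_total([1] * 8), eheals_total([5] * 8))  # 8 40
```

The minimum (8) and maximum (40) follow directly from 8 items times the lowest (1) and highest (5) response codes.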

Fig. 1.

Responses to eHEALS items.

2.2.2. Demographic variables

Demographic variables measured in the survey included: (a) gender (male or female); (b) age (in years); (c) race (White/Caucasian or Black/African American); (d) annual income (less than $20,000, $20,000–$34,999, $35,000–$49,999, $50,000–$74,999, $75,000 or more); and (e) highest level of education (less than a high school degree, high school degree/GED, some college, bachelor’s degree, master’s degree, advanced graduate work). Participants were presented with a list of chronic diseases and asked to select all conditions with which they had been diagnosed: type 2 diabetes, cardiovascular disease, chronic lung disease, arthritis, mental health disorder, cancer, and other chronic disease.

2.3. Data analysis

Descriptive statistics were used to summarize responses to eHEALS items. Internal consistency, item difficulty (item mean), and item discrimination of eHEALS items were computed using SPSS v23 [23,24]. Internal consistency was estimated with Cronbach’s alpha. Item discrimination was assessed with corrected item-total correlations (each item correlated with the sum of the remaining items), which should be 0.30 or higher [25]. Mplus v7.3 [26] was used to conduct a categorical confirmatory factor analysis (CCFA) with the weighted least squares mean- and variance-adjusted (WLSMV) estimator to obtain model fit indices and standardized factor loadings. Good model fit was defined as: (1) a non-significant (p > 0.05) chi-square value, (2) a root mean square error of approximation (RMSEA) below 0.05 [27], and (3) Comparative Fit Index (CFI) and Tucker–Lewis Index (TLI) values above 0.90 [27].

The current study applied a higher-level Item Response Theory (IRT) technique [21,22] to further explore the reliability and internal structure of responses to eHEALS items. The eRm package [28] for R (run in RStudio) was used to fit the partial credit model (PCM), an IRT model for ordered polytomous items. Linacre’s [29] guidelines for optimizing rating scale category effectiveness informed the PCM analyses. Monotonicity, an assumption of the PCM, was evaluated using item characteristic curves (ICCs) to confirm that average theta (i.e., ability level) increases across response categories [29,30]. Step difficulties were examined using category thresholds, which were expected to increase across successive response categories in accordance with higher theta levels [29]. Infit and outfit mean-square (MSQ) values for each item should range from 0.5 to 1.5, and infit t-statistics should range from −2 to 2 [29]. Item separation (which should be 2 or greater) and item reliability (which should be 0.80 or higher) index how well items are spread along the latent continuum, that is, the stability of item placement across patients with chronic disease [31].
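To make the PCM concrete, the sketch below computes category probabilities for a single item from its thresholds, using the standard partial credit formulation in which adjacent categories x − 1 and x are equally likely where theta equals threshold x. The thresholds are hypothetical, and eRm’s internal parameterization (for example, sign or centering conventions for item parameters) may differ from this sketch. The loop shows the monotonic pattern the ICC check looks for: as theta rises, the most probable category moves up one step at a time.

```python
from math import exp


def pcm_probs(theta, thresholds):
    """Partial credit model category probabilities for one item.

    Category x (0..m) has numerator exp(sum over k <= x of (theta - delta_k)),
    with an empty sum (numerator 1) for category 0.
    """
    cum = [0.0]
    for d in thresholds:
        cum.append(cum[-1] + (theta - d))
    nums = [exp(c) for c in cum]
    total = sum(nums)
    return [v / total for v in nums]


# Hypothetical thresholds for a 5-category item (categories coded 0-4, as in Fig. 2).
deltas = [-1.0, 0.0, 1.0, 2.0]
for theta in (-3, -0.5, 0.5, 1.5, 4):
    p = pcm_probs(theta, deltas)
    most_likely = max(range(len(p)), key=p.__getitem__)
    print(theta, most_likely)  # most probable category: 0, 1, 2, 3, 4 in turn
```

Plotting `pcm_probs` over a grid of theta values for each category would give curves of the kind shown in Fig. 2.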

3. Results

3.1. Sample and eHealth literacy description

Of the 811 participants who completed the survey, 648 (79.9%) met inclusion criteria. Participants reported being diagnosed with cardiovascular disease (n = 311; 47.9%), arthritis (n = 131; 20.2%), mental health disorder (n = 181; 27.9%), chronic lung disease (n = 52; 8.1%), and cancer (n = 31; 4.5%). A large proportion reported being diagnosed with another chronic disease not listed (n = 269; 41.5%).

The final sample was mostly female (n = 493; 72.1%), with almost equal representation of Black/African Americans (n = 341; 49.9%) and Caucasians (n = 343; 50.1%). Participants were, on average, 47.24 years old (SD = 17.10 years). Approximately 43.2% (n = 300) of participants earned $50,000 or more annually, and 19.3% (n = 132) earned less than $20,000. Only 3.7% (n = 25) of participants did not complete high school, and more than 70% (n = 500) reported at least some college education.

Fig. 1 shows responses to each eHEALS item. The mean self-reported eHealth literacy score in this sample was 30.34 (SD = 5.30; min = 8, max = 40). For each item, more than 60% of respondents “agreed” or “strongly agreed” with the statement assessing confidence using the Internet for their health. Fewer than 10 respondents “strongly disagreed” with Item 3 (“I know how to use the health information I find on the Internet to help me”; n = 9) and Item 6 (“I know how to use the Internet to answer my questions about health”; n = 6). Because of this limited use of the “strongly disagree” option, Linacre’s [29] guideline of at least 10 observations per response category was not met for these items.
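Checking the observation-count guideline amounts to a frequency count per response category. The helper below is a hypothetical sketch; the response vector is invented for illustration and does not reproduce any eHEALS item.

```python
from collections import Counter


def sparse_categories(responses, categories=range(1, 6), minimum=10):
    """Return {category: count} for response categories used fewer than `minimum` times.

    Categories that never occur are reported with a count of 0.
    """
    counts = Counter(responses)  # missing keys read as 0
    return {c: counts[c] for c in categories if counts[c] < minimum}


# Hypothetical responses to one item (1 = strongly disagree ... 5 = strongly agree).
item = [1] * 6 + [2] * 25 + [3] * 90 + [4] * 310 + [5] * 217
print(sparse_categories(item))  # {1: 6}
```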

3.2. Classical test theory analyses

The internal consistency of data collected using the eHEALS was α = 0.90, which is higher than the minimum recommended value of 0.70. Table 1 shows that item difficulty ranged from 3.57 (SD = 0.92) to 3.97 (SD = 0.76). Item discrimination ranged from 0.61 to 0.78, which is well above the recommended cut-off point of 0.3 [25].

Table 1.

Item Difficulty and Discrimination Statistics.

| eHealth Literacy Item | Item Difficulty, M (SD) | Corrected Item-Total Correlation |
|---|---|---|
| E1. I know what health resources are available on the Internet | 3.71 (0.91) | 0.67 |
| E2. I know where to find helpful health resources on the Internet | 3.84 (0.89) | 0.73 |
| E3. I know how to use the health information I find on the Internet to help me | 3.95 (0.76) | 0.77 |
| E4. I know how to find helpful health resources on the Internet | 3.96 (0.78) | 0.78 |
| E5. I have the skills I need to evaluate the health resources I find on the Internet | 3.82 (0.87) | 0.68 |
| E6. I know how to use the Internet to answer my questions about health | 3.97 (0.76) | 0.71 |
| E7. I can tell high quality health resources from low quality health resources on the Internet | 3.63 (0.88) | 0.64 |
| E8. I feel confident in using information from the Internet to make health decisions | 3.57 (0.92) | 0.61 |

3.3. Factor analysis

In the initial CCFA testing the one-factor model, the standardized loadings for all 8 eHEALS items were statistically significant (p < 0.05) and ranged from 0.70 to 0.91. Although the chi-square test of model fit was statistically significant (p < 0.001), the CFI (0.97) and TLI (0.95) values suggested good fit. However, the RMSEA was 0.18, well above the recommended 0.05 cutoff, signifying poor fit; therefore, subsequent CCFAs explored the fit of two- and three-factor models of eHealth literacy. As shown in Table 2, adequate model fit was achieved with a three-factor model, in which the RMSEA was 0.06 (90% CI = 0.04–0.09), close to the recommended cutoff. Approximately 70% of the variance in eHEALS scores was explained by Factor 1, whereas only 9% and 5% were explained by Factors 2 and 3, respectively. In the three-factor model, Factors 1–3 were highly correlated (r = 0.73–0.80), and standardized factor loadings were statistically significant (p < 0.05), ranging from 0.59 to 1.04. Given these high inter-factor correlations and the dominance of Factor 1, the three factors appear to function together as a unidimensional measure of eHealth literacy [32]. The omega coefficient (ω = 0.94) suggests that eHEALS scores among patients with chronic disease have high reliability.
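For reference, a common form of McDonald’s omega for a one-factor model with standardized loadings and uncorrelated errors is sketched below. The study does not report which model or individual loadings produced ω = 0.94, so the loadings here are hypothetical and the output is not meant to reproduce that value. With ordinal items analyzed via WLSMV, the loadings refer to latent response variables, which is a further assumption of this sketch.

```python
def omega_total(loadings):
    """McDonald's omega for a one-factor model with standardized loadings.

    omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances),
    where each residual variance is 1 - loading^2 (uncorrelated errors assumed).
    """
    common = sum(loadings) ** 2
    unique = sum(1 - lam ** 2 for lam in loadings)
    return common / (common + unique)


# Hypothetical: eight items that all load 0.80 on a single factor.
print(round(omega_total([0.80] * 8), 3))  # 0.934
```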

Table 2.

Fitting eHEALS Scores in 1, 2, and 3 Factors with a Confirmatory Factor Analysis.

| Model Fit Statistic | 1 Factor | 2 Factors | 3 Factors |
|---|---|---|---|
| Chi-square value | 439.48 | 152.35 | 25.02 |
| Chi-square degrees of freedom | 20 | 13 | 7 |
| Chi-square p-value | <0.001 | <0.001 | <0.001 |
| RMSEA estimate (90% CI) | 0.18 (0.16–0.19) | 0.13 (0.11–0.14) | 0.06 (0.04–0.09) |
| CFI | 0.97 | 0.99 | 0.99 |
| TLI | 0.95 | 0.98 | 0.99 |
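As an arithmetic check on Table 2, the sketch below recomputes the RMSEA point estimates from the reported chi-square values with the common formula RMSEA = √(max(χ² − df, 0) / (df(N − 1))), taking N = 648 (the number reported as meeting inclusion criteria). Mplus computes RMSEA from the WLSMV-adjusted chi-square and may use N rather than N − 1; at this sample size the rounded results are the same. The degrees of freedom in the table also coincide with the standard count for an unrestricted m-factor model of p = 8 indicators, df = ((p − m)² − (p + m))/2, which the `efa_df` helper reproduces.

```python
from math import sqrt


def efa_df(p, m):
    """Degrees of freedom of an unrestricted m-factor model for p indicators."""
    return ((p - m) ** 2 - (p + m)) // 2


def rmsea(chi2, df, n):
    """RMSEA point estimate from a model chi-square (common N - 1 form)."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


TABLE2_CHI2 = {1: 439.48, 2: 152.35, 3: 25.02}  # chi-square values from Table 2
N = 648  # participants reported as meeting inclusion criteria

for m, chi2 in TABLE2_CHI2.items():
    df = efa_df(8, m)
    print(m, df, round(rmsea(chi2, df, N), 2))
# prints: 1 20 0.18 / 2 13 0.13 / 3 7 0.06
```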

3.4. Partial credit model (PCM)

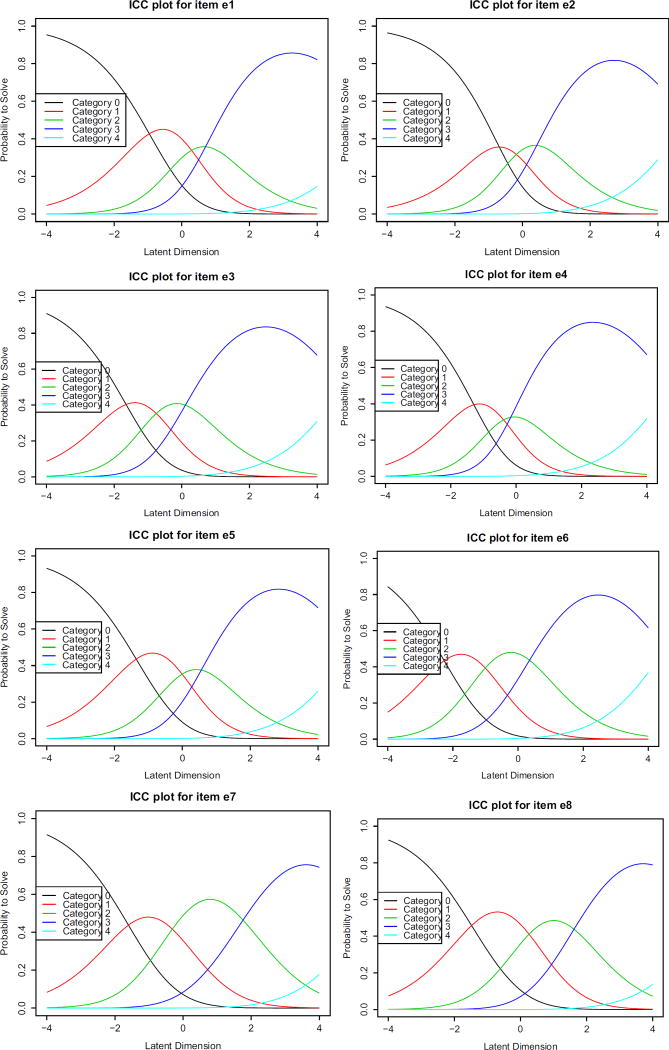

Fig. 2 presents the ICCs, which plot the probability of selecting each response category as a function of the underlying latent dimension (theta). For all items, the average theta increased across response categories, suggesting that monotonicity of response options holds for each item. Respondents with a lower theta (theta < 0) had a greater probability of selecting “strongly disagree” or “disagree” for all eHEALS items. Likewise, respondents with a higher theta (theta > 0) had a higher probability of selecting “strongly agree” for Items 2, 3, 4, and 6.

Fig. 2.

eHEALS ICCs exploring probability of response categories by latent variable.

Note: Category 0 = Strongly Disagree; Category 1 = Disagree; Category 2 = Neutral; Category 3 = Agree; Category 4 = Strongly Agree.

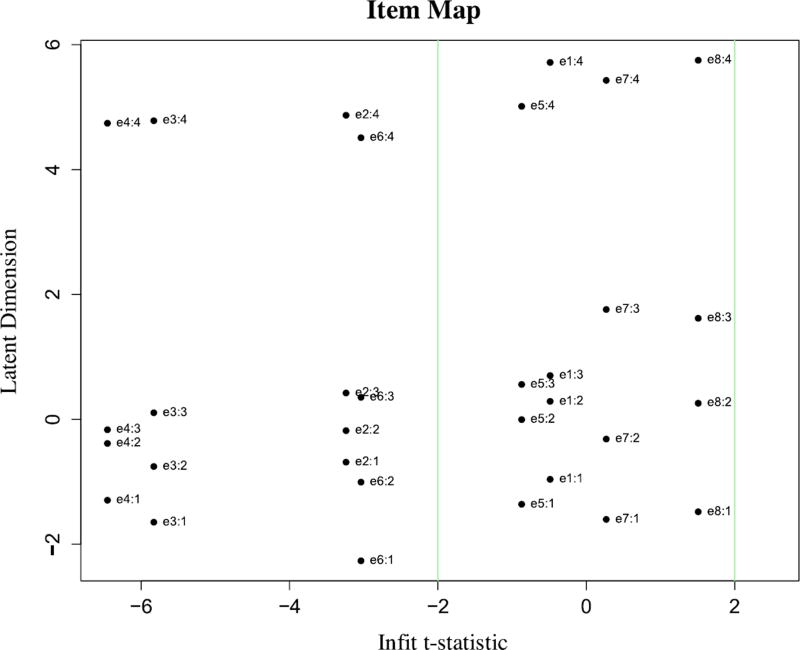

Table 3 shows that threshold values increased across response categories for each item, suggesting that higher response categories corresponded with higher theta levels and adequate step difficulty in each item. Table 4 shows that infit and outfit MSQ values for all eHEALS items were within the recommended range of 0.5 to 1.5. However, Table 4 and Fig. 3 show that Items 2, 3, 4, and 6 had infit t-statistics below −2, outside the recommended range of −2 to 2; together with MSQ values below 1, this suggests overfit (responses more predictable than the model expects) for these items. Item reliability was 0.89 and item separation was 8.54, indicating high reliability and stability of items on the latent continuum.

Table 3.

Threshold Values of Response Options for eHEALS Items.

| eHealth Literacy Item | Location | Threshold 1 | Threshold 2 | Threshold 3 | Threshold 4 |
|---|---|---|---|---|---|
| E1. I know what health resources are available on the Internet | 1.44 | −0.96 | 0.29 | 0.70 | 5.72 |
| E2. I know where to find helpful health resources on the Internet | 1.11 | −0.69 | −0.18 | 0.42 | 4.87 |
| E3. I know how to use the health information I find on the Internet to help me | 0.62 | −1.65 | −0.76 | 0.11 | 4.78 |
| E4. I know how to find helpful health resources on the Internet | 0.72 | −1.29 | −0.39 | −0.17 | 4.74 |
| E5. I have the skills I need to evaluate the health resources I find on the Internet | 1.05 | −1.36 | 0.00 | 0.56 | 5.01 |
| E6. I know how to use the Internet to answer my questions about health | 0.40 | −2.27 | −1.00 | 0.35 | 4.51 |
| E7. I can tell high quality health resources from low quality health resources on the Internet | 1.32 | −1.60 | −0.32 | 1.76 | 5.43 |
| E8. I feel confident in using information from the Internet to make health decisions | 1.54 | −1.48 | 0.26 | 1.62 | 5.75 |
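Two statements about Table 3 can be checked directly from the transcribed values: that thresholds increase across categories within each item, and that each item’s Location equals the mean of its four thresholds to within rounding. The second is an arithmetic observation consistent with defining a PCM item’s location as its mean threshold; it is not stated in the source. The tolerance of 0.006 is an assumption chosen to allow for two-decimal rounding plus floating-point error.

```python
# Thresholds (tau1..tau4) and reported Location, transcribed from Table 3.
TABLE3 = {
    "E1": ([-0.96, 0.29, 0.70, 5.72], 1.44),
    "E2": ([-0.69, -0.18, 0.42, 4.87], 1.11),
    "E3": ([-1.65, -0.76, 0.11, 4.78], 0.62),
    "E4": ([-1.29, -0.39, -0.17, 4.74], 0.72),
    "E5": ([-1.36, 0.00, 0.56, 5.01], 1.05),
    "E6": ([-2.27, -1.00, 0.35, 4.51], 0.40),
    "E7": ([-1.60, -0.32, 1.76, 5.43], 1.32),
    "E8": ([-1.48, 0.26, 1.62, 5.75], 1.54),
}


def check_item(taus, location, tol=0.006):
    """Return (thresholds strictly increase?, location matches mean threshold within tol?)."""
    ordered = all(a < b for a, b in zip(taus, taus[1:]))
    mean_tau = sum(taus) / len(taus)
    return ordered, abs(mean_tau - location) <= tol


for item, (taus, loc) in TABLE3.items():
    print(item, *check_item(taus, loc))
```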

Table 4.

Infit and Outfit MSQ and T-Statistic Values.

| eHealth Literacy Item | p-value | Infit MSQ | Outfit MSQ | Infit t-Statistic | Outfit t-Statistic |
|---|---|---|---|---|---|
| E1. I know what health resources are available on the Internet | 0.14 | 0.97 | 1.06 | −0.50 | 0.78 |
| E2. I know where to find helpful health resources on the Internet | 1.00 | 0.79 | 0.76 | −3.24 | −3.52 |
| E3. I know how to use the health information I find on the Internet to help me | 1.00 | 0.64 | 0.58 | −5.83 | −6.68 |
| E4. I know how to find helpful health resources on the Internet | 1.00 | 0.59 | 0.53 | −6.45 | −7.04 |
| E5. I have the skills I need to evaluate the health resources I find on the Internet | 0.98 | 0.94 | 0.89 | −0.87 | −1.61 |
| E6. I know how to use the Internet to answer my questions about health | 1.00 | 0.81 | 0.73 | −3.04 | −4.26 |
| E7. I can tell high quality health resources from low quality health resources on the Internet | 0.36 | 1.02 | 1.02 | 0.27 | 0.31 |
| E8. I feel confident in using information from the Internet to make health decisions | 0.002 | 1.09 | 1.17 | 1.51 | 2.66 |
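The sketch below shows how infit and outfit mean squares are conventionally computed for a polytomous Rasch-family item: outfit is the unweighted mean of squared standardized residuals, and infit weights squared residuals by the model variance. It is a hypothetical illustration, not eRm’s implementation: person measures are treated as known (in practice they are estimated), and the standardized t-statistics reported in Table 4 are transformations of these mean squares that are not computed here.

```python
from math import exp


def pcm_probs(theta, thresholds):
    """PCM category probabilities (categories 0..m) for one person-item pair."""
    cum = [0.0]
    for d in thresholds:
        cum.append(cum[-1] + (theta - d))
    nums = [exp(c) for c in cum]
    total = sum(nums)
    return [v / total for v in nums]


def item_fit(responses, thetas, thresholds):
    """Infit and outfit mean squares for one item.

    responses: observed categories (0..m); thetas: person measures, treated as known.
    """
    sq_resid, variances, z_sq = [], [], []
    for x, theta in zip(responses, thetas):
        p = pcm_probs(theta, thresholds)
        expected = sum(k * pk for k, pk in enumerate(p))
        variance = sum((k - expected) ** 2 * pk for k, pk in enumerate(p))
        sq_resid.append((x - expected) ** 2)
        variances.append(variance)
        z_sq.append((x - expected) ** 2 / variance)
    outfit = sum(z_sq) / len(z_sq)          # unweighted mean of squared standardized residuals
    infit = sum(sq_resid) / sum(variances)  # information-weighted mean square
    return infit, outfit


# Sanity check with a single threshold (dichotomous case): both values equal 1.0.
print(item_fit([1, 0], [0.0, 0.0], [0.0]))  # (1.0, 1.0)
# Perfectly predictable hypothetical responses: both values are about 0.14.
print([round(v, 2) for v in item_fit([0, 0, 1, 1], [-2, -2, 2, 2], [0.0])])  # [0.14, 0.14]
```

Values near 1 indicate responses consistent with the model; values below 1, as in the second example, indicate responses more predictable than the model expects (overfit), and values above 1 indicate unexpected noise (underfit).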

Fig. 3.

Item map of infit t-statistic and latent dimension.

4. Discussion and conclusion

Findings of this study support the intended use of eHEALS to measure self-reported eHealth literacy among chronic disease patients. Consistent with studies of eHEALS scores in other populations [15,18,33], our findings indicate that eHEALS is a one-factor measure of eHealth literacy. Overall, results from the CTT, CFA, and PCM procedures in this study support the internal structure and reliability of eHEALS scores among Internet-using adults living with at least one chronic disease. Chronic disease patients with higher eHealth literacy were more likely to “agree” or “strongly agree” that they have the self-efficacy and knowledge to locate, understand, and act upon health information from electronic sources, including the Internet.

ICCs from the PCM analysis supported retaining all eHEALS response options, because each option’s curve was the most probable at some point on the latent continuum. However, participants in the study were most likely to select the response option “strongly agree” across all items. This potentially positive finding suggests that patients with chronic disease generally feel confident to find, appraise, and apply health information from electronic sources to address their health problems. Fully labeled 5-point scales, such as the one used in the eHEALS, maximize the reliability and interpretability of data [34] and are less likely to elicit an extreme response style [35]. However, patients with chronic disease who have low computer and media literacy may be more prone to certain types of survey response bias, choosing response options that do not reflect their self-efficacy beliefs. To obtain a more precise measure of eHealth literacy, future research should explore which response options are most appropriate to include on the eHEALS.

Future psychometric research on the eHEALS should also incorporate user-centered research methods, such as cognitive interviews, behavioral observations, and computer adaptive testing (CAT). Cognitive interviews that explore how chronic disease patients respond to different eHEALS items may provide guidance on the optimal number of response options. Behavioral observations of eHealth literacy skills are also needed, especially for patients using various digital devices to access health information. van der Vaart et al. [18] previously used web-based health information retrieval assignments to assess the validity of eHEALS scores in adults and found non-significant relationships between eHEALS scores and these behavioral measures of eHealth literacy. These negative findings underscore the need to establish the concurrent validity of self-report and behavioral measures of eHealth literacy in people living with chronic disease. Finally, integrating CAT into the eHEALS could prove especially useful, because a CAT approach tailors items to each user’s eHealth literacy level. Administering the eHEALS with CAT would likely strengthen its precision and sensitivity while maintaining its brevity and usefulness in practical environments, both online and offline [36]. Researchers who adopt these user-centered approaches to evaluating the psychometric properties of the self-reported eHEALS are likely to generate unique insights into the scale’s strengths and weaknesses.

The current study is not without limitations. For example, we did not examine how eHEALS scores among chronic disease patients predict future health information seeking or engagement in protective health behaviors. Future research among individuals with chronic disease should explore convergent and divergent validity evidence by examining relationships between eHEALS scores and frequency of Internet use, engagement in health promoting behaviors, and other patient-centered health outcomes.

4.1. Practical implications

eHEALS is a brief, 8-item scale that can be easily integrated into a variety of clinical settings and contexts to identify a patient’s self-reported eHealth literacy level [15]. Health care providers can build rapport and mutual understanding with their patients by learning about their eHealth literacy skills and gauging their interest in learning about disease management using the Internet [8]. Providers who are aware of their patients’ eHealth literacy skills can better prescribe or recommend online resources that promote understanding of a specific condition or recommended health behavior. Moreover, providers who identify patients with low eHealth literacy can recommend eHealth literacy training programs or services [37]. Offering these trainings to patients who are willing to participate could help them gain the skills necessary to access and benefit from high-quality health information on the Internet. Findings from this study support the use of eHEALS as part of a screening instrument to help identify patients’ eHealth literacy skills and as a diagnostic tool that providers can use to recommend high-quality online resources.

Because of the rising adoption and popularity of social media, online health information is becoming increasingly dynamic and interactive. Prior work has recommended adapting and updating the eHEALS to include items that better assess the dynamic and participatory nature of online information sharing [19]. Future research should adapt and/or build on the eHEALS to measure more interactive eHealth literacies, such as consumers’ propensity to seek out health information retrieved, created, or curated from social media platforms. For example, interactive eHealth literacy among patients with chronic obstructive pulmonary disease (COPD) could be measured by assessing their skills in building online relationships with other users by contributing to message boards, as well as in formulating questions and sharing information germane to COPD-related complications.

4.2. Conclusion

This study used a combination of advanced measurement theories and techniques to evaluate the internal structure and reliability of eHEALS scores among a stratified sample including Black/African American adults living with chronic disease, a population underrepresented in eHealth research. Results suggest that eHEALS scores among patients living with chronic disease are reliable and represent one overall construct of eHealth literacy. Healthcare providers can use eHEALS to identify which of their patients are in need of training to enhance their eHealth literacy. Overall, findings from this study support the use of eHEALS to measure self-reported eHealth literacy in populations living with chronic disease.

Acknowledgments

Funding

This work was supported by the STEM Translational Communication Center and the Graduate Division of the University of Florida’s College of Journalism and Communications, Gainesville, FL.

Research reported in this publication was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under Award Number F31HL132463. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1. Pew Internet & American Life Project. Chronic disease and the Internet. [accessed 03.18.16]; http://www.pewinternet.org/2010/03/24/chronic-disease-and-the-internet/

- 2. Lee K, Hoti K, Hughes JD, Emmerton LM. Consumer use of Dr. Google: a survey on health information-seeking behaviors and navigational needs. J. Med. Internet Res. 2015;17:e288. doi: 10.2196/jmir.4345.

- 3. McCray AT. Promoting health literacy. J. Am. Med. Inf. Assoc. 2005;12:152–163. doi: 10.1197/jamia.M1687.

- 4. Office of Disease Prevention and Health Promotion. Older adults. [accessed 03.18.16]; https://www.healthypeople.gov/2020/topics-objectives/topic/older-adults.

- 5. Mielck A, Vogelmann M, Leidl R. Health-related quality of life and socioeconomic status: inequalities among adults with a chronic disease. Health Qual. Life Outcomes. 2014;12:58. doi: 10.1186/1477-7525-12-58.

- 6. Parekh AK, Goodman RA, Gordon C, Koh HK. Managing multiple chronic conditions: a strategic framework for improving health outcomes and quality of life. Publ. Health Rep. 2011;126:460–471. doi: 10.1177/003335491112600403.

- 7. Egbert N, Nanna KM. Health literacy: challenges and strategies. Online J. Issues Nurs. 2009;14.

- 8. Norman CD, Skinner HA. eHealth literacy: essential skills for consumer health in a networked world. J. Med. Internet Res. 2006;8:e9. doi: 10.2196/jmir.8.2.e9.

- 9. Fox S. E-patients with a disability or chronic disease. [accessed 03.18.16]; http://www.pewinternet.org/files/old-media/Files/Reports/2007/EPatients_Chronic_Conditions_2007.pdf.

- 10. Haun JN, Patel NR, Lind JD, Antinori N. Large-scale survey findings inform patients’ experiences in using secure messaging to engage in patient-provider communication and self-care management: a qualitative assessment. J. Med. Internet Res. 2015;17:e282. doi: 10.2196/jmir.5152.

- 11. Bandura A. Self-efficacy. In: Ramachandran VS, editor. New York: 1994.

- 12. Clark NM, Dodge JA. Exploring self-efficacy as a predictor of disease management. Health Educ. Behav. 1999;26:72–89. doi: 10.1177/109019819902600107.

- 13. Chang BL, Bakken S, Brown SS, Houston TK, Kreps GL, Kukafka R, Safran C, Stavri PZ. Bridging the digital divide: reaching vulnerable populations. J. Am. Med. Inf. Assoc. 2004;11:448–457. doi: 10.1197/jamia.M1535.

- 14. Neter E, Brainin E. eHealth literacy: extending the digital divide to the realm of health information. J. Med. Internet Res. 2012;14:e19. doi: 10.2196/jmir.1619.

- 15. Norman CD, Skinner HA. eHEALS: the eHealth literacy scale. J. Med. Internet Res. 2006;8:e27. doi: 10.2196/jmir.8.4.e27.

- 16. Marrie RA, Salter A, Tyry T, Fox RJ, Cutter GR. Health literacy association with behaviors and healthcare utilization in multiple sclerosis: a cross-sectional study. Interact. J. Med. Res. 2014;3:e3. doi: 10.2196/ijmr.2993.

- 17. Tennant B, Stellefson M, Dodd V, Chaney B, Chaney D, Paige S, Alber J. eHealth literacy and web 2.0 health information seeking behaviors among baby boomers and older adults. J. Med. Internet Res. 2015;17:e70. doi: 10.2196/jmir.3992.

- 18. van der Vaart R, van Deursen AJAM, Drossaert CHC, Taal E, van Dijk JAMG, van de Laar MAFJ. Does the eHealth literacy scale (eHEALS) measure what it intends to measure? Validation of a Dutch version of the eHEALS in two adult populations. J. Med. Internet Res. 2011;13:e86. doi: 10.2196/jmir.1840.

- 19. Norman C. eHealth literacy 2.0: problems and opportunities with an evolving concept. J. Med. Internet Res. 2011;13:e125. doi: 10.2196/jmir.2035.

- 20. MacCallum RC, Widaman KF, Preacher KJ, Hong S. Sample size in factor analysis: the role of model error. Multivar. Behav. Res. 2001;36:611–637. doi: 10.1207/S15327906MBR3604_06.

- 21. Mitsutake S, Shibata A, Ishii K, Oka K. Developing Japanese version of the eHealth literacy scale (eHEALS). Nihon Koshu Eisei Zasshi. 2011;58:361–371.

- 22. Paramio PG, Almagro BJ, Hernando GA, Aguaded GJI. Validation of the eHealth literacy scale (eHEALS) in Spanish university students. Rev. Esp. Salud Publica. 2015;89:329–338. doi: 10.4321/S1135-57272015000300010.

- 23. Crocker L, Algina J. Introduction to classical and modern test theory. New York: 1986.

- 24. IBM. SPSS Software. [accessed 03.18.16]; http://www-01.ibm.com/software/analytics/spss/

- 25. Pallant J. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using SPSS for Windows. Maidenhead: 2007.

- 26. Mplus. Mplus at a glance. [accessed 03.18.16]; https://www.statmodel.com/glance.shtml.

- 27. Hu L, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equat. Model. 1999;6:1–55.

- 28. Mair P, Hatzinger R. Estimation of partial credit models: Inside R. [accessed 03.18.16]; http://www.inside-r.org/packages/cran/eRm/docs/PCM.

- 29. Linacre JM. Optimizing rating scale category effectiveness. J. Appl. Meas. 2002;3:85–106.

- 30. Allen MJ, Yen WM. Introduction to measurement theory. Long Grove: 1979.

- 31. Fox CM, Jones JA. Uses of Rasch modeling in counseling psychology research. J. Couns. Psychol. 1998;45:30–45.

- 32. Abdi H. Factor rotations. In: Lewis-Beck M, Bryman A, editors. Encyclopedia for Research Methods for the Social Sciences. Thousand Oaks: 2003.

- 33. Chung SY, Nahm ES. Testing reliability and validity of the eHealth literacy scale (eHEALS) for older adults recruited online. Comput. Inform. Nurs. 2015;33:150–156. doi: 10.1097/CIN.0000000000000146.

- 34. Van Vaerenbergh Y, Thomas TD. Response styles in survey research: a literature review of antecedents, consequences, and remedies. Int. J. Publ. Opin. Res. 2012;25:195–217.

- 35. Moors G, Kieruj ND, Vermunt JK. The effect of labeling and numbering of response scales on the likelihood of response bias. Sociol. Methodol. 2014;44:369–399.

- 36. Revicki DA, Cella DF. Health status assessment for the twenty-first century: item response theory, item banking and computer adaptive testing. Qual. Life Res. 1997;6:595–600. doi: 10.1023/a:1018420418455.

- 37. Watkins I, Xie B. eHealth literacy interventions for older adults: a systematic review of the literature. J. Med. Internet Res. 2014;16:e225. doi: 10.2196/jmir.3318.