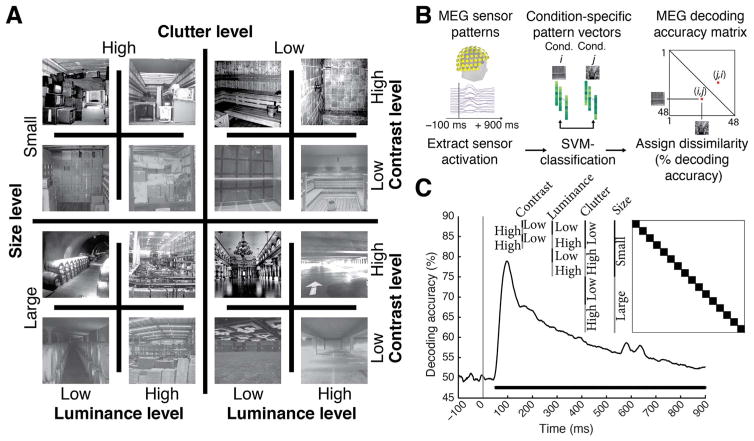

Fig. 1. Image set and single-image decoding.

A) The stimulus set comprised 48 indoor scene images that differed in the size of the space depicted (small vs. large) and in clutter, contrast, and luminance level; here, one image exemplifies each combination of experimental factors. The image set was based on behaviorally validated scene images differing in size and clutter level, with the factors size and clutter explicitly de-correlated by experimental design (Park et al., 2015). Note that size refers to the real-world size of the space depicted in the image, not to the stimulus parameters; all images subtended 8° of visual angle during the experiment. B) Time-resolved (1 ms steps from −100 to +900 ms relative to stimulus onset) pairwise support vector machine classification of experimental conditions based on MEG sensor-level patterns. Classification results were stored in time-resolved 48×48 MEG decoding matrices. C) Decoding results for single-scene classification independent of other experimental factors. Decoding results were averaged across the dark blocks (matrix inset) to control for differences in luminance, contrast, clutter level, and scene size. The inset shows the indexing of the matrix by image condition. The horizontal line below the curve indicates significant time points (n=15, cluster-definition threshold P < 0.05, corrected significance level P < 0.05); the gray vertical line indicates image onset.
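The time-resolved pairwise classification described in panel B can be illustrated with a minimal sketch on synthetic data. It assumes NumPy and scikit-learn are available; the function name `pairwise_decoding`, the array layout, the linear kernel, the 4-fold cross-validation, and all data sizes are illustrative assumptions, not the settings used in the study, which the caption does not specify.

```python
from itertools import combinations

import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC


def pairwise_decoding(data, n_folds=4):
    """Pairwise SVM decoding at every time point (hypothetical helper).

    data: array of shape (n_conditions, n_trials, n_sensors, n_times).
    Returns an array of shape (n_times, n_conditions, n_conditions) holding
    cross-validated accuracy in percent; the diagonal is left as NaN.
    """
    n_cond, n_trials, _, n_times = data.shape
    matrices = np.full((n_times, n_cond, n_cond), np.nan)
    labels = np.repeat([0, 1], n_trials)  # first condition = 0, second = 1
    for t in range(n_times):
        for i, j in combinations(range(n_cond), 2):
            # Sensor patterns of both conditions at this time point.
            X = np.vstack([data[i, :, :, t], data[j, :, :, t]])
            # Assumed scheme: linear SVM with stratified k-fold cross-validation.
            acc = cross_val_score(SVC(kernel="linear"), X, labels, cv=n_folds).mean()
            matrices[t, i, j] = matrices[t, j, i] = 100.0 * acc
    return matrices


# Synthetic stand-in for MEG data: 4 conditions, 20 trials, 10 sensors, 6 time points.
rng = np.random.default_rng(0)
n_cond, n_trials, n_sensors, n_times = 4, 20, 10, 6
data = rng.normal(size=(n_cond, n_trials, n_sensors, n_times))

# Add a condition-specific sensor pattern to the last three time points only,
# mimicking a stimulus-evoked signal that appears after onset.
patterns = rng.normal(size=(n_cond, n_sensors))
data[:, :, :, 3:] += 2.0 * patterns[:, None, :, None]

matrices = pairwise_decoding(data)
# Average over all condition pairs (NaN diagonal ignored) for a single time course.
curve = np.nanmean(matrices, axis=(1, 2))
print(np.round(curve, 1))
```

Applied to the design in the caption, the same loop would cover 48 × 47 / 2 = 1128 condition pairs at each of the 1001 time points from −100 to +900 ms, yielding one 48×48 decoding matrix per time point. The curve in panel C would then average only a selected subset of matrix cells rather than all pairs as done here.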