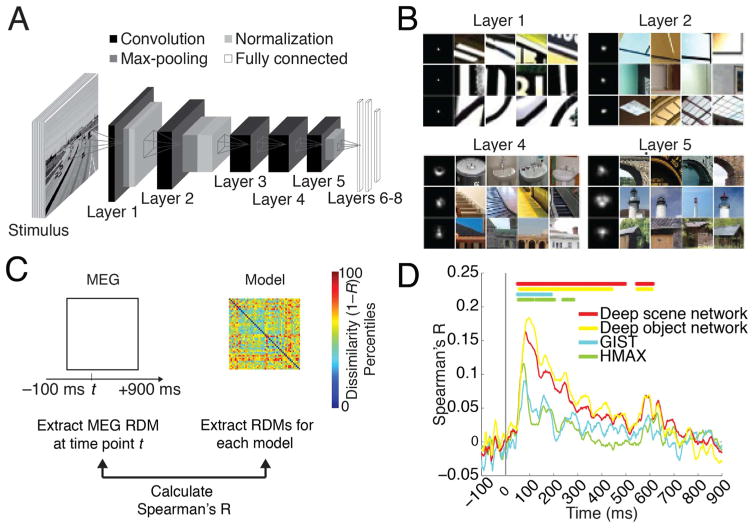

Fig. 3. Predicting emerging neural representations of single scene images by computational models.

A) Architecture of the deep convolutional neural network trained on scene categorization (deep scene network). B) Receptive fields (RFs) of example deep scene neurons in layers 1, 2, 4, and 5. Each row represents one neuron. The left column indicates the size of the RF, and the remaining columns show the image patches that most strongly activated the neuron. Lower layers had small RFs with simple Gabor filter-like sensitivity, whereas higher layers had increasingly large RFs sensitive to complex forms. RFs for whole objects, texture, and surface layout information emerged although these features were not explicitly taught to the deep scene model. C) We used representational dissimilarity analysis to compare visual representations in brains with those in models. For every time point, we compared subject-specific MEG RDMs to model RDMs (Spearman’s R) and averaged the results across subjects. D) All investigated models significantly predicted emerging visual representations in the brain, with superior performance for the deep neural networks compared to HMAX and GIST. Horizontal lines indicate significant time points (n = 15, cluster-definition threshold P < 0.05, corrected significance level P < 0.05); gray vertical line indicates image onset.
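
The comparison described in panel C can be sketched roughly as follows. This is a minimal illustration and not the authors' pipeline: the function names, the nested-list data layout (`meg_rdms[subject][time]`), and the choice to correlate only the upper triangle of each RDM are assumptions made for the example.

```python
from statistics import mean


def upper_triangle(rdm):
    """Flatten the entries above the diagonal of a square RDM (assumed convention)."""
    n = len(rdm)
    return [rdm[i][j] for i in range(n) for j in range(i + 1, n)]


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean rank of the tied group
        for k in order[start:end + 1]:
            ranks[k] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's R: Pearson correlation computed on ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def rdm_time_course(meg_rdms, model_rdm):
    """Correlate each subject's MEG RDM with the model RDM at every time
    point, then average the correlations across subjects."""
    model_vec = upper_triangle(model_rdm)
    n_times = len(meg_rdms[0])
    return [
        mean(spearman(upper_triangle(subject[t]), model_vec) for subject in meg_rdms)
        for t in range(n_times)
    ]
```

Calling `rdm_time_course` with one model RDM and a list of per-subject RDM sequences returns one subject-averaged correlation per time point, which corresponds to a single curve of the kind plotted in panel D. The significance testing mentioned in the caption is not covered by this sketch.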