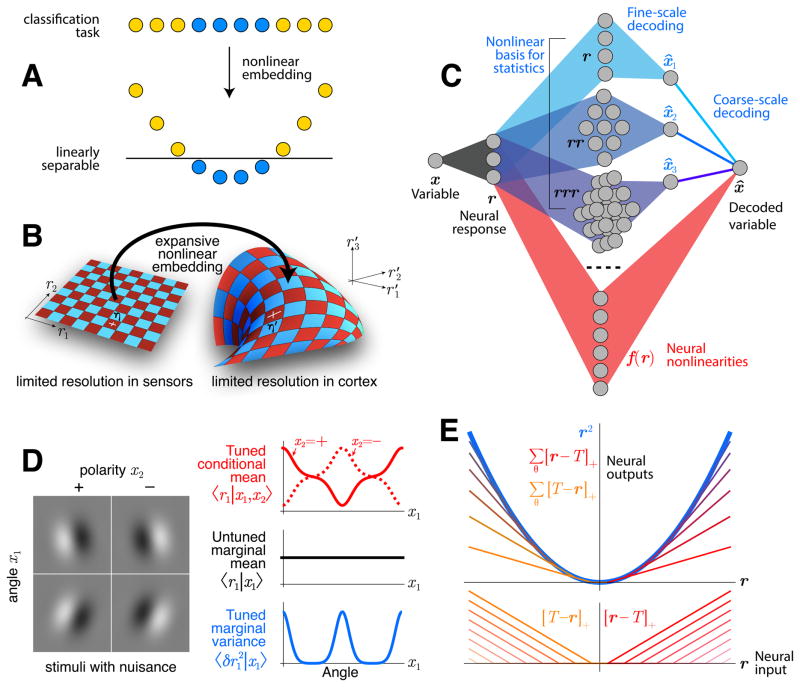

Figure 2. Nonlinearity and redundancy in neural computation.

A: The task of separating yellow from blue dots cannot be accomplished by linear operations, because the task-relevant variable (color) is entangled with a nuisance variable (horizontal position). After the data are embedded nonlinearly into a higher-dimensional space, the task-relevant variable becomes linearly separable. B: Signals from the sensory periphery have limited information content, illustrated here by a cartoon tiling of an abstract neural response space $\mathbf{r} = (r_1, r_2)$. Each square represents the resolution at which responses can be reliably discriminated, a precision determined by the noise $\eta'$. When sensory signals are embedded into a higher-dimensional space $\mathbf{r}' = (r'_1, r'_2, r'_3)$, the noise is transformed in the same way as the signals. This produces a redundant population code with high-order correlations. C: Such a code is redundant, with many copies of the same information in different neural response dimensions. Consequently, there are many ways to accomplish a given nonlinear transformation of the encoded variable, so not all details of the neural transformations (red) matter. For this reason, it is advantageous to model the more abstract, representational level at which the nonlinearity affects the information content. Here, a basis of simple nonlinearities (e.g., polynomials; bluish curves) can be a convenient representation for these latent variables. The relative weighting of different coarse types of nonlinearity may be more revealing than the fine details, as long as the different fine-scale readouts are dominated by information-limiting noise. D: A simple example showing that nuisance variation makes nonlinear computation necessary for the brain. Linear computation can discriminate the angle $x_1$ of Gabor images $\mathbf{I}$ with a fixed polarity $x_2 = \pm 1$, because the nuisance-conditional mean of the linear function $r = \mathbf{w} \cdot \mathbf{I}$ is tuned to angle (red). If the polarity is unknown, however, the mean is not tuned (black).
Tuning is recovered in the variance (blue), since for some angles the two polarities produce large responses of opposite sign (plus and minus). Quadratic statistics $T(r) = r^2$ therefore encode the latent variable $x_1$. E: A quadratic transformation $r^2$ can be approximated by the sum of several rectified linear functions $[\cdot]_+$ with uniformly spaced thresholds $T$.
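The logic of panels D and E can be checked numerically. Below is a minimal pure-Python sketch built on a hypothetical toy stimulus with these assumed properties:

- Gabor images sit on a 17×17 pixel grid.
- The linear filter `w` is itself a Gabor at preferred angle 0.
- The two polarities are equally likely.

The grid size, envelope width, spatial frequency, threshold spacing and the helper names (`gabor`, `relu_square`, `polarity_statistics`) are illustrative choices, not taken from the figure. The sketch shows three things. The polarity-averaged mean of $r = \mathbf{w} \cdot \mathbf{I}$ is zero at every angle. The second moment $\mathrm{E}[r^2]$ is tuned to angle. A sum of rectified linear functions with uniformly spaced thresholds reproduces that quadratic statistic.

```python
import math

# Hypothetical stimulus parameters (assumptions for illustration only).
HALF = 8                      # image spans pixels -HALF..HALF in x and y
SIGMA = 3.0                   # Gaussian envelope width, in pixels
WAVENUM = 2 * math.pi / 5     # carrier spatial frequency, radians per pixel


def gabor(angle, polarity=1.0):
    """Flattened Gabor image I at orientation `angle`; polarity x2 = +1 or -1 flips its sign."""
    pixels = []
    for y in range(-HALF, HALF + 1):
        for x in range(-HALF, HALF + 1):
            envelope = math.exp(-(x * x + y * y) / (2 * SIGMA ** 2))
            carrier = math.cos(WAVENUM * (x * math.cos(angle) + y * math.sin(angle)))
            pixels.append(polarity * envelope * carrier)
    return pixels


# Assumed linear filter w: a Gabor template at preferred angle 0.
W = gabor(0.0)


def linear_response(image):
    """Linear function r = w . I."""
    return sum(w_i * i_i for w_i, i_i in zip(W, image))


def relu(z):
    """Rectified linear function [z]_+."""
    return max(z, 0.0)


def relu_square(r, step=0.5, n_thresholds=40):
    """Approximate r**2 by a weighted sum of rectified linear functions.

    Thresholds are uniformly spaced at T_k = (k + 1/2) * step and mirrored
    for negative r. The result equals r**2 at integer multiples of step and
    underestimates it by at most step**2 / 4 for |r| <= n_thresholds * step.
    """
    total = 0.0
    for k in range(n_thresholds):
        threshold = (k + 0.5) * step
        total += relu(r - threshold) + relu(-r - threshold)
    return 2 * step * total


def polarity_statistics(angle):
    """Statistics of r averaged over the nuisance polarity x2 = +/-1 (equally likely)."""
    responses = [linear_response(gabor(angle, p)) for p in (1.0, -1.0)]
    mean = sum(responses) / 2                              # untuned: polarities cancel
    second_moment = sum(r * r for r in responses) / 2      # tuned quadratic statistic
    relu_moment = sum(relu_square(r) for r in responses) / 2
    return mean, second_moment, relu_moment


if __name__ == "__main__":
    for degrees in (0, 30, 60, 90):
        m, s, q = polarity_statistics(math.radians(degrees))
        print(f"angle {degrees:3d} deg: mean r = {m:+.3f}, "
              f"E[r^2] = {s:8.3f}, ReLU approx = {q:8.3f}")
```

The half-step offset of the thresholds is a design choice. With it, on each interval around a multiple $m\Delta$ of the spacing $\Delta$, exactly $m$ rectified units are active. The piecewise-linear sum is therefore the tangent line of $r^2$ at $m\Delta$, which bounds the error by $\Delta^2/4$. Other spacings or offsets would also work, with a slightly different error profile.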