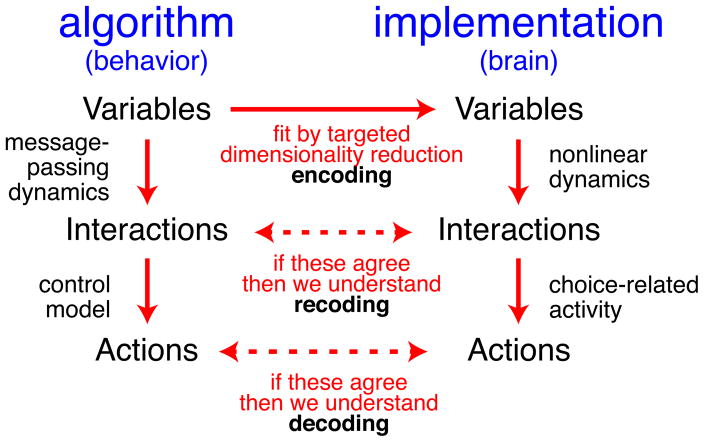

Figure 3. Schematic for understanding distributed codes.

This figure sketches how behavioral models (left), which predict latent variables, interactions, and actions, should be compared against neural representations of those same features (right). The comparison first finds a best-fit neural encoding of the behaviorally relevant variables, and then checks whether the recoding and decoding based on those variables match between the behavioral and neural models. The behavioral model defines the latent variables needed for the task, and we measure their neural encoding by applying targeted dimensionality reduction between neural activity and the latent variables (top row). We quantify recoding by the transformations of latent variables, and compare the transformations established by the behavioral model to the corresponding changes measured between the neural representations of those variables (middle row). Our favored model predicts that these interactions are described by a message-passing algorithm, which is one particular class of transformations (Figure 1). Finally, we measure decoding by predicting actions, and compare the predictions from the behavioral model with those from the corresponding neural representations (bottom row). A match between behavioral and neural models, by measures such as log-likelihood or variance explained, provides evidence that these models accurately reflect the encoding, recoding, and decoding processes, and thus describe the core elements of neural computation.
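To make the encoding and recoding comparisons concrete, the following is a minimal sketch on synthetic data, not the analysis pipeline itself. It assumes a linear encoding, uses plain least-squares regression as a simplified stand-in for targeted dimensionality reduction, and scores the match by in-sample variance explained. All names (`fit_encoding_axes`, `read_out_latents`, `model_transform`) and all numerical values are hypothetical choices made for illustration.

```python
import numpy as np

def variance_explained(y_true, y_pred):
    """Fraction of variance in y_true captured by y_pred, pooled over columns."""
    resid = np.sum((y_true - y_pred) ** 2)
    total = np.sum((y_true - y_true.mean(axis=0)) ** 2)
    return 1.0 - resid / total

def fit_encoding_axes(latents, activity):
    """Regress neural activity on latent variables (assumed linear encoding).
    Returns an (n_latents, n_neurons) matrix whose rows are encoding axes."""
    axes, *_ = np.linalg.lstsq(latents, activity, rcond=None)
    return axes

def read_out_latents(activity, axes):
    """Estimate latent variables from activity via the pseudoinverse of the axes."""
    return activity @ np.linalg.pinv(axes)

# Synthetic stand-in for behavioral-model latents and recorded activity.
rng = np.random.default_rng(0)
n_trials, n_latents, n_neurons = 500, 3, 40
latents = rng.normal(size=(n_trials, n_latents))
true_axes = rng.normal(size=(n_latents, n_neurons))
activity = latents @ true_axes + 0.1 * rng.normal(size=(n_trials, n_neurons))

# Encoding (top row): best-fit neural representation of the latents.
axes = fit_encoding_axes(latents, activity)
neural_latents = read_out_latents(activity, axes)
encoding_score = variance_explained(latents, neural_latents)

# Recoding (middle row): a hypothetical linear transformation that the
# behavioral model applies to the latents, and the activity that follows it.
model_transform = np.array([[0.9, 0.1, 0.0],
                            [0.0, 0.8, 0.2],
                            [0.1, 0.0, 0.7]])
next_latents = latents @ model_transform
next_activity = next_latents @ true_axes + 0.1 * rng.normal(size=(n_trials, n_neurons))
neural_next = read_out_latents(next_activity, axes)

# Compare the measured neural change with the model-predicted change.
recoding_score = variance_explained(neural_next, neural_latents @ model_transform)

print(f"encoding variance explained: {encoding_score:.3f}")
print(f"recoding variance explained: {recoding_score:.3f}")
```

The scores here are computed on the same data used for fitting; with real recordings, held-out data would give a fairer estimate of the match. The decoding comparison (bottom row) would follow the same pattern, with predicted actions in place of transformed latents.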