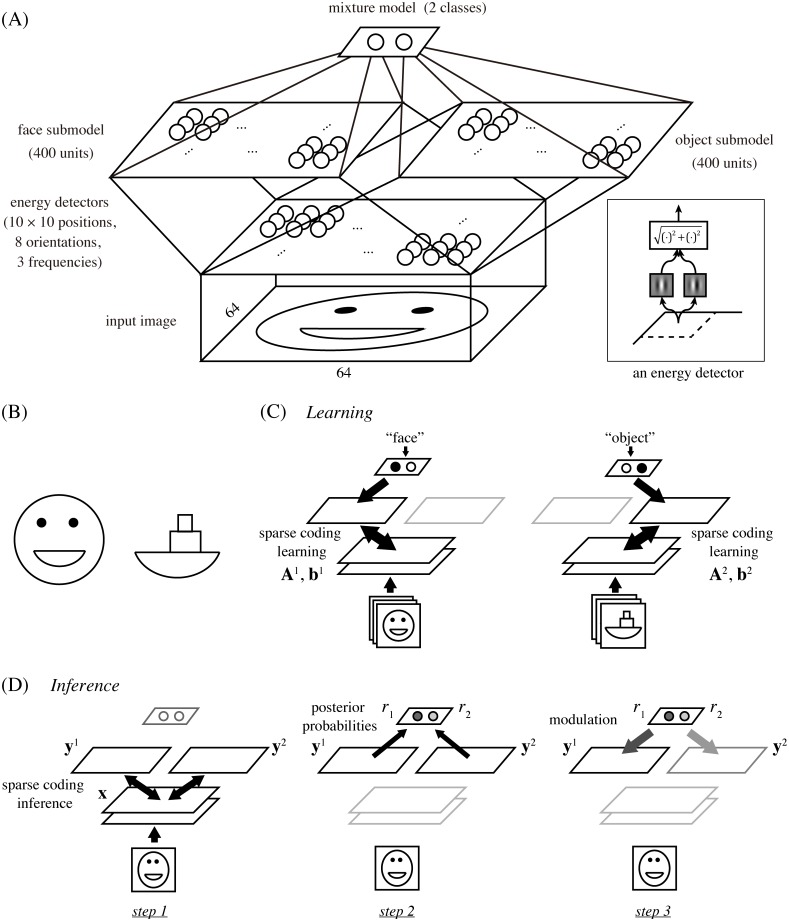

Fig 1.

(A) The architecture of our hierarchical model. It starts with an energy detector bank, which feeds two sparse coding submodels, one for faces and one for objects. These submodels are then combined into a mixture model. Inset: an energy detector model. (B) A cartoon face and a boat. Note that the mouth of the face and the base of the boat have the same shape. (C) Learning scheme. We assume that the class of each input image, either “face” or “object,” is given explicitly during training. This allows each submodel to be trained with standard sparse coding learning on its corresponding dataset. (D) Inference scheme. To test response properties, the network first interprets the input separately with the sparse code of each submodel (step 1). It then compares the goodness of the resulting interpretations, expressed as posterior probabilities (step 2). Finally, it multiplicatively modulates the responses in each submodel by the corresponding posterior probability (step 3). Note that normalizing the probabilities in step 2 leads to competition between the submodels in step 3.
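The three-step inference scheme in (D) can be sketched as follows. This is a minimal illustration under assumptions that the caption does not state. The energy detector stage is omitted, so inputs go directly to the submodels. Each submodel's sparse code is computed with ISTA (iterative shrinkage-thresholding) on an L1-penalized least-squares objective. The "goodness" of an interpretation is approximated by the log prior minus the sparse coding energy, a MAP-style stand-in for the log-likelihood with unit noise variance. The function names (`sparse_code`, `mixture_inference`), the sparsity weight, and the toy dictionaries are hypothetical.

```python
import math


def soft_threshold(v, t):
    """Shrink v toward zero by t (proximal operator of t*|v|)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def reconstruct(D, a):
    """Linear reconstruction sum_i a_i * D[i]; D is a list of basis vectors."""
    n = len(D[0])
    return [sum(a_i * d[j] for a_i, d in zip(a, D)) for j in range(n)]


def energy(x, D, a, lam):
    """Sparse coding energy: 0.5*||x - D a||^2 + lam*||a||_1."""
    r = reconstruct(D, a)
    err = sum((xi - ri) ** 2 for xi, ri in zip(x, r))
    return 0.5 * err + lam * sum(abs(a_i) for a_i in a)


def sparse_code(x, D, lam, n_iter=200):
    """ISTA minimization of the sparse coding energy over coefficients a."""
    # Step size 1/L: the squared Frobenius norm of D upper-bounds the
    # largest eigenvalue of D^T D, which is enough for ISTA to converge.
    L = sum(d_j * d_j for d in D for d_j in d)
    a = [0.0] * len(D)
    for _ in range(n_iter):
        resid = [ri - xi for ri, xi in zip(reconstruct(D, a), x)]
        grad = [sum(dj * rj for dj, rj in zip(d, resid)) for d in D]
        a = [soft_threshold(a_i - g_i / L, lam / L) for a_i, g_i in zip(a, grad)]
    return a


def mixture_inference(x, submodels, lam=0.1, priors=None):
    """Steps 1-3 of the inference scheme (hypothetical sketch)."""
    names = list(submodels)
    if priors is None:
        priors = {n: 1.0 / len(names) for n in names}
    # Step 1: interpret x separately with each submodel's sparse code.
    codes = {n: sparse_code(x, submodels[n], lam) for n in names}
    # Step 2: score each interpretation (assumed: log prior - energy)
    # and normalize the scores into posterior probabilities (softmax).
    scores = {n: math.log(priors[n]) - energy(x, submodels[n], codes[n], lam)
              for n in names}
    m = max(scores.values())
    z = sum(math.exp(s - m) for s in scores.values())
    post = {n: math.exp(scores[n] - m) / z for n in names}
    # Step 3: multiplicatively modulate each submodel's responses.
    responses = {n: [post[n] * c for c in codes[n]] for n in names}
    return post, responses


if __name__ == "__main__":
    D_face = [[1, 0, 0, 0], [0, 1, 0, 0]]    # toy "face" basis
    D_object = [[0, 0, 1, 0], [0, 0, 0, 1]]  # toy "object" basis
    post, resp = mixture_inference([1.0, 1.0, 0.0, 0.0],
                                   {"face": D_face, "object": D_object})
    print(post, resp)
```

With a face dictionary spanning the first two input dimensions and an object dictionary spanning the last two, the input `[1, 1, 0, 0]` receives the higher posterior for "face." The face coefficients are scaled by that posterior, and the object coefficients stay at zero. The softmax in step 2 is what produces the competition: because the posteriors sum to one, raising one submodel's score necessarily lowers the other's posterior and therefore its modulated responses.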