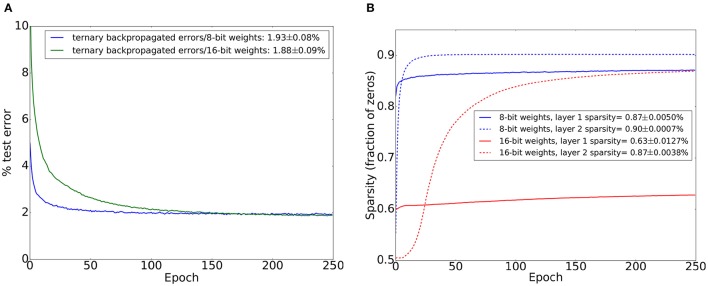

Figure 3.

MNIST test set error for a network similar to the one used in Figure 3, except that it uses binary unipolar (0/1) neural activations instead of bipolar (−1/1) activations. A dropout probability of 0.2 was applied between all layers. Means and standard deviations are computed over 20 training trials. (A) Test set accuracy when using ternary errors and limited-precision weights (8-bit and 16-bit). The legend shows the final test set error and its standard deviation. (B) Sparsity (fraction of zeros) of the activations in each of the two hidden layers when using 8-bit and 16-bit weights. Sparsity was evaluated on the test set after each training epoch; the sparsity values in the legend are those measured after the last training epoch.
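Because the activations are unipolar 0/1, the sparsity in panel (B) is simply the fraction of hidden-unit outputs that equal zero. The following is a minimal sketch, assuming one hidden layer's test-set outputs are collected into a NumPy array of shape (examples, units); the function name `activation_sparsity` and the sample array are hypothetical and not taken from the original experiments.

```python
import numpy as np


def activation_sparsity(activations: np.ndarray) -> float:
    """Return the fraction of activation entries that are exactly zero.

    Assumes `activations` holds the binary unipolar (0/1) outputs of a
    single hidden layer, one row per test example.
    """
    return float(np.mean(activations == 0))


# Hypothetical hidden-layer outputs for 2 test examples and 4 units.
h = np.array([[0, 1, 0, 0],
              [1, 0, 0, 1]])
print(activation_sparsity(h))  # 5 zeros out of 8 entries -> 0.625
```

Applying this to each hidden layer after every epoch would give one sparsity curve per layer, as plotted in panel (B).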