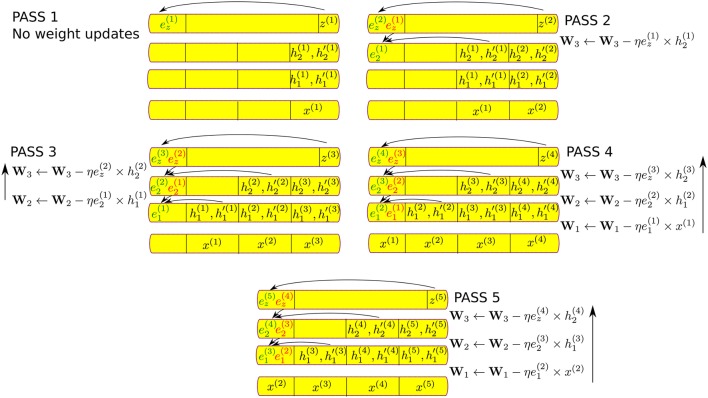

Figure 4.

Illustration of pipelined backpropagation for the two-layer network of Figure 1, showing network history and storage requirements. The upward arrows indicate the order in which the weight updates are carried out, which is the same order as the weight lookups in the forward pass. Each additional layer adds, for every layer below it, further delay in the error computation and the weight updates, and therefore requires a longer history of hidden unit states to be stored. Previous errors (shown in red) are overwritten by the newly backpropagated errors (shown in green) at the end of each pass, so only one error value is stored per layer.
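
The storage pattern described in the caption can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation behind the figure: the names `LayerBuffers` and `history_depths` are hypothetical, and the delay model (one extra pass of delay for every layer above) is an assumption made for the example. It shows a bounded history of unit states per layer alongside a single error slot that is overwritten on each pass.

```python
from collections import deque


def history_depths(num_layers):
    """Assumed delay model (hypothetical): each layer above adds one pass of delay.

    Layer 0 is the bottom layer; the top layer has no extra delay.
    """
    return [num_layers - 1 - i for i in range(num_layers)]


class LayerBuffers:
    """Per-layer storage: a bounded history of unit states and one error slot."""

    def __init__(self, delay):
        # Keep the current state plus `delay` older ones; older entries drop off.
        self.states = deque(maxlen=delay + 1)
        # Only one error value is kept; each new error overwrites the last.
        self.error = None

    def forward(self, state):
        # Record the unit state produced in this forward pass.
        self.states.append(state)

    def backward(self, new_error):
        # Overwrite the previous error with the newly backpropagated one.
        self.error = new_error
        # Once the buffer is full, the oldest stored state is the one that
        # was produced `delay` passes earlier, which the update should use.
        return self.states[0], self.error


# Usage: the bottom layer of a two-layer network under the assumed delay model.
bottom = LayerBuffers(delay=history_depths(2)[0])
bottom.forward("h0")
bottom.forward("h1")
state, err = bottom.backward("e0")  # pairs the delayed state with the new error
```

Using a `deque` with `maxlen` keeps the history at a fixed size without explicit bookkeeping, which mirrors the point that storage grows with the delay while the error storage stays constant at one value per layer.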