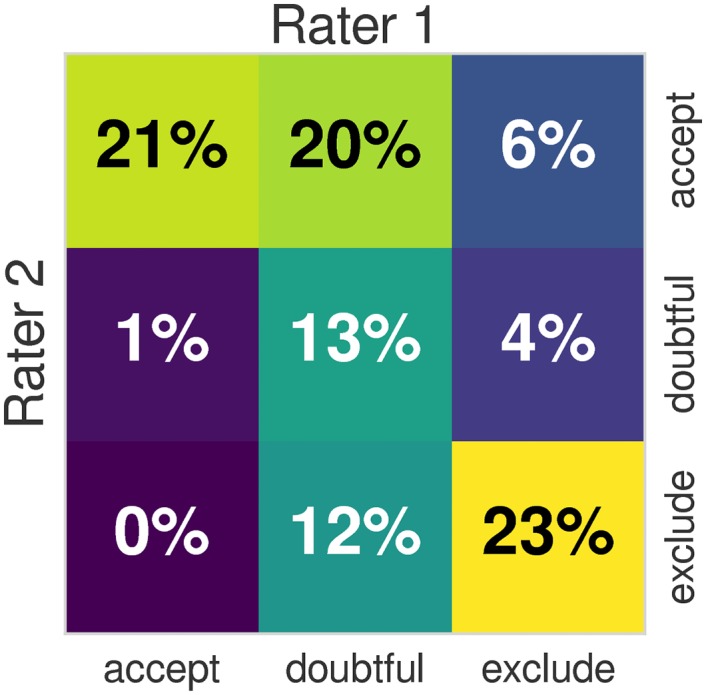

Fig 2. Inter-rater variability.

The heatmap shows the overlap of the quality labels assigned by two domain experts to 100 data points of the ABIDE dataset, using the protocol described in section Labeling protocol. We also compute Cohen’s kappa between the two sets of ratings and obtain κ = 0.39. According to the interpretation table of Viera et al. [16], this agreement is “fair” to “moderate”. When the labels are binarized by mapping “doubtful” and “accept” to a single “good” label, the agreement increases to κ = 0.51 (“moderate”). This “fair” to “moderate” agreement demonstrates substantial inter-rater variability. The inter- and intra-rater variabilities enter the problem as class noise, since a fair number of data points receive noisy labels that are inconsistent with the labels assigned to the rest of the dataset. An extended investigation of the inter- and intra-rater variabilities is presented in Block 5 of S1 File.
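For readers who wish to reproduce this kind of agreement analysis, the following is a minimal sketch of Cohen’s kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each rater’s label frequencies. The function names `cohen_kappa` and `binarize`, the third label “exclude”, and the toy ratings are illustrative assumptions; they are not the study’s code or data, and the toy ratings will not reproduce the values reported above.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used a single identical label; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1.0 - p_e)


def binarize(labels):
    """Map "doubtful" and "accept" to "good"; leave other labels unchanged."""
    return ["good" if label in ("accept", "doubtful") else label for label in labels]


# Hypothetical toy ratings (NOT the ABIDE ratings used in this work).
toy_a = ["accept", "accept", "doubtful", "exclude",
         "accept", "doubtful", "exclude", "accept"]
toy_b = ["accept", "doubtful", "doubtful", "exclude",
         "exclude", "accept", "exclude", "accept"]

kappa_three_class = cohen_kappa(toy_a, toy_b)
kappa_binary = cohen_kappa(binarize(toy_a), binarize(toy_b))
```

In this toy example, merging “doubtful” and “accept” removes the disagreements between those two labels, so the binarized kappa is higher than the three-class kappa, which mirrors the direction of the change reported above.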